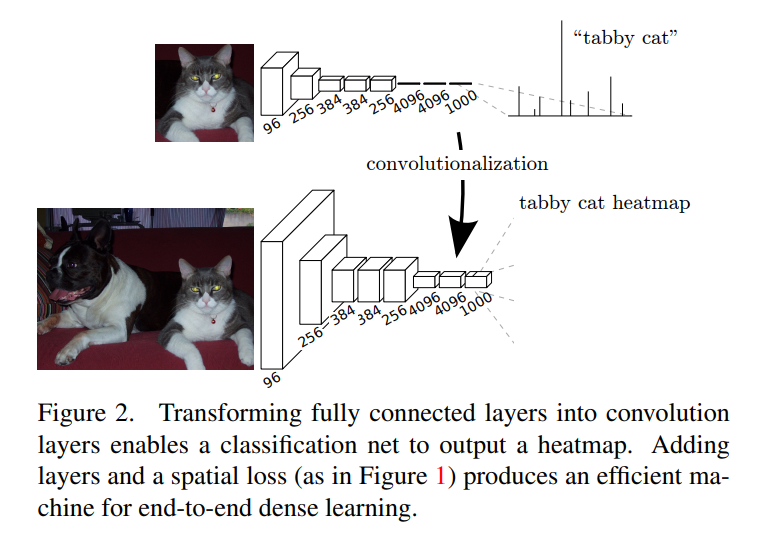

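Introduction: FCN is the pioneering work in image segmentation with deep networks. It is a fully convolutional network: the model consists only of convolutional and pooling layers (no fully connected layers), so it can accept inputs of arbitrary size. A feature-extraction backbone produces deep features, e.g. of shape (_, 512, 7, 7) for a 224×224 input, which are then restored to the original resolution to give a per-pixel segmentation prediction.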

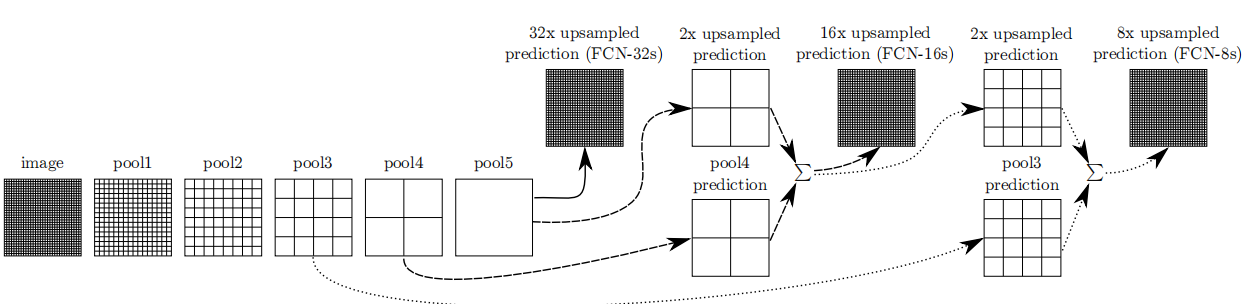

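The authors compare three variants: FCN-32s, FCN-16s, and FCN-8s.

FCN-32s: upsample the stride-32 prediction directly by 32×

FCN-16s: one skip-connection fusion (with pool4), then 16× upsampling

FCN-8s: two skip-connection fusions (with pool4 and pool3), then 8× upsampling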
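The three variants differ only in the stride at which class scores are fused before the final upsampling. The spatial arithmetic can be sketched as follows, assuming a VGG16-style backbone with five 2×2, stride-2 max-pooling layers that round down; the helper name `fcn_score_map_sizes` is made up for illustration.

```python
def fcn_score_map_sizes(height, width, num_pools=5):
    """Return the (H, W) of the feature map after each stride-2 pooling layer."""
    sizes = {}
    h, w = height, width
    for i in range(1, num_pools + 1):
        h, w = h // 2, w // 2  # 2x2 max pooling with stride 2 rounds down
        sizes["pool%d" % i] = (h, w)
    return sizes


sizes_224 = fcn_score_map_sizes(224, 224)
sizes_odd = fcn_score_map_sizes(500, 375)

# FCN-32s predicts at pool5, FCN-16s fuses at pool4, FCN-8s fuses at pool3.
for name in ("pool3", "pool4", "pool5"):
    print(name, sizes_224[name], sizes_odd[name])
```

For a 224×224 input the three maps are 28×28, 14×14 and 7×7, so each fusion step is an exact 2× upsampling. For 500×375 the pool4 map is 31×23 while pool5 is 15×11, so doubling pool5 would give 30×22 and miss by one pixel; resizing to the target map's size, rather than by a fixed factor, avoids that mismatch.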

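Model structure: FCN8s

Segmentation head

```python
import torch.nn as nn


class _FCNHead(nn.Module):
    """
    FCN segmentation head: maps backbone features to per-class scores.

    Role:
    1. Reduce the high-dimensional features (512 channels) to one channel per class.
    2. Fuse features with an extra 3x3 convolution to improve segmentation accuracy.
    3. Act as the decoder head of the FCN model.

    Args:
        in_channels: channels of the input feature map, typically 512
            (output of the last VGG conv stage).
        out_channels: number of output channels, equal to the number of classes
            (e.g. 21 for PASCAL VOC).
        norm_layer: normalization layer type, BatchNorm2d by default.
    """

    def __init__(self, in_channels, out_channels, norm_layer=nn.BatchNorm2d, **kwargs):
        super(_FCNHead, self).__init__()
        # Intermediate width: compress the input channels to 1/4.
        inter_channels = in_channels // 4
        # Layers of the segmentation head.
        self.block = nn.Sequential(
            nn.Conv2d(in_channels=in_channels,
                      out_channels=inter_channels,
                      kernel_size=3,  # 3x3 (rather than 1x1) mixes in spatial context
                      padding=1,      # keeps the feature-map size unchanged
                      bias=False),    # bias is redundant because BatchNorm follows
            norm_layer(inter_channels),  # normalization: faster, more stable training
            nn.ReLU(inplace=True),
            nn.Dropout(0.1),  # regularization: randomly zeroes 10% of activations
            nn.Conv2d(inter_channels, out_channels, 1)  # 1x1 conv: channels -> classes
        )

    def forward(self, x):
        """
        x: e.g. (1, 512, 16, 16)
        returns: e.g. (1, 21, 16, 16), one score per class at every position
        """
        return self.block(x)
```

FCN main network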
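The backbone split used by FCN8s (`features[:17]`, `features[17:24]`, `features[24:]`) depends on where the pooling layers sit inside the VGG16 `features` stack. The sketch below enumerates the layer order in plain Python so the slice boundaries can be checked by eye. It assumes the local `vgg` module follows the standard VGG16 layout without batch normalization (every convolution followed by a ReLU); the layer names are labels invented for this illustration.

```python
# VGG16 configuration "D": numbers are conv output channels, "M" is max pooling.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_feature_layer_names(cfg=VGG16_CFG):
    """List layer names in the order a conv/ReLU/pool `features` stack holds them."""
    names = []
    stage, conv = 1, 1
    for item in cfg:
        if item == "M":
            names.append("pool%d" % stage)
            stage, conv = stage + 1, 1
        else:
            names.append("conv%d_%d" % (stage, conv))
            names.append("relu%d_%d" % (stage, conv))
            conv += 1
    return names


names = vgg16_feature_layer_names()
print(len(names))                          # number of layers in `features`
print(names[:17][-1])                      # last layer of features[:17]
print(names[17:24][0], names[17:24][-1])   # first and last layer of features[17:24]
print(names[24:][0], names[24:][-1])       # first and last layer of features[24:]
```

Under that assumption each slice ends with a max-pooling layer, which is why the three sub-networks output feature maps at strides 8, 16 and 32.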

```python
import torch.nn as nn
import torch.nn.functional as F

from vgg import vgg16  # local VGG definition, as imported in the complete listing


class FCN8s(nn.Module):
    """
    1. Fuses features from three levels: pool3 (stride 8), pool4 (stride 16)
       and pool5 (stride 32).
    2. Two skip connections with step-by-step upsampling give a finer segmentation.
    3. The fused score map has stride 8 relative to the input (the "8s" in the
       name) and is upsampled 8x back to the input size.
    """

    def __init__(self, nclass, backbone='vgg16', aux=False, pretrained_base=True,
                 norm_layer=nn.BatchNorm2d, **kwargs):
        super(FCN8s, self).__init__()
        self.aux = aux  # whether to use an auxiliary loss

        # Load the VGG16 backbone; only its convolutional part (features) is used.
        if backbone == 'vgg16':
            self.pretrained = vgg16(pretrained=pretrained_base).features
        else:
            raise RuntimeError('unknown backbone: {}'.format(backbone))

        # Split the VGG features (31 layers, indices 0-30) into three parts,
        # one per downsampling level. Each part ends with its max-pooling layer.
        self.pool3 = nn.Sequential(*self.pretrained[:17])    # layers 0-16: conv1_1 .. conv3_3 + MaxPool3
        self.pool4 = nn.Sequential(*self.pretrained[17:24])  # layers 17-23: conv4_1 .. conv4_3 + MaxPool4
        self.pool5 = nn.Sequential(*self.pretrained[24:])    # layers 24-30: conv5_1 .. conv5_3 + MaxPool5

        # Segmentation head
        self.head = _FCNHead(512, nclass, norm_layer)

        # 1x1 convolutions on the skip connections: map shallow features to class scores
        self.score_pool3 = nn.Conv2d(256, nclass, 1)  # pool3 outputs 256 channels
        self.score_pool4 = nn.Conv2d(512, nclass, 1)  # pool4 outputs 512 channels

        # Optional auxiliary head, used for auxiliary supervision during training
        if aux:
            self.auxlayer = _FCNHead(512, nclass, norm_layer)

        # Modules that need special treatment in training, e.g. a different learning rate
        self.exclusive = (['head', 'score_pool3', 'score_pool4', 'auxlayer'] if aux
                          else ['head', 'score_pool3', 'score_pool4'])

    def forward(self, x):
        """
        x: input image of shape (batch_size, 3, H, W)
        returns: tuple of score maps, each of shape (batch_size, nclass, H, W)
        """
        # ========== Stage 1: feature extraction ==========
        pool3 = self.pool3(x)      # stride 8  -> (batch, 256, H/8, W/8)
        pool4 = self.pool4(pool3)  # stride 16 -> (batch, 512, H/16, W/16)
        pool5 = self.pool5(pool4)  # stride 32 -> (batch, 512, H/32, W/32)

        outputs = []  # main output plus the optional auxiliary output

        # ========== Stage 2: class scores at three levels ==========
        score_fr = self.head(pool5)            # deep    -> (batch, nclass, H/32, W/32)
        score_pool4 = self.score_pool4(pool4)  # middle  -> (batch, nclass, H/16, W/16)
        score_pool3 = self.score_pool3(pool3)  # shallow -> (batch, nclass, H/8, W/8)

        # ========== Stage 3: first fusion (pool5 + pool4) ==========
        # Upsample the deep scores 2x to pool4 resolution: deep semantics + mid-level detail
        upscore2 = F.interpolate(score_fr, score_pool4.size()[2:], mode='bilinear', align_corners=True)
        fuse_pool4 = upscore2 + score_pool4  # -> (batch, nclass, H/16, W/16)

        # ========== Stage 4: second fusion (fuse_pool4 + pool3) ==========
        # Upsample the fused scores 2x to pool3 resolution: fused scores + shallow detail
        upscore_pool4 = F.interpolate(fuse_pool4, score_pool3.size()[2:], mode='bilinear', align_corners=True)
        fuse_pool3 = upscore_pool4 + score_pool3  # -> (batch, nclass, H/8, W/8)

        # ========== Stage 5: final upsampling ==========
        # Upsample the three-level fusion 8x to the original input size
        out = F.interpolate(fuse_pool3, x.size()[2:], mode='bilinear', align_corners=True)
        outputs.append(out)

        # ========== Stage 6: auxiliary output (optional) ==========
        if self.aux:
            # Predict directly from pool5 and upsample 32x
            auxout = self.auxlayer(pool5)
            auxout = F.interpolate(auxout, x.size()[2:], mode='bilinear', align_corners=True)
            outputs.append(auxout)

        return tuple(outputs)  # (main, aux) or (main,)
```

Loss function and evaluation metrics

Pixel-wise cross-entropy loss
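Pixel-wise cross-entropy treats every pixel as an independent classification problem: the loss at a pixel is the negative log-softmax score of its true class, and pixels carrying the ignore label are left out of the average. The plain-Python sketch below spells out that arithmetic on a flattened list of pixels. It is only an illustration (the function name `pixelwise_cross_entropy` is made up here), not a replacement for the PyTorch loss, and it omits class weights.

```python
import math


def pixelwise_cross_entropy(logits, target, ignore_label=255):
    """Mean cross-entropy over pixels whose label is not `ignore_label`.

    logits: list of per-pixel score lists, one score per class
    target: list of per-pixel class indices
    """
    total, count = 0.0, 0
    for scores, label in zip(logits, target):
        if label == ignore_label:
            continue  # unlabeled pixels contribute nothing
        peak = max(scores)  # subtract the max for numerical stability
        log_sum_exp = peak + math.log(sum(math.exp(s - peak) for s in scores))
        total += log_sum_exp - scores[label]  # equals -log softmax(scores)[label]
        count += 1
    # Returning 0.0 when every pixel is ignored is a convention chosen for this sketch.
    return total / count if count else 0.0


# Three pixels, three classes; the last pixel is marked "ignore".
logits = [[0.0, 0.0, 0.0], [2.0, 0.0, 0.0], [5.0, 1.0, -3.0]]
target = [1, 0, 255]
loss = pixelwise_cross_entropy(logits, target)
print(round(loss, 4))
```

The first pixel has uniform scores, so its loss is log(3); the third pixel does not affect the result at all, which is the role `ignore_label=255` plays for unlabeled boundary pixels in datasets such as PASCAL VOC.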

```python
import torch.nn as nn
from torch.nn.modules.loss import _WeightedLoss


class CrossEntropyLoss2d(_WeightedLoss):
    """
    Standard pytorch weighted nn.CrossEntropyLoss
    """

    def __init__(self, weight=None, ignore_label=255, reduction='mean'):
        super(CrossEntropyLoss2d, self).__init__()
        self.loss = nn.CrossEntropyLoss(weight, ignore_index=ignore_label, reduction=reduction)

    def forward(self, output, target):
        """
        Forward pass
        :param output: torch.tensor of logits, (N, C, H, W) for segmentation
        :param target: torch.tensor of class indices, (N, H, W)
        :return: scalar (for reduction='mean' or 'sum')
        """
        return self.loss(output, target)
```

Evaluation metrics: the paper reports mean IU, pixel acc., mean acc., and f.w. IU.
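In the paper's notation, n_ij is the number of pixels of class i predicted as class j and t_i is the number of pixels of class i. Pixel accuracy is the sum of n_ii over the sum of t_i, mean accuracy averages n_ii / t_i over classes, mean IU averages n_ii / (t_i + sum_j n_ji - n_ii), and frequency-weighted IU weights each class IU by t_i. The sketch below computes all four from a small confusion matrix in plain Python; the function name `paper_metrics` and the choice to skip classes with no ground-truth pixels are assumptions of this example.

```python
def paper_metrics(n):
    """Compute the four FCN metrics from a confusion matrix.

    n[i][j] is the number of pixels of true class i predicted as class j.
    """
    num_classes = len(n)
    t = [sum(row) for row in n]  # t_i: pixels of true class i
    predicted = [sum(n[i][j] for i in range(num_classes)) for j in range(num_classes)]
    diag = [n[i][i] for i in range(num_classes)]
    total = sum(t)

    present = [i for i in range(num_classes) if t[i] > 0]  # skip absent classes
    iu = {i: diag[i] / (t[i] + predicted[i] - diag[i]) for i in present}

    return {
        "pixel_acc": sum(diag) / total,
        "mean_acc": sum(diag[i] / t[i] for i in present) / len(present),
        "mean_iu": sum(iu.values()) / len(present),
        "fw_iu": sum(t[i] * iu[i] for i in present) / total,
    }


# Prediction [0, 0, 1, 1, 2, 2] against labels [0, 0, 1, 1, 1, 2].
confusion = [[2, 0, 0],
             [0, 2, 1],
             [0, 0, 1]]
metrics = paper_metrics(confusion)
for name, value in metrics.items():
    print("%s = %.4f" % (name, value))
```

For this toy case five of six pixels are correct, giving pixel acc. 5/6, mean acc. 8/9, mean IU 13/18 and f.w. IU 0.75. The one misclassified pixel lowers the IU of both class 1 (a missed pixel) and class 2 (a false alarm), which is why mean IU is the strictest of the four.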

```python
"""
Reference to https://github.com/jfzhang95/pytorch-deeplab-xception/blob/master/utils/metrics.py
Add metrics: Precision, Recall, F1-Score
"""
import numpy as np

np.seterr(divide='ignore', invalid='ignore')

__all__ = ['SegmentationMetric']

"""
confusionMatrix as built by genConfusionMatrix():
rows are ground-truth labels (L), columns are predictions (P).

L\P     P     N
P       TP    FN
N       FP    TN

sum(axis=1)      TP+FN  (pixels per ground-truth class)
sum(axis=0)      TP+FP  (pixels per predicted class)
np.diag().sum()  TP+TN
"""


class SegmentationMetric(object):
    def __init__(self, numClass):
        self.numClass = numClass
        self.confusionMatrix = np.zeros((self.numClass,) * 2)

    def pixelAccuracy(self):
        # Overall pixel accuracy across all classes
        # PA = acc = (TP + TN) / (TP + TN + FP + FN)
        acc = np.diag(self.confusionMatrix).sum() / self.confusionMatrix.sum()
        return acc

    def meanPixelAccuracy(self):
        # Per-class pixel accuracy: the fraction of each class's ground-truth
        # pixels that is predicted correctly (i.e. per-class recall)
        # acc = TP / (TP + FN)
        Cpa = np.diag(self.confusionMatrix) / self.confusionMatrix.sum(axis=1)
        Mpa = np.nanmean(Cpa)  # average over classes, ignoring NaN
        return Mpa, Cpa  # Cpa is an array, e.g. [0.90, 0.80, 0.96] for classes 1, 2, 3

    def meanIntersectionOverUnion(self):
        # Intersection = TP; Union = TP + FP + FN
        # Ciou = TP / (TP + FP + FN)
        intersection = np.diag(self.confusionMatrix)  # diagonal entries, one per class
        # axis=1 sums each row (ground truth), axis=0 sums each column (prediction)
        union = (np.sum(self.confusionMatrix, axis=1) + np.sum(self.confusionMatrix, axis=0)
                 - np.diag(self.confusionMatrix))
        Ciou = intersection / np.maximum(1.0, union)  # per-class IoU
        mIoU = np.nanmean(Ciou)  # average over classes
        return mIoU, Ciou

    def Frequency_Weighted_Intersection_over_Union(self):
        # FWIOU = [(TP+FN)/(TP+FP+TN+FN)] * [TP / (TP + FP + FN)]
        freq = np.sum(self.confusionMatrix, axis=1) / np.sum(self.confusionMatrix)
        iu = np.diag(self.confusionMatrix) / (
            np.sum(self.confusionMatrix, axis=1) + np.sum(self.confusionMatrix, axis=0) -
            np.diag(self.confusionMatrix))
        FWIoU = (freq[freq > 0] * iu[freq > 0]).sum()
        return FWIoU

    def precision(self):
        # precision = TP / (TP + FP); column sums count pixels per predicted class
        precision = np.diag(self.confusionMatrix) / np.sum(self.confusionMatrix, axis=0)
        return precision

    def recall(self):
        # recall = TP / (TP + FN); row sums count pixels per ground-truth class
        recall = np.diag(self.confusionMatrix) / np.sum(self.confusionMatrix, axis=1)
        return recall

    def genConfusionMatrix(self, imgPredict, imgLabel):  # same as fast_hist() in FCN's score.py
        # Drop pixels whose ground-truth label is outside [0, numClass)
        mask = (imgLabel >= 0) & (imgLabel < self.numClass)
        label = self.numClass * imgLabel[mask].astype('int') + imgPredict[mask]
        count = np.bincount(label, minlength=self.numClass ** 2)
        confusionMatrix = count.reshape(self.numClass, self.numClass)
        return confusionMatrix

    def addBatch(self, imgPredict, imgLabel):
        assert imgPredict.shape == imgLabel.shape
        self.confusionMatrix += self.genConfusionMatrix(imgPredict, imgLabel)

    def reset(self):
        self.confusionMatrix = np.zeros((self.numClass, self.numClass))


if __name__ == '__main__':
    imgPredict = np.array([0, 0, 1, 1, 2, 2])  # can be replaced by a predicted label map
    imgLabel = np.array([0, 0, 1, 1, 1, 2])    # can be replaced by a ground-truth label map
    metric = SegmentationMetric(3)  # 3 classes; pass the actual number of classes
    metric.addBatch(imgPredict, imgLabel)
    pa = metric.pixelAccuracy()
    mpa = metric.meanPixelAccuracy()
    fwIU = metric.Frequency_Weighted_Intersection_over_Union()
    mIoU, per = metric.meanIntersectionOverUnion()
    print('pa is : %f' % pa)
    print('fwiu is : %f' % fwIU)  # scalar
    print('mpa is : %f' % mpa[0], mpa[1])  # mean, then per-class array
    print('mIoU is : %f' % mIoU, per)
```

Complete code for FCN32s, FCN16s, and FCN8s

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

from vgg import vgg16


class _FCNHead(nn.Module):
    """
    FCN segmentation head: maps backbone features to per-class scores.

    Role:
    1. Reduce the high-dimensional features (512 channels) to one channel per class.
    2. Fuse features with an extra 3x3 convolution to improve segmentation accuracy.
    3. Act as the decoder head of the FCN model.

    Args:
        in_channels: channels of the input feature map, typically 512
            (output of the last VGG conv stage).
        out_channels: number of output channels, equal to the number of classes
            (e.g. 21 for PASCAL VOC).
        norm_layer: normalization layer type, BatchNorm2d by default.
    """

    def __init__(self, in_channels, out_channels, norm_layer=nn.BatchNorm2d, **kwargs):
        super(_FCNHead, self).__init__()
        # Intermediate width: compress the input channels to 1/4.
        inter_channels = in_channels // 4
        # Layers of the segmentation head.
        self.block = nn.Sequential(
            nn.Conv2d(in_channels=in_channels,
                      out_channels=inter_channels,
                      kernel_size=3,  # 3x3 (rather than 1x1) mixes in spatial context
                      padding=1,      # keeps the feature-map size unchanged
                      bias=False),    # bias is redundant because BatchNorm follows
            norm_layer(inter_channels),  # normalization: faster, more stable training
            nn.ReLU(inplace=True),
            nn.Dropout(0.1),  # regularization: randomly zeroes 10% of activations
            nn.Conv2d(inter_channels, out_channels, 1)  # 1x1 conv: channels -> classes
        )

    def forward(self, x):
        """
        x: e.g. (1, 512, 16, 16)
        returns: e.g. (1, 21, 16, 16), one score per class at every position
        """
        return self.block(x)


class FCN32s(nn.Module):
    def __init__(self, nclass, backbone='vgg16', aux=False, pretrained_base=True,
                 norm_layer=nn.BatchNorm2d, **kwargs):
        super(FCN32s, self).__init__()
        self.aux = aux
        # Load only the convolutional part of VGG16 (fully connected layers dropped)
        if backbone == 'vgg16':
            self.pretrained = vgg16(pretrained=pretrained_base).features
        else:
            raise RuntimeError('unknown backbone: {}'.format(backbone))
        # Segmentation head (3x3 conv + norm + ReLU + dropout + 1x1 conv)
        self.head = _FCNHead(512, nclass, norm_layer=norm_layer)
        if aux:
            self.auxlayer = _FCNHead(512, nclass, norm_layer=norm_layer)

        self.exclusive = ['head', 'auxlayer'] if aux else ['head']

    def forward(self, x):
        # e.g. x: (1, 3, 224, 224)
        size = x.size()[2:]
        # VGG16 feature extractor (pretrained weights, not frozen here):
        # conv1 .. conv5, stride 32
        pool5 = self.pretrained(x)  # (1, 512, 7, 7)

        outputs = []
        # The head maps the features to the number of target classes
        out = self.head(pool5)  # (1, 21, 7, 7)
        # Upsample 32x
        out = F.interpolate(out, size=size, mode='bilinear', align_corners=True)  # (1, 21, 224, 224)
        outputs.append(out)

        if self.aux:
            auxout = self.auxlayer(pool5)
            auxout = F.interpolate(auxout, size=size, mode='bilinear', align_corners=True)
            outputs.append(auxout)

        return tuple(outputs)


class FCN16s(nn.Module):
    def __init__(self, nclass, backbone='vgg16', aux=False, pretrained_base=True,
                 norm_layer=nn.BatchNorm2d, **kwargs):
        super(FCN16s, self).__init__()
        self.aux = aux
        if backbone == 'vgg16':
            self.pretrained = vgg16(pretrained=pretrained_base).features
        else:
            raise RuntimeError('unknown backbone: {}'.format(backbone))
        self.pool4 = nn.Sequential(*self.pretrained[:24])  # VGG layers 0-23
        self.pool5 = nn.Sequential(*self.pretrained[24:])  # VGG layers 24-30
        self.head = _FCNHead(512, nclass, norm_layer=norm_layer)
        self.score_pool4 = nn.Conv2d(512, nclass, 1)  # skip-connection scoring layer
        if aux:
            self.auxlayer = _FCNHead(512, nclass, norm_layer=norm_layer)

        self.exclusive = (['head', 'score_pool4', 'auxlayer'] if aux
                          else ['head', 'score_pool4'])

    def forward(self, x):
        # e.g. x: (1, 3, 224, 224)
        # VGG feature extraction
        pool4 = self.pool4(x)      # (_, 512, 14, 14) stride 16: shallower, rich in detail
        pool5 = self.pool5(pool4)  # (_, 512, 7, 7)   stride 32: deeper, rich in semantics

        # Class scores at each level
        outputs = []
        score_fr = self.head(pool5)            # (_, nclass, 7, 7)   deep prediction
        score_pool4 = self.score_pool4(pool4)  # (_, nclass, 14, 14) shallow prediction

        # First upsampling: deep scores 2x up to the shallow resolution
        upscore2 = F.interpolate(score_fr, score_pool4.size()[2:], mode='bilinear', align_corners=True)  # (_, nclass, 14, 14)
        # Fusion: deep semantics + shallow detail
        fuse_pool4 = upscore2 + score_pool4  # (_, nclass, 14, 14)

        # Final upsampling: 16x to the input size
        out = F.interpolate(fuse_pool4, x.size()[2:], mode='bilinear', align_corners=True)  # (_, nclass, 224, 224)
        outputs.append(out)

        if self.aux:
            auxout = self.auxlayer(pool5)
            auxout = F.interpolate(auxout, x.size()[2:], mode='bilinear', align_corners=True)
            outputs.append(auxout)

        return tuple(outputs)


class FCN8s(nn.Module):
    """
    1. Fuses features from three levels: pool3 (stride 8), pool4 (stride 16)
       and pool5 (stride 32).
    2. Two skip connections with step-by-step upsampling give a finer segmentation.
    3. The fused score map has stride 8 relative to the input (the "8s" in the
       name) and is upsampled 8x back to the input size.
    """

    def __init__(self, nclass, backbone='vgg16', aux=False, pretrained_base=True,
                 norm_layer=nn.BatchNorm2d, **kwargs):
        super(FCN8s, self).__init__()
        self.aux = aux  # whether to use an auxiliary loss

        # Load the VGG16 backbone; only its convolutional part (features) is used.
        if backbone == 'vgg16':
            self.pretrained = vgg16(pretrained=pretrained_base).features
        else:
            raise RuntimeError('unknown backbone: {}'.format(backbone))

        # Split the VGG features (31 layers, indices 0-30) into three parts,
        # one per downsampling level. Each part ends with its max-pooling layer.
        self.pool3 = nn.Sequential(*self.pretrained[:17])    # layers 0-16: conv1_1 .. conv3_3 + MaxPool3
        self.pool4 = nn.Sequential(*self.pretrained[17:24])  # layers 17-23: conv4_1 .. conv4_3 + MaxPool4
        self.pool5 = nn.Sequential(*self.pretrained[24:])    # layers 24-30: conv5_1 .. conv5_3 + MaxPool5

        # Segmentation head
        self.head = _FCNHead(512, nclass, norm_layer)

        # 1x1 convolutions on the skip connections: map shallow features to class scores
        self.score_pool3 = nn.Conv2d(256, nclass, 1)  # pool3 outputs 256 channels
        self.score_pool4 = nn.Conv2d(512, nclass, 1)  # pool4 outputs 512 channels

        # Optional auxiliary head, used for auxiliary supervision during training
        if aux:
            self.auxlayer = _FCNHead(512, nclass, norm_layer)

        # Modules that need special treatment in training, e.g. a different learning rate
        self.exclusive = (['head', 'score_pool3', 'score_pool4', 'auxlayer'] if aux
                          else ['head', 'score_pool3', 'score_pool4'])

    def forward(self, x):
        """
        x: input image of shape (batch_size, 3, H, W)
        returns: tuple of score maps, each of shape (batch_size, nclass, H, W)
        """
        # ========== Stage 1: feature extraction ==========
        pool3 = self.pool3(x)      # stride 8  -> (batch, 256, H/8, W/8)
        pool4 = self.pool4(pool3)  # stride 16 -> (batch, 512, H/16, W/16)
        pool5 = self.pool5(pool4)  # stride 32 -> (batch, 512, H/32, W/32)

        outputs = []  # main output plus the optional auxiliary output

        # ========== Stage 2: class scores at three levels ==========
        score_fr = self.head(pool5)            # deep    -> (batch, nclass, H/32, W/32)
        score_pool4 = self.score_pool4(pool4)  # middle  -> (batch, nclass, H/16, W/16)
        score_pool3 = self.score_pool3(pool3)  # shallow -> (batch, nclass, H/8, W/8)

        # ========== Stage 3: first fusion (pool5 + pool4) ==========
        # Upsample the deep scores 2x to pool4 resolution: deep semantics + mid-level detail
        upscore2 = F.interpolate(score_fr, score_pool4.size()[2:], mode='bilinear', align_corners=True)
        fuse_pool4 = upscore2 + score_pool4  # -> (batch, nclass, H/16, W/16)

        # ========== Stage 4: second fusion (fuse_pool4 + pool3) ==========
        # Upsample the fused scores 2x to pool3 resolution: fused scores + shallow detail
        upscore_pool4 = F.interpolate(fuse_pool4, score_pool3.size()[2:], mode='bilinear', align_corners=True)
        fuse_pool3 = upscore_pool4 + score_pool3  # -> (batch, nclass, H/8, W/8)

        # ========== Stage 5: final upsampling ==========
        # Upsample the three-level fusion 8x to the original input size
        out = F.interpolate(fuse_pool3, x.size()[2:], mode='bilinear', align_corners=True)
        outputs.append(out)

        # ========== Stage 6: auxiliary output (optional) ==========
        if self.aux:
            # Predict directly from pool5 and upsample 32x
            auxout = self.auxlayer(pool5)
            auxout = F.interpolate(auxout, x.size()[2:], mode='bilinear', align_corners=True)
            outputs.append(auxout)

        return tuple(outputs)  # (main, aux) or (main,)


if __name__ == '__main__':
    from thop import profile

    device = torch.device('cpu')
    model = FCN8s(21)
    # model = FCN16s(21)
    # model = FCN32s(21)
    model = model.to(device)

    x = torch.randn(1, 3, 224, 224).to(device)  # dummy input image

    flops, params = profile(model, inputs=(x, ))
    print('FLOPs = ' + str(flops / 1000 ** 3) + 'G')
    print('Params = ' + str(params / 1000 ** 2) + 'M')
```
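As a rough cross-check on the printed parameter count, the trainable parameters of `FCN8s(21)` can be tallied by hand from the layer shapes. The sketch below does this in plain Python. It assumes the local `vgg16` follows the standard VGG16 layout without batch normalization (3×3 convolutions with bias) and that `aux=False`; the helper names are invented for this illustration, and a profiling tool may count slightly differently depending on what it includes.

```python
def conv_params(c_in, c_out, kernel, bias=True):
    """Parameters of a 2D convolution with a square kernel."""
    return c_in * c_out * kernel * kernel + (c_out if bias else 0)


# VGG16 configuration "D" (assumed backbone layout): 3x3 convs with bias, "M" = pooling.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_features_params(cfg=VGG16_CFG, in_channels=3):
    """Total parameters of the convolutional part of VGG16."""
    total, c_in = 0, in_channels
    for item in cfg:
        if item != "M":  # pooling layers have no parameters
            total += conv_params(c_in, item, 3)
            c_in = item
    return total


def fcn_head_params(in_channels, nclass):
    """Parameters of _FCNHead: 3x3 conv (no bias) + BatchNorm + 1x1 conv."""
    inter = in_channels // 4
    return (conv_params(in_channels, inter, 3, bias=False)
            + 2 * inter  # BatchNorm weight and bias
            + conv_params(inter, nclass, 1))


nclass = 21
backbone = vgg16_features_params()
head = fcn_head_params(512, nclass)
skips = conv_params(256, nclass, 1) + conv_params(512, nclass, 1)  # score_pool3 + score_pool4
print("backbone:", backbone)
print("head:", head)
print("FCN8s total (aux=False):", backbone + head + skips)
```

Almost all of the roughly 15.3M parameters sit in the VGG16 backbone; the head and the two skip-connection scoring layers together add only about 0.6M.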