
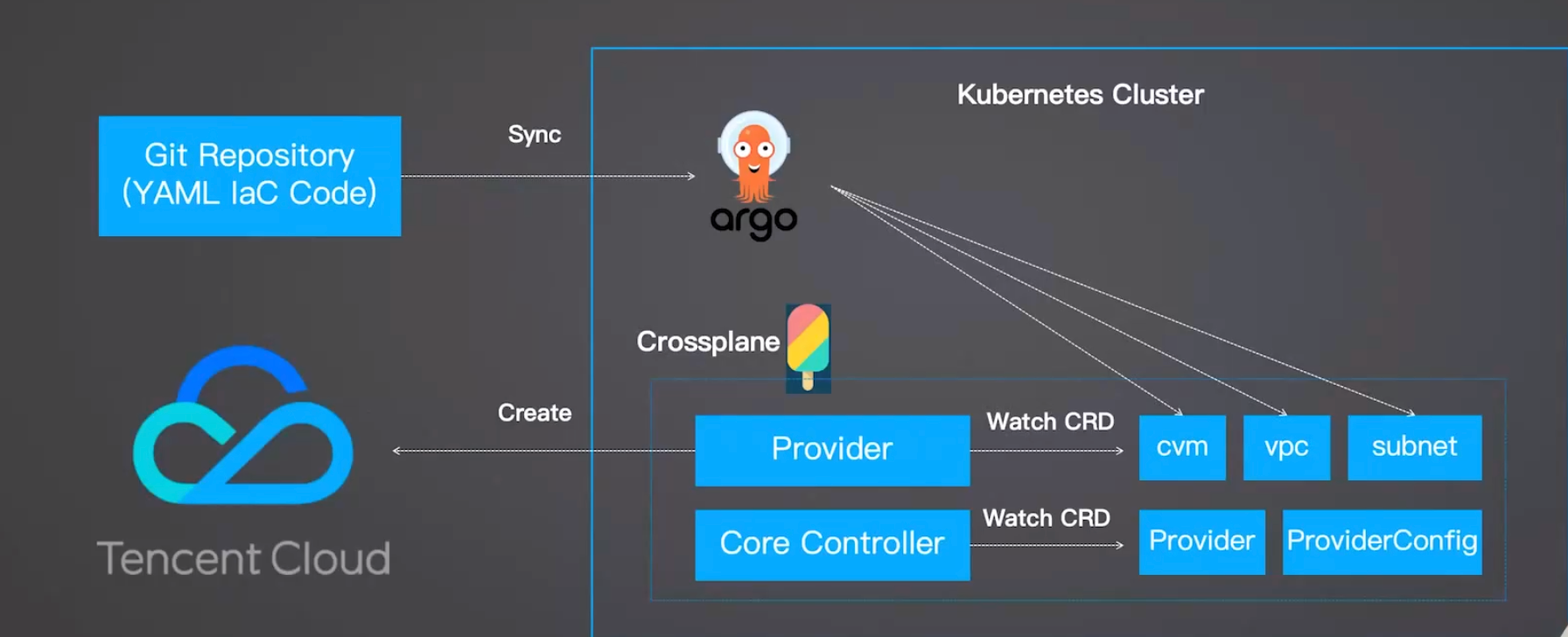

Use Crossplane to create cloud resources through Kubernetes YAML-style APIs, and use Argo CD to get a GitOps workflow.

Process overview

Prerequisites: Helm and Kubernetes tooling (kubectl) must be available on the local machine.

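- First, use Terraform to provision a CVM and install K3s on it, then copy the kubeconfig generated by K3s to the local machine so the cluster can be accessed from there.

- Then use the local Helm to install Crossplane on the remote K3s cluster.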
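Before starting, it can help to confirm that the local tools are installed. The snippet below is a minimal sketch: the function name `check_prereqs` is hypothetical, and the tool list (terraform, kubectl, helm, ssh, scp) is an assumption based on the commands used later in this walkthrough.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report which required command-line tools are missing.
# Returns 0 only if every tool passed as an argument is found on PATH.
check_prereqs() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Tool list assumed from the commands used in this walkthrough.
check_prereqs terraform kubectl helm ssh scp || echo "install the missing tools first" >&2
```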

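The flow is shown below:

Step 1: automated deployment with Terraform (run locally)

1. Run `terraform apply` on the local machine against the Terraform code.
2. Terraform calls the Tencent Cloud API.
3. Tencent Cloud creates the resources: a CVM in the Hong Kong region.
4. Terraform connects to the CVM over SSH (using the CVM public IP and the SSH key/password) and installs a K3s cluster on it.
5. K3s generates the cluster config `k3s.yaml` on the CVM (default path: `/etc/rancher/k3s/`).
6. Commands run remotely to edit `k3s.yaml`: replace the IP with the CVM public IP and adjust the file permissions.
7. The file is downloaded with SCP to the local `config.yaml` (the K3s cluster access credentials).

Step 2: deploy Crossplane with the local Helm (remote control)

8. Set the environment variable: `export KUBECONFIG=./config.yaml`.
9. Helm reads the cluster credentials.
10. Run the helm command locally.
11. Helm calls the remote K3s API server through `config.yaml`.
12. Helm pulls the Crossplane chart (from https://charts.crossplane.io/stable).
13. The resources are created: the Crossplane components (Pod/Service/Secret) run in the remote K3s cluster.

Key dependencies/files: a local Helm installation, the Crossplane Helm repository, the CVM public IP, and the SSH key/password.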

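The steps above are only preparation; readers can also set up the environment on their own. The real goal is to manage cloud resources with Crossplane rather than Terraform.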

Once the Crossplane provider is configured, you can use Crossplane to request a CVM with YAML.

K3s集群已装CrossPlane核心 2. 部署Provider插件+配置云凭证 3. kubectl apply -f 提交到K3s 4. 调用腾讯云API 5. 同步状态 前置准备

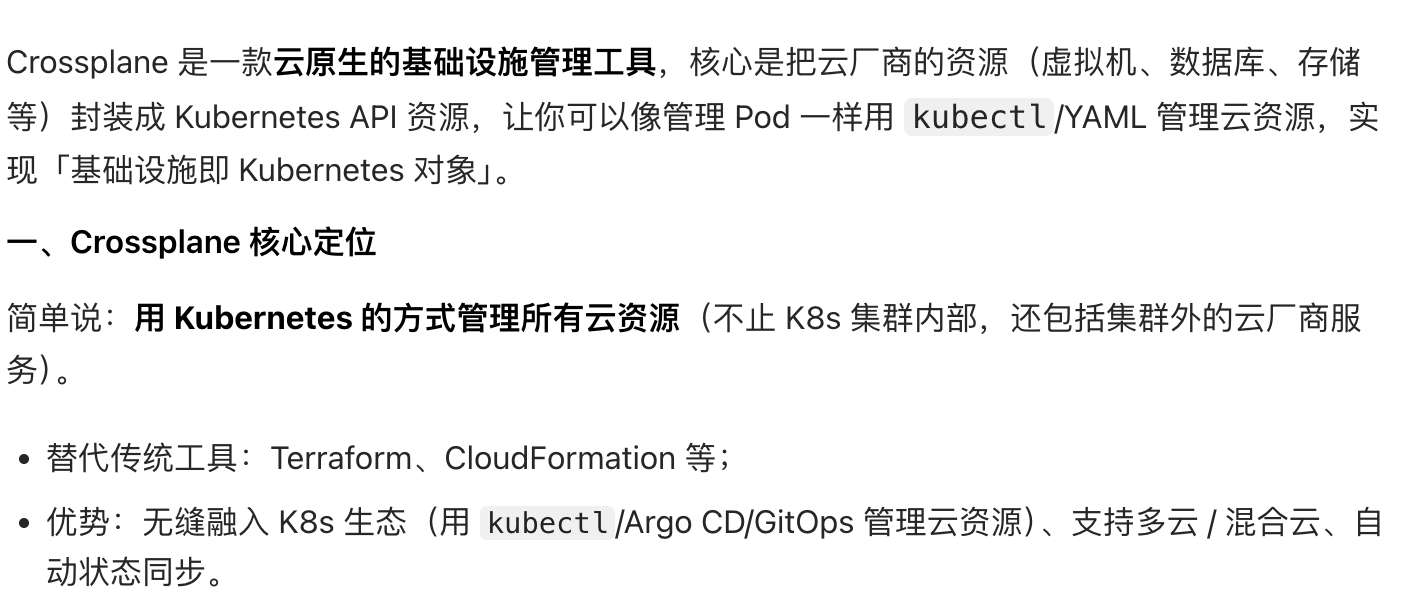

配置腾讯云Provider

CrossPlane获腾讯云操作权限

编写CVM YAML

CrossPlane控制器监听

腾讯云平台创建CVM

K3s内CVM资源状态更新

(Ready: True)

Tools used

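- Terraform provisions and manages cloud resources from the declarations in `.tf` files.

- Crossplane is an open-source framework that uses the Kubernetes platform to manage cloud resources by exposing cloud vendors' resources as Kubernetes APIs. In short, native Kubernetes resources are still handled by the built-in Kubernetes APIs, while cloud resources are exposed as custom resources installed by a Crossplane provider, whose controller calls the vendor's API.

- Argo CD is an open-source GitOps tool for continuous delivery. The Git repository it watches is the single source of truth: Argo CD continuously monitors the resources in the repository (YAML/Helm/Kustomize), compares them with the state of the Kubernetes cluster, and can automatically bring the cluster in line with Git, enabling declarative deployment and fully automated application releases.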

Hands-on deployment

The directory structure is as follows:

```text
.
├── crossplane
│   ├── kind
│   │   ├── kind.tf
│   │   ├── terraform.tfstate.backup
│   │   └── test-cluster-config
│   └── tencent
│       ├── cvm.tf
│       ├── graph.svg
│       ├── helm.tf
│       ├── k3s.tf
│       ├── outputs.tf
│       ├── README.md
│       ├── sshkey
│       │   ├── key
│       │   └── key.pub
│       ├── variables.tf
│       └── version.tf
└── tencent
    ├── cvm
    │   ├── cvm.yaml
    │   ├── subnet.yaml
    │   └── vpc.yaml
    ├── provider.yaml
    ├── providerConfig.yaml
    └── secret.yaml

7 directories, 20 files
```

Installing Crossplane

This part covers steps 1 and 2 of the flow above; the relevant files are everything under the crossplane directory.

```hcl
# cvm.tf

# Configure the TencentCloud provider. Unless secret_id/secret_key are set here,
# credentials are read from TENCENTCLOUD_SECRET_ID / TENCENTCLOUD_SECRET_KEY.
provider "tencentcloud" {
  region = var.region
}

# # Get availability zones
# data "tencentcloud_availability_zones_by_product" "default" {}

# Get availability zones
data "tencentcloud_availability_zones" "default" {
}

# Use the Ubuntu image ID copied directly from the console (make sure it is available)
data "tencentcloud_images" "default" {
  image_id = "img-mmytdhbn" # replace with the image ID you copied from the console
}

# Get available instance types
data "tencentcloud_instance_types" "default" {
  cpu_core_count = 2
  memory_size    = 4
}

# Create a web server
resource "tencentcloud_instance" "web" {
  instance_name     = "web server"
  availability_zone = "ap-hongkong-2" # "Hong Kong Zone 2" as selected in the console
  image_id          = "img-mmytdhbn"  # Ubuntu 22.04 image (select the same one in the console)
  instance_type     = "S5.MEDIUM4"    # "Standard S5, 2 cores 4 GiB" in the console
  system_disk_type  = "CLOUD_SSD"     # "General-purpose SSD cloud disk" in the console
  # availability_zone = data.tencentcloud_availability_zones.default.zones.0.name
  # image_id          = data.tencentcloud_images.default.images.0.image_id
  # instance_type     = data.tencentcloud_instance_types.default.instance_types.0.instance_type
  # system_disk_type  = "CLOUD_BASIC"
  system_disk_size           = 50
  allocate_public_ip         = true
  internet_max_bandwidth_out = 20
  security_groups            = [tencentcloud_security_group.default.id]
  count                      = 1
  password                   = var.password
}

# Create security group
resource "tencentcloud_security_group" "default" {
  name        = "tf-security-group"
  description = "make it accessible for both production and stage ports"
}

# # Create security group rule allow web and ssh request
# resource "tencentcloud_security_group_rule_set" "base" {
#   security_group_id = tencentcloud_security_group.default.id
#   ingress {
#     action      = "ACCEPT"
#     cidr_block  = "0.0.0.0/0"
#     protocol    = "TCP"
#     port        = "80,8080,6443,20,443"
#     description = "Create security group rule allow web request"
#   }
#   egress {
#     action      = "ACCEPT"
#     cidr_block  = "0.0.0.0/0"
#     protocol    = "TCP"
#     port        = "22,443,20"
#     description = "Create security group rule allow ssh request"
#   }
# }

# Security group rules. The narrower SSH (22) / K3s API (6443) rules are commented
# out; this demo allows ALL ingress and egress traffic, which is convenient but insecure.
resource "tencentcloud_security_group_lite_rule" "default" {
  security_group_id = tencentcloud_security_group.default.id
  ingress = [
    # "ACCEPT#0.0.0.0/0#22#TCP",
    # "ACCEPT#0.0.0.0/0#6443#TCP",
    "ACCEPT#0.0.0.0/0#ALL#ALL"
  ]
  egress = [
    "ACCEPT#0.0.0.0/0#ALL#ALL"
  ]
}
```

```hcl
# helm.tf
# Install the Crossplane chart once K3s is up and config.yaml has been downloaded
resource "helm_release" "crossplane" {
  depends_on = [module.k3s, null_resource.download_k3s_yaml]

  name             = "crossplane"
  repository       = "https://charts.crossplane.io/stable"
  chart            = "crossplane"
  namespace        = "crossplane"
  create_namespace = true
}
```

```hcl
# k3s.tf
module "k3s" {
  source = "xunleii/k3s/module"

  k3s_version              = "v1.28.11+k3s2"
  generate_ca_certificates = true
  drain_timeout            = "30s"
  global_flags = [
    "--tls-san ${tencentcloud_instance.web[0].public_ip}", # add the public IP to the API server certificate SANs
    "--write-kubeconfig-mode 644",
    "--disable=traefik",
    "--kube-controller-manager-arg bind-address=0.0.0.0",
    "--kube-proxy-arg metrics-bind-address=0.0.0.0",
    "--kube-scheduler-arg bind-address=0.0.0.0",
  ]

  servers = {
    "k3s" = {
      ip = tencentcloud_instance.web[0].private_ip
      connection = {
        timeout  = "60s"
        type     = "ssh"
        host     = tencentcloud_instance.web[0].public_ip
        password = var.password
        user     = "ubuntu"
      }
    }
  }
}

# resource "local_sensitive_file" "kubeconfig" {
#   content  = module.k3s.kube_config
#   filename = "${path.module}/config.yaml"
# }

resource "null_resource" "fetch_kubeconfig" {
  provisioner "remote-exec" {
    connection {
      type     = "ssh"
      host     = tencentcloud_instance.web[0].public_ip
      user     = "ubuntu"
      password = var.password
    }

    inline = [
      "mkdir -p ~/.ssh",
      # Append the local public key to authorized_keys on the CVM so scp can log in with the key
      "echo '${file("${path.module}/sshkey/key.pub")}' >> ~/.ssh/authorized_keys",
      "chmod 700 ~/.ssh",
      "chmod 600 ~/.ssh/authorized_keys",
      # Copy k3s.yaml to /tmp on the CVM and hand it to ubuntu so it can be edited and downloaded
      "sudo cp /etc/rancher/k3s/k3s.yaml /tmp/k3s.yaml",
      "sudo chown ubuntu:ubuntu /tmp/k3s.yaml",
      # Point the kubeconfig at the public IP instead of 127.0.0.1
      "sed -i 's/127.0.0.1/${tencentcloud_instance.web[0].public_ip}/g' /tmp/k3s.yaml"
    ]
  }

  depends_on = [module.k3s]
}

resource "null_resource" "download_k3s_yaml" {
  # Download the edited kubeconfig to ./config.yaml using the private key
  provisioner "local-exec" {
    command = "scp -i ${path.module}/sshkey/key -o StrictHostKeyChecking=no ubuntu@${tencentcloud_instance.web[0].public_ip}:/tmp/k3s.yaml ${path.module}/config.yaml"
  }

  depends_on = [null_resource.fetch_kubeconfig]
}
```
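If you prepare the environment by hand instead of with Terraform, the `fetch_kubeconfig` and `download_k3s_yaml` steps boil down to: read `k3s.yaml` from the CVM, point its `server` at the public IP, and save it locally. This is a minimal sketch assuming SSH access as `ubuntu` with sudo rights and the K3s default server URL `https://127.0.0.1:6443`; the function names are hypothetical. Unlike the Terraform `sed`, which replaces every `127.0.0.1`, this version rewrites only the server URL.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Rewrite the API server address in a kubeconfig from the loopback address
# that K3s writes by default to the given public IP.
rewrite_kube_server() {
  local file="$1" ip="$2"
  # -i.bak works with both GNU and BSD sed; the backup is removed afterwards.
  sed -i.bak "s#https://127\.0\.0\.1:6443#https://${ip}:6443#" "$file"
  rm -f "${file}.bak"
}

# Print the server URL(s) a kubeconfig points to, for a quick sanity check.
kube_server() {
  sed -n 's/^[[:space:]]*server:[[:space:]]*//p' "$1"
}

# Fetch k3s.yaml over SSH, fix the server address and store it locally.
fetch_kubeconfig() {
  local ip="$1" out="${2:-./config.yaml}"
  ssh "ubuntu@${ip}" 'sudo cat /etc/rancher/k3s/k3s.yaml' > "$out"
  rewrite_kube_server "$out" "$ip"
  chmod 600 "$out"
}
```

Usage: `fetch_kubeconfig <CVM public IP>` followed by `export KUBECONFIG="$(pwd)/config.yaml"`; `kube_server config.yaml` should then print the public IP rather than `127.0.0.1`.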

```hcl
# outputs.tf
output "public_ip" {
  description = "vm public ip address"
  value       = tencentcloud_instance.web[0].public_ip
}

output "kube_config" {
  description = "kubeconfig"
  value       = "${path.module}/config.yaml"
}

output "password" {
  description = "vm password"
  value       = var.password
}
```

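```hcl
# variables.tf
# secret_id / secret_key are placeholders: reference them in the provider block
# (e.g. secret_id = var.secret_id) or use the environment variables instead.
variable "secret_id" {
  default = "Your Access ID"
}

variable "secret_key" {
  default = "Your Access Key"
}

variable "region" {
  default = "ap-hongkong"
}

variable "password" {
  default = "password123"
}
```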

```hcl
# version.tf
terraform {
  required_providers {
    tencentcloud = {
      source = "tencentcloudstack/tencentcloud"
    }
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.14"
    }
  }
}

provider "helm" {
  kubernetes {
    # Kubeconfig downloaded from the CVM by null_resource.download_k3s_yaml
    config_path = "${path.module}/config.yaml"
  }
}
```

Creating a CVM with Crossplane

The files are in the tencent/ directory:

```yaml
# cvm/cvm.yaml
apiVersion: cvm.tencentcloud.crossplane.io/v1alpha1
kind: Instance
metadata:
  name: example-cvm
spec:
  forProvider:
    instanceName: "test-crossplane-cvm"
    availabilityZone: "ap-hongkong-2"
    instanceChargeType: "SPOTPAID"
    imageId: "img-mmytdhbn"
    instanceType: "S5.MEDIUM4"
    systemDiskType: "CLOUD_BSSD"
    vpcIdRef:
      name: "example-cvm-vpc"
    subnetIdRef:
      name: "example-cvm-subnet"
```

```yaml
# cvm/subnet.yaml
apiVersion: vpc.tencentcloud.crossplane.io/v1alpha1
kind: Subnet
metadata:
  name: example-cvm-subnet
spec:
  forProvider:
    availabilityZone: "ap-hongkong-2"
    cidrBlock: "10.2.2.0/24"
    name: "test-crossplane-cvm-subnet"
    vpcIdRef:
      name: "example-cvm-vpc"
```

```yaml
# cvm/vpc.yaml
apiVersion: vpc.tencentcloud.crossplane.io/v1alpha1
kind: VPC
metadata:
  name: example-cvm-vpc
spec:
  forProvider:
    cidrBlock: "10.2.0.0/16"
    name: "test-crossplane-cvm-vpc"
```

```yaml
# provider.yaml
apiVersion: pkg.crossplane.io/v1
kind: Provider
metadata:
  name: provider-tencentcloud
spec:
  package: xpkg.upbound.io/crossplane-contrib/provider-tencentcloud:v0.8.3
```

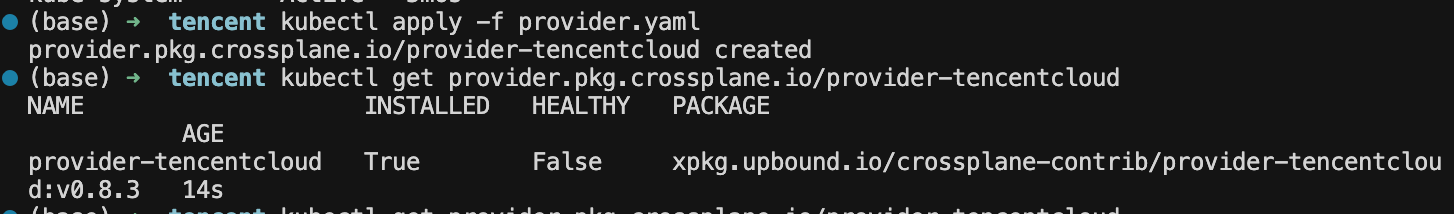

```yaml
# providerConfig.yaml
apiVersion: tencentcloud.crossplane.io/v1alpha1
kind: ProviderConfig
metadata:
  name: default
spec:
  credentials:
    secretRef:
      key: credentials
      name: example-creds
      namespace: crossplane
    source: Secret
```

```yaml
# secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: example-creds
  namespace: crossplane
type: Opaque
stringData:
  credentials: |
    {
      "secret_id": "xxxxxxxxxxxxx",
      "secret_key": "xxxxxxxxxxxxxx",
      "region": "ap-hongkong"
    }
```

Walkthrough

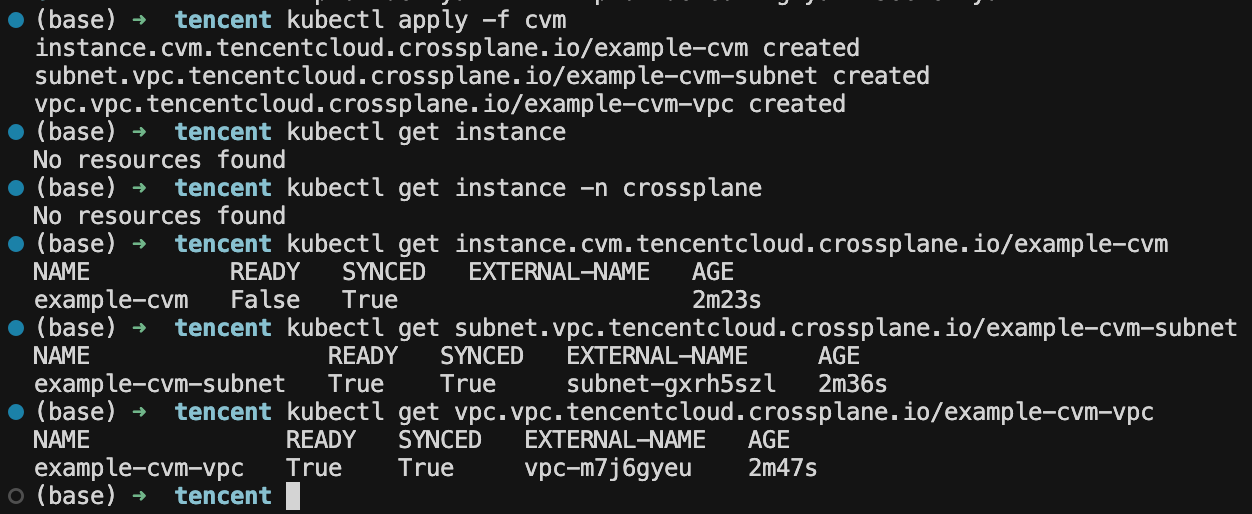

- First, run `terraform init && terraform apply` in the crossplane/tencent directory.

On success, the output looks like this:

```text
Apply complete! Resources: 25 added, 0 changed, 0 destroyed.

Outputs:

kube_config = "./config.yaml"
password = "password123"
public_ip = "43.154.35.231"
```

Then run `export KUBECONFIG="$(pwd)/config.yaml"` so that the local kubectl can reach the remote cluster; Helm will use the same cluster. List the cluster's namespaces with the following command:

```bash
(base) ➜ tencent kubectl get ns
NAME              STATUS   AGE
crossplane        Active   101s
default           Active   5m6s
kube-node-lease   Active   5m6s
kube-public       Active   5m6s
kube-system       Active   5m6s
```

- Apply the YAML files defined above so that Crossplane creates the resources.

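```bash
# Deploy the Tencent Cloud provider first
kubectl apply -f provider.yaml
# Then create the Secret holding the credentials the provider needs
kubectl apply -f secret.yaml
# Bind the credentials to the provider
kubectl apply -f providerConfig.yaml
# Create the VM (applies every manifest in the cvm/ directory)
kubectl apply -f cvm
```

During deployment, check the provider with `kubectl get provider.pkg.crossplane.io/provider-tencentcloud`. Wait until its INSTALLED and HEALTHY columns show `True` before applying providerConfig.yaml, because the ProviderConfig kind only exists once the provider package is installed.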
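Because Argo CD later syncs this repository from Git, committing `secret.yaml` with real keys would leak them. The sketch below is one way around that, assuming the keys are exported as `TENCENTCLOUD_SECRET_ID` / `TENCENTCLOUD_SECRET_KEY` (the same variable names the Terraform provider reads): it renders the same Secret from environment variables and pipes it to `kubectl apply -f -`, and it scripts the apply order, waiting for the provider to become Healthy first. The function names are hypothetical, and the directory argument is assumed to be the `tencent/` directory.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Render the credentials Secret (same shape as secret.yaml) from environment
# variables; aborts with an error if either key is unset.
render_creds_secret() {
  local region="${1:-ap-hongkong}"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: example-creds
  namespace: crossplane
type: Opaque
stringData:
  credentials: |
    {
      "secret_id": "${TENCENTCLOUD_SECRET_ID:?TENCENTCLOUD_SECRET_ID is not set}",
      "secret_key": "${TENCENTCLOUD_SECRET_KEY:?TENCENTCLOUD_SECRET_KEY is not set}",
      "region": "${region}"
    }
EOF
}

# Apply everything in dependency order from the given directory (assumed: tencent/).
apply_all() {
  local dir="${1:-.}"
  kubectl apply -f "$dir/provider.yaml"
  # The ProviderConfig kind is registered by the provider package, so wait for it.
  kubectl wait provider.pkg.crossplane.io/provider-tencentcloud \
    --for=condition=Healthy --timeout=300s
  render_creds_secret | kubectl apply -f -
  kubectl apply -f "$dir/providerConfig.yaml"
  kubectl apply -f "$dir/cvm"
}
```

Usage: `apply_all ./tencent`. Secret values containing double quotes would break the embedded JSON, so this sketch assumes plain alphanumeric keys.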

Finally, check in the Tencent Cloud console: there are indeed two instances, one created by Terraform and one by Crossplane.

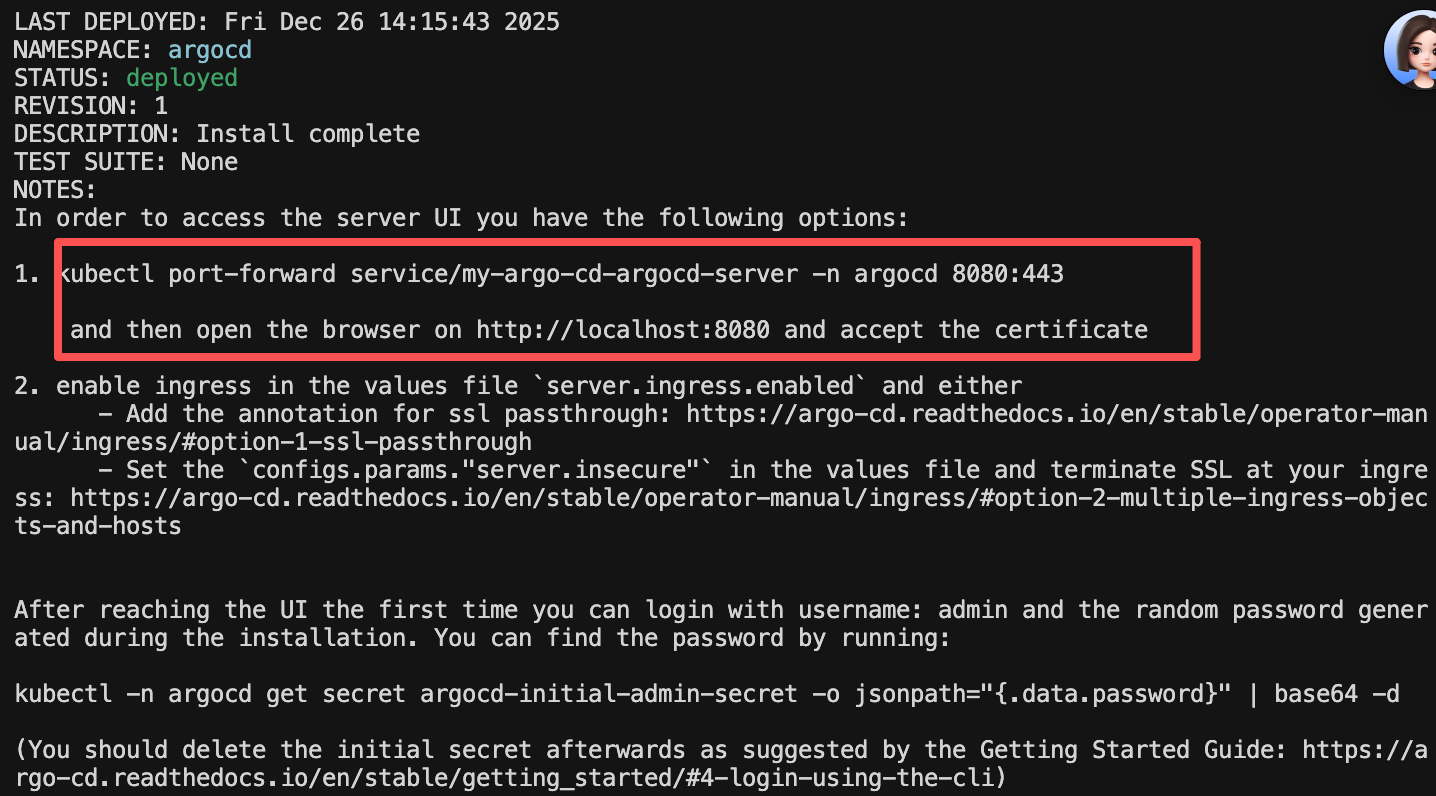
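Besides checking the console, you can wait from the cluster side until Crossplane reports the managed resources as ready. A minimal sketch: the resource names come from the manifests above, the kubectl resource names (`vpc`, `subnet`, `instance` plus their API groups) are assumed from the `kind`/`apiVersion` fields, and `wait_for_cvm` is a hypothetical helper. `kubectl get managed` lists all Crossplane managed resources with their READY and SYNCED columns.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Block until the VPC, the subnet and the CVM all report the Ready condition.
wait_for_cvm() {
  local timeout="${1:-600s}" res
  for res in \
    vpc.vpc.tencentcloud.crossplane.io/example-cvm-vpc \
    subnet.vpc.tencentcloud.crossplane.io/example-cvm-subnet \
    instance.cvm.tencentcloud.crossplane.io/example-cvm; do
    kubectl wait "$res" --for=condition=Ready --timeout="$timeout"
  done
  # Summary of every Crossplane managed resource (READY / SYNCED columns).
  kubectl get managed
}
```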

- Both machines are now created and everything is ready. Next, install Argo CD with Helm.

Add the Argo CD Helm repository (https://argoproj.github.io/argo-helm) and install the chart:

```bash
(base) ➜ tencent helm repo add argo https://argoproj.github.io/argo-helm
"argo" has been added to your repositories
# Install a release named my-argo-cd from the chart argo/argo-cd (repository "argo", chart "argo-cd");
# -n argocd sets the namespace, --create-namespace creates it if it does not exist
(base) ➜ tencent helm install my-argo-cd argo/argo-cd -n argocd --create-namespace
```

Then follow the instructions printed by the chart after installation.

bash

#先获取密码:

(base) ➜ tencent kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d

hFZiLQkZVBTHsF6x%

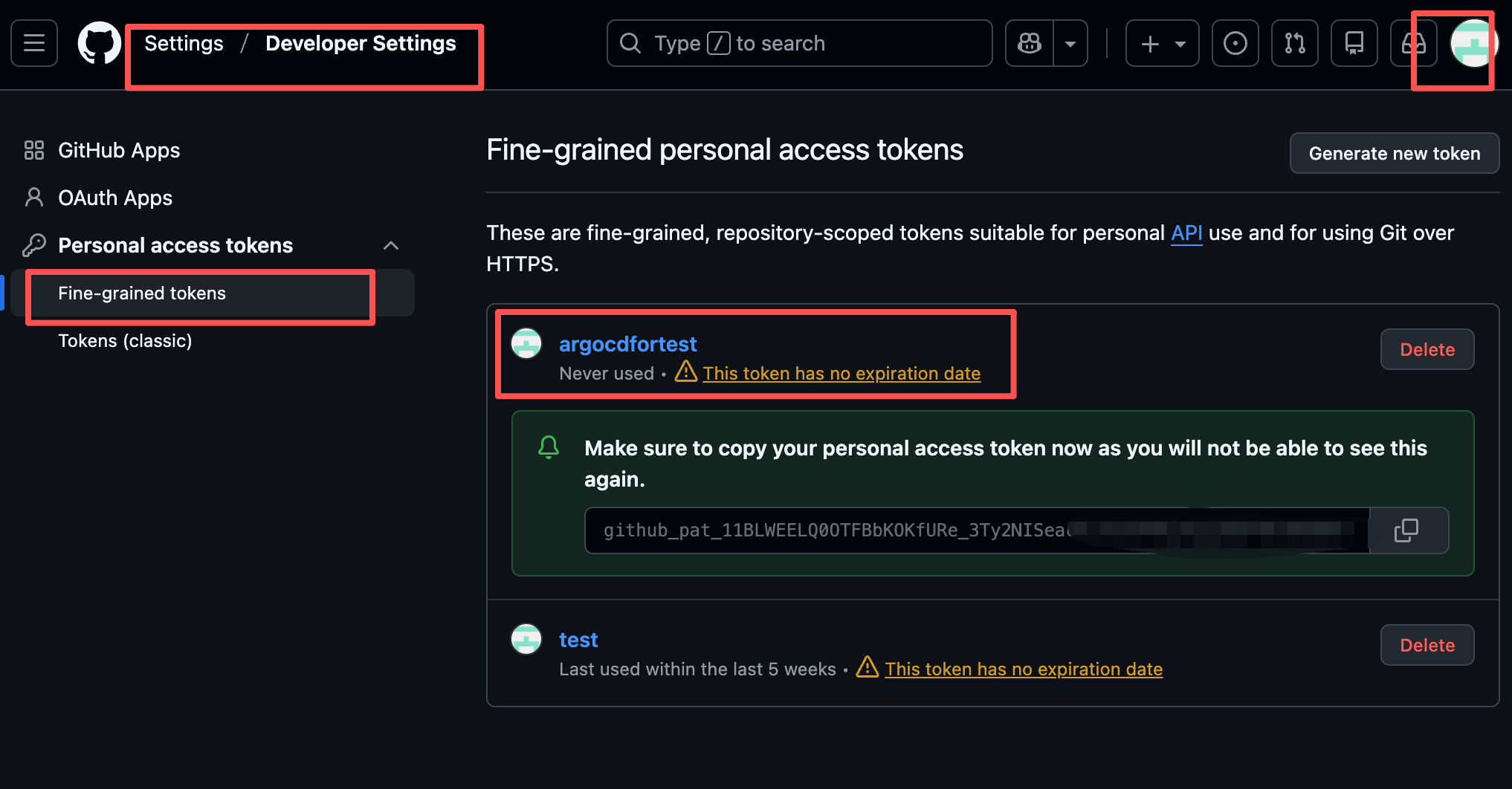

kubectl port-forward service/my-argo-cd-argocd-server -n argocd 8080:443安装好后,给指定的仓库设置一个密钥,给argocd绑定仓库用:

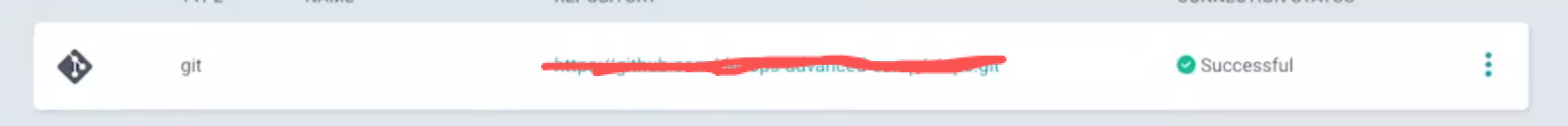

As shown below:

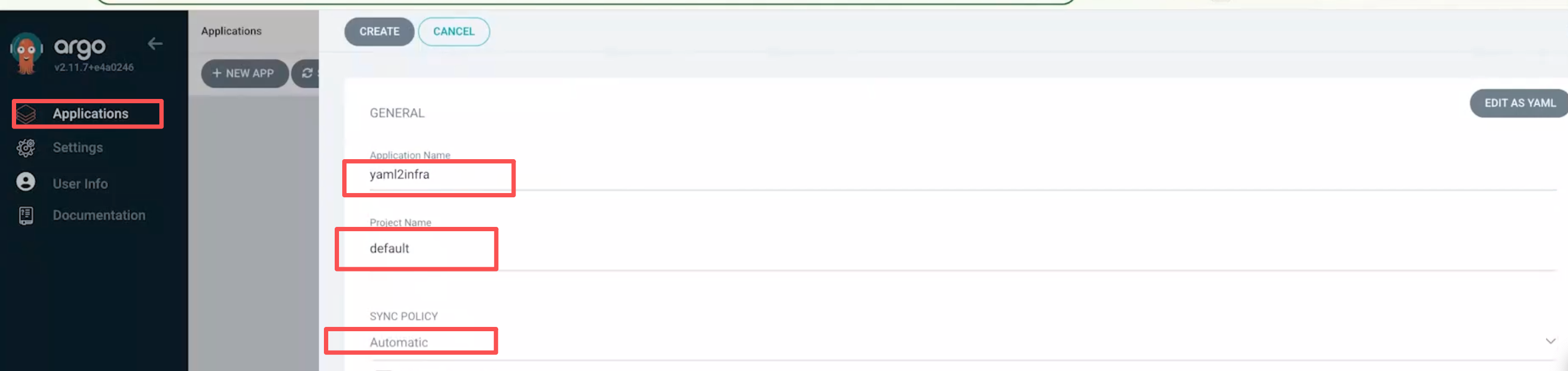

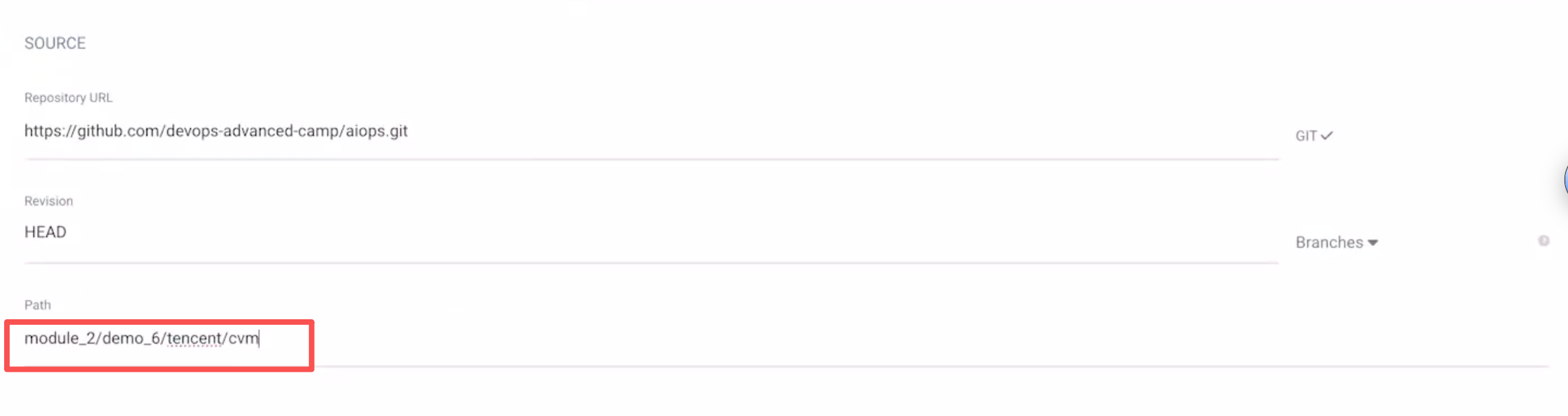
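Connecting the repository can also be done declaratively: Argo CD treats Secrets in its own namespace that carry the label `argocd.argoproj.io/secret-type: repository` as repository credentials. This sketch assumes an SSH deploy key; the Secret name `gitops-repo`, the repository URL and the key path are placeholders, and `render_repo_secret` is hypothetical.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Render an Argo CD repository Secret for an SSH Git URL and a private key file.
render_repo_secret() {
  local url="$1" key_file="$2"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: gitops-repo
  namespace: argocd
  labels:
    argocd.argoproj.io/secret-type: repository
type: Opaque
stringData:
  type: git
  url: ${url}
  sshPrivateKey: |
$(sed 's/^/    /' "$key_file")
EOF
}
```

Usage: `render_repo_secret git@github.com:<user>/<repo>.git <path to private key> | kubectl apply -f -`. Keep the private key out of the Git repository that Argo CD watches.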

Once connected, create an Application and specify the directory it should watch:

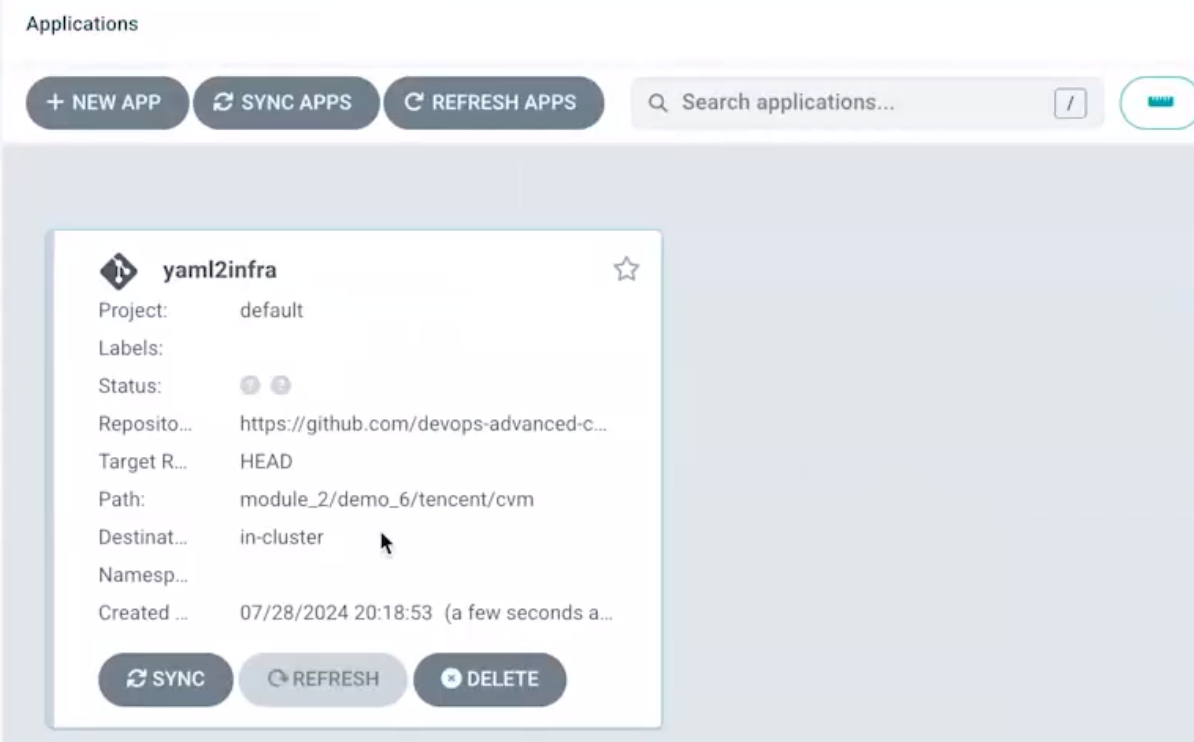

Then click CREATE.

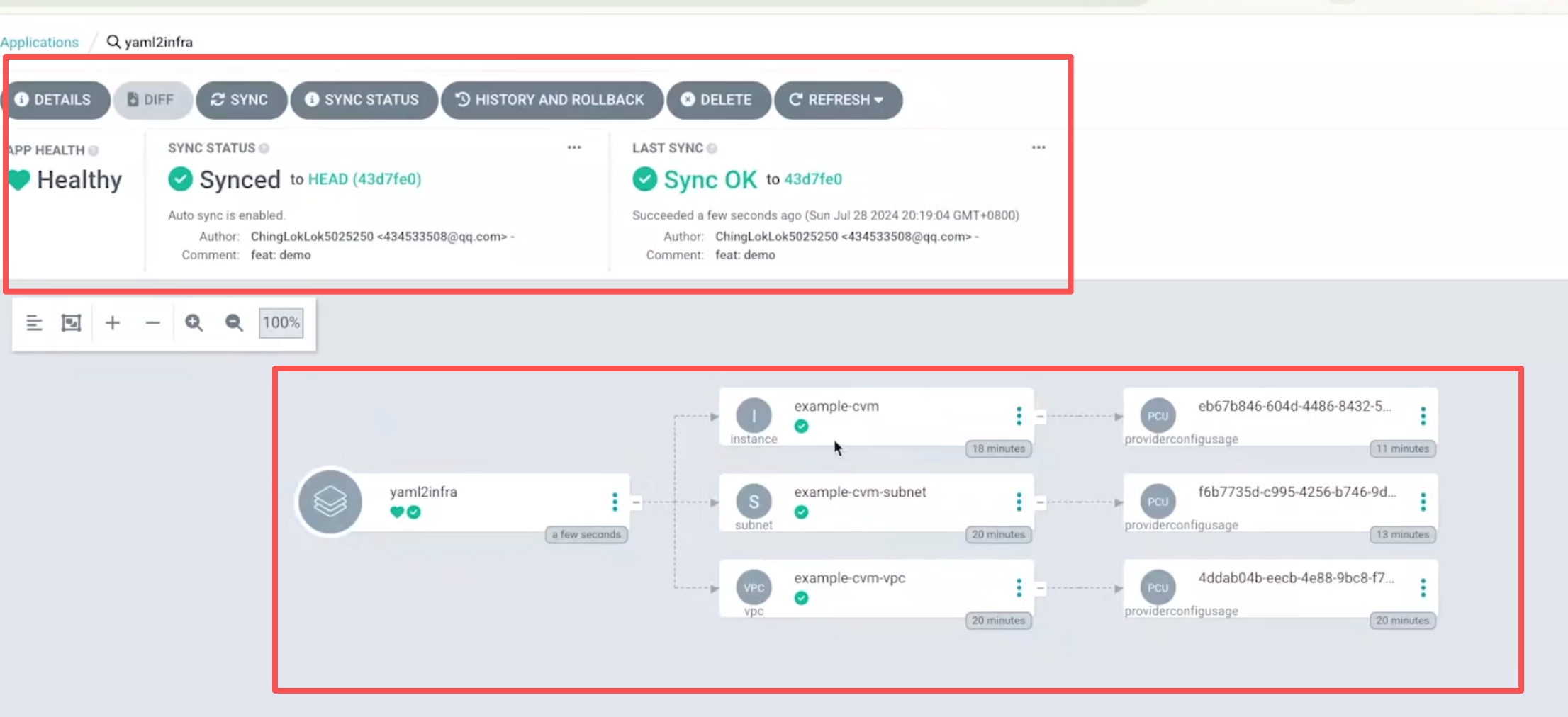

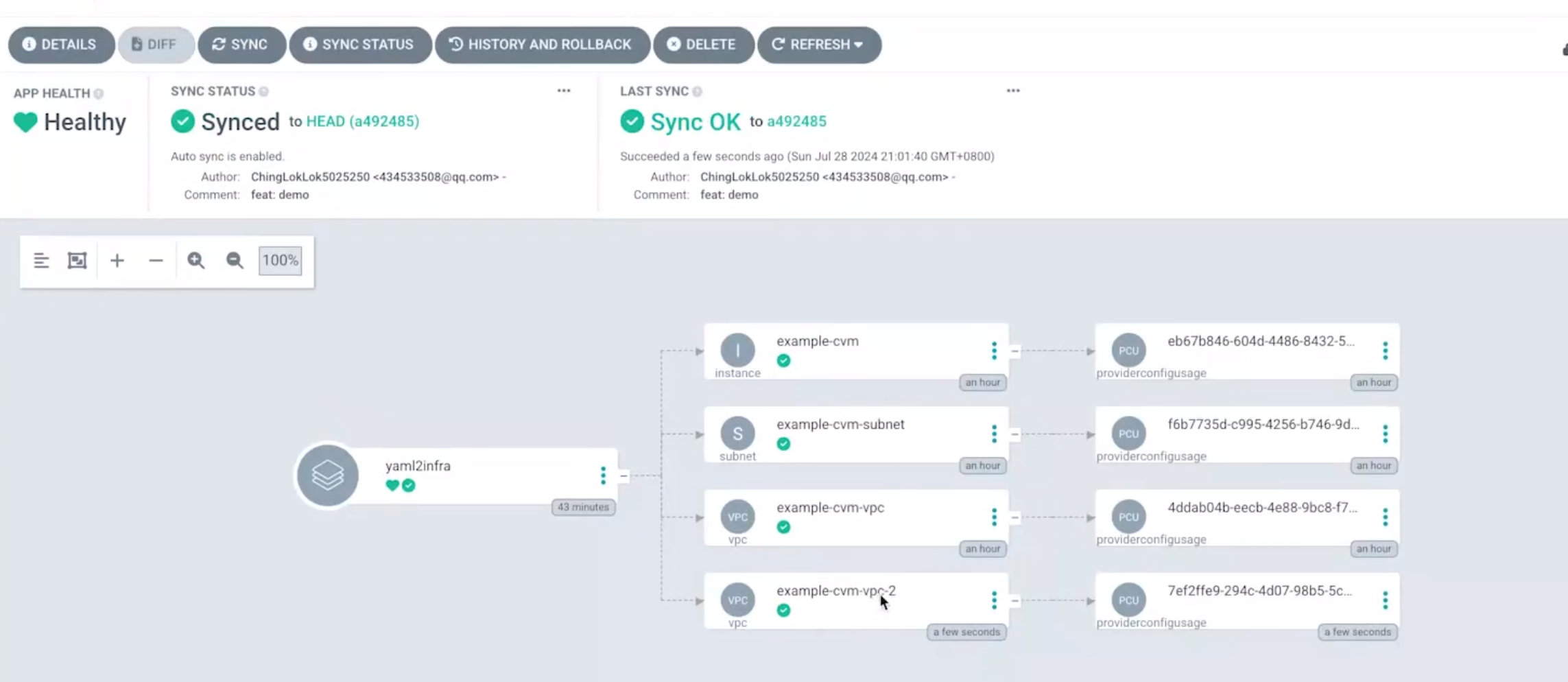

From then on, Argo CD continuously watches the repository and keeps the cluster in sync with it, giving continuous delivery.
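The Application created in the UI can also be kept in Git as a manifest. This is a sketch: the Application name `crossplane-cvm`, the repository URL and the path are placeholders, and `render_application` is hypothetical. `selfHeal: true` makes Argo CD revert manual changes in the cluster; `prune` is left `false` here because pruning would delete the Crossplane resources, and with them the cloud instances, when their YAML is removed from Git, so enable it only if that is what you want.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Render an Argo CD Application that syncs one directory of a Git repository
# into the cluster Argo CD runs in.
render_application() {
  local repo_url="$1" path="$2"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: crossplane-cvm
  namespace: argocd
spec:
  project: default
  source:
    repoURL: ${repo_url}
    targetRevision: HEAD
    path: ${path}
  destination:
    server: https://kubernetes.default.svc
    namespace: default
  syncPolicy:
    automated:
      prune: false
      selfHeal: true
EOF
}
```

Usage: `render_application git@github.com:<user>/<repo>.git tencent/cvm | kubectl apply -f -`.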

If we push another CVM manifest (a YAML file like cvm.yaml) to the GitHub repository, Argo CD detects the change and Crossplane requests a new VM:

The overall flow is as follows:

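Prerequisites (already in place)

1. The K3s cluster is deployed inside a Tencent Cloud CVM.
2. The Crossplane core components are installed in it (with `helm install`).

Step 1: configure the Crossplane Tencent Cloud provider

3. Write the Provider YAML (`pkg.crossplane.io/v1`) on the local machine.
4. Submit it with `kubectl apply -f`.
5. Crossplane pulls the provider package (`provider-tencentcloud`) from the Upbound Marketplace.
6. Crossplane deploys the provider controller as a Pod (in the `crossplane` namespace in this setup).
7. Write the Secret holding the Tencent Cloud credentials (SecretId/SecretKey).
8. Submit it with `kubectl apply -f`.
9. Write the ProviderConfig YAML, which references the Secret.
10. Submit it with `kubectl apply -f`.
11. The provider loads the credentials.

Step 2: request a Tencent Cloud CVM with YAML

12. Write the CVM resource YAML (an `Instance` from `cvm.tencentcloud.crossplane.io/v1alpha1`).
13. Submit it with `kubectl apply -f`.
14. The Crossplane controller watches the custom resource.
15. It calls the Tencent Cloud API.
16. Tencent Cloud creates the CVM, which is then managed by Crossplane.
17. The status is synced back to Kubernetes, and the CVM resource in K3s reports `Ready: True`.

Optional: GitOps integration (managed by Argo CD)

18. Push the CVM YAML to the Git repository.
19. Argo CD syncs automatically.
20. The sync triggers Crossplane to create the CVM.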

The overall architecture is shown below: