Contents

[Kuboard: multi-cluster k8s management platform](#kuboard k8s多集群管理平台)

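Cluster environment preparation

Run the following preparation steps on every node.

1. Set the hostname

# Run each command only on the node it names

hostnamectl set-hostname master01

hostnamectl set-hostname worker01

hostnamectl set-hostname worker02

2. Configure local name resolution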

bash

echo "10.0.0.106 master01" >> /etc/hosts

echo "10.0.0.107 worker01" >> /etc/hosts

echo "10.0.0.108 worker02" >> /etc/hosts

3. Enable bridge netfilter

bash

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

# The br_netfilter module must be loaded for the bridge settings to exist

modprobe br_netfilter && lsmod | grep br_netfilter
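`modprobe` only loads the module for the current boot. One way to have it loaded again after a reboot is a file under /etc/modules-load.d/, which systemd-modules-load reads at startup. A minimal sketch, assuming a systemd-based system; the helper name `write_modules_load` and the file name `k8s.conf` are illustrative and not part of the original steps:

```shell
# write_modules_load FILE MODULE...
# Hypothetical helper: writes one kernel module name per line to FILE,
# the format systemd-modules-load expects under /etc/modules-load.d/.
write_modules_load() {
  local file="$1"
  shift
  printf '%s\n' "$@" > "$file"
}

# Example (as root):
#   write_modules_load /etc/modules-load.d/k8s.conf br_netfilter
```

The same helper could also list the ip_vs modules from the IPVS step if they should be loaded at boot.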

# Apply the settings from the file written above

sysctl -p /etc/sysctl.d/k8s.conf

4. Configure IPVS

bash

yum -y install ipset ipvsadm

# Write the IPVS modules to load into a script file

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

# Run the script to load the modules, then confirm they are loaded

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack

5. Disable swap

bash

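# Disable swap for the current boot

swapoff -a

# Disable swap permanently by commenting out the swap entries in /etc/fstab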

sed -ri 's/.*swap.*/#&/' /etc/fstab
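The sed command above comments out every line that contains "swap", including lines that are already commented out or that only mention the word. A minimal sketch of a more conservative variant, which comments only active entries whose filesystem type field is swap; `disable_swap_entries` is a hypothetical helper name, and the sketch assumes the usual whitespace-separated fstab layout:

```shell
# disable_swap_entries FILE
# Hypothetical helper: prefixes "#" to fstab lines whose third field
# (the filesystem type) is "swap", skipping lines already commented out.
disable_swap_entries() {
  local file="$1"
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$file" > "$file.tmp" \
    && cat "$file.tmp" > "$file" \
    && rm -f "$file.tmp"
}

# Example (as root):
#   disable_swap_entries /etc/fstab
```

Running it a second time leaves the file unchanged, so it is safe to repeat on a node that was already prepared.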

grep ".*swap.*" /etc/fstab

6. Prepare the containerd environment

1) Add the containerd yum repository

bash

tee /etc/yum.repos.d/containerd.repo <<EOF

[containerd]

name=containerd

baseurl=https://download.docker.com/linux/centos/7/\$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://download.docker.com/linux/centos/gpg

EOF

2) Install containerd

bash

[root@master01 ~]#yum install containerd.io-1.6.20-3.1.el7.x86_64 -y

# Check the containerd version

[root@master01 ~]#ctr version

Client:

Version: 1.6.20

Revision: 2806fc1057397dbaeefbea0e4e17bddfbd388f38

Go version: go1.19.7

3) Generate the containerd configuration file

bash

containerd config default | tee /etc/containerd/config.toml

4) Configure registry mirrors

bash

# Create the docker.io directory

mkdir -p /etc/containerd/certs.d/docker.io

# Create hosts.toml

cat > /etc/containerd/certs.d/docker.io/hosts.toml << EOF

server = "https://docker.io"

[host."https://te0awxei.mirror.aliyuncs.com"] # my personal Aliyun mirror address; use your own instead of copying it

capabilities = ["pull", "resolve"]

[host."https://docker.1ms.run"]

capabilities = ["pull", "resolve"]

[host."https://docker.xuanyuan.me"]

capabilities = ["pull", "resolve"]

[host."https://docker.mirrors.ustc.edu.cn"]

capabilities = ["pull", "resolve"]

[host."https://dockerproxy.com"]

capabilities = ["pull", "resolve"]

EOF

5) Modify the configuration file

bash

# Enable the systemd cgroup driver

sed -i 's#SystemdCgroup = false#SystemdCgroup = true#' /etc/containerd/config.toml

# Replace the download address of the pause image

sed -i 's#sandbox_image = "registry.k8s.io/pause:3.6"#sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"#' /etc/containerd/config.toml
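The sed command above only takes effect when the current value is exactly registry.k8s.io/pause:3.6; other containerd versions may ship a different default. A minimal sketch of a variant that rewrites the line whatever its current value; `set_sandbox_image` is a hypothetical helper name, and the sketch assumes config.toml contains a single `sandbox_image = ...` line:

```shell
# set_sandbox_image FILE IMAGE
# Hypothetical helper: rewrites the sandbox_image line in a containerd
# config.toml to IMAGE, preserving the line's indentation.
set_sandbox_image() {
  local file="$1" image="$2"
  sed "s#^\([[:space:]]*sandbox_image = \).*#\1\"${image}\"#" "$file" \
    > "$file.tmp" \
    && cat "$file.tmp" > "$file" \
    && rm -f "$file.tmp"
}

# Example (as root):
#   set_sandbox_image /etc/containerd/config.toml \
#     registry.aliyuncs.com/google_containers/pause:3.6
```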

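# Point containerd at the registry configuration directory: edit this section of /etc/containerd/config.toml by hand

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d" # change config_path to the registry configuration directory

6) Specify the containerd socket address

bash

# In a k8s environment, the kubelet talks to containerd through the containerd.sock file to manage containers; the file below points crictl at the same socket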

cat <<EOF | tee /etc/crictl.yaml

runtime-endpoint: unix:///var/run/containerd/containerd.sock

image-endpoint: unix:///var/run/containerd/containerd.sock

timeout: 10

debug: false

EOF

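# Parameters

# runtime-endpoint: socket file of the container runtime

# image-endpoint: socket file of the image service

# timeout: timeout, in seconds, for calls to the container runtime or image service

# debug: debug mode for crictl; false disables it, true adds debug logging to the output, which helps with troubleshooting

7) Start containerd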

bash

systemctl daemon-reload && systemctl start containerd && systemctl enable containerd && systemctl status containerd

7. Configure the k8s yum repository

bash

cat > /etc/yum.repos.d/k8s.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

8. Install kubeadm, kubectl, and kubelet

bash

yum -y install kubeadm-1.27.0 kubelet-1.27.0 kubectl-1.27.0

9. Configure the kubelet cgroup driver

bash

cat > /etc/sysconfig/kubelet <<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

EOF

# Enable kubelet at boot; kubeadm starts it during cluster initialization

systemctl enable kubelet

Deploy the k8s cluster with kubeadm

1. Initialize the cluster

bash

# Generate the cluster initialization config file

[root@master01 ~]#kubeadm config print init-defaults > kubeadm-config.yaml

[root@master01 ~]#ll

total 8

drwxr-xr-x 2 root root 19 May 13 2025 a

-rw-r--r-- 1 root root 6 May 13 2025 b.txt

-rw-r--r-- 1 root root 807 Jan 8 22:56 kubeadm-config.yaml

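# Edit the following fields in kubeadm-config.yaml

advertiseAddress: 10.0.0.106 # this node's IP address (master01 in the hosts entries, and the address used by kubeadm join)

name: master01 # this node's hostname

imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers # repository for the cluster images, switched to Aliyun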
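These three fields can also be set with a script rather than by hand. A minimal sketch, assuming the generated file has one `advertiseAddress:` key, one indented `name:` key under nodeRegistration, and a top-level `imageRepository:` key; `patch_kubeadm_config` is a hypothetical helper name:

```shell
# patch_kubeadm_config FILE ADDRESS NODE_NAME REPO
# Hypothetical helper: sets advertiseAddress, name and imageRepository
# in a kubeadm config file, keeping each line's indentation.
patch_kubeadm_config() {
  local file="$1" address="$2" node="$3" repo="$4"
  sed \
    -e "s#^\([[:space:]]*advertiseAddress:\).*#\1 ${address}#" \
    -e "s#^\([[:space:]]*name:\).*#\1 ${node}#" \
    -e "s#^\(imageRepository:\).*#\1 ${repo}#" \
    "$file" > "$file.tmp" \
    && cat "$file.tmp" > "$file" \
    && rm -f "$file.tmp"
}

# Example, using master01's address from the hosts entries:
#   patch_kubeadm_config kubeadm-config.yaml 10.0.0.106 master01 \
#     registry.cn-hangzhou.aliyuncs.com/google_containers
```

It is worth reviewing the file afterwards, since the patterns match on key names only and do not parse the YAML.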

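# Initialize the cluster

[root@master01 ~]# kubeadm init --config kubeadm-config.yaml

# Tip: if a node runs into problems, reset that node with the following command

kubeadm reset

# Then run the commands that kubeadm init prints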

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=/etc/kubernetes/admin.conf

# Join the worker nodes to the cluster (run on each worker)

kubeadm join 10.0.0.106:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:155083b12e7bde93625bdad29c82b442eb31709817c8b60456a93fa6c5196dff

[root@worker01 ~]#kubeadm join 10.0.0.106:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:155083b12e7bde93625bdad29c82b442eb31709817c8b60456a93fa6c5196dff

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# Verify that the nodes have joined (they stay NotReady until a network plugin is deployed)

[root@master01 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01 NotReady control-plane 3m36s v1.27.0

worker01 NotReady <none> 85s v1.27.0

worker02 NotReady <none> 79s v1.27.0

2. Deploy the Calico network

bash

# Download the Calico manifest

[root@master01 ~]#wget https://raw.githubusercontent.com/projectcalico/calico/v3.24.1/manifests/calico.yaml

# Create the Calico network from the manifest

[root@master01 ~]#kubectl apply -f calico.yaml

# Check the Calico pods in the kube-system namespace and wait until they are Running

[root@master01 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-784cc4bcb7-gnwhl 1/1 Running 0 43m

calico-node-8tnfj 1/1 Running 1 (3m26s ago) 43m

calico-node-h8lpk 1/1 Running 0 43m

calico-node-v4r4h 0/1 Running 1 (3m27s ago) 43m

coredns-65dcc469f7-hg25j 1/1 Running 1 (3m27s ago) 21h

coredns-65dcc469f7-kp9hc 1/1 Running 1 (3m27s ago) 21h

etcd-master01 1/1 Running 1 (3m28s ago) 21h

kube-apiserver-master01 1/1 Running 1 (3m27s ago) 21h

kube-controller-manager-master01 1/1 Running 1 (3m27s ago) 21h

kube-proxy-s6hbs 1/1 Running 1 (3m26s ago) 21h

kube-proxy-x5sqb 1/1 Running 1 (3m27s ago) 21h

kube-proxy-xm5w9 1/1 Running 1 (3m18s ago) 21h

kube-scheduler-master01 1/1 Running 1 (3m27s ago) 21h

# List the cluster nodes and check that every STATUS is Ready

[root@master01 ~]#kubectl get nodes

NAME STATUS ROLES AGE VERSION

master01 Ready control-plane 21h v1.27.0

worker01 Ready <none> 21h v1.27.0

worker02 Ready <none> 21h v1.27.0
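Instead of re-running `kubectl get nodes` by hand, the check can be polled. A minimal sketch; `count_not_ready` and `wait_for_nodes` are hypothetical helper names, and the 5-second interval and default of 60 attempts are arbitrary choices:

```shell
# count_not_ready: reads `kubectl get nodes --no-headers` output on stdin
# and prints how many nodes have a STATUS other than exactly "Ready".
count_not_ready() {
  awk 'NF && $2 != "Ready" { n++ } END { print n + 0 }'
}

# wait_for_nodes [ATTEMPTS]: polls every 5 seconds until every node is
# Ready; returns 1 if that has not happened after ATTEMPTS tries.
wait_for_nodes() {
  local attempts="${1:-60}" nodes
  while [ "$attempts" -gt 0 ]; do
    nodes="$(kubectl get nodes --no-headers 2>/dev/null)"
    if [ -n "$nodes" ] && \
       [ "$(printf '%s\n' "$nodes" | count_not_ready)" -eq 0 ]; then
      return 0
    fi
    attempts=$((attempts - 1))
    sleep 5
  done
  return 1
}
```

kubectl also has a built-in alternative, `kubectl wait --for=condition=Ready nodes --all --timeout=300s`, which may be simpler when no custom handling is needed.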

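Note: if a pod fails to start, the most likely cause is that containerd could not pull the image; try a different registry mirror.

# Basic troubleshooting commands

# See which node each pod was scheduled to

kubectl get pods -n kube-system -o wide

# Show the details of a pod

kubectl describe pod -n kube-system <pod-name>

Deploy Nginx as a test

Note that, unlike docker, containerd has a namespace concept: each image is visible only in its own namespace, and k8s currently uses the k8s.io namespace.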

bash

[root@master01 ~]#ctr ns ls

NAME LABELS

default

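k8s.io

For managing images and containers in k8s, crictl is the more convenient command. ctr is the CLI that ships with containerd, while crictl is a client for the CRI (Container Runtime Interface), the interface k8s uses to talk to containerd.

bash

# List images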

[root@worker01 ~]#crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/calico/cni v3.24.1 67fd9ab484510 87.4MB

docker.io/calico/kube-controllers v3.24.1 f9c3c1813269c 31.1MB

docker.io/calico/node v3.24.1 75392e3500e36 80.2MB

registry.aliyuncs.com/google_containers/pause 3.6 6270bb605e12e 302kB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.27.0 5f82fc39fa816 23.9MB

1. Create an nginx pod

bash

[root@master01 ~]#kubectl create deployment nginx --image=docker.io/library/nginx:1.20.0

deployment.apps/nginx created

# Check the pod status

[root@master01 ~]#kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-5765467855-47w9f 1/1 Running 0 76s

2. Expose the pod port to external networks

bash

[root@master01 ~]#kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

# Check the service port

[root@master01 ~]#kubectl get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 22h

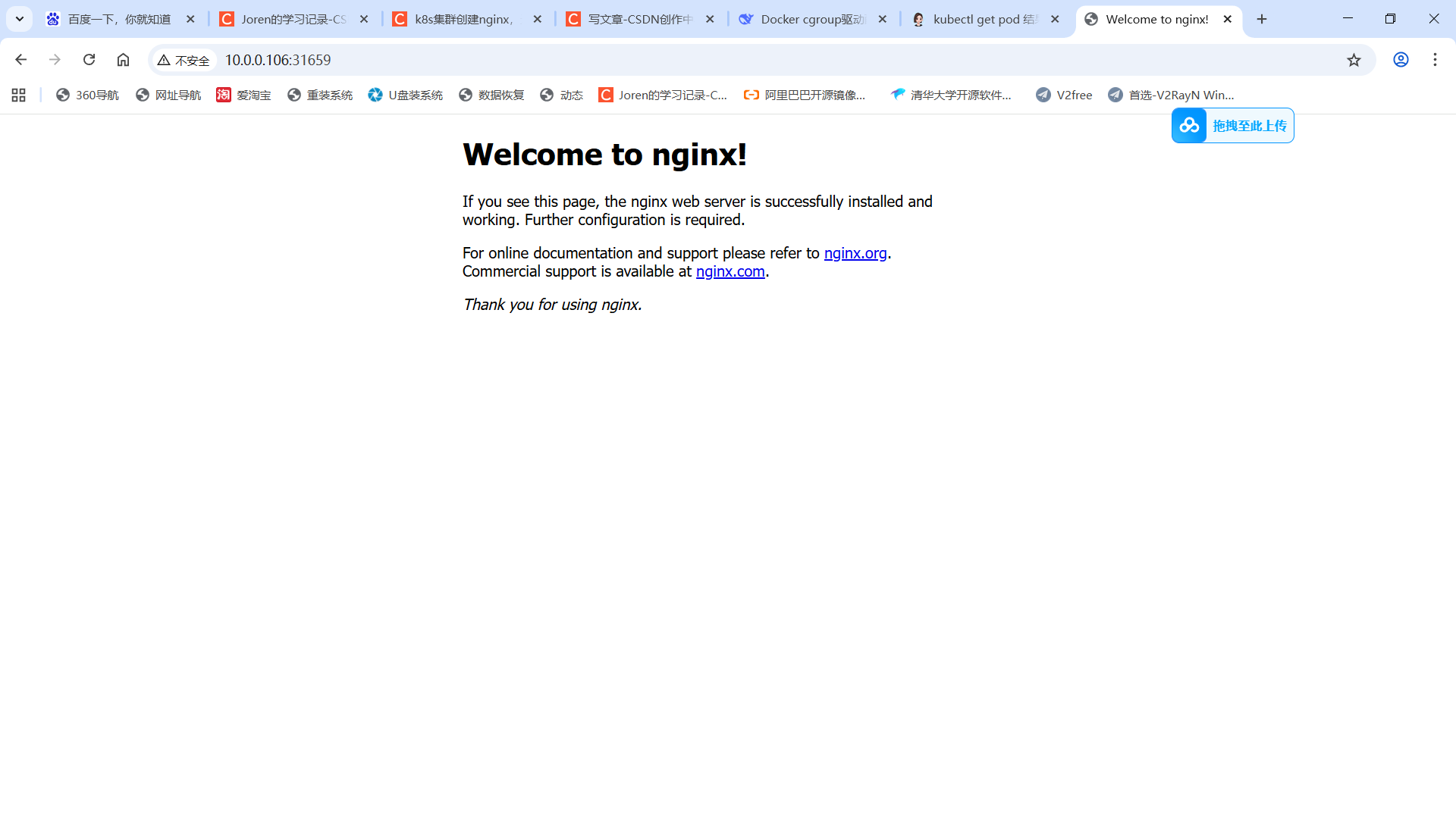

nginx NodePort 10.101.244.208 <none> 80:31659/TCP 9s浏览器访问测试:http://任意节点ip:31659
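The same check can be scripted instead of using a browser. A minimal sketch that takes the node port out of the PORT(S) column and requests the page with curl; `node_port_of` and `check_nodeport` are hypothetical helper names, and curl is assumed to be installed:

```shell
# node_port_of PORTS: extracts the node port from a PORT(S) value such as
# "80:31659/TCP", as printed by `kubectl get service`.
node_port_of() {
  local ports="$1"
  ports="${ports#*:}"             # drop the service port and the colon
  printf '%s\n' "${ports%%/*}"    # drop the protocol suffix
}

# check_nodeport HOST PORTS: requests http://HOST:<node port>/ and prints
# the HTTP status code returned by the server.
check_nodeport() {
  curl -s -o /dev/null -w '%{http_code}\n' "http://$1:$(node_port_of "$2")"
}

# Example, using worker01's address from the hosts entries:
#   check_nodeport 10.0.0.107 80:31659/TCP
```

The node port is assigned by the cluster, so it will usually differ from 31659 on another installation.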

Kuboard: multi-cluster k8s management platform

Reference: https://kuboard.cn/install/v3/install-built-in.html#%E9%83%A8%E7%BD%B2%E8%AE%A1%E5%88%92

Prepare one more server with docker installed, and install Kuboard on it with docker.

bash

docker run -d \

--restart=unless-stopped \

--privileged \

--name=kuboard \

-p 80:80/tcp \

-p 10081:10081/tcp \

-e KUBOARD_ENDPOINT="http://服务器内网ip:80" \

-e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \

-v /root/kuboard-data:/data \

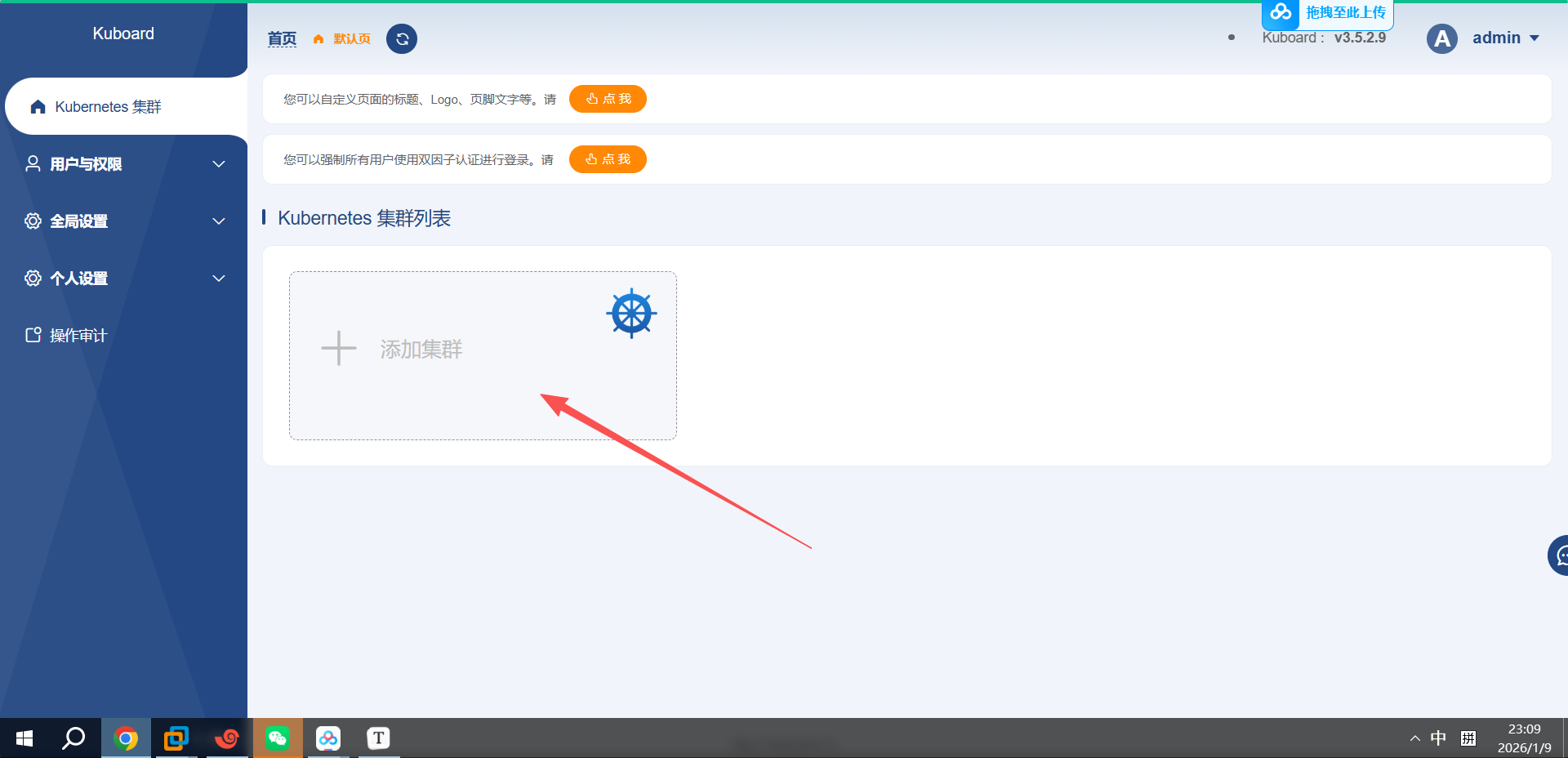

eipwork/kuboard:v3浏览器访问:http://服务器ip

-

用户名:admin

-

密码:Kuboard123 #大写的K

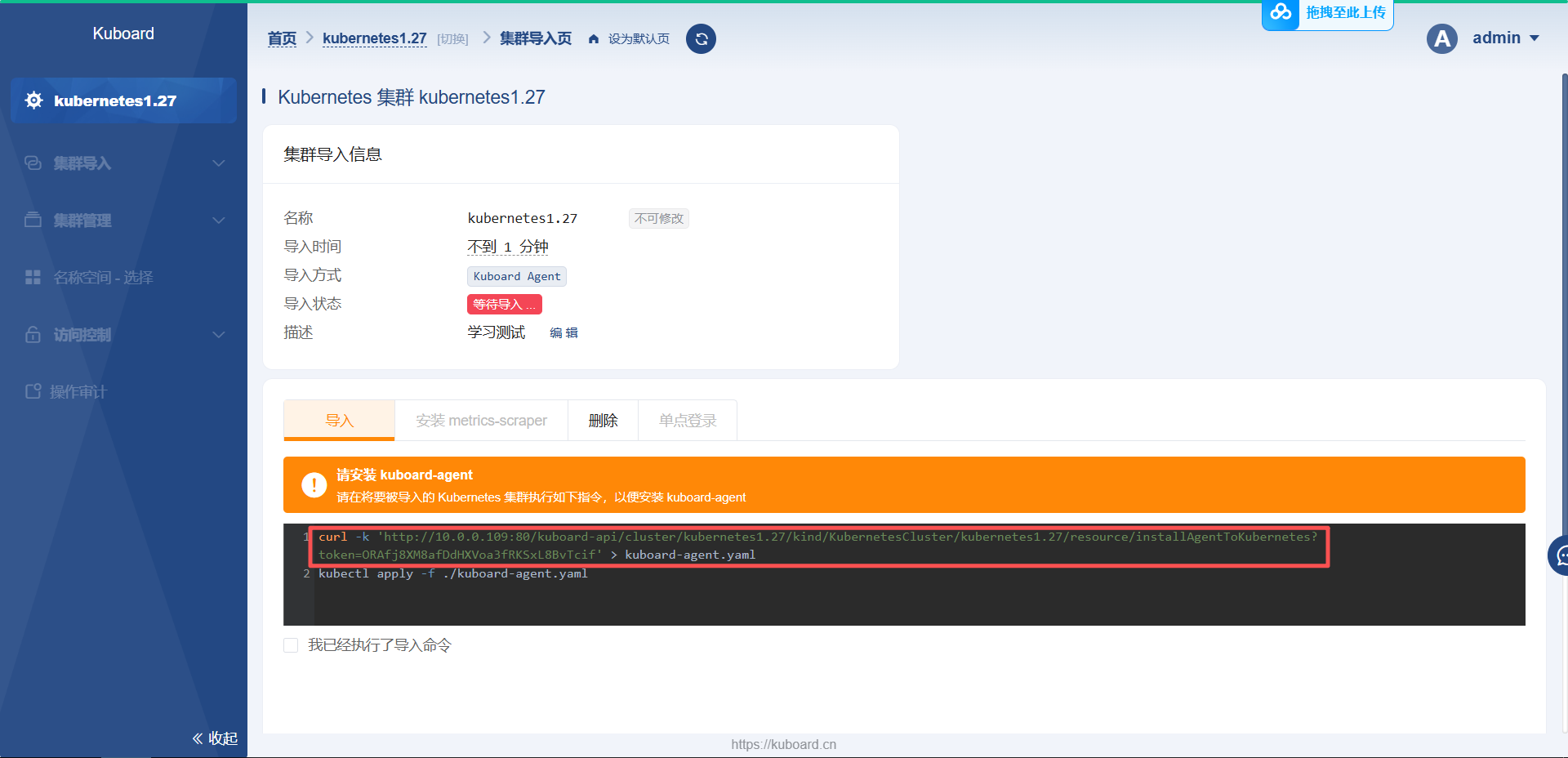

选择Agent

在master节点生成agent文件,直接复制即可

使用kubectl执行文件

bash

[root@master01 ~]#kubectl apply -f kuboard-agent.yaml

namespace/kuboard created

serviceaccount/kuboard-admin created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-admin-crb created

serviceaccount/kuboard-viewer created

clusterrolebinding.rbac.authorization.k8s.io/kuboard-viewer-crb created

deployment.apps/kuboard-agent-wo2ujz created

deployment.apps/kuboard-agent-wo2ujz-2 created

# List the namespaces

[root@master01 ~]#kubectl get ns

NAME STATUS AGE

default Active 24h

kube-node-lease Active 24h

kube-public Active 24h

kube-system Active 24h

kuboard Active 61s #创建完成

#查看pod是否running

[root@master01 ~]#cat /etc/cokubectl get pod -n kuboard

NAME READY STATUS RESTARTS AGE

kuboard-agent-wo2ujz-2-b57cc66d8-whhv2 1/1 Running 0 5m58s

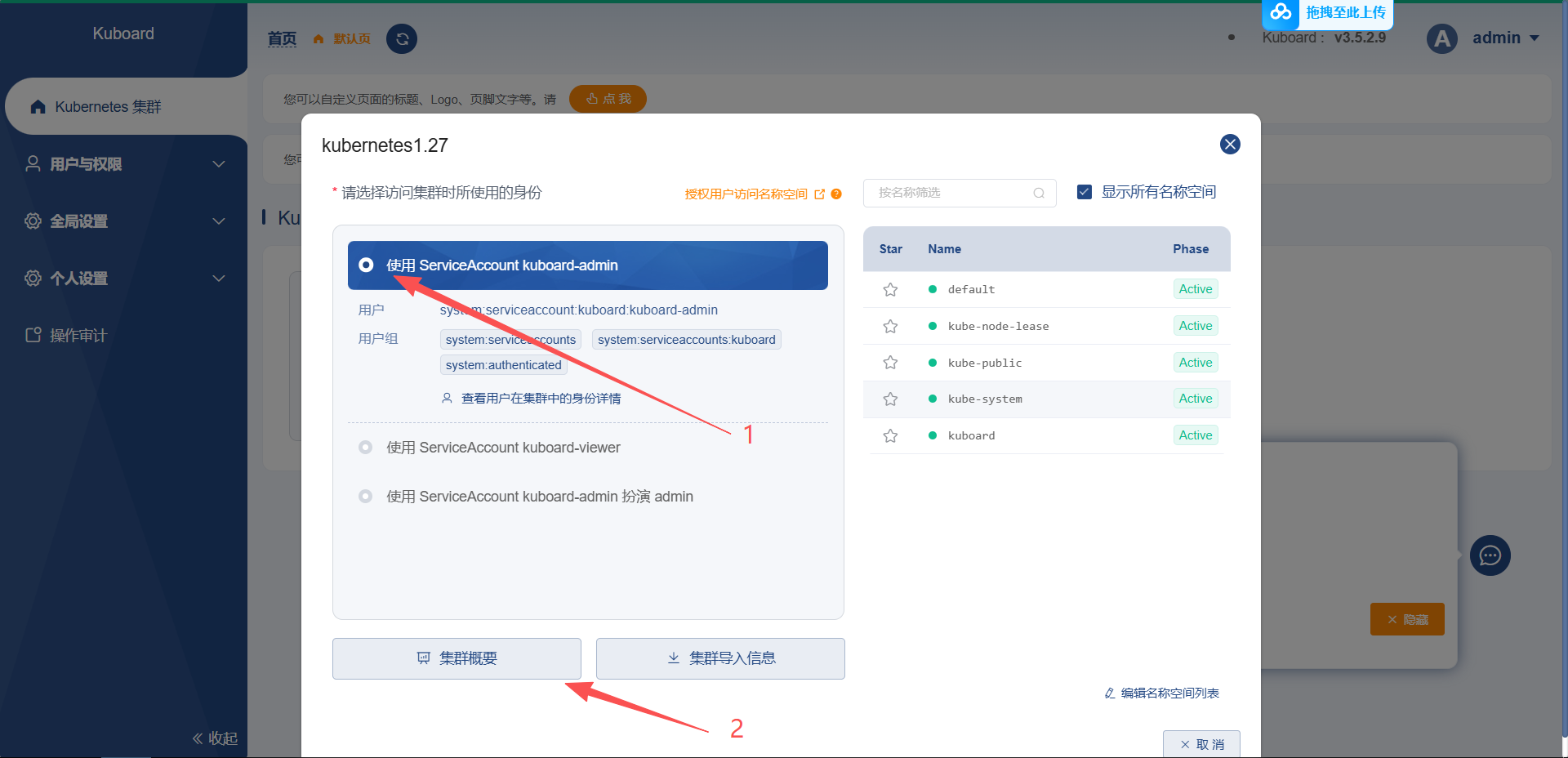

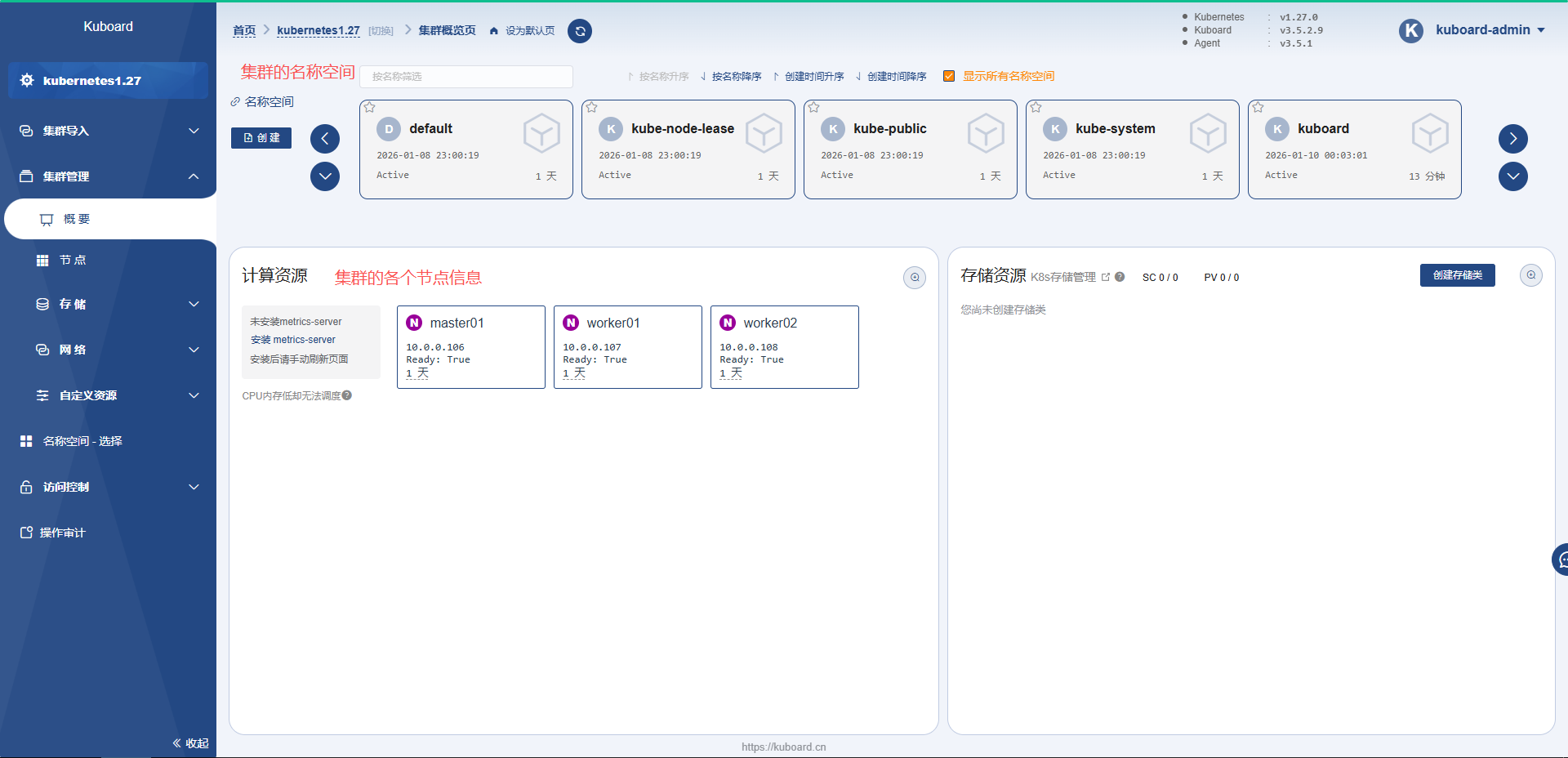

kuboard-agent-wo2ujz-f776c9856-87tn4 1/1 Running 0 5m58s返回kuboard的web页面,选择使用admin用户,然后点击集群概要,就可以查看集群相关信息了