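Contents

[🎯 How Message Transactions Burned Me](#🎯 How Message Transactions Burned Me)

[✨ Summary](#✨ Summary)

[1. Why Choose a Message Queue?](#1. Why Choose a Message Queue?)

[1.1 Starting with the Pain of 2PC](#1.1 Starting with the Pain of 2PC)

[1.2 Advantages of Message Queues](#1.2 Advantages of Message Queues)

[2. The Three Core Patterns in Detail](#2. The Three Core Patterns in Detail)

[2.1 Local Message Table (Most Reliable)](#2.1 Local Message Table (Most Reliable))

[2.2 Transactional Messages (a RocketMQ Specialty)](#2.2 Transactional Messages (a RocketMQ Specialty))

[2.3 Best-Effort Notification (Simplest)](#2.3 Best-Effort Notification (Simplest))

[3. Guaranteeing Message Reliability](#3. Guaranteeing Message Reliability)

[3.1 No Loss: Producer-Side Guarantees](#3.1 No Loss: Producer-Side Guarantees)

[3.2 No Duplicates: Consumer-Side Idempotency](#3.2 No Duplicates: Consumer-Side Idempotency)

[3.3 Ordering: Handling It per Business Scenario](#3.3 Ordering: Handling It per Business Scenario)

[4. Enterprise Case Studies](#4. Enterprise Case Studies)

[4.1 End-to-End E-Commerce Order Flow](#4.1 End-to-End E-Commerce Order Flow)

[4.2 Reconciliation System Design](#4.2 Reconciliation System Design)

[5. Performance Optimization in Practice](#5. Performance Optimization in Practice)

[5.1 Batch Processing](#5.1 Batch Processing)

[5.2 Asynchronous Processing](#5.2 Asynchronous Processing)

[5.3 Performance Test Comparison](#5.3 Performance Test Comparison)

[6. Monitoring and Alerting](#6. Monitoring and Alerting)

[6.1 Key Metrics](#6.1 Key Metrics)

[6.2 Health Checks](#6.2 Health Checks)

[7. Solutions to Common Problems](#7. Solutions to Common Problems)

[7.1 Message Loss](#7.1 Message Loss)

[7.2 Duplicate Messages](#7.2 Duplicate Messages)

[8. Selection Guide](#8. Selection Guide)

[8.1 Message Queue Comparison](#8.1 Message Queue Comparison)

[8.2 My "Iron Rules" for Message Transactions](#8.2 My "Iron Rules" for Message Transactions)

[9. Final Words](#9. Final Words)

[📚 Recommended Reading](#📚 Recommended Reading)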

🎯 How Message Transactions Burned Me

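We once made order payment asynchronous and planned to decouple the services with a message queue. On launch day it broke: users paid successfully, but order status never updated. The investigation showed that a message send had failed and there was no retry mechanism.

Last year, for inventory deduction, we used RocketMQ transactional messages. Everything worked in the test environment. During a big sales event in production, half the messages got stuck in the "Half Message" state. After three days of digging, the cause turned out to be an undersized memory configuration on the RocketMQ Broker.

Last month, on a reconciliation system built on Kafka, message ordering problems corrupted data. Worse, during one network blip a message was consumed three times, and inventory was deducted two extra times.

These incidents taught me this: a programmer who doesn't understand how message transactions work is planting mines with the message queue, and sooner or later they go off.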

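✨ Summary

Eventual consistency built on message queues is a practical solution for distributed transactions. This article examines three patterns in depth: the local message table, transactional messages, and best-effort notification. It analyzes the technical difficulties along three dimensions: reliability, idempotency, and ordering. Through hands-on cases with RocketMQ, Kafka, and RabbitMQ, it uncovers the root causes of message loss, duplicate consumption, and out-of-order delivery, along with their solutions. Drawing on performance test data and production experience, it offers a guide to designing enterprise-grade message transaction architectures.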

1. Why Choose a Message Queue?

1.1 Starting with the Pain of 2PC

First, a painful 2PC case from our payment system:

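```java
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;

// Payment system implemented with 2PC
@Service
public class PaymentService2PC {

    // Assumes a JTA transaction manager that enlists every resource below as an XA participant
    @Transactional
    public PaymentResult pay(PaymentRequest request) {
        // 1. Create the payment record (local resource)
        Payment payment = paymentRepository.save(convertToPayment(request));

        // 2. Deduct stock (remote call, XA transaction)
        inventoryService.deduct(request.getItems());

        // 3. Update the order status (remote call, XA transaction)
        orderService.updateStatus(request.getOrderId(), OrderStatus.PAID);

        // 4. Send a notification (JMS, XA transaction)
        notificationService.sendPaymentSuccess(request.getUserId());

        return PaymentResult.success(payment.getId());
    }
}
```
Listing 1: 2PC payment system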

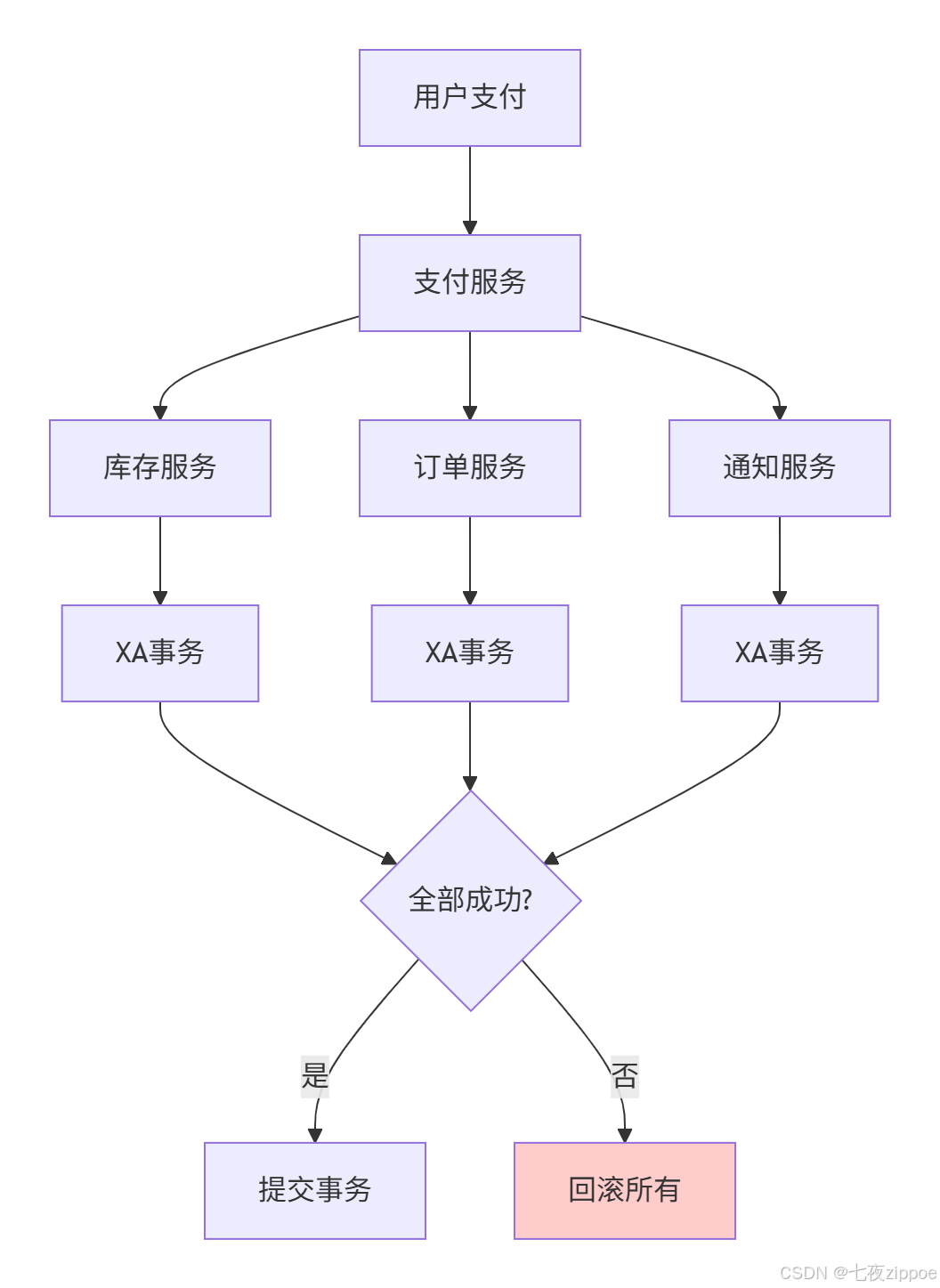

The complex flow as a diagram:

Figure 1: The complexity and fragility of 2PC

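Problems:

- All services must be online at the same time
- If any single service goes down, the whole transaction fails
- Transactions take too long, so locks are held for a long time
- Poor performance and hard to scale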
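To make these failure modes concrete, here is a minimal in-memory sketch of a two-phase commit coordinator. `Participant`, `TwoPhaseCoordinator`, and `FakeParticipant` are hypothetical illustrations, not the `javax.transaction.xa` API. The sketch only shows two things: a single "no" vote (or an unreachable participant) aborts the whole transaction, and prepared participants wait, holding their locks, until the coordinator decides.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical 2PC participant (illustration only, not the XA API). */
interface Participant {
    boolean prepare();   // phase 1: vote; a prepared participant keeps its locks
    void commit();       // phase 2: commit
    void rollback();     // phase 2: abort
}

final class TwoPhaseCoordinator {
    /** Returns true if the global transaction committed. */
    static boolean execute(List<? extends Participant> participants) {
        List<Participant> prepared = new ArrayList<>();
        for (Participant p : participants) {
            boolean vote;
            try {
                vote = p.prepare();
            } catch (RuntimeException unreachable) {
                vote = false; // a crashed or unreachable participant counts as "no"
            }
            if (!vote) {
                // One "no" aborts everyone who has already prepared
                prepared.forEach(Participant::rollback);
                return false;
            }
            prepared.add(p);
        }
        // Everyone voted yes: commit all (only now can locks be released)
        prepared.forEach(Participant::commit);
        return true;
    }
}

/** Test double that records the state it ends up in. */
final class FakeParticipant implements Participant {
    private final boolean voteYes;
    String state = "INIT";

    FakeParticipant(boolean voteYes) { this.voteYes = voteYes; }

    @Override public boolean prepare() {
        if (!voteYes) return false;
        state = "PREPARED";
        return true;
    }
    @Override public void commit() { state = "COMMITTED"; }
    @Override public void rollback() { state = "ROLLED_BACK"; }
}
```

With three participants where the last one votes "no", the first two end up `ROLLED_BACK` even though they were healthy. A real coordinator must also log its decision durably, because a coordinator crash between the two phases leaves prepared participants blocked.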

1.2 Advantages of Message Queues

Switching to a message queue approach:

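```java
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;

// Payment system implemented with a message queue
@Service
public class PaymentServiceMQ {

    @Transactional
    public PaymentResult pay(PaymentRequest request) {
        // 1. Create the payment record (local transaction)
        Payment payment = paymentRepository.save(convertToPayment(request));

        // 2. Record the payment-success event within the same local transaction
        //    (e.g. through the local message table in 2.1). Sending straight to the
        //    broker here could publish an event for a transaction that later rolls back.
        paymentEventPublisher.publishPaymentSuccess(
                new PaymentSuccessEvent(payment.getId(), request.getOrderId()));

        return PaymentResult.success(payment.getId());
    }
}
```
Listing 2: Message-queue payment system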

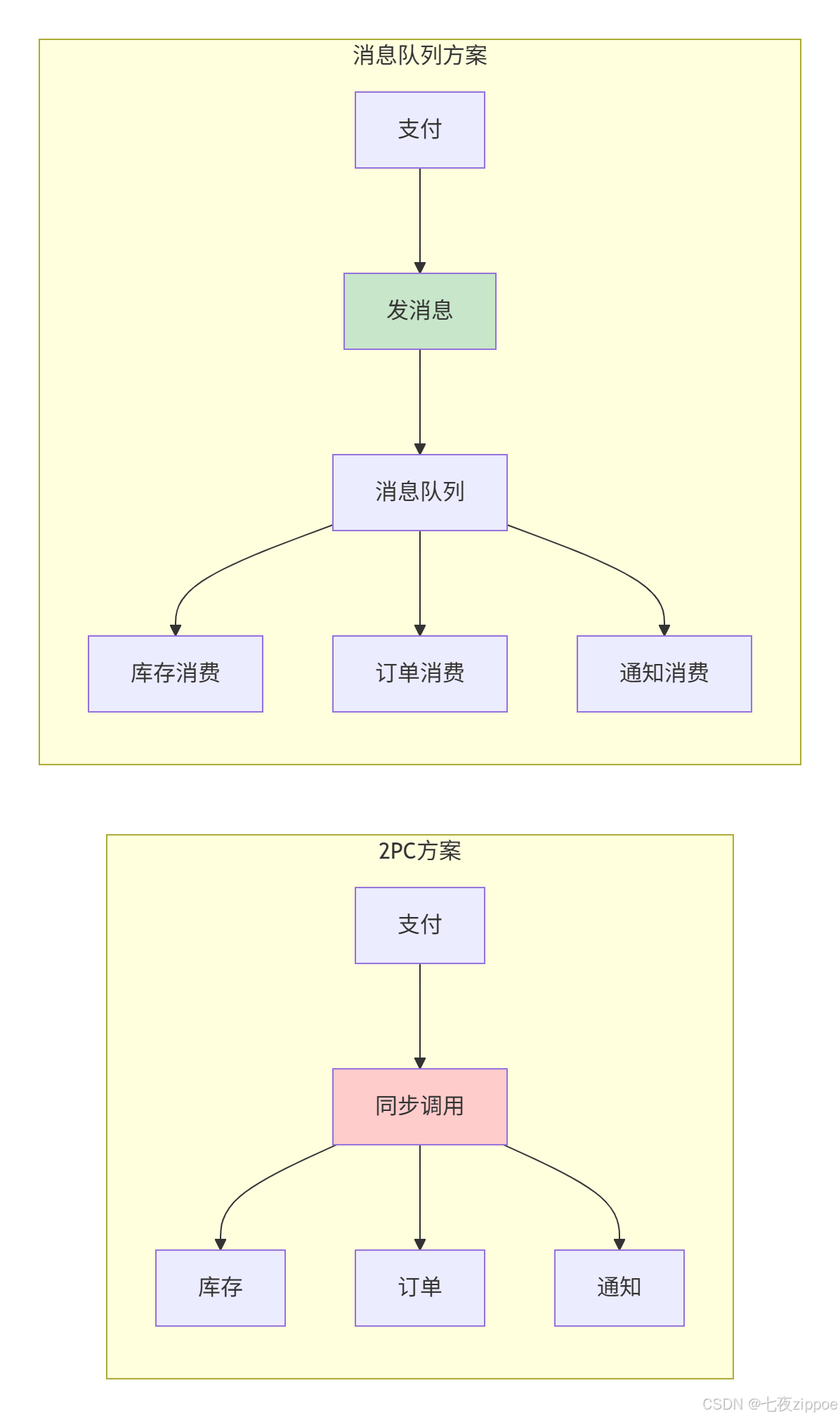

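Comparison diagram:

Figure 2: 2PC vs. message queue architecture

Advantages compared:

| Dimension | 2PC/XA | Message queue | Advantage |
|---|---|---|---|
| Performance | Poor | Excellent | 10-100x improvement |
| Availability | Low | High | Services can be deployed independently |
| Complexity | High | Medium | Relatively simple to implement |
| Data consistency | Strong | Eventual | Sufficient for most scenarios |
| Scalability | Poor | Excellent | Easy to scale horizontally |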

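2. The Three Core Patterns in Detail

2.1 Local Message Table (Most Reliable)

This is the most classic approach. We have used it for five years and it has been very stable: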

```sql
-- Local message table
CREATE TABLE local_message (
    id              BIGINT PRIMARY KEY AUTO_INCREMENT,
    biz_id          VARCHAR(64) NOT NULL COMMENT 'Business ID',
    biz_type        VARCHAR(32) NOT NULL COMMENT 'Business type',
    content         TEXT        NOT NULL COMMENT 'Message payload',
    status          VARCHAR(20) NOT NULL COMMENT 'Status: PENDING, SENT, CONSUMED, FAILED',
    retry_count     INT DEFAULT 0 COMMENT 'Retry count',
    next_retry_time DATETIME COMMENT 'Next retry time',
    created_time    DATETIME DEFAULT CURRENT_TIMESTAMP,
    updated_time    DATETIME DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
    INDEX idx_biz (biz_type, biz_id),
    INDEX idx_status_time (status, next_retry_time)
) COMMENT 'Local message table';
```
Listing 3: Local message table schema

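How it works:

```java
import java.util.List;

import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.jdbc.core.JdbcTemplate;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;
import org.springframework.transaction.annotation.Transactional;

// Message, BusinessService, MqProducer and JsonUtils are project-specific types (not shown)
@Component
@Slf4j
public class LocalMessageService {

    @Autowired
    private JdbcTemplate jdbcTemplate;

    @Autowired
    private BusinessService businessService;

    @Autowired
    private MqProducer mqProducer;

    // The business write and the message record commit or roll back together
    @Transactional
    public void saveMessageWithBusiness(String bizType, String bizId, Object content) {
        // 1. Execute the business operation
        businessService.process(bizId, content);

        // 2. Save the message (same transaction)
        String sql = "INSERT INTO local_message (biz_id, biz_type, content, status) "
                + "VALUES (?, ?, ?, 'PENDING')";
        jdbcTemplate.update(sql, bizId, bizType, JsonUtils.toJson(content));
    }

    // Scheduled job that relays pending messages to the MQ
    @Scheduled(fixedDelay = 5000)
    public void sendPendingMessages() {
        String sql = "SELECT id, biz_type, content FROM local_message "
                + "WHERE status = 'PENDING' "
                + "AND (next_retry_time IS NULL OR next_retry_time <= NOW()) "
                + "LIMIT 100";
        List<Message> messages = jdbcTemplate.query(sql, (rs, rowNum) -> {
            Message msg = new Message();
            msg.setId(rs.getLong("id"));
            msg.setBizType(rs.getString("biz_type"));
            msg.setContent(rs.getString("content"));
            return msg;
        });

        for (Message msg : messages) {
            try {
                // Send to the MQ. A crash between the send and the status update causes
                // a resend, so delivery is at-least-once and consumers must be idempotent.
                if (mqProducer.send(msg)) {
                    updateMessageStatus(msg.getId(), "SENT");
                } else {
                    handleSendFailure(msg);
                }
            } catch (Exception e) {
                log.error("Failed to send message: {}", msg.getId(), e);
                handleSendFailure(msg);
            }
        }
    }

    private void updateMessageStatus(long id, String status) {
        jdbcTemplate.update("UPDATE local_message SET status = ? WHERE id = ?", status, id);
    }

    // Exponential backoff. MySQL evaluates single-table UPDATE assignments left to
    // right, so next_retry_time and status both see the incremented retry_count.
    private void handleSendFailure(Message msg) {
        String updateSql = "UPDATE local_message "
                + "SET retry_count = retry_count + 1, "
                + "next_retry_time = DATE_ADD(NOW(), INTERVAL "
                + "POW(2, LEAST(retry_count, 6)) * 10 SECOND), "
                + "status = CASE WHEN retry_count >= 10 THEN 'FAILED' ELSE 'PENDING' END "
                + "WHERE id = ?";
        jdbcTemplate.update(updateSql, msg.getId());
    }
}
```
Listing 4: Local message table implementation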

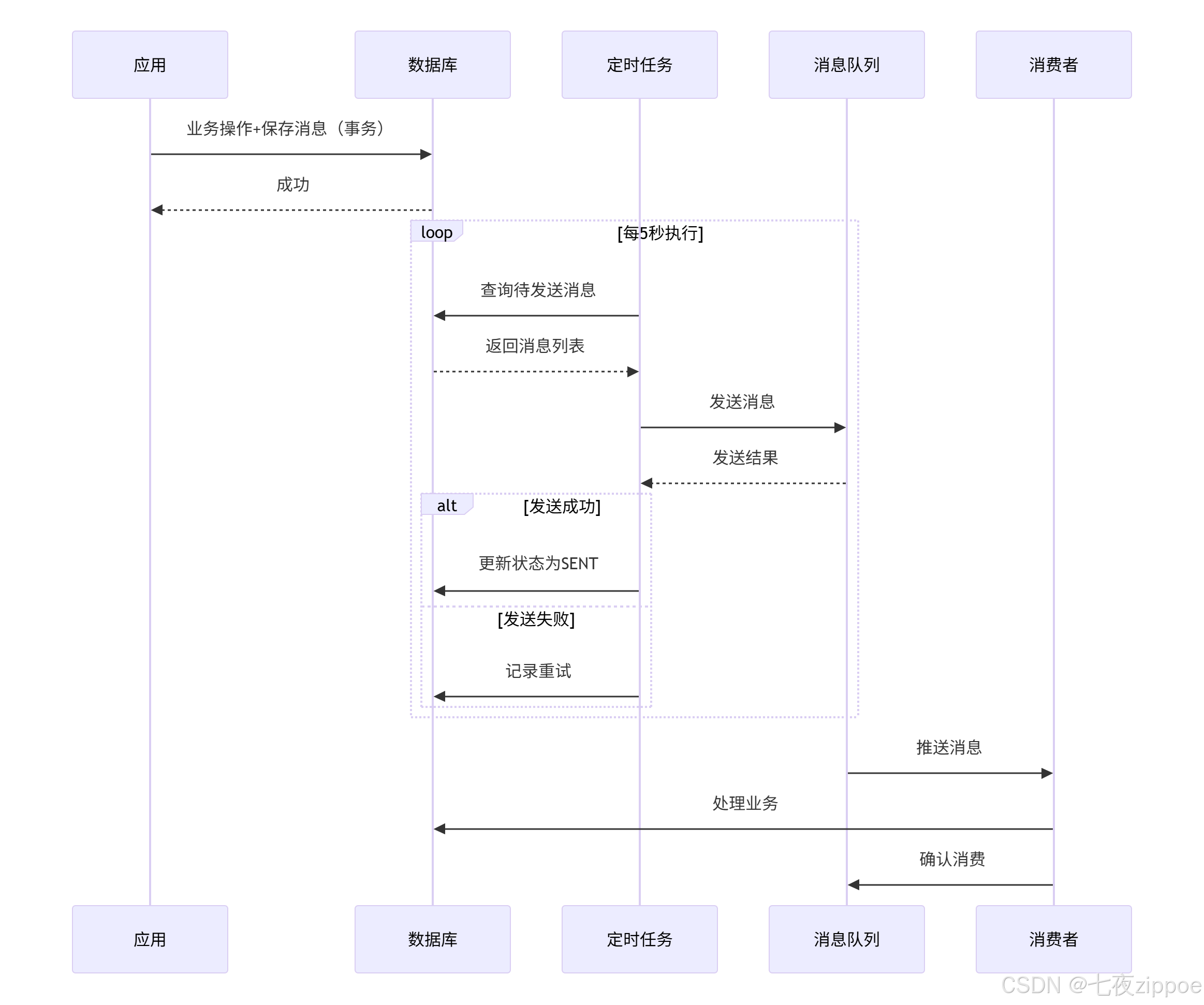

A flowchart makes it clearer:

Figure 3: Local message table workflow
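The `handleSendFailure` SQL in the local message table implementation encodes a retry schedule. This reading assumes that MySQL evaluates single-table `UPDATE` assignments left to right, so `next_retry_time` and `status` see the already-incremented `retry_count`. Under that assumption, the delay after the n-th failure is `2^min(n, 6) * 10` seconds, and the row becomes `FAILED` on the 10th failure. The hypothetical `RetrySchedule` class below reproduces that arithmetic so the schedule can be checked in a unit test.

```java
import java.util.ArrayList;
import java.util.List;

/** Mirrors the backoff in handleSendFailure (assumes MySQL left-to-right UPDATE evaluation). */
final class RetrySchedule {
    static final int MAX_EXPONENT = 6;   // LEAST(retry_count, 6)
    static final int BASE_SECONDS = 10;  // ... * 10 SECOND
    static final int FAIL_AFTER = 10;    // retry_count >= 10 -> 'FAILED'

    /** Delay in seconds scheduled after the n-th (1-based) failed send. */
    static long delaySeconds(int failures) {
        return (1L << Math.min(failures, MAX_EXPONENT)) * BASE_SECONDS;
    }

    /** Status written after the n-th (1-based) failed send. */
    static String statusAfter(int failures) {
        return failures >= FAIL_AFTER ? "FAILED" : "PENDING";
    }

    /** All delays scheduled while the message is still PENDING. */
    static List<Long> pendingDelays() {
        List<Long> delays = new ArrayList<>();
        for (int n = 1; statusAfter(n).equals("PENDING"); n++) {
            delays.add(delaySeconds(n));
        }
        return delays;
    }
}
```

Under these assumptions, the delays run 20 s, 40 s, 80 s, 160 s, 320 s, and then 640 s repeatedly. A message that keeps failing is therefore retried for about 3,180 s (roughly 53 minutes, plus up to 5 s of polling latency per attempt) before it is marked `FAILED`.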

2.2 Transactional Messages (a RocketMQ Specialty)

RocketMQ's transactional messages are powerful, but they come with plenty of pitfalls:

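```java
import java.math.BigDecimal;

import lombok.extern.slf4j.Slf4j;
import org.apache.rocketmq.client.producer.TransactionSendResult;
import org.apache.rocketmq.spring.annotation.RocketMQTransactionListener;
import org.apache.rocketmq.spring.core.RocketMQLocalTransactionListener;
import org.apache.rocketmq.spring.core.RocketMQLocalTransactionState;
import org.apache.rocketmq.spring.core.RocketMQTemplate;
import org.apache.rocketmq.spring.support.RocketMQHeaders;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.messaging.Message;
import org.springframework.messaging.support.MessageBuilder;
import org.springframework.stereotype.Component;

@Component
@Slf4j
public class RocketMQTransactionService {

    @Autowired
    private RocketMQTemplate rocketMQTemplate;

    // Send a transactional message
    public void sendTransactionMessage(String orderId, BigDecimal amount) {
        Message<PaymentEvent> message = MessageBuilder
                .withPayload(new PaymentEvent(orderId, amount))
                .setHeader(RocketMQHeaders.TRANSACTION_ID, "tx_" + System.currentTimeMillis())
                // Custom header (stored as a user property) so the check-back can find the order
                .setHeader("orderId", orderId)
                .build();

        TransactionSendResult result = rocketMQTemplate.sendMessageInTransaction(
                "payment-topic",
                message,
                orderId); // business argument, passed to executeLocalTransaction
        log.info("Transactional message send result: {}", result.getSendStatus());
    }

    // Transaction listener: a static nested class so Spring can register it as a bean
    @RocketMQTransactionListener
    public static class PaymentTransactionListenerImpl implements RocketMQLocalTransactionListener {

        @Autowired
        private PaymentService paymentService;

        @Override
        public RocketMQLocalTransactionState executeLocalTransaction(Message msg, Object arg) {
            try {
                // Run the local transaction (processPayment should commit its own DB transaction)
                String orderId = (String) arg;
                return paymentService.processPayment(orderId)
                        ? RocketMQLocalTransactionState.COMMIT
                        : RocketMQLocalTransactionState.ROLLBACK;
            } catch (Exception e) {
                log.error("Local transaction failed", e);
                return RocketMQLocalTransactionState.ROLLBACK;
            }
        }

        @Override
        public RocketMQLocalTransactionState checkLocalTransaction(Message msg) {
            // RocketMQ calls this back when a half message's outcome is still unknown.
            // Look the payment up by the business key, not by the transaction ID.
            String orderId = msg.getHeaders().get("orderId", String.class);
            try {
                PaymentStatus status = paymentService.getPaymentStatus(orderId);
                switch (status) {
                    case SUCCESS:
                        return RocketMQLocalTransactionState.COMMIT;
                    case FAILED:
                        return RocketMQLocalTransactionState.ROLLBACK;
                    default:
                        return RocketMQLocalTransactionState.UNKNOWN; // still in progress: check again later
                }
            } catch (Exception e) {
                log.error("Failed to check local transaction state", e);
                return RocketMQLocalTransactionState.UNKNOWN;
            }
        }
    }
}
```
Listing 5: RocketMQ transactional messages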

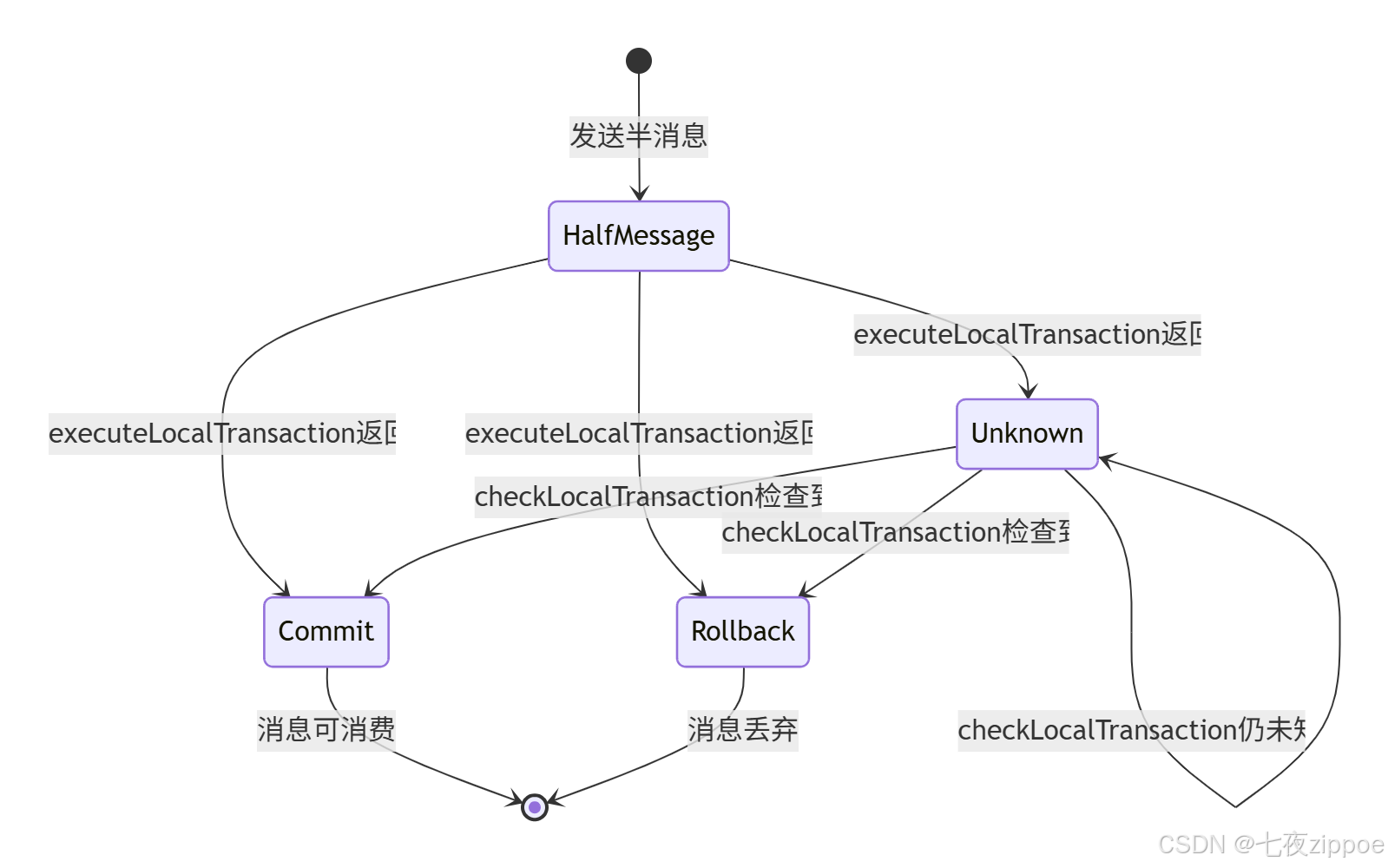

State transitions of a transactional message:

Figure 4: RocketMQ transactional message state machine
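The state machine in Figure 4 can be modelled in a few lines. The following is a hypothetical in-memory model, not RocketMQ's broker code or client API. A half message is stored but invisible to consumers. COMMIT makes it deliverable, ROLLBACK deletes it, and UNKNOWN leaves it pending until a later check-back resolves it (the role `checkLocalTransaction` plays in the transactional message listing).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical in-memory model of the half-message protocol (not the real broker). */
final class HalfMessageBroker {
    enum TxState { COMMIT, ROLLBACK, UNKNOWN }

    // Stored but invisible to consumers until committed, keyed by transaction ID
    private final Map<String, String> halfMessages = new LinkedHashMap<>();
    private final List<String> deliverable = new ArrayList<>();

    /** Store the half message, run the local transaction, then apply its outcome. */
    void sendInTransaction(String txId, String body, Function<String, TxState> localTx) {
        halfMessages.put(txId, body);
        TxState state;
        try {
            state = localTx.apply(txId);
        } catch (RuntimeException e) {
            state = TxState.UNKNOWN; // outcome unknown to the broker
        }
        resolve(txId, state);
    }

    /** Broker-driven check-back for every message still in the half state. */
    void checkBack(Function<String, TxState> checker) {
        for (String txId : new ArrayList<>(halfMessages.keySet())) {
            resolve(txId, checker.apply(txId));
        }
    }

    private void resolve(String txId, TxState state) {
        switch (state) {
            case COMMIT -> deliverable.add(halfMessages.remove(txId));
            case ROLLBACK -> halfMessages.remove(txId);
            case UNKNOWN -> { } // stays half; checked again later
        }
    }

    List<String> deliverable() { return List.copyOf(deliverable); }

    int pendingCount() { return halfMessages.size(); }
}
```

The model makes one design point visible: a message stays in the half state for as long as check-backs keep answering UNKNOWN. `checkLocalTransaction` should therefore answer from durable state, such as the payment record, rather than from anything held in memory.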

2.3 Best-Effort Notification (Simplest)

Suitable for scenarios with looser consistency requirements:

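```java
import java.time.LocalDate;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

import lombok.extern.slf4j.Slf4j;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

// notificationClient, failureRepository and recordFailure are project-specific (not shown)
@Component
@Slf4j
public class BestEffortNotificationService {

    // Dedicated pool (size is illustrative): the retries sleep, so keep them off the common ForkJoinPool
    private final ExecutorService notifyExecutor = Executors.newFixedThreadPool(4);

    // Send a notification (delivery not guaranteed)
    public void notifyPaymentSuccess(PaymentEvent event) {
        CompletableFuture.runAsync(() -> {
            int retry = 0;
            boolean success = false;
            while (retry < 3 && !success) {
                try {
                    notificationClient.notify(event);
                    success = true;
                    log.info("Notification sent: {}", event.getOrderId());
                } catch (Exception e) {
                    retry++;
                    log.warn("Notification attempt {} failed: {}", retry, event.getOrderId());
                    if (retry < 3) {
                        try {
                            // Exponential backoff: 2 s, then 4 s
                            Thread.sleep(1000L * (1 << retry));
                        } catch (InterruptedException ie) {
                            Thread.currentThread().interrupt();
                            break;
                        }
                    }
                }
            }
            if (!success) {
                // Record in a failure table for the nightly job or manual handling
                log.error("Notification finally failed: {}", event.getOrderId());
                recordFailure(event);
            }
        }, notifyExecutor);
    }

    // Reconciliation and compensation
    @Scheduled(cron = "0 0 2 * * ?") // every day at 02:00
    public void reconcileNotifications() {
        log.info("Starting notification compensation...");
        // Notifications that failed yesterday
        List<NotificationFailure> failures =
                failureRepository.findByDate(LocalDate.now().minusDays(1));
        for (NotificationFailure failure : failures) {
            try {
                // Notify again
                notificationClient.notify(failure.toEvent());
                failureRepository.delete(failure);
                log.info("Compensating notification succeeded: {}", failure.getBizId());
            } catch (Exception e) {
                log.error("Compensating notification failed: {}", failure.getBizId(), e);
            }
        }
    }
}
```
Listing 6: Best-effort notification implementation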

3. Guaranteeing Message Reliability

3.1 No Loss: Producer-Side Guarantees

Message loss is the most common problem. Here is our solution:

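```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.time.Duration;
import java.util.UUID;

import lombok.extern.slf4j.Slf4j;
import org.apache.rocketmq.client.producer.LocalTransactionState;
import org.apache.rocketmq.client.producer.SendCallback;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.client.producer.SendStatus;
import org.apache.rocketmq.client.producer.TransactionSendResult;
import org.apache.rocketmq.spring.core.RocketMQTemplate;
import org.apache.rocketmq.spring.support.RocketMQHeaders;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.messaging.Message;
import org.springframework.messaging.support.MessageBuilder;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

// AlertService, FailedMessage, JsonUtils, MessageSendException are project-specific (not shown)
@Component
@Slf4j
public class ReliableMessageProducer {

    private static final Path FAILED_DIR = Paths.get("failed_messages");
    private static final long MAX_RETRY_MILLIS = Duration.ofHours(24).toMillis();

    @Autowired
    private RocketMQTemplate rocketMQTemplate;

    @Autowired
    private AlertService alertService;

    // Option 1: synchronous send + broker acknowledgement
    public boolean sendWithConfirm(String topic, String message) {
        try {
            // Synchronous send: blocks until the broker acknowledges
            SendResult result = rocketMQTemplate.syncSend(topic, message);
            if (result.getSendStatus() == SendStatus.SEND_OK) {
                return true;
            }
            log.warn("Message send not OK: {}", result);
            return false;
        } catch (Exception e) {
            log.error("Exception while sending message", e);
            return false;
        }
    }

    // Option 2: asynchronous send + callback
    public void sendAsyncWithCallback(String topic, String message) {
        rocketMQTemplate.asyncSend(topic, message, new SendCallback() {
            @Override
            public void onSuccess(SendResult sendResult) {
                log.info("Message sent: {}", sendResult.getMsgId());
            }

            @Override
            public void onException(Throwable e) {
                log.error("Message send failed", e);
                // On failure: persist, retry, alert
                handleSendFailure(topic, message, e);
            }
        });
    }

    // Option 3: transactional message (most reliable)
    public void sendTransactional(String topic, String message, Object businessArg) {
        Message<String> msg = MessageBuilder.withPayload(message)
                .setHeader(RocketMQHeaders.TRANSACTION_ID, UUID.randomUUID().toString())
                .build();
        TransactionSendResult result =
                rocketMQTemplate.sendMessageInTransaction(topic, msg, businessArg);
        if (result.getLocalTransactionState() == LocalTransactionState.ROLLBACK_MESSAGE) {
            throw new MessageSendException("Transactional message rolled back");
        }
    }

    // Failure handling: local storage + scheduled retry
    private void handleSendFailure(String topic, String message, Throwable e) {
        // 1. Persist to a local file (UUID names avoid collisions and ':' in file names)
        try {
            Files.createDirectories(FAILED_DIR);
            FailedMessage failed = new FailedMessage(topic, message, System.currentTimeMillis());
            Files.writeString(FAILED_DIR.resolve(UUID.randomUUID() + ".json"),
                    JsonUtils.toJson(failed));
        } catch (IOException ioException) {
            log.error("Failed to save failed message to file", ioException);
        }
        // 2. Send an alert
        alertService.sendAlert("Message send failed",
                "topic: " + topic + ", error: " + e.getMessage());
    }

    // Retry failed messages on a schedule
    @Scheduled(fixedDelay = 60000) // every minute
    public void retryFailedMessages() {
        File[] files = FAILED_DIR.toFile().listFiles((d, name) -> name.endsWith(".json"));
        if (files == null) return; // directory does not exist yet
        for (File file : files) {
            try {
                FailedMessage failed = JsonUtils.fromJson(
                        Files.readString(file.toPath()), FailedMessage.class);
                // Give up after the maximum retry window (24 hours)
                if (System.currentTimeMillis() - failed.getTimestamp() > MAX_RETRY_MILLIS) {
                    Files.delete(file.toPath());
                    log.error("Message not sent within 24 hours, discarded: {}", file.getName());
                    continue;
                }
                // Retry the send
                if (sendWithConfirm(failed.getTopic(), failed.getMessage())) {
                    Files.delete(file.toPath());
                    log.info("Retry succeeded: {}", file.getName());
                }
            } catch (Exception e) {
                log.error("Error while retrying failed message: {}", file.getName(), e);
            }
        }
    }
}
```
Listing 7: Message reliability safeguards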

3.2 No Duplicates: Consumer-Side Idempotency

Duplicate consumption is even scarier than loss, because it corrupts business data:

```java
import java.util.concurrent.TimeUnit;

import lombok.extern.slf4j.Slf4j;
import org.springframework.dao.DuplicateKeyException;
import org.springframework.stereotype.Component;
import org.springframework.transaction.annotation.Transactional;

// jdbcTemplate, redisTemplate, repositories and paymentService are injected (not shown)
@Component
@Slf4j
public class IdempotentMessageConsumer {

    // Method 1: database unique constraint (message_processed.message_id must be UNIQUE)
    @Transactional
    public void processWithUniqueConstraint(PaymentEvent event) {
        // Try to insert the dedup record; it commits or rolls back with the business change.
        // (On PostgreSQL a failed INSERT aborts the transaction; use ON CONFLICT DO NOTHING there.)
        String insertSql = "INSERT INTO message_processed (message_id, biz_type, biz_id) "
                + "VALUES (?, ?, ?)";
        try {
            jdbcTemplate.update(insertSql, event.getMessageId(), "PAYMENT", event.getOrderId());
        } catch (DuplicateKeyException e) {
            // Already processed: return
            log.info("Message already processed: {}", event.getMessageId());
            return;
        }
        // Run the business logic
        paymentService.processPayment(event);
    }

    // Method 2: Redis atomic operation
    public void processWithRedis(PaymentEvent event) {
        String key = "msg:" + event.getMessageId();
        // SET key value NX EX: true only for the first caller
        Boolean first = redisTemplate.opsForValue().setIfAbsent(key, "1", 24, TimeUnit.HOURS);
        if (!Boolean.TRUE.equals(first)) {
            log.info("Message already processed: {}", event.getMessageId());
            return;
        }
        try {
            paymentService.processPayment(event);
        } catch (RuntimeException e) {
            // Release the key so a redelivery can retry instead of being skipped forever
            redisTemplate.delete(key);
            throw e;
        }
    }

    // Method 3: business state machine
    @Transactional
    public void processWithStateMachine(PaymentEvent event) {
        Payment payment = paymentRepository.findByOrderId(event.getOrderId());
        if (payment == null) {
            // First delivery (a unique index on order_id stops a concurrent duplicate insert)
            payment = createPayment(event);
        } else if (payment.getStatus() == PaymentStatus.SUCCESS) {
            log.info("Payment already succeeded, skipping: {}", event.getOrderId());
            return;
        } else if (payment.getStatus() == PaymentStatus.PROCESSING) {
            // Possibly in progress elsewhere: only take over after a 30-second timeout
            if (System.currentTimeMillis() - payment.getUpdateTime().getTime() <= 30_000) {
                log.info("Payment in progress, skipping: {}", event.getOrderId());
                return;
            }
            log.warn("Payment processing timed out, reprocessing: {}", event.getOrderId());
        }
        // Mark as processing, then run the business logic
        payment.setStatus(PaymentStatus.PROCESSING);
        paymentRepository.save(payment);
        processPaymentInternal(payment, event);
    }

    // Method 4: optimistic locking. No @Transactional here, so every attempt re-reads
    // fresh data (under InnoDB REPEATABLE READ a retry inside one transaction would
    // see the same snapshot and fail again).
    public void processWithOptimisticLock(PaymentEvent event) {
        for (int attempt = 1; attempt <= 3; attempt++) {
            Payment payment = paymentRepository.findByOrderId(event.getOrderId());
            if (payment == null) {
                paymentRepository.save(createPayment(event)); // first delivery
                return;
            }
            if (payment.getStatus() == PaymentStatus.SUCCESS) {
                return; // already applied: the duplicate is a no-op
            }
            int oldVersion = payment.getVersion();
            // UPDATE ... SET status = ?, version = ? WHERE id = ? AND version = ?
            int updated = paymentRepository.updateWithVersion(
                    payment.getId(), PaymentStatus.SUCCESS, oldVersion, oldVersion + 1);
            if (updated > 0) {
                return;
            }
        }
        throw new ConcurrentUpdateException("Concurrent update conflict while processing payment");
    }
}
```
Listing 8: Message idempotency implementations
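Methods 1 and 2 share one idea. First, atomically claim the message ID. Run the business logic only if the claim succeeded, and give the claim back if the logic fails so that a redelivery can retry. The sketch below shows that idea with a `ConcurrentHashMap`-backed set standing in for the unique index or the Redis `SET NX`. `IdempotentProcessor` is a hypothetical name. An in-process set does not deduplicate across instances or restarts, so this only illustrates the control flow and is not a replacement for a shared store.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Consumer;

/** Hypothetical claim-then-process idempotency guard (single-process stand-in). */
final class IdempotentProcessor<T> {
    private final Set<String> claimed = ConcurrentHashMap.newKeySet();
    private final Consumer<T> businessLogic;

    IdempotentProcessor(Consumer<T> businessLogic) {
        this.businessLogic = businessLogic;
    }

    /** Returns true if this call ran the business logic, false for a duplicate. */
    boolean process(String messageId, T payload) {
        // add() is atomic: exactly one caller wins the claim for a given ID
        if (!claimed.add(messageId)) {
            return false; // already processed (or in progress): skip
        }
        try {
            businessLogic.accept(payload);
            return true;
        } catch (RuntimeException e) {
            claimed.remove(messageId); // release the claim so a redelivery can retry
            throw e;
        }
    }
}
```

This mirrors the incident from the introduction: three deliveries of the same message deduct stock only once, and a delivery that fails part-way can still be retried.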

3.3 Ordering: Handling It per Business Scenario

Some business flows require messages to be consumed in order:

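```java
import java.util.Collections;
import java.util.UUID;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.stream.IntStream;

import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.data.redis.core.script.DefaultRedisScript;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

// Four alternative strategies; use one per topic / consumer group
@Component
@Slf4j
public class SequentialMessageConsumer {

    @Autowired
    private StringRedisTemplate redisTemplate;

    // Method 1: single-threaded consumption
    @KafkaListener(topics = "order-events", concurrency = "1") // one thread
    public void consumeSingleThread(ConsumerRecord<String, String> record) {
        processOrderEvent(record.value());
    }

    // Method 2: partition by key. The producer uses orderId as the record key, so one
    // order's events share a partition, and each partition is consumed by a single
    // container thread: per-order ordering needs no extra locking here
    @KafkaListener(topics = "order-events")
    public void consumeByKey(ConsumerRecord<String, String> record) {
        processOrderEvent(record.value());
    }

    // Method 3: Redis distributed lock (mutual exclusion only; ordering still comes from partitioning)
    private static final DefaultRedisScript<Long> UNLOCK_SCRIPT = new DefaultRedisScript<>(
            "if redis.call('get', KEYS[1]) == ARGV[1] then "
                    + "return redis.call('del', KEYS[1]) else return 0 end",
            Long.class);

    public void consumeWithDistributedLock(ConsumerRecord<String, String> record) {
        String orderId = record.key();
        String lockKey = "lock:order:" + orderId;
        String token = UUID.randomUUID().toString(); // identifies this lock holder

        // Try to acquire the distributed lock
        Boolean locked = redisTemplate.opsForValue()
                .setIfAbsent(lockKey, token, 30, TimeUnit.SECONDS);
        if (!Boolean.TRUE.equals(locked)) {
            // Not acquired: throw so the container's error handler retries later
            log.info("Failed to acquire lock, retrying later: {}", orderId);
            throw new IllegalStateException("Failed to acquire lock for order " + orderId);
        }
        try {
            processOrderEvent(record.value());
        } finally {
            // Release only if we still own the lock; a plain DELETE could remove
            // a lock that expired and was taken over by another consumer
            redisTemplate.execute(UNLOCK_SCRIPT, Collections.singletonList(lockKey), token);
        }
    }

    // Method 4: local buffering in per-key serial lanes. Each order hashes to one
    // single-threaded executor whose work queue is the local buffer, so one order's
    // events run one at a time in arrival order (record keys assumed non-null)
    private static final int LANES = 8;
    private final ExecutorService[] lanes = IntStream.range(0, LANES)
            .mapToObj(i -> Executors.newSingleThreadExecutor())
            .toArray(ExecutorService[]::new);

    public void consumeWithLocalQueue(ConsumerRecord<String, String> record) {
        String orderId = record.key();
        lanes[Math.floorMod(orderId.hashCode(), LANES)].execute(() -> {
            try {
                processOrderEvent(record.value());
            } catch (Exception e) {
                log.error("Failed to process message: {}", orderId, e);
            }
        });
        // Caveat: offsets may be committed before a lane finishes, so buffered
        // events can be lost on a crash unless offsets are committed after processing
    }
}
```
Listing 9: Message ordering safeguards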
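Events for the same order can still arrive out of order, for example after producer retries. A common business-level safeguard is to give each event a per-order sequence number, apply events strictly in sequence, and buffer any that arrive early. The sketch below assumes the producer assigns consecutive sequence numbers starting at 1 for each order. `OrderSequencer` and its methods are hypothetical, and the class is not thread-safe; it is meant to run inside one serial lane per order.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical per-order reorder buffer keyed by a producer-assigned sequence number. */
final class OrderSequencer {
    private final Map<String, Long> lastApplied = new HashMap<>();
    private final Map<String, TreeMap<Long, String>> pending = new HashMap<>();
    private final List<String> applied = new ArrayList<>();

    /** Accepts one event and applies it plus any buffered successors that are now in order. */
    void onEvent(String orderId, long seq, String event) {
        long last = lastApplied.getOrDefault(orderId, 0L);
        if (seq <= last) {
            return; // duplicate or stale event: already applied
        }
        TreeMap<Long, String> buffer = pending.computeIfAbsent(orderId, k -> new TreeMap<>());
        buffer.put(seq, event);
        // Drain while the next expected sequence number is present
        while (!buffer.isEmpty() && buffer.firstKey() == last + 1) {
            applied.add(orderId + ":" + buffer.pollFirstEntry().getValue());
            last++;
        }
        lastApplied.put(orderId, last);
        if (buffer.isEmpty()) {
            pending.remove(orderId);
        }
    }

    List<String> applied() { return List.copyOf(applied); }
}
```

If PAID (seq 2) arrives before CREATED (seq 1), it waits in the buffer and is applied right after CREATED. A later duplicate of seq 2 is ignored. A production version would also persist `lastApplied` and time out gaps that never fill.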

4. Enterprise Case Studies

4.1 End-to-End E-Commerce Order Flow

This is the most complex scenario, so we used a hybrid approach:

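```java
import java.time.LocalDateTime;
import java.util.List;
import java.util.UUID;

import lombok.extern.slf4j.Slf4j;
import org.apache.rocketmq.spring.annotation.RocketMQMessageListener;
import org.apache.rocketmq.spring.core.RocketMQListener;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;
import org.springframework.stereotype.Service;
import org.springframework.transaction.annotation.Transactional;

// Repositories, services and publishers are injected fields in each class (not shown)
@Component
@Slf4j
public class ECommerceOrderService {

    // Main order flow
    @Transactional
    public OrderResult createOrder(OrderRequest request) {
        // 1. Create the order (local transaction)
        Order order = orderRepository.save(convertToOrder(request));

        // 2. Reserve stock (synchronous, strongly consistent). This is a remote call: if this
        //    transaction later rolls back, the reservation has to be released separately
        InventoryResult inventoryResult = inventoryService.tryLockStock(request.getItems());
        if (!inventoryResult.isSuccess()) {
            throw new InsufficientStockException("Insufficient stock");
        }

        // 3. Record the "order created" event in the local message table within the same
        //    transaction; the relay job from 2.1 publishes it only after the commit
        String messageId = UUID.randomUUID().toString();
        localMessageService.saveMessage("ORDER_CREATED",
                order.getId().toString(),
                new OrderCreatedEvent(messageId, order.getId(), request.getItems()));

        return OrderResult.success(order.getId());
    }

    // Handler for order-created events
    @Service
    @Slf4j
    public static class OrderCreatedEventHandler {

        @KafkaListener(topics = "order-created")
        public void handleOrderCreated(OrderCreatedEvent event) {
            // Idempotency check
            if (processed(event.getMessageId())) {
                return;
            }
            try {
                // 1. Actually deduct stock
                inventoryService.deductStock(event.getItems());
                // 2. Create the shipment
                logisticsService.createDelivery(event.getOrderId(), event.getItems());
                // 3. Publish a "payment pending" message
                paymentEventPublisher.publishPaymentPending(
                        new PaymentPendingEvent(event.getOrderId()));
                // 4. Mark as processed
                markProcessed(event.getMessageId());
            } catch (Exception e) {
                log.error("Failed to handle order-created event: {}", event.getOrderId(), e);
                // Publish a failure event for manual handling
                compensationEventPublisher.publishOrderProcessFailed(
                        new OrderProcessFailedEvent(event.getOrderId(), e));
            }
        }
    }

    // Payment event handler: in rocketmq-spring, @RocketMQMessageListener annotates a
    // class implementing RocketMQListener, not a method
    @Service
    @Slf4j
    @RocketMQMessageListener(topic = "payment-events", consumerGroup = "order-payment-consumer")
    public static class PaymentEventHandler implements RocketMQListener<PaymentEvent> {

        @Override
        public void onMessage(PaymentEvent event) {
            // Use the local message table for reliability
            localMessageService.processWithMessageTable(
                    "PAYMENT_" + event.getOrderId(),
                    () -> {
                        // Update the order status
                        orderService.updateStatus(event.getOrderId(), OrderStatus.PAID);
                        // Confirm the stock deduction
                        inventoryService.confirmDeduction(event.getOrderId());
                        // Tell logistics to ship
                        logisticsService.notifyDelivery(event.getOrderId());
                        // Publish the order-completed message
                        orderEventPublisher.publishOrderCompleted(
                                new OrderCompletedEvent(event.getOrderId()));
                    });
        }
    }

    // Compensation
    @Component
    @Slf4j
    public static class OrderCompensationService {

        @Scheduled(fixedDelay = 30000) // every 30 seconds
        public void compensateTimeoutOrders() {
            // Orders still unpaid 30 minutes after creation
            List<Order> timeoutOrders = orderRepository.findByStatusAndCreateTimeBefore(
                    OrderStatus.CREATED, LocalDateTime.now().minusMinutes(30));
            for (Order order : timeoutOrders) {
                try {
                    // Cancel with a conditional update (... WHERE status = 'CREATED') so a payment
                    // that lands concurrently is not overwritten; cancelIfStatus is hypothetical
                    if (orderRepository.cancelIfStatus(order.getId(), OrderStatus.CREATED) == 0) {
                        continue;
                    }
                    // Release stock, then notify the user
                    inventoryService.releaseStock(order.getId());
                    notificationService.sendOrderCancelled(order.getId());
                } catch (Exception e) {
                    log.error("Failed to compensate order: {}", order.getId(), e);
                }
            }
        }

        // Reconciliation job
        @Scheduled(cron = "0 0 2 * * ?") // every day at 02:00
        public void dailyReconciliation() {
            reconcileOrders();
            reconcileInventory();
            reconcilePayments();
        }
    }
}
```
Listing 10: End-to-end e-commerce order implementation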

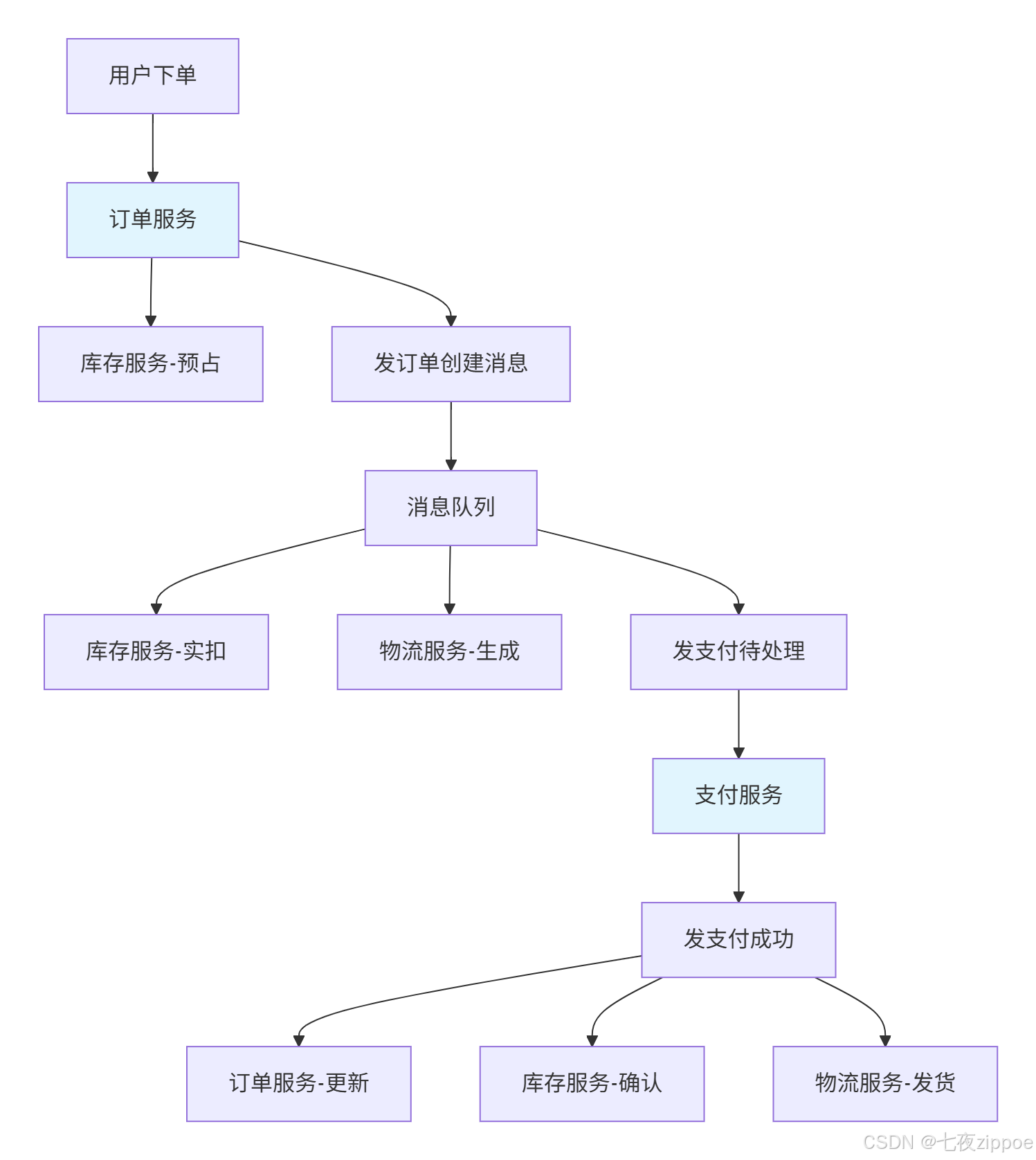

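The architecture diagram makes it clearer:

Figure 5: Message-driven e-commerce ordering architecture

4.2 Reconciliation System Design

Reconciliation is an important safeguard for eventual consistency: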

```java
import java.time.LocalDate;
import java.util.List;
import java.util.Map;

import lombok.extern.slf4j.Slf4j;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

// jdbcTemplate, repositories, orderService and alertService are injected (not shown)
@Component
@Slf4j
public class ReconciliationService {

    // End-of-day reconciliation
    public ReconciliationResult dailyReconcile(LocalDate date) {
        ReconciliationResult result = new ReconciliationResult();
        reconcileOrderPayment(date, result);    // 1. orders vs. payments
        reconcileOrderInventory(date, result);  // 2. orders vs. inventory
        reconcilePaymentBank(date, result);     // 3. payments vs. bank
        generateReconciliationReport(result);   // 4. generate the report
        if (result.hasDiscrepancy()) {
            autoCompensate(result);             // 5. automatic compensation
        }
        return result;
    }

    private void reconcileOrderPayment(LocalDate date, ReconciliationResult result) {
        // A half-open time range (instead of DATE(o.create_time) = ?) keeps an index on create_time usable
        String sql = """
                SELECT
                    o.order_no,
                    o.amount AS order_amount,
                    p.amount AS payment_amount,
                    o.status AS order_status,
                    p.status AS payment_status,
                    CASE
                        WHEN p.id IS NULL THEN 'PAYMENT_MISSING'
                        WHEN o.amount != p.amount THEN 'AMOUNT_MISMATCH'
                        WHEN o.status = 'PAID' AND p.status != 'SUCCESS' THEN 'STATUS_MISMATCH'
                        ELSE 'CONSISTENT'
                    END AS result
                FROM orders o
                LEFT JOIN payments p ON o.order_no = p.order_no
                WHERE o.create_time >= ? AND o.create_time < ?
                ORDER BY o.order_no
                """;
        List<Map<String, Object>> rows = jdbcTemplate.queryForList(sql,
                date.atStartOfDay(), date.plusDays(1).atStartOfDay());
        for (Map<String, Object> row : rows) {
            String checkResult = (String) row.get("result");
            if (!"CONSISTENT".equals(checkResult)) {
                result.addDiscrepancy(new Discrepancy(
                        "ORDER_PAYMENT", (String) row.get("order_no"), checkResult, row));
            }
        }
    }

    // Automatic compensation
    private void autoCompensate(ReconciliationResult result) {
        for (Discrepancy discrepancy : result.getDiscrepancies()) {
            switch (discrepancy.getType()) {
                case "ORDER_PAYMENT" -> compensateOrderPayment(discrepancy);
                case "ORDER_INVENTORY" -> compensateOrderInventory(discrepancy);
                case "PAYMENT_BANK" -> compensatePaymentBank(discrepancy);
                default -> log.warn("Unknown discrepancy type: {}", discrepancy.getType());
            }
        }
    }

    private void compensateOrderPayment(Discrepancy discrepancy) {
        String orderNo = discrepancy.getBizId();
        String result = discrepancy.getResult();
        if ("PAYMENT_MISSING".equals(result)) {
            // Ask the payment channel whether the payment really exists
            Payment payment = queryPaymentFromBank(orderNo);
            if (payment != null) {
                // Back-fill the missing payment
                paymentRepository.save(payment);
                orderService.updateStatusByOrderNo(orderNo, OrderStatus.PAID);
                log.info("Compensated missing payment: {}", orderNo);
            }
        } else if ("AMOUNT_MISMATCH".equals(result)) {
            // Needs manual handling
            alertService.sendAlert("Amount mismatch requires manual handling", orderNo);
        }
    }

    // Real-time reconciliation
    @KafkaListener(topics = "reconciliation-events")
    public void handleReconciliationEvent(ReconciliationEvent event) {
        // Check business consistency in real time
        boolean consistent = checkBusinessConsistency(event);
        if (!consistent) {
            alertService.sendRealtimeAlert(event); // alert immediately
            tryAutoFix(event);                     // attempt an automatic fix
        }
    }
}
```
Listing 11: Reconciliation system implementation
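The order-vs-payment classification in the reconciliation SQL can also be written as a pure function, which lets the rules be unit-tested without a database. This sketch uses hypothetical `OrderRow`/`PaymentRow` records and applies the same checks in the same order as the `CASE` expression: missing payment, then amount mismatch, then a PAID order whose payment is not SUCCESS. Like the `LEFT JOIN`, it assumes at most one payment per order.

```java
import java.math.BigDecimal;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

record OrderRow(String orderNo, BigDecimal amount, String status) {}

record PaymentRow(String orderNo, BigDecimal amount, String status) {}

final class OrderPaymentReconciler {
    /** Returns orderNo -> discrepancy type for every inconsistent order. */
    static Map<String, String> reconcile(List<OrderRow> orders, List<PaymentRow> payments) {
        // toMap throws on duplicate keys: assumes at most one payment per order
        Map<String, PaymentRow> byOrderNo = payments.stream()
                .collect(Collectors.toMap(PaymentRow::orderNo, Function.identity()));
        Map<String, String> discrepancies = new LinkedHashMap<>();
        for (OrderRow o : orders) {
            PaymentRow p = byOrderNo.get(o.orderNo());
            String result;
            if (p == null) {
                result = "PAYMENT_MISSING";
            } else if (o.amount().compareTo(p.amount()) != 0) {
                result = "AMOUNT_MISMATCH"; // compareTo ignores scale: 50.0 equals 50.00
            } else if ("PAID".equals(o.status()) && !"SUCCESS".equals(p.status())) {
                result = "STATUS_MISMATCH";
            } else {
                continue; // consistent
            }
            discrepancies.put(o.orderNo(), result);
        }
        return discrepancies;
    }
}
```

As in the SQL, an order that was simply never paid is also reported as missing a payment. That is why the compensation step queries the payment channel before back-filling anything.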

5. Performance Optimization in Practice

5.1 Batch Processing

Processing messages one at a time performs poorly; batching is king:

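```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.spring.core.RocketMQTemplate;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.messaging.Message;
import org.springframework.messaging.support.MessageBuilder;
import org.springframework.stereotype.Component;
import org.springframework.transaction.support.TransactionTemplate;

// OrderRepository, OrderEvent, Order, JsonUtils and retryBatchSend are project-specific (not shown)
@Component
@Slf4j
public class BatchMessageProcessor {

    @Autowired private RocketMQTemplate rocketMQTemplate;
    @Autowired private TransactionTemplate transactionTemplate;
    @Autowired private OrderRepository orderRepository;

    // Batch consumption ("batchFactory" must be configured as a batch listener container factory)
    @KafkaListener(topics = "order-events", containerFactory = "batchFactory")
    public void consumeBatch(List<ConsumerRecord<String, String>> records) {
        List<OrderEvent> events = new ArrayList<>();
        for (ConsumerRecord<String, String> record : records) {
            try {
                events.add(JsonUtils.fromJson(record.value(), OrderEvent.class));
            } catch (Exception e) {
                log.error("Failed to parse message: {}", record.value(), e);
            }
        }
        if (events.isEmpty()) {
            return;
        }
        // A @Transactional method called from inside this class would bypass the
        // Spring proxy, so the transaction is opened explicitly
        List<Order> paid = transactionTemplate.execute(status -> processBatch(events));
        // 3. Publish downstream messages after the commit, only for orders actually updated
        if (paid != null && !paid.isEmpty()) {
            sendBatch("order-paid", paid.stream()
                    .map(o -> o.getId().toString())
                    .collect(Collectors.toList()));
        }
    }

    // Batch processing; returns the orders it changed
    public List<Order> processBatch(List<OrderEvent> events) {
        // 1. One query for all orders
        List<Long> orderIds = events.stream()
                .map(OrderEvent::getOrderId)
                .collect(Collectors.toList());
        Map<Long, Order> orders = orderRepository.findByIdIn(orderIds).stream()
                .collect(Collectors.toMap(Order::getId, Function.identity()));

        // 2. One batched update
        List<Order> toUpdate = new ArrayList<>();
        for (OrderEvent event : events) {
            Order order = orders.get(event.getOrderId());
            if (order != null && order.getStatus() != OrderStatus.PAID) {
                order.setStatus(OrderStatus.PAID);
                toUpdate.add(order);
            }
        }
        if (!toUpdate.isEmpty()) {
            orderRepository.saveAll(toUpdate);
        }
        return toUpdate;
    }

    // Batch send: a RocketMQ batch must share one topic and stay under the broker's size limit
    public void sendBatch(String topic, List<String> payloads) {
        List<Message<String>> messages = payloads.stream()
                .map(p -> MessageBuilder.withPayload(p).build())
                .collect(Collectors.toList());
        try {
            SendResult result = rocketMQTemplate.syncSend(topic, messages);
            log.info("Batch send result: {}", result);
        } catch (Exception e) {
            log.error("Batch send failed", e);
            // Retry on failure
            retryBatchSend(topic, payloads);
        }
    }
}
```
Listing 12: Batch processing optimization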
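Batches need an upper bound. RocketMQ requires all messages in one batch to share a topic and limits the total batch size on the broker side, and very large database batches hold locks longer. Here is a minimal, hypothetical helper for splitting a list into bounded batches before calling a batch API. The cap is an illustrative parameter, not a recommended value.

```java
import java.util.ArrayList;
import java.util.List;

final class Batches {
    /** Splits items into consecutive sublists of at most maxSize elements. */
    static <T> List<List<T>> partition(List<T> items, int maxSize) {
        if (maxSize <= 0) {
            throw new IllegalArgumentException("maxSize must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int from = 0; from < items.size(); from += maxSize) {
            int to = Math.min(from + maxSize, items.size());
            // Copy so each batch stays valid even if the source list changes later
            batches.add(new ArrayList<>(items.subList(from, to)));
        }
        return batches;
    }
}
```

For example, 250 order IDs with a cap of 100 become three round trips (100, 100, and 50 messages) instead of 250.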

5.2 Asynchronous Processing

Synchronous waiting is a performance killer:

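```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.stream.Collectors;

import jakarta.annotation.PreDestroy; // javax.annotation.PreDestroy on Spring Boot 2
import lombok.extern.slf4j.Slf4j;
import org.springframework.stereotype.Component;

// Message, ProcessResult and the helper methods are project-specific (not shown)
@Component
@Slf4j
public class AsyncMessageProcessor {

    private final ExecutorService executor = Executors.newFixedThreadPool(
            Runtime.getRuntime().availableProcessors() * 2);

    @PreDestroy
    public void shutdown() {
        executor.shutdown();
    }

    // Asynchronous processing
    public void processAsync(Message message) {
        CompletableFuture.supplyAsync(() -> processMessage(message), executor)
                .exceptionally(ex -> {
                    // Exception handling
                    log.error("Failed to process message", ex);
                    handleProcessException(message, ex);
                    return null;
                })
                .thenAccept(result -> {
                    // Follow-up work once processing completes
                    if (result != null && result.isSuccess()) {
                        sendNextMessage(result);
                    }
                });
    }

    // Parallel processing
    public void processParallel(List<Message> messages) {
        List<CompletableFuture<ProcessResult>> futures = messages.stream()
                .map(msg -> CompletableFuture.supplyAsync(() -> processMessage(msg), executor))
                .collect(Collectors.toList());

        // allOf completes exceptionally if any task fails, and thenAccept would then
        // skip the summary entirely; whenComplete always runs
        CompletableFuture.allOf(futures.toArray(new CompletableFuture[0]))
                .whenComplete((v, ex) -> {
                    long successCount = futures.stream()
                            .filter(f -> !f.isCompletedExceptionally())
                            .map(CompletableFuture::join)
                            .filter(r -> r != null && r.isSuccess())
                            .count();
                    log.info("Batch processing finished: {}/{}", successCount, messages.size());
                });
    }

    // Asynchronous processing with a concurrency limit
    public void processWithRateLimit(List<Message> messages) {
        Semaphore semaphore = new Semaphore(10); // at most 10 tasks in flight
        List<CompletableFuture<ProcessResult>> futures = new ArrayList<>();
        for (Message msg : messages) {
            try {
                semaphore.acquire(); // blocks the submitter, not a pool thread
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
            futures.add(CompletableFuture.supplyAsync(() -> processMessage(msg), executor)
                    .whenComplete((r, ex) -> semaphore.release()));
        }
        // Monitor processing progress
        monitorProcessingProgress(futures);
    }
}
```
Listing 13: Asynchronous processing optimization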
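The key property of a concurrency limiter is where it blocks. If the permit is acquired in the submitting thread, before the task reaches the pool, pool threads stay free and the number of in-flight tasks is bounded. The self-contained sketch below runs tasks with at most `limit` in flight and records the peak it observed. `BoundedRunner` and `runAll` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

final class BoundedRunner {
    final AtomicInteger inFlight = new AtomicInteger();
    final AtomicInteger peak = new AtomicInteger();

    /** Runs all tasks with at most `limit` executing at once; results keep task order. */
    <T> List<T> runAll(List<Supplier<T>> tasks, int limit, ExecutorService pool) {
        Semaphore permits = new Semaphore(limit);
        List<CompletableFuture<T>> futures = new ArrayList<>();
        for (Supplier<T> task : tasks) {
            try {
                permits.acquire(); // blocks the submitter, not a pool thread
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break; // stop submitting; already-submitted tasks still complete
            }
            futures.add(CompletableFuture.supplyAsync(() -> {
                peak.accumulateAndGet(inFlight.incrementAndGet(), Math::max);
                try {
                    return task.get();
                } finally {
                    inFlight.decrementAndGet();
                }
            }, pool).whenComplete((r, ex) -> permits.release())); // permit returned after the task ends
        }
        List<T> results = new ArrayList<>();
        for (CompletableFuture<T> f : futures) {
            results.add(f.join());
        }
        return results;
    }
}
```

Even with an 8-thread pool and 20 tasks, a limit of 3 keeps the observed peak at or below 3. If the `acquire` moved inside the task, 8 threads could be occupied while most of them only wait for permits.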

5.3 Performance Test Comparison

Test environment:

- 3-node Kafka cluster
- 2-node RocketMQ cluster
- MySQL 8.0
- 16-core / 32 GB servers

Results:

| Scenario | Single-message TPS | Batch TPS | Improvement | Average latency |
|---|---|---|---|---|
| Order creation | 420 | 2850 | 578% | 85 ms → 15 ms |
| Payment processing | 380 | 3200 | 742% | 120 ms → 20 ms |
| Inventory deduction | 450 | 3800 | 744% | 95 ms → 18 ms |
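The "Improvement" column is the relative throughput gain of batching over single-message processing. Worked out for the order-creation row:

$$
\text{improvement} = \frac{\text{TPS}_{\text{batch}} - \text{TPS}_{\text{single}}}{\text{TPS}_{\text{single}}} = \frac{2850 - 420}{420} = \frac{2430}{420} \approx 5.786 \quad (\text{shown as } 578\%)
$$

The other rows follow the same formula: $(3200 - 380)/380 \approx 7.42$ and $(3800 - 450)/450 \approx 7.44$. Equivalently, batch throughput is about 6.8 to 8.4 times the single-message throughput.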

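Summary of the gains:

- Batching raised throughput by 578% to 744% (batch TPS is roughly 6.8 to 8.4 times single-message TPS)
- Asynchronous processing cuts waiting time
- Reusing connection pools reduces overhead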

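6. Monitoring and Alerting

6.1 Key Metrics

```yaml
# application.yml: metric switches (project-specific config, not a Prometheus format)
metrics:
  mq:
    producer:
      sent_total: true
      sent_failed_total: true
      sent_duration_seconds: true
    consumer:
      received_total: true
      processed_total: true
      processing_duration_seconds: true
      lag_seconds: true
    message:
      age_seconds: true
      retry_count: true
---
# Prometheus alerting rules file (rules must sit inside a named group)
groups:
  - name: mq-alerts
    rules:
      - alert: HighConsumerLag
        expr: avg_over_time(mq_consumer_lag_seconds[5m]) > 300
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: "Severe consumer backlog"
      - alert: HighMessageRetry
        # more than 10 retries per second, averaged over 5 minutes
        expr: rate(mq_message_retry_count_total[5m]) > 10
        labels:
          severity: warning
        annotations:
          summary: "Message retry rate too high"
```
Listing 14: Monitoring configuration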

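6.2 Health Checks

```java
import java.util.HashMap;
import java.util.Map;

import org.apache.kafka.clients.admin.TopicDescription;
import org.apache.rocketmq.spring.core.RocketMQTemplate;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaAdmin;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.servlet.ModelAndView;

// checkRocketMQHealth, checkConsumerHealth, checkMessageBacklog and the
// dashboard statistics helpers are project-specific (not shown)
@RestController
@RequestMapping("/api/health")
public class MessageQueueHealthController {

    @Autowired
    private KafkaAdmin kafkaAdmin;

    @Autowired
    private RocketMQTemplate rocketMQTemplate;

    @GetMapping("/mq")
    public Map<String, Object> mqHealth() {
        Map<String, Object> health = new HashMap<>();
        health.put("kafka", checkKafkaHealth());          // Kafka
        health.put("rocketmq", checkRocketMQHealth());    // RocketMQ
        health.put("consumers", checkConsumerHealth());   // consumers
        health.put("backlog", checkMessageBacklog());     // message backlog
        return health;
    }

    private Map<String, Object> checkKafkaHealth() {
        Map<String, Object> kafkaHealth = new HashMap<>();
        try {
            // One describe call both checks connectivity and returns topic metadata
            // (KafkaAdmin.describeTopics takes topic names as varargs)
            Map<String, TopicDescription> topics = kafkaAdmin.describeTopics("test-topic");
            kafkaHealth.put("status", "UP");
            kafkaHealth.put("topics", topics.size());
            // Check partitions
            kafkaHealth.put("partitions", topics.get("test-topic").partitions().size());
        } catch (Exception e) {
            kafkaHealth.put("status", "DOWN");
            kafkaHealth.put("error", e.getMessage());
        }
        return kafkaHealth;
    }

    // Monitoring dashboard
    @GetMapping("/dashboard")
    public ModelAndView mqDashboard() {
        ModelAndView mav = new ModelAndView("mq-dashboard");
        // Live statistics
        mav.addObject("todayMessages", getTodayMessageCount());
        mav.addObject("successRate", getSuccessRate());
        mav.addObject("avgProcessTime", getAverageProcessTime());
        mav.addObject("topics", getTopicStats());
        return mav;
    }
}
```
Listing 15: Health check implementation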

7. Solutions to Common Problems

7.1 Message Loss

Problem: a message is sent but then lost, and the consumer never receives it.

Solution:

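```java
import java.nio.charset.StandardCharsets;
import java.time.LocalDateTime;
import java.util.List;

import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.client.producer.SendStatus;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.spring.core.RocketMQTemplate;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.support.Acknowledgment;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

// messageRepository and the helper methods are project-specific (not shown)
@Component
@Slf4j
public class MessageLossPrevention {

    @Autowired
    private RocketMQTemplate rocketMQTemplate;

    // 1. Producer-side confirmation
    public void sendWithConfirm(String topic, String message) {
        SendResult result = rocketMQTemplate.syncSend(topic, message);
        if (result.getSendStatus() != SendStatus.SEND_OK) {
            // Store locally and retry on a schedule
            saveToLocalStore(topic, message);
            throw new MessageSendException("Message send failed");
        }
        // Record the successful send
        logSendSuccess(result.getMsgId(), topic, message);
    }

    // 2. Broker persistence confirmation
    public void sendWithPersistence(String topic, String message) throws Exception {
        Message msg = new Message(topic, message.getBytes(StandardCharsets.UTF_8));
        // Wait for the broker to store the message (true is already the default)
        msg.setWaitStoreMsgOK(true);
        // Native producer send. With the broker in SYNC_FLUSH mode (and synchronous
        // replication), SEND_OK means flushed to disk (and replicated)
        SendResult result = rocketMQTemplate.getProducer().send(msg);
        if (result.getSendStatus() == SendStatus.SEND_OK) {
            log.info("Message persisted: {}", result.getMsgId());
        } else {
            // e.g. FLUSH_DISK_TIMEOUT or SLAVE_NOT_AVAILABLE: not as durable as required
            saveToLocalStore(topic, message);
            log.warn("Message not fully persisted ({}): {}", result.getSendStatus(), result.getMsgId());
        }
    }

    // 3. Consumer-side acknowledgement (requires AckMode.MANUAL or MANUAL_IMMEDIATE)
    @KafkaListener(topics = "important-topic")
    public void consumeWithManualAck(ConsumerRecord<String, String> record, Acknowledgment ack) {
        try {
            // Process the message
            processImportantMessage(record.value());
            // Acknowledge only after successful processing
            ack.acknowledge();
            // Record the successful consumption
            logConsumeSuccess(record.key(), record.offset());
        } catch (RuntimeException e) {
            log.error("Failed to process message", e);
            // Skipping the ack alone does not trigger redelivery, and a later ack would
            // commit past this record; rethrow so the error handler seeks back and retries
            throw e;
        }
    }

    // 4. Periodic reconciliation
    @Scheduled(cron = "0 */5 * * * ?") // every 5 minutes
    public void reconcileMessages() {
        // Messages sent more than 10 minutes ago that are still unconfirmed
        List<UnconfirmedMessage> unconfirmed =
                messageRepository.findUnconfirmed(LocalDateTime.now().minusMinutes(10));
        for (UnconfirmedMessage msg : unconfirmed) {
            // Check the message status
            MessageStatus status = queryMessageStatus(msg.getMessageId());
            if (status == MessageStatus.NOT_FOUND) {
                // Message lost: resend it (consumers must be idempotent)
                resendMessage(msg);
                log.warn("Message lost, resent: {}", msg.getMessageId());
            }
        }
    }
}
```
Listing 16: Message loss prevention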

7.2 Duplicate Messages

Problem: the same message is consumed more than once.

Solution:

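```java
import jakarta.annotation.PostConstruct; // javax.annotation.PostConstruct on Spring Boot 2
import lombok.extern.slf4j.Slf4j;
import org.springframework.dao.DuplicateKeyException;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;
import org.springframework.transaction.annotation.Transactional;

// jdbcTemplate, repositories and paymentService are injected (not shown)
@Component
@Slf4j
public class MessageDuplicateHandler {

    // Global message dedup table
    @PostConstruct
    public void initMessageDedupTable() {
        jdbcTemplate.execute("""
                CREATE TABLE IF NOT EXISTS global_message_dedup (
                    message_id   VARCHAR(64) PRIMARY KEY,
                    biz_type     VARCHAR(32) NOT NULL,
                    biz_id       VARCHAR(64) NOT NULL,
                    created_time DATETIME DEFAULT CURRENT_TIMESTAMP,
                    INDEX idx_biz (biz_type, biz_id)
                )
                """);
    }

    // Check before consuming. A plain INSERT makes a duplicate raise DuplicateKeyException;
    // ON DUPLICATE KEY UPDATE would raise nothing, and with MySQL Connector/J's default
    // "found rows" reporting it returns 1 even when the row already exists.
    public boolean checkAndSetProcessed(String messageId, String bizType, String bizId) {
        String sql = """
                INSERT INTO global_message_dedup (message_id, biz_type, biz_id)
                VALUES (?, ?, ?)
                """;
        try {
            jdbcTemplate.update(sql, messageId, bizType, bizId);
            return true;  // inserted: first time this message is processed
        } catch (DuplicateKeyException e) {
            return false; // already processed
        }
    }

    // Clean up expired records
    @Scheduled(cron = "0 0 3 * * ?") // every day at 03:00
    public void cleanExpiredDedupRecords() {
        String sql = "DELETE FROM global_message_dedup "
                + "WHERE created_time < DATE_SUB(NOW(), INTERVAL 7 DAY)";
        int deleted = jdbcTemplate.update(sql);
        log.info("Cleaned up {} expired dedup records", deleted);
    }

    // Business-level idempotency
    @Transactional
    public void processWithBusinessIdempotent(PaymentEvent event) {
        // 1. Already processed?
        Payment payment = paymentRepository.findByOrderId(event.getOrderId());
        if (payment != null && payment.getStatus() == PaymentStatus.SUCCESS) {
            log.info("Payment already processed, skipping: {}", event.getOrderId());
            return;
        }
        // 2. Database unique constraint as the final guard (with a JPA repository,
        //    flush here, e.g. saveAndFlush, so a duplicate surfaces inside this try block)
        try {
            PaymentRecord record = new PaymentRecord();
            record.setOrderId(event.getOrderId());
            record.setTransactionId(event.getTransactionId());
            record.setAmount(event.getAmount());
            paymentRecordRepository.save(record);
        } catch (DuplicateKeyException e) {
            log.info("Payment record already exists, skipping: {}", event.getOrderId());
            return;
        }
        // 3. Run the business logic
        paymentService.processPayment(event);
    }
}
```
Listing 17: Duplicate message handling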

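8. Selection Guide

8.1 Message Queue Comparison

| Feature (more ⭐ = better) | Kafka | RocketMQ | RabbitMQ | Recommended for |
|---|---|---|---|---|
| Throughput | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐ | Logs, big data |
| Latency (more ⭐ = lower) | ⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ | Real-time transactions |
| Transactional messages (half message + check-back) | ❌ | ✅ | ⚠️ | Distributed transactions |
| Ordered messages | ✅ | ✅ | ⚠️ | Order processing |
| Backlog capacity | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐ | Peak shaving |
| Operational complexity | High | Medium | Low | Depends on team skills |

Kafka does support transactions (atomic writes across partitions, used for exactly-once processing). It does not offer RocketMQ-style transactional messages that are tied to a local database transaction through a check-back, which is what the ❌ refers to.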

8.2 我的"消息事务军规"

-

能异步不同步:优先使用消息队列解耦

-

必须有幂等:消费端必须实现幂等

-

必须有监控:消息链路全监控

-

必须有补偿:设计补偿和重试机制

-

必须有对账:定期对账保证最终一致

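9. Final Words

Eventual consistency based on message queues is not a silver bullet, but it is the most practical distributed transaction approach in a microservice architecture. Only by understanding the principles, designing sensibly, and monitoring continuously can you make good use of this powerful tool.

I have seen too many teams stumble here: some lost messages and ended up with inconsistent data, some consumed messages twice and corrupted business data, and some hit ordering problems that scrambled state.

Remember: a message queue is a tool, not magic. Design a solution that fits your business, and get the monitoring and compensation right. That is the way to do it.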

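📚 Recommended Reading

Official Documentation

- **RocketMQ Transactional Messages** - the official RocketMQ guide to transactional messages
- **Kafka Exactly-Once** - Kafka's exactly-once semantics

Source Code

- **RocketMQ source code** - the transactional message implementation
- **Kafka source code** - message storage and consumption

Best Practices

Monitoring Tools

- **RocketMQ Dashboard** - RocketMQ monitoring
- **Kafka Manager** - Kafka cluster management

Final advice: start with simple scenarios, and move on to complex designs only once you understand the principles. Monitor well, design for compensation, and keep optimizing. Remember: optimizing message transactions is an ongoing process, not a one-off task.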