首先,感谢ultralytics又推出了新的yolo系列模型

先安装最新版本的ultralytics

bash

pip install -U ultralytics一、检测模型的onnxruntime推理

1. 导出onnx模型

然后,导出onnx模型

python

from ultralytics import YOLO

def export():

model = YOLO('yolo26s.pt")

model.export(format="onnx")

if __name__ == '__main__':

export()或者使用命令行的方式导出:

bash

yolo export model=./yolo26s.pt format=onnx imgsz=640 opset=122. 使用onnxruntime推理

与v8、v11最大的不同,detect模型v26输出的是300*6, 6->x1,y1,x2,y2,score,class_id

python

import cv2

import os

import numpy as np

import onnxruntime

onnxruntime.preload_dlls()#新版本的onnxruntime-gpu如果调用不了gpu,可以加上这一句命令,或者在import onnxruntime之间import torch

class Yolov26():

def __init__(self, modelpath,classes=80,size=640,confThreshold=0.25,nmsThreshold=0.5):

self.net = onnxruntime.InferenceSession(modelpath,providers='CUDAExecutionProvider'])

self.confThreshold=confThreshold

self.nmsThreshold=nmsThreshold

self.inpWidth = size

self.inpHeight = self.inpWidth

self.classes = classes

self.color = []

for i in range(classes):

self.color.append([np.random.randint(0, 256), np.random.randint(0, 256), np.random.randint(0, 256)])

def resize_image(self, srcimg, keep_ratio=True):

top, left, newh, neww = 0, 0, self.inpWidth, self.inpHeight

if keep_ratio and srcimg.shape[0] != srcimg.shape[1]:

hw_scale = srcimg.shape[0] / srcimg.shape[1]

if hw_scale > 1:

newh, neww = self.inpHeight, int(self.inpWidth / hw_scale)

img = cv2.resize(srcimg, (neww, newh), interpolation=cv2.INTER_AREA)

left = int((self.inpWidth - neww) * 0.5)

img = cv2.copyMakeBorder(img, 0, 0, left, self.inpWidth - neww - left, cv2.BORDER_CONSTANT,

value=(114, 114, 114)) # add border

else:

newh, neww = int(self.inpHeight * hw_scale), self.inpWidth

img = cv2.resize(srcimg, (neww, newh), interpolation=cv2.INTER_AREA)

top = int((self.inpHeight - newh) * 0.5)

img = cv2.copyMakeBorder(img, top, self.inpHeight - newh - top, 0, 0, cv2.BORDER_CONSTANT,

value=(114, 114, 114))

else:

img = cv2.resize(srcimg, (self.inpWidth, self.inpHeight), interpolation=cv2.INTER_AREA)

return img, newh, neww, top, left

def postprocessbox(self, frame, outs, padsize=None):

frameHeight = frame.shape[0]

frameWidth = frame.shape[1]

newh, neww, padh, padw = padsize

ratioh, ratiow = frameHeight / newh, frameWidth / neww

confidences = []

boxes = []

classIds = []

for detection in outs:

'''与v8、v11最大的不同,detect模型v26输出的是x1,y1,x2,y2,score,class_id'''

confidence = detection[4]

classId = detection[5]

if confidence > self.confThreshold:

x1 = int( np.ceil( (detection[0] - padw) * ratiow))

y1 = int( np.ceil( (detection[1] - padh) * ratioh))

x2 = int( np.ceil( (detection[2] - padw) * ratiow))

y2 = int( np.ceil( (detection[3] - padh) * ratioh))

boxes.append([int(x1), int(y1), int(x2-x1), int(y2-y1)])

confidences.append(float(confidence))

classIds.append(classId)

# Perform non maximum suppression to eliminate redundant overlapping boxes with

# lower confidences.

idxs = cv2.dnn.NMSBoxes(boxes, confidences, self.confThreshold,self.nmsThreshold)

box=np.zeros((0,4))

labels=np.zeros((0,))

confs=np.zeros((0,))

if len(idxs)>0:

box_seq = idxs.flatten()

box = np.array(boxes)[box_seq]

labels = np.array(classIds)[box_seq]

confs = np.array(confidences)[box_seq]

box[:, 2] += box[:, 0]

box[:, 3] += box[:, 1]

return box.reshape(-1,4).astype('int32'),labels.astype('int32'),confs

else:

return np.array([]),np.array([]),np.array([])

def detect(self,srcimg,crop=False):

self.srcimg = srcimg

img, newh, neww, padh, padw = self.resize_image(self.srcimg)

blob = cv2.dnn.blobFromImage(img, scalefactor=1 / 255.0, swapRB=True)

out = self.net.run(['output0'], {'images': blob})

detouts=out[0][0]

boxes,labels,confs = self.postprocessbox(self.srcimg, detouts, padsize=(newh, neww, padh, padw))

if boxes.shape[0]==0:

return np.zeros((0,4),dtype=np.int32),np.zeros((0,),dtype=np.int32),np.zeros((0,),dtype=np.float32)

else:

return boxes,labels,confs

def draw(self,srcimg,box,label,conf):

for i in range(box.shape[0]):

cv2.rectangle(srcimg, (box[i,0], box[i,1]), (box[i,2], box[i,3]), self.color[label[i]], 2)

cv2.putText(srcimg,'%d:%.2f'%(label[i],conf[i]), (box[i,0], box[i,1]), cv2.FONT_ITALIC, 1, self.color[label[i]], 1)

return srcimg

if __name__ == '__main__':

detectnet1 = Yolov26("yolo26s.onnx",use_gpu=True,classes=80,size=640,confThreshold=0.5)

filepath ='general_images/'

save_path ='temp_result/'

os.makedirs(save_path,exist_ok=True)

for path in os.listdir(filepath):

print(path)

if not path.endswith(".png") and not path.endswith(".jpg") and not path.endswith(".jpeg"):

continue

imgpath=filepath+path

srcimg = cv2.imread(imgpath)

boxes,labels,confs = detectnet1.detect(srcimg)

srcimg = detectnet1.draw(srcimg,boxes,labels,confs)

cv2.imwrite(os.path.join(save_path, path), srcimg)

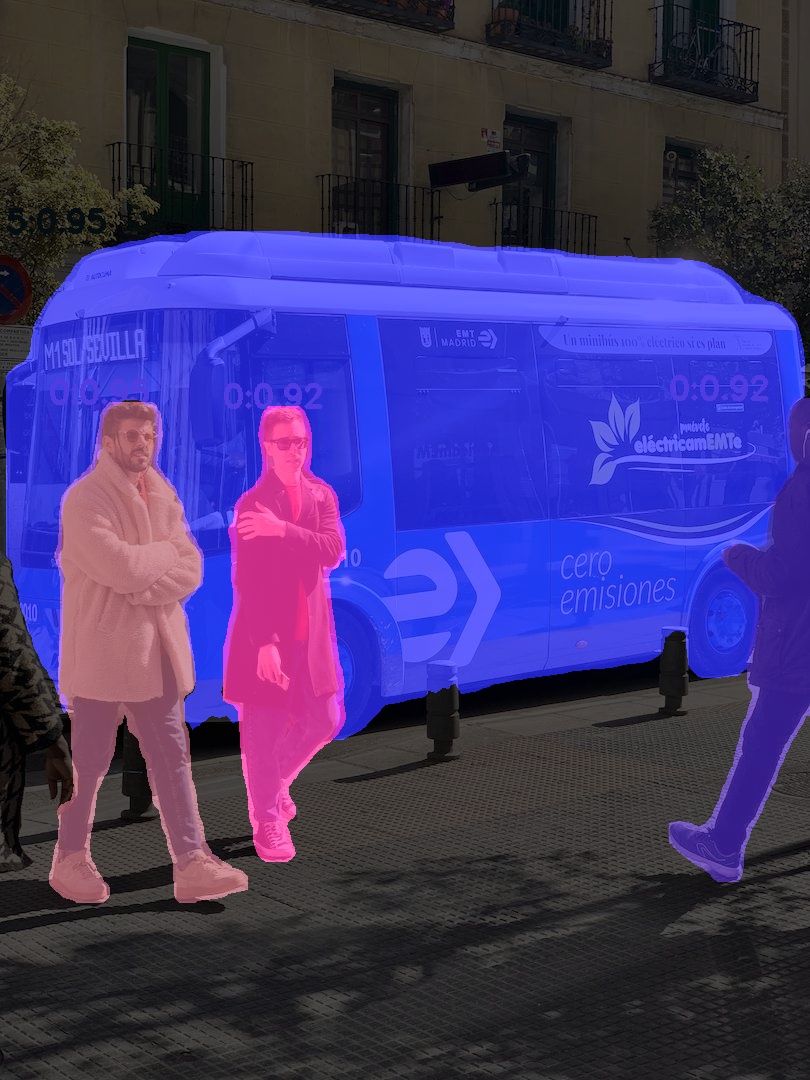

二、分割模型的onnxruntime推理

首先依旧是导出onnx模型

python

from ultralytics import YOLO

def export():

model = YOLO('yolo26s-seg.pt")

model.export(format="onnx")

if __name__ == '__main__':

export()或者使用命令行的方式导出:

bash

yolo export model=./yolo26s-seg.pt format=onnx imgsz=640 opset=122. 使用onnxruntime推理

与v8、v11最大的不同,分割模型一个输出的则是30038 , x1,y1,x2,y2,score,class_id,mask1,mask2,...,mask32

另一个输出跟v8/v11就是一样的了,都是32160*160

python

import os

import time

import cv2

import numpy as np

import onnxruntime

onnxruntime.preload_dlls()

from numpy.random import randint

class Yolov8Seg():

def __init__(self, modelpath,classes=80,input_size = 640):

self.net = onnxruntime.InferenceSession(modelpath,providers=['CUDAExecutionProvider'])

self.confThreshold=0.7

self.nmsThreshold=0.5

self.maskThreshold=0.3

self.inpWidth = input_size

self.inpHeight = self.inpWidth

self.classes = classes

self.segchannel = 32

self.segwidth = self.inpWidth//4

self.segheight = self.inpHeight//4

self.color = []

for i in range(classes):

self.color.append([randint(0, 256), randint(0, 256), randint(0, 256)])

def resize_image(self, srcimg, keep_ratio=True):

top, left, newh, neww = 0, 0, self.inpWidth, self.inpHeight

if keep_ratio and srcimg.shape[0] != srcimg.shape[1]:

hw_scale = srcimg.shape[0] / srcimg.shape[1]

if hw_scale > 1:

newh, neww = self.inpHeight, int(self.inpWidth / hw_scale)

img = cv2.resize(srcimg, (neww, newh), interpolation=cv2.INTER_AREA)

left = int((self.inpWidth - neww) * 0.5)

img = cv2.copyMakeBorder(img, 0, 0, left, self.inpWidth - neww - left, cv2.BORDER_CONSTANT,

value=(114, 114, 114)) # add border

else:

newh, neww = int(self.inpHeight * hw_scale), self.inpWidth

img = cv2.resize(srcimg, (neww, newh), interpolation=cv2.INTER_AREA)

top = int((self.inpHeight - newh) * 0.5)

img = cv2.copyMakeBorder(img, top, self.inpHeight - newh - top, 0, 0, cv2.BORDER_CONSTANT,

value=(114, 114, 114))

else:

img = cv2.resize(srcimg, (self.inpWidth, self.inpHeight), interpolation=cv2.INTER_AREA)

return img, newh, neww, top, left

def postprocessbox(self, frame, outs, padsize=None):

frameHeight = frame.shape[0]

frameWidth = frame.shape[1]

newh, neww, padh, padw = padsize

ratioh, ratiow = frameHeight / newh, frameWidth / neww

confidences = []

boxes = []

classIds = []

temp_proto = []

for detection in outs:

confidence = detection[4]

classId = detection[5]

if confidence > self.confThreshold:

x1 = int( np.ceil( (detection[0] - padw) * ratiow))

y1 = int( np.ceil( (detection[1] - padh) * ratioh))

x2 = int( np.ceil( (detection[2] - padw) * ratiow))

y2 = int( np.ceil( (detection[3] - padh) * ratioh))

# 更新检测出来的框

boxes.append([int(x1), int(y1), int(x2-x1), int(y2-y1)])

confidences.append(float(confidence))

classIds.append(classId)

temp_proto.append(detection[6:6+self.segchannel])

# Perform non maximum suppression to eliminate redundant overlapping boxes with

# lower confidences.

idxs = cv2.dnn.NMSBoxes(boxes, confidences, self.confThreshold,self.nmsThreshold)

box=np.zeros((0,4))

labels=np.zeros((0,))

confs=np.zeros((0,))

if len(idxs)>0:

box_seq = idxs.flatten()

box = np.array(boxes)[box_seq]

labels = np.array(classIds)[box_seq]

confs = np.array(confidences)[box_seq]

temp_proto= np.array(temp_proto)[box_seq]

box[:, 2] += box[:, 0]

box[:, 3] += box[:, 1]

return box.reshape(-1,4).astype('int32'),labels.astype('int32'),confs,temp_proto.reshape(-1,32)

else:

return np.array([]),np.array([]),np.array([]),np.array([])

def postprocessmask(self,srcimg,maskouts,temp_proto,boxes,padsize):

newh, neww, padh, padw = padsize

protos=maskouts.reshape(self.segchannel,-1)

matmulRes = temp_proto .dot( protos) #n*(160*160)

masks = matmulRes.reshape(-1,self.segwidth,self.segheight)

masks = 1 / (1+np.exp(-masks))

left,top,width,height=int(padw/self.inpWidth*self.segwidth),int(padh/self.inpHeight*self.segheight),\

int(self.segwidth-padw/2),int(self.segheight-padh/2)

masks_roi=masks[:,top:top+height,left:left+width]

output_mask=[]

for i in range(masks.shape[0]):

masks_roi_resize=cv2.resize(masks_roi[i],(srcimg.shape[1],srcimg.shape[0]))

rect=boxes[i]

mask=masks_roi_resize[rect[1]:rect[3],rect[0]:rect[2]]

mask[mask< self.maskThreshold]=0

mask[mask>0]=1

#缩小一点 减少误识别

#element = cv2.getStructuringElement(cv2.MORPH_RECT, (5,5));

#mask=cv2.erode(mask, element)

# 只保留最大的连通域

contours, _ = cv2.findContours(mask.astype(np.uint8), cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

m = []

for contour in contours:

m.append(cv2.contourArea(contour))

if m:

largest_contour_index = np.argmax(m)

mask = np.zeros_like(mask)

cv2.drawContours(mask, [contours[largest_contour_index]], -1, 1, -1)

output_mask.append(mask)

return output_mask

def detect(self, srcimg):

t0=time.time()

img, newh, neww, padh, padw = self.resize_image(srcimg)

blob = cv2.dnn.blobFromImage(img, scalefactor=1 / 255.0, swapRB=True)

t1=time.time()

# print("preprocess time:",t1-t0)

out = self.net.run(['output0','output1'], {'images': blob})

t2=time.time()

detouts,maskouts=out[0][0],out[1][0]

# print(detouts)

boxes,labels,confs,temp_proto = self.postprocessbox(srcimg, detouts, padsize=(newh, neww, padh, padw))

if boxes.shape[0]==0:

return np.zeros((0,4)),labels,confs,temp_proto

output_mask=self.postprocessmask(srcimg, maskouts,temp_proto, boxes,padsize=(newh, neww, padh, padw))

return boxes,labels,confs,output_mask

def draw(self, srcimg, box, label, conf, output_mask):

mask = np.zeros(srcimg.shape, dtype=np.float32)

for i in range(box.shape[0]):

cv2.rectangle(srcimg, (box[i,0], box[i,1]), (box[i,2], box[i,3]), self.color[label[i]], 3)

cv2.putText(srcimg, '%d:%.2f' % (label[i], conf[i]), (box[i,0], box[i,1]),

cv2.FONT_ITALIC, 1, self.color[label[i]], 3)

x1, y1, x2, y2 = box[i,0], box[i,1], box[i,2], box[i,3]

mask_h, mask_w = output_mask[i].shape[0], output_mask[i].shape[1]

if mask_h > 0 and mask_w > 0 and y2 > y1 and x2 > x1:

mask[y1:y2, x1:x2] = np.maximum(mask[y1:y2, x1:x2],

output_mask[i][:mask_h, :mask_w, np.newaxis].astype(np.float32) * self.color[i])

mask = mask.astype('uint8')

srcimgt = cv2.addWeighted(srcimg, 0.3, mask, 0.8, 1)

return srcimgt

path = './'

detectnet2 = Yolov8Seg(path+'yolo26s-seg.onnx',classes=80,input_size=640)

path =path+ 'images/'

savepath = path+'result/'

for ip in os.listdir(path):

t=time.time()

imgpath=path+ip

srcimg = cv2.imread(imgpath)

boxes1,labels1,confs1,output_mask1 = detectnet2.detect(srcimg)#

srcimg = detectnet2.draw(srcimg, boxes1, labels1, confs1, output_mask1)

print(time.time()-t)

cv2.imwrite(savepath+ip,srcimg)