Test deployment of SSR-Net on a Raspberry Pi 4B: the pipeline runs end to end at under 10 FPS, with all four CPU cores at 60-70% utilization.

I. Environment Setup

Hardware:

One Raspberry Pi 4B and one USB camera.

Install Miniforge

wget https://github.com/conda-forge/miniforge/releases/download/23.3.1-1/Miniforge3-Linux-aarch64.sh

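bash Miniforge3-Linux-aarch64.sh  # follow the installer prompts

# Edit the profile file to put Miniforge on PATH, then reboot or reopen the terminal

sudo vim /etc/profile

PATH="/home/tony/miniforge3/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/local/games:/usr/games"

Create a virtual environment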

conda create -n ssrnet_env python=3.7

conda init  # reopen the terminal afterwards so that conda activate works

conda activate ssrnet_env

Install dependencies

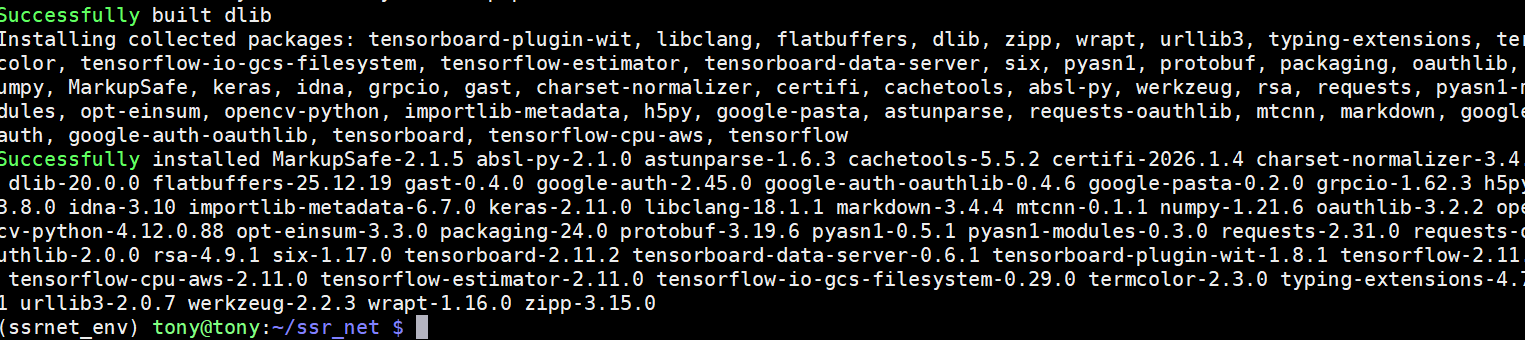

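Make sure the dependencies are installed (for Python 3.7; do not pin versions):

Install the core dependencies

pip install keras tensorflow opencv-python numpy dlib mtcnn moviepy -i https://mirrors.aliyun.com/pypi/simple

Using a mirror located in China is recommended for faster downloads:

# Mirrors in China:

https://repo.huaweicloud.com/pypi/simple/

https://mirrors.aliyun.com/pypi/simple

# Specify the package index on the command line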

pip install tensorflow-io==0.27.0 -i https://mirrors.aliyun.com/pypi/simple

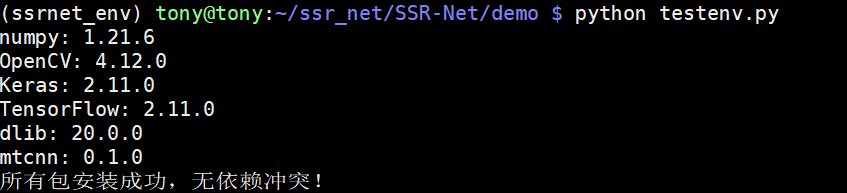

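Check the dependency environment

Save the following as testenv.py and run it to check package versions and compatibility:

import numpy as np
import cv2
import keras
import tensorflow as tf
import dlib
import mtcnn

print(f"numpy: {np.__version__}")
print(f"OpenCV: {cv2.__version__}")
print(f"Keras: {keras.__version__}")
print(f"TensorFlow: {tf.__version__}")
print(f"dlib: {dlib.__version__}")
print(f"mtcnn: {mtcnn.__version__}")
print("All packages imported successfully, no dependency conflicts!")

If running testenv.py prints a tensorflow-io warning, it is a version conflict; reinstall the version below.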

pip install tensorflow-io==0.27.0 -i https://mirrors.aliyun.com/pypi/simple
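The version check can also be written so that one missing package does not abort the whole report. The following is a minimal sketch and not part of the SSR-Net repository: the helper name `report_versions` is hypothetical, and it assumes each package exposes a `__version__` attribute (packages without one are reported as "unknown").

```python
import importlib


def report_versions(module_names):
    """Map each module name to its version string.

    The value is "unknown" if the module has no __version__ attribute,
    and None if the module cannot be imported at all.
    """
    versions = {}
    for name in module_names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            versions[name] = None  # package is missing or its import is broken
            continue
        versions[name] = str(getattr(module, "__version__", "unknown"))
    return versions
```

Inside the ssrnet_env environment, calling `report_versions(["numpy", "cv2", "keras", "tensorflow", "dlib", "mtcnn"])` and printing the result should list the same versions as testenv.py, with `None` marking anything that failed to import.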

Software environment used for the SSR-Net deployment on the Raspberry Pi 4B:

OS: Debian GNU/Linux 13 (trixie)

Miniforge3

Python: 3.7

numpy: 1.21.6

OpenCV: 4.12.0

Keras: 2.11.0

TensorFlow: 2.11.0

dlib: 20.0.0

mtcnn: 0.1.0

Download the SSR-Net source

git clone https://github.com/shamangary/SSR-Net.git

II. Pre-trained Model Files

The repository stores pre-trained models as JSON (model architecture) + H5 (weights). Example layout:

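SSR-Net/pre-trained/
├── imdb/
│   ├── mobilenet_reg_0.25_64/          # width multiplier 0.25, 64x64 input
│   │   ├── mobilenet_reg_0.25_64.json  # model architecture
│   │   └── mobilenet_reg_0.25_64.h5    # model weights (download or train them yourself)
│   └── mobilenet_reg_0.5_64/           # width multiplier 0.5, 64x64 input
└── wiki/
    └── mobilenet_reg_0.25_64/          # same configuration, trained on the WIKI dataset

Note: the weight files (.h5) must be downloaded from the link provided by the repository author, or generated by training on the dataset yourself.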
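Before running a demo it can help to confirm which weight files are actually present on disk. A minimal sketch, assuming the repository was cloned into the current directory as SSR-Net; the helper name `find_weight_files` is hypothetical and not part of the repository.

```python
from pathlib import Path


def find_weight_files(pretrained_dir):
    """Return every .h5 file below pretrained_dir, sorted by path.

    Returns an empty list if the directory does not exist.
    """
    root = Path(pretrained_dir)
    if not root.is_dir():
        return []
    return sorted(root.rglob("*.h5"))
```

For example, `for path in find_weight_files("SSR-Net/pre-trained"): print(path)` lists the available weights; an empty result suggests the .h5 files still need to be downloaded or trained.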

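III. Quick Verification with the Bundled Demos

The repository ships demo scripts that call the pre-trained models directly, so there is no need to load a model by hand:

1. Video file inference (CPU)

cd SSR-Net/demo

# Run inference on a video using MTCNN face detection + the pre-trained model

KERAS_BACKEND=tensorflow CUDA_VISIBLE_DEVICES='' python TYY_demo_mtcnn.py TGOP.mp4

Revisions to the SSRNET_model source

Full source: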

import logging
import sys
import numpy as np
from keras.models import Model
# 1. Consolidate all layer imports, including BatchNormalization
#    (the old import from the normalization submodule is removed)
from keras.layers import (
    Input, Activation, add, Dense, Flatten, Dropout, Multiply,
    Embedding, Lambda, Add, Concatenate, Conv2D, AveragePooling2D,
    MaxPooling2D, BatchNormalization, Layer  # key change: Layer is imported from keras.layers
)
from keras.regularizers import l2
from keras import backend as K
from keras.optimizers import SGD, Adam
from keras.utils import plot_model
from keras import activations, initializers, regularizers, constraints

sys.setrecursionlimit(2 ** 20)
np.random.seed(2 ** 10)

class SSR_net:
    def __init__(self, image_size,stage_num,lambda_local,lambda_d):
        if K.image_data_format() == "channels_first":
            logging.debug("image_data_format = 'channels_first'")
            self._channel_axis = 1
            self._input_shape = (3, image_size, image_size)
        else:
            logging.debug("image_data_format = 'channels_last'")
            self._channel_axis = -1
            self._input_shape = (image_size, image_size, 3)

        self.stage_num = stage_num
        self.lambda_local = lambda_local
        self.lambda_d = lambda_d

    # def create_model(self):
    def __call__(self):
        logging.debug("Creating model...")

        inputs = Input(shape=self._input_shape)

        #-------------------------------------------------------------------------------------------------------------------------
        x = Conv2D(32,(3,3))(inputs)
        x = BatchNormalization(axis=self._channel_axis)(x)
        x = Activation('relu')(x)
        x_layer1 = AveragePooling2D(2,2)(x)
        x = Conv2D(32,(3,3))(x_layer1)
        x = BatchNormalization(axis=self._channel_axis)(x)
        x = Activation('relu')(x)
        x_layer2 = AveragePooling2D(2,2)(x)
        x = Conv2D(32,(3,3))(x_layer2)
        x = BatchNormalization(axis=self._channel_axis)(x)
        x = Activation('relu')(x)
        x_layer3 = AveragePooling2D(2,2)(x)
        x = Conv2D(32,(3,3))(x_layer3)
        x = BatchNormalization(axis=self._channel_axis)(x)
        x = Activation('relu')(x)
        #-------------------------------------------------------------------------------------------------------------------------
        s = Conv2D(16,(3,3))(inputs)
        s = BatchNormalization(axis=self._channel_axis)(s)
        s = Activation('tanh')(s)
        s_layer1 = MaxPooling2D(2,2)(s)
        s = Conv2D(16,(3,3))(s_layer1)
        s = BatchNormalization(axis=self._channel_axis)(s)
        s = Activation('tanh')(s)
        s_layer2 = MaxPooling2D(2,2)(s)
        s = Conv2D(16,(3,3))(s_layer2)
        s = BatchNormalization(axis=self._channel_axis)(s)
        s = Activation('tanh')(s)
        s_layer3 = MaxPooling2D(2,2)(s)
        s = Conv2D(16,(3,3))(s_layer3)
        s = BatchNormalization(axis=self._channel_axis)(s)
        s = Activation('tanh')(s)

        #-------------------------------------------------------------------------------------------------------------------------
        # Classifier block
        s_layer4 = Conv2D(10,(1,1),activation='relu')(s)
        s_layer4 = Flatten()(s_layer4)
        s_layer4_mix = Dropout(0.2)(s_layer4)
        s_layer4_mix = Dense(units=self.stage_num[0], activation="relu")(s_layer4_mix)

        x_layer4 = Conv2D(10,(1,1),activation='relu')(x)
        x_layer4 = Flatten()(x_layer4)
        x_layer4_mix = Dropout(0.2)(x_layer4)
        x_layer4_mix = Dense(units=self.stage_num[0], activation="relu")(x_layer4_mix)

        feat_a_s1_pre = Multiply()([s_layer4,x_layer4])
        delta_s1 = Dense(1,activation='tanh',name='delta_s1')(feat_a_s1_pre)

        feat_a_s1 = Multiply()([s_layer4_mix,x_layer4_mix])
        feat_a_s1 = Dense(2*self.stage_num[0],activation='relu')(feat_a_s1)
        pred_a_s1 = Dense(units=self.stage_num[0], activation="relu",name='pred_age_stage1')(feat_a_s1)
        #feat_local_s1 = Lambda(lambda x: x/10)(feat_a_s1)
        #feat_a_s1_local = Dropout(0.2)(pred_a_s1)
        local_s1 = Dense(units=self.stage_num[0], activation='tanh', name='local_delta_stage1')(feat_a_s1)
        #-------------------------------------------------------------------------------------------------------------------------
        s_layer2 = Conv2D(10,(1,1),activation='relu')(s_layer2)
        s_layer2 = MaxPooling2D(4,4)(s_layer2)
        s_layer2 = Flatten()(s_layer2)
        s_layer2_mix = Dropout(0.2)(s_layer2)
        s_layer2_mix = Dense(self.stage_num[1],activation='relu')(s_layer2_mix)

        x_layer2 = Conv2D(10,(1,1),activation='relu')(x_layer2)
        x_layer2 = AveragePooling2D(4,4)(x_layer2)
        x_layer2 = Flatten()(x_layer2)
        x_layer2_mix = Dropout(0.2)(x_layer2)
        x_layer2_mix = Dense(self.stage_num[1],activation='relu')(x_layer2_mix)

        feat_a_s2_pre = Multiply()([s_layer2,x_layer2])
        delta_s2 = Dense(1,activation='tanh',name='delta_s2')(feat_a_s2_pre)

        feat_a_s2 = Multiply()([s_layer2_mix,x_layer2_mix])
        feat_a_s2 = Dense(2*self.stage_num[1],activation='relu')(feat_a_s2)
        pred_a_s2 = Dense(units=self.stage_num[1], activation="relu",name='pred_age_stage2')(feat_a_s2)
        #feat_local_s2 = Lambda(lambda x: x/10)(feat_a_s2)
        #feat_a_s2_local = Dropout(0.2)(pred_a_s2)
        local_s2 = Dense(units=self.stage_num[1], activation='tanh', name='local_delta_stage2')(feat_a_s2)
        #-------------------------------------------------------------------------------------------------------------------------
        s_layer1 = Conv2D(10,(1,1),activation='relu')(s_layer1)
        s_layer1 = MaxPooling2D(8,8)(s_layer1)
        s_layer1 = Flatten()(s_layer1)
        s_layer1_mix = Dropout(0.2)(s_layer1)
        s_layer1_mix = Dense(self.stage_num[2],activation='relu')(s_layer1_mix)

        x_layer1 = Conv2D(10,(1,1),activation='relu')(x_layer1)
        x_layer1 = AveragePooling2D(8,8)(x_layer1)
        x_layer1 = Flatten()(x_layer1)
        x_layer1_mix = Dropout(0.2)(x_layer1)
        x_layer1_mix = Dense(self.stage_num[2],activation='relu')(x_layer1_mix)

        feat_a_s3_pre = Multiply()([s_layer1,x_layer1])
        delta_s3 = Dense(1,activation='tanh',name='delta_s3')(feat_a_s3_pre)

        feat_a_s3 = Multiply()([s_layer1_mix,x_layer1_mix])
        feat_a_s3 = Dense(2*self.stage_num[2],activation='relu')(feat_a_s3)
        pred_a_s3 = Dense(units=self.stage_num[2], activation="relu",name='pred_age_stage3')(feat_a_s3)
        #feat_local_s3 = Lambda(lambda x: x/10)(feat_a_s3)
        #feat_a_s3_local = Dropout(0.2)(pred_a_s3)
        local_s3 = Dense(units=self.stage_num[2], activation='tanh', name='local_delta_stage3')(feat_a_s3)
        #-------------------------------------------------------------------------------------------------------------------------

        def merge_age(x,s1,s2,s3,lambda_local,lambda_d):
            a = x[0][:,0]*0
            b = x[0][:,0]*0
            c = x[0][:,0]*0
            A = s1*s2*s3
            V = 101

            for i in range(0,s1):
                a = a+(i+lambda_local*x[6][:,i])*x[0][:,i]
            a = K.expand_dims(a,-1)
            a = a/(s1*(1+lambda_d*x[3]))

            for j in range(0,s2):
                b = b+(j+lambda_local*x[7][:,j])*x[1][:,j]
            b = K.expand_dims(b,-1)
            b = b/(s1*(1+lambda_d*x[3]))/(s2*(1+lambda_d*x[4]))

            for k in range(0,s3):
                c = c+(k+lambda_local*x[8][:,k])*x[2][:,k]
            c = K.expand_dims(c,-1)
            c = c/(s1*(1+lambda_d*x[3]))/(s2*(1+lambda_d*x[4]))/(s3*(1+lambda_d*x[5]))

            age = (a+b+c)*V
            return age

        pred_a = Lambda(merge_age,arguments={'s1':self.stage_num[0],'s2':self.stage_num[1],'s3':self.stage_num[2],'lambda_local':self.lambda_local,'lambda_d':self.lambda_d},output_shape=(1,),name='pred_a')([pred_a_s1,pred_a_s2,pred_a_s3,delta_s1,delta_s2,delta_s3, local_s1, local_s2, local_s3])

        model = Model(inputs=inputs, outputs=pred_a)

        return model

class SSR_net_general:
    def __init__(self, image_size,stage_num,lambda_local,lambda_d):
        if K.image_data_format() == "channels_first":
            logging.debug("image_data_format = 'channels_first'")
            self._channel_axis = 1
            self._input_shape = (3, image_size, image_size)
        else:
            logging.debug("image_data_format = 'channels_last'")
            self._channel_axis = -1
            self._input_shape = (image_size, image_size, 3)

        self.stage_num = stage_num
        self.lambda_local = lambda_local
        self.lambda_d = lambda_d

    # def create_model(self):
    def __call__(self):
        logging.debug("Creating model...")

        inputs = Input(shape=self._input_shape)

        #-------------------------------------------------------------------------------------------------------------------------
        x = Conv2D(32,(3,3))(inputs)
        x = BatchNormalization(axis=self._channel_axis)(x)
        x = Activation('relu')(x)
        x_layer1 = AveragePooling2D(2,2)(x)
        x = Conv2D(32,(3,3))(x_layer1)
        x = BatchNormalization(axis=self._channel_axis)(x)
        x = Activation('relu')(x)
        x_layer2 = AveragePooling2D(2,2)(x)
        x = Conv2D(32,(3,3))(x_layer2)
        x = BatchNormalization(axis=self._channel_axis)(x)
        x = Activation('relu')(x)
        x_layer3 = AveragePooling2D(2,2)(x)
        x = Conv2D(32,(3,3))(x_layer3)
        x = BatchNormalization(axis=self._channel_axis)(x)
        x = Activation('relu')(x)
        #-------------------------------------------------------------------------------------------------------------------------
        s = Conv2D(16,(3,3))(inputs)
        s = BatchNormalization(axis=self._channel_axis)(s)
        s = Activation('tanh')(s)
        s_layer1 = MaxPooling2D(2,2)(s)
        s = Conv2D(16,(3,3))(s_layer1)
        s = BatchNormalization(axis=self._channel_axis)(s)
        s = Activation('tanh')(s)
        s_layer2 = MaxPooling2D(2,2)(s)
        s = Conv2D(16,(3,3))(s_layer2)
        s = BatchNormalization(axis=self._channel_axis)(s)
        s = Activation('tanh')(s)
        s_layer3 = MaxPooling2D(2,2)(s)
        s = Conv2D(16,(3,3))(s_layer3)
        s = BatchNormalization(axis=self._channel_axis)(s)
        s = Activation('tanh')(s)

        #-------------------------------------------------------------------------------------------------------------------------
        # Classifier block
        s_layer4 = Conv2D(10,(1,1),activation='relu')(s)
        s_layer4 = Flatten()(s_layer4)
        s_layer4_mix = Dropout(0.2)(s_layer4)
        s_layer4_mix = Dense(units=self.stage_num[0], activation="relu")(s_layer4_mix)

        x_layer4 = Conv2D(10,(1,1),activation='relu')(x)
        x_layer4 = Flatten()(x_layer4)
        x_layer4_mix = Dropout(0.2)(x_layer4)
        x_layer4_mix = Dense(units=self.stage_num[0], activation="relu")(x_layer4_mix)

        feat_s1_pre = Multiply()([s_layer4,x_layer4])
        delta_s1 = Dense(1,activation='tanh',name='delta_s1')(feat_s1_pre)

        feat_s1 = Multiply()([s_layer4_mix,x_layer4_mix])
        feat_s1 = Dense(2*self.stage_num[0],activation='relu')(feat_s1)
        pred_s1 = Dense(units=self.stage_num[0], activation="relu",name='pred_stage1')(feat_s1)
        local_s1 = Dense(units=self.stage_num[0], activation='tanh', name='local_delta_stage1')(feat_s1)
        #-------------------------------------------------------------------------------------------------------------------------
        s_layer2 = Conv2D(10,(1,1),activation='relu')(s_layer2)
        s_layer2 = MaxPooling2D(4,4)(s_layer2)
        s_layer2 = Flatten()(s_layer2)
        s_layer2_mix = Dropout(0.2)(s_layer2)
        s_layer2_mix = Dense(self.stage_num[1],activation='relu')(s_layer2_mix)

        x_layer2 = Conv2D(10,(1,1),activation='relu')(x_layer2)
        x_layer2 = AveragePooling2D(4,4)(x_layer2)
        x_layer2 = Flatten()(x_layer2)
        x_layer2_mix = Dropout(0.2)(x_layer2)
        x_layer2_mix = Dense(self.stage_num[1],activation='relu')(x_layer2_mix)

        feat_s2_pre = Multiply()([s_layer2,x_layer2])
        delta_s2 = Dense(1,activation='tanh',name='delta_s2')(feat_s2_pre)

        feat_s2 = Multiply()([s_layer2_mix,x_layer2_mix])
        feat_s2 = Dense(2*self.stage_num[1],activation='relu')(feat_s2)
        pred_s2 = Dense(units=self.stage_num[1], activation="relu",name='pred_stage2')(feat_s2)
        local_s2 = Dense(units=self.stage_num[1], activation='tanh', name='local_delta_stage2')(feat_s2)
        #-------------------------------------------------------------------------------------------------------------------------
        s_layer1 = Conv2D(10,(1,1),activation='relu')(s_layer1)
        s_layer1 = MaxPooling2D(8,8)(s_layer1)
        s_layer1 = Flatten()(s_layer1)
        s_layer1_mix = Dropout(0.2)(s_layer1)
        s_layer1_mix = Dense(self.stage_num[2],activation='relu')(s_layer1_mix)

        x_layer1 = Conv2D(10,(1,1),activation='relu')(x_layer1)
        x_layer1 = AveragePooling2D(8,8)(x_layer1)
        x_layer1 = Flatten()(x_layer1)
        x_layer1_mix = Dropout(0.2)(x_layer1)
        x_layer1_mix = Dense(self.stage_num[2],activation='relu')(x_layer1_mix)

        feat_s3_pre = Multiply()([s_layer1,x_layer1])
        delta_s3 = Dense(1,activation='tanh',name='delta_s3')(feat_s3_pre)

        feat_s3 = Multiply()([s_layer1_mix,x_layer1_mix])
        feat_s3 = Dense(2*self.stage_num[2],activation='relu')(feat_s3)
        pred_s3 = Dense(units=self.stage_num[2], activation="relu",name='pred_stage3')(feat_s3)
        local_s3 = Dense(units=self.stage_num[2], activation='tanh', name='local_delta_stage3')(feat_s3)
        #-------------------------------------------------------------------------------------------------------------------------

        def SSR_module(x,s1,s2,s3,lambda_local,lambda_d):
            a = x[0][:,0]*0
            b = x[0][:,0]*0
            c = x[0][:,0]*0
            V = 1

            for i in range(0,s1):
                a = a+(i+lambda_local*x[6][:,i])*x[0][:,i]
            a = K.expand_dims(a,-1)
            a = a/(s1*(1+lambda_d*x[3]))

            for j in range(0,s2):
                b = b+(j+lambda_local*x[7][:,j])*x[1][:,j]
            b = K.expand_dims(b,-1)
            b = b/(s1*(1+lambda_d*x[3]))/(s2*(1+lambda_d*x[4]))

            for k in range(0,s3):
                c = c+(k+lambda_local*x[8][:,k])*x[2][:,k]
            c = K.expand_dims(c,-1)
            c = c/(s1*(1+lambda_d*x[3]))/(s2*(1+lambda_d*x[4]))/(s3*(1+lambda_d*x[5]))

            out = (a+b+c)*V
            return out

        pred = Lambda(SSR_module,arguments={'s1':self.stage_num[0],'s2':self.stage_num[1],'s3':self.stage_num[2],'lambda_local':self.lambda_local,'lambda_d':self.lambda_d},name='pred')([pred_s1,pred_s2,pred_s3,delta_s1,delta_s2,delta_s3, local_s1, local_s2, local_s3])

        model = Model(inputs=inputs, outputs=pred)

        return model

Revisions to TYY_demo_mtcnn.py

Full source:

import os
import cv2
import dlib
import numpy as np
import argparse
from SSRNET_model import SSR_net
import sys
import timeit
# from moviepy.editor import *
from mtcnn.mtcnn import MTCNN
from keras import backend as K


def draw_label(image, point, label, font=cv2.FONT_HERSHEY_SIMPLEX,
               font_scale=1, thickness=2):
    size = cv2.getTextSize(label, font, font_scale, thickness)[0]
    x, y = point
    cv2.rectangle(image, (x, y - size[1]), (x + size[0], y), (255, 0, 0), cv2.FILLED)
    cv2.putText(image, label, point, font, font_scale, (255, 255, 255), thickness)

def main():
    K.set_learning_phase(0) # make sure its testing mode
    weight_file = "../pre-trained/wiki/ssrnet_3_3_3_64_1.0_1.0/ssrnet_3_3_3_64_1.0_1.0.h5"

    # for face detection
    detector = MTCNN()
    try:
        os.mkdir('./img')
    except OSError:
        pass

    # load model and weights
    img_size = 64
    stage_num = [3,3,3]
    lambda_local = 1
    lambda_d = 1
    model = SSR_net(img_size,stage_num, lambda_local, lambda_d)()
    model.load_weights(weight_file)

    video_path = sys.argv[1]
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        print(f"Error:{video_path}")
        sys.exit(1)

    pyFlag = '3'
    if len(sys.argv)==3:
        pyFlag = sys.argv[2]

    img_idx = 0
    detected = []
    time_detection = 0
    time_network = 0
    time_plot = 0
    ad = 0.4
    skip_frame = 5 # every 5 frame do 1 detection and network forward propagation

    while cap.isOpened():
        ret, img = cap.read()
        if not ret:
            break
        img_idx += 1

        input_img = img.copy()
        img_h, img_w, _ = np.shape(input_img)
        input_img = cv2.resize(input_img, (1024, int(1024*img_h/img_w)))
        img_h, img_w, _ = np.shape(input_img)

        if img_idx==1 or img_idx%skip_frame == 0:
            # detect faces using MTCNN
            start_time = timeit.default_timer()
            detected = detector.detect_faces(input_img)
            elapsed_time = timeit.default_timer()-start_time
            time_detection += elapsed_time
            faces = np.empty((len(detected), img_size, img_size, 3))

            for i, d in enumerate(detected):
                if d['confidence'] > 0.95:
                    x1,y1,w,h = d['box']
                    x2 = x1 + w
                    y2 = y1 + h
                    xw1 = max(int(x1 - ad * w), 0)
                    yw1 = max(int(y1 - ad * h), 0)
                    xw2 = min(int(x2 + ad * w), img_w - 1)
                    yw2 = min(int(y2 + ad * h), img_h - 1)
                    cv2.rectangle(input_img, (x1, y1), (x2, y2), (255, 0, 0), 2)
                    faces[i,:,:,:] = cv2.resize(input_img[yw1:yw2 + 1, xw1:xw2 + 1, :], (img_size, img_size))

            start_time = timeit.default_timer()
            predicted_ages = []
            if len(detected) > 0:
                results = model.predict(faces)
                predicted_ages = results

            for i, d in enumerate(detected):
                if d['confidence'] > 0.95:
                    x1,y1,w,h = d['box']
                    label = "{}".format(int(predicted_ages[i]))
                    draw_label(input_img, (x1, y1), label)
            elapsed_time = timeit.default_timer()-start_time
            time_network += elapsed_time

            start_time = timeit.default_timer()
            cv2.imwrite(f'img/{img_idx}.png', input_img)
            cv2.imshow("SSR-Net Age Detection", input_img)
            elapsed_time = timeit.default_timer()-start_time
            time_plot += elapsed_time
        else:
            for i, d in enumerate(detected):
                if d['confidence'] > 0.95:
                    x1,y1,w,h = d['box']
                    x2 = x1 + w
                    y2 = y1 + h
                    xw1 = max(int(x1 - ad * w), 0)
                    yw1 = max(int(y1 - ad * h), 0)
                    xw2 = min(int(x2 + ad * w), img_w - 1)
                    yw2 = min(int(y2 + ad * h), img_h - 1)
                    cv2.rectangle(input_img, (x1, y1), (x2, y2), (255, 0, 0), 2)

            for i, d in enumerate(detected):
                if d['confidence'] > 0.95:
                    x1,y1,w,h = d['box']
                    label = "{}".format(int(predicted_ages[i]))
                    draw_label(input_img, (x1, y1), label)

            start_time = timeit.default_timer()
            cv2.imshow("SSR-Net Age Detection", input_img)
            elapsed_time = timeit.default_timer()-start_time
            time_plot += elapsed_time

        print(f'avefps_time_detection: {img_idx/time_detection if time_detection>0 else 0}')
        print(f'avefps_time_network: {img_idx/time_network if time_network>0 else 0}')
        print(f'avefps_time_plot: {img_idx/time_plot if time_plot>0 else 0}')
        print('===============================')

        if cv2.waitKey(1) & 0xFF == 27:
            break

    cap.release()
    cv2.destroyAllWindows()


if __name__ == '__main__':
    main()
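Two pieces of logic in the loop above are easy to check in isolation: the padded crop around each detected face (the `ad = 0.4` margin) and the frame-skipping schedule (`skip_frame = 5`). The sketch below restates them as standalone functions; the names `expand_box` and `should_detect` are hypothetical and do not appear in the demo script, and the arithmetic is copied from the listing rather than changed.

```python
def expand_box(x1, y1, w, h, img_w, img_h, ad=0.4):
    """Grow an (x, y, w, h) face box by a fraction `ad` of its size on each side.

    The result is clamped to the image and returned as (xw1, yw1, xw2, yw2),
    matching the crop coordinates used in the demo loop.
    """
    x2 = x1 + w
    y2 = y1 + h
    xw1 = max(int(x1 - ad * w), 0)
    yw1 = max(int(y1 - ad * h), 0)
    xw2 = min(int(x2 + ad * w), img_w - 1)
    yw2 = min(int(y2 + ad * h), img_h - 1)
    return xw1, yw1, xw2, yw2


def should_detect(img_idx, skip_frame=5):
    """True on the first frame and on every `skip_frame`-th frame (1-based index)."""
    return img_idx == 1 or img_idx % skip_frame == 0
```

With the defaults, detection and age inference run on frames 1, 5, 10, 15 and so on, while the frames in between only redraw the most recent boxes and labels. Raising `skip_frame` is therefore one of the simpler knobs to try if the frame rate on the Raspberry Pi is too low.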

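2. Real-time webcam inference

cd SSR-Net/demo

# Real-time inference using LBP face detection + the pre-trained model

KERAS_BACKEND=tensorflow CUDA_VISIBLE_DEVICES='' python TYY_demo_ssrnet_lbp_webcam.py

The demo loads the default pre-trained model automatically; to use a different model, change the model_path variable in the demo script.

Note: in this test the source of TYY_demo_ssrnet_lbp_webcam.py needed no changes; its dependency SSRNET_model.py is revised as described for the previous demo (video file inference).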
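To make the final `merge_age` step in SSRNET_model.py easier to follow, here is a plain-Python restatement of the same arithmetic for a single sample. It is only an illustrative sketch: the function name `merge_age_single` and its argument layout are hypothetical, and the real model performs this computation on batched Keras tensors inside a Lambda layer.

```python
def merge_age_single(stage_outputs, deltas, local_shifts, stage_num,
                     lambda_local=1.0, lambda_d=1.0, V=101):
    """Combine the three stage outputs into one age estimate for one sample.

    stage_outputs[k] and local_shifts[k] are lists of length stage_num[k];
    deltas[k] is one float per stage. V = 101 matches merge_age in the listing.
    """
    total = 0.0
    width = 1.0  # running product of the dynamically scaled bin counts
    for k in range(3):
        # Each stage divides by its own scaled bin count and by those of all earlier stages.
        width *= stage_num[k] * (1 + lambda_d * deltas[k])
        expected_bin = sum(
            (i + lambda_local * local_shifts[k][i]) * stage_outputs[k][i]
            for i in range(stage_num[k])
        )
        total += expected_bin / width
    return total * V
```

With `stage_num = [3, 3, 3]`, all deltas and local shifts set to zero, and stage outputs `[0, 0, 1]`, `[0, 1, 0]`, `[1, 0, 0]`, the three terms are 2/3, 1/9 and 0, so the result is 101 × 7/9, roughly 78.6. The first stage picks a coarse age range and the later stages refine the estimate within it.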