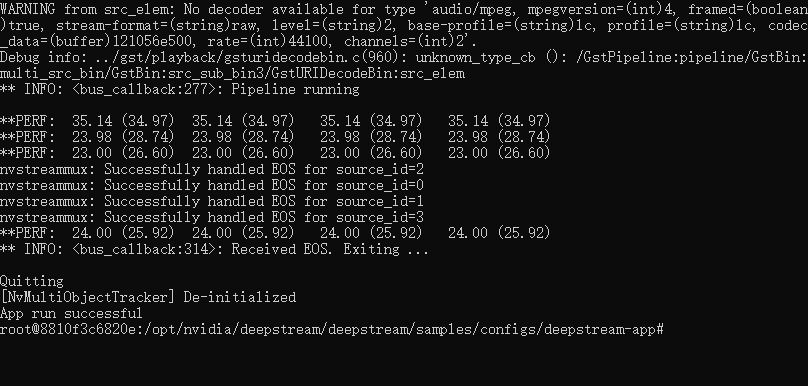

Successfully ran the official sample.

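After integrating it into our own service, startup breaks. Every initialization step succeeds, but as soon as analysis starts something goes wrong, possibly an unknown error inside the pipeline. The Python program catches no exception: the log shows that analysis started, and then the service restarts.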

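| Item | Status | Notes |
|---|---|---|
| WebSocket connection | ✅ Success | Client 172.18.0.1 connected |
| RTSP stream pull | ✅ Success | rtsp://192.168.1.102:8554/mystream received |
| TensorRT engine load | ✅ Success | Correctly deserialized from /app/models/detection/person.onnx_b1_gpu0_fp16.engine |
| nvinfer inference plugin | ✅ Initialized | Model loaded, no NVDSINFER_*_FAILED errors |
| GStreamer pipeline | ✅ Started | Log shows `管道启动成功` ("pipeline started successfully") and `DeepStream分析启动成功` ("DeepStream analysis started successfully") |
| DeepStream plugin check | ✅ Available | Both nvstreammux and nvinfer can be created |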

Logs:

```bash
2026-01-21 19:00:33 - src.server.websocket_server - INFO - 新连接: ('172.18.0.1', 33188)
2026-01-21 19:00:33 - src.server.connection_manager - INFO - 新连接已添加: conn_1768993233_130497904152240, 客户端: ('172.18.0.1', 33188)
2026-01-21 19:00:36 - src.server.deepstream_manager - INFO - 启动DeepStream分析: test_stream_001, URL: rtsp://192.168.1.102:8554/mystream
2026-01-21 19:00:36 - src.server.deepstream_manager - INFO - 创建管道: test_stream_001
Unknown or legacy key specified 'is-classifier' for group [property]
2026-01-21 19:00:36 - src.server.deepstream_manager - INFO - appsink回调已设置: test_stream_001
2026-01-21 19:00:36 - src.server.deepstream_manager - INFO - 启动管道: test_stream_001
0:18:10.582165560 1 0x643276f19550 WARN nvinfer gstnvinfer.cpp:679:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::initialize() <nvdsinfer_context_impl.cpp:1244> [UID = 1]: Warning, OpenCV has been deprecated. Using NMS for clustering instead of cv::groupRectangles with topK = 20 and NMS Threshold = 0.5
WARNING: [TRT]: The getMaxBatchSize() function should not be used with an engine built from a network created with NetworkDefinitionCreationFlag::kEXPLICIT_BATCH flag. This function will always return 1.
INFO: ../nvdsinfer/nvdsinfer_model_builder.cpp:612 [Implicit Engine Info]: layers num: 2
0 INPUT kFLOAT images 3x640x640
1 OUTPUT kFLOAT output0 300x6
0:18:18.087474452 1 0x643276f19550 INFO nvinfer gstnvinfer.cpp:682:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::deserializeEngineAndBackend() <nvdsinfer_context_impl.cpp:2095> [UID = 1]: deserialized trt engine from :/app/models/detection/person.onnx_b1_gpu0_fp16.engine
0:18:18.264057886 1 0x643276f19550 INFO nvinfer gstnvinfer.cpp:682:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::generateBackendContext() <nvdsinfer_context_impl.cpp:2198> [UID = 1]: Use deserialized engine model: /app/models/detection/person.onnx_b1_gpu0_fp16.engine
0:18:18.277659566 1 0x643276f19550 INFO nvinfer gstnvinfer_impl.cpp:343:notifyLoadModelStatus:<nvinfer0> [UID 1]: Load new model:/app/configs/deepstream_config.txt sucessfully
2026-01-21 19:00:43 - src.server.deepstream_manager - INFO - 管道启动成功: test_stream_001
2026-01-21 19:00:43 - src.server.deepstream_manager - INFO - DeepStream分析启动成功: test_stream_001
INFO: 127.0.0.1:37238 - "GET /health HTTP/1.1" 200 OK
✓ GStreamer initialized
Starting FlexVision-Server...
Importing main module...
WARNING: PIL not available, some image processing features disabled
Trying to load plugins:
Multistream plugin: /opt/nvidia/deepstream/deepstream/lib/gst-plugins/libnvdsgst_multistream.so
Infer plugin: /opt/nvidia/deepstream/deepstream/lib/gst-plugins/libnvdsgst_infer.so
Plugin load results:
Multistream plugin: <Gst.Plugin object at 0x7255ce3d5600 (GstPlugin at 0x5a633f9ed7a0)>
Infer plugin: <Gst.Plugin object at 0x7255cb010f00 (GstPlugin at 0x5a633f9ed370)>
Element creation results:
nvstreammux: <__gi__.GstNvStreamMux object at 0x7255c83d9080 (GstNvStreamMux at 0x5a63401044d0)>
nvinfer: <__gi__.GstNvInfer object at 0x7255cafeef40 (GstNvInfer at 0x5a63401059e0)>
DeepStream plugins available: nvstreammux=True, nvinfer=True
```

1. Python main process crash (most likely, but attempted fixes didn't help)

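- Although the DeepStream pipeline starts successfully, your Python application (e.g. Uvicorn/FastAPI plus a GStreamer `appsink` callback) may fail while processing frames.

- For example:

  - Accessing invalid memory in the `appsink` callback (the `GstBuffer` has already been freed)
  - Trying to process images with `PIL` when it is not installed, which raises `ImportError` or `AttributeError`
  - Multithreading/async conflicts (e.g. calling GTK/GStreamer APIs that are not thread-safe from a non-main thread)

💥 Once the Python main process crashes, the whole container or service exits, which drops the WebSocket connection and restarts the service.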
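Both logs show the same pattern: right after `DeepStream分析启动成功`, the startup banner (`✓ GStreamer initialized`, `Starting FlexVision-Server...`) appears again with no Python traceback in between. That fits a native crash: a segmentation fault inside C code (for example a GStreamer/DeepStream element, or a freed buffer touched from a callback) kills the interpreter before any `try`/`except` can run. A minimal sketch, assuming CPython on Linux: the standard-library `faulthandler` module dumps the Python stack of every thread on a fatal signal. The helper `crash_report` and the snippet it runs are hypothetical; the snippet reads address 0 through `ctypes` in a child process to show that the `except` branch never fires.

```python
import faulthandler
import subprocess
import sys

# Enable as early as possible in the real entry point (before Gst.init / pyds).
# sys.__stderr__ is the original stream, which keeps a real file descriptor even
# if sys.stderr has been redirected by a logging setup.
faulthandler.enable(file=sys.__stderr__, all_threads=True)

# Child program: a NULL read inside C code, wrapped in try/except.
CRASHING_SNIPPET = """
import ctypes, faulthandler
faulthandler.enable()
try:
    ctypes.string_at(0)  # native NULL read -> SIGSEGV, not a Python exception
except Exception:
    print("caught")
"""


def crash_report(snippet: str) -> tuple[int, str, str]:
    """Run `snippet` in a fresh interpreter; return (returncode, stdout, stderr)."""
    proc = subprocess.run(
        [sys.executable, "-c", snippet],
        capture_output=True,
        text=True,
        timeout=60,
    )
    return proc.returncode, proc.stdout, proc.stderr


if __name__ == "__main__":
    code, out, err = crash_report(CRASHING_SNIPPET)
    # On Linux a negative return code means "killed by signal -code" (-11 = SIGSEGV).
    print("return code:", code)
    print("'caught' printed:", "caught" in out)
    print(err.strip().splitlines()[0] if err.strip() else "(no stderr)")
```

Setting `PYTHONFAULTHANDLER=1` in the container environment enables the same handler without code changes. If Python code was on the stack when the crash happened (an `appsink` callback, for instance), the `Fatal Python error: Segmentation fault` block in `docker logs` names that frame.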

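2. GStreamer pipeline errors not handled (quite likely)

- Even though the pipeline starts successfully, a later stream interruption (e.g. the RTSP source disconnecting, or network jitter) can post an `error` or `eos` message on the bus.

- If your code does not watch the pipeline `bus` and handle these messages, the result can be unexpected behavior.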
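Watching the bus can be sketched with the PyGObject bindings. A minimal sketch, assuming the server keeps one `Gst.Pipeline` per stream and would rather poll from its own code than run a GLib main loop (the logs show an asyncio entry point): `Gst.Bus.timed_pop_filtered` waits up to a timeout for an `ERROR` or `EOS` message. The function name `poll_bus` and its return shape are hypothetical, and the import is guarded only so the module still loads where PyGObject is absent.

```python
from typing import Optional, Tuple

try:
    import gi

    gi.require_version("Gst", "1.0")
    from gi.repository import Gst
except (ImportError, ValueError):  # PyGObject or the Gst typelib is missing
    Gst = None

POLL_TIMEOUT_NS = 100 * 1000 * 1000  # GstClockTime is in nanoseconds (100 ms)


def poll_bus(pipeline, timeout_ns: int = POLL_TIMEOUT_NS) -> Optional[Tuple[str, str]]:
    """Return ("error", details), ("eos", source name) or None if nothing arrived."""
    if Gst is None:
        raise RuntimeError("PyGObject/GStreamer not available")
    bus = pipeline.get_bus()
    msg = bus.timed_pop_filtered(
        timeout_ns, Gst.MessageType.ERROR | Gst.MessageType.EOS
    )
    if msg is None:
        return None
    if msg.type == Gst.MessageType.ERROR:
        err, debug = msg.parse_error()
        # Name the element that failed (e.g. the RTSP source) plus debug info.
        return "error", f"{msg.src.get_name()}: {err.message} ({debug})"
    return "eos", msg.src.get_name()
```

In the asyncio server this could run in a worker thread (for example via `asyncio.to_thread`, Python 3.9+) so the event loop is not blocked; on `error` the stream can be stopped and reported to the client instead of being left in an undefined state.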

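3. Memory or GPU resources exhausted (ruled out)

- DeepStream uses a lot of GPU memory; running several streams or models at once can trigger an OOM (out-of-memory) condition.

- The container gets killed by the system (check whether `docker logs <container>` contains `Killed`).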
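To tell a native crash (cause 1) from a kill by the system (cause 3), the exit status of the main process is more telling than grepping for `Killed`. If the restart comes from Docker's restart policy, `docker events --filter event=die` prints each container death with an `exitCode` attribute, and `docker inspect` reports `State.OOMKilled`. By the shell/Docker convention, an exit code above 128 means the process died from signal `code - 128`, so 139 is SIGSEGV and 137 is SIGKILL. The helper `describe_exit_code` below is a hypothetical sketch that decodes such codes with the standard `signal` module.

```python
import signal


def describe_exit_code(code: int) -> str:
    """Explain an exit status using the 128 + N = "killed by signal N" convention."""
    if code == 0:
        return "clean exit"
    if code > 128:
        signum = code - 128
        try:
            name = signal.Signals(signum).name
        except ValueError:
            return f"exit {code}: killed by unknown signal {signum}"
        hint = {
            "SIGSEGV": "native crash (segfault) in C code, e.g. a GStreamer/DeepStream element",
            "SIGABRT": "native abort, e.g. a failed assertion in a C/C++ library",
            "SIGKILL": "killed externally, often by the OOM killer (check State.OOMKilled)",
        }.get(name, "terminated by signal")
        return f"exit {code}: {name} - {hint}"
    return f"exit {code}: the process exited on its own (e.g. sys.exit or an uncaught exception)"


if __name__ == "__main__":
    for code in (0, 1, 137, 139):
        print(describe_exit_code(code))
```

A 139 would point back at cause 1, while 137 together with `OOMKilled=true` would reopen cause 3.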

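4. WebSocket heartbeat timeout or the client disconnecting (investigated; unlikely)

- Less common, but if your frontend does not send a ping for a long time, or the backend does not answer with a pong, the connection may be closed.

- However, this only drops the connection; it would not restart the service.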

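Possibly the `.engine` model is missing or cannot be found

DeepStream tries to load a TensorRT engine file (`.engine`):

/app/models/detection/person.onnx_b1_gpu0_fp16.engine

but that file does not exist.

So it falls back to rebuilding the engine from the original model (e.g. an `.onnx` file), but it cannot find a matching ONNX or other source model file in `/app/models/detection/` either, so the build fails.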
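A preflight check before creating the pipeline would turn this into a clear message instead of `NVDSINFER_CONFIG_FAILED`. A minimal sketch, assuming the nvinfer config (`/app/configs/deepstream_config.txt` in the logs) uses the `[property]` keys `onnx-file` and `model-engine-file`, and that relative paths are resolved against the config file's directory, as nvinfer does. The names `check_model_files` and `can_load_or_build` are hypothetical, and other model keys (Caffe, UFF, TAO) are deliberately not covered.

```python
import configparser
import os
from typing import Dict, Tuple

# nvinfer keys that point at model artifacts (only the two used in this setup).
MODEL_KEYS = ("onnx-file", "model-engine-file")


def check_model_files(config_path: str) -> Dict[str, Tuple[str, bool]]:
    """Map each model key found in [property] to (resolved_path, exists)."""
    # interpolation=None: values may contain '%' and must be read literally.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    with open(config_path, encoding="utf-8") as f:
        parser.read_file(f)
    base_dir = os.path.dirname(os.path.abspath(config_path))
    results: Dict[str, Tuple[str, bool]] = {}
    for key in MODEL_KEYS:
        value = parser.get("property", key, fallback=None)
        if value is None:
            continue
        path = value.strip()
        if not os.path.isabs(path):
            # Relative paths are taken relative to the config file's directory.
            path = os.path.normpath(os.path.join(base_dir, path))
        results[key] = (path, os.path.isfile(path))
    return results


def can_load_or_build(results: Dict[str, Tuple[str, bool]]) -> bool:
    """True if nvinfer has either a prebuilt engine or an ONNX file to build from."""
    return any(exists for _, exists in results.values())
```

If `can_load_or_build` returns False, the server can refuse to start the stream and report the missing paths. When only the ONNX file exists, nvinfer builds the engine on first start; with FP16 (`network-mode=2`) and batch size 1 the generated file is named like `person.onnx_b1_gpu0_fp16.engine`, matching the path in the logs.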

```bash
2026-01-21 15:22:07 - src.server.websocket_server - INFO - WebSocket服务器已启动,监听地址: 0.0.0.0:8765
INFO: Uvicorn running on http://0.0.0.0:8080 (Press CTRL+C to quit)
2026-01-21 15:22:19 - src.server.websocket_server - INFO - 新连接: ('172.17.0.1', 33070)
2026-01-21 15:22:19 - src.server.connection_manager - INFO - 新连接已添加: conn_1768980139_123685807818160, 客户端: ('172.17.0.1', 33070)
2026-01-21 15:22:22 - src.server.deepstream_manager - INFO - 启动DeepStream分析: test_stream_001, URL: rtsp://192.168.1.102:8554/mystream
2026-01-21 15:22:22 - src.server.deepstream_manager - INFO - 创建管道: test_stream_001
0:00:14.946489078 1 0x5eef2a9acbc0 WARN nvinfer gstnvinfer.cpp:679:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::initialize() <nvdsinfer_context_impl.cpp:1244> [UID = 1]: Warning, OpenCV has been deprecated. Using NMS for clustering instead of cv::groupRectangles with topK = 20 and NMS Threshold = 0.5
0:00:21.523934791 1 0x5eef2a9acbc0 WARN nvinfer gstnvinfer.cpp:679:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::deserializeEngineAndBackend() <nvdsinfer_context_impl.cpp:2083> [UID = 1]: deserialize engine from file :/app/models/detection/person.onnx_b1_gpu0_fp16.engine failed
WARNING: ../nvdsinfer/nvdsinfer_model_builder.cpp:1494 Deserialize engine failed because file path: /app/models/detection/person.onnx_b1_gpu0_fp16.engine open error
0:00:21.683380121 1 0x5eef2a9acbc0 WARN nvinfer gstnvinfer.cpp:679:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::generateBackendContext() <nvdsinfer_context_impl.cpp:2188> [UID = 1]: deserialize backend context from engine from file :/app/models/detection/person.onnx_b1_gpu0_fp16.engine failed, try rebuild
0:00:21.686198045 1 0x5eef2a9acbc0 INFO nvinfer gstnvinfer.cpp:682:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::buildModel() <nvdsinfer_context_impl.cpp:2109> [UID = 1]: Trying to create engine from model files
ERROR: ../nvdsinfer/nvdsinfer_model_builder.cpp:870 failed to build network since there is no model file matched.
ERROR: ../nvdsinfer/nvdsinfer_model_builder.cpp:809 failed to build network.
0:00:26.010287886 1 0x5eef2a9acbc0 ERROR nvinfer gstnvinfer.cpp:676:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Error in NvDsInferContextImpl::buildModel() <nvdsinfer_context_impl.cpp:2129> [UID = 1]: build engine file failed
0:00:26.170914628 1 0x5eef2a9acbc0 ERROR nvinfer gstnvinfer.cpp:676:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Error in NvDsInferContextImpl::generateBackendContext() <nvdsinfer_context_impl.cpp:2215> [UID = 1]: build backend context failed
0:00:26.172788284 1 0x5eef2a9acbc0 ERROR nvinfer gstnvinfer.cpp:676:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Error in NvDsInferContextImpl::initialize() <nvdsinfer_context_impl.cpp:1352> [UID = 1]: generate backend failed, check config file settings
0:00:26.173049907 1 0x5eef2a9acbc0 WARN nvinfer gstnvinfer.cpp:912:gst_nvinfer_start:<nvinfer0> error: Failed to create NvDsInferContext instance
0:00:26.173075735 1 0x5eef2a9acbc0 WARN nvinfer gstnvinfer.cpp:912:gst_nvinfer_start:<nvinfer0> error: Config file path: /app/configs/deepstream_config.txt, NvDsInfer Error: NVDSINFER_CONFIG_FAILED
```

It still restarts as soon as analysis starts.

After starting the analysis, the service prints the log below. Why does it restart?

```bash
2026-01-22 12:50:53 - src.server.deepstream_manager - INFO - 启动DeepStream分析: test_stream_001, URL: rtsp://192.168.1.102:8554/mystream
2026-01-22 12:50:53 - src.server.deepstream_manager - INFO - 创建管道: test_stream_001
2026-01-22 12:50:53 - src.server.deepstream_manager - INFO - appsink回调已设置: test_stream_001
2026-01-22 12:50:53 - src.server.deepstream_manager - INFO - 启动管道: test_stream_001
0:00:11.766587517 1 0x64317d657be0 WARN nvinfer gstnvinfer.cpp:679:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Warning from NvDsInferContextImpl::initialize() <nvdsinfer_context_impl.cpp:1244> [UID = 1]: Warning, OpenCV has been deprecated. Using NMS for clustering instead of cv::groupRectangles with topK = 20 and NMS Threshold = 0.5
WARNING: [TRT]: The getMaxBatchSize() function should not be used with an engine built from a network created with NetworkDefinitionCreationFlag::kEXPLICIT_BATCH flag. This function will always return 1.
INFO: ../nvdsinfer/nvdsinfer_model_builder.cpp:612 [Implicit Engine Info]: layers num: 2
0 INPUT kFLOAT images 3x640x640
1 OUTPUT kFLOAT output0 300x6
0:00:18.602649702 1 0x64317d657be0 INFO nvinfer gstnvinfer.cpp:682:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::deserializeEngineAndBackend() <nvdsinfer_context_impl.cpp:2095> [UID = 1]: deserialized trt engine from :/app/models/detection/person.onnx_b1_gpu0_fp16.engine
0:00:18.766174530 1 0x64317d657be0 INFO nvinfer gstnvinfer.cpp:682:gst_nvinfer_logger:<nvinfer0> NvDsInferContext[UID 1]: Info from NvDsInferContextImpl::generateBackendContext() <nvdsinfer_context_impl.cpp:2198> [UID = 1]: Use deserialized engine model: /app/models/detection/person.onnx_b1_gpu0_fp16.engine
0:00:18.774005421 1 0x64317d657be0 INFO nvinfer gstnvinfer_impl.cpp:343:notifyLoadModelStatus:<nvinfer0> [UID 1]: Load new model:/app/configs/deepstream_config.txt sucessfully
2026-01-22 12:51:01 - src.server.deepstream_manager - INFO - 管道启动成功: test_stream_001
2026-01-22 12:51:01 - src.server.deepstream_manager - INFO - DeepStream分析启动成功: test_stream_001
✓ GStreamer initialized
Starting FlexVision-Server...
Importing main module...
Trying to load plugins:
Multistream plugin: /opt/nvidia/deepstream/deepstream/lib/gst-plugins/libnvdsgst_multistream.so
Infer plugin: /opt/nvidia/deepstream/deepstream/lib/gst-plugins/libnvdsgst_infer.so
Plugin load results:
Multistream plugin: <Gst.Plugin object at 0x76db1710e4c0 (GstPlugin at 0x5fbd6486e6a0)>
Infer plugin: <Gst.Plugin object at 0x76db17119280 (GstPlugin at 0x5fbd6486e2c0)>
Element creation results:
nvstreammux: <__gi__.GstNvStreamMux object at 0x76dae539c7c0 (GstNvStreamMux at 0x5fbd64f97ec0)>
nvinfer: <__gi__.GstNvInfer object at 0x76dae5231b40 (GstNvInfer at 0x5fbd64f993d0)>
DeepStream plugins available: nvstreammux=True, nvinfer=True
Main module imported successfully
Running asyncio main...
2026-01-22 12:51:08 - src.utils.logging_utils - INFO - 日志配置完成,级别: INFO
2026-01-22 12:51:08 - src.utils.logging_utils - INFO - 日志文件: logs/inference_server.log
2026-01-22 12:51:08 - src.main - INFO - ==================================================
2026-01-22 12:51:08 - src.main - INFO - 推理服务器 V2.0 正在启动...
2026-01-22 12:51:08 - src.main - INFO - ==================================================
2026-01-22 12:51:08 - src.main - INFO - 服务器配置: {'host': '0.0.0.0', 'port': 8767, 'max_connections': 100, 'heartbeat_interval': 30, 'connection_timeout': 300}
2026-01-22 12:51:08 - src.server.connection_manager - INFO - 连接管理器初始化,最大连接数: 100
2026-01-22 12:51:08 - src.server.deepstream_manager - INFO - GStreamer initialized successfully: version (major=1, minor=20, micro=3, nano=0)
2026-01-22 12:51:08 - src.server.deepstream_manager - INFO - DeepStreamManager initialized - GStreamer: True, PyDS: True
2026-01-22 12:51:08 - src.server.websocket_server - INFO - WebSocket服务器初始化完成,配置: {'host': '0.0.0.0', 'port': 8767, 'max_connections': 100, 'heartbeat_interval': 30, 'connection_timeout': 300}
2026-01-22 12:51:08 - src.main - INFO - API服务器已启动: http://0.0.0.0:8082
```

Comparison of options

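| Option | Performance (GPU utilization) | Development difficulty | Flexibility | Recommended for |
|---|---|---|---|---|
| DeepStream | ⭐⭐⭐⭐⭐ (very high) | ⭐⭐⭐⭐⭐ (very hard) | High | Processing 30+ video streams and pushing the hardware to its limit |
| Savant | ⭐⭐⭐⭐⭐ (very high) | ⭐⭐ (easy) | High | Most recommended. DeepStream-level performance without writing C/GStreamer |
| Ultralytics | ⭐⭐⭐⭐ (high) | ⭐ (very easy) | Medium | Only detection/tracking is needed; shipping a project quickly |
| MediaPipe | ⭐⭐⭐ (medium) | ⭐⭐ (easy) | Medium | Complex interaction logic such as hand gestures and pose |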