Table of contents

- Preface
- 1. `scrape_duration_seconds`
  - 1.1 job=kube-state-metrics
  - 1.2 job="kubernetes-apiservers"
  - 1.3 job="kubernetes-cadvisor"
  - 1.4 job="kubernetes-nodes"
  - 1.5 job="kubernetes-pods"
  - 1.6 job="kubernetes-service-endpoints"
  - 1.7 Other jobs
- 2 `scrape_samples_scraped`
- 3 `scrape_samples_post_metric_relabeling`

Preface

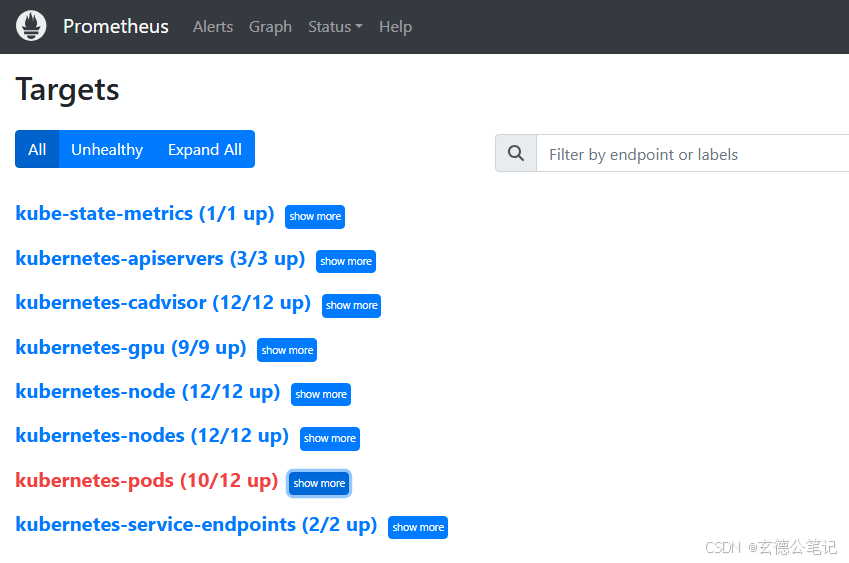

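- This part is effectively the master outline for monitoring k8s with Prometheus: every monitored job shows up here.
- The series you see here correspond one-to-one to the jobs in the Prometheus configuration file.
- They also correspond to what the Prometheus UI shows under STATUS ---> TARGETS.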
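The mapping between jobs and the Targets page can also be checked programmatically. Below is a minimal sketch, assuming you have already fetched and decoded the JSON returned by the Prometheus HTTP API endpoint `/api/v1/targets`. The `SAMPLE` payload is a hand-written, heavily trimmed stand-in for that response, and `targets_per_job` is a helper name made up for this illustration.

```python
import json
from collections import Counter

# Hypothetical, trimmed payload in the shape of a /api/v1/targets response.
# A real response carries many more fields per target.
SAMPLE = json.loads("""
{
  "status": "success",
  "data": {
    "activeTargets": [
      {"labels": {"job": "kubernetes-apiservers", "instance": "10.10.xxx.60:6443"}, "health": "up"},
      {"labels": {"job": "kubernetes-apiservers", "instance": "10.10.xxx.61:6443"}, "health": "up"},
      {"labels": {"job": "kube-state-metrics", "instance": "kube-state-metrics.kube-system.svc.cluster.local:8080"}, "health": "up"}
    ]
  }
}
""")


def targets_per_job(payload):
    """Count the active targets that belong to each job label."""
    counts = Counter()
    for target in payload["data"]["activeTargets"]:
        counts[target["labels"]["job"]] += 1
    return dict(counts)


print(targets_per_job(SAMPLE))
```

Each key in the result should match a `job_name` in the configuration file and one group on the Targets page.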

1. scrape_duration_seconds

- Purpose: the time Prometheus takes to scrape the given target, in seconds

- Example:

shell

scrape_duration_seconds{app="cainjector", app_kubernetes_io_component="cainjector", app_kubernetes_io_instance="cert-manager", app_kubernetes_io_name="cainjector", app_kubernetes_io_version="v1.19.1", cluster="cto-k8s-pro", instance="10.244.7.87:9402", job="kubernetes-pods", kubernetes_namespace="cert-manager", kubernetes_pod_name="cert-manager-cainjector-7c67d4d67-2nfb9", pod_template_hash="7c67d4d67"} 0.01754294

scrape_duration_seconds{app="cert-manager", app_kubernetes_io_component="controller", app_kubernetes_io_instance="cert-manager", app_kubernetes_io_name="cert-manager", app_kubernetes_io_version="v1.19.1", cluster="cto-k8s-pro", instance="10.244.7.88:9402", job="kubernetes-pods", kubernetes_namespace="cert-manager", kubernetes_pod_name="cert-manager-7b865f4557-9kt9l", pod_template_hash="7b865f4557"} 0.009598836

scrape_duration_seconds{app="otel-collector-center", app_kubernetes_io_component="opentelemetry-collector", app_kubernetes_io_instance="otel.center", app_kubernetes_io_managed_by="opentelemetry-operator", app_kubernetes_io_name="center-collector", app_kubernetes_io_part_of="opentelemetry", app_kubernetes_io_version="0.136.0", cluster="cto-k8s-pro", instance="10.244.7.126:8888", job="kubernetes-pods", kubernetes_namespace="otel", kubernetes_pod_name="center-collector-6f696c8f78-wqkxz", pod_template_hash="6f696c8f78"} 0.370698032

scrape_duration_seconds{app="pulsarv3", cluster="cto-k8s-pro", component="bookie", controller_revision_hash="milvus-256-pulsarv3-bookie-5f56c959dd", instance="10.244.11.94:8000", job="kubernetes-pods", kubernetes_namespace="milvus", kubernetes_pod_name="milvus-256-pulsarv3-bookie-2", release="milvus-256", statefulset_kubernetes_io_pod_name="milvus-256-pulsarv3-bookie-2"} 0.02896609

scrape_duration_seconds{app="pulsarv3", cluster="cto-k8s-pro", component="bookie", controller_revision_hash="milvus-256-pulsarv3-bookie-5f56c959dd", instance="10.244.12.109:8000", job="kubernetes-pods", kubernetes_namespace="milvus", kubernetes_pod_name="milvus-256-pulsarv3-bookie-0", release="milvus-256", statefulset_kubernetes_io_pod_name="milvus-256-pulsarv3-bookie-0"} 0.03194798

scrape_duration_seconds{app="pulsarv3", cluster="cto-k8s-pro", component="bookie", controller_revision_hash="milvus-256-pulsarv3-bookie-5f56c959dd", instance="10.244.9.105:8000", job="kubernetes-pods", kubernetes_namespace="milvus", kubernetes_pod_name="milvus-256-pulsarv3-bookie-1", release="milvus-256", statefulset_kubernetes_io_pod_name="milvus-256-pulsarv3-bookie-1"} 0.022548432

scrape_duration_seconds{app="pulsarv3", cluster="cto-k8s-pro", component="broker", controller_revision_hash="milvus-256-pulsarv3-broker-67b8df5bbc", instance="10.244.11.42:8080", job="kubernetes-pods", kubernetes_namespace="milvus", kubernetes_pod_name="milvus-256-pulsarv3-broker-1", release="milvus-256", statefulset_kubernetes_io_pod_name="milvus-256-pulsarv3-broker-1"} 0.034689994

scrape_duration_seconds{app="pulsarv3", cluster="cto-k8s-pro", component="broker", controller_revision_hash="milvus-256-pulsarv3-broker-67b8df5bbc", instance="10.244.9.72:8080", job="kubernetes-pods", kubernetes_namespace="milvus", kubernetes_pod_name="milvus-256-pulsarv3-broker-0", release="milvus-256", statefulset_kubernetes_io_pod_name="milvus-256-pulsarv3-broker-0"} 0.033956163

scrape_duration_seconds{app="pulsarv3", cluster="cto-k8s-pro", component="proxy", controller_revision_hash="milvus-256-pulsarv3-proxy-69cd9cf5f4", instance="10.244.11.43:80", job="kubernetes-pods", kubernetes_namespace="milvus", kubernetes_pod_name="milvus-256-pulsarv3-proxy-1", release="milvus-256", statefulset_kubernetes_io_pod_name="milvus-256-pulsarv3-proxy-1"} 0.297310359

scrape_duration_seconds{app="pulsarv3", cluster="cto-k8s-pro", component="proxy", controller_revision_hash="milvus-256-pulsarv3-proxy-69cd9cf5f4", instance="10.244.9.73:80", job="kubernetes-pods", kubernetes_namespace="milvus", kubernetes_pod_name="milvus-256-pulsarv3-proxy-0", release="milvus-256", statefulset_kubernetes_io_pod_name="milvus-256-pulsarv3-proxy-0"} 0.000480907

scrape_duration_seconds{app="pulsarv3", cluster="cto-k8s-pro", component="recovery", controller_revision_hash="milvus-256-pulsarv3-recovery-6f5cf88954", instance="10.244.12.107:8000", job="kubernetes-pods", kubernetes_namespace="milvus", kubernetes_pod_name="milvus-256-pulsarv3-recovery-0", release="milvus-256", statefulset_kubernetes_io_pod_name="milvus-256-pulsarv3-recovery-0"} 0.016820959

scrape_duration_seconds{app="webhook", app_kubernetes_io_component="webhook", app_kubernetes_io_instance="cert-manager", app_kubernetes_io_name="webhook", app_kubernetes_io_version="v1.19.1", cluster="cto-k8s-pro", instance="10.244.7.89:9402", job="kubernetes-pods", kubernetes_namespace="cert-manager", kubernetes_pod_name="cert-manager-webhook-bd6f7f7f6-kc45b", pod_template_hash="bd6f7f7f6"} 0.014095764

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es01", fisam="used", gpu="gpu", gpu_type="xxx", instance="node-69", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-69", kubernetes_io_os="linux"} 0.097352804

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es01", fisam="used", gpu="gpu", gpu_type="xxx", instance="node-69", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-69", kubernetes_io_os="linux"} 0.046201156

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es02", gpu="gpu", gpu_type="xxx",instance="node-70", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-70", kubernetes_io_os="linux"} 0.124005837

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es02", gpu="gpu", gpu_type="xxx",instance="node-70", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-70", kubernetes_io_os="linux"} 0.061426852

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es03", gpu="gpu", gpu_type="xxx",instance="node-71", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-71", kubernetes_io_os="linux"} 0.163037819

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es03", gpu="gpu", gpu_type="xxx",instance="node-71", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-71", kubernetes_io_os="linux"} 0.600427132

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es03new", gpu="gpu", gpu_type="xxx",instance="node-68", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-68", kubernetes_io_os="linux"} 0.153473501

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es03new", gpu="gpu", gpu_type="xxx", instance="node-68", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-68", kubernetes_io_os="linux"} 0.073451193

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="cto-gpu-pro-n01", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-n01", kubernetes_io_os="linux", net_link="zk"} 0.120324218

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="cto-gpu-pro-n01", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-n01", kubernetes_io_os="linux", net_link="zk"} 0.502582395

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-64", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-64", kubernetes_io_os="linux"} 0.170867781

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-64", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-64", kubernetes_io_os="linux"} 0.064873524

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-65", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-65", kubernetes_io_os="linux"} 0.193870637

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-65", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-65", kubernetes_io_os="linux"} 0.074536606

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-66", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-66", kubernetes_io_os="linux"} 0.713061325

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-66", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-66", kubernetes_io_os="linux"} 0.302423999

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-67", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-67", kubernetes_io_os="linux"} 0.932493035

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-67", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-67", kubernetes_io_os="linux"} 0.063775824

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m01", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m01", kubernetes_io_os="linux"} 0.057422456

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m01", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m01", kubernetes_io_os="linux"} 0.025336912

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m02", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m02", kubernetes_io_os="linux"} 0.067266312

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m02", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m02", kubernetes_io_os="linux"} 0.029564758

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m03", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m03", kubernetes_io_os="linux"} 0.072238085

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m03", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m03", kubernetes_io_os="linux"} 0.032076491

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.60:6443", job="kubernetes-apiservers"} 0.753500409

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.60:9100", job="kubernetes-node"} 0.072102277

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.61:6443", job="kubernetes-apiservers"} 0.10539402

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.61:9100", job="kubernetes-node"} 0.06335435

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.62:6443", job="kubernetes-apiservers"} 1.6356698459999999

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.62:9100", job="kubernetes-node"} 0.062383867

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.1xxx.63:9100", job="kubernetes-node"} 2.475333221

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.64:9100", job="kubernetes-node"} 3.623134646

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.65:9100", job="kubernetes-node"} 3.788129557

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.66:9100", job="kubernetes-node"} 4.041651902

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.67:9100", job="kubernetes-node"} 3.525849848

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.68:9100", job="kubernetes-node"} 4.13933891

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.69:9100", job="kubernetes-node"} 3.879500798

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.70:9100", job="kubernetes-node"} 4.091566113

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.71:9100", job="kubernetes-node"} 3.742608531

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.244.0.28:9153", job="kubernetes-service-endpoints", k8s_app="kube-dns", kubernetes_io_cluster_service="true", kubernetes_io_name="CoreDNS", kubernetes_name="kube-dns", kubernetes_namespace="kube-system"} 0.006869182

scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.244.0.29:9153", job="kubernetes-service-endpoints", k8s_app="kube-dns", kubernetes_io_cluster_service="true", kubernetes_io_name="CoreDNS", kubernetes_name="kube-dns", kubernetes_namespace="kube-system"} 0.007048918

scrape_duration_seconds{cluster="cto-k8s-pro", instance="kube-state-metrics.kube-system.svc.cluster.local:8080", job="kube-state-metrics"} 0.11021884

scrape_duration_seconds{instance="10.10.xxx.63:9400", job="kubernetes-gpu"} 0.002277725

scrape_duration_seconds{instance="10.10.xxx.64:9400", job="kubernetes-gpu"} 0.002227555

scrape_duration_seconds{instance="10.10.xxx.65:9400", job="kubernetes-gpu"} 0.002210496

scrape_duration_seconds{instance="10.10.xxx.66:9400", job="kubernetes-gpu"} 0.001979742

scrape_duration_seconds{instance="10.10.xxx.67:9400", job="kubernetes-gpu"} 0.002847058

scrape_duration_seconds{instance="10.10.xxx.68:9400", job="kubernetes-gpu"} 0.001884898

scrape_duration_seconds{instance="10.10.xxx.69:9400", job="kubernetes-gpu"} 0.002245773

scrape_duration_seconds{instance="10.10.xxx.70:9400", job="kubernetes-gpu"} 0.002060277

scrape_duration_seconds{instance="10.10.xxx.71:9400", job="kubernetes-gpu"} 0.002139183

- Note: `job` is the `job_name` that Prometheus scrapes. You can find these jobs in the configuration file or on the Prometheus Targets page.
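With this many series it helps to reduce the output to one line per job. In PromQL that is `max by (job) (scrape_duration_seconds)`. The sketch below does the same thing offline on a few samples copied from the output above. The regular expressions are a simplification that assumes label values contain no escaped quotes, and `slowest_per_job` is a name invented for this example.

```python
import re

# A few samples copied from the example output above.
SAMPLES = """\
scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.60:6443", job="kubernetes-apiservers"} 0.753500409
scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.62:6443", job="kubernetes-apiservers"} 1.6356698459999999
scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.66:9100", job="kubernetes-node"} 4.041651902
scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.68:9100", job="kubernetes-node"} 4.13933891
scrape_duration_seconds{instance="10.10.xxx.71:9400", job="kubernetes-gpu"} 0.002139183
"""

# Simplified patterns: assume no escaped quotes inside label values.
LINE_RE = re.compile(r'^scrape_duration_seconds\{(?P<labels>.*)\}\s+(?P<value>\S+)$')
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def slowest_per_job(text):
    """Return {job: (instance, seconds)} for the slowest target of each job."""
    slowest = {}
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if not match:
            continue  # skip blank lines and anything that is not a sample
        labels = dict(LABEL_RE.findall(match.group("labels")))
        seconds = float(match.group("value"))
        job = labels.get("job", "")
        if job not in slowest or seconds > slowest[job][1]:
            slowest[job] = (labels.get("instance", ""), seconds)
    return slowest


for job, (instance, seconds) in sorted(slowest_per_job(SAMPLES).items()):
    print(f"{job}: {instance} took {seconds:.3f}s")
```

In the samples above, the `kubernetes-node` targets on port 9100 take around four seconds per scrape, far longer than the other jobs, so they are the ones worth comparing against the configured scrape timeout.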

1.1 job=kube-state-metrics

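- Description: this is the kube-state-metrics component that I deployed myself; the value is the time Prometheus needs to scrape data from it.
- Example:

```shell
scrape_duration_seconds{cluster="cto-k8s-pro", instance="kube-state-metrics.kube-system.svc.cluster.local:8080", job="kube-state-metrics"} 0.107576404
```

- About the kube-state-metrics component:
  - It is used to monitor the state of the various objects in k8s.
  - Metrics starting with `kube_` all come from it (unless someone is deliberately causing trouble, other collectors generally do not use this prefix).
  - You can query them with `count({__name__=~"kube_.*"}) by (__name__)`.
  - I will explain what each one means in a separate chapter.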

shell

kube_apiserver_clusterip_allocator_allocated_ips{} 3

kube_apiserver_clusterip_allocator_allocation_total{} 3

kube_apiserver_clusterip_allocator_available_ips{} 3

kube_apiserver_pod_logs_pods_logs_backend_tls_failure_total{} 1

kube_apiserver_pod_logs_pods_logs_insecure_backend_total{} 1

kube_configmap_created{} 79

kube_configmap_info{} 79

kube_configmap_metadata_resource_version{} 79

kube_daemonset_created{} 5

kube_daemonset_metadata_generation{} 5

kube_daemonset_status_current_number_scheduled{} 5

kube_daemonset_status_desired_number_scheduled{} 5

kube_daemonset_status_number_available{} 5

kube_daemonset_status_number_misscheduled{} 5

kube_daemonset_status_number_ready{} 5

kube_daemonset_status_number_unavailable{} 5

kube_daemonset_status_observed_generation{} 5

kube_daemonset_status_updated_number_scheduled{} 5

kube_deployment_created{} 100

kube_deployment_metadata_generation{} 100

kube_deployment_spec_paused{} 100

kube_deployment_spec_replicas{} 100

kube_deployment_spec_strategy_rollingupdate_max_surge{} 98

kube_deployment_spec_strategy_rollingupdate_max_unavailable{} 98

kube_deployment_status_condition{} 600

kube_deployment_status_observed_generation{} 100

kube_deployment_status_replicas{} 100

kube_deployment_status_replicas_available{} 100

kube_deployment_status_replicas_ready{} 100

kube_deployment_status_replicas_unavailable{} 100

kube_deployment_status_replicas_updated{} 100

kube_endpoint_address{} 175

kube_endpoint_address_available{} 120

kube_endpoint_address_not_ready{} 120

kube_endpoint_created{} 120

kube_endpoint_info{} 120

kube_endpoint_ports{} 159

kube_ingress_created{} 2

kube_ingress_info{} 2

kube_ingress_metadata_resource_version{} 2

kube_ingress_path{} 2

kube_ingress_tls{} 2

kube_job_complete{} 6

kube_job_created{} 2

kube_job_info{} 2

kube_job_owner{} 2

kube_job_spec_completions{} 2

kube_job_spec_parallelism{} 2

kube_job_status_active{} 2

kube_job_status_completion_time{} 2

kube_job_status_failed{} 2

kube_job_status_start_time{} 2

kube_job_status_succeeded{} 2

kube_lease_owner{} 18

kube_lease_renew_time{} 18

kube_mutatingwebhookconfiguration_created{} 2

kube_mutatingwebhookconfiguration_info{} 2

kube_mutatingwebhookconfiguration_metadata_resource_version{} 2

kube_mutatingwebhookconfiguration_webhook_clientconfig_service{} 6

kube_namespace_created{} 34

kube_namespace_status_phase{} 68

kube_networkpolicy_created{} 2

kube_networkpolicy_spec_egress_rules{} 2

kube_networkpolicy_spec_ingress_rules{} 2

kube_node_created{} 12

kube_node_info{} 12

kube_node_role{} 6

kube_node_spec_taint{} 4

kube_node_spec_unschedulable{} 12

kube_node_status_addresses{} 24

kube_node_status_allocatable{} 81

kube_node_status_capacity{} 81

kube_node_status_condition{} 180

kube_persistentvolume_capacity_bytes{} 63

kube_persistentvolume_claim_ref{} 63

kube_persistentvolume_created{} 63

kube_persistentvolume_info{} 63

kube_persistentvolume_status_phase{} 315

kube_persistentvolume_volume_mode{} 63

kube_persistentvolumeclaim_access_mode{} 63

kube_persistentvolumeclaim_created{} 63

kube_persistentvolumeclaim_info{} 63

kube_persistentvolumeclaim_resource_requests_storage_bytes{} 63

kube_persistentvolumeclaim_status_phase{} 189

kube_pod_completion_time{} 2

kube_pod_container_info{} 220

kube_pod_container_resource_limits{} 194

kube_pod_container_resource_requests{} 279

kube_pod_container_state_started{} 218

kube_pod_container_status_last_terminated_exitcode{} 100

kube_pod_container_status_last_terminated_reason{} 100

kube_pod_container_status_last_terminated_timestamp{} 100

kube_pod_container_status_ready{} 220

kube_pod_container_status_restarts_total{} 220

kube_pod_container_status_running{} 220

kube_pod_container_status_terminated{} 220

kube_pod_container_status_terminated_reason{} 2

kube_pod_container_status_waiting{} 220

kube_pod_container_status_waiting_reason{} 2

kube_pod_created{} 218

kube_pod_info{} 218

kube_pod_init_container_info{} 47

kube_pod_init_container_resource_requests{} 30

kube_pod_init_container_status_ready{} 47

kube_pod_init_container_status_restarts_total{} 47

kube_pod_init_container_status_running{} 47

kube_pod_init_container_status_terminated{} 47

kube_pod_init_container_status_terminated_reason{} 46

kube_pod_init_container_status_waiting{} 47

kube_pod_init_container_status_waiting_reason{} 1

kube_pod_ips{} 218

kube_pod_owner{} 218

kube_pod_restart_policy{} 218

kube_pod_scheduler{} 218

kube_pod_service_account{} 218

kube_pod_spec_volumes_persistentvolumeclaims_info{} 67

kube_pod_spec_volumes_persistentvolumeclaims_readonly{} 67

kube_pod_start_time{} 218

kube_pod_status_container_ready_time{} 214

kube_pod_status_initialized_time{} 217

kube_pod_status_phase{} 1090

kube_pod_status_qos_class{} 654

kube_pod_status_ready{} 654

kube_pod_status_ready_time{} 214

kube_pod_status_reason{} 1090

kube_pod_status_scheduled{} 654

kube_pod_status_scheduled_time{} 218

kube_pod_tolerations{} 727

kube_poddisruptionbudget_created{} 4

kube_poddisruptionbudget_status_current_healthy{} 4

kube_poddisruptionbudget_status_desired_healthy{} 4

kube_poddisruptionbudget_status_expected_pods{} 4

kube_poddisruptionbudget_status_observed_generation{} 4

kube_poddisruptionbudget_status_pod_disruptions_allowed{} 4

kube_replicaset_created{} 293

kube_replicaset_metadata_generation{} 293

kube_replicaset_owner{} 293

kube_replicaset_spec_replicas{} 293

kube_replicaset_status_fully_labeled_replicas{} 293

kube_replicaset_status_observed_generation{} 293

kube_replicaset_status_ready_replicas{} 293

kube_replicaset_status_replicas{} 293

kube_secret_created{} 123

kube_secret_info{} 123

kube_secret_metadata_resource_version{} 123

kube_secret_owner{} 123

kube_secret_type{} 123

kube_service_created{} 119

kube_service_info{} 119

kube_service_spec_type{} 119

kube_statefulset_created{} 13

kube_statefulset_metadata_generation{} 13

kube_statefulset_replicas{} 13

kube_statefulset_status_current_revision{} 13

kube_statefulset_status_observed_generation{} 13

kube_statefulset_status_replicas{} 13

kube_statefulset_status_replicas_available{} 13

kube_statefulset_status_replicas_current{} 13

kube_statefulset_status_replicas_ready{} 13

kube_statefulset_status_replicas_updated{} 13

kube_statefulset_status_update_revision{} 13

kube_storageclass_created{} 1

kube_storageclass_info{} 1

kube_validatingwebhookconfiguration_created{} 3

kube_validatingwebhookconfiguration_info{} 3

kube_validatingwebhookconfiguration_metadata_resource_version{} 3

kube_validatingwebhookconfiguration_webhook_clientconfig_service{} 10

- Appendix: its configuration in Prometheus

```yml
- job_name: 'kube-state-metrics'
  static_configs:
    - targets:
        - kube-state-metrics.kube-system.svc.cluster.local:8080
  relabel_configs:  # the rule below only sets a label; it is optional and you can name it whatever you like
    - target_label: cluster
      replacement: iai-test-k8s
```

1.2 job="kubernetes-apiservers"

- Purpose: the time Prometheus takes to fetch data from the apiservers
- Example:

```shell
scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.60:6443", job="kubernetes-apiservers"} 0.273956679
scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.61:6443", job="kubernetes-apiservers"} 0.118874508
scrape_duration_seconds{cluster="cto-k8s-pro", instance="10.10.xxx.62:6443", job="kubernetes-apiservers"} 0.107484333
```

- About the data collected by job="kubernetes-apiservers":
  - Metrics starting with `apiserver_` all come from it.
  - You can query them with `count({__name__=~"apiserver_.*"}) by (__name__)`.
  - I will explain them in other chapters.

shell

apiserver_admission_controller_admission_duration_seconds_bucket{} 889

apiserver_admission_controller_admission_duration_seconds_count{} 127

apiserver_admission_controller_admission_duration_seconds_sum{} 127

apiserver_admission_step_admission_duration_seconds_bucket{} 175

apiserver_admission_step_admission_duration_seconds_count{} 25

apiserver_admission_step_admission_duration_seconds_sum{} 25

apiserver_admission_step_admission_duration_seconds_summary{} 75

apiserver_admission_step_admission_duration_seconds_summary_count{} 25

apiserver_admission_step_admission_duration_seconds_summary_sum{} 25

apiserver_admission_webhook_admission_duration_seconds_bucket{} 77

apiserver_admission_webhook_admission_duration_seconds_count{} 11

apiserver_admission_webhook_admission_duration_seconds_sum{} 11

apiserver_admission_webhook_request_total{} 11

apiserver_audit_event_total{} 15

apiserver_audit_requests_rejected_total{} 15

apiserver_cache_list_fetched_objects_total{} 126

apiserver_cache_list_returned_objects_total{} 124

apiserver_cache_list_total{} 126

apiserver_client_certificate_expiration_seconds_bucket{} 225

apiserver_client_certificate_expiration_seconds_count{} 15

apiserver_client_certificate_expiration_seconds_sum{} 15

apiserver_crd_webhook_conversion_duration_seconds_bucket{} 16

apiserver_crd_webhook_conversion_duration_seconds_count{} 1

apiserver_crd_webhook_conversion_duration_seconds_sum{} 1

apiserver_current_inflight_requests{} 6

apiserver_current_inqueue_requests{} 6

apiserver_delegated_authn_request_duration_seconds_bucket{} 108

apiserver_delegated_authn_request_duration_seconds_count{} 12

apiserver_delegated_authn_request_duration_seconds_sum{} 12

apiserver_delegated_authn_request_total{} 12

apiserver_delegated_authz_request_duration_seconds_bucket{} 108

apiserver_delegated_authz_request_duration_seconds_count{} 12

apiserver_delegated_authz_request_duration_seconds_sum{} 12

apiserver_delegated_authz_request_total{} 12

apiserver_envelope_encryption_dek_cache_fill_percent{} 15

apiserver_flowcontrol_current_executing_requests{} 23

apiserver_flowcontrol_current_inqueue_requests{} 21

apiserver_flowcontrol_current_r{} 21

apiserver_flowcontrol_dispatch_r{} 17

apiserver_flowcontrol_dispatched_requests_total{} 29

apiserver_flowcontrol_latest_s{} 17

apiserver_flowcontrol_next_discounted_s_bounds{} 34

apiserver_flowcontrol_next_s_bounds{} 34

apiserver_flowcontrol_priority_level_request_count_samples_bucket{} 504

apiserver_flowcontrol_priority_level_request_count_samples_count{} 42

apiserver_flowcontrol_priority_level_request_count_samples_sum{} 42

apiserver_flowcontrol_priority_level_request_count_watermarks_bucket{} 1008

apiserver_flowcontrol_priority_level_request_count_watermarks_count{} 84

apiserver_flowcontrol_priority_level_request_count_watermarks_sum{} 84

apiserver_flowcontrol_priority_level_seat_count_samples_bucket{} 252

apiserver_flowcontrol_priority_level_seat_count_samples_count{} 21

apiserver_flowcontrol_priority_level_seat_count_samples_sum{} 21

apiserver_flowcontrol_priority_level_seat_count_watermarks_bucket{} 504

apiserver_flowcontrol_priority_level_seat_count_watermarks_count{} 42

apiserver_flowcontrol_priority_level_seat_count_watermarks_sum{} 42

apiserver_flowcontrol_read_vs_write_request_count_samples_bucket{} 144

apiserver_flowcontrol_read_vs_write_request_count_samples_count{} 12

apiserver_flowcontrol_read_vs_write_request_count_samples_sum{} 12

apiserver_flowcontrol_read_vs_write_request_count_watermarks_bucket{} 288

apiserver_flowcontrol_read_vs_write_request_count_watermarks_count{} 24

apiserver_flowcontrol_read_vs_write_request_count_watermarks_sum{} 24

apiserver_flowcontrol_request_concurrency_in_use{} 23

apiserver_flowcontrol_request_concurrency_limit{} 21

apiserver_flowcontrol_request_execution_seconds_bucket{} 377

apiserver_flowcontrol_request_execution_seconds_count{} 29

apiserver_flowcontrol_request_execution_seconds_sum{} 29

apiserver_flowcontrol_request_queue_length_after_enqueue_bucket{} 189

apiserver_flowcontrol_request_queue_length_after_enqueue_count{} 21

apiserver_flowcontrol_request_queue_length_after_enqueue_sum{} 21

apiserver_flowcontrol_request_wait_duration_seconds_bucket{} 299

apiserver_flowcontrol_request_wait_duration_seconds_count{} 23

apiserver_flowcontrol_request_wait_duration_seconds_sum{} 23

apiserver_init_events_total{} 16

apiserver_kube_aggregator_x509_missing_san_total{} 3

apiserver_longrunning_gauge{} 165

apiserver_longrunning_requests{} 165

apiserver_registered_watchers{} 138

apiserver_request_aborts_total{} 5

apiserver_request_duration_seconds_bucket{} 14432

apiserver_request_duration_seconds_count{} 656

apiserver_request_duration_seconds_sum{} 656

apiserver_request_filter_duration_seconds_bucket{} 165

apiserver_request_filter_duration_seconds_count{} 15

apiserver_request_filter_duration_seconds_sum{} 15

apiserver_request_post_timeout_total{} 4

apiserver_request_slo_duration_seconds_bucket{} 14432

apiserver_request_slo_duration_seconds_count{} 656

apiserver_request_slo_duration_seconds_sum{} 656

apiserver_request_terminations_total{} 6

apiserver_request_total{} 747

apiserver_requested_deprecated_apis{} 2

apiserver_response_sizes_bucket{} 3376

apiserver_response_sizes_count{} 422

apiserver_response_sizes_sum{} 422

apiserver_selfrequest_total{} 213

apiserver_storage_data_key_generation_duration_seconds_bucket{} 225

apiserver_storage_data_key_generation_duration_seconds_count{} 15

apiserver_storage_data_key_generation_duration_seconds_sum{} 15

apiserver_storage_data_key_generation_failures_total{} 15

apiserver_storage_envelope_transformation_cache_misses_total{} 15

apiserver_storage_list_evaluated_objects_total{} 173

apiserver_storage_list_fetched_objects_total{} 173

apiserver_storage_list_returned_objects_total{} 173

apiserver_storage_list_total{} 173

apiserver_storage_objects{} 170

apiserver_tls_handshake_errors_total{} 3

apiserver_watch_events_sizes_bucket{} 1242

apiserver_watch_events_sizes_count{} 138

apiserver_watch_events_sizes_sum{} 138

apiserver_watch_events_total{} 138

apiserver_webhooks_x509_missing_san_total{} 15

- Appendix: its configuration in Prometheus

```yml
- job_name: 'kubernetes-apiservers'
  kubernetes_sd_configs:
    - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
    - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
      action: keep
      regex: default;kubernetes;https
    - target_label: cluster
      replacement: iai-test-k8s
```

1.3 job="kubernetes-cadvisor"

- Purpose: the time Prometheus takes to fetch a node's cAdvisor container monitoring data through the `kubernetes.default.svc:443` service
- As shown below, each node contributes one sample

- Example:

shell

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es01", fisam="used", gpu="gpu", gpu_type="xxx", instance="node-69", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-69", kubernetes_io_os="linux"} 0.106558038

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es02", gpu="gpu", gpu_type="xxx", instance="node-70", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-70", kubernetes_io_os="linux"} 0.129973512

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es03", gpu="gpu", gpu_type="xxx", instance="node-71", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-71", kubernetes_io_os="linux"} 0.15734925

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es03new", gpu="gpu", gpu_type="xxx", instance="node-68", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-68", kubernetes_io_os="linux"} 0.312178524

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="cto-gpu-pro-n01", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-n01", kubernetes_io_os="linux", net_link="zk"} 0.118771899

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-64", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-64", kubernetes_io_os="linux"} 0.153539667

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-65", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-65", kubernetes_io_os="linux"} 0.198451562

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-66", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-66", kubernetes_io_os="linux"} 0.111499167

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="node-67", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-67", kubernetes_io_os="linux"} 0.118666562

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m01", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m01", kubernetes_io_os="linux"} 0.078148259

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m02", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m02", kubernetes_io_os="linux"} 0.072733466

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m03", job="kubernetes-cadvisor", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m03", kubernetes_io_os="linux"} 0.065211987

- Note: the many miscellaneous labels above are simply the labels applied to the Kubernetes nodes.

- The metrics scraped by job="kubernetes-cadvisor" can be fetched directly on the cluster with the following command:

kubectl get --raw /api/v1/nodes/<node-name>/proxy/metrics/cadvisor

Metrics whose names start with container_ normally come from this job.
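To check which container_ metrics a node actually exposes, the output of the kubectl command above can be saved and grouped by metric name. The snippet below is only a minimal sketch: `SAMPLE` is a hypothetical excerpt written for illustration (real cAdvisor output is far longer), and `metric_name` and `count_by_name` are helper names made up here.

```python
from collections import Counter

# Hypothetical excerpt in the Prometheus text exposition format; real output
# from /metrics/cadvisor is much longer and depends on the node.
SAMPLE = """\
# HELP container_cpu_usage_seconds_total Cumulative cpu time consumed in seconds.
# TYPE container_cpu_usage_seconds_total counter
container_cpu_usage_seconds_total{id="/",cpu="total"} 1234.5
container_memory_usage_bytes{id="/"} 987654
container_memory_usage_bytes{id="/kubepods"} 4321
machine_cpu_cores 16
"""


def metric_name(line: str) -> str:
    """Return the metric name of one sample line (text before '{' or ' ')."""
    return line.split("{", 1)[0].split(" ", 1)[0]


def count_by_name(text: str, prefix: str = "container_") -> Counter:
    """Count sample lines per metric name, keeping only names with `prefix`."""
    names = (
        metric_name(line)
        for line in text.splitlines()
        if line and not line.startswith("#")  # skip blanks and HELP/TYPE lines
    )
    return Counter(name for name in names if name.startswith(prefix))


if __name__ == "__main__":
    for name, series in sorted(count_by_name(SAMPLE).items()):
        print(name, series)
```

In practice the text would come from redirecting the kubectl output to a file and reading that file instead of `SAMPLE`.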


- Its configuration in Prometheus:

yml

- job_name: 'kubernetes-cadvisor'
  kubernetes_sd_configs:
  - role: node
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - target_label: __address__
    replacement: kubernetes.default.svc:443
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __metrics_path__
    replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
  - target_label: cluster  # these two lines add a custom label; optional, change freely
    replacement: iai-test-k8s

1.4 job="kubernetes-nodes"

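- Purpose: the time Prometheus takes to scrape each node's basic metrics through the `kubernetes.default.svc:443` service.

- As shown below, each node contributes one series, with the same structure as in kubernetes-cadvisor.

- Example: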

shell

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es01", fisam="used", gpu="gpu", gpu_type="xxx",instance="node-69", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-69", kubernetes_io_os="linux"} 0.039352234

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es02", gpu="gpu", gpu_type="xxx",instance="node-70", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-70", kubernetes_io_os="linux"} 0.066360032

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es03", gpu="gpu", gpu_type="xxx", instance="node-71", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-71", kubernetes_io_os="linux"} 0.075027663

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", es="es03new", gpu="gpu", gpu_type="xxx",instance="node-68", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-68", kubernetes_io_os="linux"} 0.069378684

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx", instance="cto-gpu-pro-n01", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-n01", kubernetes_io_os="linux", net_link="zk"} 0.049452078

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx",instance="node-64", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-64", kubernetes_io_os="linux"} 0.056614205

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx",instance="node-65", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-65", kubernetes_io_os="linux"} 0.401426462

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx",instance="node-66", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-66", kubernetes_io_os="linux"} 0.056242544

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", gpu="gpu", gpu_type="xxx",instance="node-67", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="node-67", kubernetes_io_os="linux"} 0.10643301

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m01", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m01", kubernetes_io_os="linux"} 0.029358185

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m02", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m02", kubernetes_io_os="linux"} 0.029033807

scrape_duration_seconds{beta_kubernetes_io_arch="amd64", beta_kubernetes_io_os="linux", cluster="cto-k8s-pro", instance="cto-gpu-pro-m03", job="kubernetes-nodes", kubernetes_io_arch="amd64", kubernetes_io_hostname="cto-gpu-pro-m03", kubernetes_io_os="linux"} 0.500530486

- The metrics scraped by job="kubernetes-nodes" can be fetched directly on the cluster with the following command:

kubectl get --raw /api/v1/nodes/<node-name>/proxy/metrics

- Appendix: its configuration in Prometheus:

yml

- job_name: 'kubernetes-nodes'
  kubernetes_sd_configs:
  - role: node
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - target_label: __address__
    replacement: kubernetes.default.svc:443
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __metrics_path__
    replacement: /api/v1/nodes/${1}/proxy/metrics

1.5 job="kubernetes-pods "

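- Purpose: this job automatically discovers pods in the Kubernetes cluster that carry specific annotations.

- Once users add these annotations to their own workloads, no separate configuration is needed in Prometheus.

- Taking minio as an example, add the following to the deployment YAML: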

yml

spec:
  template:
    metadata:
      annotations:
        prometheus.io/path: /minio/v2/metrics/cluster
        prometheus.io/port: "9000"
        prometheus.io/scrape: "true"

- Appendix: its configuration in Prometheus:

yml

- job_name: 'kubernetes-pods'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
  - target_label: cluster
    replacement: iai-test-k8s

1.6 job="kubernetes-service-endpoints"

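- Purpose: this job automatically discovers the pods behind Services that carry specific annotations.

- Once users add these annotations themselves, no separate configuration is needed in Prometheus.

- Taking minio as an example, add the following annotations to the Service in the deployment YAML: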

yml

metadata:
  annotations:
    # Required: enable Prometheus scraping
    prometheus.io/scrape: "true"
    # Optional: scrape port (if omitted, the discovered endpoint port is kept)
    prometheus.io/port: "8080"
    # Optional: metrics path (defaults to /metrics)
    prometheus.io/path: "/metrics"
    # Optional: scheme (defaults to http)
    prometheus.io/scheme: "http"

- Here is the actual configuration of kube-dns:

yml

apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  creationTimestamp: "2025-09-09T07:28:17Z"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: CoreDNS
  name: kube-dns
  namespace: kube-system
  resourceVersion: "278"
......

- Appendix: its configuration in Prometheus:

yml

- job_name: 'kubernetes-service-endpoints'
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
    action: replace
    target_label: __scheme__
    regex: (https?)
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
    action: replace
    target_label: __address__
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: kubernetes_name
  - target_label: cluster
    replacement: iai-test-k8s

1.7 Other jobs

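The following are jobs I defined myself in the Prometheus configuration file:

- job="kubernetes-gpu"

- job="kubernetes-node": this is the node-exporter job I configured. Its functionality largely overlaps with job="kubernetes-nodes" (note: this refers to what it covers, not to the specific metric names), so normally one of the two is enough. My environment, however, carries some legacy baggage: some old in-house software depends on the node-exporter metrics.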
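Looking back at the kubernetes-pods and kubernetes-service-endpoints configurations in 1.5 and 1.6, the least obvious rule is the `__address__` rewrite with regex `([^:]+)(?::\d+)?;(\d+)`. The snippet below is a rough sketch of what that rule does, not Prometheus code: Prometheus joins the source label values with `;` (the default separator) and anchors the regex at both ends, which `re.fullmatch` imitates here. The function name `rewrite_address` is made up for illustration.

```python
import re
from typing import Optional

# Same pattern as in the relabel rule. Prometheus anchors relabel regexes at
# both ends, so re.fullmatch() is used instead of re.search().
ADDRESS_RE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def rewrite_address(address: str, port_annotation: Optional[str]) -> str:
    """Return the scrape address after the `$1:$2` rewrite.

    A missing annotation is treated as an empty label value; the regex then
    does not match and the address is left unchanged.
    """
    joined = f"{address};{port_annotation or ''}"  # default separator is ';'
    match = ADDRESS_RE.fullmatch(joined)
    if match is None:
        return address
    # $1 is the host part, $2 is the port taken from the annotation.
    return f"{match.group(1)}:{match.group(2)}"


if __name__ == "__main__":
    print(rewrite_address("10.244.7.87:8080", "9402"))
    print(rewrite_address("10.244.7.87:8080", None))
```

In other words, the port from the `prometheus.io/port` annotation replaces whatever port service discovery found, and targets without the annotation keep their discovered address.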

2 scrape_samples_scraped

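- Purpose: the number of samples obtained from the target in a single scrape.

- The structure is the same as above.

- Put plainly, it is how many data points one scrape returned.

- Example: check how many samples a single scrape of the node-exporter on master-01 returns.

- Query:

scrape_samples_scraped{instance="10.10.xxx.60:9100"}

- Result:

yml

scrape_samples_scraped{cluster="cto-k8s-pro", instance="10.10.xxx.60:9100", job="kubernetes-node"} 3595

3 scrape_samples_post_metric_relabeling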

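- Purpose: the number of samples remaining after metric relabeling.

- Relabeling: the process of modifying, filtering, or dropping labels on the raw scraped data. Metric relabeling can also drop whole samples, which is why this count can be lower than the scraped count.

- Its structure is identical to `scrape_samples_scraped`; one counts the samples before processing, the other after.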
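To make the before/after relationship concrete, here is a small simulation. It is only a sketch under assumptions: the metric names in `SCRAPED` are a hypothetical scrape result, and the drop rule (action `drop` on `__name__` with regex `go_.*`) is a hypothetical `metric_relabel_configs` entry that does not appear in the configurations shown in this article.

```python
import re

# Hypothetical sample names from one scrape; real samples also carry labels
# and values, which are irrelevant for counting.
SCRAPED = [
    "node_cpu_seconds_total",
    "node_memory_MemAvailable_bytes",
    "go_gc_duration_seconds",
    "go_goroutines",
]

# Hypothetical metric_relabel_configs rule: action "drop", source label
# __name__, regex "go_.*". Relabel regexes are anchored, hence fullmatch().
DROP_RE = re.compile(r"go_.*")


def apply_drop(names, pattern):
    """Keep only the samples whose metric name does not match `pattern`."""
    return [name for name in names if pattern.fullmatch(name) is None]


def sample_counts(names, pattern):
    """Return (scraped, post_relabeling) counts for one simulated scrape."""
    return len(names), len(apply_drop(names, pattern))


if __name__ == "__main__":
    scraped, remaining = sample_counts(SCRAPED, DROP_RE)
    print("scrape_samples_scraped:", scraped)
    print("scrape_samples_post_metric_relabeling:", remaining)
```

When a job has no metric relabeling rules that drop samples, the two values are equal; a gap between them shows how many samples the rules discard.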