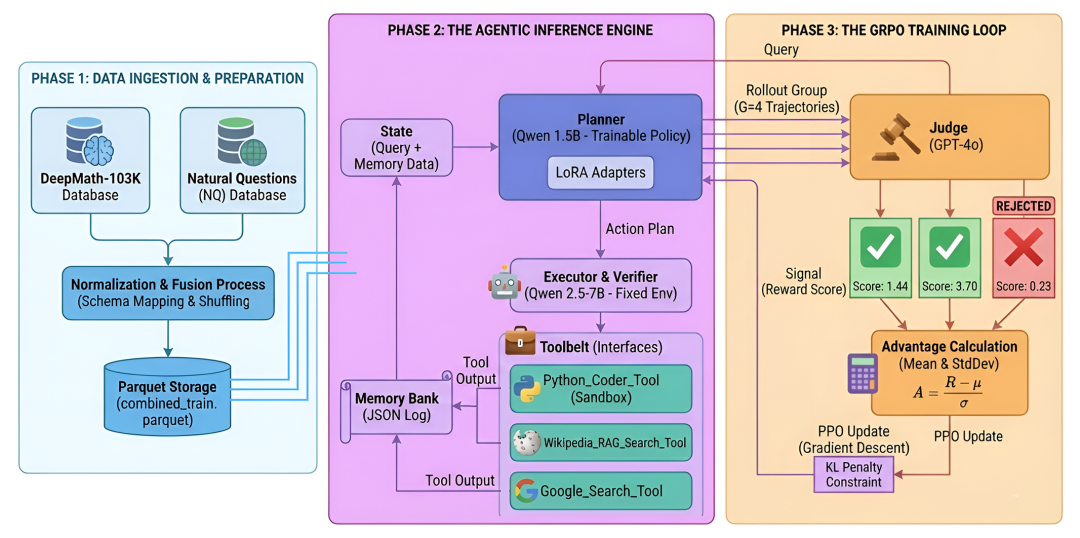

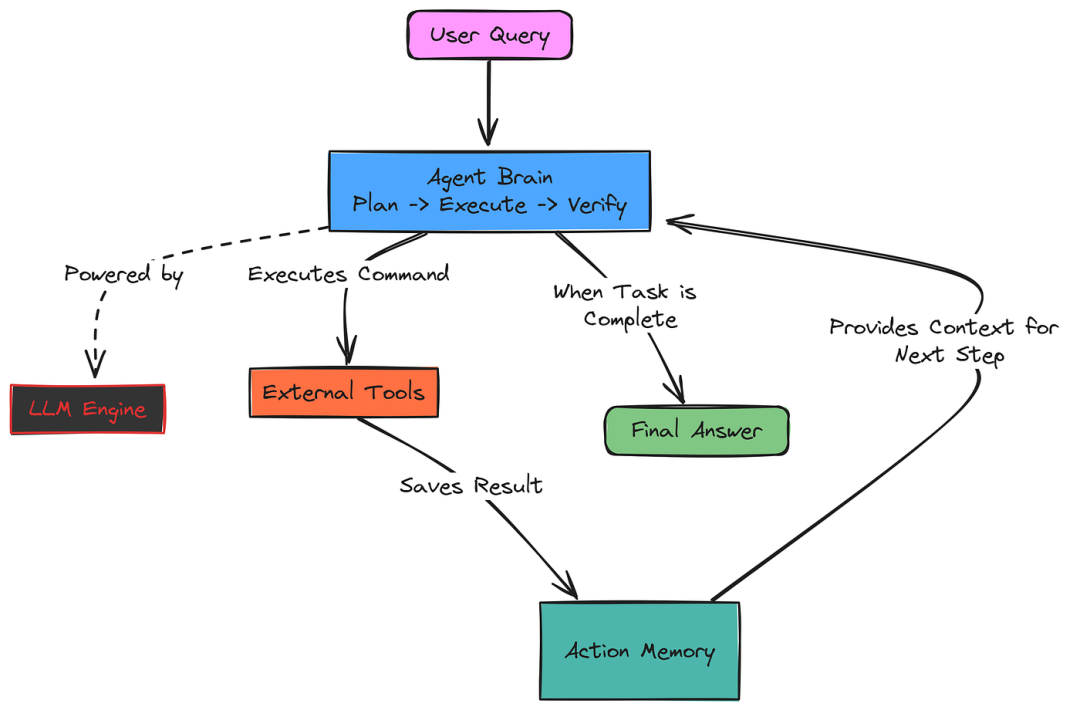

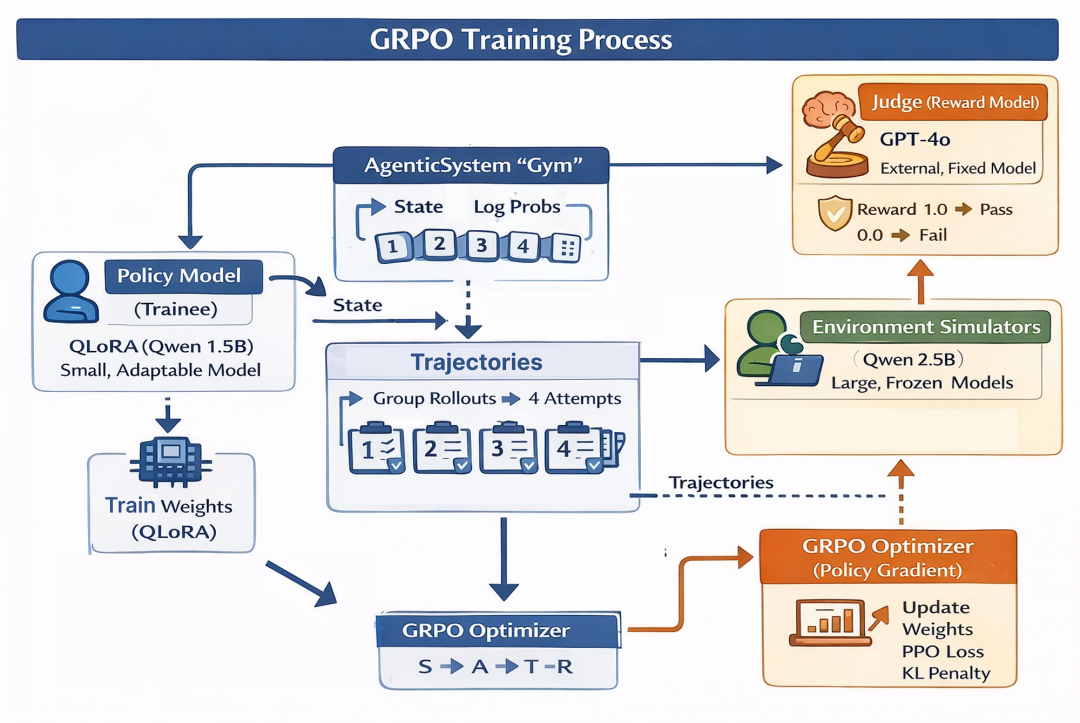

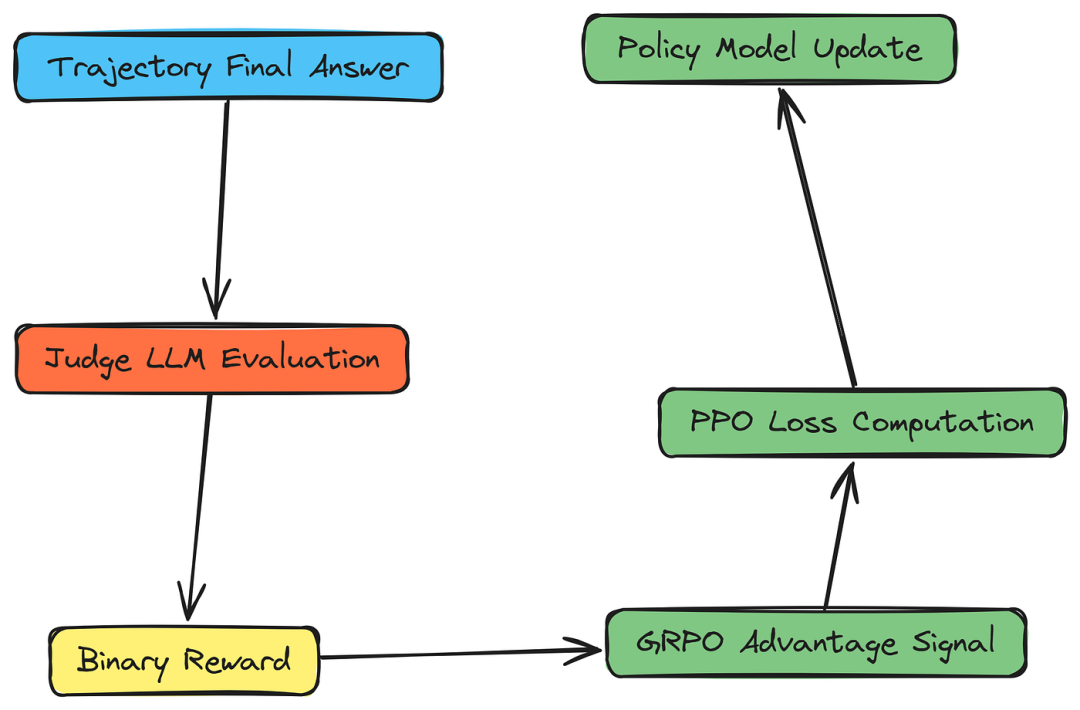

长期任务的Agent系统一般都需要进行任务规划,工具调用,最后逐步执行。大多数现代Agent系统依赖推理,每次每个组件都重新开始,且缺乏预训练,这增加了长期任务中任何阶段错误规划或错误工具调用的可能性。GRPO 算法是一种现代强化学习方法,可以持续训练agent正确规划和执行扩展任务。一个典型的基于 GRPO 的智能训练系统看起来像这样......

GRPO 如何驱动Agent系统训练:

-

基于组的评估:GRPO 对同一查询评估多个轨迹,使智能体能够比较策略,而非依赖单步奖励。

-

相对优势学习: 成功的路径相对于群体平均值得到强化,提高了正确规划和执行的概率。

-

错误抑制: 不良轨迹会接收负面优势信号,从而减少幻觉和工具使用错误。

-

迭代优化: 智能体通过反复Rollout不断提升,学会更可靠地规划长期任务。

-

子智能体间的协调: 通过在群体环境中训练,GRPO 帮助多个子

智能体协调其动作,提升整体多智能体系统性能。

本文,我们将学习并理解GRPO算法与AI Agent的关系,然后创建一个Multi-Agent系统并用GRPO进行训练。

所有代码地址:https://github.com/ArronAI007/Awesome-AGI/tree/main/Agent/Tutorials/multi-agent-training-grpo

代码库的组织方式如下(理论 + 逐步工作流程):

python

Directory structure:

└── GRPO_Training_Agentic/

├── 01_data_preprocessing.ipynb # Preprocessing training data

├── 02_agentic_architecture.ipynb # Multi-Agentic System

├── 03_grpo_training.ipynb # GRPO Training Architecture

└── utils.py # Utils of agents for training一、GRPO在Agent系统中的作用

几乎所有强化学习算法都基于奖励机制。Agent在环境中采取行动,并根据该行动获得奖励或惩罚,目标是随着时间最大化累计奖励。

然而,在多智能体系统中,多个智能体相互交互及环境,传统的强化学习算法在有效协调和优化其动作方面可能存在困难。

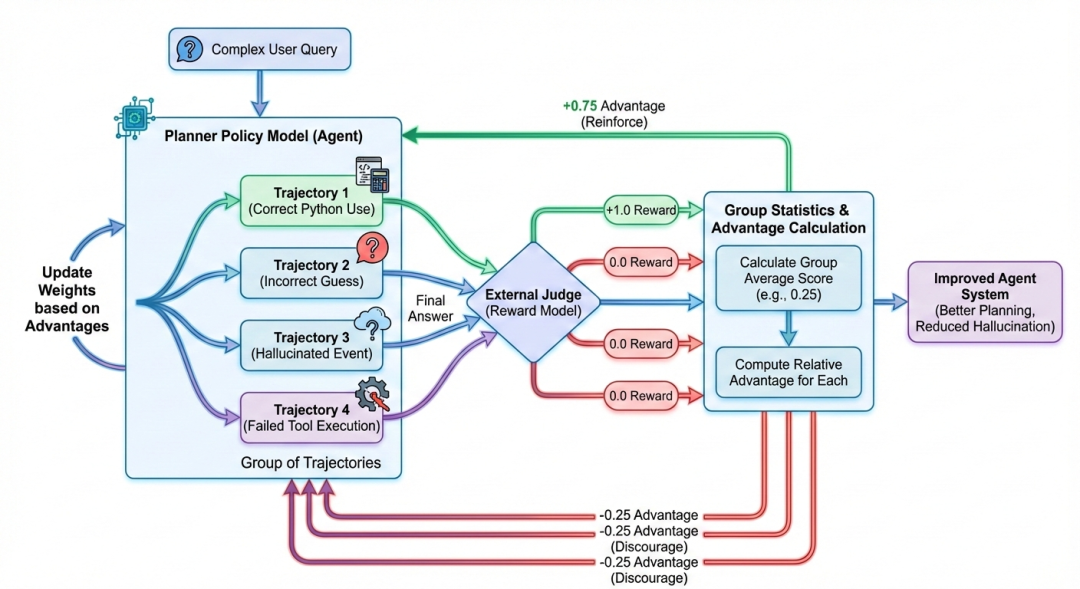

GRPO(广义强化策略优化)可以巧妙地解决传统强化学习算法在多智能体环境中面临的一些挑战。在基于智能体的 GRPO 中,它不是对每一个动作单独评分,而是要求智能体多次尝试同一问题(称为"组"),比较结果,并强化比平均值更有效的策略。

让我们用一个例子来理解一下,该例子也将成为我们博客中的实现。

-

向系统请求一个复杂的查询, 例如"计算 12 平方,然后使用维基百科查找当年发生的重要历史事件"。

-

规划策略会为该单一查询生成一组不同的轨迹 (例如 4 次尝试)。由于模型使用非零温度,它探索了不同的策略:一种轨迹可能正确使用 Python 计算 122122,另一种可能错误猜测数字,第三种可能幻觉历史事件而不进行搜索。

-

外部评估器会根据真实情况评估每个方向的最终答案。成功计算出 144 并找到正确事件的轨迹奖励为 1.0,而猜错、未使用工具或产生幻觉的轨迹奖励为 0.0。

-

该算法通过将每条轨迹得分与该组的平均值进行比较来计算相对优势 。如果群体平均值为 0.25,成功的轨迹(1.0)获得较高的正优势(+0.75),失败的轨迹(0.0)则获得负的优势(-0.25)。

-

策略模型会根据这些优势更新权重,会显著提高了成功轨迹中所用规划步骤的概率,因为它优于小组平均水平,有效地"强化"了正确的逻辑,同时抑制了失败的策略。

我们将在多智能体系统中编写精确的 GRPO 算法,来改善规划阶段,减少幻觉和偏离轨道的结果。

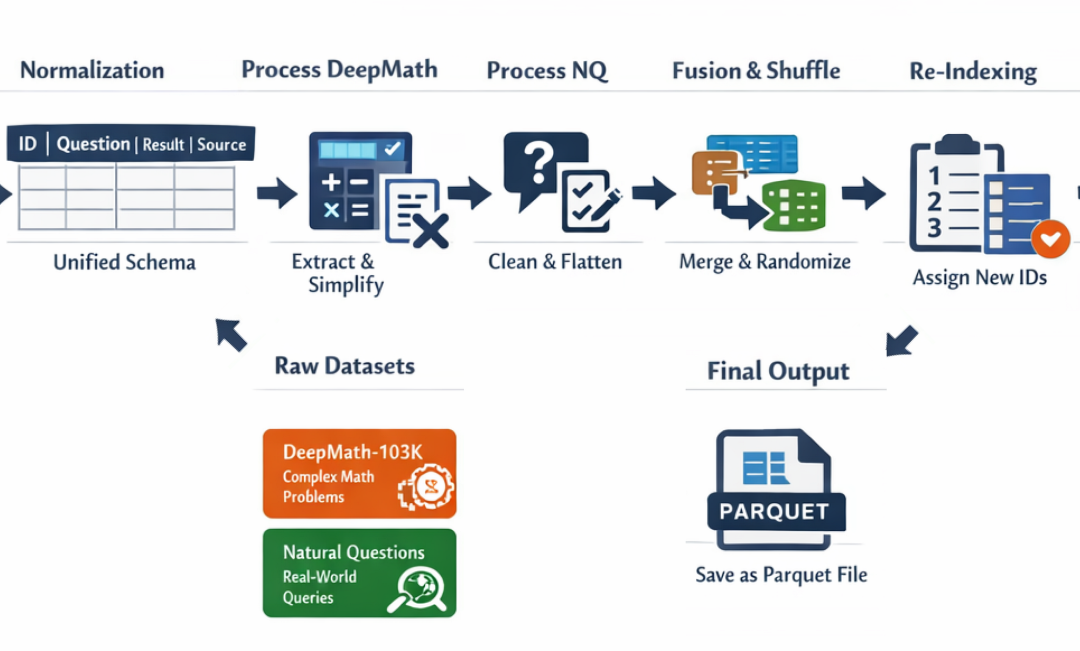

二、Agent数据预处理

多智能体系统通常依赖不同用途的子智能体来执行不同的任务。例如,可以有网页搜索智能体、规划智能体、任务执行智能体等。

在推理时间上,这些智能体的表现高度依赖于规划阶段。如果每次迭代后规划都不顺利,智能体可能会偏离轨道,产生无关紧要的结果,甚至让整个流程产生幻觉。

在这篇博客中,我们将模拟一个现实世界的多智能体系统,其中包含多种子智能体,其中几个子智能体目的相同但方法不同。这样我们才能真正测试 GRPO 如何减少幻觉和偏离赛道的概率。

我们将使用两个 huggingface 数据集来准备训练数据:

-

DeepMath-103K:包含超过 10 万个数学题目及其解答。在用户执行任务时,这可以帮助智能体在规划阶段进行结构化的逐步推理。

-

Natural Questions (NQ): 该数据集包含真实用户问题,有助于客服更好地规划整个流程中的工作方式。

接下来,导入必要的库,并为训练和验证数据创建输出目录

python

# Standard library imports for interacting with the operating system and handling JSON data.

import os

import json

# Core data science libraries for data manipulation and numerical computation.

import pandas as pd

import numpy as np

# Hugging Face library for dataset loading and processing.

from datasets import load_dataset, concatenate_datasets, Dataset

# Utility for displaying progress bars, making long-running operations more informative.

from tqdm import tqdm我们现在可以创建训练和验证数据的输出目录。

python

# Define the path for the training data output directory.

train_output_dir = "./data/train"

# Define the path for the validation data output directory.

val_output_dir = "./data/val"我们的train目录将保存 DeepMath 和 NQ 的合并训练数据集,而 val 目录则包含我们的 AIME 2024 验证集。

我们必须将这两个数据集合并成一个训练集。这会使我们的智能体系统能够学习问题的多样化,提升其有效规划和执行任务的能力。

首先,我们将处理 DeepMath-103K 数据集,加载并探索其结构。

python

print("\n=== Loading DeepMath-103K ===")

# Use the `load_dataset` function from the `datasets` library.

# We specify the dataset name on the Hugging Face Hub: "zwhe99/DeepMath-103K".

# We also specify that we only want the "train" split of this dataset.

math_dataset = load_dataset(

"zwhe99/DeepMath-103K",

split="train"

)在处理之前,先检查一下数据集的列、样本总数,并查看一个示例记录以理解其结构。

python

# The `.column_names` attribute gives us a list of all columns in the dataset.

print("Columns:", math_dataset.column_names)

# The `len()` function tells us the total number of records (rows) in the dataset.

print("Total samples:", len(math_dataset))

#### Output:

Columns: ['question', 'final_answer', 'difficulty', 'topic', 'r1_solution_1', 'r1_solution_2', 'r1_solution_3']

Total samples: 103022可以看到有一个问题和三个不同的解决方案,还有最终答案,这是训练的基础。

让我们来看一个样本记录,来了解数据格式和内容。

python

# Accessing an item by index, like a list, gives us a single record.

sample = math_dataset[0]

# The solution fields ('r1_solution_*') can be very long.

# For a clean printout, we'll truncate them.

truncated_sample = sample.copy()

for key in ['r1_solution_1', 'r1_solution_2', 'r1_solution_3']:

truncated_sample[key] = sample[key][:400]

# Use `json.dumps` with indentation for a pretty, readable print of the sample record.

print(json.dumps(truncated_sample, indent=2))可以得到如下结果:

python

# Output:

{

"question": "Evaluate the limit: \\[ \\lim_{x \\to \\infty} \\sqrt{x} \\left( \\sqrt[3]{x+1} - \\sqrt[3]{x-1} \\right) \\]",

"final_answer": "0",

"difficulty": 4.5,

"topic": "Mathematics -> Precalculus -> Limits",

"r1_solution_1": "Okay, so I have this limit to evaluate the limit as x approaches...",

"r1_solution_2": "Okay, so I need to evaluate the limit as x approaches infinity...",

"r1_solution_3": "Okay, so I need to evaluate the limit as x approaches infinity..."

}r1_solution_1、r1_solution_2 和 r1_solution_3 是三种不同的解决方案,但我们不会用它们进行训练,只用question和 final_answer 字段,因为我们的智能体会执行代码并尝试得到最终答案。

现在我们会遍历每条记录,并将其转换为所需的标准格式。这种格式是通用的,之后我们可以将其与其他数据集合并。

我们的目标schema将是:

- id:每个样本的唯一标识符。

- question:问题还是查询文本。

- chain:用来表示思考链或推理步骤的占位符(暂且不留空)。

- result:最终答案。

- source:表示原始数据集的字符串。

- extra_info:用于存储原始记录中其他有用元数据的词典。

python

print("\n=== Processing MathHard ===")

# Initialize an empty list to store our processed records.

math_rows = []

# We iterate through the dataset using tqdm to get a nice progress bar.

# `enumerate` gives us both the index (`idx`) and the item for each record.

for idx, item in enumerate(tqdm(math_dataset, desc="Processing MathHard")):

# Some datasets might use different keys for the same concept (e.g., 'question' vs 'Problem').

# This logic handles such inconsistencies gracefully.

if "question" in item:

question = item["question"]

elif "Problem" in item:

question = item["Problem"]

else:

# If neither key is found, raise an error to stop execution, as this is unexpected.

raise KeyError("Missing question field")

# Similarly, handle potential inconsistencies for the answer field.

if "final_answer" in item:

answer = item["final_answer"]

elif "Answer" in item:

answer = item["Answer"]

else:

raise KeyError("Missing answer field")

# Append a new dictionary to our list, structured according to our standard format.

math_rows.append({

"id": idx, # Use the loop index as a temporary ID.

"question": question,

"chain": "", # Placeholder for reasoning steps.

"result": str(answer), # Ensure the answer is always a string.

"source": "mathhard", # Tag the data source.

"extra_info": { # Store original metadata.

"ground_truth": str(answer),

"idx": idx

}

})

### OUTPUT

Processing MathHard: 100%|██████████| 103022/103022 [00:03<00:00, 33261.05it/s]这将处理 DeepMath 数据集中所有 103,022 条记录,我们通过检查处理样本数量并打印一个样本,验证处理是否正确。

python

# Verify that the number of processed rows matches the original dataset size.

print("Processed math samples:", len(math_rows))

print("\nProcessed sample:")

# Print the first processed sample to confirm it matches our target format.

print(json.dumps(math_rows[0], indent=2))

#### Output:

Processed math samples: 103022

{

"id": 0,

"question": "Evaluate the limit: \\[ \\lim_{x \\to \\infty} \\sqrt{x} \\left( \\sqrt[3]{x+1} - \\sqrt[3]{x-1} \\right) \\]",

"chain": "",

"result": "0",

"source": "mathhard",

"extra_info": {

"ground_truth": "0",

"idx": 0

}

}我们得到一个 Python 字典列表。为了提升性能和与 Hugging Face 生态系统(如 Trainer API)的兼容性,我们将其转换为datasets.Dataset对象。

python

# First, convert the list of dictionaries into a pandas DataFrame.

# Then, use `Dataset.from_pandas` to create the Hugging Face Dataset object.

# `preserve_index=False` tells the function not to add the DataFrame's index as a new column.

ds_math = Dataset.from_pandas(

pd.DataFrame(math_rows),

preserve_index=False

)现在我们对Natural Questions数据集重复这一过程。该数据集包含用户向谷歌搜索提出的真实问题及其在维基百科上的相应答案。

python

#### Loading the NQ Dataset

print("\n=== Loading FlashRAG NQ ===")

# `load_dataset` can take multiple arguments.

# The first is the dataset group, "RUC-NLPIR/FlashRAG_datasets".

# The second is the specific dataset name within that group, "nq".

nq_dataset = load_dataset(

"RUC-NLPIR/FlashRAG_datasets",

"nq",

split="train"

)成功加载后,我们将检查其中一条记录,以了解其结构和内容。

python

# Look at the first sample to understand the data format.

print("\nRaw NQ sample:")

print(json.dumps(nq_dataset[0], indent=2))

### Output:

Raw NQ sample:

{

"id": "train_0",

"question": "total number of death row inmates in the us",

"golden_answers": [

"2,718"

]

}可以看到question字段包含用户查询,golden_answers 字段包含答案列表。

NQ 的处理稍微复杂一些,我们需要进行一些数据清洗:

-

格式问题:确保每个问题结尾都带有问号以保持一致性。

-

处理答案类型:golden_answers 字段可以包含多种格式的数据(列表、数字数组、字符串等)。我们的代码需要处理所有这些情况,提取答案,并将它们转换为单一字符串。

-

多重答案加入:有些问题可能有多个有效答案。我们将它们连接成一个字符串,中间用分号分隔。

下面是处理代码:

python

print("\n=== Processing NQ ===")

# Initialize an empty list to store processed NQ records.

nq_rows = []

# Iterate through the NQ dataset with a progress bar.

for idx, item in enumerate(tqdm(nq_dataset, desc="Processing NQ")):

# Get the question, remove leading/trailing whitespace.

question = item.get("question", "").strip()

# Ensure the question ends with a '?' for consistency.

if question and not question.endswith("?"):

question += "?"

# Get the answers, defaulting to an empty list if not present.

golden_answers = item.get("golden_answers", [])

cleaned_answers = [] # This list will hold valid, string-formatted answers.

# The following block robustly handles various data types for the answers.

if isinstance(golden_answers, np.ndarray):

for x in golden_answers.flatten(): # Flatten in case of multi-dimensional array.

if x is not None and pd.notna(x):

cleaned_answers.append(str(x))

elif isinstance(golden_answers, (list, tuple)):

for x in golden_answers:

if x is not None and pd.notna(x):

cleaned_answers.append(str(x))

elif isinstance(golden_answers, str):

if golden_answers.strip():

cleaned_answers.append(golden_answers.strip())

elif isinstance(golden_answers, (int, float, np.generic)):

if not pd.isna(golden_answers):

cleaned_answers.append(str(golden_answers))

else: # Catch-all for any other types.

s = str(golden_answers).strip()

if s and s != "nan": # Avoid adding 'nan' as an answer.

cleaned_answers.append(s)

# Join all cleaned answers into a single string, separated by "; ".

final_result = "; ".join(cleaned_answers)

# Append the record in our standard format.

nq_rows.append({

"id": idx, # Temporary ID.

"question": question,

"chain": "",

"result": final_result,

"source": "nq", # Tag the source as Natural Questions.

"extra_info": {

"ground_truth": final_result,

"idx": idx

}

})我们现在检查处理样本数量并输出一个样本,验证处理是否正确。

python

# Verify the number of processed samples and check the first record.

print("\nProcessed NQ sample:")

print(json.dumps(nq_rows[0], indent=2))

### Output:

{

"id": 0,

"question": "total number of death row inmates in the us?",

"chain": "",

"result": "2,718",

"source": "nq",

"extra_info": {

"ground_truth": "2,718",

"idx": 0

}

}与math数据集类似,我们将处理后的 NQ 数据转换为 Hugging Face 数据集对象,以提高性能和与训练流程的兼容性。

python

# Convert the processed NQ data into a Hugging Face Dataset object.

ds_nq = Dataset.from_pandas(

pd.DataFrame(nq_rows),

preserve_index=False

)在两个数据集都处理并标准化后,最后一步是将它们合并为一个训练集。然后我们会对这个合并后的数据集进行洗牌,并为每条记录分配新的、唯一的 ID。

python

#### Concatenating Datasets

# `concatenate_datasets` takes a list of Dataset objects and merges them row-wise.

combined = concatenate_datasets([ds_nq, ds_math])

print("Combined size:", len(combined))

### Output:

# Combined size: 182190我们还需要做以下事情:

-

Shuffling:如果不洗牌,模型首先会看到全部 79,168 个 NQ 样本,然后是全部 103,022 个数学样本。这会影响学习过程。

-

重新索引是必要的,因为合并和洗牌后,原始的 ids 不再唯一或顺序。我们应用映射函数,将一个新的、干净的顺序 ID,从 0 分配到 N-1。

python

# The `.shuffle()` method randomizes the order of the rows in the dataset.

# Providing a `seed` ensures that the shuffle is reproducible. Anyone running this code

# with the same seed will get the exact same shuffled order.

combined = combined.shuffle(seed=42)

# The `.map()` method applies a function to each element of the dataset.

# Here, we use a lambda function that ignores the sample (`_`) and uses the index (`idx`).

# `with_indices=True` provides the index of each row to our function.

# This effectively replaces the old 'id' column with a new one from 0 to len-1.

combined = combined.map(

lambda _, idx: {"id": idx},

with_indices=True

)最后,我们将完成的训练数据集保存为文件。我们使用 Parquet 格式,这是一种高效的列向数据格式,非常适合大型数据集。它被广泛支持,通常比 CSV 或 JSON 等格式更快。

python

# Construct the full output file path using the directory we defined earlier.

output_path = os.path.join(train_output_dir, "combined_train.parquet")

# Use the `.to_parquet()` method to save the dataset.

combined.to_parquet(output_path)让我们验证每个最终数据集中的记录总数。

python

# The length of our in-memory Dataset objects gives the total number of samples.

train_count = len(combined)

print(f"\nTotal train samples: {train_count}")

### Output:

Total train samples: 182190我们成功地将 DeepMath-103K 和 Natural Questions 数据集处理并合并为一个包含 182,190 个样本的单一训练集。该数据集现已准备好用于训练我们的多智能体系统。

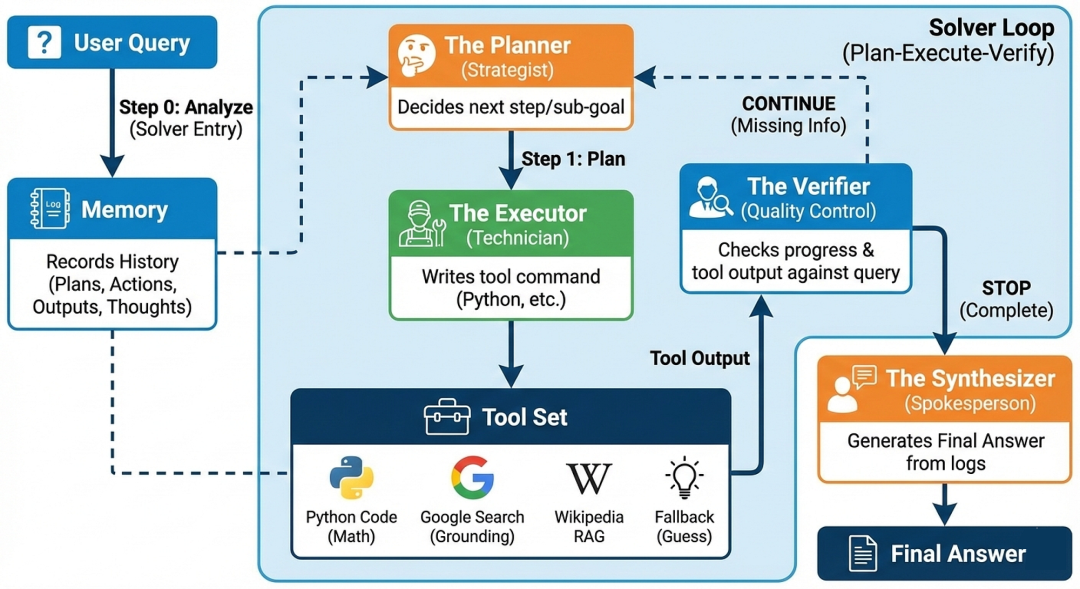

三、构建多智能体架构

智能体工作流,或称多智能体系统,是一种由一系列专业组件(或称"智能体")协作解决问题的框架。这种框架不再依赖单一的大语言模型调用来解决复杂查询,而是将问题拆解成可管理的阶段:

-

Planning: 智能体分析初始查询,制定高层次计划或决定立即的下一个最佳行动。

-

Tool Use: 智能体选择并使用专用工具(如代码解释器、网页搜索或数据库查询工具)来收集信息或执行作。

-

Execution: 专用组件生成精确命令以运行所选工具。

-

Observation & Reflection: 智能体观察工具执行结果,并反思目标是否已达成,或是否需要更多步骤。

-

Iteration: 该过程循环进行,直到验证智能体确认查询已被完全回答。

-

Synthesis: 最后,智能体会将整个过程中收集的所有信息综合成一个全面的最终答案。

我们的目标是改进planning阶段 ,监控整个工作流程和每次迭代后的变更。这样我们可以减少幻觉和偏离轨道的几率。为了优化planning阶段,我们需要能够访问能够在整个训练阶段学习的模型权重。因此我们将使用基于 vLLM 的开源服务器,在吞吐量和延迟方面都非常高效。

这里使用的是 1xA100 80GB 的 GPU,planning阶段用 Qwen/Qwen2.5-7B-Instruct 模型,当然可以根据不同任务,选择不同的AI大模型。

下面,使用 pip install vllm 安装 vllm,然后启动服务器。

python

# Start the vLLM server with the same model name as MODEL_NAME below

# This can be a fine-tuned model or a base model.

vllm serve Qwen/Qwen2.5-7B-Instruct \

--api-key a-secret-key \ # API key for OpenAI-compatible auth

--port 8000 \ # Port for the local server

--max-model-len 8192 # Maximum context length我们首先要搭建环境,包括安装所需的 Python 库,导入必要的模块,并配置连接到我们的 vLLM 服务器和其他外部服务。

!pip install -q openai pydantic tenacity beautifulsoup4 requests wikipedia google-genai numpy json_repair在这里,我们导入所有必要的模块并定义核心配置变量,包括 vLLM 服务器的 URL、模型名称以及我们工具所需 API 密钥的占位符。

python

# Standard library imports

import os

import json

import re

import sys

import inspect

import threading

from io import StringIO

from typing import Any, Dict, List, Union, Optional, Tuple

from abc import ABC, abstractmethod

from contextlib import contextmanager

# Pydantic and API Libraries

from pydantic import BaseModel

from openai import OpenAI

from tenacity import retry, stop_after_attempt, wait_random_exponential

import requests

from bs4 import BeautifulSoup

import wikipedia

from google import genai

from google.genai import types

import numpy as np

import json_repair # For fixing malformed JSON from the LLM现在我们需要设置核心配置,用于连接 vLLM 服务器,并定义规划阶段使用的模型。

python

# --- Core Configuration ---

# The base URL where your vLLM server is running.

VLLM_BASE_URL = "http://localhost:8000"

# The API key for your vLLM server (can be a dummy key if not required by your setup).

VLLM_API_KEY = "a-secret-key"

# The exact name of the model being served by vLLM or your fine-tuned model. This should match the model name configured in your vLLM server.

MODEL_NAME = "Qwen/Qwen2.5-7B-Instruct"

# --- Environment Variables for Tools ---

# IMPORTANT: You must provide your own API keys for the search tools to function.

# If you leave these as placeholders, the corresponding tools will operate in a 'mock' mode.

os.environ['OPENAI_API_KEY'] = 'YOUR_OPENAI_API_KEY_HERE' # Needed for embeddings in Web_Search_Tool

os.environ['GOOGLE_API_KEY'] = 'YOUR_GOOGLE_API_KEY_HERE' # Needed for Google_Search_Tool为了与大模型交互,我们将创建一个包装类。这是一个重要的设计模式,会带来多项好处:

- 抽象: 它隐藏了 API 调用的具体细节,使我们可以轻松替换后端(例如从vLLM 切换到其他提供者),而无需更改其余代码。

- 鲁棒性: 我们可以内置功能,比如对失败的 API 调用自动重试。

- 功能增强: 我们可以添加自定义逻辑,比如强制 LLM 生成结构化 JSON 输出。

3.1 定义智能体的Thoughts

我们从一个抽象基类(ABC)开始,定义一个标准接口,所有 LLM 引擎包装器都必须遵循它。

这确保了一致性和可互换性。任何继承自 EngineLM 类都必须实现generate方法。

python

class EngineLM(ABC):

"""An abstract base class for a language model engine."""

def __call__(self, *args, **kwargs):

"""Allows the class instance to be called like a function, making the syntax cleaner."""

return self.generate(*args, **kwargs)

@abstractmethod

def generate(self, prompt, system_prompt=None, **kwargs):

"""The core method that must be implemented by any subclass. It takes a prompt and generates a response."""

pass在这里,我们定义了 ChatVLLM 类,它是 EngineLM 接口的具体实现。该类负责格式化提示、调用 vLLM 服务器的 API 以及解析响应。

python

class ChatVLLM(EngineLM):

"""A language model engine that connects to a vLLM server with an OpenAI-compatible API."""

def __init__(self, model_string, base_url, api_key, temperature=0.0):

"""Initializes the engine with connection details and the OpenAI client."""

self.model_string = model_string

self.base_url = base_url

self.api_key = api_key

self.temperature = temperature

# The OpenAI client is configured to point to our local vLLM server.

self.client = OpenAI(base_url=self.base_url, api_key=self.api_key)

self.default_system_prompt = "You are a helpful, creative, and smart assistant."

@retry(wait=wait_random_exponential(min=1, max=5), stop=stop_after_attempt(3))

def generate(self, content: Union[str, List[Union[str, bytes]]], system_prompt=None, response_format=None, **kwargs):

"""Generates a response from the LLM, with robust JSON parsing and retry logic."""

# Use the provided system prompt or fall back to the default.

sys_prompt_arg = system_prompt if system_prompt else self.default_system_prompt

user_content = content

# Format the request in the standard chat completions message format.

messages = [

{"role": "system", "content": sys_prompt_arg},

{"role": "user", "content": user_content}

]

# Prepare the parameters for the API request.

request_params = {

"model": self.model_string,

"messages": messages,

"temperature": self.temperature,

"max_tokens": kwargs.get("max_tokens", 4096),

}

# This block is key for structured output. If a Pydantic model is provided,

# we serialize its JSON schema and append it to the prompt, instructing the LLM

# to format its response accordingly. This is a form of "prompt engineering".

if response_format and issubclass(response_format, BaseModel):

json_schema = response_format.model_json_schema()

schema_instruction = (f"\n\nPlease format your entire response as a single JSON object that strictly adheres to the following Pydantic schema.\n"+

f"Do not include any other text, explanations, or markdown formatting outside of the JSON object.\n\n"+

f"Schema:\n```json\n{json.dumps(json_schema, indent=2)}\n```")

# Append the instruction to the last user message.

if isinstance(request_params['messages'][-1]['content'], str):

request_params['messages'][-1]['content'] += schema_instruction

print(f"\n{'─'*50}\n[LLM_INPUT] Sending request to model: {self.model_string}\n{'─'*50}")

try:

# Execute the API call to the vLLM server.

response = self.client.chat.completions.create(**request_params)

response_text = response.choices[0].message.content

except Exception as e:

print(f"[LLM ERROR] API Call Failed: {e}")

raise e

print(f"\n{'─'*50}\n[LLM_OUTPUT] Raw response from model:\n{response_text}\n{'─'*50}")

# If we requested a structured format, we now parse the response.

if response_format and issubclass(response_format, BaseModel):

# First, try to extract the JSON from within markdown code blocks (e.g., ```json ... ```).

match = re.search(r"```json\s*(.*?)\s*```", response_text, re.DOTALL)

json_str = match.group(1) if match else response_text

try:

# Attempt to parse the JSON strictly using the Pydantic model. This enforces the schema.

parsed_obj = response_format.model_validate_json(json_str.strip())

print("[LLM Engine] Successfully parsed structured output (Strict).")

return parsed_obj

except Exception as e:

# If strict parsing fails (e.g., due to trailing commas, missing quotes), we attempt to repair it.

print(f"[LLM Engine info] Strict parsing failed ({str(e)[:100]}...). Attempting repair...")

try:

# The `json_repair` library can fix many common LLM-generated JSON errors.

decoded_obj = json_repair.loads(json_str)

# After repairing, we validate the repaired object against the Pydantic model again.

parsed_obj = response_format.model_validate(decoded_obj)

print("[LLM Engine] Successfully parsed structured output (Repaired).")

return parsed_obj

except Exception as e2:

# If even the repair fails, we log a critical warning. Returning the raw text allows

# the agent to potentially see the error, but it might crash the next step.

print(f"[LLM Engine CRITICAL WARNING] Failed to parse output even with repair: {e2}")

return response_text

# If no structured format was requested, return the raw text response.

return response_text在我们的多智能体系统中,ChatVLLM 类将被所有智能体(规划者、执行者、验证者)用来与 LLM 交互。通过将 API 交互逻辑集中到该类,我们确保所有智能体都能享受到同样强大的解析和错误处理功能,并且如果需要,我们未来可以轻松更新 LLM 后端。

接下来,我们定义一个工厂函数来创建我们的 LLM 引擎实例。这是一种常见的设计模式,提供灵活性和封装性。如果以后决定切换到其他 LLM 提供商或添加额外配置选项,我们可以在这个函数中完成,而无需更改代码库的其他部分。

python

def create_llm_engine(model_string: str, **kwargs) -> ChatVLLM:

"""Factory function to create an instance of our vLLM chat engine."""

return ChatVLLM(model_string=model_string, base_url=VLLM_BASE_URL, api_key=VLLM_API_KEY, temperature=kwargs.get('temperature', 0.0))我们通过创建一个 ChatVLLM 引擎实例并发送一个简单的提示符来测试与 vLLM 服务器的连接。这将确认我们的设置正确,并且能够成功与模型通信。

python

# --- Test Connection ---

print("--- Testing vLLM engine connection ---")

# Create an engine instance.

test_engine = create_llm_engine(MODEL_NAME)

# Send a simple message to see if we get a response.

test_response = test_engine.generate("Ping")

print(f"\n✅ Connection successful!")输出结果,如下所示:

python

# Output:

--- Testing vLLM engine connection ---

──────────────────────────────────────────────────

[LLM_INPUT] Sending request to model: Qwen/Qwen2.5-7B-Instruct

──────────────────────────────────────────────────

──────────────────────────────────────────────────

[LLM_OUTPUT] Raw response from model:

Pong

──────────────────────────────────────────────────

✅ Connection successful!可以看到,连接成功了。现在我们可以着手定义智能体之间以及与工具沟通时使用的结构化输出格式。

我们可以将这些结构化格式定义为Pydantic,有如下优势:

- 类型安全: 确保数据符合预期类型(例如,字段是string,而非list)。

- 验证: 自动检查 LLM 数据是否有效且完整。

- 自我说明: 模型本身作为清晰的文档,说明每个组件期望输入和期望输出。

- 可靠的沟通: 它们构成了我们系统中不同由 LLM 驱动的"角色"之间的合同(Planner、Verifier等)。

在多智能体工作流程中,任何工具或规划的第一步是分析用户的查询。这种初步分析帮助规划者理解当前任务,识别相关技能和工具,并考虑可能影响其解决问题方式的特殊因素。

python

class QueryAnalysis(BaseModel):

"""Represents the initial breakdown and analysis of the user's query."""

concise_summary: str

required_skills: str

relevant_tools: str

additional_considerations: str查询执行后,在主循环的每次迭代中,Planner 智能体都会决定下一步动作,基于当前的决策,明确使用哪种工具及其用途。

python

class NextStep(BaseModel):

"""Defines the plan for the next action to be taken in the agent's loop."""

justification: str

context: str

sub_goal: str

tool_name: str一旦选定了一个工具和子目标,执行智能体的工作就是生成运行该工具的精确代码,执行的输出结果也会结构化。

python

class ToolCommand(BaseModel):

"""Represents the generated command for a specific tool, ready for execution."""

analysis: str

explanation: str

command: str执行动作后,Verifier智能体会基于当前状态进行响应。该模型会总结:智能体是否应该停止,还是需要继续进行更多步骤?

python

class MemoryVerification(BaseModel):

"""Represents the verifier's analysis of whether the task is complete."""

analysis: str

stop_signal: bool现在我们需要创建一个由 Wikipedia_Search_Tool 内部使用的专用模型。在搜索维基百科时,它会获得可能的页面标题列表,并调用带有这种响应格式的 LLM 去选择最相关的页面。

python

class Select_Relevant_Queries(BaseModel):

"""A specialized model for the Wikipedia tool to select relevant search results."""

matched_queries: list[str]

matched_query_ids: list[int]智能体需要一种方式来记住自己做过什么。Memory 类作为一个简单的日志簿,记录每一个动作、所用工具、执行的命令以及获得的结果。

这些历史会在后续步骤反馈给规划者和验证者智能体,为他们做出明智决策提供必要的背景信息。让我们实现这个记忆结构。

python

class Memory:

"""A simple class to store the history of actions taken by the agent."""

def __init__(self):

"""Initializes an empty dictionary to store actions."""

self.actions: Dict[str, Dict[str, Any]] = {}

def add_action(self, step_count: int, tool_name: str, sub_goal: str, command: str, result: Any) -> None:

"""Adds a new action to the memory log."""

self.actions[f"Action Step {step_count}"] = {

'tool_name': tool_name,

'sub_goal': sub_goal,

'command': command,

'result': result

}

def get_actions(self) -> Dict[str, Dict[str, Any]]:

"""Retrieves the entire history of actions."""

return self.actions工具输出可以是复杂的对象,也可以是非常长的字符串。在将结果存储到记忆(并反馈到 LLM 有限的上下文窗口)之前,必须将其序列化为干净、截断的 JSON 格式。以下函数可以递归进行处理该转换。

python

def make_json_serializable_truncated(obj, max_length: int = 2000):

"""Recursively converts an object into a JSON-serializable and truncated format."""

# Handle basic, JSON-native types.

if isinstance(obj, (int, float, bool, type(None))): return obj

# Truncate long strings.

elif isinstance(obj, str): return obj if len(obj) <= max_length else obj[:max_length - 3] + "..."

# Recursively process dictionaries.

elif isinstance(obj, dict): return {str(k): make_json_serializable_truncated(v, max_length) for k, v in obj.items()}

# Recursively process lists.

elif isinstance(obj, list): return [make_json_serializable_truncated(element, max_length) for element in obj]

# For all other types, convert to a string representation and truncate.

else:

result = repr(obj)

return result if len(result) <= max_length else result[:max_length - 3] + "..."工具赋予智能体力量,它们是允许智能体与外部世界交互、执行计算或访问超出自身认知范围的信息。

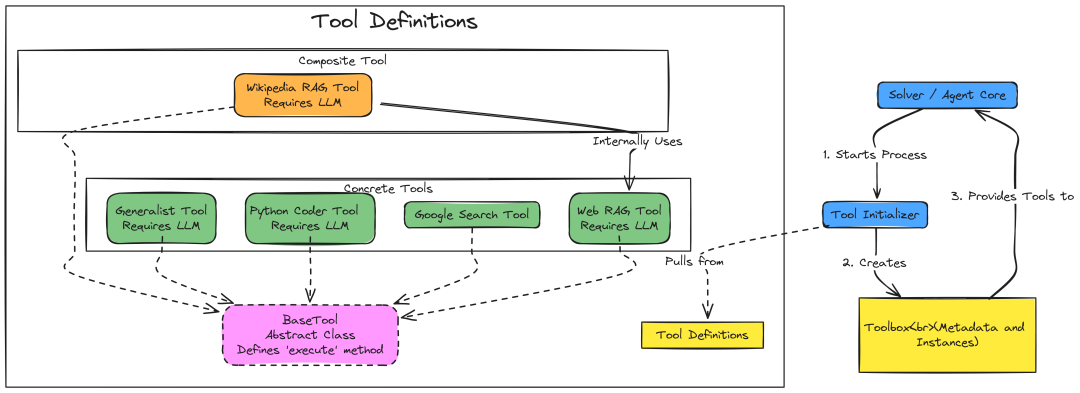

3.2 创建工具集

我们首先定义一个 BaseTool 抽象类,确保所有工具结构一致,并暴漏其实现功能的元数据。

这些元数据对规划智能体决定适合特定任务的工具至关重要。

python

class BaseTool(ABC):

"""An abstract base class that defines the standard interface for all tools."""

# A flag to indicate if the tool requires an LLM engine to function.

require_llm_engine = False

def __init__(self, tool_name=None, tool_description=None, tool_version=None, input_types=None, output_type=None, demo_commands=None, user_metadata=None, model_string=None):

"""Initializes the tool with its descriptive metadata."""

self.tool_name, self.tool_description, self.tool_version, self.input_types, self.output_type, self.demo_commands, self.user_metadata, self.model_string = tool_name, tool_description, tool_version, input_types, output_type, demo_commands, user_metadata, model_string

def get_metadata(self) -> dict:

"""Returns all the tool's metadata in a dictionary. This is fed to the Planner agent."""

return {"tool_name": self.tool_name, "tool_description": self.tool_description, "tool_version": self.tool_version, "input_types": self.input_types, "output_type": self.output_type, "demo_commands": self.demo_commands, "user_metadata": self.user_metadata, "require_llm_engine": self.require_llm_engine}

def set_custom_output_dir(self, output_dir):

"""A placeholder method for tools that might need to save files."""

pass

@abstractmethod

def execute(self, *args, **kwargs):

"""The core method where the tool's logic is implemented. Must be overridden by subclasses."""

raise NotImplementedError在我们的 BaseTool 中,我们定义了所有工具必须具备的结构和元数据。每个工具都必须实现执行方法, 该方法包含工具的实际逻辑。

现在,我们可以通过继承 BaseTool 来实现特定工具。让我们先从一个简单的通用工具开始,使用大型语言模型直接回答查询。

python

class Base_Generator_Tool(BaseTool):

"""A general-purpose tool that uses an LLM to answer a query directly."""

# This tool's primary function is to call an LLM, so it requires an engine.

require_llm_engine = True

def __init__(self, model_string="gpt-4o-mini"):

"""Initializes the tool's metadata and its own LLM engine."""

super().__init__(

tool_name="Generalist_Solution_Generator_Tool",

tool_description="A generalized tool that takes query from the user, and answers the question step by step to the best of its ability.",

tool_version="1.0.0",

input_types={"query": "str"},

output_type="str",

user_metadata={

"limitation": "The Generalist_Solution_Generator_Tool may provide hallucinated or incorrect responses.",

"best_practice": "Use for general queries. Verify important information from its responses."

}

)

self.llm_engine = create_llm_engine(model_string, temperature=0.0)

def execute(self, query, **kwargs):

"""Executes the tool by passing the query directly to its LLM engine."""

return self.llm_engine.generate([query])该工具允许智能体编写并执行 Python 代码。这对于涉及计算、数据处理或逻辑操作的任务来说极为强大。主要实现的安全功能如下:

- Sandboxing: 代码通过 exec()在受控的空作用域中执行,以防止其访问或修改主程序的状态。

- Timeout: 定时器防止代码无限运行,这对于处理无限循环或长时间计算至关重要。

- Output Capturing: 从执行代码中可以捕获任何print语句。

python

class TimeoutException(Exception):

"""Custom exception to be raised when an operation times out."""

pass

@contextmanager

def timeout(seconds):

"""A context manager to enforce a timeout on a block of code."""

# Define a function that will be called by the timer to raise the exception.

def raise_timeout(signum, frame):

raise TimeoutException("Code execution timed out")

# Use a threading.Timer to run the raise_timeout function after a delay.

timer = threading.Timer(seconds, lambda: raise_timeout(None, None))

timer.start()

try:

# The 'yield' passes control back to the 'with' block.

yield

finally:

# This code always runs, whether the 'with' block finished or an exception occurred.

# It's crucial to cancel the timer to prevent the timeout from firing later.

timer.cancel()在timeout实现中,我们使用threading.Timer定义函数,并设置在指定秒数后触发TimeoutException。 timeout上下文管理器在进入代码块时启动计时器,并确保在退出时取消,防止意外超时。

现在我们可以利用这种超时机制来实现由LLM生成的 Python 代码的 Python_Coder_Tool。工具会提示 LLM 根据用户查询生成代码片段,从响应中提取代码,然后在沙盒环境中执行,同时捕获任何输出。

python

class Python_Coder_Tool(BaseTool):

"""A tool to generate and execute Python code in a sandboxed environment."""

require_llm_engine = True

def __init__(self, model_string="gpt-4o"):

super().__init__(

tool_name="Python_Code_Generator_Tool",

tool_description="A tool that generates and executes simple Python code snippets for basic arithmetical calculations and math-related problems.",

tool_version="1.0.0",

input_types={"query": "str"},

output_type="dict",

user_metadata={

"limitations": "Restricted to basic Python arithmetic and built-in math functions. Cannot use external libraries, file I/O, or network requests. Execution times out after 10 seconds.",

"best_practices": "Provide clear queries with all necessary numerical inputs. Good for math and logic problems."

}

)

self.llm_engine = create_llm_engine(model_string, temperature=0.0)

def execute(self, query, **kwargs):

# 1. Prompt an LLM to generate the Python code.

task_description = "Given a query, generate a Python code snippet that performs the specified operation. Ensure to print the final result. The final output should be presented in the following format:\n\n```python\n<code snippet>\n```"

full_prompt = f"Task:\n{task_description}\n\nQuery:\n{query}"

response = self.llm_engine.generate(full_prompt)

# 2. Extract the code from the LLM's response.

match = re.search(r"```python\s*(.*?)\s*```", response, re.DOTALL)

if not match: return {"error": "No Python code block found in the response", "raw_response": response}

code_snippet = match.group(1).strip()

# 3. Execute the code in a safe, controlled environment.

output_capture = StringIO() # Create an in-memory text buffer to capture print statements.

old_stdout, old_stderr = sys.stdout, sys.stderr # Store the original stdout/stderr

local_vars = {} # A dictionary to hold variables created by the executed code.

try:

# Redirect stdout and stderr to our in-memory buffer.

sys.stdout = sys.stderr = output_capture

with timeout(10): # Enforce a 10-second timeout.

# `exec` runs the code. We provide empty global and a local dict for the scope.

exec(code_snippet, {}, local_vars)

printed_output = output_capture.getvalue().strip()

# Return the captured output and any variables created by the code.

return {"printed_output": printed_output, "variables": {k: repr(v) for k, v in local_vars.items() if not k.startswith('__')}}

except TimeoutException as e: return {"error": str(e), "code": code_snippet}

except Exception as e: return {"error": str(e), "code": code_snippet, "captured_output": output_capture.getvalue().strip()}

finally:

# CRITICAL: Always restore the original stdout and stderr.

sys.stdout, sys.stderr = old_stdout, old_stderr该工具使客服能够在网上实时搜索信息。它使用了 Google Gemini API 内置的grounding功能,这是一种简单且有效的基于搜索结果生成grounding的方法。如果没有提供 GOOGLE_API_KEY,它将以模拟模式运行,返回占位字符串。

python

class Google_Search_Tool(BaseTool):

"""A tool for performing web searches using Google's Gemini API with grounding."""

def __init__(self, model_string="gemini-1.5-flash"):

super().__init__(

tool_name="Ground_Google_Search_Tool",

tool_description="A web search tool powered by Google's Gemini AI that provides real-time information.",

tool_version="1.0.0",

input_types={"query": "str"},

output_type="str",

user_metadata={"limitations": "Only suitable for general information search.", "best_practices": "Choose for question-type queries."}

)

self.search_model = model_string

# Check for a valid API key. If not present, set client to None to enable mock mode.

if not os.getenv("GOOGLE_API_KEY") or 'YOUR_GOOGLE_API_KEY' in os.getenv("GOOGLE_API_KEY"):

print("WARNING: Google_Search_Tool is in mock mode. Provide a GOOGLE_API_KEY to enable.")

self.client = None

else:

# We'll use the recommended `genai.GenerativeModel` for modern usage, but the logic is similar.

genai.configure(api_key=os.getenv("GOOGLE_API_KEY"))

self.client = genai.GenerativeModel(self.search_model)

def execute(self, query: str, add_citations: bool = True, **kwargs):

# If in mock mode, return a placeholder response.

if not self.client:

return f"[Mock Response] Search results for: '{query}'"

try:

# Configure the Gemini API to use its internal Google Search tool for grounding.

grounding_tool = genai.Tool(

google_search=genai.GoogleSearch()

)

# Generate content with the search tool enabled.

response = self.client.generate_content(

query,

tools=[grounding_tool],

)

return response.text

except Exception as e:

return f"Error during Google Search: {e}"该工具实现了完整的检索增强生成(RAG)流水线,基于特定 URL 的内容回答查询。它比一般的网络搜索更有针对性。流程如下:

-

Fetch & Parse: 从网址下载 HTML,提取所有干净的文本。

-

Chunk: 把长文拆分成更小、易于管理的段落。

-

Embed: 使用嵌入模型(这里是 OpenAI 的)将用户查询和每个文本块转换为数值向量。

-

Retrieve: 计算查询向量与所有块向量之间的余弦相似度,以找到与查询语义上最相关的块。

-

Synthesize: 将原始查询和最相关的块内容传递给 LLM,要求其仅根据提供的上下文综合最终答案。

python

class Web_Search_Tool(BaseTool):

"""Answers questions by retrieving info from a website using a RAG pipeline."""

require_llm_engine = True

def __init__(self, model_string="gpt-4o-mini"):

super().__init__(tool_name="Web_RAG_Search_Tool", tool_description="Answers questions by retrieving info from a website using RAG.", tool_version="1.0.0", input_types={"query": "str", "url": "str"}, output_type="str", user_metadata={"limitation": "May not work with JS-heavy sites or those requiring authentication.", "best_practice": "Use specific, targeted queries on text-rich websites."})

self.llm_engine = create_llm_engine(model_string, temperature=0.0)

# This tool requires an OpenAI key for its embedding model.

if not os.getenv("OPENAI_API_KEY") or 'YOUR_OPENAI_API_KEY' in os.getenv("OPENAI_API_KEY"):

print("WARNING: Web_Search_Tool is in mock mode. Provide an OPENAI_API_KEY to enable embeddings."); self.embedding_client = None

else: self.embedding_client = OpenAI()

def execute(self, query, url, **kwargs):

if not self.embedding_client: return f"[Mock Response] RAG summary for query '{query}' on URL '{url}'"

try:

# 1. Fetch & Parse: Use requests and BeautifulSoup to get text from the URL.

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'}

content = BeautifulSoup(requests.get(url, headers=headers, timeout=10).content, 'html.parser').get_text(separator='\n', strip=True)

# 2. Chunk: Split the text into overlapping chunks of 200 words.

words = content.split(); chunks = [" ".join(words[i:i+200]) for i in range(0, len(words), 180)]

# 3. Embed: Get embeddings for the query and all chunks in a single API call.

embeddings = self.embedding_client.embeddings.create(input=[query] + chunks, model="text-embedding-3-small").data

query_embedding, chunk_embeddings = np.array(embeddings[0].embedding), np.array([e.embedding for e in embeddings[1:]])

# 4. Retrieve: Calculate cosine similarity and get the top 10 most relevant chunks.

similarities = [np.dot(query_embedding, ce) / (np.linalg.norm(query_embedding) * np.linalg.norm(ce)) for ce in chunk_embeddings]

top_chunks = [chunks[i] for i in np.argsort(similarities)[-10:][::-1]]

reference_info = "\n".join([f"[{i+1}] {chunk}" for i, chunk in enumerate(top_chunks)])

# 5. Synthesize: Prompt a separate LLM to generate an answer based on the retrieved chunks.

summary_prompt = f"You are an expert AI assistant. Your task is to provide a clear, concise, and accurate answer to the user's query based **exclusively** on the provided reference information.\n\n## Step-by-Step Instructions\n1. **Analyze the Query:** First, fully understand the user's query and identify the specific information being asked for.\n2. **Scan for Relevance:** Read through each numbered chunk in the reference information. Identify all chunks that contain information directly relevant to answering the query.\n3. **Extract Key Facts & Synthesize:** From the relevant chunks, extract only the key facts and figures needed. Synthesize these extracted facts into a comprehensive, single-paragraph answer.\n\n---\n## Your Turn\n\n### User Query\n{query}\n\n### Reference Information\n{reference_info}\n\n### Output\n"

return self.llm_engine.generate(summary_prompt)

except Exception as e: return f"Error in Web_Search_Tool: {e}"这是一种复合工具或元工具,它可以协调其他组件来执行其任务。其过程如下:

-

Search: 使用维基百科库获取与用户查询相关的潜在页面标题列表。

-

Select: 调用LLM (配合 Select_Relevant_Queries 的 Pyndantic 模型)智能地筛选出最有潜力的候选者。

-

Process: 对于每个选定页面,它调用 Web_Search_Tool对该维基百科页面执行完整的 RAG 流程。

-

Aggregate: 它返回一个结构化词典,包含从所有相关页面检索到的信息。

python

class Wikipedia_Search_Tool(BaseTool):

"""A composite tool that searches Wikipedia, selects relevant pages, and applies RAG."""

require_llm_engine = True

def __init__(self, model_string="gpt-4o-mini"):

super().__init__(tool_name="Wikipedia_RAG_Search_Tool", tool_description="Searches Wikipedia and uses RAG to get grounded information from pages.", tool_version="1.0.0", input_types={"query": "str"}, output_type="dict", user_metadata={"limitation": "Wikipedia only. Accuracy depends on Wikipedia content. Filtering of pages depends on LLM performance.", "best_practice": "Use specific, targeted queries. Trust the 'relevant_pages' results."})

self.llm_engine = create_llm_engine(model_string, temperature=0.0)

# This tool internally uses another tool.

self.web_rag_tool = Web_Search_Tool(model_string=model_string)

def execute(self, query, **kwargs):

try:

# 1. Search: Get up to 10 potential page titles from the Wikipedia API.

search_results = wikipedia.search(query, results=10)

if not search_results: return {"error": f"No results found for '{query}'"}

# 2. Select: Prompt an LLM to choose the most relevant titles from the search results.

query_candidates_str = "\n".join([f"{i}. {query}" for i, query in enumerate(search_results)])

prompt = f"""You are an expert AI assistant. Your task is to identify and select the most relevant queries from a list of Wikipedia search results that are most likely to address the user's original question.\n\n## Input\n\nOriginal Query: `{query}`\nQuery Candidates from Wikipedia Search: `{query_candidates_str}`\n\n## Instructions\n1. Carefully read the original query and the list of query candidates.\n2. Select the query candidates that are most relevant to the original query.\n3. Return up to 3 most relevant queries."""

selection = self.llm_engine.generate(prompt, response_format=Select_Relevant_Queries)

# Fallback logic in case the LLM fails to produce a valid structured response.

if not isinstance(selection, Select_Relevant_Queries):

print("Warning: Failed to parse relevant queries, using first result as fallback.")

selection = Select_Relevant_Queries(matched_queries=[search_results[0]], matched_query_ids=[0])

# 3. Process & Aggregate: Loop through the selected titles.

relevant_pages = []

for title in selection.matched_queries:

try:

# Get the full page object from the Wikipedia API.

page = wikipedia.page(title, auto_suggest=False)

# Use the Web_RAG_Tool to process the content of the page's URL.

info = self.web_rag_tool.execute(query=query, url=page.url)

relevant_pages.append({"title": title, "url": page.url, "retrieved_information": info})

except Exception as page_e:

# Handle cases where a page might be a disambiguation page or cause an error.

relevant_pages.append({"title": title, "url": f"https://en.wikipedia.org/wiki/{title.replace(' ', '_')}", "error": str(page_e)})

# Return a structured dictionary of the findings.

return {"query": query, "relevant_pages": relevant_pages}

except Exception as e: return {"error": f"Wikipedia search failed: {e}"}现在我们把所有线索都拼凑起来。编排引擎负责管理智能体的生命周期,从初始化工具到运行主要问题解决循环。

该类负责智能体工具箱的设置,它会收集所需工具的列表,实例化各自的类,并存储工具实例(用于执行)和其元数据(用于 Planner 代理)。这种关注点的分离使Solver类保持更清晰。

python

class Initializer:

"""Handles the loading and configuration of all tools for the agent."""

def __init__(self, enabled_tools: List[str], tool_engine: List[str], model_string: str):

self.toolbox_metadata, self.tool_instances_cache, self.available_tools = {}, {}, []

print("\n==> Initializing agent..."); self._set_up_tools(enabled_tools, tool_engine, model_string)

def _set_up_tools(self, enabled_tools: List[str], tool_engine: List[str], model_string: str):

print(f"Enabled tools: {enabled_tools} with engines: {tool_engine}")

# A registry of all available tool classes.

all_tool_classes = {

"Base_Generator_Tool": Base_Generator_Tool,

"Python_Coder_Tool": Python_Coder_Tool,

"Google_Search_Tool": Google_Search_Tool,

"Wikipedia_RAG_Search_Tool": Wikipedia_Search_Tool

}

# Loop through the list of tools to enable.

for i, tool_class_name in enumerate(enabled_tools):

if tool_class_name in all_tool_classes:

tool_class = all_tool_classes[tool_class_name]

# Determine which LLM engine this tool instance should use.

engine = tool_engine[i] if i < len(tool_engine) else model_string

print(f" -> Loading '{tool_class_name}' with engine '{engine}'...")

# Create an instance of the tool class.

instance = tool_class() if engine == "Default" else tool_class(model_string=engine)

ext_name = instance.tool_name

# Store the instance for execution and its metadata for planning.

self.tool_instances_cache[ext_name] = instance

self.toolbox_metadata[ext_name] = instance.get_metadata()

self.available_tools.append(ext_name)

print(f" ✓ Loaded and cached as '{ext_name}'")

print(f"\n✅ Tool setup complete. Final available tools: {self.available_tools}")Solver类是主编排器,创建了整个智能体的工作流程。它初始化所有必要的组件,并包含执行多步推理循环的主要solve方法。

init 方法通过为每个任务创建独立的 LLM 引擎实例,来设置智能体的不同"角色"。本文中虽然它们是同一个模型,但在更先进的系统中,它们可能是专门用于规划、验证或代码生成的不同模型。

python

class Solver:

"""The main class that orchestrates the entire agentic problem-solving workflow."""

def __init__(self, planner_main_engine, planner_fixed_engine, verifier_engine, executor_engine, enabled_tools, tool_engine, max_steps=5):

"""Initializes all components of the agent: LLM engines, tools, and memory."""

self.max_steps = max_steps

print("\n==> Initializing LLM engines for different roles...")

# Initialize an LLM engine for each distinct role in the workflow.

self.llm_planner_main = create_llm_engine(planner_main_engine); print(f" - Planner (Main): {planner_main_engine}")

self.llm_planner_fixed = create_llm_engine(planner_fixed_engine); print(f" - Planner (Fixed/Aux): {planner_fixed_engine}")

self.llm_verifier = create_llm_engine(verifier_engine); print(f" - Verifier: {verifier_engine}")

self.llm_executor = create_llm_engine(executor_engine); print(f" - Executor: {executor_engine}")

# Use the Initializer class to set up the toolbox.

initializer = Initializer(enabled_tools, tool_engine, planner_main_engine)

self.tool_instances_cache = initializer.tool_instances_cache

self.toolbox_metadata = initializer.toolbox_metadata

self.available_tools = initializer.available_tools

# Initialize the agent's memory.

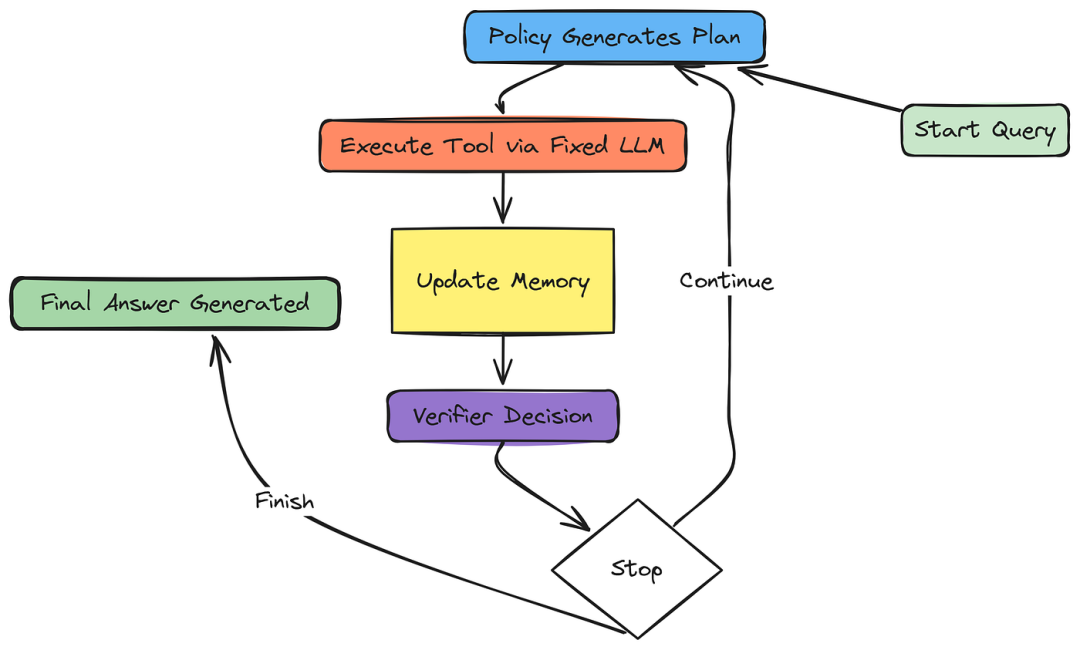

self.memory = Memory()这是核心逻辑,solve方法会接收用户查询并执行智能体循环,直到问题解决或达到最大步数。让我们逐阶段分析循环。

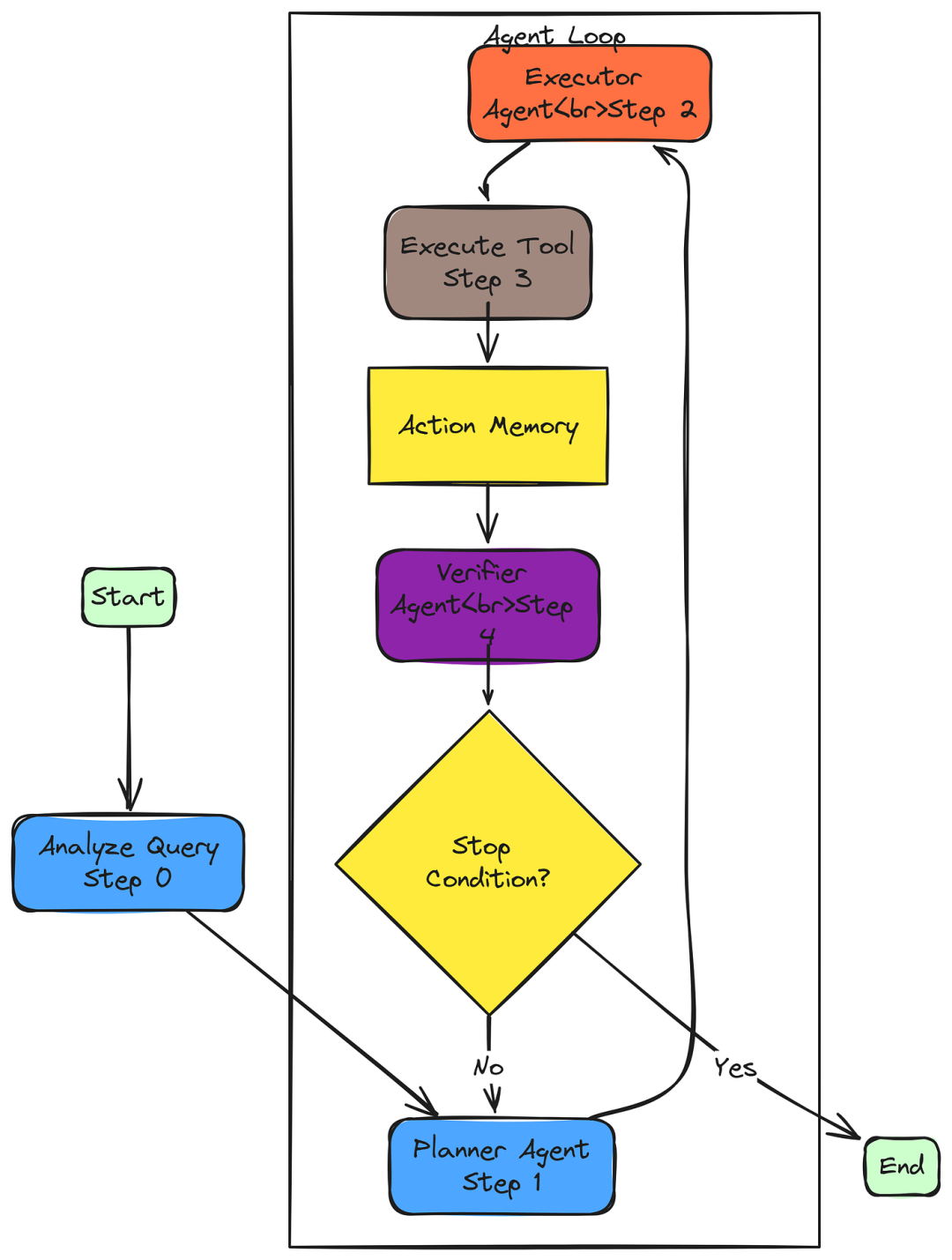

3.3 Solver, Planner, Executor, and Verifier

在循环开始前,智能体会对查询进行一次性分析,对用户的意图、所需技能以及可能相关的工具进行高层次理解。

本分析为后续所有步骤提供了背景。

python

def solve_step_0_analyze(self, question: str) -> QueryAnalysis:

"""Performs the initial analysis of the user's query."""

print(f"\n{'='*80}\n==> 🔍 Received Query: {question}\n{'='*80}")

# Clear memory from any previous runs.

self.memory.actions.clear()

print("\n==> 🔍 Step 0: Query Analysis (using planner_fixed_engine)")

# This is the prompt that instructs the LLM on how to analyze the query.

# It's given the query, the list of available tools, and the detailed tool metadata.

prompt_analyze_query = f"""Task: Analyze the given query to determine necessary skills and tools.

Inputs:

- Query: {question}

- Available tools: {json.dumps(self.available_tools)}

- Metadata for tools: {json.dumps(self.toolbox_metadata, indent=2)}

Instructions:

1. Identify the main objectives in the query.

2. List the necessary skills and tools.

3. For each skill and tool, explain how it helps address the query.

4. Note any additional considerations.

Format your response with a summary of the query, lists of skills and tools with explanations, and a section for additional considerations. Be brief and precise with insight."""

# The LLM is asked to respond in the format of the QueryAnalysis Pydantic model.

query_analysis = self.llm_planner_fixed.generate(prompt_analyze_query, response_format=QueryAnalysis)

print(f"\n--- Analysis Result ---\n{json.dumps(query_analysis.model_dump(), indent=2)}")

return query_analysis

# We will attach this method to the Solver class later.

Solver.solve_step_0_analyze = solve_step_0_analyze每次循环迭代开始时,Planner 智能体决定下一步行动,它考虑原始查询、初始分析、存储在内存中的所有先前作的历史以及可用的工具。其目标是选择单一最佳工具,并为其在此步骤中明确、具体地定义一个子目标。

python

def solve_step_1_plan(self, question: str, query_analysis: QueryAnalysis, step_count: int) -> NextStep:

"""Plans the next best action for the current step of the loop."""

print(f"\n{'='*80}\n==> 🎯 Step {step_count}: Planning Next Action (using planner_main_engine)\n{'='*80}")

# The prompt for the Planner. It receives all current context.

prompt_next_step = f"""Task: Determine the optimal next step to address the query using available tools and previous steps.

Context:

- **Query:** {question}

- **Query Analysis:** {query_analysis}

- **Available Tools:** {json.dumps(self.available_tools)}

- **Toolbox Metadata:** {json.dumps(self.toolbox_metadata, indent=2)}

- **Previous Steps:** {json.dumps(self.memory.get_actions())}

Instructions:

1. Analyze the query, previous steps, and available tools.

2. Select the **single best tool** for the next step.

3. Formulate a specific, achievable **sub-goal** for that tool.

4. Provide all necessary **context** (data, file names, variables) for the tool to function.

Response Format:

1. **Justification:** Explain your choice of tool and sub-goal.

2. **Context:** Provide all necessary information for the tool.

3. **Sub-Goal:** State the specific objective for the tool.

4. **Tool Name:** State the exact name of the selected tool."""

# The LLM must respond in the format of the NextStep Pydantic model.

next_step = self.llm_planner_main.generate(prompt_next_step, response_format=NextStep)

print(f"[Planner Justification]: {next_step.justification}\n[Selected Tool]: {next_step.tool_name}\n[Sub-Goal]: {next_step.sub_goal}")

return next_step

Solver.solve_step_1_plan = solve_step_1_plan一旦选定了工具和子目标,Executer智能体便接手,它的任务是将高层子目标转化为精确的可执行 Python 代码,调用所选工具并使用正确的参数。

python

def solve_step_2_generate_command(self, question: str, next_step: NextStep, step_count: int) -> str:

"""Generates the executable Python command for the chosen tool."""

print(f"\n==> 📝 Step {step_count}: Generating Command for '{next_step.tool_name}' (using executor_engine)")

# The prompt for the Executor. It gets the sub-goal, tool metadata, and relevant context.

prompt_tool_command = f"""Task: Generate a precise command to execute the selected tool.

Context:

- **Query:** {question}

- **Sub-Goal:** {next_step.sub_goal}

- **Tool Name:** {next_step.tool_name}

- **Tool Metadata:** {self.toolbox_metadata.get(next_step.tool_name, {})}

- **Relevant Data:** {next_step.context}

Instructions:

1. Analyze the tool's required parameters from its metadata.

2. Construct valid Python code that addresses the sub-goal using the provided context and data.

3. The command must include at least one call to `tool.execute()`.

4. Each `tool.execute()` call must be assigned to a variable named **`execution`**.

5. Please give the exact numbers and parameters should be used in the `tool.execute()` call.

"""

# The LLM must respond in the format of the ToolCommand Pydantic model.

tool_command_obj = self.llm_executor.generate(prompt_tool_command, response_format=ToolCommand)

command_to_run = tool_command_obj.command.strip()

print(f"[Generated Command]:\n```python\n{command_to_run}\n```")

return command_to_run

Solver.solve_step_2_generate_command = solve_step_2_generate_command这就是智能体与世界互动的地方,生成的命令被执行。工具实例会在执行调用的本地作用域中公开,并捕获结果。执行过程中的任何错误都会被捕获并存储在结果中,这样智能体可以看到命令失败,并在下一步中可能进行纠正。

python

def solve_step_3_execute_command(self, next_step: NextStep, command_to_run: str, step_count: int):

"""Executes the generated command and stores the result in memory."""

print(f"\n==> 🛠️ Step {step_count}: Executing Command for '{next_step.tool_name}'")

tool_instance = self.tool_instances_cache.get(next_step.tool_name)

local_context = {'tool': tool_instance}

if not tool_instance:

result = f"Error: Tool '{next_step.tool_name}' not found."

else:

try:

# Execute the command. The result must be stored in a variable named 'execution'.

exec(command_to_run, {}, local_context)

result = local_context.get('execution', "Error: No 'execution' variable returned.")

except Exception as e:

result = f"Execution Error: {str(e)}"

# Sanitize and truncate the result before adding it to memory.

serializable_result = make_json_serializable_truncated(result)

self.memory.add_action(step_count, next_step.tool_name, next_step.sub_goal, command_to_run, serializable_result)

print(f"[Execution Result]:\n{json.dumps(serializable_result, indent=2)}")执行动作后,智能体必须暂停并反思。Verifier智能体会审查所有内容,包括原始查询、初始分析以及完整的动作和结果记忆,以确定查询是否已被完全回答。它的输出是一个简单但关键的布尔值:stop_signal。如果为True ,循环终止。如果 False,代理将进入下一次迭代。

python

def solve_step_4_verify(self, question: str, query_analysis: QueryAnalysis, step_count: int) -> bool:

"""Verifies if the task is complete, returning True to stop or False to continue."""

print(f"\n==> 🤖 Step {step_count}: Verifying Context (using verifier_engine)")

# The prompt for the Verifier. It sees the full state of the problem.

prompt_verify = f"""Task: Evaluate if the current memory is complete and accurate enough to answer the query, or if more tools are needed.

Context:

- **Query:** {question}

- **Available Tools:** {json.dumps(self.available_tools)}

- **Toolbox Metadata:** {json.dumps(self.toolbox_metadata, indent=2)}

- **Initial Analysis:** {query_analysis}

- **Memory (Tools Used & Results):** {json.dumps(self.memory.get_actions())}

Instructions:

1. Review the query, initial analysis, and memory.

2. Assess the completeness of the memory: Does it fully address all parts of the query?

3. Determine if any unused tools could provide missing information.

4. If the memory is sufficient, explain why and set 'stop_signal' to true.

5. If more information is needed, explain what's missing, which tools could help, and set 'stop_signal' to false.

"""

# The LLM must respond in the format of the MemoryVerification Pydantic model.

verification = self.llm_verifier.generate(prompt_verify, response_format=MemoryVerification)

conclusion = 'STOP' if verification.stop_signal else 'CONTINUE'

print(f"[Verifier Analysis]: {verification.analysis}\n[Verifier Conclusion]: {conclusion}")

return verification.stop_signal

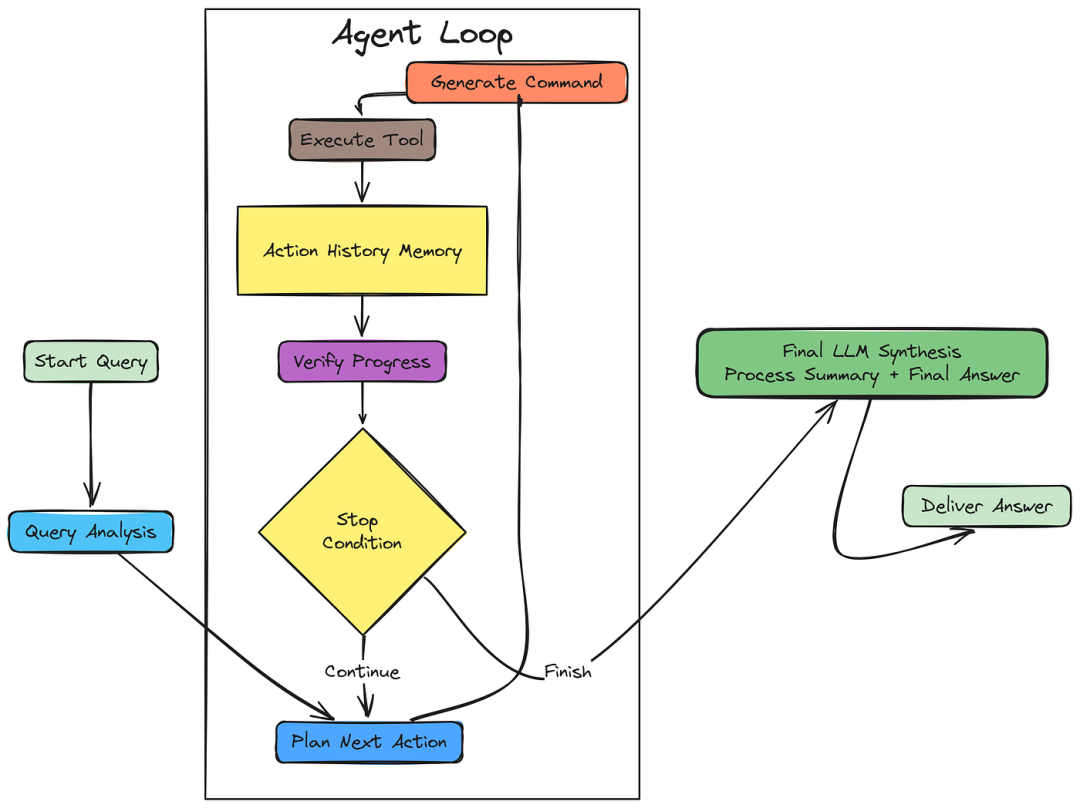

Solver.solve_step_4_verify = solve_step_4_verify一旦Verifier发出停止信号,循环即告终止。智能体现在已经在记忆中拥有了所有必要的信息。

3.4 编排代理循环

最后一步是使用最后一次 LLM 调用,将所有动作和结果综合成一个统一、连贯、易读的答案,直接回答原始用户查询。

python

def solve_final_step_synthesize(self, question: str, query_analysis: QueryAnalysis) -> str:

"""Generates the final, synthesized answer for the user."""

print(f"\n{'='*80}\n==> 🐙 Generating Final Output (using planner_fixed_engine)\n{'='*80}")

# The prompt for the final synthesis. It gets the query and the complete action history.

prompt_final_output = f"""Task: Generate a concise final answer to the query based on all provided context.

Context:

- **Query:** {question}

- **Initial Analysis:** {query_analysis}

- **Actions Taken:** {json.dumps(self.memory.get_actions())}

Instructions:

1. Review the query and the results from all actions.

2. Synthesize the key findings into a clear, step-by-step summary of the process.

3. Provide a direct, precise answer to the original query.

Output Structure:

1. **Process Summary:** A clear, step-by-step breakdown of how the query was addressed.

2. **Answer:** A direct and concise final answer to the query."""

# This is a free-form generation, no Pydantic model is needed.

return self.llm_planner_fixed.generate(prompt_final_output)

Solver.solve_final_step_synthesize = solve_final_step_synthesize

"""

#### The Main `solve` Method

Finally, we assemble all the previously defined step methods into the main `solve` method. This method orchestrates the calls to each step function in the correct order, manages the loop, and returns the final answer.

"""

def solve(self, question: str):

"""Main method to run the entire agentic loop from start to finish."""

# Step 0: Initial Analysis

query_analysis = self.solve_step_0_analyze(question)

step_count = 0

while step_count < self.max_steps:

step_count += 1

# Step 1: Plan next action

next_step = self.solve_step_1_plan(question, query_analysis, step_count)

# Step 2: Generate command

command_to_run = self.solve_step_2_generate_command(question, next_step, step_count)

# Step 3: Execute command

self.solve_step_3_execute_command(next_step, command_to_run, step_count)

# Step 4: Verify and decide whether to continue

should_stop = self.solve_step_4_verify(question, query_analysis, step_count)

if should_stop:

break

# Final Step: Synthesize the final answer

final_answer = self.solve_final_step_synthesize(question, query_analysis)

return final_answer

# Monkey-patch the main solve method onto the Solver class.

Solver.solve = solve所有组件组装完成后,是时候运行我们的智能体了。construct_solver 函数整合了我们特定运行的配置,定义每个角色使用哪种 LLM 模型以及应启用哪些工具。

四、分析查询幻觉

我们运行一个复杂的查询,调用多个工具 multi-tuns,看看输出......

python

def construct_solver():

"""Configures and constructs an instance of the Solver agent."""

# Define which LLM model to use for each agent role.

planner_main_engine, planner_fixed_engine, verifier_engine, executor_engine = MODEL_NAME, MODEL_NAME, MODEL_NAME, MODEL_NAME

# Define the list of tools the agent should have access to.

enabled_tools = ["Base_Generator_Tool", "Python_Coder_Tool", "Google_Search_Tool", "Wikipedia_RAG_Search_Tool"]

# Define the specific LLM engine for each tool. 'Default' means the tool doesn't need an LLM.

tool_engine = [MODEL_NAME, MODEL_NAME, "Default", MODEL_NAME]

return Solver(planner_main_engine, planner_fixed_engine, verifier_engine, executor_engine, enabled_tools, tool_engine, max_steps=5)让我们观察我们的智能体架构师处理复杂问题......

python

# ===================

# RUN THE SOLVER

# ===================

# 1. Create the solver instance based on our configuration.

solver = construct_solver()

# 2. Define the complex, multi-step query we want the agent to solve.

query_to_solve = "Calculate 12 squared, and then use Wikipedia to find out what major historical event happened in that year (AD)."

# 3. Call the .solve() method to start the agentic workflow.

final_answer = solver.solve(query_to_solve)

# 4. Print the final, synthesized answer produced by the agent.

print(f"\n{'='*80}\n✅ FINAL ANSWER\n{'='*80}\n{final_answer}")

python

# ==> Initializing LLM engines...

Planner / Verifier / Executor: Qwen/Qwen2.5-7B-Instruct

==> Initializing agent...

Enabled tools:

['Generalist_Solution_Generator_Tool',

'Python_Code_Generator_Tool',

'Ground_Google_Search_Tool',

'Wikipedia_RAG_Search_Tool']

# ================================================================================

🔍 Query: Calculate 12 squared, then find a major historical event in that year AD

# ================================================================================

==> Step 0: Query Analysis

[Planner Summary]:

- Tasks: math calculation + historical lookup

- Relevant Tools:

- Ground_Google_Search_Tool

- Python_Code_Generator_Tool

- Note: Wikipedia tool marked "optional" <-- ⚠️ Poor planning choice

# ================================================================================

==> Step 1: Planning

# ================================================================================

Sub-goal: "Find historical year first"

Selected Tool: Ground_Google_Search_Tool <-- ❌ Wrong order

Execution:

tool.execute(query="important events in year 12 squared")

Output:

"Events around early Roman Empire..."

[Agent Memory]:

- Assumed year ≈ 120 AD <-- ❌ premature assumption

# ================================================================================

==> Step 2: Planning

# ================================================================================

Sub-goal: "Confirm math using Python"

Selected Tool: Python_Code_Generator_Tool

Generated Code:

result = 12 ** 2

print(result)

Output:

144 <-- ✅ correct calculation

[Agent Memory Updated]:

- Correct year = 144 AD

# ================================================================================

==> Step 3: Planning

# ================================================================================

Sub-goal: "Verify using Wikipedia"

Selected Tool: Wikipedia_RAG_Search_Tool

Execution:

tool.execute(query="events year 144")

Output:

{ "relevant_pages": [] }

[Planner Reaction]:

- Concluded: "No major events exist in 144 AD" <-- ❌ wrong inference

# ================================================================================

==> Step 4: Planning

# ================================================================================

Sub-goal: "Generate historical answer directly"

Selected Tool: Generalist_Solution_Generator_Tool <-- ⚠️ risky fallback

Output:

"In 144 AD, Rome expanded trade routes in North Africa."

[Agent Memory]:

- Accepted without verification <-- ⚠️ weak reasoning

# ================================================================================

==> Step 5: Verifier

# ================================================================================

[Verifier Analysis]:

- Math correct ✔

- Historical info plausible ✔

- Tool usage acceptable ✔ <-- ❌ missed planning errors

Stop Signal: TRUE

# ================================================================================

✅ FINAL ANSWER

# ================================================================================

12 squared = 144

Major historical event in 144 AD:

"Rome expanded trade routes across North Africa,

marking a significant economic milestone."可以从智能体推理流程中看到,他们在规划和推断过程中犯了几个关键错误。让我们来了解到底哪里出了问题:

-

糟糕的规划选择: 智能体选择先搜索历史事件,再确认数学计算。这导致它寻找错误年份(公元 12 年,而非公元 144 年)。

-

过早的假设: 特工根据初步搜索结果推测年份大约是公元 120 年左右,但事实并非如此。

-

错误的推断: 在没有找到公元 144 年相关页面后,智能体错误地认为那一年没有重大事件,而没有考虑到搜索查询可能过于狭窄或信息可能缺乏充分记录。

-

风险后备方案: 这位智能体采用了一种通用工具来生成历史信息,却没有充分的基础,这导致了一个关于罗马在公元 144 年扩展北非贸易路线的幻觉回答。

可以看到,即使有多种工具和验证步骤,我们的智能体系统仍然可能出现重大错误。我们现在将在代理架构师上实施基于 GRPO 的训练,以解决这些问题,提升其推理和规划能力。

五、GRPO 算法实现

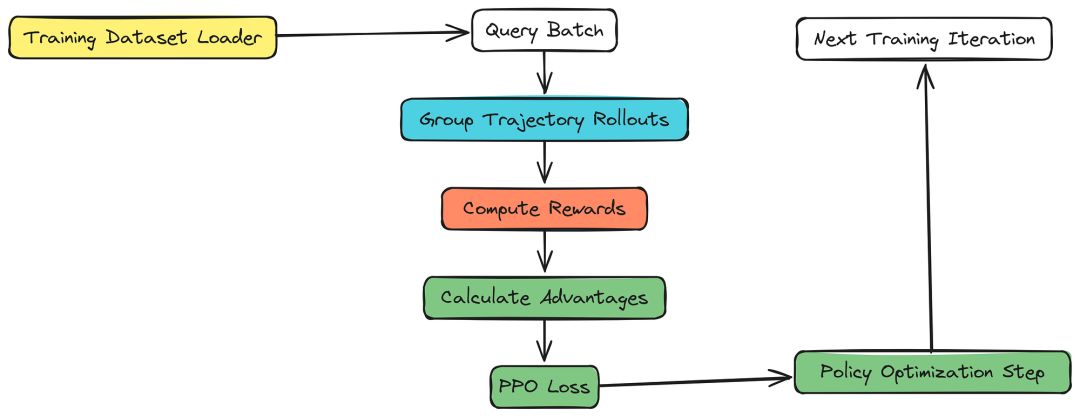

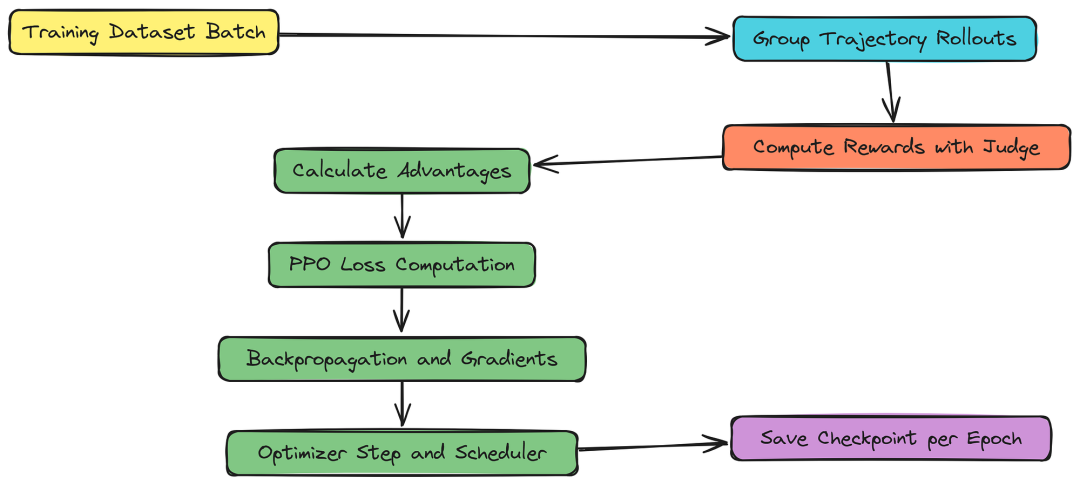

既然我们已经了解了 GRPO 在智能体系统中的工作原理,接下来让我们用 GRPO 实现智能体架构师的训练循环,包括生成轨迹、计算奖励,并根据轨迹的相对优势更新政策模型。

首先导入所有必要的 Python 模块,设置硬件设备,并通过配置数据类定义全局超参数和模型路径。

python

# Import standard libraries

import os

import re

import json

import torch

import random

import numpy as np

import torch.nn.functional as F

from tqdm import tqdm

from typing import List, Dict, Any, Tuple, Optional

from dataclasses import dataclass, field

from torch.utils.data import DataLoader, Dataset

from datasets import load_dataset

from torch.optim import AdamW我们还需要专门的库来实现大型语言模型交互、工具实现和 GRPO 算法。Transformers 库用于加载和微调我们的语言模型, 而 peft 则允许我们应用参数高效的微调技术,如 LoRA。

python

# Transformers & PEFT for efficient training

from transformers import (

AutoModelForCausalLM,

AutoTokenizer,

BitsAndBytesConfig,

get_scheduler

)

from peft import (

LoraConfig,

get_peft_model,

prepare_model_for_kbit_training,

PeftModel

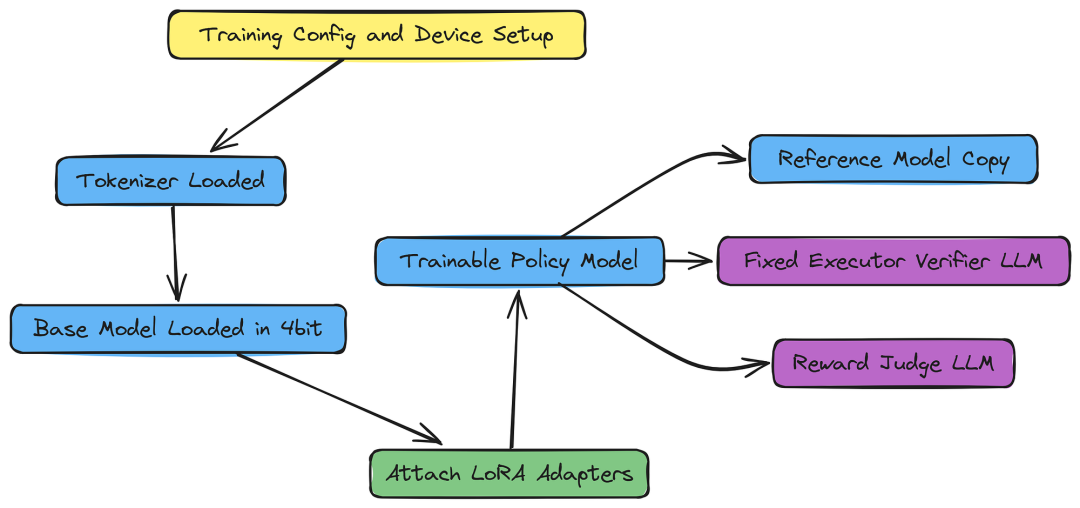

)TrainingConfig 数据类包含所有关键超参数。请注意模型的精心选择: 可训练的策略/规划器使用较小的 Qwen 1.5B 模型,而更强大的模型(Qwen 2.5B,可能外部托管)用于固定环境 (执行者/验证者)和奖励法官 (GPT-4o)。

这种区分很重要:我们训练较小的模型做出最优决策,但我们信任较大的模型能够模拟复杂环境并准确评估性能。

python

@dataclass

class TrainingConfig:

"""Global configuration for the training run, using Python's dataclass for structured setup."""

# --- Data Config ---

data_file: str = "./data/train/combined_train.parquet" # Input path for the combined training data.

# --- Model Config ---

base_model_name: str = "Qwen/Qwen2-1.5B-Instruct" # The model being trained (the Policy/Planner).

fixed_model_name: str = "Qwen/Qwen2.5-7B-Instruct" # The powerful, fixed model for Execution/Verification.

fixed_model_api_base: str = "http://localhost:8001/v1" # Endpoint for the fixed model (assumes a vLLM server).

# --- Training Hyperparameters ---

run_name: str = "flow_grpo_training_run_v1"

output_dir: str = "./agentflow_checkpoints" # Directory to save checkpoints.

learning_rate: float = 1e-6

train_batch_size: int = 2 # Number of unique queries processed per optimization loop.

rollout_n: int = 4 # N: Number of trajectories generated per unique query (GRPO group size).

gradient_accumulation_steps: int = 4 # Accumulate gradients over this many effective steps before updating weights.

num_train_epochs: int = 1

# --- GRPO/PPO Hyperparameters ---

ppo_clip_eps: float = 0.2 # PPO Clipping range (e.g., 20%). Prevents drastic policy updates.

kl_coef: float = 0.01 # Coefficient for the KL-Divergence penalty (KL regularization).

max_grad_norm: float = 1.0 # Gradient clipping value.

# --- Agent Execution Config ---

max_turns: int = 5 # Max steps the agent can take for a single query (trajectory length limit).

max_seq_length: int = 4096 # Context window limit for the base model.

# --- Tools Config ---

# The list of tools the agent can use.

enabled_tools: List[str] = field(default_factory=lambda: ["Python_Coder_Tool", "Wikipedia_RAG_Search_Tool", "Google_Search_Tool", "Base_Generator_Tool"])

# The engine used by each tool instance (can be different from the Policy model).

tool_engine: List[str] = field(default_factory=lambda: ["gpt-4o-mini", "gpt-4o-mini", "gpt-4o-mini", "gpt-4o-mini"])

# --- Reward Config ---

reward_model_name: str = "gpt-4o" # The high-quality model used as the impartial Judge.我们的基础模型是一个 1.5B 参数模型,体积小到可以用 QLoRA 和 LoRA 适配器高效微调,同时又足够强大来学习复杂的推理模式。固定模型和奖励模型更大(25 亿和 GPT-4o),以确保它们能提供丰富的环境和准确的奖励。

让我们来了解一些训练超参数:

- train_batch_size:这是我们在每个优化步骤中处理的唯一查询数量。每个查询都会生成 rollout_n 条轨迹,因此策略更新的有效批处理大小为 train_batch_size * rollout_n。

- rollout_n:这是我们为每个唯一查询生成的轨迹数量。这对 GRPO 算法至关重要,因为它允许我们根据组内所有轨迹的奖励计算每个轨迹的相对优势。

- ppo_clip_eps:这是 PPO 的clip参数,它防止新策略在更新时偏离旧策略过多,有助于保持训练稳定性。

- kl_coef:该系数控制了 KL 发散惩罚的强度,也有助于防止策略在一次更新中发生过大变化。

- max_grad_norm:这是梯度clip的数值,防止梯度爆炸并帮助保持训练稳定。

5.1 策略模型初始化(QLoRA 和 PEFT)

我们现在可以初始化配置并设置设备进行训练。

python

# Initialize Config

config = TrainingConfig()

os.makedirs(config.output_dir, exist_ok=True) # Ensure output directory exists.

# Set Device (prioritize GPU)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(f"Using device: {device}")

### Output:

Using device: cuda在强化学习中,每一步交互都需要被准确记录,以计算策略梯度。TurnData 类捕捉策略模型 (规划器)在智能体多步骤决策过程中生成的关键信息。

python

@dataclass

class TurnData:

"""Stores data for a single step (turn) in a trajectory for training."""

prompt_str: str # The input prompt (current state) given to the Planner LLM.

action_str: str # The LLM's full output (the action plan).

prompt_ids: torch.Tensor # Tokenized version of the prompt.

action_ids: torch.Tensor # Tokenized version of the action.

# CRITICAL: The log likelihood of the action tokens under the *current* Policy model.

# This is $log(\pi_{old}(a|s))$ in the PPO formulation.

action_log_probs: torch.Tensor在 TurnData 中,我们存储原始字符串(用于解释性和调试)和标记化版本(用于训练)。action_log_probs 很重要,因为它代表了旧政策下行动的概率。

我们现在可以初始化训练系统的核心组件:

-

Tokenizer: 对于将文本提示词转换为token再转换回来至关重要。

-

策略模型(policy_model): 我们正在训练的模型。我们使用 QLoRA(量化低秩适应) 以 4 位精度加载,大幅降低显存使用,同时使用 PEFT(参数高效微调) 连接 LoRA 适配器,使我们只能训练模型参数的一小部分。

-

参考模型(ref_model): 在 PPO/GRPO 中,之前的策略是需要计算重要性比。这里,我们最初将参考模型设置为与策略模型相等,随后使用上下文管理器(disable_adapter())计算参考日志概率,不受当前 LoRA 权重的影响。

-

固定外部LLM: 初始化执行/验证所需的LLM,并奖励计算。

python

print("--> Loading Tokenizer...")

tokenizer = AutoTokenizer.from_pretrained(config.base_model_name, trust_remote_code=True)

# Ensure padding token exists and set padding side to left (standard for generation/decoding).

if tokenizer.pad_token is None:

tokenizer.pad_token = tokenizer.eos_token

tokenizer.padding_side = "left"

print(f"--> Loading Trainable Planner Model ({config.base_model_name})...")

# Load model in 4-bit using BitsAndBytesConfig (QLoRA).

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4", # Normalized Float 4-bit quantization.

bnb_4bit_compute_dtype=torch.bfloat16 # Use bfloat16 for computation.

)

policy_model = AutoModelForCausalLM.from_pretrained(

config.base_model_name,

quantization_config=bnb_config,

device_map="auto", # Automatically distributes the model across available GPUs.

trust_remote_code=True,

use_cache=False # Disable cache for gradient checkpointing during training.

)量化会缩小模型大小,这样我们可以以 4 位精度加载 1.5 亿参数模型,这在训练中更高效且更高效。对于某些带有自定义加载或分词代码的模型,trust_remote_code=True 是必要的。

现在我们可以为使用 LoRA 适配器训练模型做准备。我们针对transformer架构中的所有主要投影层,确保模型能够学习有效的适应性,以应对复杂的推理任务。

python

# Prepare model for k-bit training and define LoRA configuration.

policy_model = prepare_model_for_kbit_training(policy_model)

peft_config = LoraConfig(

r=16,

lora_alpha=32,

# Target all major projection layers for optimal performance.

target_modules=["q_proj", "k_proj", "v_proj", "o_proj", "gate_proj", "up_proj", "down_proj"],

lora_dropout=0.05,

bias="none",

task_type="CAUSAL_LM"

)

policy_model = get_peft_model(policy_model, peft_config)

policy_model.print_trainable_parameters()

# The reference model starts identical to the policy model.

ref_model = policy_model

print("--> Initializing Fixed LLM Engines (Executor, Verifier, Reward)...")

try:

# Initialize the fixed LLM for executing tool commands and verification logic.

fixed_llm = create_llm_engine(config.fixed_model_name, base_url=config.fixed_model_api_base, temperature=0.0)

# Initialize the reward LLM (Judge).

reward_llm = create_llm_engine(config.reward_model_name, temperature=0.0)

# Test connections to external APIs/servers.

fixed_llm.generate("Ping")

reward_llm.generate("Ping")

print(" ✅ Fixed LLM and Reward LLM connections successful.")

except Exception as e:

# Halt execution if critical external components are unreachable.

raise ConnectionError(f"Could not connect to one of the LLM endpoints. Ensure servers are running. Error: {e}")应用 LoRA 后,我们打印出可训练参数数量,以确认我们仅训练了模型总参数的一小部分。

python

### Output:

--> Loading Tokenizer...

--> Loading Trainable Planner Model (Qwen/Qwen2-1.5B-Instruct)...

trainable params: 16,777,216 || all params: 1,518,804,992 || trainable%: 1.1046

--> Initializing Fixed LLM Engines (Executor, Verifier, Reward)...

✅ Fixed LLM and Reward LLM connections successful.我们训练的参数是 1670 万 ,比例为 1.5B,约占总参数的 1.1%, 这得益于 LoRA 的高效。

5.2 智能体系统包装器

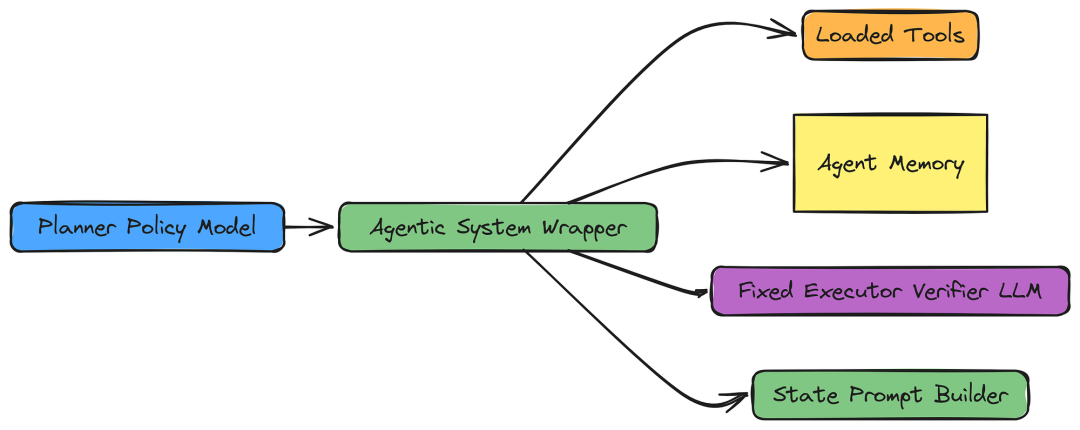

AgenticSystem 类模拟规划器策略运行的环境,它涵盖了单一训练推广所需的核心组成部分:

-

工具管理: 加载并提供专用工具的访问权限。

-

状态生成: 根据查询和记忆为规划师制定提示词(State St)。

-

动作生成与对数概率计算: 使用策略模型生成下一个动作,并捕捉该动作的对数概率,这对 PPO 目标至关重要。

python

class AgenticSystem:

"""Manages the interaction between the Policy, the Tools, and the Fixed LLM Environment."""

def __init__(self, policy_model, tokenizer, fixed_llm):

self.policy_model = policy_model # The trainable model.

self.tokenizer = tokenizer

self.fixed_llm = fixed_llm # The external Executor/Verifier model.

self.tools_map = self._load_tools() # Dictionary of active tool instances.

self.memory = None # Agent's memory instance, reset per trajectory.

def _load_tools(self) -> Dict[str, BaseTool]:

"""Initializes the tools specified in the global configuration."""

print("--> Loading Agent Tools...")

tools = {}

# Mapping tool names to their respective classes from utils.py.

tool_classes = {

"Python_Coder_Tool": Python_Coder_Tool,

"Wikipedia_RAG_Search_Tool": Wikipedia_Search_Tool,

"Base_Generator_Tool": Base_Generator_Tool

}

for i, name in enumerate(config.enabled_tools):

engine = config.tool_engine[i]

if name in tool_classes:

print(f" - Loading '{name}' with engine '{engine}'")

# Instantiate the tool, passing the required engine name.

tools[name] = tool_classes[name](model_string=engine)

print(" ✅ Tools loaded.")

return tools该方法将当前上下文(查询和内存)格式化为连贯的提示词。该提示表示政策模型观察到的当前状态(St)。

python

def build_planner_prompt(self, question, available_tools, memory_actions):

"""Constructs the state prompt for the Planner model, providing all relevant context."""

return f"""Task: Determine the optimal next step to address the query.

Context:

- Query: {question}

- Available Tools: {json.dumps(available_tools)} # List of tools for the Planner to choose from.

- Previous Steps: {json.dumps(memory_actions)} # The history (memory) of executed actions.

Response Format:

1. Justification: ...

2. Context: ...

3. Sub-Goal: ...

4. Tool Name: ...

Response:""" # The Planner continues the prompt from here, generating the action.

# Attaching the method to the class dynamically.

AgenticSystem.build_planner_prompt = build_planner_prompt这可以说是策略推广中最复杂的部分。

5.3 生成轨迹与计算对数概率

对于强化学习训练,我们需要策略模型中的两个东西:生成的动作(文本计划)和生成该token序列的精确对数概率。

该对数概率(logπ(a∣s))是 PPO 重要性比的基础。

python

@torch.no_grad()

def generate_planner_action(self, prompt_str: str) -> Tuple[str, torch.Tensor, torch.Tensor]:

"""Generates a thought/action plan from the policy model and computes log probabilities."""

self.policy_model.eval() # Policy generation is done in evaluation mode.

inputs = self.tokenizer(prompt_str, return_tensors="pt", truncation=True, max_length=config.max_seq_length).to(device)

# Generate with sampling to allow exploration and diverse trajectories (crucial for GRPO).

outputs = self.policy_model.generate(

**inputs,

max_new_tokens=512,

do_sample=True,

temperature=0.7, # Higher temperature for exploration.

top_p=0.9,

pad_token_id=self.tokenizer.eos_token_id,

output_scores=True, # MUST be True to get the logits (scores) for log prob calculation.

return_dict_in_generate=True

)

# Extract sequences (only the generated part, excluding the input prompt).

generated_ids = outputs.sequences[0, inputs.input_ids.shape[1]:]

generated_text = self.tokenizer.decode(generated_ids, skip_special_tokens=True)

# Compute Log Probs from the raw scores (logits).

# 1. Stack scores: (num_generated_tokens x 1 x vocab_size) -> (1 x num_generated_tokens x vocab_size).

all_logits = torch.stack(outputs.scores, dim=1)

# 2. Convert logits to log probabilities using log_softmax.

log_probs = F.log_softmax(all_logits, dim=-1)

# 3. Gather the log probs corresponding to the specific tokens the model actually chose.

# generated_ids: [seq_len] -> unsqueeze to [1, seq_len, 1] for torch.gather.

action_log_probs = log_probs.gather(2, generated_ids.unsqueeze(0).unsqueeze(-1)).squeeze(-1).squeeze(0)

# Return action text, token IDs, and their log probabilities (moved to CPU).

return generated_text, generated_ids.cpu(), action_log_probs.cpu()

AgenticSystem.generate_planner_action = generate_planner_action策略只生成一个计划 (行动为 AtAt)。环境必须执行该计划(执行),并决定代理是否继续(验证)。这一任务被委托给强大的固定 LLM,以确保工具的使用和反思可靠,使其与可训练的策略模型脱钩。

python

def run_executor_verifier(self, query: str, plan: NextStep) -> Tuple[str, str, str]:

"""Executes the chosen tool and uses the Fixed LLM to verify the result."""

command_used, tool_output = "N/A", f"Error: Tool '{plan.tool_name}' not found."

# 1. Execute Tool

if plan.tool_name in self.tools_map:

tool = self.tools_map[plan.tool_name]

# Prompt the fixed LLM (Executor) to write the exact Python command.

executor_prompt = f"""Task: Generate a precise command to execute the selected tool.

Context:

- **Query:** {query}

- **Sub-Goal:** {plan.sub_goal}

- **Tool Name:** {plan.tool_name}

- **Relevant Data:** {plan.context}

Instructions: Construct valid Python code to call `tool.execute()` with the correct arguments to achieve the sub-goal. Assign the result to a variable named `execution`. Output only the code wrapped in ```python```."""

try: