A Movie Recommendation and Big Data Visualization System Based on Collaborative Filtering

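In the big data era, analysis and visualization are the key steps for extracting useful information from large datasets. This article walks through scraping data with Python, analyzing it with Hive, visualizing it with ECharts, and recommending movies with a collaborative filtering approach.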

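Contents

1. Scraping Douban movie data

2. Data analysis with Hive

3. Data visualization with ECharts

4. Movie recommendation based on collaborative filtering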

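1. Scraping Douban movie data

First, we use Python to scrape the Douban Top 250 list. For each movie the crawler collects the detail-page link, the poster image link, the Chinese title, the foreign title, the rating, the number of ratings, a one-line summary, and related information (director, cast, and so on), and then stores the records in MySQL.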

python

import re  # regular expressions for text matching
import urllib.error
import urllib.request  # fetch a page by URL

from bs4 import BeautifulSoup

from data.mapper import savedata2mysql  # project helper that writes the rows to MySQL


def main():
    baseurl = "https://movie.douban.com/top250?start="
    datalist = getdata(baseurl)
    savedata2mysql(datalist)


# Patterns applied to the HTML of a single movie entry
findLink = re.compile(r'<a href="(.*?)">')  # detail-page link
findImgSrc = re.compile(r'<img.*src="(.*?)"', re.S)  # poster URL; re.S lets "." match newlines
findTitle = re.compile(r'<span class="title">(.*)</span>')  # movie title(s)
findRating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>')  # rating
findJudge = re.compile(r'<span>(\d*)人评价</span>')  # number of ratings; \d matches a digit
findInq = re.compile(r'<span class="inq">(.*)</span>')  # one-line summary
findBd = re.compile(r'<p class="">(.*?)</p>', re.S)  # related info such as director and cast

# Fetch and parse every page of the Top 250 list
def getdata(baseurl):
    datalist = []
    for i in range(0, 10):
        url = baseurl + str(i * 25)  # ten pages of 25 movies; each pass handles one page
        html = geturl(url)
        soup = BeautifulSoup(html, "html.parser")  # parse the page into a BeautifulSoup object
        for item in soup.find_all("div", class_='item'):  # find_all returns a list
            data = []  # all fields of one movie
            item = str(item)  # convert to a string so the regexes can search it
            link = re.findall(findLink, item)[0]  # findall returns a list; keep the first match
            data.append(link)
            imgSrc = re.findall(findImgSrc, item)[0]
            data.append(imgSrc)
            titles = re.findall(findTitle, item)  # Chinese title, sometimes followed by a foreign title
            if len(titles) == 2:
                data.append(titles[0])
                data.append(titles[1].replace("/", "").strip())  # drop the "/" separator
            else:
                data.append(titles[0])  # append the title string, not the whole list
                data.append(" ")  # leave the foreign-title slot empty
            rating = re.findall(findRating, item)[0]  # rating
            data.append(rating)
            judgeNum = re.findall(findJudge, item)[0]  # number of ratings
            data.append(judgeNum)
            inq = re.findall(findInq, item)  # one-line summary; missing for some movies
            if len(inq) != 0:
                data.append(inq[0].replace("。", ""))
            else:
                data.append(" ")
            bd = re.findall(findBd, item)[0]
            bd = re.sub(r'<br(\s+)?/>(\s+)?', " ", bd)
            bd = re.sub('/', " ", bd)
            data.append(bd.strip())  # strip leading and trailing whitespace
            datalist.append(data)
    return datalist

def geturl(url):
    head = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"
    }
    req = urllib.request.Request(url, headers=head)
    html = ""  # returned as-is if the request fails
    try:
        response = urllib.request.urlopen(req)
        html = response.read().decode("utf-8")
    except urllib.error.URLError as e:
        if hasattr(e, "code"):  # HTTP errors carry a status code
            print(e.code)
        if hasattr(e, "reason"):
            print(e.reason)
    return html


if __name__ == "__main__":
    main()
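To make the regex step easier to follow, here is a small sketch that applies three of the crawler's patterns to a hand-written fragment. The fragment, its title, and its numbers are made up for illustration and only imitate the shape of one list entry; `parse_item` is a hypothetical helper, not part of the original crawler.

```python
import re

# Same patterns as in the crawler
find_title = re.compile(r'<span class="title">(.*)</span>')
find_rating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>')
find_judge = re.compile(r'<span>(\d*)人评价</span>')

# Made-up fragment shaped like one movie entry (not real Douban data)
SAMPLE_ITEM = """
<div class="item">
<span class="title">示例电影</span>
<span class="title"> / Sample Movie</span>
<span class="rating_num" property="v:average">9.0</span>
<span>12345人评价</span>
</div>
"""


def parse_item(item_html):
    """Extract titles, rating, and rating count from one entry's HTML."""
    titles = find_title.findall(item_html)
    foreign = titles[1].replace("/", "").strip() if len(titles) > 1 else ""
    return {
        "chinese_name": titles[0],
        "foreign_name": foreign,
        "rating": float(find_rating.findall(item_html)[0]),
        "review_count": int(find_judge.findall(item_html)[0]),
    }


record = parse_item(SAMPLE_ITEM)
print(record)
```

Each pattern uses a greedy `(.*)` without `re.S`, so it only matches within a single line; this works here because every `<span>` sits on its own line.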

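2. Data analysis with Hive

Next, we load the scraped data into Hive for analysis. Hive is a data warehouse tool built on Hadoop that provides SQL-like queries (HiveQL).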
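The article does not show how the `douban_movies.csv` file used in the next step is produced. One possible way is sketched here, assuming the rows are already arranged in the Hive table's column order. `to_hive_line` and `write_hive_csv` are hypothetical helper names. Because a plain comma-delimited Hive text table does not interpret CSV quoting, the sketch replaces embedded commas and line breaks with spaces instead of quoting them.

```python
def to_hive_line(row, delimiter=","):
    """Join one row into a delimited line that a plain Hive text table can split."""
    cleaned = []
    for value in row:
        text = str(value)
        # Remove characters that would break the column or row boundaries
        for ch in (delimiter, "\r", "\n"):
            text = text.replace(ch, " ")
        cleaned.append(text.strip())
    return delimiter.join(cleaned)


def write_hive_csv(rows, path):
    """Write all rows to a UTF-8 text file, one line per movie."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(to_hive_line(row) + "\n")


line = to_hive_line(["A, B", 9.1, 100, "two\nlines"])
print(line)
```

Calling `write_hive_csv(rows, "douban_movies.csv")` would then produce the file that the upload step expects.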

Loading the data into Hive

First, upload the data to HDFS (the Hadoop Distributed File System):

shell

hdfs dfs -put douban_movies.csv /user/hive/warehouse/douban_movies.csv

Then create a table in Hive and load the data:

sql

-- Column names match the ones used by the analysis queries in this section
CREATE TABLE douban_movies (
    chinese_name STRING,
    rating FLOAT,
    review_count INT,
    link STRING,
    image STRING,
    summary STRING,
    related_info STRING
)
ROW FORMAT DELIMITED
FIELDS TERMINATED BY ','
STORED AS TEXTFILE;

sql

LOAD DATA INPATH '/user/hive/warehouse/douban_movies.csv' INTO TABLE douban_movies;

Analysis queries

sql

-- Number of movies at each rating
SELECT rating, COUNT(*) AS movie_count
FROM douban_movies
GROUP BY rating
ORDER BY rating DESC;

sql

-- Top 20 movies by rating
SELECT chinese_name, rating
FROM douban_movies
ORDER BY rating DESC
LIMIT 20;

sql

-- Top 20 movies by number of ratings
SELECT chinese_name, review_count
FROM douban_movies
ORDER BY review_count DESC
LIMIT 20;

sql

-- Number of movies per genre; each movie is assigned the first genre that matches.
-- Genres in order: crime, drama, romance, LGBT, comedy, animation, fantasy, adventure; otherwise "other".
SELECT
    type,
    COUNT(*) AS movie_count
FROM (
    SELECT
        CASE
            WHEN related_info LIKE '%犯罪%' THEN '犯罪'
            WHEN related_info LIKE '%剧情%' THEN '剧情'
            WHEN related_info LIKE '%爱情%' THEN '爱情'
            WHEN related_info LIKE '%同性%' THEN '同性'
            WHEN related_info LIKE '%喜剧%' THEN '喜剧'
            WHEN related_info LIKE '%动画%' THEN '动画'
            WHEN related_info LIKE '%奇幻%' THEN '奇幻'
            WHEN related_info LIKE '%冒险%' THEN '冒险'
            ELSE '其他'
        END AS type
    FROM douban_movies
) AS subquery
GROUP BY type
ORDER BY movie_count DESC;
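Because a CASE expression stops at the first branch that matches, the genre query counts each movie once, under whichever of its genres appears first in the list. As a point of comparison, the sketch below counts every genre a movie carries. It assumes the `related_info` strings are available in memory; the sample strings are made up, and `count_genres` is a hypothetical helper.

```python
from collections import Counter

# Same genre keywords as the CASE branches in the query
GENRES = ["犯罪", "剧情", "爱情", "同性", "喜剧", "动画", "奇幻", "冒险"]


def count_genres(related_infos):
    """Count every genre keyword found in each related_info string."""
    counts = Counter()
    for info in related_infos:
        matched = [genre for genre in GENRES if genre in info]
        # Movies with no known genre fall into the "other" bucket
        counts.update(matched or ["其他"])
    return counts


# Made-up related_info values for illustration
sample = [
    "1994 美国 犯罪 剧情",
    "2001 日本 剧情 动画 奇幻",
    "1988 意大利 纪录片",
]
genre_counts = count_genres(sample)
print(genre_counts)
```

With this approach the counts add up to more than the number of movies, which is the intended difference from the first-match query.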

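sql

-- Number of movies per release year, taken as the first four-digit number in related_info (latest 20 years).
-- Hive has no REGEXP_SUBSTR, so regexp_extract is used; rows without a match are filtered out.
SELECT
    year,
    COUNT(*) AS movie_count
FROM (
    SELECT
        regexp_extract(related_info, '([0-9]{4})', 1) AS year
    FROM douban_movies
) AS subquery
WHERE year IS NOT NULL AND year != ''
GROUP BY year
ORDER BY year DESC
LIMIT 20;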
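The year query above takes the first run of four digits in `related_info`. The same idea can be checked quickly in Python before running it on the cluster. This is a sketch with made-up input strings; unlike the SQL pattern, the regex here adds lookarounds so that four digits inside a longer number are not mistaken for a year, which is an extra assumption of this example.

```python
import re
from collections import Counter

# Exactly four digits, not part of a longer digit run
YEAR_PATTERN = re.compile(r"(?<!\d)(\d{4})(?!\d)")


def extract_year(related_info):
    """Return the first standalone four-digit number as a string, or None."""
    match = YEAR_PATTERN.search(related_info)
    return match.group(1) if match else None


def movies_per_year(related_infos):
    """Count movies per extracted year, skipping rows without a year."""
    years = (extract_year(info) for info in related_infos)
    return Counter(year for year in years if year is not None)


# Made-up related_info values for illustration
year_counts = movies_per_year([
    "导演: X 1994 美国 犯罪 剧情",
    "导演: Y 1994 法国 剧情",
    "导演: Z 2001 日本 动画",
    "no year here",
])
print(year_counts)
```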

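sql

-- Number of movies per country or region.
-- The more specific '中国香港' and '中国大陆' patterns are tested before '中国', which would otherwise match first.
-- The CASE is computed in a subquery so that GROUP BY can refer to the country column.
SELECT
    country,
    COUNT(*) AS movie_count
FROM (
    SELECT
        CASE
            WHEN related_info LIKE '%美国%' THEN '美国'
            WHEN related_info LIKE '%中国香港%' THEN '中国香港'
            WHEN related_info LIKE '%中国大陆%' THEN '中国'
            WHEN related_info LIKE '%中国%' THEN '中国'
            WHEN related_info LIKE '%法国%' THEN '法国'
            WHEN related_info LIKE '%日本%' THEN '日本'
            WHEN related_info LIKE '%英国%' THEN '英国'
            WHEN related_info LIKE '%德国%' THEN '德国'
            ELSE '其他'
        END AS country
    FROM douban_movies
) AS subquery
GROUP BY country;

3. Data visualization with ECharts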

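To present the analysis results more intuitively, we visualize them with ECharts, a JavaScript charting library. The charts are built on the server with pyecharts, a Python wrapper for ECharts, inside a Django view that queries MySQL and returns the rendered chart to the front end.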
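An ECharts chart is described by a JSON option object, which the page passes to `setOption`; pyecharts generates that object from Python calls. To show roughly what ends up on the page, here is a sketch that builds a bar-chart option by hand with the standard library only. `bar_option` is a hypothetical helper and the values are placeholders.

```python
import json


def bar_option(title, categories, values, series_name):
    """Build a minimal ECharts option dict for a bar chart."""
    if len(categories) != len(values):
        raise ValueError("categories and values must have the same length")
    return {
        "title": {"text": title},
        "tooltip": {},
        "xAxis": {"type": "category", "data": list(categories)},
        "yAxis": {"type": "value"},
        "series": [{"name": series_name, "type": "bar", "data": list(values)}],
    }


# Placeholder data, not real query results
option = bar_option("Movies per country", ["A", "B"], [3, 5], "Movie count")
option_json = json.dumps(option, ensure_ascii=False)
print(option_json)
```

Using pyecharts, as the back-end code in this section does, avoids writing this structure by hand and also takes care of loading the ECharts script.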

Front-end code

html

<!DOCTYPE html>
<html lang="en">
<head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Data Analysis</title>
    <style>
        body {
            display: flex;
            justify-content: center;
            align-items: center;
            height: 100vh;
            margin: 0;
        }
        .container {
            text-align: center;
        }
    </style>
</head>
<body>
    <!-- Chart markup and scripts rendered by pyecharts -->
    {{ chart_html | safe }}
    <div class="container">
        <h2>{{ button_name }}</h2>
        <!-- Other page content... -->
    </div>
</body>
</html>

Back-end code

python

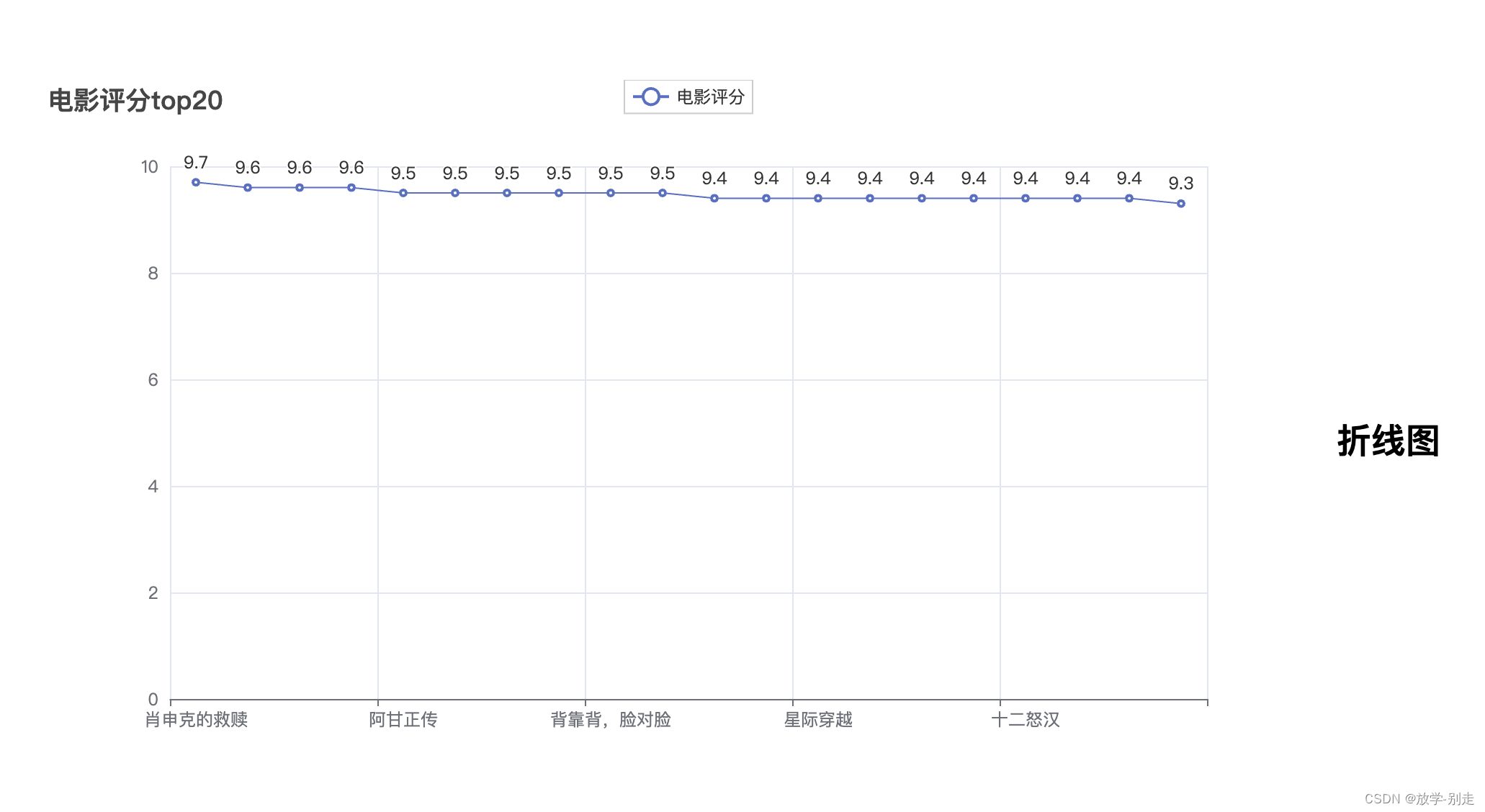

def data_analysis(request, button_id):

if button_id == 1:

x,y = top20_movie_rating()

line_chart = (

Line()

.add_xaxis(xaxis_data=x)

.add_yaxis(series_name="电影评分", y_axis=y)

.set_global_opts(title_opts=opts.TitleOpts(title="电影评分top20"))

)

chart_html = line_chart.render_embed()

button_name = "折线图"

elif button_id == 2:

x,y = movie_review_count()

bar_chart = (

Bar()

.add_xaxis(xaxis_data=x)

.add_yaxis(series_name="电影评论数",y_axis=y)

.set_global_opts(title_opts=opts.TitleOpts(title="电影评论数top20"))

)

chart_html = bar_chart.render_embed()

button_name = "条形图"

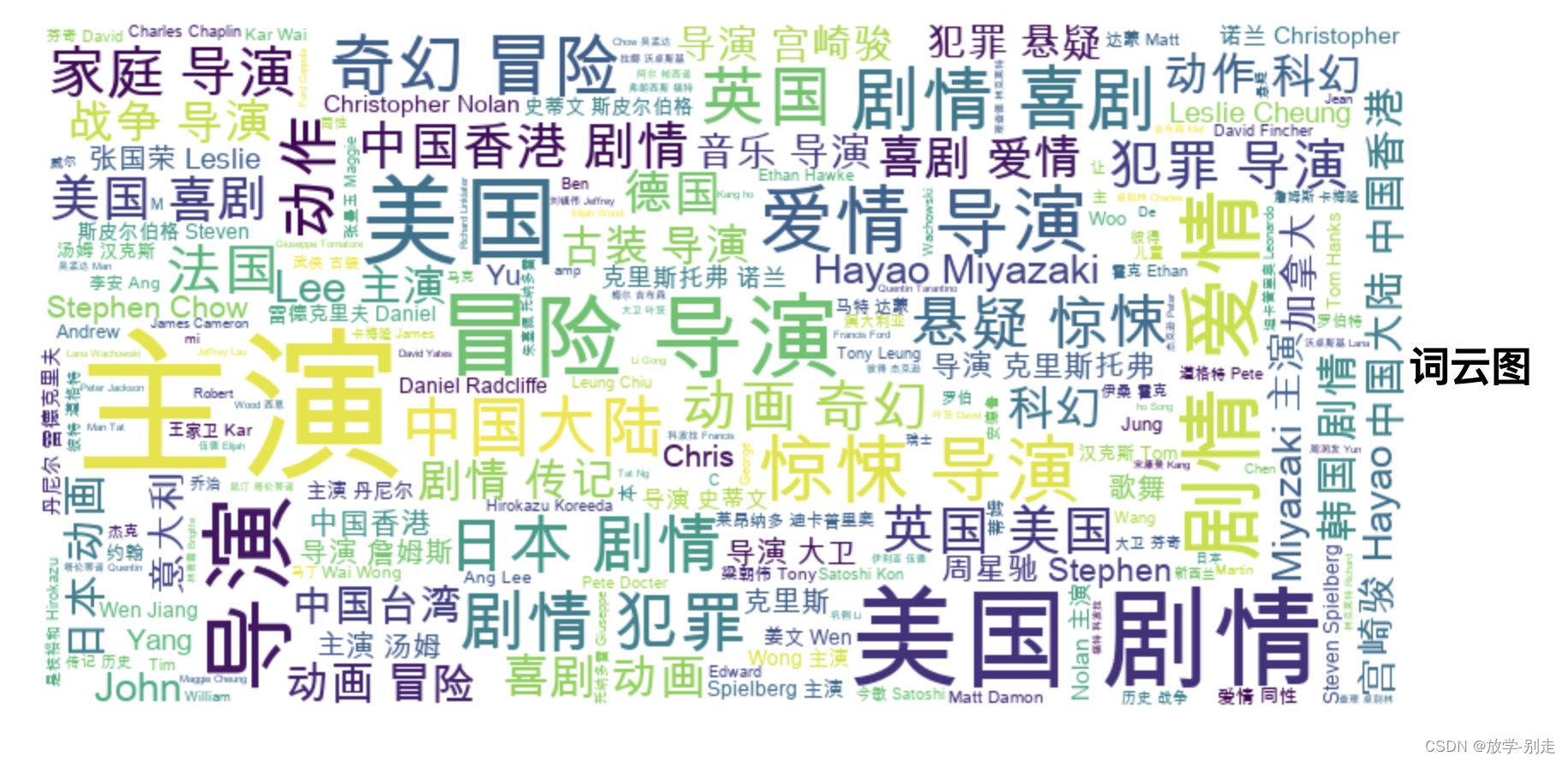

elif button_id == 3:

chart_html = wordcloud_to_html()

button_name = "词云图"

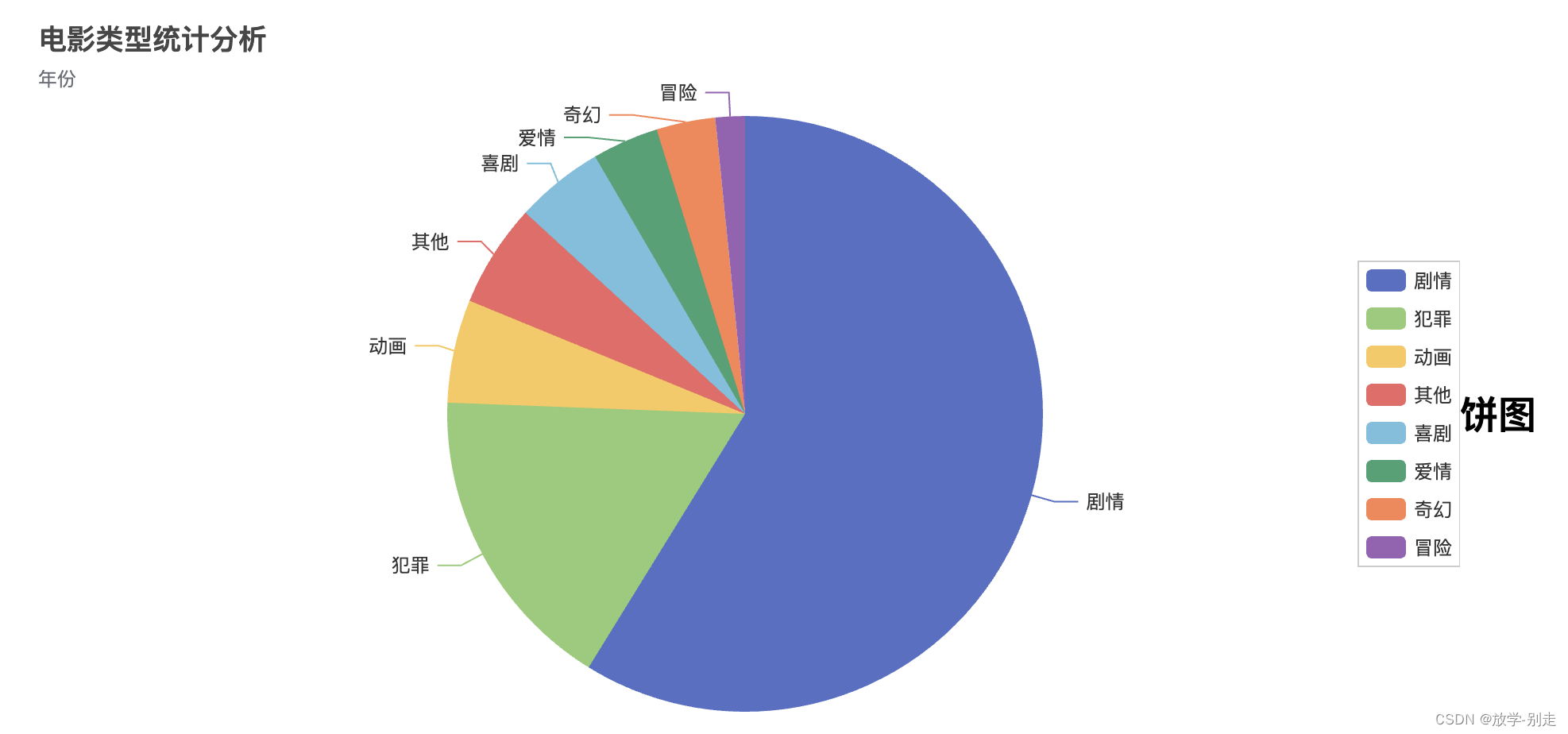

elif button_id == 4:

x, y = movie_type_count()

pie = Pie()

pie.add("", list(zip(x, y)))

pie.set_global_opts(title_opts={"text": "电影类型统计分析", "subtext": "年份"},

legend_opts=opts.LegendOpts(orient="vertical", pos_right="right", pos_top="center"))

chart_html = pie.render_embed()

button_name = "饼图"

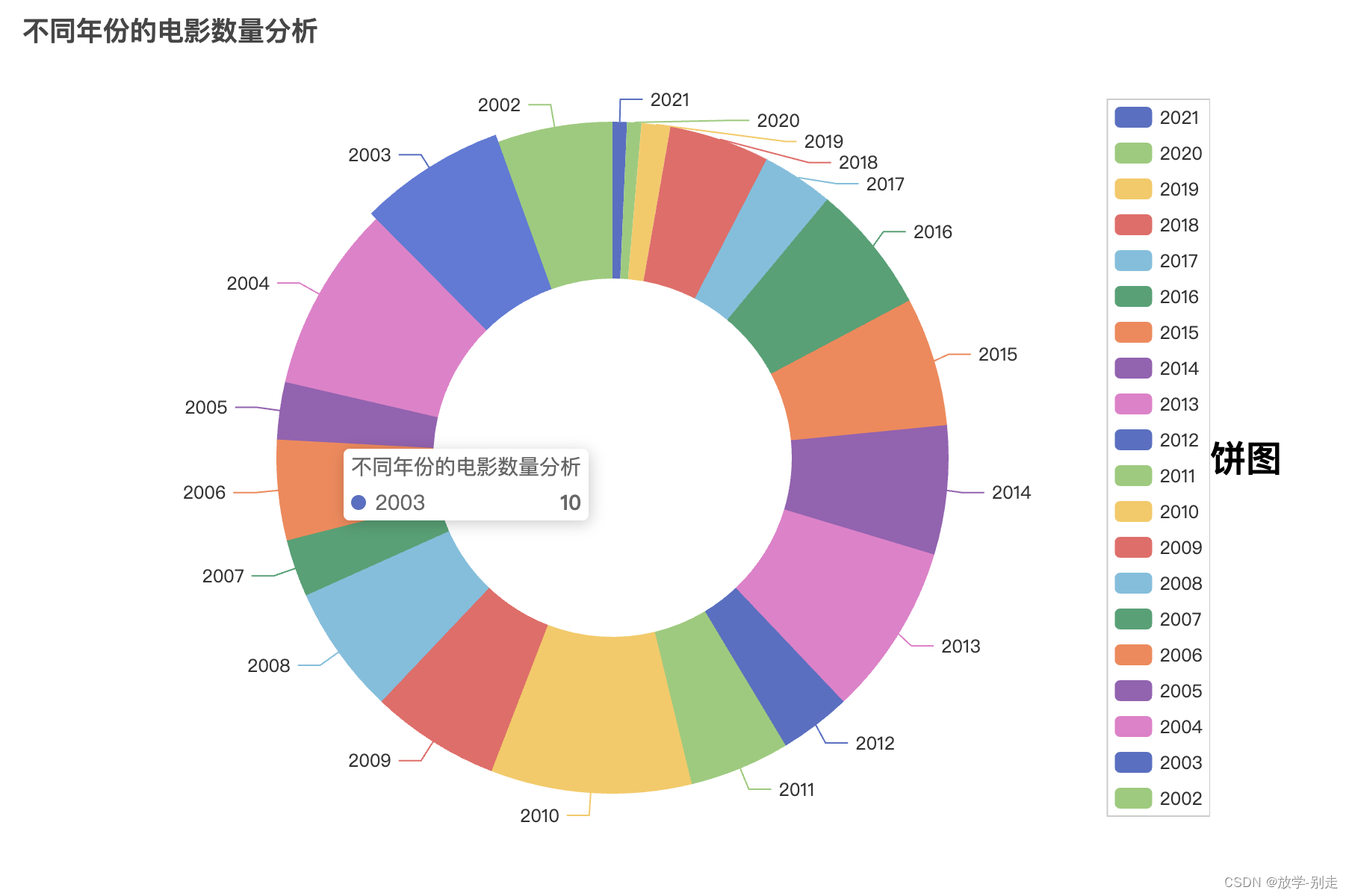

elif button_id == 5:

x,y=movie_year_count()

# 创建饼图

pie = (

Pie(init_opts=opts.InitOpts(width="800px", height="600px"))

.add(

series_name="不同年份的电影数量分析",

data_pair=list(zip(x, y)),

radius=["40%", "75%"], # 设置内外半径,实现空心效果

label_opts=opts.LabelOpts(is_show=True, position="inside"),

)

.set_global_opts(title_opts=opts.TitleOpts(title="不同年份的电影数量分析"),

legend_opts=opts.LegendOpts(orient="vertical", pos_right="right", pos_top="center"),

)

.set_series_opts( # 设置系列选项,调整 is_show 阈值

label_opts=opts.LabelOpts(is_show=True)

)

)

chart_html = pie.render_embed()

button_name = "饼图"

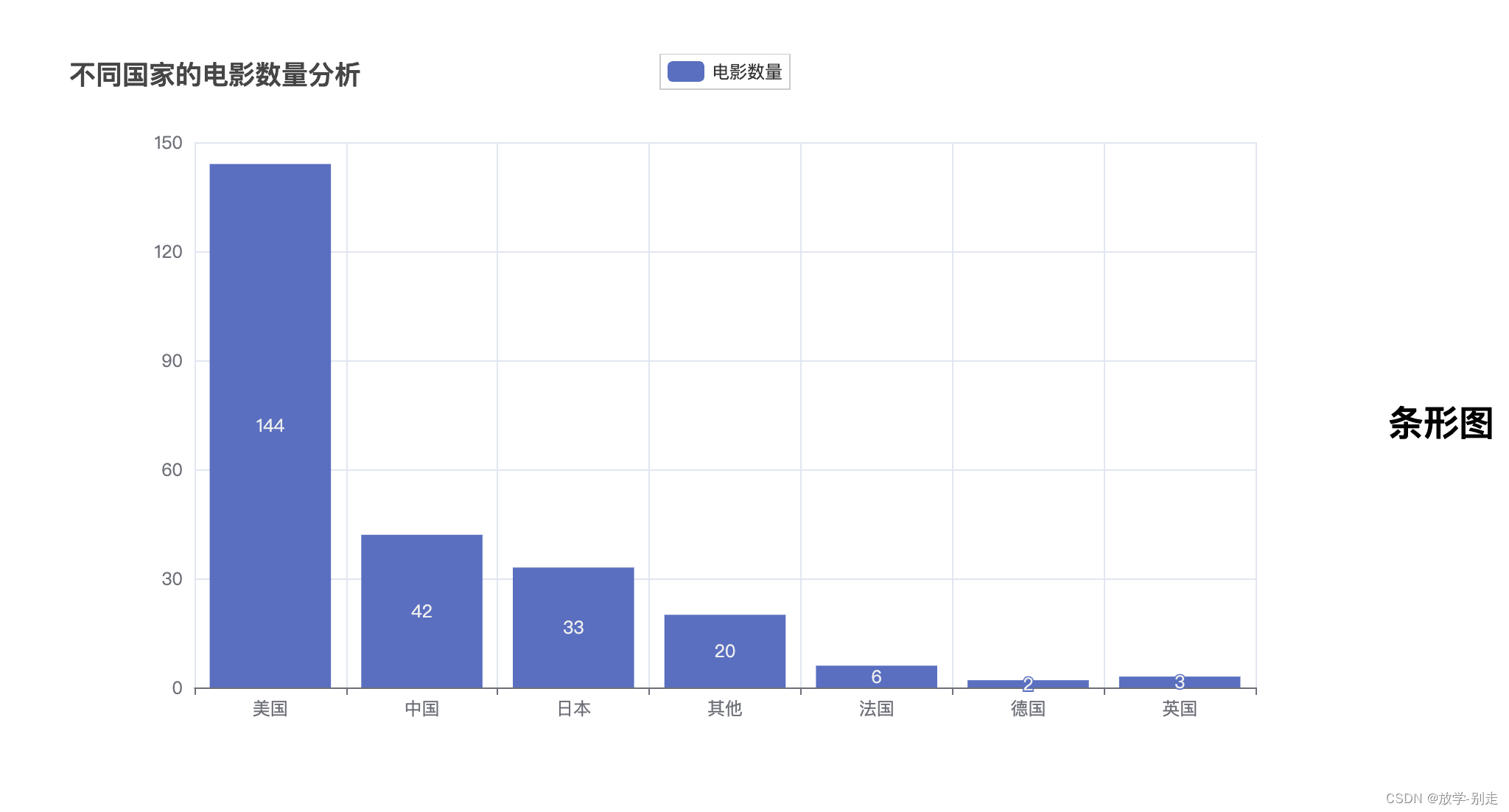

elif button_id == 6:

x, y = movie_count_count()

bar_chart = (

Bar()

.add_xaxis(xaxis_data=x)

.add_yaxis(series_name="电影数量", y_axis=y)

.set_global_opts(title_opts=opts.TitleOpts(title="不同国家的电影数量分析"))

)

chart_html = bar_chart.render_embed()

button_name = "条形图"

elif button_id == 0:

return redirect('movie_recommendation')

return render(request, 'data_analysis.html', {'chart_html': chart_html, 'button_name': button_name})

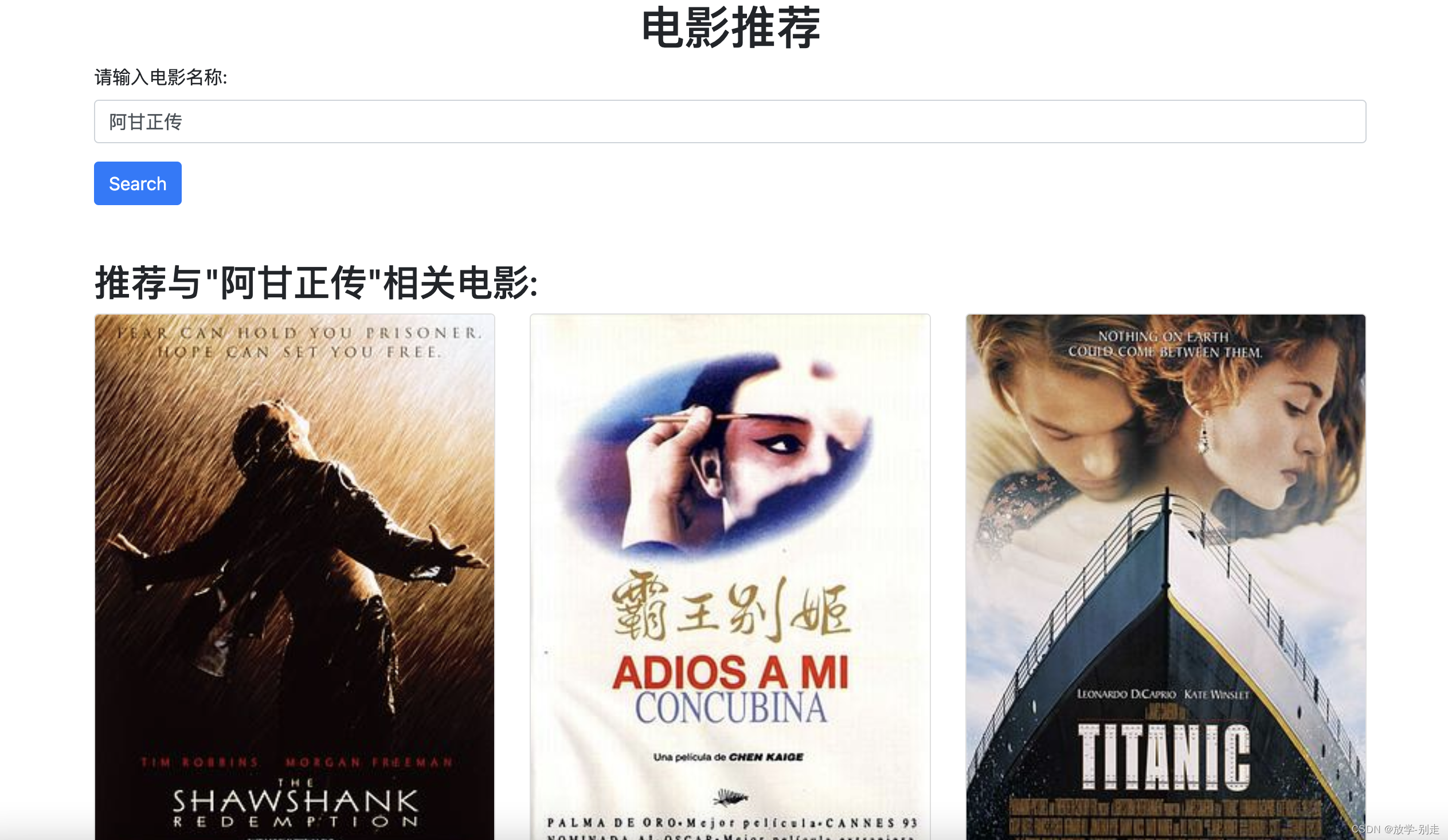

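4. Movie recommendation

Finally, we recommend movies with a collaborative filtering approach. Collaborative filtering is a widely used family of recommendation algorithms that predicts which items a user is likely to be interested in from user behavior data such as ratings and clicks.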
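To make the idea concrete, here is a minimal sketch of item-based collaborative filtering in plain Python. It is an illustration under stated assumptions, not the implementation used in this project: the `ratings` dictionary is made-up data, and `item_vectors`, `cosine`, and `predict` are hypothetical helper names. A user's score for an unseen movie is estimated as the similarity-weighted average of that user's ratings for other movies.

```python
from math import sqrt

# Made-up user -> {movie: rating} data for illustration
ratings = {
    "alice": {"A": 5, "B": 4, "C": 1},
    "bob": {"A": 4, "B": 5},
    "carol": {"B": 2, "C": 5},
    "dave": {"A": 5, "C": 2},
}


def item_vectors(ratings):
    """Invert the data into movie -> {user: rating}."""
    items = {}
    for user, user_ratings in ratings.items():
        for movie, score in user_ratings.items():
            items.setdefault(movie, {})[user] = score
    return items


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    common = set(u) & set(v)
    if not common:
        return 0.0
    dot = sum(u[k] * v[k] for k in common)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v)


def predict(ratings, user, movie):
    """Similarity-weighted average of the user's ratings for other movies."""
    items = item_vectors(ratings)
    target = items.get(movie, {})
    weighted_sum = 0.0
    weight_total = 0.0
    for other, score in ratings[user].items():
        if other == movie:
            continue
        sim = cosine(target, items[other])
        weighted_sum += sim * score
        weight_total += abs(sim)
    return weighted_sum / weight_total if weight_total else None


predicted = predict(ratings, "bob", "C")
print(predicted)
```

The key point is that the similarity between two movies comes only from how users rated them, not from the movies' own attributes.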

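Here we implement the recommender with the sklearn library. Because the scraped dataset contains no per-user ratings, the code measures the similarity between movies from their own features: TF-IDF vectors of the text fields plus the standardized rating and review count. Strictly speaking, this is an item-to-item, content-based similarity step rather than collaborative filtering in the narrow sense.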

python

import mysql.connector
import numpy as np
import pandas as pd
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity
from sklearn.preprocessing import StandardScaler

# Database connection parameters
db_config = {
    'user': 'root',
    'password': '12345678',
    'host': '127.0.0.1',
    'database': 'mydb'
}

# Connect to the database
conn = mysql.connector.connect(**db_config)
cursor = conn.cursor()

# Load the movie data
query = "SELECT id, foreign_name, chinese_name, rating, review_count, summary, related_info FROM douban_movies"
movies_df = pd.read_sql(query, conn)

# Text features: foreign title, summary, and related info.
# Each column gets its own vectorizer so the fitted vocabularies stay separate.
foreign_name_tfidf = TfidfVectorizer(stop_words='english').fit_transform(movies_df['foreign_name'].fillna(''))
summary_tfidf = TfidfVectorizer(stop_words='english').fit_transform(movies_df['summary'].fillna(''))
related_info_tfidf = TfidfVectorizer(stop_words='english').fit_transform(movies_df['related_info'].fillna(''))

# Numeric features: rating and review count, standardized
rating_scaled = StandardScaler().fit_transform(movies_df[['rating']].fillna(0))
review_count_scaled = StandardScaler().fit_transform(movies_df[['review_count']].fillna(0))

# Combine all features into one matrix
features = np.hstack([
    foreign_name_tfidf.toarray(),
    summary_tfidf.toarray(),
    related_info_tfidf.toarray(),
    rating_scaled,
    review_count_scaled
])

# Cosine similarity between every pair of movies
cosine_sim = cosine_similarity(features)

# Wrap the similarity matrix in a DataFrame indexed by movie id
cosine_sim_df = pd.DataFrame(cosine_sim, index=movies_df['id'], columns=movies_df['id'])

# Collect the 5 most similar movies for each movie
similarities = []
for movie_id in cosine_sim_df.index:
    # Drop the movie itself explicitly instead of assuming it sorts first
    top_similar = cosine_sim_df.loc[movie_id].drop(movie_id).nlargest(5)
    for similar_movie_id, similarity in top_similar.items():
        similarities.append((int(movie_id), int(similar_movie_id), float(similarity)))

print(similarities)

# Insert the results into the movie_similarities table (assumed to exist already)
insert_query = """
INSERT INTO movie_similarities (movie_id, similar_movie_id, similarity)
VALUES (%s, %s, %s)
"""
cursor.executemany(insert_query, similarities)
conn.commit()

# Close the connection
cursor.close()
conn.close()
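Once the similarity rows are stored, producing a recommendation is a lookup. The sketch below shows one way to do it in memory, assuming rows shaped like the `(movie_id, similar_movie_id, similarity)` tuples built above. `build_index` and `recommend` are hypothetical helpers, and the sample rows are made up.

```python
from collections import defaultdict


def build_index(similarities):
    """Group (movie_id, similar_movie_id, similarity) rows by movie_id."""
    index = defaultdict(list)
    for movie_id, similar_id, score in similarities:
        index[movie_id].append((similar_id, score))
    for neighbours in index.values():
        neighbours.sort(key=lambda pair: pair[1], reverse=True)
    return index


def recommend(index, liked_ids, top_n=5):
    """Rank unseen movies by their summed similarity to the liked movies."""
    liked = set(liked_ids)
    scores = defaultdict(float)
    for movie_id in liked:
        for similar_id, score in index.get(movie_id, []):
            if similar_id not in liked:
                scores[similar_id] += score
    # Highest score first; ties broken by movie id for a stable order
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [movie_id for movie_id, _ in ranked[:top_n]]


# Made-up similarity rows for illustration
sample_rows = [(1, 2, 0.9), (1, 3, 0.5), (2, 1, 0.9), (2, 3, 0.8)]
sample_index = build_index(sample_rows)
print(recommend(sample_index, [1]))
print(recommend(sample_index, [1, 2]))
```

In the Django application the same lookup could be done with a query on `movie_similarities`; the in-memory version is used here only to keep the example self-contained.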

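Summary

This article showed how to scrape data with Python, load it into Hive for analysis, visualize the results with ECharts, and recommend movies with a collaborative filtering approach. Together these steps form a complete data pipeline, from collection and analysis through visualization to recommendation.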
