Contents

- 1. ResNet block
- 2. ResNet18 network architecture
- 3. Complete code
  - 3.1 Network code
  - 3.2 Training code

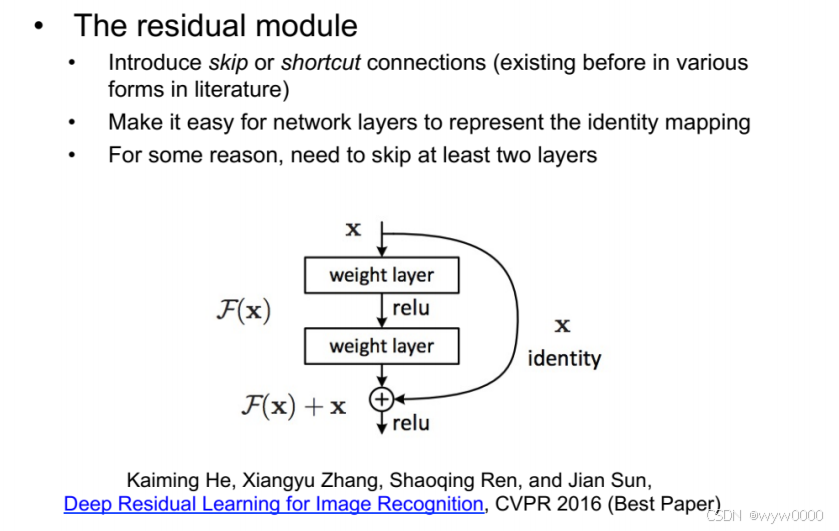

1. ResNet block

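A ResNet block consists of two 3×3 convolution layers, each followed by batch normalization, plus a shortcut connection, as shown in the figure.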
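Written out from the block's `forward` method, the computation is the following. Here BN is batch normalization and $\mathcal{S}$ is the shortcut `self.extra`: the identity when the input and output shapes match, otherwise a 1×1 convolution followed by BN.

$$
y = \mathrm{ReLU}\Big(\mathcal{S}(x) + \mathrm{BN}_2\big(\mathrm{conv}_2\big(\mathrm{ReLU}(\mathrm{BN}_1(\mathrm{conv}_1(x)))\big)\big)\Big)
$$

Because the block only has to learn the residual that is added to $\mathcal{S}(x)$, the sum requires both terms to have exactly the same shape.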

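Code:

```python
from torch import nn
from torch.nn import functional as F


class ResBlk(nn.Module):
    expansion = 1  # output channels = ch_out * expansion (1 for this basic block)

    def __init__(self, ch_in, ch_out, stride):
        super(ResBlk, self).__init__()
        # Main path: two 3x3 convolutions, each followed by BatchNorm
        self.conv1 = nn.Conv2d(ch_in, ch_out, kernel_size=3, stride=stride, padding=1)
        self.bn1 = nn.BatchNorm2d(ch_out)
        self.conv2 = nn.Conv2d(ch_out, ch_out, kernel_size=3, stride=1, padding=1)
        self.bn2 = nn.BatchNorm2d(ch_out)

        # Shortcut: identity by default; a 1x1 conv + BN whenever the
        # spatial size (stride) or the channel count changes
        self.extra = nn.Sequential()
        if stride != 1 or ch_in != ch_out:
            self.extra = nn.Sequential(
                nn.Conv2d(ch_in, ch_out, kernel_size=1, stride=stride),
                nn.BatchNorm2d(ch_out)
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        # Add the shortcut to the main path, then apply the final ReLU
        out = self.extra(x) + out
        out = F.relu(out)
        return out
```

2. ResNet18 network architecture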

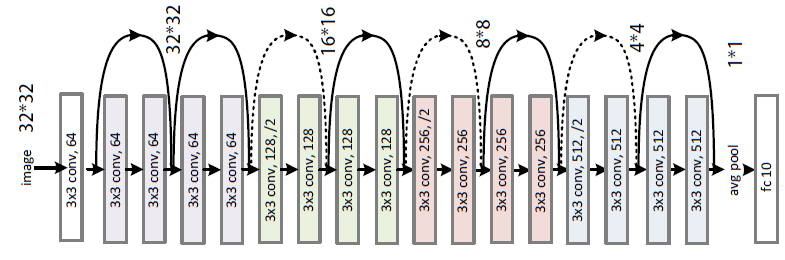

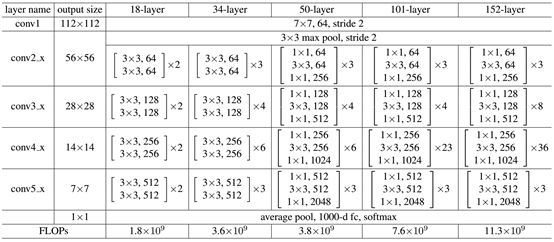

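As the figures above show, ResNet18 consists of one convolution layer, four residual layers, and one linear output layer. Each residual layer contains two ResNet blocks, and each block contains two convolution layers, which gives 1 + 4 × 2 × 2 + 1 = 18 weighted layers.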
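The same count can be expressed in terms of the per-layer block list that is passed to `ResNet` (ResNet18 uses `[2, 2, 2, 2]`). Below is a minimal sketch. The helper `count_weighted_layers` is hypothetical. The ResNet34 list `[3, 4, 6, 3]` is the standard basic-block configuration from the original ResNet paper, not something this post defines.

```python
def count_weighted_layers(num_blocks, convs_per_block=2):
    """Count the layers that give ResNet its name: the stem conv, the
    main-path convs in every residual block, and the final linear layer.
    By convention the 1x1 shortcut convolutions are not counted."""
    stem = 1
    head = 1
    return stem + convs_per_block * sum(num_blocks) + head


# ResNet18: four residual layers with two blocks each
print(count_weighted_layers([2, 2, 2, 2]))  # 18
# Same basic block, deeper configuration (ResNet34)
print(count_weighted_layers([3, 4, 6, 3]))  # 34
```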

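For CIFAR-10, the input images have shape [b, 3, 32, 32] and the output is a 10-class prediction. This implementation uses a 7×7 stride-2 stem convolution followed by max pooling, so the feature map entering the first residual layer is [b, 64, 8, 8].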
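The following sketch traces a 32×32 input through the network. It applies the standard convolution output-size formula, floor((n + 2p - k) / s) + 1, to each layer. The code is pure Python and needs no PyTorch. The helpers `conv_out` and `trace_resnet18` are hypothetical: they copy the kernel, stride, and padding settings from the `ResNet` and `ResBlk` code rather than calling it. The trace also checks that the 1×1 shortcut always produces the same spatial size as the main path.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a Conv2d / MaxPool2d (dilation 1, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_resnet18(size=32, num_blocks=(2, 2, 2, 2)):
    """Return (stage, channels, height/width) after each stage, mirroring
    the stem and the _make_layer logic of the ResNet class."""
    trace = []
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1 (stem)
    trace.append(("conv1", 64, size))
    size = conv_out(size, kernel=3, stride=2, padding=1)  # max_pool2d
    trace.append(("maxpool", 64, size))
    in_planes = 64
    stages = zip((64, 128, 256, 512), (1, 2, 2, 2))
    for i, (planes, stride) in enumerate(stages, start=1):
        # Only the first block of each layer uses the layer's stride
        for s in [stride] + [1] * (num_blocks[i - 1] - 1):
            main = conv_out(size, kernel=3, stride=s, padding=1)  # block conv1
            main = conv_out(main, kernel=3, stride=1, padding=1)  # block conv2
            if s != 1 or in_planes != planes:
                short = conv_out(size, kernel=1, stride=s, padding=0)  # 1x1 shortcut
            else:
                short = size  # identity shortcut
            assert main == short, "shortcut and main path must match in size"
            size, in_planes = main, planes
        trace.append((f"layer{i}", planes, size))
    return trace


for name, ch, hw in trace_resnet18():
    print(f"{name:8s} [b, {ch}, {hw}, {hw}]")
```

The trace ends with `layer4` at [b, 512, 1, 1]. For CIFAR-10 input, a fixed 4×4 average pool would therefore not fit the final feature map. Adaptive pooling to 1×1 works for any input size.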

Code:

```python
class ResNet(nn.Module):
    def __init__(self, block, num_blocks, num_classes=1000):
        super(ResNet, self).__init__()
        self.in_planes = 64

        # Stem: 7x7 convolution with stride 2
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(64)

        # Four residual layers; layers 2-4 halve the spatial size and double the channels
        self.layer1 = self._make_layer(block, 64, num_blocks[0], stride=1)
        self.layer2 = self._make_layer(block, 128, num_blocks[1], stride=2)
        self.layer3 = self._make_layer(block, 256, num_blocks[2], stride=2)
        self.layer4 = self._make_layer(block, 512, num_blocks[3], stride=2)

        # Fully connected output layer (block.expansion is defined on ResBlk)
        self.linear = nn.Linear(512 * block.expansion, num_classes)

    # Build one residual layer: only the first block uses `stride` (and may
    # downsample); the remaining blocks use stride 1
    def _make_layer(self, block, planes, num_blocks, stride):
        strides = [stride] + [1] * (num_blocks - 1)
        layers = []
        for stride in strides:
            layers.append(block(self.in_planes, planes, stride))
            self.in_planes = planes * block.expansion
        return nn.Sequential(*layers)

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = F.max_pool2d(out, kernel_size=3, stride=2, padding=1)
        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)
        # out = F.avg_pool2d(out, 4)  # assumes a 4x4 feature map
        # Adaptive pooling reduces any spatial size to 1x1
        out = F.adaptive_avg_pool2d(out, [1, 1])
        out = out.view(out.size(0), -1)  # flatten to [b, 512 * expansion]
        out = self.linear(out)
        return out
```

3. Complete code

3.1 Network code

```python
import torch
from torch import nn
from torch.nn import functional as F


class ResBlk(nn.Module):
    expansion = 1  # output channels = ch_out * expansion

    def __init__(self, ch_in, ch_out, stride):
        super(ResBlk, self).__init__()
        # Main path: two 3x3 convolutions, each followed by BatchNorm
        self.conv1 = nn.Conv2d(ch_in, ch_out, kernel_size=3, stride=stride, padding=1)
        self.bn1 = nn.BatchNorm2d(ch_out)
        self.conv2 = nn.Conv2d(ch_out, ch_out, kernel_size=3, stride=1, padding=1)
        self.bn2 = nn.BatchNorm2d(ch_out)

        # Shortcut: identity, or 1x1 conv + BN when the stride or channels change
        self.extra = nn.Sequential()
        if stride != 1 or ch_in != ch_out:
            self.extra = nn.Sequential(
                nn.Conv2d(ch_in, ch_out, kernel_size=1, stride=stride),
                nn.BatchNorm2d(ch_out)
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        out = self.extra(x) + out
        out = F.relu(out)
        return out


class ResNet(nn.Module):
    def __init__(self, block, num_blocks, num_classes=1000):
        super(ResNet, self).__init__()
        self.in_planes = 64

        # Stem: 7x7 convolution with stride 2
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = nn.BatchNorm2d(64)

        # Four residual layers
        self.layer1 = self._make_layer(block, 64, num_blocks[0], stride=1)
        self.layer2 = self._make_layer(block, 128, num_blocks[1], stride=2)
        self.layer3 = self._make_layer(block, 256, num_blocks[2], stride=2)
        self.layer4 = self._make_layer(block, 512, num_blocks[3], stride=2)

        # Fully connected output layer
        self.linear = nn.Linear(512 * block.expansion, num_classes)

    # Build one residual layer; only its first block uses `stride`
    def _make_layer(self, block, planes, num_blocks, stride):
        strides = [stride] + [1] * (num_blocks - 1)
        layers = []
        for stride in strides:
            layers.append(block(self.in_planes, planes, stride))
            self.in_planes = planes * block.expansion
        return nn.Sequential(*layers)

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = F.max_pool2d(out, kernel_size=3, stride=2, padding=1)
        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)
        # out = F.avg_pool2d(out, 4)  # assumes a 4x4 feature map
        out = F.adaptive_avg_pool2d(out, [1, 1])
        out = out.view(out.size(0), -1)
        out = self.linear(out)
        return out


def ResNet18():
    # Two blocks per residual layer, 10 output classes for CIFAR-10
    return ResNet(ResBlk, [2, 2, 2, 2], 10)


if __name__ == '__main__':
    model = ResNet18()
    print(model)
    # Quick shape check with a dummy CIFAR-10-sized batch
    x = torch.randn(2, 3, 32, 32)
    print(model(x).shape)  # torch.Size([2, 10])
```

3.2 Training code

```python
import sys

import torch
from torch import nn, optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

sys.path.append('.')
# from Lenet5 import Lenet5
# Network code from section 3.1, saved as resnet.py in the current directory
from resnet import ResNet18


def main():
    batch_size = 128

    # CIFAR-10 images are already 32x32; the mean/std below are ImageNet statistics
    transform = transforms.Compose([
        transforms.Resize((32, 32)),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406],
                             std=[0.229, 0.224, 0.225])
    ])

    cifar_train = datasets.CIFAR10('cifa', train=True, transform=transform, download=True)
    cifar_train = DataLoader(cifar_train, batch_size=batch_size, shuffle=True)

    cifar_test = datasets.CIFAR10('cifa', train=False, transform=transform, download=True)
    # The test set does not need shuffling
    cifar_test = DataLoader(cifar_test, batch_size=batch_size, shuffle=False)

    # Fall back to the CPU when no GPU is available
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    # model = Lenet5().to(device)
    model = ResNet18().to(device)
    criterion = nn.CrossEntropyLoss().to(device)
    optimizer = optim.Adam(model.parameters(), lr=1e-3)

    for epoch in range(1000):
        # Train
        model.train()
        for batch, (x, label) in enumerate(cifar_train):
            x, label = x.to(device), label.to(device)
            logits = model(x)
            loss = criterion(logits, label)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

        # Test
        model.eval()
        with torch.no_grad():
            total_correct = 0
            total_num = 0
            for x, label in cifar_test:
                x, label = x.to(device), label.to(device)
                logits = model(x)
                pred = logits.argmax(dim=1)
                correct = torch.eq(pred, label).float().sum().item()
                total_correct += correct
                total_num += x.size(0)
            acc = total_correct / total_num
            print(epoch, 'test acc:', acc)


if __name__ == '__main__':
    main()
```
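A note on the evaluation loop: by default, `DataLoader` keeps the final partial batch (`drop_last=False`), so the last batch contains fewer than 128 images. CIFAR-10 has 50,000 training images and 10,000 test images. The small sketch below computes the resulting batch counts; `batch_sizes` is a hypothetical helper, not part of PyTorch. The smaller last batch is why the test loop accumulates `x.size(0)` instead of multiplying the number of batches by 128.

```python
import math


def batch_sizes(num_samples, batch_size):
    """Number of batches and size of the last batch when drop_last=False."""
    num_batches = math.ceil(num_samples / batch_size)
    last = num_samples - (num_batches - 1) * batch_size
    return num_batches, last


print(batch_sizes(50000, 128))  # (391, 80)  training set
print(batch_sizes(10000, 128))  # (79, 16)   test set
```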