Table of contents

- Preface
- I. Environment
- II. Deploying Prometheus
- III. Adding custom scrape targets to kube-prometheus
  - 1. Prepare the YAML file
  - 2. Create a new Secret and apply it to Prometheus
  - 3. Apply the YAML file to the cluster
  - 4. Restart the prometheus-k8s pods
  - 5. Open the Prometheus UI
- IV. Consul-based service discovery in practice on k8s
  - 1. Example nginx.yaml
  - 2. Create the nginx pod
  - 3. Check that the job_name appears in Prometheus Targets
- V. Bringing up the alerting pipeline
  - 1. Edit alertmanager-secret.yaml
  - 2. Start the alertWebhook pod
  - 3. Test whether alerts are received
- Summary

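Preface

Kubernetes (K8S) has become the de facto standard for container orchestration, and monitoring is central to keeping such systems stable and observable. Prometheus, with its time-series collection and its flexible query language (PromQL), is the foundation of most cloud-native monitoring stacks. In a K8S environment, however, services scale up and down and instances move frequently, and statically configured scrape targets cannot keep up. Discovering and managing monitoring targets automatically is therefore key to operational efficiency.

This is where service discovery comes in. Consul, a mature service mesh and distributed service discovery tool, tracks the registration and health status of services in the K8S cluster in real time and integrates with Prometheus. The combination reduces configuration work and gives the monitoring system the "self-healing" and "adaptive" qualities expected of cloud-native systems.

This article is hands-on. It walks through the full integration of Prometheus and Consul on K8S and connects a self-developed alerting tool, alertwebhook. It covers:

1. Environment and architecture: building a K8S cluster from scratch and deploying Prometheus and Consul in a standardized way;

2. Dynamic service discovery: registering service instances with Consul automatically so that Prometheus discovers its scrape targets dynamically;

3. Configuration tuning: relabel rules, scrape settings, and alerting rules;

4. Troubleshooting: how to approach failures such as broken service discovery or failing scrapes;

5. Alert channels: notifications through DingTalk, email, and WeCom (企业微信).

The overall architecture is shown in the diagram below.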

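I. Environment

A k8s 1.28 cluster with a minimal configuration

Pods that register themselves with Consul automatically (see the article linked at the top for details)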

II. Deploying Prometheus

1. Download

The commands are as follows (example):

shell

[root@k8s-master ~]# git clone https://github.com/prometheus-operator/kube-prometheus.git
[root@k8s-master ~]# cd kube-prometheus

2. Deploy

shell

[root@k8s-master ~]# kubectl apply --server-side -f manifests/setup
[root@k8s-master ~]# until kubectl get servicemonitors --all-namespaces ; do date; sleep 1; echo ""; done
[root@k8s-master ~]# kubectl apply -f manifests/

3. Verify

After a successful deployment the result looks like the following (if the deployment fails, try manually switching the image addresses to a registry you can reach).
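One way to check the result without reading the pod list by eye is to parse the output of `kubectl get pods -n monitoring -o json`. The sketch below assumes that JSON is already available as a Python dict; the helper name `not_ready_pods` and the sample data are illustrative and not part of kube-prometheus.

```python
import json

def not_ready_pods(pod_list: dict) -> list:
    """Return names of pods whose Ready condition is not "True"."""
    pending = []
    for pod in pod_list.get("items", []):
        conditions = pod.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        if not ready:
            pending.append(pod["metadata"]["name"])
    return pending

# Hypothetical, trimmed-down output of: kubectl get pods -n monitoring -o json
sample = json.loads("""
{"items": [
  {"metadata": {"name": "prometheus-k8s-0"},
   "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
  {"metadata": {"name": "grafana-example"},
   "status": {"conditions": [{"type": "Ready", "status": "False"}]}}
]}
""")
print(not_ready_pods(sample))
```

An empty list means every pod reports Ready. A pod that is stuck pulling its image shows up here by name, which is the case where switching the image address helps.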

III. Adding custom scrape targets to kube-prometheus

1. Prepare the YAML file

The configuration is as follows (example):

yaml

[root@k8s-master prometheus]# cat prometheus-additional.yaml
- job_name: 'consul-k8s' # custom job name
  scrape_interval: 10s
  consul_sd_configs:
    - server: 'consul-server.middleware.svc.cluster.local:8500' # Consul address and the port exposed by its Service
      token: "9bfbe81f-2648-4673-af14-d13e0a170050" # Consul ACL token
  relabel_configs:
    # 1. Keep only services whose tags contain "container"
    - source_labels: [__meta_consul_tags]
      regex: .*container.*
      action: keep
    # 2. Set the scrape address to the service's ip:port
    - source_labels: [__meta_consul_service_address]
      target_label: __address__
      replacement: "$1:9113" # 9113 is the nginx-exporter port; change it if yours differs
    # 3. Other label mappings (replace the Consul metadata names with those of your own environment;
    #    if you use the Consul registration tool from the article linked at the top, no change is needed)
    # See the Prometheus monitoring labels section of that article for background
    - source_labels: [__meta_consul_service_address]
      target_label: ip
    - source_labels: [__meta_consul_service_metadata_podPort]
      target_label: port
    - source_labels: [__meta_consul_service_metadata_project]
      target_label: project
    - source_labels: [__meta_consul_service_metadata_monitorType]
      target_label: monitorType
    - source_labels: [__meta_consul_service_metadata_hostNode]
      target_label: hostNode

2. Create a new Secret and apply it to Prometheus

shell

# Create the Secret manifest
[root@k8s-master prometheus]# kubectl create secret generic additional-scrape-configs -n monitoring --from-file=prometheus-additional.yaml --dry-run=client -o yaml > ./additional-scrape-configs.yaml

# Apply it to the cluster
[root@k8s-master prometheus]# kubectl apply -f additional-scrape-configs.yaml -n monitoring
[root@k8s-master prometheus]# kubectl get secrets -n monitoring
NAME                        TYPE     DATA   AGE
additional-scrape-configs   Opaque   1      3h18m

3. Apply the YAML file to the cluster

Add the following configuration to the file (it belongs under spec of the Prometheus resource):

shell

[root@k8s-master prometheus]# vim manifests/prometheus-prometheus.yaml
......
  additionalScrapeConfigs:
    name: additional-scrape-configs # must match the name of the Secret created above
    key: prometheus-additional.yaml
.......

# Apply the change to the cluster
[root@k8s-master prometheus]# kubectl apply -f manifests/prometheus-prometheus.yaml -n monitoring
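The `key` under additionalScrapeConfigs has to be the file name because `kubectl create secret generic --from-file=<file>` stores the file content, base64-encoded, under a data key equal to the file's base name. A minimal sketch of the equivalent manifest built by hand; the function name and the shortened scrape config are made up for illustration:

```python
import base64
import os

def secret_manifest(name: str, namespace: str, path: str, content: bytes) -> dict:
    """Build the Secret that --from-file=<path> would describe (sketch)."""
    key = os.path.basename(path)  # the data key defaults to the file's base name
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {key: base64.b64encode(content).decode("ascii")},
    }

# Shortened stand-in for the real prometheus-additional.yaml content.
scrape_config = b"- job_name: 'consul-k8s'\n  scrape_interval: 10s\n"
manifest = secret_manifest(
    "additional-scrape-configs", "monitoring",
    "./prometheus-additional.yaml", scrape_config,
)
# The only data key is the one additionalScrapeConfigs.key must name.
print(list(manifest["data"]))
```

If the file is renamed before the Secret is regenerated, the `key` field in the Prometheus resource has to change with it, otherwise the operator cannot find the scrape config.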

4. Restart the prometheus-k8s pods

shell

[root@k8s-master prometheus]# kubectl rollout restart -n monitoring statefulset prometheus-k8s

5. Open the Prometheus UI

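Check the target list in Prometheus, or go to Status --> Configuration in the Prometheus UI and search for the job_name defined above (consul-k8s) to confirm the configuration was loaded.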
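To make the relabel_configs from step 1 concrete, the sketch below replays a simplified subset of Prometheus relabeling (only `keep` and `replace`, with fully anchored regexes) on one discovered target. It is not Prometheus code; `apply_relabel` and the sample label values are assumptions for illustration. Prometheus wraps the Consul tag list in separators, which is why the sample tag string starts and ends with a comma.

```python
import re
from typing import Optional

def apply_relabel(labels: dict, rules: list) -> Optional[dict]:
    """Apply a simplified subset of Prometheus relabeling (keep/replace only).

    Returns the new label set, or None when a keep rule drops the target.
    """
    labels = dict(labels)
    for rule in rules:
        value = ";".join(labels.get(name, "") for name in rule["source_labels"])
        match = re.fullmatch(rule.get("regex", "(.*)"), value, flags=re.DOTALL)
        action = rule.get("action", "replace")
        if action == "keep":
            if match is None:
                return None
        elif action == "replace" and match is not None:
            # Translate Prometheus-style $1 into Python's \g<1> before expanding.
            template = re.sub(r"\$\{?(\w+)\}?", r"\\g<\1>", rule.get("replacement", "$1"))
            labels[rule["target_label"]] = match.expand(template)
    return labels

rules = [
    {"source_labels": ["__meta_consul_tags"], "regex": ".*container.*", "action": "keep"},
    {"source_labels": ["__meta_consul_service_address"],
     "target_label": "__address__", "replacement": "$1:9113"},
    {"source_labels": ["__meta_consul_service_address"], "target_label": "ip"},
    {"source_labels": ["__meta_consul_service_metadata_project"], "target_label": "project"},
]

# Hypothetical labels for one discovered service; the values are made up.
discovered = {
    "__address__": "10.244.1.23:80",
    "__meta_consul_tags": ",container,k8s,",
    "__meta_consul_service_address": "10.244.1.23",
    "__meta_consul_service_metadata_project": "demo",
}
result = apply_relabel(discovered, rules)
print(result["__address__"], result["ip"], result["project"])
```

A service without a tag containing "container" returns `None` here, which corresponds to a target that never appears in the Targets list.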

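IV. Consul-based service discovery in practice on k8s

Prepare an nginx.yaml that uses the Consul auto-registration image to register the pod with Consul; the Consul service discovery configured above then picks the pod up for monitoring.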
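The registration image is the author's own tool and its internals are not shown in this article. Purely as an illustration, registering a service through Consul's agent HTTP API (`PUT /v1/agent/service/register`) takes a JSON body like the one built below. The `Meta` keys mirror the `__meta_consul_service_metadata_*` labels used in the relabel rules; the function name, the service ID scheme, and all sample values are assumptions, not what `consulctl` actually sends.

```python
import json

def registration_payload(pod_name, pod_ip, port, node_name, project, monitor_type):
    """Build a body for Consul's PUT /v1/agent/service/register (sketch)."""
    return {
        "ID": f"{pod_name}-{port}",    # assumed ID scheme: one entry per pod and port
        "Name": pod_name,
        "Address": pod_ip,
        "Port": port,
        "Tags": ["container", "k8s"],  # a tag containing "container" passes the keep rule
        "Meta": {                      # Consul requires Meta values to be strings
            "podPort": str(port),
            "project": project,
            "monitorType": monitor_type,
            "hostNode": node_name,
        },
    }

# Made-up pod details for illustration.
payload = registration_payload(
    "nginx-7c9d", "10.244.1.23", 80, "k8s-node1", "demo", "container"
)
print(json.dumps(payload, indent=2))
# Sending it would be an HTTP PUT with the ACL token in the X-Consul-Token header.
```

Whatever tool performs the registration, Prometheus only sees the result: tags arrive in `__meta_consul_tags` and each `Meta` entry arrives as `__meta_consul_service_metadata_<key>`.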

1. Example nginx.yaml

With nginx's built-in stub_status module enabled and nginx-exporter exposing port 9113, Prometheus can scrape nginx metrics from http://<pod IP>:9113/metrics.

yaml

[root@k8s-master consul]# cat nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    run: nginx
  name: nginx
  namespace: middleware
spec:
  replicas: 1
  selector:
    matchLabels:
      run: nginx
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
    type: RollingUpdate
  template:
    metadata:
      labels:
        run: nginx
    spec:
      tolerations:
        - key: "node-role.kubernetes.io/control-plane"
          operator: "Exists"
          effect: "NoSchedule"
      initContainers:
        - name: service-registrar
          image: harbor.jdicity.local/registry/pod_registry:v14
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: CONSUL_IP
              valueFrom:
                configMapKeyRef:
                  name: global-config
                  key: CONSUL_IP
            - name: ACL_TOKEN
              valueFrom:
                secretKeyRef:
                  name: acl-token
                  key: ACL_TOKEN
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          volumeMounts:
            - mountPath: /shared-bin # shared volume mounted into the initContainer
              name: shared-bin
          command: ["sh", "-c"]
          args:
            - |
              cp /usr/local/bin/consulctl /shared-bin/ &&
              /usr/local/bin/consulctl register \
                "$CONSUL_IP" \
                "$ACL_TOKEN" \
                "80" \
                "容器监控" \
                "k8s"
      containers:
        - image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/nginx:stable
          env:
            - name: CONSUL_IP # must be declared explicitly
              valueFrom:
                configMapKeyRef:
                  name: global-config
                  key: CONSUL_IP
            - name: ACL_TOKEN # must be declared explicitly
              valueFrom:
                secretKeyRef:
                  name: acl-token
                  key: ACL_TOKEN
            - name: CONSUL_NODE_NAME
              value: "consul-0"
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
          lifecycle:
            preStop:
              exec:
                command: ["sh", "-c", "/usr/local/bin/consulctl deregister $CONSUL_IP $ACL_TOKEN 80 $CONSUL_NODE_NAME"]
          imagePullPolicy: IfNotPresent
          name: nginx
          volumeMounts:
            - mountPath: /usr/local/bin/consulctl # mounted into a PATH directory of the nginx container
              name: shared-bin
              subPath: consulctl
            - name: nginx-config
              mountPath: /etc/nginx/nginx.conf
              subPath: nginx.conf
          livenessProbe:
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 3
            periodSeconds: 3
          ports:
            - containerPort: 80
        - name: nginx-exporter # container name
          image: swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io/nginx/nginx-prometheus-exporter:1.3.0
          args:
            - "--nginx.scrape-uri=http://localhost:80/stub_status" # uses the new flag format
          ports:
            - containerPort: 9113
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      volumes:
        - name: shared-bin # shared volume
          emptyDir: {}
        - name: nginx-config
          configMap:
            name: nginx-config

The ConfigMap file:

yaml

[root@k8s-master consul]# cat nginx-config.yaml
# nginx-config.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-config
  namespace: middleware
data:
  nginx.conf: |
    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log notice;
    pid /var/run/nginx.pid;
    events {
      worker_connections 1024;
    }
    http {
      include /etc/nginx/mime.types;
      default_type application/octet-stream;
      server {
        listen 80;
        location /stub_status {
          stub_status;
          allow 127.0.0.1;
          deny all;
        }
        location / {
          root /usr/share/nginx/html;
          index index.html index.htm;
        }
      }
    }

2. Create the nginx pod

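shell

[root@k8s-master consul]# kubectl apply -f nginx-config.yaml
[root@k8s-master consul]# kubectl apply -f nginx.yaml

Once the pod's init container has run, the pod is registered with Consul, and Prometheus picks it up for monitoring through the Consul service discovery configured earlier.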
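For context on what the exporter sidecar has to work with: the stub_status location serves a small plain-text page, and the exporter turns its counters into metrics on port 9113. The sketch below parses that page format as documented for ngx_http_stub_status_module. It is an illustration of the data involved, not the exporter's actual code, and the sample numbers are made up.

```python
import re

def parse_stub_status(text: str) -> dict:
    """Parse the plain-text page served by nginx's stub_status module."""
    active = re.search(r"Active connections:\s+(\d+)", text)
    totals = re.search(r"^\s*(\d+)\s+(\d+)\s+(\d+)\s*$", text, flags=re.MULTILINE)
    states = re.search(r"Reading:\s+(\d+)\s+Writing:\s+(\d+)\s+Waiting:\s+(\d+)", text)
    if not (active and totals and states):
        raise ValueError("unexpected stub_status format")
    accepts, handled, requests = (int(n) for n in totals.groups())
    reading, writing, waiting = (int(n) for n in states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts, "handled": handled, "requests": requests,
        "reading": reading, "writing": writing, "waiting": waiting,
    }

# Sample page in the stub_status text format (made-up numbers).
sample = (
    "Active connections: 291 \n"
    "server accepts handled requests\n"
    " 16630948 16630948 31070465 \n"
    "Reading: 6 Writing: 179 Waiting: 106 \n"
)
stats = parse_stub_status(sample)
print(stats["active"], stats["requests"])
```

Because the ConfigMap only allows 127.0.0.1 to read /stub_status, the exporter has to run in the same pod as nginx, which is why it is deployed as a sidecar container.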

3. Check that the job_name appears in Prometheus Targets

At this point Prometheus is successfully collecting the corresponding metrics.
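The same check can be scripted against the Prometheus HTTP API, which lists scrape targets at `/api/v1/targets`. A minimal sketch, assuming the consul-k8s job name from the scrape config; the helper names and the sample response are illustrative, and the sample is trimmed to the fields used here.

```python
import json
import urllib.request

def fetch_targets(base_url: str) -> dict:
    """GET <base_url>/api/v1/targets and return the decoded JSON body."""
    with urllib.request.urlopen(f"{base_url}/api/v1/targets", timeout=10) as resp:
        return json.load(resp)

def targets_for_job(body: dict, job: str) -> list:
    """Return (scrapeUrl, health) pairs for the active targets of one job."""
    return [
        (t["scrapeUrl"], t["health"])
        for t in body["data"]["activeTargets"]
        if t["labels"].get("job") == job
    ]

# Hypothetical, trimmed response; with a reachable server you would call
# fetch_targets("http://<prometheus-host>:9090") instead.
sample = {"status": "success", "data": {"activeTargets": [
    {"labels": {"job": "consul-k8s"},
     "scrapeUrl": "http://10.244.1.23:9113/metrics", "health": "up"},
    {"labels": {"job": "kubelet"},
     "scrapeUrl": "https://192.168.75.10:10250/metrics", "health": "up"},
]}}
print(targets_for_job(sample, "consul-k8s"))
```

An empty result for consul-k8s usually points at either the keep rule dropping the service or the Secret not being loaded, so those are the first two places to look.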

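V. Bringing up the alerting pipeline

alertwebhook source code: https://gitee.com/wd_ops/alertmanager-webhook_v2

The repository contains the source code, the image build files, the YAML for starting alertwebhook, and an architecture diagram of the alerting implementation, so they are not described in detail here.

1. Edit alertmanager-secret.yaml

alertWebHook is a self-written tool that sends alerts through three channels: email, DingTalk, and WeCom (企业微信).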

shell

[root@k8s-master manifests]# cat alertmanager-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: alertmanager-main
  namespace: monitoring
stringData:
  alertmanager.yaml: |-
    global:
      resolve_timeout: 5m
    route:
      group_by: ['alertname']
      group_interval: 10s
      group_wait: 10s
      receiver: 'webhook'
      repeat_interval: 5m
    receivers:
    - name: 'webhook'
      webhook_configs:
      - "url": "http://alertmanager-webhook.monitoring.svc.cluster.local:19093/api/v1/wechat"
      - "url": "http://alertmanager-webhook.monitoring.svc.cluster.local:19093/api/v1/email"
      - "url": "http://alertmanager-webhook.monitoring.svc.cluster.local:19093/api/v1/dingding"
type: Opaque
[root@k8s-master manifests]# kubectl apply -f alertmanager-secret.yaml

2. Start the alertWebhook pod

关于下方的邮件、钉钉、企业微信的key、secret等密钥自行百度官网文档获取,不过多描述

shell

[root@k8s-master YamlTest]# cat alertWebhook.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: alertmanager-webhook

namespace: monitoring # 建议根据实际需求选择命名空间

labels:

app: alertmanager-webhook

spec:

replicas: 1

selector:

matchLabels:

app: alertmanager-webhook

template:

metadata:

labels:

app: alertmanager-webhook

spec:

containers:

- name: webhook

image: harbor.jdicity.local/registry/alertmanager-webhook:v4.0

imagePullPolicy: IfNotPresent

ports:

- containerPort: 19093

protocol: TCP

resources:

requests:

memory: "256Mi"

cpu: "50m"

limits:

memory: "512Mi"

cpu: "100m"

volumeMounts:

- name: logs

mountPath: /export/alertmanagerWebhook/logs

- name: config

mountPath: /export/alertmanagerWebhook/settings.yaml

subPath: settings.yaml

volumes:

- name: logs

emptyDir: {}

- name: config

configMap:

name: alertmanager-webhook-config

---

# 配置文件通过ConfigMap管理(推荐)

apiVersion: v1

kind: ConfigMap

metadata:

name: alertmanager-webhook-config

namespace: monitoring

data:

settings.yaml: |

DingDing:

enabled: false

dingdingKey: "9zzzzc39"

signSecret: "SEzzzff859a7b"

chatId: "chat3zz737e49beb9"

atMobiles:

- "14778987659"

- "17657896784"

QyWeChat:

enabled: true

qywechatKey: "4249406zz305"

corpID: "ww4zzz7b"

corpSecret: "mM23zOozwEZM"

atMobiles:

- "14778987659"

Email:

enabled: true

smtp_host: "smtp.163.com"

smtp_port: 25

smtp_from: "rzzxd@163.com"

smtp_password: "UzzH"

smtp_to: "1zz030@qq.com"

Redis:

redisServer: "redis-master.redis.svc.cluster.local"

mode: "master-slave" # single/master-slave/cluster

redisPort: "6379" # 主节点端口

redisPassword: "G0LzzW"

requirePassword: true

# 主从模式配置

slaveNodes:

- "redis-slave.redis.svc.cluster.local:6379"

# 集群模式配置

clusterNodes:

- "192.168.75.128:7001"

- "192.168.75.128:7002"

- "192.168.75.128:7003"

System:

projectName: "测试项目"

prometheus_addr: "prometheus-k8s.monitoring.svc.cluster.local:9090"

host: 0.0.0.0

port: 19093

env: release

logFileDir: /export/alertmanagerWebhook/logs/

logFilePath: alertmanager-webhook.log

logMaxSize: 100

logMaxBackup: 5

logMaxDay: 30

---

# 新增 Service 配置

apiVersion: v1

kind: Service

metadata:

name: alertmanager-webhook

namespace: monitoring

labels:

app: alertmanager-webhook

spec:

type: ClusterIP # 默认类型,集群内访问

selector:

app: alertmanager-webhook # 必须与 Deployment 的 Pod 标签匹配

ports:

- name: http

port: 19093 # Service 暴露的端口

targetPort: 19093 # 对应容器的 containerPort

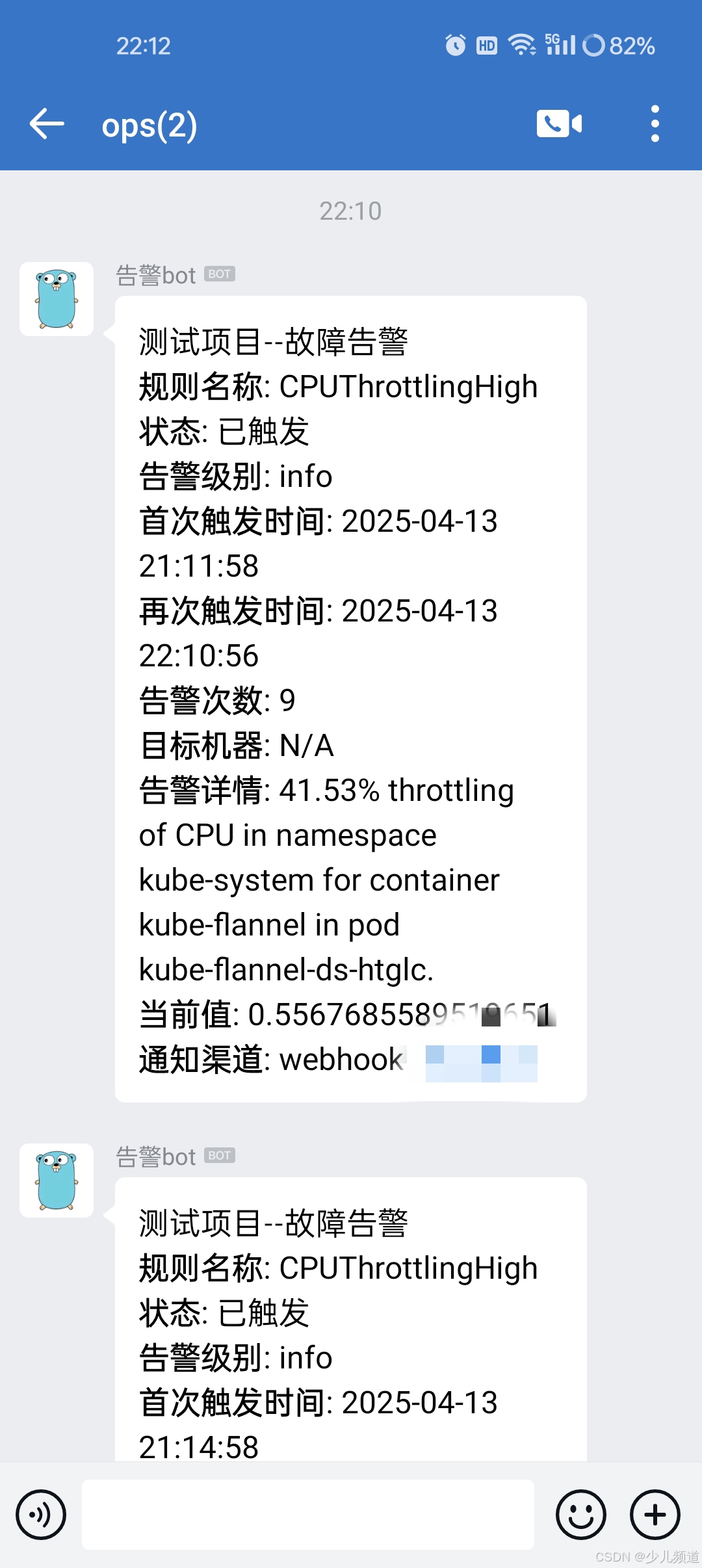

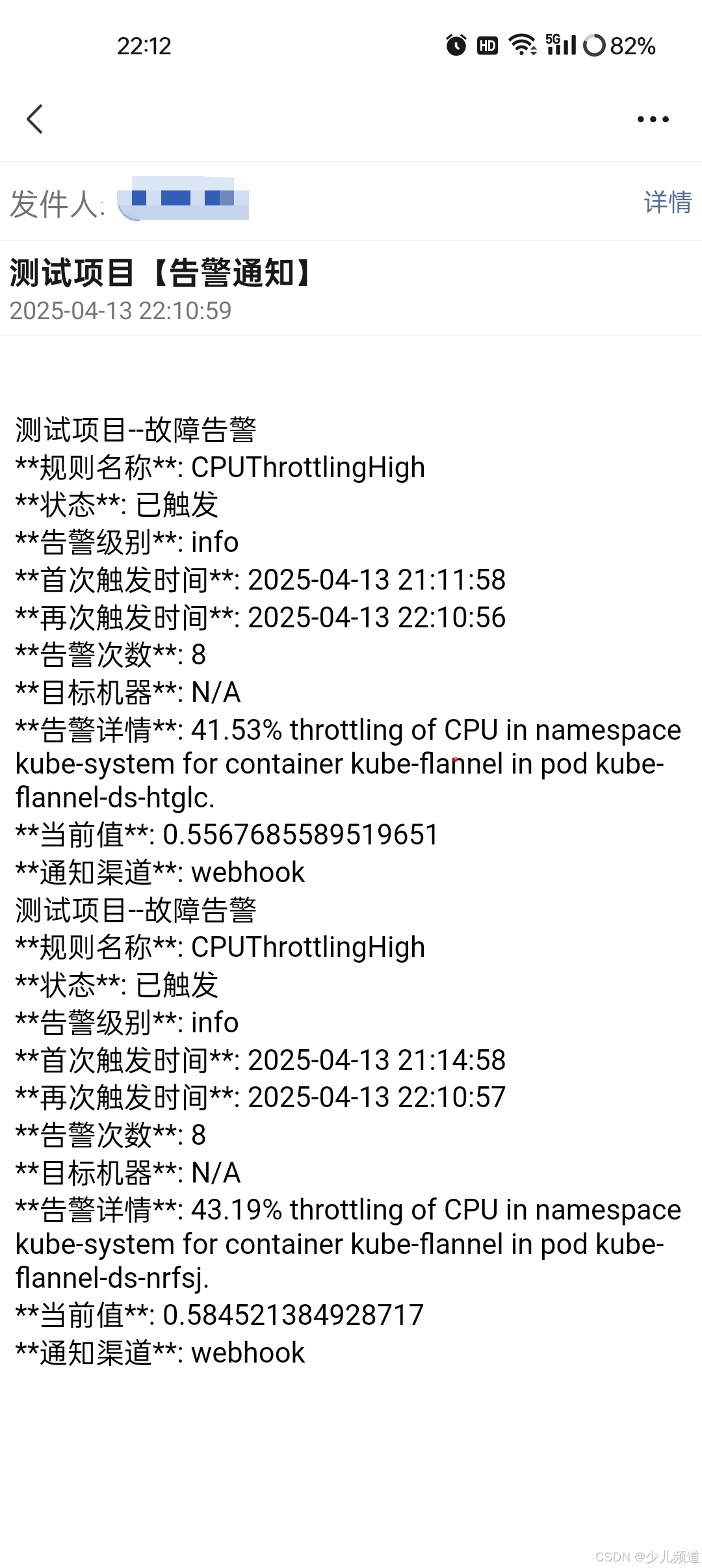

protocol: TCP3.测试能否收到告警

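The k8s cluster currently has firing alerts, so this is a chance to check whether the notifications arrive.

Start alertWebhook:

shell

[root@k8s-master YamlTest]# kubectl apply -f alertWebhook.yaml
deployment.apps/alertmanager-webhook created
configmap/alertmanager-webhook-config created
service/alertmanager-webhook created

Sample log output for the email channel

DingTalk

WeCom

Email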
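Each of the three webhook URLs receives the same JSON document from Alertmanager. How the author's tool processes it is in the linked repository; the sketch below only illustrates reading that payload. The field names follow Alertmanager's webhook format, while `summarize` and the sample values are made up for illustration.

```python
import json

def summarize(payload: dict) -> list:
    """Turn an Alertmanager webhook payload into one text line per alert."""
    lines = []
    for alert in payload.get("alerts", []):
        labels = alert.get("labels", {})
        annotations = alert.get("annotations", {})
        lines.append(
            f"[{alert.get('status', 'unknown')}] "
            f"{labels.get('alertname', '?')} "
            f"severity={labels.get('severity', 'none')}: "
            f"{annotations.get('summary', '')}"
        )
    return lines

# Hypothetical payload in the shape Alertmanager posts to webhook_configs URLs.
sample = json.loads("""
{"version": "4", "status": "firing", "receiver": "webhook",
 "groupLabels": {"alertname": "KubePodCrashLooping"},
 "alerts": [
   {"status": "firing",
    "labels": {"alertname": "KubePodCrashLooping", "severity": "warning"},
    "annotations": {"summary": "Pod is crash looping."},
    "startsAt": "2024-01-01T00:00:00Z"}
 ]}
""")
print(summarize(sample))
```

Since the route groups by alertname, one payload can carry several alerts that share that name, so a receiver should loop over `alerts` rather than read only the top-level `status`.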


Summary

With this, a complete open-source solution for monitoring registration and alerting is up and running.