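Continued from the previous post: https://blog.csdn.net/soso678/article/details/153775348?spm=1001.2014.3001.5501

The previous post covered how MinIO works. This post walks through the complete steps for manually deploying a MinIO cluster on Kubernetes.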

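MinIO uses two main ports. 9000 is the default API port; the console listens on 9001 in this deployment because the server is started with `--console-address ":9001"`.

| Port | Protocol | Purpose | Description |
|---|---|---|---|
| 9000 | HTTP | API port | The core port. Applications talk to the MinIO service through it to perform all S3 operations such as upload, download, and delete. |
| 9001 | HTTP | Console port (Web UI) | Serves the MinIO web console, used to manage users, policies, and buckets and to monitor the cluster. |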

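A regular MinIO cluster deployment needs at least four nodes, each with its own dedicated disk. For this deployment, a disk was added to each of the four nodes in the k8s cluster; these disks back the PVs that MinIO uses for storage.

First, format the disk on each of the four nodes, create the mount point, and add an `/etc/fstab` entry so the disk is mounted at `/mnt/data` (run `mount -a` or reboot for the entry to take effect):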

```shell
[root@master ~]# mkfs.ext4 /dev/nvme0n2
[root@node01 ~]# mkfs.ext4 /dev/nvme0n2
[root@node02 ~]# mkfs.ext4 /dev/nvme0n2
[root@node03 ~]# mkfs.ext4 /dev/nvme0n2

[root@master ~]# mkdir /mnt/data
[root@node01 ~]# mkdir /mnt/data
[root@node02 ~]# mkdir /mnt/data
[root@node03 ~]# mkdir /mnt/data

[root@master ~]# cat /etc/fstab
UUID=8bed5399-5358-44d3-a120-41c1265858e7 /mnt/data ext4 defaults 0 0
[root@node01 ~]# cat /etc/fstab
UUID=42f78c55-0a16-4562-a78b-92c073c38054 /mnt/data ext4 defaults 0 0
[root@node02 ~]# cat /etc/fstab
UUID=319ea715-c68f-4aef-8d54-546e0f30a54a /mnt/data ext4 defaults 0 0
[root@node03 ~]# cat /etc/fstab
UUID=f889447e-9c9a-4863-8b92-2611d99abd43 /mnt/data ext4 defaults 0 0
```

Create the local persistent volumes (Local PV)

Create one PV for each node:
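The four PersistentVolume objects in this step differ only in their name and in the node they are pinned to, so they can also be generated instead of written by hand. A minimal sketch, assuming the node names `master`, `node01`, `node02`, `node03` and the `/mnt/data` path used in this post; the function name `gen_minio_pvs` is hypothetical:

```shell
# Print one local PersistentVolume manifest per node name passed as an argument.
# gen_minio_pvs is an illustrative helper, not part of kubectl or MinIO.
gen_minio_pvs() {
  local i=0 node
  for node in "$@"; do
    # The heredoc body starts at column 0 so the YAML indentation stays exact.
    cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv-${i}
  labels:
    type: local
    app: minio
spec:
  capacity:
    storage: 18Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /mnt/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - ${node}
EOF
    i=$((i + 1))
  done
}

# Usage (produces the same kind of file as minio-pv.yml):
#   gen_minio_pvs master node01 node02 node03 > minio-pv.yml
```

Generating the manifests keeps the capacity, path, and labels identical across nodes, which is easy to get wrong when copying the block four times.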

```shell
[root@master ~]# mkdir minio
[root@master ~]# cd minio/
[root@master minio]# cat minio-pv.yml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv-0
  labels:
    type: local
    app: minio
spec:
  capacity:
    storage: 18Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /mnt/data   # actual path on the node
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - master   # replace with the actual node name
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv-1
  labels:
    type: local
    app: minio
spec:
  capacity:
    storage: 18Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /mnt/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node01
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv-2
  labels:
    type: local
    app: minio
spec:
  capacity:
    storage: 18Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /mnt/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node02
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv-3
  labels:
    type: local
    app: minio
spec:
  capacity:
    storage: 18Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /mnt/data
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - node03

[root@master minio]# kubectl apply -f minio-pv.yml
persistentvolume/minio-pv-0 created
persistentvolume/minio-pv-1 created
persistentvolume/minio-pv-2 created
persistentvolume/minio-pv-3 created

[root@master minio]# kubectl get pv
NAME         CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
minio-pv-0   18Gi       RWO            Retain           Available                          <unset>                          5m7s
minio-pv-1   18Gi       RWO            Retain           Available                          <unset>                          5m7s
minio-pv-2   18Gi       RWO            Retain           Available                          <unset>                          5m7s
minio-pv-3   18Gi       RWO            Retain           Available                          <unset>                          5m7s
```

Create the namespace

```shell
[root@master minio]# vim minio-namespace.yml
apiVersion: v1
kind: Namespace
metadata:
  name: minio
  labels:
    name: minio

[root@master minio]# kubectl apply -f minio-namespace.yml
namespace/minio created

[root@master minio]# kubectl get ns | grep minio
minio   Active   7s
```

Create the Secret

Set the administrator username and password:
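Values under a Secret's `data` field must be base64-encoded, and a trailing newline would become part of the credential, which is why this step uses `echo -n`. A small sketch that encodes a value and decodes it again as a sanity check; the helper names `b64enc` and `b64dec` are hypothetical, and `base64 -d` assumes GNU coreutils:

```shell
# Encode a value for a Secret's data field without a trailing newline.
b64enc() { printf '%s' "$1" | base64; }

# Decode a value again to confirm what the container will actually receive.
b64dec() { printf '%s' "$1" | base64 -d; }

b64enc "minioadmin"             # prints bWluaW9hZG1pbg==
b64dec "bWluaW9hZG1pbjEyMw=="   # prints minioadmin123
```

Alternatively, the Secret's `stringData` field accepts plain-text values and leaves the encoding to the API server.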

```shell
[root@master ~]# echo -n "minioadmin" | base64
bWluaW9hZG1pbg==
[root@master ~]# echo -n "minioadmin123" | base64
bWluaW9hZG1pbjEyMw==

[root@master minio]# cat minio-secret.yml
apiVersion: v1
kind: Secret
metadata:
  name: minio-secret
  namespace: minio
type: Opaque
data:
  rootuser: bWluaW9hZG1pbg==
  rootpassword: bWluaW9hZG1pbjEyMw==

[root@master minio]# kubectl apply -f minio-secret.yml
secret/minio-secret created

[root@master minio]# kubectl get secret -n minio
NAME           TYPE     DATA   AGE
minio-secret   Opaque   2      7s
```

Create the Headless Service

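The headless Service is used for access inside the cluster. The MinIO nodes address each other by DNS name, `minio-{0...3}.minio-service.minio.svc.cluster.local`, because every StatefulSet Pod gets a stable DNS name of the form `<pod-name>.<service-name>.<namespace>.svc.cluster.local`.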
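To make the `{0...3}` notation concrete, the loop below prints the four per-Pod DNS names it stands for. This is only an illustration: the three-dot form is MinIO's own expansion syntax and is passed to `minio server` unchanged, whereas bash brace expansion uses two dots (`{0..3}`). The names assume the StatefulSet `minio`, the Service `minio-service`, and the namespace `minio` from this post, plus the default cluster domain `cluster.local`; the function name `minio_peer_names` is hypothetical.

```shell
# Print the stable DNS name of each MinIO Pod behind the headless Service.
minio_peer_names() {
  local replicas="$1" i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "minio-${i}.minio-service.minio.svc.cluster.local"
    i=$((i + 1))
  done
}

minio_peer_names 4
```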

```shell
[root@master minio]# cat minio-headless-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: minio-service
  namespace: minio
  labels:
    app: minio
spec:
  clusterIP: None   # Headless Service
  ports:
    - port: 9000
      name: minio-api
      targetPort: 9000
    - port: 9001
      name: minio-console
      targetPort: 9001
  selector:
    app: minio
  publishNotReadyAddresses: true   # important: publish addresses of Pods that are not Ready yet

[root@master minio]# kubectl apply -f minio-headless-service.yaml
service/minio-service created

[root@master minio]# kubectl get svc -n minio
NAME            TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)             AGE
minio-service   ClusterIP   None         <none>        9000/TCP,9001/TCP   12s
```

Parameter explanation:

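`publishNotReadyAddresses: true` adds a Pod's IP to the Service's DNS records even while the Pod is still starting or not yet Ready.

Typical scenario: a MinIO cluster deployed as a StatefulSet needs its nodes to talk to each other directly (for example, to sync distributed configuration), so the peers must be able to resolve one another before any of them reports Ready.

The default behavior (`false`) registers a Pod in DNS only after it enters the Ready state.

Create the StatefulSet that manages the MinIO cluster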

```shell
[root@master minio]# cat minio-statefulset.yml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: minio
  namespace: minio
spec:
  serviceName: minio-service
  replicas: 4
  selector:
    matchLabels:
      app: minio
  template:
    metadata:
      labels:
        app: minio
    spec:
      containers:
        - name: minio
          image: minio/minio:RELEASE.2023-10-25T06-33-25Z
          command:
            - /bin/bash
            - -c
          args:
            - minio server --console-address ":9001" http://minio-{0...3}.minio-service.minio.svc.cluster.local/data   # command that starts the cluster
          env:
            - name: MINIO_ROOT_USER
              valueFrom:
                secretKeyRef:
                  name: minio-secret
                  key: rootuser
            - name: MINIO_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: minio-secret
                  key: rootpassword
          ports:
            - containerPort: 9000
              name: minio
            - containerPort: 9001
              name: console
          volumeMounts:
            - name: data
              mountPath: /data
          livenessProbe:
            httpGet:
              path: /minio/health/live
              port: 9000
            initialDelaySeconds: 30
            periodSeconds: 10
          readinessProbe:
            httpGet:
              path: /minio/health/ready
              port: 9000
            initialDelaySeconds: 30
            periodSeconds: 10
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: app
                      operator: In
                      values:
                        - minio
                topologyKey: kubernetes.io/hostname
      tolerations:
        - key: "node-role.kubernetes.io/control-plane"
          operator: "Exists"
          effect: "NoSchedule"
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 18Gi

[root@master minio]# kubectl apply -f minio-statefulset.yml

[root@master minio]# kubectl get pod -n minio -o wide
NAME      READY   STATUS    RESTARTS   AGE     IP               NODE     NOMINATED NODE   READINESS GATES
minio-0   1/1     Running   0          4m20s   10.244.219.104   master   <none>           <none>
minio-1   1/1     Running   0          3m43s   10.244.196.132   node01   <none>           <none>
minio-2   1/1     Running   0          3m9s    10.244.140.116   node02   <none>           <none>
minio-3   1/1     Running   0          2m35s   10.244.186.226   node03   <none>           <none>

[root@master minio]# kubectl get pod,pv,pvc -n minio -o wide
NAME          READY   STATUS    RESTARTS   AGE     IP               NODE     NOMINATED NODE   READINESS GATES
pod/minio-0   1/1     Running   0          4m53s   10.244.219.104   master   <none>           <none>
pod/minio-1   1/1     Running   0          4m16s   10.244.196.132   node01   <none>           <none>
pod/minio-2   1/1     Running   0          3m42s   10.244.140.116   node02   <none>           <none>
pod/minio-3   1/1     Running   0          3m8s    10.244.186.226   node03   <none>           <none>

NAME                          CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE   VOLUMEMODE
persistentvolume/minio-pv-0   18Gi       RWO            Retain           Bound    minio/data-minio-0                  <unset>                          18m   Filesystem
persistentvolume/minio-pv-1   18Gi       RWO            Retain           Bound    minio/data-minio-1                  <unset>                          18m   Filesystem
persistentvolume/minio-pv-2   18Gi       RWO            Retain           Bound    minio/data-minio-2                  <unset>                          18m   Filesystem
persistentvolume/minio-pv-3   18Gi       RWO            Retain           Bound    minio/data-minio-3                  <unset>                          18m   Filesystem

NAME                                 STATUS   VOLUME       CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE   VOLUMEMODE
persistentvolumeclaim/data-minio-0   Bound    minio-pv-0   18Gi       RWO                           <unset>                 22m   Filesystem
persistentvolumeclaim/data-minio-1   Bound    minio-pv-1   18Gi       RWO                           <unset>                 17m   Filesystem
persistentvolumeclaim/data-minio-2   Bound    minio-pv-2   18Gi       RWO                           <unset>                 14m   Filesystem
persistentvolumeclaim/data-minio-3   Bound    minio-pv-3   18Gi       RWO                           <unset>                 11m   Filesystem
```

Create a Service to expose the ports

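This example exposes the service through a LoadBalancer Service. Because this k8s cluster runs on virtual machines rather than in a cloud environment, MetalLB was deployed locally first. You can also consider exposing the service with a NodePort instead.

LoadBalancer: a combination of an external load balancer and NodePort. The external load balancer (for example a cloud provider's ELB, or MetalLB on bare metal) forwards traffic to the NodePort on the cluster nodes, which finally routes it to the Pods.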
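For reference, a MetalLB address pool for a setup like this might look as follows. This is a sketch, not the configuration actually used here: the pool name `first-pool` and the IP `192.168.209.151` come from the Service manifest in this post, while the address range, the `L2Advertisement` name `first-l2`, the `metallb-system` namespace, and the helper name `metallb_pool_manifest` are assumptions. It relies on the `metallb.io/v1beta1` CRDs used by MetalLB 0.13 and later.

```shell
# Print an IPAddressPool plus an L2Advertisement for MetalLB in layer 2 mode.
# The address range is an assumed example; it must contain the IP requested
# by the Service and must be unused on the local network.
metallb_pool_manifest() {
  cat <<'EOF'
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
    - 192.168.209.150-192.168.209.160
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: first-l2
  namespace: metallb-system
spec:
  ipAddressPools:
    - first-pool
EOF
}

# Usage:
#   metallb_pool_manifest | kubectl apply -f -
```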

```shell
[root@master minio]# cat minio-external-svc.yml
apiVersion: v1
kind: Service
metadata:
  name: minio-external
  namespace: minio
  annotations:
    # use MetalLB
    metallb.universe.tf/address-pool: "first-pool"   # name of the address pool to use
spec:
  type: LoadBalancer   # Service type: triggers an external load balancer such as MetalLB or a cloud provider's LB
  loadBalancerIP: 192.168.209.151   # optional: request a static IP from the pool; if omitted, MetalLB assigns one from the address pool
  ports:
    - port: 9000
      targetPort: 9000
      name: api
      protocol: TCP
    - port: 9001
      targetPort: 9001
      name: console
      protocol: TCP
  selector:
    app: minio
  sessionAffinity: ClientIP   # session affinity: route by client IP so the same client keeps reaching the same Pod
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800   # session stickiness timeout in seconds (3 hours here)

[root@master minio]# kubectl apply -f minio-external-svc.yml
service/minio-external created
```

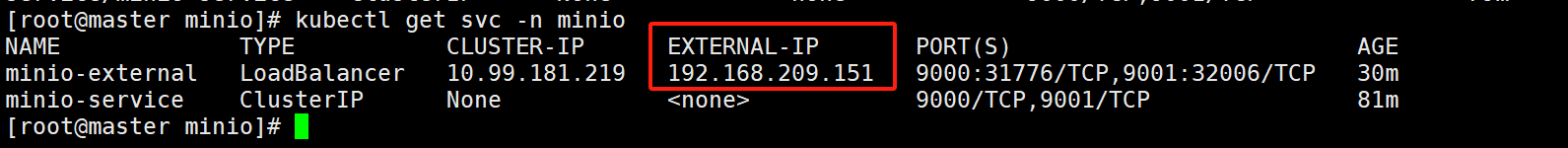

```shell
[root@master minio]# kubectl get svc -n minio
NAME             TYPE           CLUSTER-IP      EXTERNAL-IP       PORT(S)                         AGE
minio-external   LoadBalancer   10.99.181.219   192.168.209.151   9000:31776/TCP,9001:32006/TCP   29m
minio-service    ClusterIP      None            <none>            9000/TCP,9001/TCP               90m
```

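10.99.181.219: the ClusterIP.

192.168.209.151: the External IP.

9000/9001: the Service ports (`port`).

31776/32006: the node ports. No node port was specified, but 31776 and 32006 were allocated automatically after the Service was created. This is because the LoadBalancer type is an extension of the NodePort type: creating a LoadBalancer Service also creates the NodePort ports.

The service can be reached in the following ways:

Option 1: through the NodePort (node IP plus node port): NodeIP:NodePort

Option 2: through the EXTERNAL-IP: External-IP:port

Option 3: through the ClusterIP (from inside the cluster only): ClusterIP:port

Test access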

Install the client command (`mc`):

```shell
[root@master ~]# wget https://dl.min.io/client/mc/release/linux-amd64/mc
[root@master ~]# chmod +x mc
[root@master ~]# mv mc /usr/local/bin/

# Set an alias; later commands use this name to refer to the service.
# mc talks to the S3 API, so the alias points at the API port (9000), not the console port (9001).
[root@master ~]# mc alias set myminio http://192.168.209.151:9000 YOUR_ACCESS_KEY YOUR_SECRET_KEY
mc: Configuration written to `/root/.mc/config.json`. Please update your access credentials.
mc: Successfully created `/root/.mc/share`.
mc: Initialized share uploads `/root/.mc/share/uploads.json` file.
mc: Initialized share downloads `/root/.mc/share/downloads.json` file.
Added `myminio` successfully.
```

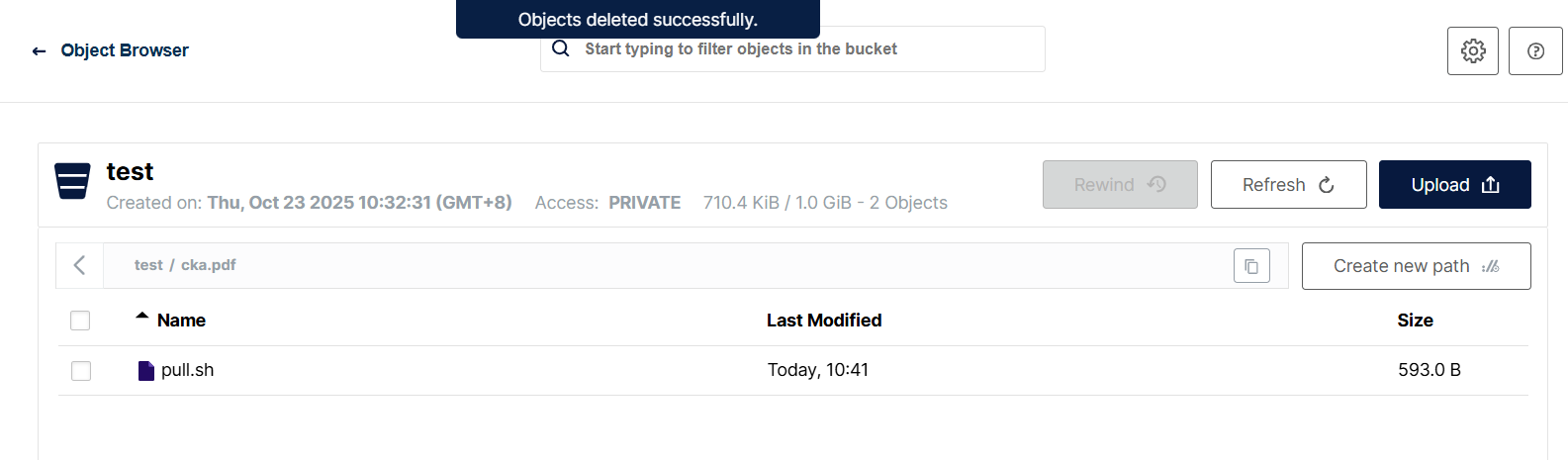

Upload a file:

```shell
[root@master ~]# mc cp pull.sh myminio/test   # "test" is the target bucket name
/root/pull.sh: 593 B / 593 B ┃▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓▓┃ 3.50 KiB/s 0s
```
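`mc cp` expects the target bucket to exist already. A minimal sketch of a slightly fuller smoke test, assuming the alias `myminio` and the bucket `test` from this post; the function name `minio_smoke_test` and the `MC` override variable are hypothetical conveniences (setting `MC=echo` gives a dry run that only prints the commands):

```shell
# Command used to talk to MinIO; override with MC=echo for a dry run.
MC="${MC:-mc}"

# Create the bucket if it is missing, upload a file, then list the bucket.
minio_smoke_test() {
  local target="$1/$2" file="$3"
  "$MC" mb --ignore-existing "$target" &&
    "$MC" cp "$file" "$target/" &&
    "$MC" ls "$target"
}

# Usage:
#   minio_smoke_test myminio test pull.sh
```

Chaining the steps with `&&` stops at the first failure, so a missing alias or wrong credentials show up at the `mb` step rather than as a confusing upload error.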