RTSP in C++: an RTSP/RTP framework that simulates sending and receiving multi-channel H.264, H.265, and AAC audio/video frames (Part 2)

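1. RTSP server core: handles RTSP session management, request parsing, and response generation.

2. Media source abstraction: defines a generic media source interface that supports different kinds of media streams.

Concrete media source implementations:

H264MediaSource: simulates an H.264 video stream

H265MediaSource: simulates an H.265 video stream

AACMediaSource: simulates an AAC audio stream

3. RTP packet generation: builds RTP packets appropriate to each media type.

To use it, create an RTSP server instance, add several media sources, and start the server. The server simulates the streams of multiple cameras, and the simulated streams can then be accessed through an RTSP client.

Before the example, a few concepts need explaining.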

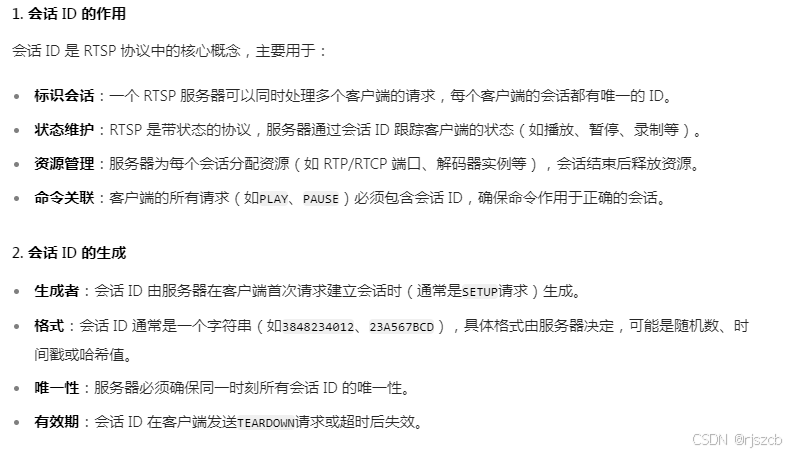

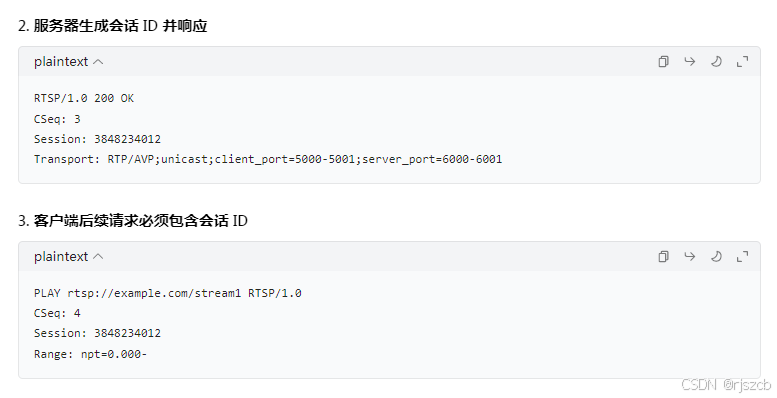

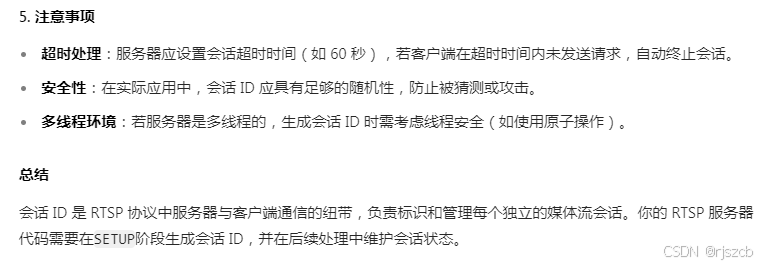

1. Session ID

c

// Pseudocode example (must be implemented in the real code)
std::string RTSPServer::generateSessionId() {
    // Generate a unique session ID (for example, a timestamp combined with a counter or a random number)
    static std::atomic<uint32_t> counter(0);
    return std::to_string(time(nullptr)) + "_" + std::to_string(counter++);
}

void RTSPServer::handleSetupRequest(const RTSPRequest& request, RTSPResponse& response) {
    // Assign a session ID to the client
    std::string sessionId = generateSessionId();
    response.setHeader("Session", sessionId);

    // Record the session information (ports, media source, and so on)
    SessionInfo session;
    session.id = sessionId;
    session.clientPort = parseClientPort(request.getHeader("Transport"));
    // ... other session information

    // Store the session (usually in a std::map)
    m_sessions[sessionId] = session;
}
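The timestamp-plus-counter ID above is unique but easy to guess. RFC 2326 asks for a randomly chosen session identifier of at least eight octets, so a minimal sketch of a random variant might look like the following. The function name `generateRandomSessionId` is hypothetical and not part of the article's code, and `std::mt19937_64` is not a cryptographic generator, so treat this as an illustration only.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <string>

// Hypothetical helper: returns `bytes` random bytes rendered as 2*bytes
// uppercase hex characters, suitable for an RTSP Session header.
std::string generateRandomSessionId(std::size_t bytes = 8) {
    assert(bytes >= 8 && "RFC 2326 asks for at least eight octets");
    static const char kHex[] = "0123456789ABCDEF";
    // One generator per thread, so concurrent SETUP handlers need no lock.
    thread_local std::mt19937_64 rng{std::random_device{}()};
    std::uniform_int_distribution<int> byteDist(0, 255);

    std::string id;
    id.reserve(bytes * 2);
    for (std::size_t i = 0; i < bytes; ++i) {
        const int b = byteDist(rng);
        id.push_back(kHex[b >> 4]);
        id.push_back(kHex[b & 0x0F]);
    }
    return id;
}
```

A server could call this once per SETUP request and use the result as the key of its session map.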

2. Frame types and fragmentation

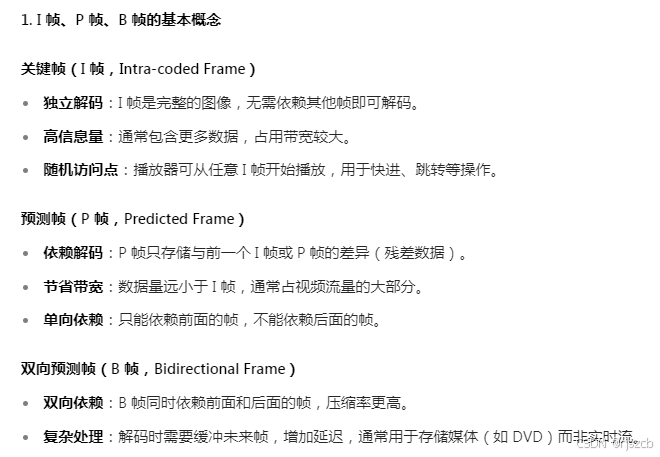

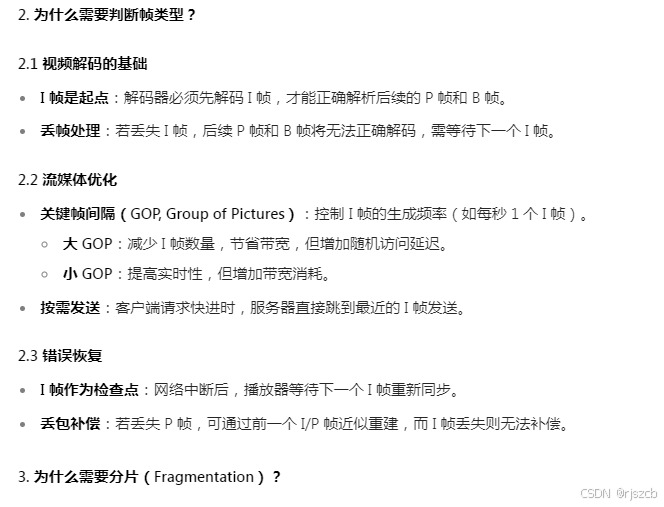

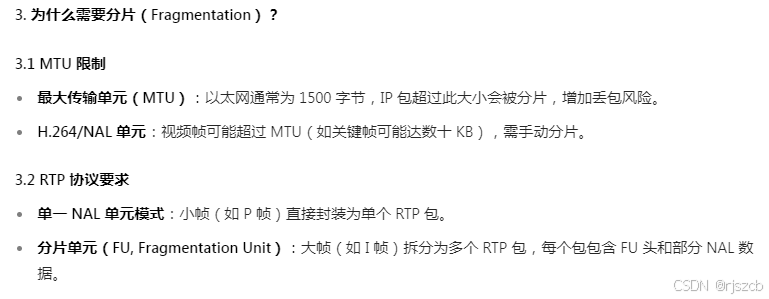

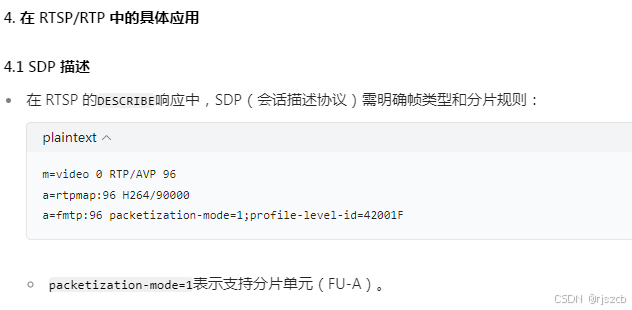

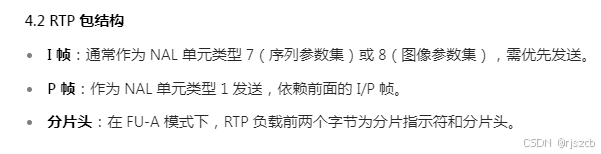

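In video streaming, telling key frames (I-frames) apart from predicted frames (P-frames) and handling fragmentation correctly are essential for correct decoding and efficient transport. The following explains this from the perspective of principles, protocol, and practical use:

In the RTSP simulator, frame types and fragmentation can be simulated as follows: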

c

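class MediaSource {
public:
    enum FrameType {
        FRAME_I,
        FRAME_P,
        FRAME_B
    };

    virtual ~MediaSource() = default;

    // Produce the next simulated frame (implemented by subclasses)
    virtual bool getNextFrame(uint8_t*& data, size_t& size, FrameType& type) = 0;
};

class H264MediaSource : public MediaSource {
public:
    bool getNextFrame(uint8_t*& data, size_t& size, FrameType& type) override {
        // Simulation: one I-frame every 30 frames, P-frames otherwise
        if (m_frameCounter++ % 30 == 0) {
            type = FRAME_I;
            generateIFrame(data, size);
        } else {
            type = FRAME_P;
            generatePFrame(data, size);
        }
        return true;
    }

private:
    // Per-instance counter, so each simulated camera keeps its own key-frame cadence
    // (a function-local static would be shared by every H264MediaSource)
    int m_frameCounter = 0;
};

Fragmentation handling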

c

class RTPGenerator {
public:
    void generateRtpPackets(const uint8_t* nalData, size_t nalSize,
                            FrameType frameType, std::vector<RtpPacket>& packets) {
        const int MAX_RTP_PAYLOAD = 1400;

        if (nalSize <= MAX_RTP_PAYLOAD) {
            // Small NAL unit: send it as a single packet
            packets.emplace_back(nalData, nalSize, frameType, false);
        } else {
            // Large NAL unit: split it into FU-A fragments
            const uint8_t* payload = nalData + 1; // skip the one-byte NAL header
            size_t payloadSize = nalSize - 1;
            size_t offset = 0;

            // First fragment (start bit set)
            packets.emplace_back(createFirstFragment(nalData, payload, offset, MAX_RTP_PAYLOAD));
            offset += MAX_RTP_PAYLOAD - 2; // each packet spends 2 bytes on the FU indicator and FU header

            // Middle fragments
            while (offset + MAX_RTP_PAYLOAD - 2 < payloadSize) {
                packets.emplace_back(createMiddleFragment(nalData, payload, offset, MAX_RTP_PAYLOAD));
                offset += MAX_RTP_PAYLOAD - 2;
            }

            // Last fragment (end bit set) carries whatever remains
            packets.emplace_back(createLastFragment(nalData, payload, offset, payloadSize - offset));
        }
    }
};
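The `createFirstFragment`, `createMiddleFragment`, and `createLastFragment` helpers are not shown in the class above. As a hedged, self-contained sketch of the same idea, the function below returns plain RTP payloads (no RTP header) for one H.264 NAL unit. The name `packetizeH264` and its return type are assumptions made for this illustration; the FU-A byte layout follows RFC 6184.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Splits one H.264 NAL unit (no Annex-B start code) into RTP payloads.
// maxPayload is the largest payload allowed, FU indicator and FU header included.
std::vector<std::vector<uint8_t>> packetizeH264(const std::vector<uint8_t>& nal,
                                                std::size_t maxPayload) {
    assert(!nal.empty() && maxPayload > 2);
    std::vector<std::vector<uint8_t>> out;
    if (nal.size() <= maxPayload) {  // fits: single NAL unit packet
        out.push_back(nal);
        return out;
    }
    // FU indicator keeps F and NRI from the NAL header and sets type 28 (FU-A).
    const uint8_t fuIndicator = static_cast<uint8_t>((nal[0] & 0xE0) | 28);
    const uint8_t nalType = nal[0] & 0x1F;
    const std::size_t chunk = maxPayload - 2;
    std::size_t offset = 1;  // the original NAL header byte is not repeated
    while (offset < nal.size()) {
        const std::size_t n = std::min(chunk, nal.size() - offset);
        uint8_t fuHeader = nalType;
        if (offset == 1) fuHeader |= 0x80;               // S bit: first fragment
        if (offset + n == nal.size()) fuHeader |= 0x40;  // E bit: last fragment
        std::vector<uint8_t> p;
        p.reserve(n + 2);
        p.push_back(fuIndicator);
        p.push_back(fuHeader);
        p.insert(p.end(), nal.begin() + offset, nal.begin() + offset + n);
        out.push_back(std::move(p));
        offset += n;
    }
    return out;
}
```

Because fragmentation only happens when the NAL unit exceeds `maxPayload`, there are always at least two fragments, so the S and E bits never land on the same packet.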

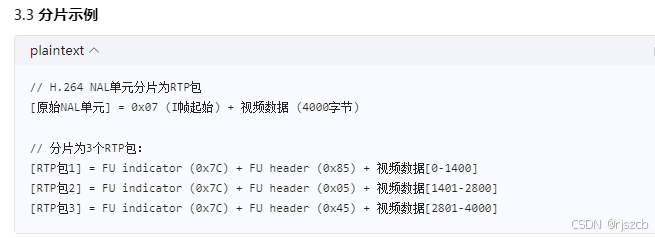

Put together, it looks like this:

Or:

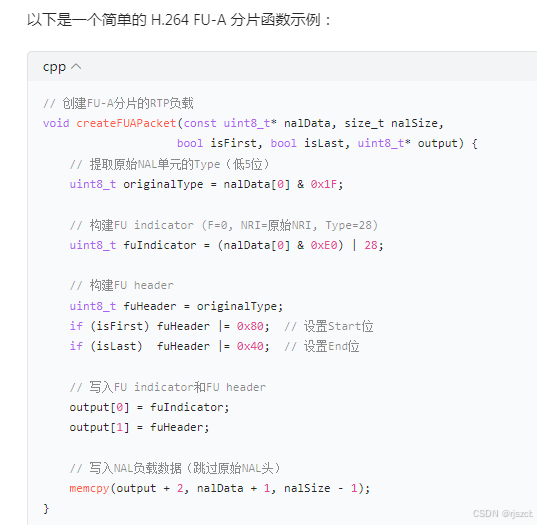

c

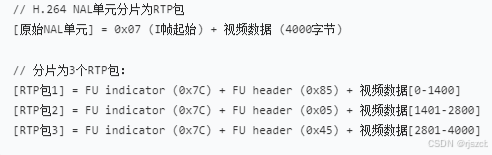

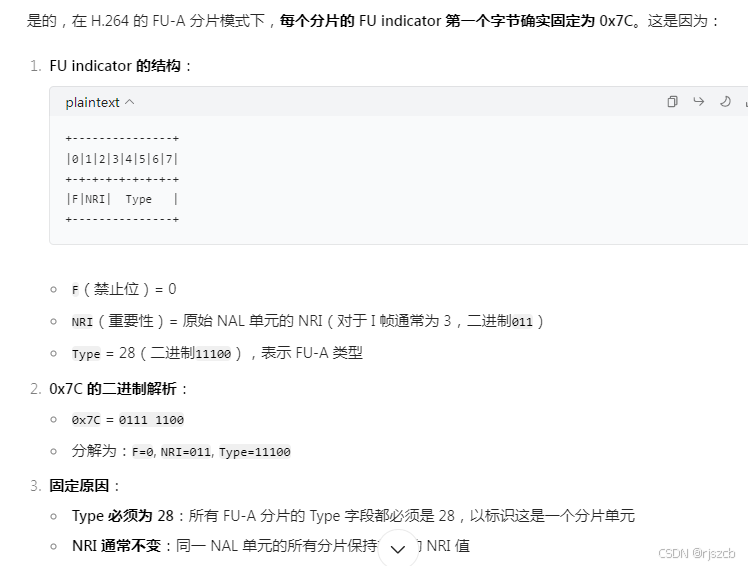

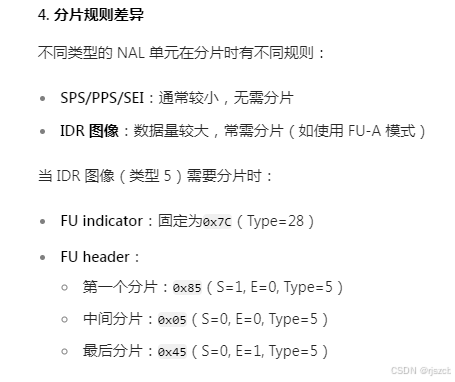

// H.264 NAL单元分片为RTP包

void fragmentNalUnit(const uint8_t* nalData, size_t nalSize, std::vector<RtpPacket>& packets) {

const int MAX_PAYLOAD_SIZE = 1400; // MTU - RTP头(12字节) - FU头(2字节)

const uint8_t FU_INDICATOR = 0x7C; // 固定为0x7C (Type=28)

// 提取原始NAL类型(低5位)

uint8_t originalNalType = nalData[0] & 0x1F;

// 计算需要的分片数

size_t numFragments = (nalSize - 1 + MAX_PAYLOAD_SIZE - 1) / MAX_PAYLOAD_SIZE;

size_t offset = 1; // 跳过原始NAL头(1字节)

for (size_t i = 0; i < numFragments; i++) {

size_t fragmentSize = std::min(nalSize - offset, MAX_PAYLOAD_SIZE);

bool isFirst = (i == 0);

bool isLast = (i == numFragments - 1);

// 创建FU header

uint8_t fuHeader = originalNalType;

if (isFirst) fuHeader |= 0x80; // 设置起始位

if (isLast) fuHeader |= 0x40; // 设置结束位

// 创建RTP包

RtpPacket packet;

packet.payloadSize = 2 + fragmentSize; // FU指示符(1) + FU头(1) + 数据

packet.payload[0] = FU_INDICATOR;

packet.payload[1] = fuHeader;

memcpy(packet.payload + 2, nalData + offset, fragmentSize);

packets.push_back(packet);

offset += fragmentSize;

}

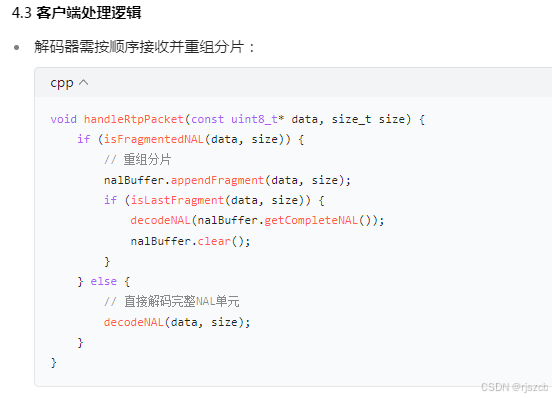

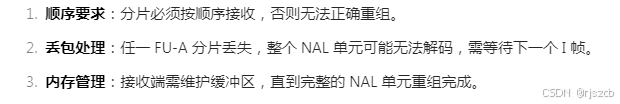

}接收端重组逻辑

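The receiver has to reassemble the original NAL unit from the FU-A header information.

Or:

c

// Reassemble H.264 FU-A fragments
bool reassembleFuA(const uint8_t* fragment, size_t fragSize,
                   bool isFirst, bool isLast, std::vector<uint8_t>& nalBuffer) {
    if (fragment == nullptr || fragSize < 2) return false; // FU indicator and FU header are mandatory

    if (isFirst) {
        // Start a new NAL unit
        nalBuffer.clear();

        // NRI comes from the FU indicator
        uint8_t nri = (fragment[0] & 0x60);

        // The original NAL type comes from the FU header
        uint8_t originalType = fragment[1] & 0x1F;

        // Rebuild the original NAL header
        uint8_t originalNalHeader = nri | originalType;
        nalBuffer.push_back(originalNalHeader);
    }

    // Append the fragment data (skipping the FU indicator and FU header)
    nalBuffer.insert(nalBuffer.end(), fragment + 2, fragment + fragSize);

    // Report whether the NAL unit is complete
    return isLast;
}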
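`reassembleFuA` trusts the caller to deliver every fragment in order. Over UDP a fragment can be lost, and the result would then be a corrupt NAL unit. The sketch below adds a sequence-number check and discards a partially assembled NAL unit when a gap is detected. The class name `FuAReassembler` and its interface are assumptions made for this example and do not appear in the article's code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

class FuAReassembler {
public:
    // Feeds one RTP payload (starting at the FU indicator) with its RTP sequence number.
    // Returns true when nal() holds a complete NAL unit.
    bool push(const uint8_t* payload, std::size_t size, uint16_t seq) {
        assert(payload != nullptr || size == 0);
        if (size < 3 || (payload[0] & 0x1F) != 28) return false;  // not a usable FU-A
        const bool start = (payload[1] & 0x80) != 0;
        const bool end = (payload[1] & 0x40) != 0;
        if (start) {
            buffer_.clear();
            // Rebuild the NAL header: F and NRI from the indicator, type from the FU header.
            buffer_.push_back(static_cast<uint8_t>((payload[0] & 0xE0) | (payload[1] & 0x1F)));
            active_ = true;
        } else if (!active_ || seq != static_cast<uint16_t>(lastSeq_ + 1)) {
            // Start fragment missed or a fragment lost: drop the partial NAL unit.
            active_ = false;
            buffer_.clear();
            return false;
        }
        buffer_.insert(buffer_.end(), payload + 2, payload + size);
        lastSeq_ = seq;
        if (end) {
            active_ = false;
            return true;
        }
        return false;
    }

    const std::vector<uint8_t>& nal() const { return buffer_; }

private:
    std::vector<uint8_t> buffer_;
    uint16_t lastSeq_ = 0;
    bool active_ = false;
};
```

The cast to `uint16_t` makes the comparison work across the 65535 to 0 wrap-around of RTP sequence numbers.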

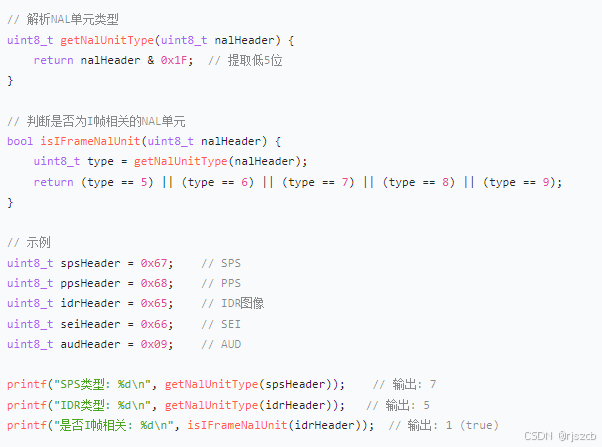

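In an H.264/H.265 video stream, deciding whether a frame is an I-, P-, or B-frame requires analyzing the type and content of its NAL units. The concrete methods are:

- By NAL unit type (the most direct method)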

c

// Decide whether an H.264 NAL unit belongs to an I-frame
bool isH264IFrame(const uint8_t* nalData, size_t nalSize) {
    if (nalSize < 1) return false;

    // The NAL type is the low 5 bits of the NAL header
    uint8_t nalType = nalData[0] & 0x1F;

    // An IDR picture (type 5) is always an I-frame
    if (nalType == 5) return true;

    // For type 1 (non-IDR picture) the slice_type field has to be inspected
    if (nalType == 1 && nalSize >= 5) {
        // slice_type lives in the slice header.
        // Note: a real parser must first strip the emulation-prevention bytes (EBSP to RBSP)
        uint8_t sliceType = parseSliceType(nalData + 1, nalSize - 1);
        return (sliceType == 2 || sliceType == 7); // slice_type 2 or 7 means I slice
    }

    return false;
}

// Decide whether an H.264 NAL unit belongs to a B-frame
bool isH264BFrame(const uint8_t* nalData, size_t nalSize) {
    if (nalSize < 1) return false;

    uint8_t nalType = nalData[0] & 0x1F;

    // For type 1 (non-IDR picture), inspect slice_type
    if (nalType == 1 && nalSize >= 5) {
        uint8_t sliceType = parseSliceType(nalData + 1, nalSize - 1);
        return (sliceType == 1 || sliceType == 6); // slice_type 1 or 6 means B slice
    }

    return false;
}

// Parse the slice_type field (placeholder version)
uint8_t parseSliceType(const uint8_t* data, size_t size) {
    // A real implementation has to:
    // 1. Convert EBSP to RBSP (remove the 0x03 emulation-prevention bytes)
    // 2. Read first_mb_in_slice as an Exp-Golomb ue(v) value
    // 3. Read slice_type as the next ue(v) value
    // The line below is only a stand-in: slice_type is NOT stored in the low 3 bits
    // of the first byte, so this does not give correct results on a real stream.
    return data[0] & 0x07;
}
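The simplified `parseSliceType` above only stands in for the real parse. In the H.264 slice header, `slice_type` is the second field, coded as unsigned Exp-Golomb `ue(v)` right after `first_mb_in_slice`, and emulation-prevention bytes have to be removed first. The sketch below does just that and nothing more; `ebspToRbsp`, `BitReader`, and `h264SliceType` are hypothetical names introduced for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Removes emulation-prevention bytes: each 0x03 that follows two 0x00 bytes.
std::vector<uint8_t> ebspToRbsp(const uint8_t* data, std::size_t size) {
    std::vector<uint8_t> out;
    out.reserve(size);
    int zeros = 0;
    for (std::size_t i = 0; i < size; ++i) {
        if (zeros >= 2 && data[i] == 0x03) { zeros = 0; continue; }
        zeros = (data[i] == 0x00) ? zeros + 1 : 0;
        out.push_back(data[i]);
    }
    return out;
}

class BitReader {
public:
    explicit BitReader(const std::vector<uint8_t>& bytes) : bytes_(bytes) {}

    bool readBit(uint32_t& bit) {
        if (pos_ >= bytes_.size() * 8) return false;
        bit = (bytes_[pos_ / 8] >> (7 - pos_ % 8)) & 1u;
        ++pos_;
        return true;
    }

    // ue(v): N leading zero bits, a one bit, then N suffix bits.
    bool readUe(uint32_t& value) {
        uint32_t bit = 0;
        int leadingZeros = 0;
        while (true) {
            if (!readBit(bit)) return false;
            if (bit) break;
            if (++leadingZeros > 31) return false;
        }
        uint32_t suffix = 0;
        for (int i = 0; i < leadingZeros; ++i) {
            if (!readBit(bit)) return false;
            suffix = (suffix << 1) | bit;
        }
        value = ((1u << leadingZeros) - 1u) + suffix;
        return true;
    }

private:
    const std::vector<uint8_t>& bytes_;
    std::size_t pos_ = 0;
};

// Returns slice_type (0..9) for a type 1 or type 5 NAL unit, or -1 on failure.
// slice_type % 5 gives: 0 = P, 1 = B, 2 = I, 3 = SP, 4 = SI.
int h264SliceType(const uint8_t* nal, std::size_t size) {
    assert(nal != nullptr || size == 0);
    if (size < 2) return -1;
    const uint8_t nalType = nal[0] & 0x1F;
    if (nalType != 1 && nalType != 5) return -1;
    // The two fields needed here sit within the first few bytes of the slice header.
    const std::size_t take = std::min<std::size_t>(size - 1, 16);
    const std::vector<uint8_t> rbsp = ebspToRbsp(nal + 1, take);
    BitReader reader(rbsp);
    uint32_t firstMb = 0, sliceType = 0;
    if (!reader.readUe(firstMb) || !reader.readUe(sliceType) || sliceType > 9) return -1;
    return static_cast<int>(sliceType);
}
```

With this in place, an I slice is `h264SliceType(...) % 5 == 2` and a B slice is `% 5 == 1`, which matches the 2/7 and 1/6 checks used above.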

c

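// RTP packet callback.
// nalBuffer is assumed to be a std::vector<uint8_t> that persists between calls (for example a member variable).
void handleRtpPacket(const uint8_t* rtpData, size_t rtpSize) {
    // The fixed RTP header is 12 bytes; anything shorter cannot be parsed
    if (rtpData == nullptr || rtpSize < 12) return;

    // Parse the RTP header (cast before shifting so the top bit cannot overflow a signed int)
    uint16_t seqNum = static_cast<uint16_t>((rtpData[2] << 8) | rtpData[3]);
    uint32_t timestamp = (static_cast<uint32_t>(rtpData[4]) << 24) |
                         (static_cast<uint32_t>(rtpData[5]) << 16) |
                         (static_cast<uint32_t>(rtpData[6]) << 8) |
                         static_cast<uint32_t>(rtpData[7]);
    (void)seqNum; // not used in this simplified example

    // Extract the RTP payload (assumes no CSRC entries and no header extension)
    const uint8_t* payload = rtpData + 12;
    size_t payloadSize = rtpSize - 12;

    // Is this an FU-A fragment (type 28)?
    if (payloadSize >= 2 && (payload[0] & 0x1F) == 28) {
        uint8_t fuHeader = payload[1];
        bool isStart = (fuHeader & 0x80) != 0;
        bool isEnd = (fuHeader & 0x40) != 0;

        if (isStart) {
            // Start reassembling a new NAL unit
            nalBuffer.clear();

            // Rebuild the original NAL header
            uint8_t originalNalHeader = (payload[0] & 0xE0) | (fuHeader & 0x1F);
            nalBuffer.push_back(originalNalHeader);
        }

        // Append the fragment data (skipping the FU indicator and FU header)
        nalBuffer.insert(nalBuffer.end(), payload + 2, payload + payloadSize);

        if (isEnd) {
            // NAL unit complete: classify the frame
            if (isH264IFrame(nalBuffer.data(), nalBuffer.size())) {
                printf("Received I-frame, timestamp: %u\n", timestamp);
            } else if (isH264BFrame(nalBuffer.data(), nalBuffer.size())) {
                printf("Received B-frame, timestamp: %u\n", timestamp);
            } else {
                printf("Received P-frame, timestamp: %u\n", timestamp);
            }
        }
    } else {
        // Unfragmented NAL unit: classify it directly
        if (isH264IFrame(payload, payloadSize)) {
            printf("Received complete I-frame, timestamp: %u\n", timestamp);
        }
    }
}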
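The callback above assumes the payload always starts at byte 12. RFC 3550 allows up to 15 CSRC entries, an optional header extension, and trailing padding, all of which move or shrink the payload. A hedged sketch of a header parser that accounts for them follows; `RtpHeaderView`, `readBe32`, and `parseRtpHeader` are names chosen for this example only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

struct RtpHeaderView {
    bool marker = false;
    uint8_t payloadType = 0;
    uint16_t sequence = 0;
    uint32_t timestamp = 0;
    uint32_t ssrc = 0;
    const uint8_t* payload = nullptr;  // points into the caller's buffer
    std::size_t payloadSize = 0;
};

uint32_t readBe32(const uint8_t* p) {
    return (static_cast<uint32_t>(p[0]) << 24) | (static_cast<uint32_t>(p[1]) << 16) |
           (static_cast<uint32_t>(p[2]) << 8) | static_cast<uint32_t>(p[3]);
}

// Returns false if the packet is truncated or is not RTP version 2.
bool parseRtpHeader(const uint8_t* data, std::size_t size, RtpHeaderView& out) {
    assert(data != nullptr || size == 0);
    if (size < 12 || (data[0] >> 6) != 2) return false;

    // Fixed header plus 4 bytes per CSRC entry (CC is the low 4 bits of byte 0).
    std::size_t offset = 12 + 4u * (data[0] & 0x0F);
    if (offset > size) return false;

    if (data[0] & 0x10) {  // X bit: header extension present
        if (size - offset < 4) return false;
        const std::size_t words =
            (static_cast<std::size_t>(data[offset + 2]) << 8) | data[offset + 3];
        offset += 4 + 4 * words;
        if (offset > size) return false;
    }

    std::size_t end = size;
    if (data[0] & 0x20) {  // P bit: last byte holds the padding length
        if (end == offset) return false;
        const std::size_t pad = data[size - 1];
        if (pad == 0 || pad > end - offset) return false;
        end -= pad;
    }

    out.marker = (data[1] & 0x80) != 0;
    out.payloadType = data[1] & 0x7F;
    out.sequence = static_cast<uint16_t>((data[2] << 8) | data[3]);
    out.timestamp = readBe32(data + 4);
    out.ssrc = readBe32(data + 8);
    out.payload = data + offset;
    out.payloadSize = end - offset;
    return true;
}
```

A receiver could call `parseRtpHeader` first and hand `payload` and `payloadSize` to the FU-A logic instead of hard-coding the 12-byte offset.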

rtsp_server.h

c

#ifndef RTSP_SERVER_H
#define RTSP_SERVER_H

#include <string>
#include <vector>
#include <map>
#include <thread>
#include <mutex>
#include <memory>
#include <cstdint>
#include <functional>
#include <unordered_map>
#include <queue>
#include <condition_variable>
#include <atomic>

class RTSPSession;
class MediaSource;

class RTSPServer {
public:
    RTSPServer(int port = 8554);
    ~RTSPServer();

    bool start();
    void stop();

    void addMediaSource(const std::string& streamName, std::shared_ptr<MediaSource> source);
    void removeMediaSource(const std::string& streamName);

private:
    void handleClient(int clientSocket);
    void handleRTSPRequest(int clientSocket, const std::string& request);
    std::shared_ptr<RTSPSession> createSession(const std::string& streamName);

    int m_serverSocket;
    std::atomic<bool> m_running; // written by stop(), read by the accept and client threads
    std::thread m_serverThread;
    std::mutex m_sessionsMutex;  // guards m_sessions and m_mediaSources
    std::map<std::string, std::shared_ptr<RTSPSession>> m_sessions;
    std::unordered_map<std::string, std::shared_ptr<MediaSource>> m_mediaSources;
    int m_nextSessionId;
};

class RTSPSession {
public:
    RTSPSession(int sessionId, std::shared_ptr<MediaSource> mediaSource);
    ~RTSPSession();

    int getSessionId() const;
    std::string getSessionDescription() const;

    void setup(int clientRtpPort, int clientRtcpPort);
    void play();
    void pause();
    void teardown();

private:
    void sendRTPData();

    int m_sessionId;
    std::shared_ptr<MediaSource> m_mediaSource;
    int m_clientRtpPort;
    int m_clientRtcpPort;
    int m_rtpSocket;
    std::atomic<bool> m_playing;       // shared with the sender thread
    std::thread m_senderThread;
    std::atomic<bool> m_senderRunning; // shared with the sender thread
};

class MediaSource {
public:
    virtual ~MediaSource() = default;

    virtual std::string getMimeType() const = 0;
    virtual std::string getMediaDescription() const = 0;
    virtual std::string getAttribute() const = 0;
    virtual void getNextFrame(std::vector<uint8_t>& frame, uint32_t& timestamp, bool& marker) = 0;
    virtual uint32_t getClockRate() const = 0;
};

#endif // RTSP_SERVER_H

rtsp_server.cpp

c

#include "rtsp_server.h"

#include <iostream>

#include <sstream>

#include <cstring>

#include <sys/socket.h>

#include <arpa/inet.h>

#include <unistd.h>

#include <netdb.h>

#include <ctime>

#include <random>

#include <chrono>

#include <cstdlib>

RTSPServer::RTSPServer(int port)

: m_serverSocket(-1)

, m_running(false)

, m_nextSessionId(1000) {

// Create the listening TCP socket

m_serverSocket = socket(AF_INET, SOCK_STREAM, 0);

if (m_serverSocket == -1) {

std::cerr << "Failed to create socket" << std::endl;

return;

}

// Set socket options (SO_REUSEADDR allows a quick restart on the same port)

int opt = 1;

if (setsockopt(m_serverSocket, SOL_SOCKET, SO_REUSEADDR, &opt, sizeof(opt)) == -1) {

std::cerr << "Failed to set socket options" << std::endl;

close(m_serverSocket);

m_serverSocket = -1;

return;

}

// Bind the socket to all interfaces on the requested port

sockaddr_in serverAddr;

memset(&serverAddr, 0, sizeof(serverAddr));

serverAddr.sin_family = AF_INET;

serverAddr.sin_addr.s_addr = INADDR_ANY;

serverAddr.sin_port = htons(port);

if (bind(m_serverSocket, (sockaddr*)&serverAddr, sizeof(serverAddr)) == -1) {

std::cerr << "Failed to bind socket" << std::endl;

close(m_serverSocket);

m_serverSocket = -1;

return;

}

// Listen for incoming connections

if (listen(m_serverSocket, 5) == -1) {

std::cerr << "Failed to listen on socket" << std::endl;

close(m_serverSocket);

m_serverSocket = -1;

return;

}

}

RTSPServer::~RTSPServer() {

stop();

if (m_serverSocket != -1) {

close(m_serverSocket);

}

}

bool RTSPServer::start() {

if (m_running) return true;

if (m_serverSocket == -1) return false;

m_running = true;

m_serverThread = std::thread([this]() {

while (m_running) {

sockaddr_in clientAddr;

socklen_t clientAddrLen = sizeof(clientAddr);

int clientSocket = accept(m_serverSocket, (sockaddr*)&clientAddr, &clientAddrLen);

if (clientSocket == -1) {

if (m_running) {

std::cerr << "Failed to accept client connection" << std::endl;

}

continue;

}

// Handle each client connection on its own detached thread

std::thread([this, clientSocket]() {

handleClient(clientSocket);

close(clientSocket);

}).detach();

}

});

return true;

}

void RTSPServer::stop() {

if (!m_running) return;

m_running = false;

if (m_serverSocket != -1) {

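// Shut down and close the server socket to interrupt the blocking accept() call
// (on Linux, close() alone may leave a thread blocked in accept())

shutdown(m_serverSocket, SHUT_RDWR);

close(m_serverSocket);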

m_serverSocket = -1;

}

if (m_serverThread.joinable()) {

m_serverThread.join();

}

// Tear down all sessions

std::lock_guard<std::mutex> lock(m_sessionsMutex);

for (auto& session : m_sessions) {

session.second->teardown();

}

m_sessions.clear();

}

void RTSPServer::addMediaSource(const std::string& streamName, std::shared_ptr<MediaSource> source) {

std::lock_guard<std::mutex> lock(m_sessionsMutex);

m_mediaSources[streamName] = source;

}

void RTSPServer::removeMediaSource(const std::string& streamName) {

std::lock_guard<std::mutex> lock(m_sessionsMutex);

m_mediaSources.erase(streamName);

}

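void RTSPServer::handleClient(int clientSocket) {
    // An RTSP client sends OPTIONS, DESCRIBE, SETUP, and PLAY over the same TCP
    // connection, so keep reading until the peer closes it or the server stops.
    char buffer[4096];

    while (m_running) {
        // Read one RTSP request
        ssize_t bytesRead = recv(clientSocket, buffer, sizeof(buffer) - 1, 0);
        if (bytesRead <= 0) {
            return;
        }

        // Simplification: assumes each recv() returns exactly one complete request
        std::string request(buffer, bytesRead);
        handleRTSPRequest(clientSocket, request);
    }
}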

void RTSPServer::handleRTSPRequest(int clientSocket, const std::string& request) {
    std::istringstream iss(request);
    std::string method, url, version;
    iss >> method >> url >> version;

    // Returns a header value up to the first character in `stops`, or "" if the header is absent
    auto headerValue = [&request](const std::string& name, const char* stops) {
        std::string value;
        size_t pos = request.find(name);
        if (pos != std::string::npos) {
            size_t begin = request.find_first_not_of(" \t", pos + name.size());
            size_t end = request.find_first_of(stops, pos);
            if (begin != std::string::npos && end != std::string::npos && begin < end) {
                value = request.substr(begin, end - begin);
            }
        }
        return value;
    };

    // Echo the client's CSeq: RTSP clients match responses to requests by this value
    std::string cseq = headerValue("CSeq:", "\r\n");
    if (cseq.empty()) cseq = "0";

    // Session ID without parameters such as ";timeout=60"
    std::string sessionId = headerValue("Session:", ";\r\n");

    // Stream name = first path segment after the host, so that both
    // rtsp://host:8554/camera1 and rtsp://host:8554/camera1/trackID=0 map to "camera1"
    std::string streamName = url;
    size_t schemeEnd = url.find("://");
    size_t pathStart = url.find('/', schemeEnd == std::string::npos ? 0 : schemeEnd + 3);
    if (pathStart != std::string::npos) {
        size_t pathEnd = url.find('/', pathStart + 1);
        streamName = url.substr(pathStart + 1,
                                pathEnd == std::string::npos ? std::string::npos
                                                             : pathEnd - pathStart - 1);
    }

    std::stringstream response;
    auto startResponse = [&response, &cseq](const char* status) {
        response << "RTSP/1.0 " << status << "\r\n";
        response << "CSeq: " << cseq << "\r\n";
    };

    if (method == "OPTIONS") {
        startResponse("200 OK");
        response << "Public: OPTIONS, DESCRIBE, SETUP, TEARDOWN, PLAY, PAUSE\r\n";
        response << "\r\n";
    }
    else if (method == "DESCRIBE") {
        std::lock_guard<std::mutex> lock(m_sessionsMutex);
        auto it = m_mediaSources.find(streamName);
        if (it == m_mediaSources.end()) {
            startResponse("404 Not Found");
            response << "\r\n";
        } else {
            std::shared_ptr<MediaSource> mediaSource = it->second;

            // Build the SDP body first so its length is known
            std::string sdp = "v=0\r\n";
            sdp += "o=- " + std::to_string(time(nullptr)) + " 1 IN IP4 127.0.0.1\r\n";
            sdp += "s=Media Session\r\n";
            sdp += "i=" + streamName + "\r\n";
            sdp += "c=IN IP4 0.0.0.0\r\n";
            sdp += "t=0 0\r\n";
            sdp += "m=video 0 RTP/AVP " + std::to_string(96) + "\r\n";
            sdp += "a=rtpmap:" + std::to_string(96) + " " + mediaSource->getMimeType() + "/" + std::to_string(mediaSource->getClockRate()) + "\r\n";
            sdp += "a=control:trackID=0\r\n";
            sdp += mediaSource->getAttribute() + "\r\n";

            startResponse("200 OK");
            response << "Content-Type: application/sdp\r\n";
            response << "Content-Length: " << sdp.length() << "\r\n\r\n";
            response << sdp;
        }
    }
    else if (method == "SETUP") {
        // Parse the client ports from the Transport header
        size_t pos = request.find("client_port=");
        int clientRtpPort = 0, clientRtcpPort = 0;
        if (pos != std::string::npos) {
            pos += 12;
            size_t endPos = request.find('-', pos);
            if (endPos != std::string::npos) {
                clientRtpPort = std::stoi(request.substr(pos, endPos - pos));
                pos = endPos + 1;
                endPos = request.find('\r', pos);
                if (endPos != std::string::npos) {
                    clientRtcpPort = std::stoi(request.substr(pos, endPos - pos));
                }
            }
        }

        std::lock_guard<std::mutex> lock(m_sessionsMutex);
        auto it = m_mediaSources.find(streamName);
        if (it == m_mediaSources.end()) {
            startResponse("404 Not Found");
            response << "\r\n";
        } else {
            // Create the session
            std::shared_ptr<RTSPSession> session = createSession(streamName);
            session->setup(clientRtpPort, clientRtcpPort);

            startResponse("200 OK");
            response << "Session: " << session->getSessionId() << ";timeout=60\r\n";
            // server_port is a placeholder: the RTP socket is never bound,
            // so the real source port is chosen by the OS on the first sendto()
            response << "Transport: RTP/AVP/UDP;unicast;client_port="
                     << clientRtpPort << "-" << clientRtcpPort
                     << ";server_port=" << (clientRtpPort + 1) << "-" << (clientRtcpPort + 1) << "\r\n";
            response << "\r\n";
        }
    }
    else if (method == "PLAY" || method == "PAUSE" || method == "TEARDOWN") {
        // All three methods look up the session named in the Session header
        std::lock_guard<std::mutex> lock(m_sessionsMutex);
        auto it = m_sessions.find(sessionId);
        if (it == m_sessions.end()) {
            startResponse("454 Session Not Found");
            response << "\r\n";
        } else {
            if (method == "PLAY") {
                it->second->play();
            } else if (method == "PAUSE") {
                it->second->pause();
            } else {
                it->second->teardown();
                m_sessions.erase(it);
            }

            startResponse("200 OK");
            response << "Session: " << sessionId << "\r\n";
            if (method == "PLAY") {
                response << "Range: npt=0.000-\r\n";
            }
            response << "\r\n";
        }
    }
    else {
        startResponse("501 Not Implemented");
        response << "\r\n";
    }

    // Send the response
    std::string responseStr = response.str();
    send(clientSocket, responseStr.c_str(), responseStr.length(), 0);
}

std::shared_ptr<RTSPSession> RTSPServer::createSession(const std::string& streamName) {

auto it = m_mediaSources.find(streamName);

if (it == m_mediaSources.end()) {

return nullptr;

}

int sessionId = m_nextSessionId++;

std::shared_ptr<RTSPSession> session = std::make_shared<RTSPSession>(sessionId, it->second);

m_sessions[std::to_string(sessionId)] = session;

return session;

}

RTSPSession::RTSPSession(int sessionId, std::shared_ptr<MediaSource> mediaSource)

: m_sessionId(sessionId)

, m_mediaSource(mediaSource)

, m_clientRtpPort(0)

, m_clientRtcpPort(0)

, m_rtpSocket(-1)

, m_playing(false)

, m_senderRunning(false) {

// Create the UDP socket used to send RTP

m_rtpSocket = socket(AF_INET, SOCK_DGRAM, 0);

if (m_rtpSocket == -1) {

std::cerr << "Failed to create RTP socket" << std::endl;

}

}

RTSPSession::~RTSPSession() {

teardown();

if (m_rtpSocket != -1) {

close(m_rtpSocket);

}

}

int RTSPSession::getSessionId() const {

return m_sessionId;

}

std::string RTSPSession::getSessionDescription() const {

return m_mediaSource->getMediaDescription();

}

void RTSPSession::setup(int clientRtpPort, int clientRtcpPort) {

m_clientRtpPort = clientRtpPort;

m_clientRtcpPort = clientRtcpPort;

}

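void RTSPSession::play() {
    if (m_playing) return;

    m_playing = true;

    // Start the sender thread only once. After a PAUSE it is still alive and
    // resumes as soon as m_playing is true again; assigning a new std::thread
    // to a joinable one would call std::terminate.
    if (!m_senderThread.joinable()) {
        m_senderRunning = true;
        m_senderThread = std::thread([this]() {
            sendRTPData();
        });
    }
}

void RTSPSession::pause() {
    // Only clear the flag. The sender loop idles while paused and exits only
    // when m_senderRunning is cleared, so joining here would block forever.
    m_playing = false;
}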

void RTSPSession::teardown() {

m_playing = false;

m_senderRunning = false;

if (m_senderThread.joinable()) {

m_senderThread.join();

}

}

void RTSPSession::sendRTPData() {
    if (m_clientRtpPort == 0 || m_rtpSocket == -1) return;

    // Destination address (this simulator assumes the client runs on the same host)
    sockaddr_in destAddr;
    memset(&destAddr, 0, sizeof(destAddr));
    destAddr.sin_family = AF_INET;
    destAddr.sin_addr.s_addr = inet_addr("127.0.0.1");
    destAddr.sin_port = htons(m_clientRtpPort);

    // RTP sequence number, timestamp, and SSRC
    uint16_t sequenceNumber = 0;
    uint32_t timestamp = 0; // filled in by the media source for every frame
    uint32_t ssrc = rand();

    while (m_senderRunning) {
        if (!m_playing) {
            std::this_thread::sleep_for(std::chrono::milliseconds(10));
            continue;
        }

        // Fetch the next frame
        std::vector<uint8_t> frame;
        bool marker = false;
        m_mediaSource->getNextFrame(frame, timestamp, marker);

        if (frame.empty()) {
            std::this_thread::sleep_for(std::chrono::milliseconds(40));
            continue;
        }

        // RTP payloads carry bare NAL units, so skip the 4-byte Annex-B start code
        // that the simulated video sources put in front of each frame
        size_t payloadOffset = 0;
        if (frame.size() > 4 && frame[0] == 0x00 && frame[1] == 0x00 &&
            frame[2] == 0x00 && frame[3] == 0x01) {
            payloadOffset = 4;
        }

        // Build the RTP header
        const int RTP_HEADER_SIZE = 12;
        uint8_t rtpHeader[RTP_HEADER_SIZE];

        // Version (2), padding (0), extension (0), CSRC count (0)
        rtpHeader[0] = (2 << 6) | (0 << 5) | (0 << 4) | 0;

        // Marker bit and payload type (dynamic type 96, as advertised in the SDP)
        rtpHeader[1] = (marker ? 0x80 : 0) | 96;

        // Sequence number
        rtpHeader[2] = (sequenceNumber >> 8) & 0xFF;
        rtpHeader[3] = sequenceNumber & 0xFF;

        // Timestamp
        rtpHeader[4] = (timestamp >> 24) & 0xFF;
        rtpHeader[5] = (timestamp >> 16) & 0xFF;
        rtpHeader[6] = (timestamp >> 8) & 0xFF;
        rtpHeader[7] = timestamp & 0xFF;

        // SSRC
        rtpHeader[8] = (ssrc >> 24) & 0xFF;
        rtpHeader[9] = (ssrc >> 16) & 0xFF;
        rtpHeader[10] = (ssrc >> 8) & 0xFF;
        rtpHeader[11] = ssrc & 0xFF;

        // Send the RTP packet. The whole frame goes out as one datagram here;
        // frames larger than the MTU should really be split into FU-A fragments first.
        std::vector<uint8_t> rtpPacket;
        rtpPacket.insert(rtpPacket.end(), rtpHeader, rtpHeader + RTP_HEADER_SIZE);
        rtpPacket.insert(rtpPacket.end(), frame.begin() + payloadOffset, frame.end());

        sendto(m_rtpSocket, rtpPacket.data(), rtpPacket.size(), 0,
               (sockaddr*)&destAddr, sizeof(destAddr));

        // Advance the sequence number (the timestamp comes from the media source)
        sequenceNumber++;

        // Pace the output at 25 fps
        std::this_thread::sleep_for(std::chrono::milliseconds(40));
    }
}

Simulating video frame generation

media_sources.h

c

#ifndef MEDIA_SOURCES_H

#define MEDIA_SOURCES_H

#include "rtsp_server.h"

#include <random>

class H264MediaSource : public MediaSource {

public:

H264MediaSource(int width = 640, int height = 480);

std::string getMimeType() const override;

std::string getMediaDescription() const override;

std::string getAttribute() const override;

void getNextFrame(std::vector<uint8_t>& frame, uint32_t& timestamp, bool& marker) override;

uint32_t getClockRate() const override;

private:

int m_width;

int m_height;

uint32_t m_currentTimestamp;

std::mt19937 m_rng;

};

class H265MediaSource : public MediaSource {

public:

H265MediaSource(int width = 640, int height = 480);

std::string getMimeType() const override;

std::string getMediaDescription() const override;

std::string getAttribute() const override;

void getNextFrame(std::vector<uint8_t>& frame, uint32_t& timestamp, bool& marker) override;

uint32_t getClockRate() const override;

private:

int m_width;

int m_height;

uint32_t m_currentTimestamp;

std::mt19937 m_rng;

};

class AACMediaSource : public MediaSource {

public:

AACMediaSource(int sampleRate = 44100, int channels = 2);

std::string getMimeType() const override;

std::string getMediaDescription() const override;

std::string getAttribute() const override;

void getNextFrame(std::vector<uint8_t>& frame, uint32_t& timestamp, bool& marker) override;

uint32_t getClockRate() const override;

private:

int m_sampleRate;

int m_channels;

uint32_t m_currentTimestamp;

std::mt19937 m_rng;

};

#endif // MEDIA_SOURCES_H

media_sources.cpp

c

#include "media_sources.h"

#include <random>

H264MediaSource::H264MediaSource(int width, int height)

: m_width(width)

, m_height(height)

, m_currentTimestamp(0) {

std::random_device rd;

m_rng = std::mt19937(rd());

}

std::string H264MediaSource::getMimeType() const {

return "H264";

}

std::string H264MediaSource::getMediaDescription() const {

return "H.264 Video Stream";

}

std::string H264MediaSource::getAttribute() const {

return "a=fmtp:96 packetization-mode=1;profile-level-id=42E01F;sprop-parameter-sets=Z0LAH9kA8AoAAAMAAgAAAwDAAAAwDxYuS,aM48gA==";

}

void H264MediaSource::getNextFrame(std::vector<uint8_t>& frame, uint32_t& timestamp, bool& marker) {

    // One key frame every 30 frames. The frame index is derived from this
    // instance's own timestamp, so several sources (camera1, camera2) do not
    // share one counter the way function-local statics would.
    const uint32_t frameIndex = m_currentTimestamp / (90000 / 25);
    const bool isKeyFrame = (frameIndex % 30 == 0);

    // Simulated H.264 frame: key frames are larger than P-frames
    size_t frameSize = isKeyFrame ? 10000 + (m_rng() % 5000) : 2000 + (m_rng() % 3000);
    frame.resize(frameSize);

    // Annex-B start code followed by the one-byte NAL header
    frame[0] = 0x00;
    frame[1] = 0x00;
    frame[2] = 0x00;
    frame[3] = 0x01;
    if (isKeyFrame) {
        frame[4] = 0x65; // IDR slice: NRI = 3, nal_unit_type = 5
    } else {
        frame[4] = 0x41; // non-IDR slice (P-frame): NRI = 2, nal_unit_type = 1
    }

    // Fill the rest with random data
    for (size_t i = 5; i < frameSize; ++i) {
        frame[i] = m_rng() % 256;
    }

    timestamp = m_currentTimestamp;
    m_currentTimestamp += 90000 / 25; // 25 fps on a 90 kHz clock
    marker = true; // the marker bit is set on every frame

}

uint32_t H264MediaSource::getClockRate() const {

return 90000; // 90kHz

}

H265MediaSource::H265MediaSource(int width, int height)

: m_width(width)

, m_height(height)

, m_currentTimestamp(0) {

std::random_device rd;

m_rng = std::mt19937(rd());

}

std::string H265MediaSource::getMimeType() const {

return "H265";

}

std::string H265MediaSource::getMediaDescription() const {

return "H.265 Video Stream";

}

std::string H265MediaSource::getAttribute() const {

return "a=fmtp:96 profile-id=1;level-id=110;packetization-mode=1";

}

void H265MediaSource::getNextFrame(std::vector<uint8_t>& frame, uint32_t& timestamp, bool& marker) {

    // One key frame every 30 frames. The frame index is derived from this
    // instance's own timestamp, so several sources do not share one counter.
    const uint32_t frameIndex = m_currentTimestamp / (90000 / 25);
    const bool isKeyFrame = (frameIndex % 30 == 0);

    // Simulated H.265 frame: key frames are larger than P-frames
    size_t frameSize = isKeyFrame ? 12000 + (m_rng() % 6000) : 2500 + (m_rng() % 3500);
    frame.resize(frameSize);

    // Annex-B start code followed by the two-byte H.265 NAL header.
    // The first header byte is nal_unit_type << 1 (forbidden bit and layer ID both 0).
    frame[0] = 0x00;
    frame[1] = 0x00;
    frame[2] = 0x00;
    frame[3] = 0x01;
    if (isKeyFrame) {
        frame[4] = 0x26; // IDR_W_RADL: nal_unit_type = 19 (0x40 would be type 32, a VPS)
        frame[5] = 0x01; // layer 0, temporal ID 0
    } else {
        frame[4] = 0x02; // TRAIL_R: nal_unit_type = 1
        frame[5] = 0x01; // layer 0, temporal ID 0
    }

    // Fill the rest with random data
    for (size_t i = 6; i < frameSize; ++i) {
        frame[i] = m_rng() % 256;
    }

    timestamp = m_currentTimestamp;
    m_currentTimestamp += 90000 / 25; // 25 fps on a 90 kHz clock
    marker = true; // the marker bit is set on every frame

}

uint32_t H265MediaSource::getClockRate() const {

return 90000; // 90kHz

}

AACMediaSource::AACMediaSource(int sampleRate, int channels)

: m_sampleRate(sampleRate)

, m_channels(channels)

, m_currentTimestamp(0) {

std::random_device rd;

m_rng = std::mt19937(rd());

}

std::string AACMediaSource::getMimeType() const {

return "MPEG4-GENERIC";

}

std::string AACMediaSource::getMediaDescription() const {

return "AAC Audio Stream";

}

std::string AACMediaSource::getAttribute() const {

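// config=1210 is the AudioSpecificConfig for AAC-LC, 44.1 kHz, stereo (the constructor defaults);
// the value 1408 would describe 16 kHz mono instead

return "a=fmtp:96 streamtype=5;profile-level-id=15;mode=AAC-hbr;sizelength=13;indexlength=3;indexdeltalength=3;config=1210";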

}

void AACMediaSource::getNextFrame(std::vector<uint8_t>& frame, uint32_t& timestamp, bool& marker) {

    // Simulated AAC frame
    size_t frameSize = 1024 + (m_rng() % 512); // 1024 to 1535 bytes
    frame.resize(frameSize);

    // Fill with random data
    for (size_t i = 0; i < frameSize; ++i) {
        frame[i] = m_rng() % 256;
    }

    timestamp = m_currentTimestamp;
    m_currentTimestamp += 1024; // 1024 samples per AAC frame
    marker = true;

}

uint32_t AACMediaSource::getClockRate() const {

return m_sampleRate;

}

main.cpp

c

#include "rtsp_server.h"

#include "media_sources.h"

#include <iostream>

#include <memory>

int main() {

// Create the RTSP server, listening on port 8554

RTSPServer server(8554);

// Create and register several media sources

auto camera1_h264 = std::make_shared<H264MediaSource>(1280, 720);

auto camera2_h264 = std::make_shared<H264MediaSource>(800, 600);

auto camera3_h265 = std::make_shared<H265MediaSource>(1920, 1080);

auto audio_aac = std::make_shared<AACMediaSource>(44100, 2);

server.addMediaSource("camera1", camera1_h264);

server.addMediaSource("camera2", camera2_h264);

server.addMediaSource("camera3", camera3_h265);

server.addMediaSource("audio", audio_aac);

// Start the server

if (server.start()) {

std::cout << "RTSP Server started on port 8554" << std::endl;

std::cout << "Available streams:" << std::endl;

std::cout << "rtsp://localhost:8554/camera1 (H.264 1280x720)" << std::endl;

std::cout << "rtsp://localhost:8554/camera2 (H.264 800x600)" << std::endl;

std::cout << "rtsp://localhost:8554/camera3 (H.265 1920x1080)" << std::endl;

std::cout << "rtsp://localhost:8554/audio (AAC 44.1kHz stereo)" << std::endl;

// Keep the server running until Enter is pressed

std::cout << "Press Enter to stop the server..." << std::endl;

std::cin.get();

} else {

std::cerr << "Failed to start RTSP server" << std::endl;

}

// Stop the server

server.stop();

return 0;

}

rtsp_client.h

c

#ifndef RTSP_CLIENT_H
#define RTSP_CLIENT_H

#include <string>
#include <memory>
#include <thread>
#include <mutex>
#include <vector>
#include <functional>
#include <cstdint>
#include <atomic>

// Parsed RTP packet
struct RTPPacket {
    uint8_t version;      // version number
    uint8_t padding;      // padding flag
    uint8_t extension;    // extension flag
    uint8_t cc;           // CSRC count
    uint8_t marker;       // marker bit
    uint8_t payloadType;  // payload type
    uint16_t sequence;    // sequence number
    uint32_t timestamp;   // timestamp
    uint32_t ssrc;        // synchronization source identifier
    std::vector<uint8_t> payload; // payload data
};

// RTSP client
class RTSPClient {
public:
    // Callback type for received frame data
    using FrameCallback = std::function<void(const uint8_t*, size_t, uint32_t, bool)>;

    RTSPClient();
    ~RTSPClient();

    // Connect to an RTSP server
    bool connect(const std::string& rtspUrl);

    // Disconnect
    void disconnect();

    // Set the frame data callback
    void setFrameCallback(const FrameCallback& callback);

    // Start playback
    bool play();

    // Pause playback
    bool pause();

    // Get the media information
    std::string getMediaInfo() const;

private:
    // Client state
    enum class State {
        INIT,
        READY,
        PLAYING,
        PAUSED,
        TEARDOWN
    };

    // Parse the RTSP URL
    bool parseUrl(const std::string& rtspUrl);

    // Send an RTSP request and return the response
    std::string sendRequest(const std::string& request);

    // Receive RTP/RTCP data
    void receiveRTPData();

    // Parse the SDP description
    bool parseSDP(const std::string& sdp);

    // Parse an RTP packet
    bool parseRTPPacket(const std::vector<uint8_t>& data, RTPPacket& packet);

    // Process a video frame
    void processVideoFrame(const RTPPacket& packet);

    // Process an audio frame
    void processAudioFrame(const RTPPacket& packet);

    // Member variables
    std::string m_serverAddress;
    int m_serverPort;
    std::string m_streamPath;
    int m_rtspSocket;
    std::string m_sessionId;
    State m_state;
    int m_cseq;
    int m_rtpPort;
    int m_rtcpPort;
    std::thread m_receiveThread;
    std::atomic<bool> m_running; // shared with the receive thread
    mutable std::mutex m_mutex;
    FrameCallback m_frameCallback;
    std::string m_mediaInfo;
    std::string m_codec;
    int m_clockRate;
};

#endif // RTSP_CLIENT_H

rtsp_client.cpp

c

#include "rtsp_client.h"

#include <iostream>

#include <sstream>

#include <cstring>

#include <sys/socket.h>

#include <arpa/inet.h>

#include <netinet/in.h>

#include <unistd.h>

#include <regex>

RTSPClient::RTSPClient()

: m_serverPort(554)

, m_rtspSocket(-1)

, m_state(State::INIT)

, m_cseq(0)

, m_rtpPort(0)

, m_rtcpPort(0)

, m_running(false)

, m_clockRate(90000) {

}

RTSPClient::~RTSPClient() {

disconnect();

}

bool RTSPClient::connect(const std::string& rtspUrl) {

std::lock_guard<std::mutex> lock(m_mutex);

if (m_state != State::INIT) {

std::cerr << "RTSPClient: Already connected or in progress" << std::endl;

return false;

}

if (!parseUrl(rtspUrl)) {

std::cerr << "RTSPClient: Failed to parse URL: " << rtspUrl << std::endl;

return false;

}

// Create the RTSP (TCP) socket

m_rtspSocket = socket(AF_INET, SOCK_STREAM, 0);

if (m_rtspSocket < 0) {

std::cerr << "RTSPClient: Failed to create socket" << std::endl;

return false;

}

// Fill in the server address

sockaddr_in serverAddr;

memset(&serverAddr, 0, sizeof(serverAddr));

serverAddr.sin_family = AF_INET;

serverAddr.sin_port = htons(m_serverPort);

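// Convert the server address (inet_pton accepts numeric IPv4 only; a host name such as "localhost" is not resolved)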

if (inet_pton(AF_INET, m_serverAddress.c_str(), &serverAddr.sin_addr) <= 0) {

std::cerr << "RTSPClient: Invalid address/ Address not supported" << std::endl;

close(m_rtspSocket);

m_rtspSocket = -1;

return false;

}

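// Connect to the server. The leading :: selects the POSIX connect(); an unqualified call would resolve to RTSPClient::connect(const std::string&) and fail to compile

if (::connect(m_rtspSocket, (struct sockaddr*)&serverAddr, sizeof(serverAddr)) < 0) {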

std::cerr << "RTSPClient: Connection failed" << std::endl;

close(m_rtspSocket);

m_rtspSocket = -1;

return false;

}

// Send the OPTIONS request

std::string request = "OPTIONS " + rtspUrl + " RTSP/1.0\r\n";

request += "CSeq: " + std::to_string(++m_cseq) + "\r\n";

request += "User-Agent: RTSPClient\r\n\r\n";

std::string response = sendRequest(request);

if (response.empty()) {

std::cerr << "RTSPClient: No response to OPTIONS request" << std::endl;

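close(m_rtspSocket); // not disconnect(): it would lock m_mutex again, which this thread already holds

m_rtspSocket = -1;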

return false;

}

// Send the DESCRIBE request

request = "DESCRIBE " + rtspUrl + " RTSP/1.0\r\n";

request += "CSeq: " + std::to_string(++m_cseq) + "\r\n";

request += "Accept: application/sdp\r\n";

request += "User-Agent: RTSPClient\r\n\r\n";

response = sendRequest(request);

if (response.empty()) {

std::cerr << "RTSPClient: No response to DESCRIBE request" << std::endl;

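close(m_rtspSocket); // not disconnect(): it would lock m_mutex again, which this thread already holds

m_rtspSocket = -1;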

return false;

}

// Parse the SDP

if (!parseSDP(response)) {

std::cerr << "RTSPClient: Failed to parse SDP" << std::endl;

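// m_mutex is already held here, so calling disconnect() would deadlock; close the socket directly

close(m_rtspSocket);

m_rtspSocket = -1;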

return false;

}

m_state = State::READY;

return true;

}

void RTSPClient::disconnect() {

// unique_lock (rather than lock_guard) so the mutex can be released before joining the receive thread

std::unique_lock<std::mutex> lock(m_mutex);

if (m_state == State::TEARDOWN) {

return;

}

// Send the TEARDOWN request

if (m_state != State::INIT && m_rtspSocket != -1) {

std::string request = "TEARDOWN " + m_streamPath + " RTSP/1.0\r\n";

request += "CSeq: " + std::to_string(++m_cseq) + "\r\n";

if (!m_sessionId.empty()) {

request += "Session: " + m_sessionId + "\r\n";

}

request += "User-Agent: RTSPClient\r\n\r\n";

sendRequest(request);

}

m_state = State::TEARDOWN;

// Stop the receive thread. The frame handlers it calls lock m_mutex, so release the lock while joining to avoid a deadlock.

m_running = false;

lock.unlock();

if (m_receiveThread.joinable()) {

m_receiveThread.join();

}

lock.lock();

// Close the socket

if (m_rtspSocket != -1) {

close(m_rtspSocket);

m_rtspSocket = -1;

}

}

bool RTSPClient::play() {

std::lock_guard<std::mutex> lock(m_mutex);

if (m_state != State::READY && m_state != State::PAUSED) {

std::cerr << "RTSPClient: Cannot play in current state" << std::endl;

return false;

}

// Send the SETUP request

std::string request = "SETUP " + m_streamPath + "/track1 RTSP/1.0\r\n";

request += "CSeq: " + std::to_string(++m_cseq) + "\r\n";

if (!m_sessionId.empty()) {

request += "Session: " + m_sessionId + "\r\n";

}

request += "Transport: RTP/AVP;unicast;client_port=" +

std::to_string(m_rtpPort) + "-" + std::to_string(m_rtcpPort) + "\r\n";

request += "User-Agent: RTSPClient\r\n\r\n";

std::string response = sendRequest(request);

if (response.empty()) {

std::cerr << "RTSPClient: No response to SETUP request" << std::endl;

return false;

}

// Extract the session ID

std::regex sessionRegex("Session: (.*?)\r\n");

std::smatch sessionMatch;

if (std::regex_search(response, sessionMatch, sessionRegex) && sessionMatch.size() > 1) {

m_sessionId = sessionMatch[1].str();

// Strip an optional timeout parameter (e.g. ";timeout=60")

size_t semicolonPos = m_sessionId.find(';');

if (semicolonPos != std::string::npos) {

m_sessionId = m_sessionId.substr(0, semicolonPos);

}

} else {

std::cerr << "RTSPClient: Session ID not found in SETUP response" << std::endl;

return false;

}

// Extract the server ports

std::regex serverPortRegex("server_port=(\\d+)-(\\d+)");

std::smatch portMatch;

if (std::regex_search(response, portMatch, serverPortRegex) && portMatch.size() > 2) {

// The server's RTP and RTCP ports are available here, but this client only needs its own ports

}

// Send the PLAY request

request = "PLAY " + m_streamPath + " RTSP/1.0\r\n";

request += "CSeq: " + std::to_string(++m_cseq) + "\r\n";

request += "Session: " + m_sessionId + "\r\n";

request += "User-Agent: RTSPClient\r\n\r\n";

response = sendRequest(request);

if (response.empty()) {

std::cerr << "RTSPClient: No response to PLAY request" << std::endl;

return false;

}

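// Start the receive thread. It keeps running across PAUSE, so only create it once; assigning to a joinable std::thread would call std::terminate

m_running = true;

if (!m_receiveThread.joinable()) {

m_receiveThread = std::thread(&RTSPClient::receiveRTPData, this);

}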

m_state = State::PLAYING;

return true;

}

bool RTSPClient::pause() {

std::lock_guard<std::mutex> lock(m_mutex);

if (m_state != State::PLAYING) {

std::cerr << "RTSPClient: Cannot pause in current state" << std::endl;

return false;

}

// Send the PAUSE request

std::string request = "PAUSE " + m_streamPath + " RTSP/1.0\r\n";

request += "CSeq: " + std::to_string(++m_cseq) + "\r\n";

request += "Session: " + m_sessionId + "\r\n";

request += "User-Agent: RTSPClient\r\n\r\n";

std::string response = sendRequest(request);

if (response.empty()) {

std::cerr << "RTSPClient: No response to PAUSE request" << std::endl;

return false;

}

m_state = State::PAUSED;

return true;

}

std::string RTSPClient::getMediaInfo() const {

std::lock_guard<std::mutex> lock(m_mutex);

return m_mediaInfo;

}

void RTSPClient::setFrameCallback(const FrameCallback& callback) {

std::lock_guard<std::mutex> lock(m_mutex);

m_frameCallback = callback;

}

bool RTSPClient::parseUrl(const std::string& rtspUrl) {

// Parse the RTSP URL: rtsp://server:port/path

std::regex urlRegex("rtsp://([^:/]+)(?::(\\d+))?(/.*)");

std::smatch match;

if (std::regex_match(rtspUrl, match, urlRegex) && match.size() >= 3) {

m_serverAddress = match[1].str();

if (match.length(2) > 0) {

m_serverPort = std::stoi(match[2].str());

}

m_streamPath = "rtsp://" + m_serverAddress +

(match.length(2) > 0 ? (":" + match[2].str()) : "") +

match[3].str();

return true;

}

return false;

}

std::string RTSPClient::sendRequest(const std::string& request) {

if (m_rtspSocket == -1) {

return "";

}

// Send the request

ssize_t sent = send(m_rtspSocket, request.c_str(), request.length(), 0);

if (sent < 0) {

std::cerr << "RTSPClient: Error sending request" << std::endl;

return "";

}

// Receive the response

char buffer[4096];

std::string response;

while (true) {

memset(buffer, 0, sizeof(buffer));

ssize_t recvSize = recv(m_rtspSocket, buffer, sizeof(buffer) - 1, 0);

if (recvSize <= 0) {

break;

}

response.append(buffer, recvSize);

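// Check whether the complete response has arrived: the headers, plus the body when Content-Length is given (e.g. the SDP in a DESCRIBE response)

size_t headerEnd = response.find("\r\n\r\n");

if (headerEnd != std::string::npos) {

static const std::regex lengthRegex("Content-Length:\\s*(\\d+)", std::regex::icase);

std::smatch lengthMatch;

size_t bodyLength = 0;

if (std::regex_search(response, lengthMatch, lengthRegex)) {

bodyLength = std::stoul(lengthMatch[1].str());

}

if (response.size() >= headerEnd + 4 + bodyLength) {

break;

}

}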

}

return response;

}

bool RTSPClient::parseSDP(const std::string& sdp) {

// Locate the SDP body (it follows the blank line that ends the headers)

size_t sdpStart = sdp.find("\r\n\r\n");

if (sdpStart == std::string::npos) {

return false;

}

std::string sdpContent = sdp.substr(sdpStart + 4);

// Parse the media descriptions

std::regex mediaRegex("m=([^ ]+) ([^ ]+) ([^ ]+) ([^\r\n]+)");

std::sregex_iterator currentMatch(sdpContent.begin(), sdpContent.end(), mediaRegex);

std::sregex_iterator end;

bool foundVideo = false;

bool foundAudio = false;

while (currentMatch != end) {

std::smatch match = *currentMatch;

std::string mediaType = match[1].str();

std::string port = match[2].str();

std::string proto = match[3].str();

std::string fmt = match[4].str();

if (mediaType == "video" && !foundVideo) {

foundVideo = true;

m_mediaInfo += "Video: " + fmt + "\n";

// Look up the codec

std::regex codecRegex("a=rtpmap:(\\d+) (H264|H265|MP4V-ES)(?:/([^/\\r\\n]+))?");

std::smatch codecMatch;

if (std::regex_search(sdpContent, codecMatch, codecRegex) && codecMatch.size() >= 3) {

m_codec = codecMatch[2].str();

if (codecMatch.size() > 3 && codecMatch.length(3) > 0) {

m_clockRate = std::stoi(codecMatch[3].str());

}

}

} else if (mediaType == "audio" && !foundAudio) {

foundAudio = true;

m_mediaInfo += "Audio: " + fmt + "\n";

// Look up the codec

std::regex codecRegex("a=rtpmap:(\\d+) (MPEG4-GENERIC|PCMA|PCMU)(?:/([^/\\r\\n]+))?");

std::smatch codecMatch;

if (std::regex_search(sdpContent, codecMatch, codecRegex) && codecMatch.size() >= 3) {

if (codecMatch[2].str() == "MPEG4-GENERIC") {

m_codec = "AAC";

} else {

m_codec = codecMatch[2].str();

}

if (codecMatch.size() > 3 && codecMatch.length(3) > 0) {

m_clockRate = std::stoi(codecMatch[3].str());

}

}

}

++currentMatch;

}

// Pick a random even port for RTP; RTCP uses the next (odd) port

m_rtpPort = 10000 + (rand() % 5000) * 2;

m_rtcpPort = m_rtpPort + 1;

return true;

}

void RTSPClient::receiveRTPData() {

// Create the RTP socket

int rtpSocket = socket(AF_INET, SOCK_DGRAM, 0);

if (rtpSocket < 0) {

std::cerr << "RTSPClient: Failed to create RTP socket" << std::endl;

return;

}

// Bind the RTP port

sockaddr_in rtpAddr;

memset(&rtpAddr, 0, sizeof(rtpAddr));

rtpAddr.sin_family = AF_INET;

rtpAddr.sin_addr.s_addr = INADDR_ANY;

rtpAddr.sin_port = htons(m_rtpPort);

if (bind(rtpSocket, (struct sockaddr*)&rtpAddr, sizeof(rtpAddr)) < 0) {

std::cerr << "RTSPClient: Failed to bind RTP socket" << std::endl;

close(rtpSocket);

return;

}

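// Set a receive timeout so the loop below wakes up periodically and can see m_running become false (timeval may need <sys/time.h>)

timeval recvTimeout;

recvTimeout.tv_sec = 0;

recvTimeout.tv_usec = 100000; // 100 ms

setsockopt(rtpSocket, SOL_SOCKET, SO_RCVTIMEO, &recvTimeout, sizeof(recvTimeout));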

char buffer[16000];

sockaddr_in senderAddr;

socklen_t senderAddrLen = sizeof(senderAddr);

std::vector<uint8_t> frameData;

uint32_t lastTimestamp = 0;

bool isKeyFrame = false;

while (m_running) {

senderAddrLen = sizeof(senderAddr); // value-result argument: reset before every recvfrom() call

ssize_t recvSize = recvfrom(rtpSocket, buffer, sizeof(buffer), 0,

(struct sockaddr*)&senderAddr, &senderAddrLen);

if (recvSize > 0) {

std::vector<uint8_t> packetData(buffer, buffer + recvSize);

RTPPacket packet;

if (parseRTPPacket(packetData, packet)) {

// Video frames

if (m_codec == "H264" || m_codec == "H265") {

processVideoFrame(packet);

}

// Audio frames

else if (m_codec == "AAC") {

processAudioFrame(packet);

}

}

} else {

// Timeout or error: sleep briefly

std::this_thread::sleep_for(std::chrono::milliseconds(10));

}

}

close(rtpSocket);

}

bool RTSPClient::parseRTPPacket(const std::vector<uint8_t>& data, RTPPacket& packet) {

if (data.size() < 12) {

return false;

}

// Parse the RTP header

packet.version = (data[0] >> 6) & 0x03;

packet.padding = (data[0] >> 5) & 0x01;

packet.extension = (data[0] >> 4) & 0x01;

packet.cc = data[0] & 0x0F;

packet.marker = (data[1] >> 7) & 0x01;

packet.payloadType = data[1] & 0x7F;

packet.sequence = (data[2] << 8) | data[3];

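// Cast before shifting: a uint8_t promotes to int, and shifting a value >= 0x80 left by 24 overflows it

packet.timestamp = (static_cast<uint32_t>(data[4]) << 24) | (static_cast<uint32_t>(data[5]) << 16) | (static_cast<uint32_t>(data[6]) << 8) | data[7];

packet.ssrc = (static_cast<uint32_t>(data[8]) << 24) | (static_cast<uint32_t>(data[9]) << 16) | (static_cast<uint32_t>(data[10]) << 8) | data[11];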

// Extract the payload

size_t headerSize = 12 + (packet.cc * 4);

if (packet.extension && (data.size() > headerSize + 4)) {

uint16_t extLength = (data[headerSize + 2] << 8) | data[headerSize + 3];

headerSize += 4 + (extLength * 4);

}

if (data.size() > headerSize) {

packet.payload.assign(data.begin() + headerSize, data.end());

return true;

}

return false;

}

void RTSPClient::processVideoFrame(const RTPPacket& packet) {

std::lock_guard<std::mutex> lock(m_mutex);

if (!m_frameCallback) {

return;

}

if (m_codec == "H264" && packet.payload.size() > 0) {

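// H.264 NAL unit type

uint8_t nalType = packet.payload[0] & 0x1F;

// For FU-A fragments (type 28) the original NAL type is carried in the FU header, the second payload byte

if (nalType == 28 && packet.payload.size() > 1) {

nalType = packet.payload[1] & 0x1F;

}

// Treat SPS (7) and IDR slices (5) as key-frame data

bool isKeyFrame = (nalType == 7) || (nalType == 5);

// Invoke the callback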

m_frameCallback(packet.payload.data(), packet.payload.size(),

packet.timestamp, isKeyFrame);

} else if (m_codec == "H265" && packet.payload.size() > 0) {

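// H.265 NAL unit type

uint8_t nalType = (packet.payload[0] >> 1) & 0x3F;

// For fragmentation units (type 49) the original NAL type is in the FU header, the third payload byte

if (nalType == 49 && packet.payload.size() > 2) {

nalType = packet.payload[2] & 0x3F;

}

// NAL types 16-23 are IRAP pictures (key frames)

bool isKeyFrame = (nalType >= 16 && nalType <= 23);

// Invoke the callback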

m_frameCallback(packet.payload.data(), packet.payload.size(),

packet.timestamp, isKeyFrame);

}

}

void RTSPClient::processAudioFrame(const RTPPacket& packet) {

std::lock_guard<std::mutex> lock(m_mutex);

if (!m_frameCallback) {

return;

}

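// For AAC sent as MPEG4-GENERIC (RFC 3640), the payload normally starts with an AU-header section followed by raw access units, not ADTS frames; this simplified client passes the payload through unchanged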

m_frameCallback(packet.payload.data(), packet.payload.size(),

packet.timestamp, false);

}

client_example.cpp
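A note before the example program: the frame callback receives raw RTP payloads, which carry NAL units without Annex B start codes, so per-frame dumps of those payloads are not directly playable. The following is a minimal sketch, assuming each buffer holds one complete H.264 NAL unit (FU-A fragments would have to be reassembled first), of building a single Annex B stream instead. `appendAnnexB` is a hypothetical helper and is not part of the original code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Append one complete NAL unit to an Annex B byte stream by prefixing
// the 4-byte start code 00 00 00 01. (Hypothetical helper.)
void appendAnnexB(std::vector<uint8_t>& stream, const uint8_t* nal, size_t size) {
    static const uint8_t kStartCode[4] = {0x00, 0x00, 0x00, 0x01};
    assert(nal != nullptr || size == 0);  // a null pointer is only valid for an empty unit
    if (size == 0) {
        return;  // nothing to write
    }
    stream.insert(stream.end(), kStartCode, kStartCode + 4);
    stream.insert(stream.end(), nal, nal + size);
}
```

One possible use is to collect the bytes produced this way and write them to a single output file opened in append mode, rather than one file per callback.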

c

#include "rtsp_client.h"

#include <iostream>

#include <fstream>

#include <atomic>

#include <thread>

#include <sstream>

#include <string>

#include <cstdint>

// A simple frame processor

class FrameProcessor {

public:

FrameProcessor(const std::string& outputPath)

: m_outputPath(outputPath), m_frameCount(0) {}

void handleFrame(const uint8_t* data, size_t size, uint32_t timestamp, bool isKeyFrame) {

std::cout << "Frame received: size=" << size

<< ", timestamp=" << timestamp

<< ", keyframe=" << (isKeyFrame ? "YES" : "NO")

<< std::endl;

// Save the frame data to a file

if (m_outputPath.empty()) {

return;

}

std::stringstream ss;

ss << m_outputPath << "/frame_" << m_frameCount++ << ".h264";

std::ofstream frameFile(ss.str(), std::ios::binary);

if (frameFile.is_open()) {

frameFile.write(reinterpret_cast<const char*>(data), size);

frameFile.close();

}

}

private:

std::string m_outputPath;

std::atomic<int> m_frameCount;

};

int main(int argc, char* argv[]) {

if (argc < 2) {

std::cout << "Usage: " << argv[0] << " <rtsp_url> [output_dir]" << std::endl;

return 1;

}

std::string rtspUrl = argv[1];

std::string outputPath = (argc > 2) ? argv[2] : "";

// Create the frame processor

FrameProcessor processor(outputPath);

// Create the RTSP client

RTSPClient client;

// Register the frame callback

client.setFrameCallback([&processor](const uint8_t* data, size_t size, uint32_t timestamp, bool isKeyFrame) {

processor.handleFrame(data, size, timestamp, isKeyFrame);

});

// Connect to the RTSP server

if (!client.connect(rtspUrl)) {

std::cerr << "Failed to connect to RTSP server" << std::endl;

return 1;

}

// Print the media information

std::cout << "Media information:" << std::endl;

std::cout << client.getMediaInfo() << std::endl;

// Start playback

if (!client.play()) {

std::cerr << "Failed to start playing" << std::endl;

client.disconnect();

return 1;

}

std::cout << "Playing stream from: " << rtspUrl << std::endl;

std::cout << "Press Enter to stop..." << std::endl;

// Wait for user input

std::cin.get();

// Stop playback

client.disconnect();

std::cout << "RTSP client stopped" << std::endl;

return 0;

}

2. A more complete example: frame-type detection, packetization, and depacketization
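The listing in this section splits large H.264 NAL units into FU-A packets on the server side. The round trip can be sketched in isolation as follows. `fragmentFuA` and `FuAReassembler` are illustrative names that do not appear in the original code, and the sketch assumes only single NAL unit packets (types 1-23) and FU-A packets (type 28) as described in RFC 6184; packet loss and reordering are not handled.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Bytes = std::vector<uint8_t>;

// Split one NAL unit (without start code) into RTP payloads.
// maxPayload is the largest payload allowed, including the 2 FU bytes.
std::vector<Bytes> fragmentFuA(const Bytes& nal, size_t maxPayload) {
    assert(maxPayload >= 3);  // need room for FU indicator, FU header, and data
    std::vector<Bytes> out;
    if (nal.empty()) return out;
    if (nal.size() <= maxPayload) {  // small enough: single NAL unit packet
        out.push_back(nal);
        return out;
    }
    const uint8_t fuIndicator = static_cast<uint8_t>((nal[0] & 0xE0) | 28);  // keep F/NRI, type 28
    const uint8_t nalType = nal[0] & 0x1F;
    const size_t chunk = maxPayload - 2;
    for (size_t off = 1; off < nal.size(); off += chunk) {  // skip the NAL header byte
        const size_t n = std::min(chunk, nal.size() - off);
        uint8_t fuHeader = nalType;
        if (off == 1) fuHeader |= 0x80;               // S bit: first fragment
        if (off + n == nal.size()) fuHeader |= 0x40;  // E bit: last fragment
        Bytes p;
        p.push_back(fuIndicator);
        p.push_back(fuHeader);
        p.insert(p.end(), nal.begin() + off, nal.begin() + off + n);
        out.push_back(p);
    }
    return out;
}

// Rebuild NAL units from RTP payloads delivered in order.
class FuAReassembler {
public:
    // Returns true when `nal` has been filled with a complete NAL unit.
    bool push(const Bytes& payload, Bytes& nal) {
        if (payload.empty()) return false;
        const uint8_t type = payload[0] & 0x1F;
        if (type >= 1 && type <= 23) {  // single NAL unit packet
            nal = payload;
            return true;
        }
        if (type != 28 || payload.size() < 3) return false;  // other modes not handled
        const uint8_t fuHeader = payload[1];
        if (fuHeader & 0x80) {  // start fragment: rebuild the original NAL header
            buf_.clear();
            buf_.push_back(static_cast<uint8_t>((payload[0] & 0xE0) | (fuHeader & 0x1F)));
            started_ = true;
        }
        if (!started_) return false;  // fragment without a start: drop it
        buf_.insert(buf_.end(), payload.begin() + 2, payload.end());
        if (fuHeader & 0x40) {  // end fragment: hand over the rebuilt unit
            nal.swap(buf_);
            buf_.clear();
            started_ = false;
            return true;
        }
        return false;
    }

private:
    Bytes buf_;
    bool started_ = false;
};
```

Copying the F and NRI bits from the original NAL header into the FU indicator, rather than hard-coding them, lets the receiver restore the header byte exactly.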

c

#include <iostream>

#include <string>

#include <vector>

#include <map>

#include <memory>

#include <cstring>

#include <thread>

#include <atomic>

#include <random>

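#include <ctime>

#include <cstdint>

#include <algorithm> // std::min

#include <chrono>

#include <functional> // std::function

// POSIX socket headers (this listing assumes Linux or another POSIX system)

#include <sys/socket.h>

#include <netinet/in.h>

#include <arpa/inet.h>

#include <unistd.h>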

// RTSP protocol constants

const int RTSP_PORT = 8554;

const int RTP_PORT_BASE = 5000;

const int RTCP_PORT_BASE = 5001;

const int MAX_PACKET_SIZE = 1400; // maximum RTP payload size: keeps the packet under a 1500-byte MTU after the IP, UDP, and RTP headers

// NAL unit types

enum NalUnitType {

NAL_UNKNOWN = 0,

NAL_SLICE = 1,

NAL_IDR_SLICE = 5,

NAL_SEI = 6,

NAL_SPS = 7,

NAL_PPS = 8,

NAL_AUD = 9

};

// Frame types

enum FrameType {

FRAME_I = 0,

FRAME_P = 1,

FRAME_B = 2

};

// RTP packet structure

struct RTPPacket {

uint8_t version; // version (2 bits)

uint8_t padding; // padding flag (1 bit)

uint8_t extension; // extension flag (1 bit)

uint8_t cc; // CSRC count (4 bits)

uint8_t marker; // marker bit (1 bit)

uint8_t payloadType; // payload type (7 bits)

uint16_t sequence; // sequence number

uint32_t timestamp; // timestamp

uint32_t ssrc; // synchronization source identifier

std::vector<uint8_t> payload; // payload data

RTPPacket() : version(2), padding(0), extension(0), cc(0), marker(0),

payloadType(96), sequence(0), timestamp(0), ssrc(0) {}

};

// Session information

struct Session {

std::string sessionId;

std::string streamName;

int clientRtpPort;

int clientRtcpPort;

int serverRtpPort;

int serverRtcpPort;

std::atomic<bool> isPlaying;

std::thread streamThread;

Session() : clientRtpPort(0), clientRtcpPort(0),

serverRtpPort(0), serverRtcpPort(0), isPlaying(false) {}

};

// Media source interface

class MediaSource {

public:

virtual ~MediaSource() {}

virtual std::string getMimeType() = 0;

virtual std::string getSdpDescription() = 0;

virtual bool getNextFrame(std::vector<uint8_t>& frameData, FrameType& frameType) = 0;

};

// H.264 media source

class H264MediaSource : public MediaSource {

private:

int width;

int height;

int fps;

uint32_t frameCount;

std::mt19937 randomGenerator;

std::uniform_int_distribution<int> frameTypeDist;

public:

H264MediaSource(int w, int h, int f = 30)

: width(w), height(h), fps(f), frameCount(0) {

randomGenerator.seed(std::time(nullptr));

frameTypeDist = std::uniform_int_distribution<int>(0, 9); // random value in 0-9

}

std::string getMimeType() override {

return "video/H264";

}

std::string getSdpDescription() override {

std::string sdp =

"m=video 0 RTP/AVP 96\r\n"

"a=rtpmap:96 H264/90000\r\n"

"a=fmtp:96 packetization-mode=1;profile-level-id=42001F;sprop-parameter-sets=Z0IAKeKQDwBE/LwEBAAMAAgAAAwAymA0A,aM48gA==\r\n"

"a=control:streamid=0\r\n";

return sdp;

}

bool getNextFrame(std::vector<uint8_t>& frameData, FrameType& frameType) override {

// Simulate an H.264 frame

frameCount++;

// Emit an I-frame first and then every 30 frames; the remaining frames are P-frames (90%) or B-frames (10%)

if (frameCount % 30 == 1) {

frameType = FRAME_I;

generateIFrame(frameData);

} else {

int randVal = frameTypeDist(randomGenerator);

if (randVal < 9) {

frameType = FRAME_P;

generatePFrame(frameData);

} else {

frameType = FRAME_B;

generateBFrame(frameData);

}

}

return true;

}

private:

void generateIFrame(std::vector<uint8_t>& frameData) {

// Simulate an I-frame (SPS + PPS + IDR)

// SPS (Sequence Parameter Set)

std::vector<uint8_t> sps = {

0x67, 0x42, 0x00, 0x1F, 0xE9, 0x01, 0x40, 0x16,

0xE3, 0x88, 0x80, 0x00, 0x00, 0x03, 0x00, 0x40,

0x00, 0x00, 0x0C, 0x8C, 0x3C, 0x60

};

// PPS (Picture Parameter Set)

std::vector<uint8_t> pps = {

0x68, 0xCE, 0x38, 0x80

};

// IDR slice (simulated with random data)

std::vector<uint8_t> idr(1024 * 4); // 4 KB IDR picture

idr[0] = 0x65; // NAL header: nal_ref_idc = 3, type 5 (IDR slice)

for (size_t i = 1; i < idr.size(); i++) {

idr[i] = rand() % 256;

}

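// Assemble the complete I-frame in Annex B form: a 00 00 00 01 start code precedes each NAL unit so that sendH264Frame() can split them apart

static const uint8_t startCode[4] = {0x00, 0x00, 0x00, 0x01};

frameData.insert(frameData.end(), startCode, startCode + 4);

frameData.insert(frameData.end(), sps.begin(), sps.end());

frameData.insert(frameData.end(), startCode, startCode + 4);

frameData.insert(frameData.end(), pps.begin(), pps.end());

frameData.insert(frameData.end(), startCode, startCode + 4);

frameData.insert(frameData.end(), idr.begin(), idr.end());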

}

void generatePFrame(std::vector<uint8_t>& frameData) {

// Simulate a P-frame

frameData.resize(1024 * 2); // 2 KB P-frame

frameData[0] = 0x41; // NAL header: nal_ref_idc = 2, type 1 (non-IDR slice)

for (size_t i = 1; i < frameData.size(); i++) {

frameData[i] = rand() % 256;

}

}

void generateBFrame(std::vector<uint8_t>& frameData) {

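// Simulate a B-frame

frameData.resize(1024); // 1 KB B-frame

frameData[0] = 0x01; // NAL header: type 1 (non-IDR slice, the same type as a P-frame) with nal_ref_idc = 0, as is usual for non-reference B-frames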

for (size_t i = 1; i < frameData.size(); i++) {

frameData[i] = rand() % 256;

}

}

};

// AAC media source

class AACMediaSource : public MediaSource {

private:

int sampleRate;

int channels;

public:

AACMediaSource(int sr = 44100, int ch = 2)

: sampleRate(sr), channels(ch) {}

std::string getMimeType() override {

return "audio/AAC";

}

std::string getSdpDescription() override {

std::string sdp =

"m=audio 0 RTP/AVP 97\r\n"

"a=rtpmap:97 MPEG4-GENERIC/" + std::to_string(sampleRate) + "/" + std::to_string(channels) + "\r\n"

"a=fmtp:97 profile-level-id=1;mode=AAC-hbr;sizelength=13;indexlength=3;indexdeltalength=3;config=1210\r\n"

"a=control:streamid=1\r\n";

return sdp;

}

bool getNextFrame(std::vector<uint8_t>& frameData, FrameType& frameType) override {

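// Simulate an AAC audio frame

frameType = FRAME_I; // audio has no I/P/B distinction; set a defined value so callers never read an uninitialized one

frameData.resize(1024); // 1 KB AAC frame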

for (size_t i = 0; i < frameData.size(); i++) {

frameData[i] = rand() % 256;

}

return true;

}

};

// RTSP server

class RTSPServer {

private:

int serverSocket;

std::map<std::string, std::shared_ptr<MediaSource>> mediaSources;

std::map<std::string, Session> sessions;

std::atomic<bool> running;

std::thread acceptThread;

public:

RTSPServer(int port = RTSP_PORT) : serverSocket(-1), running(false) {

// Create the TCP socket

serverSocket = socket(AF_INET, SOCK_STREAM, 0);

if (serverSocket < 0) {

std::cerr << "Failed to create socket" << std::endl;

return;

}

// Set socket options

int opt = 1;

if (setsockopt(serverSocket, SOL_SOCKET, SO_REUSEADDR, &opt, sizeof(opt)) < 0) {

std::cerr << "Failed to set socket options" << std::endl;

close(serverSocket);

serverSocket = -1;

return;

}

// Bind the address

sockaddr_in serverAddr;

memset(&serverAddr, 0, sizeof(serverAddr));

serverAddr.sin_family = AF_INET;

serverAddr.sin_addr.s_addr = INADDR_ANY;

serverAddr.sin_port = htons(port);

if (bind(serverSocket, (struct sockaddr*)&serverAddr, sizeof(serverAddr)) < 0) {

std::cerr << "Failed to bind socket" << std::endl;

close(serverSocket);

serverSocket = -1;

return;

}

// Listen for connections

if (listen(serverSocket, 5) < 0) {

std::cerr << "Failed to listen on socket" << std::endl;

close(serverSocket);

serverSocket = -1;

return;

}

}

~RTSPServer() {

stop();

if (serverSocket >= 0) {

close(serverSocket);

}

}

void addMediaSource(const std::string& name, std::shared_ptr<MediaSource> source) {

mediaSources[name] = source;

}

bool start() {

if (serverSocket < 0 || running) {

return false;

}

running = true;

acceptThread = std::thread(&RTSPServer::acceptConnections, this);

return true;

}

void stop() {

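running = false;

// Wake up the blocking accept() so the accept thread can observe running == false (shutdown() on a listening socket has this effect on Linux; other platforms may need a different mechanism)

if (serverSocket >= 0) {

shutdown(serverSocket, SHUT_RDWR);

}

if (acceptThread.joinable()) {

acceptThread.join();

}

// Stop all sessions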

for (auto& pair : sessions) {

pair.second.isPlaying = false;

if (pair.second.streamThread.joinable()) {

pair.second.streamThread.join();

}

}

sessions.clear();

}

private:

void acceptConnections() {

while (running) {

sockaddr_in clientAddr;

socklen_t clientAddrLen = sizeof(clientAddr);

int clientSocket = accept(serverSocket, (struct sockaddr*)&clientAddr, &clientAddrLen);

if (clientSocket < 0) {

if (running) {

std::cerr << "Failed to accept client connection" << std::endl;

}

continue;

}

// Spawn a handler thread for the client

std::thread([this, clientSocket]() {

handleClient(clientSocket);

close(clientSocket);

}).detach();

}

}

void handleClient(int clientSocket) {

char buffer[4096];

std::string sessionId;

while (running) {

memset(buffer, 0, sizeof(buffer));

int bytesRead = recv(clientSocket, buffer, sizeof(buffer) - 1, 0);

if (bytesRead <= 0) {

break; // client disconnected (or a receive error occurred)

}

std::string request(buffer, bytesRead);

std::string response = processRequest(request, sessionId);

send(clientSocket, response.c_str(), response.size(), 0);

}

}

std::string processRequest(const std::string& request, std::string& sessionId) {

// Parse the RTSP request method

size_t methodEnd = request.find(' ');

if (methodEnd == std::string::npos) {

return "RTSP/1.0 400 Bad Request\r\n\r\n";

}

std::string method = request.substr(0, methodEnd);

size_t uriEnd = request.find(' ', methodEnd + 1);

if (uriEnd == std::string::npos) {

return "RTSP/1.0 400 Bad Request\r\n\r\n";

}

std::string uri = request.substr(methodEnd + 1, uriEnd - methodEnd - 1);

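// CSeq is mandatory; reject requests without it instead of reading from a bogus offset

size_t seqPos = request.find("CSeq: ");

if (seqPos == std::string::npos) {

return "RTSP/1.0 400 Bad Request\r\n\r\n";

}

size_t seqStart = seqPos + 6;

size_t seqEnd = request.find("\r\n", seqStart);

std::string cseq = request.substr(seqStart, seqEnd - seqStart);

// Dispatch on the RTSP method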

if (method == "OPTIONS") {

return "RTSP/1.0 200 OK\r\n"

"CSeq: " + cseq + "\r\n"

"Public: OPTIONS, DESCRIBE, SETUP, PLAY, PAUSE, TEARDOWN\r\n\r\n";

}

else if (method == "DESCRIBE") {

// Extract the stream name

size_t streamStart = uri.find_last_of('/') + 1;

std::string streamName = uri.substr(streamStart);

auto it = mediaSources.find(streamName);

if (it == mediaSources.end()) {

return "RTSP/1.0 404 Not Found\r\n"

"CSeq: " + cseq + "\r\n\r\n";

}

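// Prepend the session-level SDP lines (v=, o=, s=, c=, t=); the media source only supplies the media-level section

std::string sdp =

"v=0\r\n"

"o=- 0 0 IN IP4 0.0.0.0\r\n"

"s=Simulated Stream\r\n"

"c=IN IP4 0.0.0.0\r\n"

"t=0 0\r\n"

+ it->second->getSdpDescription();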

return "RTSP/1.0 200 OK\r\n"

"CSeq: " + cseq + "\r\n"

"Content-Base: " + uri + "/\r\n"

"Content-Type: application/sdp\r\n"

"Content-Length: " + std::to_string(sdp.size()) + "\r\n\r\n"

+ sdp;

}

else if (method == "SETUP") {

// Extract the stream name

size_t streamStart = uri.find_last_of('/') + 1;

std::string streamName = uri.substr(streamStart);

auto it = mediaSources.find(streamName);

if (it == mediaSources.end()) {

return "RTSP/1.0 404 Not Found\r\n"

"CSeq: " + cseq + "\r\n\r\n";

}

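// Extract the client ports from the Transport header, rejecting requests that lack them

size_t transportPos = request.find("Transport: ");

size_t clientPortPos = (transportPos == std::string::npos)

? std::string::npos : request.find("client_port=", transportPos);

if (clientPortPos == std::string::npos) {

return "RTSP/1.0 461 Unsupported Transport\r\n"

"CSeq: " + cseq + "\r\n\r\n";

}

size_t transportEnd = request.find("\r\n", transportPos);

std::string transport = request.substr(transportPos + 11, transportEnd - transportPos - 11);

size_t clientPortStart = transport.find("client_port=") + 12;

size_t clientPortEnd = transport.find("-", clientPortStart);

int clientRtpPort = std::stoi(transport.substr(clientPortStart, clientPortEnd - clientPortStart));

// If only one port was given, assume RTCP on the next port

int clientRtcpPort = (clientPortEnd == std::string::npos)

? clientRtpPort + 1 : std::stoi(transport.substr(clientPortEnd + 1));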

// Generate the session ID

sessionId = generateSessionId();

// Create the session in place: Session holds a std::atomic and a std::thread, so it cannot be copied into the map

const int portOffset = static_cast<int>(sessions.size()) * 2;

Session& session = sessions[sessionId];

session.sessionId = sessionId;

session.streamName = streamName;

session.clientRtpPort = clientRtpPort;

session.clientRtcpPort = clientRtcpPort;

session.serverRtpPort = RTP_PORT_BASE + portOffset;

session.serverRtcpPort = RTCP_PORT_BASE + portOffset;

session.isPlaying = false;

return "RTSP/1.0 200 OK\r\n"

"CSeq: " + cseq + "\r\n"

"Session: " + sessionId + "\r\n"

"Transport: RTP/AVP;unicast;client_port=" +

std::to_string(clientRtpPort) + "-" + std::to_string(clientRtcpPort) +

";server_port=" + std::to_string(session.serverRtpPort) + "-" +

std::to_string(session.serverRtcpPort) + "\r\n\r\n";

}

else if (method == "PLAY") {

if (sessionId.empty()) {

return "RTSP/1.0 454 Session Not Found\r\n"

"CSeq: " + cseq + "\r\n\r\n";

}

auto it = sessions.find(sessionId);

if (it == sessions.end()) {

return "RTSP/1.0 454 Session Not Found\r\n"

"CSeq: " + cseq + "\r\n\r\n";

}

// Start the streaming thread

if (!it->second.isPlaying) {

it->second.isPlaying = true;

it->second.streamThread = std::thread(&RTSPServer::sendMediaStream, this, sessionId);

}

return "RTSP/1.0 200 OK\r\n"

"CSeq: " + cseq + "\r\n"

"Session: " + sessionId + "\r\n"

"RTP-Info: url=" + uri + "\r\n\r\n";

}

else if (method == "PAUSE") {

if (sessionId.empty()) {

return "RTSP/1.0 454 Session Not Found\r\n"

"CSeq: " + cseq + "\r\n\r\n";

}

auto it = sessions.find(sessionId);

if (it == sessions.end()) {

return "RTSP/1.0 454 Session Not Found\r\n"

"CSeq: " + cseq + "\r\n\r\n";

}

it->second.isPlaying = false;

if (it->second.streamThread.joinable()) {

it->second.streamThread.join();

}

return "RTSP/1.0 200 OK\r\n"

"CSeq: " + cseq + "\r\n"

"Session: " + sessionId + "\r\n\r\n";

}

else if (method == "TEARDOWN") {

if (sessionId.empty()) {

return "RTSP/1.0 454 Session Not Found\r\n"

"CSeq: " + cseq + "\r\n\r\n";

}

auto it = sessions.find(sessionId);

if (it == sessions.end()) {

return "RTSP/1.0 454 Session Not Found\r\n"

"CSeq: " + cseq + "\r\n\r\n";

}

it->second.isPlaying = false;

if (it->second.streamThread.joinable()) {

it->second.streamThread.join();

}

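// Keep a copy of the ID for the response before clearing it

const std::string closedSessionId = sessionId;

sessions.erase(sessionId);

sessionId.clear();

return "RTSP/1.0 200 OK\r\n"

"CSeq: " + cseq + "\r\n"

"Session: " + closedSessionId + "\r\n\r\n";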

}

else {

return "RTSP/1.0 501 Not Implemented\r\n"

"CSeq: " + cseq + "\r\n\r\n";

}

}

std::string generateSessionId() {

static std::atomic<uint32_t> sessionCounter(0);

return std::to_string(time(nullptr)) + "_" + std::to_string(sessionCounter++);

}

void sendMediaStream(const std::string& sessionId) {

auto it = sessions.find(sessionId);

if (it == sessions.end()) {

return;

}

Session& session = it->second;

auto mediaSourceIt = mediaSources.find(session.streamName);

if (mediaSourceIt == mediaSources.end()) {

return;

}

std::shared_ptr<MediaSource> mediaSource = mediaSourceIt->second;

// Create the RTP socket

int rtpSocket = socket(AF_INET, SOCK_DGRAM, 0);

if (rtpSocket < 0) {

std::cerr << "Failed to create RTP socket" << std::endl;

return;

}

// Set the destination address

sockaddr_in clientAddr;

memset(&clientAddr, 0, sizeof(clientAddr));

clientAddr.sin_family = AF_INET;

clientAddr.sin_addr.s_addr = INADDR_ANY; // NOTE: a real server should use the client's IP address here

clientAddr.sin_port = htons(session.clientRtpPort);

// Initialize the RTP parameters

RTPPacket rtpPacket;

rtpPacket.ssrc = rand();

rtpPacket.sequence = rand() % 65536;

rtpPacket.timestamp = static_cast<uint32_t>(rand()); // random initial timestamp

// Frame-rate control

const int fps = 30;

const int frameDuration = 1000 / fps; // milliseconds

uint32_t frameCount = 0;

while (session.isPlaying) {

std::vector<uint8_t> frameData;

FrameType frameType;

// Fetch the next frame

if (!mediaSource->getNextFrame(frameData, frameType)) {

break;

}

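// Packetize and send

if (mediaSource->getMimeType() == "video/H264") {

// sendH264Frame() advances the sequence number once for every packet it sends

sendH264Frame(rtpSocket, clientAddr, frameData, frameType, rtpPacket);

} else {

// Other media types: one RTP packet per frame

sendRtpPacket(rtpSocket, clientAddr, frameData, rtpPacket);

rtpPacket.sequence = (rtpPacket.sequence + 1) % 65536;

}

// Advance the timestamp by one frame interval

rtpPacket.timestamp += 90000 / fps; // 90 kHz clock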

frameCount++;

// Pace the output to the frame rate

std::this_thread::sleep_for(std::chrono::milliseconds(frameDuration));

}

close(rtpSocket);

}

void sendH264Frame(int socket, sockaddr_in& addr, const std::vector<uint8_t>& frameData,

FrameType frameType, RTPPacket& rtpPacket) {

// Walk through the NAL units in the frame

size_t offset = 0;

while (offset < frameData.size()) {

// Skip the NAL start code (0x00000001 or 0x000001)

size_t nalStart = offset;

if (frameData.size() - offset >= 4 &&

frameData[offset] == 0x00 && frameData[offset+1] == 0x00 &&

frameData[offset+2] == 0x00 && frameData[offset+3] == 0x01) {

nalStart += 4;

offset += 4;

} else if (frameData.size() - offset >= 3 &&

frameData[offset] == 0x00 && frameData[offset+1] == 0x00 &&

frameData[offset+2] == 0x01) {

nalStart += 3;

offset += 3;

} else {

// No start code found; this may be the first NAL unit

nalStart = offset;

}

// Find where the next NAL unit starts

size_t nextNalStart = frameData.size();

for (size_t i = offset; i + 3 < frameData.size(); i++) { // written this way so the bound cannot underflow for very short frames

if ((frameData[i] == 0x00 && frameData[i+1] == 0x00 &&

frameData[i+2] == 0x00 && frameData[i+3] == 0x01) ||

(frameData[i] == 0x00 && frameData[i+1] == 0x00 &&

frameData[i+2] == 0x01)) {

nextNalStart = i;

break;

}

}

size_t nalSize = nextNalStart - nalStart;

if (nalSize <= 0) {

break;

}

// Process the NAL unit

const uint8_t* nalData = &frameData[nalStart];

uint8_t nalType = nalData[0] & 0x1F;

// The RTP marker bit flags the last packet of a frame (access unit); it does not indicate a key frame

bool isLastNalInFrame = (nextNalStart >= frameData.size());

rtpPacket.marker = isLastNalInFrame ? 1 : 0;

// Fragment if the NAL unit does not fit in one packet

if (nalSize > MAX_PACKET_SIZE) {

fragmentAndSendNAL(socket, addr, nalData, nalSize, rtpPacket);

} else {

// No fragmentation needed: send as a single NAL unit packet

rtpPacket.payload.assign(nalData, nalData + nalSize);

sendRtpPacket(socket, addr, rtpPacket);

rtpPacket.sequence = (rtpPacket.sequence + 1) % 65536;

}

offset = nextNalStart;

}

}

void fragmentAndSendNAL(int socket, sockaddr_in& addr, const uint8_t* nalData,

size_t nalSize, RTPPacket& rtpPacket) {

uint8_t originalNalType = nalData[0] & 0x1F;

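uint8_t fuIndicator = (nalData[0] & 0xE0) | 28; // FU indicator: F and NRI bits copied from the original NAL header, type = 28 (FU-A)

size_t payloadSize = MAX_PACKET_SIZE - 2; // leave room for the FU indicator and FU header bytes

size_t offset = 1; // skip the original NAL header byte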

while (offset < nalSize) {

size_t fragmentSize = std::min(payloadSize, nalSize - offset);

bool isFirst = (offset == 1);

bool isLast = (offset + fragmentSize >= nalSize);

// Build the FU header

uint8_t fuHeader = originalNalType;

if (isFirst) fuHeader |= 0x80; // S (start) bit

if (isLast) fuHeader |= 0x40; // E (end) bit

// Build the RTP payload

rtpPacket.payload.resize(2 + fragmentSize);

rtpPacket.payload[0] = fuIndicator;

rtpPacket.payload[1] = fuHeader;

memcpy(&rtpPacket.payload[2], nalData + offset, fragmentSize);

// Set the marker bit on the last fragment only

rtpPacket.marker = isLast ? 1 : 0;

// Send the RTP packet

sendRtpPacket(socket, addr, rtpPacket);

// Advance the sequence number

rtpPacket.sequence = (rtpPacket.sequence + 1) % 65536;

offset += fragmentSize;

}

}

void sendRtpPacket(int socket, sockaddr_in& addr, const std::vector<uint8_t>& payload,

RTPPacket& rtpPacket) {

// Build the RTP header

uint8_t rtpHeader[12];

rtpHeader[0] = (rtpPacket.version << 6) | (rtpPacket.padding << 5) |

(rtpPacket.extension << 4) | rtpPacket.cc;

rtpHeader[1] = (rtpPacket.marker << 7) | rtpPacket.payloadType;

rtpHeader[2] = (rtpPacket.sequence >> 8) & 0xFF;

rtpHeader[3] = rtpPacket.sequence & 0xFF;

rtpHeader[4] = (rtpPacket.timestamp >> 24) & 0xFF;

rtpHeader[5] = (rtpPacket.timestamp >> 16) & 0xFF;

rtpHeader[6] = (rtpPacket.timestamp >> 8) & 0xFF;

rtpHeader[7] = rtpPacket.timestamp & 0xFF;

rtpHeader[8] = (rtpPacket.ssrc >> 24) & 0xFF;

rtpHeader[9] = (rtpPacket.ssrc >> 16) & 0xFF;

rtpHeader[10] = (rtpPacket.ssrc >> 8) & 0xFF;

rtpHeader[11] = rtpPacket.ssrc & 0xFF;

// Send the RTP packet

std::vector<uint8_t> packet;

packet.insert(packet.end(), rtpHeader, rtpHeader + 12);

packet.insert(packet.end(), payload.begin(), payload.end());

sendto(socket, packet.data(), packet.size(), 0,

(struct sockaddr*)&addr, sizeof(addr));

}

void sendRtpPacket(int socket, sockaddr_in& addr, RTPPacket& rtpPacket) {

sendRtpPacket(socket, addr, rtpPacket.payload, rtpPacket);

}

};

// RTSP client

class RTSPClient {

private:

int rtspSocket;

std::string sessionId;

int cseq;

std::string serverAddress;

int serverPort;

int rtpPort;

int rtcpPort;

std::atomic<bool> isPlaying;

std::thread receiveThread;

std::function<void(const uint8_t*, size_t, uint32_t, bool)> frameCallback;

public:

RTSPClient() : rtspSocket(-1), cseq(0), serverPort(RTSP_PORT),

rtpPort(0), rtcpPort(0), isPlaying(false) {}

~RTSPClient() {

disconnect();

}

bool connect(const std::string& url) {

// Parse the URL

size_t protocolEnd = url.find("://");

if (protocolEnd == std::string::npos) {

std::cerr << "Invalid RTSP URL" << std::endl;

return false;

}

std::string protocol = url.substr(0, protocolEnd);

if (protocol != "rtsp") {

std::cerr << "Unsupported protocol: " << protocol << std::endl;

return false;

}

size_t hostStart = protocolEnd + 3;

size_t hostEnd = url.find(":", hostStart);

size_t pathStart = url.find("/", hostStart);

if (hostEnd != std::string::npos && pathStart != std::string::npos && hostEnd < pathStart) {

// The URL includes a port number

serverAddress = url.substr(hostStart, hostEnd - hostStart);

serverPort = std::stoi(url.substr(hostEnd + 1, pathStart - hostEnd - 1));

} else if (pathStart != std::string::npos) {

// No port number given: use the default port

serverAddress = url.substr(hostStart, pathStart - hostStart);

} else {

std::cerr << "Invalid RTSP URL" << std::endl;

return false;

}

std::string path = url.substr(pathStart);

// Create the RTSP socket

rtspSocket = socket(AF_INET, SOCK_STREAM, 0);

if (rtspSocket < 0) {

std::cerr << "Failed to create RTSP socket" << std::endl;

return false;

}

// Connect to the server

sockaddr_in serverAddr;

memset(&serverAddr, 0, sizeof(serverAddr));

serverAddr.sin_family = AF_INET;

serverAddr.sin_port = htons(serverPort);

inet_pton(AF_INET, serverAddress.c_str(), &serverAddr.sin_addr);

if (::connect(rtspSocket, (struct sockaddr*)&serverAddr, sizeof(serverAddr)) < 0) { // ::connect is the socket call, not this member function

std::cerr << "Failed to connect to RTSP server" << std::endl;

close(rtspSocket);

rtspSocket = -1;

return false;

}

// Send the OPTIONS request

std::string response = sendRequest("OPTIONS " + url + " RTSP/1.0\r\n\r\n");

if (!parseResponseStatus(response, 200)) {

std::cerr << "OPTIONS request failed" << std::endl;

disconnect();

return false;

}

// Send the DESCRIBE request

response = sendRequest("DESCRIBE " + url + " RTSP/1.0\r\n"

"Accept: application/sdp\r\n\r\n");

if (!parseResponseStatus(response, 200)) {

std::cerr << "DESCRIBE request failed" << std::endl;

disconnect();

return false;

}

// Extract the SDP body from the response

std::string sdp = parseSDP(response);

if (sdp.empty()) {

std::cerr << "Failed to parse SDP" << std::endl;

disconnect();

return false;

}

// Pick a random even RTP port in [10000, 19998]; RTCP uses the next port

rtpPort = 10000 + (rand() % 5000) * 2;

rtcpPort = rtpPort + 1;

// Send the SETUP request

response = sendRequest("SETUP " + url + " RTSP/1.0\r\n"

"Transport: RTP/AVP;unicast;client_port=" +

std::to_string(rtpPort) + "-" + std::to_string(rtcpPort) + "\r\n\r\n");

if (!parseResponseStatus(response, 200)) {

std::cerr << "SETUP request failed" << std::endl;

disconnect();

return false;

}

// Parse the session ID

sessionId = parseSessionId(response);

if (sessionId.empty()) {

std::cerr << "Failed to get session ID" << std::endl;

disconnect();

return false;

}

return true;

}

void disconnect() {

if (isPlaying) {

pause();

}

if (rtspSocket >= 0) {

// Send the TEARDOWN request

if (!sessionId.empty()) {

sendRequest("TEARDOWN * RTSP/1.0\r\n"

"Session: " + sessionId + "\r\n\r\n");

}

close(rtspSocket);

rtspSocket = -1;

sessionId.clear();

}

if (receiveThread.joinable()) {

receiveThread.join();

}

}

bool play() {

if (!isPlaying && rtspSocket >= 0 && !sessionId.empty()) {

std::string response = sendRequest("PLAY * RTSP/1.0\r\n"

"Session: " + sessionId + "\r\n\r\n");

if (!parseResponseStatus(response, 200)) {

std::cerr << "PLAY request failed" << std::endl;

return false;

}

isPlaying = true;

receiveThread = std::thread(&RTSPClient::receiveRtpPackets, this);

return true;

}

return false;

}

bool pause() {

if (isPlaying && rtspSocket >= 0 && !sessionId.empty()) {

isPlaying = false;

if (receiveThread.joinable()) {

receiveThread.join();

}

std::string response = sendRequest("PAUSE * RTSP/1.0\r\n"

"Session: " + sessionId + "\r\n\r\n");

return parseResponseStatus(response, 200);

}

return false;

}

void setFrameCallback(const std::function<void(const uint8_t*, size_t, uint32_t, bool)>& callback) {

frameCallback = callback;

}

std::string getMediaInfo() {

// Placeholder: this should return the media information parsed from the SDP

return "Media Info: RTSP Stream";

}

private:

std::string sendRequest(const std::string& request) {

if (rtspSocket < 0) {

return "";

}

// Increment CSeq and insert the header right after the request line

cseq++;

std::string requestWithCSeq = request;

size_t crlfPos = request.find("\r\n");

if (crlfPos != std::string::npos) {

requestWithCSeq.insert(crlfPos + 2, "CSeq: " + std::to_string(cseq) + "\r\n");

}

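// Send the request

if (send(rtspSocket, requestWithCSeq.c_str(), requestWithCSeq.size(), 0) < 0) {

return "";

}

// Receive the response (assumes the whole response arrives in one recv call)

char buffer[4096];

memset(buffer, 0, sizeof(buffer));

int bytesRead = recv(rtspSocket, buffer, sizeof(buffer) - 1, 0);

if (bytesRead <= 0) {

// recv failed or the peer closed the connection; never build a string from a negative length

return "";

}

return std::string(buffer, bytesRead);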

}

bool parseResponseStatus(const std::string& response, int expectedStatus) {

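const std::string prefix = "RTSP/1.0 ";

size_t prefixPos = response.find(prefix);

if (prefixPos == std::string::npos) {

return false;

}

// Check for npos before adding the prefix length; npos + 9 would wrap around and pass the check

size_t statusStart = prefixPos + prefix.size();

size_t statusEnd = response.find(" ", statusStart);

if (statusEnd == std::string::npos) {

return false;

}

int statusCode = std::stoi(response.substr(statusStart, statusEnd - statusStart));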

return (statusCode == expectedStatus);

}

std::string parseSDP(const std::string& response) {

size_t sdpStart = response.find("\r\n\r\n");

if (sdpStart == std::string::npos) {

return "";

}

return response.substr(sdpStart + 4);

}

std::string parseSessionId(const std::string& response) {

size_t sessionStart = response.find("Session: ");

if (sessionStart == std::string::npos) {

return "";

}

sessionStart += 9;