一、定义

-

gpu部署

-

vllm +openai 请求

-

qwen 架构

-

训练数据格式

-

损失函数

-

训练demo

-

问题解决

二、实现

- gpu 部署

https://www.modelscope.cn/models/Qwen/Qwen3-Embedding-8B/summary

注意: SentenceTransformer 部署时,可能内存不足。采用transformer方式部署,能自动讲模型、数据分配到其他显存卡上,避免单卡不足的问题。

vllm 部署时,使用脚本启动,指定任务embed.

- vllm +openai 请求

Python

python -m vllm.entrypoints.openai.api_server \

--model Qwen3-Embedding-8B \

--task embed \

--tensor-parallel-size 4 \

--host 0.0.0.0 \

--port 8000 \

--enforce_eager \

--dtype float16 \

--gpu_memory_utilization 0.35 \

--served-model-name kejiqiye

Bash

curl http://localhost:8000/v1/embeddings \

-H "Content-Type: application/json" \

-d '{

"model": "kejiqiye",

"input": [

"What is the capital of China?",

"Explain gravity"

]

}'

from openai import OpenAI

client = OpenAI(

base_url="http://localhost:8000/v1",

api_key="EMPTY" # vllm 不需要 key,这里随便写

)

resp = client.embeddings.create(

model="kejiqiye",

input=[

"What is the capital of China?",

"Explain gravity"

]

)

for item in resp.data:

print(len(item.embedding)) # 向量维度- 模型架构

模型架构为Qwen3Model

Python

Qwen3Model(

(embed_tokens): Embedding(151665, 4096)

(layers): ModuleList(

(0-35): 36 x Qwen3DecoderLayer(

(self_attn): Qwen3Attention(

(q_proj): Linear(in_features=4096, out_features=4096, bias=False)

(k_proj): Linear(in_features=4096, out_features=1024, bias=False)

(v_proj): Linear(in_features=4096, out_features=1024, bias=False)

(o_proj): Linear(in_features=4096, out_features=4096, bias=False)

(q_norm): Qwen3RMSNorm((128,), eps=1e-06)

(k_norm): Qwen3RMSNorm((128,), eps=1e-06)

)

(mlp): Qwen3MLP(

(gate_proj): Linear(in_features=4096, out_features=12288, bias=False)

(up_proj): Linear(in_features=4096, out_features=12288, bias=False)

(down_proj): Linear(in_features=12288, out_features=4096, bias=False)

(act_fn): SiLU()

)

(input_layernorm): Qwen3RMSNorm((4096,), eps=1e-06)

(post_attention_layernorm): Qwen3RMSNorm((4096,), eps=1e-06)

)

)

(norm): Qwen3RMSNorm((4096,), eps=1e-06)

(rotary_emb): Qwen3RotaryEmbedding()

)-

数据训练格式

#两种方式均可, 损失函数选择--loss_type infonce

JSON

# sample without rejected_response

{"query": "sentence1", "response": "sentence1-positive"}

# sample with multiple rejected_response

{"query": "sentence1", "response": "sentence1-positive", "rejected_response": ["sentence1-negative1", "sentence1-negative2", ...]}#其他---loss_type 也可以,如cosine_similarity 格式,但需要其对应的数据集格式。

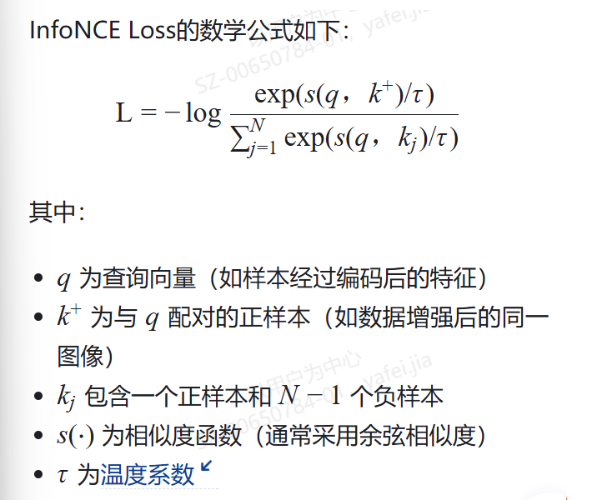

- 损失函数

infonce loss:(信息噪声对比损失):通过最大化正样本对的相似度并最小化负样本对的相似度,帮助模型学习有效的特征表示。

核心机制:通过softmax函数将正样本对的相似度与所有样本对的相似度进行对比,使正样本对的概率最大化。这一过程等效于优化互信息下界(I(q; k^+)),从而让模型捕捉数据中的本质特征

损失函数demo: ms-swift→plugin/loss.py

Python

# split tensors into single sample, 将拼接的样本进行拆分

# for example: batch_size=2 with tensor anchor(1)+positive(1)+negatives(3) + anchor(1)+positive(1)+negatives(2)

# labels will be [1,0,0,0,1,0,0], meaning 1 positive, 3 negatives, 1 positive, 2 negatives

split_tensors = _parse_multi_negative_sentences(sentences, labels, hard_negatives)

# negative numbers are equal

# [B, neg+2, D]

sentences = torch.stack(split_tensors, dim=0)

# [B, 1, D] * [B, neg+1, D]

similarity_matrix = torch.matmul(sentences[:, 0:1], sentences[:, 1:].transpose(1, 2)) / temperature

# The positive one is the first element

labels = torch.zeros(len(split_tensors), dtype=torch.int64).to(sentences.device)

loss = nn.CrossEntropyLoss()(similarity_matrix.squeeze(1), labels)

#InfoNCE 就是把对比学习问题转化为分类问题,再用交叉熵来优化。

Python

outputs = {'last_hidden_state': tensor([[-0.0289, -0.0035, -0.0092, ..., 0.0090, 0.0110, -0.0197],

[-0.0469, 0.0026, -0.0105, ..., 0.0029, -0.0238, 0.0090],

[ 0.0062, 0.0334, -0.0051, ..., 0.0036, 0.0011, 0.0069],

...,

[-0.0938, -0.0581, -0.0106, ..., -0.0077, 0.0098, 0.0483],

[-0.0378, -0.0742, -0.0081, ..., -0.0269, -0.0091, -0.0009],

[-0.0055, -0.0125, -0.0046, ..., -0.0204, -0.0028, -0.0081]],

dtype=torch.bfloat16, grad_fn=<DivBackward0>)}

labels = tensor([1., 0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.])- 训练demo

https://github.com/QwenLM/Qwen3-Embedding/blob/main/docs/training/SWIFT.md

训练时,将query、positive、negative 同时输入模型。如batch =4, 则输入batch = batch*(query_num+ positive_num+negative)

数据流向:

JSON

数据处理前 batch=4:{'anchor_input_ids': [100007, 67338, 117487, 36407, 104332, 100298, 101807, 102969, 103584, 99252, 11319, 151643], 'anchor_labels': [-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 151643], 'anchor_length': 12, 'positive_input_ids': [30534, 101907, 114826, 9370, 100745, 117487, 3837, 100724, 108521, 104183, 3837, 106084, 104273, 99222, 100964, 104705, 50511, 101153, 117487, 3837, 104431, 114826, 102488, 99616, 1773, 151643], 'negative_input_ids': [[99545, 106033, 99971, 100673, 106033, 99971, 16530, 104460, 101548, 3837, 106581, 100673, 99545, 106033, 1773, 151643], [111474, 99545, 71138, 99769, 102119, 108132, 111474, 104569, 105419, 15946, 3837, 109108, 105802, 102988, 118406, 104250, 3837, 100631, 105625, 15946, 104760, 102268, 101199, 53153, 99250, 104155, 104460, 106293, 1773, 151643], [100141, 50404, 99514, 121664, 5373, 111741, 5373, 32463, 52510, 32463, 99971, 111177, 9370, 102685, 72990, 103239, 86402, 1773, 73157, 102131, 30440, 99330, 102354, 99971, 20, 15, 15, 15, 106301, 1773, 151643], [99752, 100471, 99252, 9370, 99252, 52129, 31914, 101400, 21287, 18493, 120375, 102176, 33108, 99243, 99742, 119998, 9370, 59956, 101719, 52853, 17447, 1773, 151643], [104332, 101002, 101408, 100518,

100439, 115979, 100470, 115509, 107549, 33108, 104697, 102013, 3837, 104431, 101807, 100517, 101304, 24968, 39352, 104222, 108623, 100417, 82224, 104397, 100417, 99430, 71817, 111936, 117705, 3837, 104216, 101254, 108069, 102756, 104332, 1773, 101899, 99522, 3837, 50511, 37029, 110606, 9370, 101320, 107696, 71138, 5373, 105409, 5373, 116473, 90395, 100638, 111848, 86119, 1773, 91572, 3837, 73670, 37029, 99420, 110819, 104459, 71817, 30844, 21894, 40916, 69905, 1773, 151643]], 'positive_labels': [-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 151643], 'negative_labels': [[-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 151643], [-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 151643], [-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 151643], [-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 151643], [-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 151643]], 'negative_loss_scale': [None, None, None, None, None], 'positive_length': 26, 'negative_length': [16, 30, 31, 23, 69], 'labels': [1.0, 0.0, 0.0, 0.0, 0.0, 0.0], 'length': 207}

模型输入:

tensor([[ 18493, 30709, 100761, ..., 151643, 151643, 151643],

[101138, 112575, 3837, ..., 151643, 151643, 151643],

[ 71817, 100027, 27091, ..., 151643, 151643, 151643],

...,

[102354, 100174, 16530, ..., 151643, 151643, 151643],

[105625, 9370, 99971, ..., 151643, 151643, 151643],

[112646, 9370, 111858, ..., 151643, 151643, 151643]]) torch.Size([28, 101])

损失函数输入:

outputs = {'last_hidden_state': tensor([[-0.0289, -0.0035, -0.0092, ..., 0.0090, 0.0110, -0.0197],

[-0.0469, 0.0026, -0.0105, ..., 0.0029, -0.0238, 0.0090],

[ 0.0062, 0.0334, -0.0051, ..., 0.0036, 0.0011, 0.0069],

...,

[-0.0938, -0.0581, -0.0106, ..., -0.0077, 0.0098, 0.0483],

[-0.0378, -0.0742, -0.0081, ..., -0.0269, -0.0091, -0.0009],

[-0.0055, -0.0125, -0.0046, ..., -0.0204, -0.0028, -0.0081]],

dtype=torch.bfloat16, grad_fn=<DivBackward0>)}

labels = tensor([1., 0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0., 1., 0., 0., 0., 0., 0.])- 数据demo--->qwen3_emb.json

JSON

{"query": "如何培养一缸清澈透明、无异味且水质稳定的嫩绿水?",

"response": "培养嫩绿水的关键因素有光线、营养和日常管理。需要选择适当的光线,合理调整养殖密度,适中地换水,并每天目测水质变化,及时应对问题。光线选择要适度,散光太强要有遮蔽物,光线太弱可以使用较好的照明设备。营养管理要合理放养,换水次数要适中。管理要到位,确保每天观察水质的物理及生物因素变化。",

"rejected_response": ["因为插穗未生根之前必须保证插穗鲜嫩能进行光合作用以制造生根物质,同时减少插穗的水分蒸发。", "绿茶属于不发酵茶,其制成品的色泽和冲泡后的茶汤较多地保存了鲜茶叶的绿色格调。", "水滴鱼的身体呈凝胶果冻状,全身缺少肌肉,表皮略带粉色,密度比海水低,所以能够漂浮在水中;它没有鱼鳔,主要依靠一身的凝胶物来平衡水密度和抗压强。", "顺德筲箕鱼的特点是按鱼身不同部分安排不同调味,并使用竹藤编制的筲箕蒸制,使鱼肉达到一鱼三味的效果。", "采取一切有利于幼苗生长的措施,提高幼苗生存率。这一时期,水分是决定幼苗成活的关键因子。"]}- 训练指令

Swift

INFONCE_MASK_FAKE_NEGATIVE=true

swift sft \

--model dir1 \

--task_type embedding \

--model_type qwen3_emb \

--train_type lora \

--dataset qwen3_emb_train.json \

--split_dataset_ratio 0.05 \

--eval_strategy steps \

--output_dir output \

--eval_steps 100 \

--num_train_epochs 1 \

--save_steps 100 \

--per_device_train_batch_size 4 \

--per_device_eval_batch_size 4 \

--gradient_accumulation_steps 4 \

--learning_rate 6e-6 \

--loss_type infonce \

--label_names labels \

--dataloader_drop_last true

Swift

cpu 训练指令:

swift sft --model dir1 --task_type embedding --model_type qwen3_emb --train_type lora --dataset qwen3_emb_train.json --split_dataset_ratio 0.05 --eval_strategy steps --output_dir output --eval_steps 100 --num_train_epochs 1 --save_steps 100 --per_device_train_batch_size 4 --per_device_eval_batch_size 4 --gradient_accumulation_steps 4 --learning_rate 6e-6 --loss_type infonce --label_names labels --dataloader_drop_last true --fp16 false --bf16 false --use_cpu- 问题解决

2025-09-23 08:26:21\] 45fb934b8545:5358:5461 \[1\] misc/shmutils.cc:87 NCCL WARN Error: failed to extend /dev/shm/nccl-vCZC3l to 33030532 bytes, error: No space left on device (28) \[2025-09-23 08:26:21\] 45fb934b8545:5358:5461 \[1\] misc/shmutils.cc:129 NCCL WARN Error while creating shared memory segment /dev/shm/nccl-vCZC3l (size 33030528), error: No space left on device (28) \[2025-09-23 08:26:21\] 45fb934b8545:5359:5455 \[2\] misc/shmutils.cc:87 NCCL WARN Error: failed to extend /dev/shm/nccl-Wvu7fr to 33030532 bytes, error: No space left on device (28) \[2025-09-23 08:26:21\] 45fb934b8545:5357:5457 \[0\] misc/shmutils.cc:87 NCCL WARN Error: failed to extend /dev/shm/nccl-TM58ig to 33030532 bytes, error: No space left on device (28) \[2025-09-23 08:26:21\] 45fb934b8545:5360:5459 \[3\] misc/shmutils.cc:87 NCCL WARN Error: failed to extend /dev/shm/nccl-kIuUhv to 33030532 bytes, error: No space left on device (28) 45fb934b8545:5358:5461 \[1\] NCCL INFO proxy.cc:1336 -\> 2 \[2025-09-23 08:26:21\] 45fb934b8545:5359:5455 \[2\] misc/shmutils.cc:129 NCCL WARN Error while creating shared memory segment /dev/shm/nccl-Wvu7fr (size 33030528), error: No space left on device (28) 45fb934b8545:5359:5455 \[2\] NCCL INFO proxy.cc:1336 -\> 2 \[2025-09-23 08:26:21\] 45fb934b8545:5357:5457 \[0\] misc/shmutils.cc:129 NCCL WARN Error while creating shared memory segment /dev/shm/nccl-TM58ig (size 33030528), error: No space left on device (28) \[2025-09-23 08:26:21\] 45fb934b8545:5360:5459 \[3\] misc/shmutils.cc:129 NCCL WARN Error while creating shared memory segment /dev/shm/nccl-kIuUhv (size 33030528), error: No space left on device (28) docker 内使用多卡时,容易共享内存不足,导致vllm 无法启动。 因此,重新创建docker, 增大共享内存。 docker run -it --gpus all --name common_test1 --shm-size=24g -p38015:8014 -p38015:8013 -v /home/jyf:/home/ --privileged=true tone.tcl.com/devops/docker/release/ops/pytorch:2.1.0-cuda12.1-cudnn8-devel /bin/bash