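I. Background

Fixed in ttr-2.2.1 and later

In ttr-2.2.1 and later releases, Atlas automatically completes the Hive Hook injection once Kerberos is enabled.

On earlier releases (e.g. ttr-2.2.0), the Service Check can fail because Hive has not loaded the Atlas Hook JARs.

If you run into a similar problem during deployment or custom development, you can contact the author for the patch.

Four common permission or dependency problems when starting Atlas or integrating it with other services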

- HBase 无权限(AccessDeniedException)

- Solr 401 Unauthorized

- Kafka ACL 拒绝访问

- Hive not loading the Atlas Hook correctly (the focus of this article)

II. Symptom review and root-cause analysis

1. Error stack trace (excerpt)

```text
stderr:
NoneType: None

The above exception was the cause of the following exception:

Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/stacks/BIGTOP/3.2.0/services/HIVE/package/scripts/service_check.py", line 171, in <module>
    HiveServiceCheck().execute()
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 413, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/stacks/BIGTOP/3.2.0/services/HIVE/package/scripts/service_check.py", line 63, in service_check
    hcat_service_check()
  File "/var/lib/ambari-agent/cache/stacks/BIGTOP/3.2.0/services/HIVE/package/scripts/hcat_service_check.py", line 61, in hcat_service_check
    logoutput=True)
  File "/usr/lib/ambari-agent/lib/resource_management/core/base.py", line 168, in __init__
    self.env.run()
  File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 171, in run
    self.run_action(resource, action)
  File "/usr/lib/ambari-agent/lib/resource_management/core/environment.py", line 137, in run_action
    provider_action()
  File "/usr/lib/ambari-agent/lib/resource_management/core/providers/system.py", line 350, in action_run
    returns=self.resource.returns,
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 95, in inner
    result = function(command, **kwargs)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 161, in checked_call
    returns=returns,
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 278, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/ambari-agent/lib/resource_management/core/shell.py", line 493, in _call
    raise ExecutionFailed(err_msg, code, out, err)
resource_management.core.exceptions.ExecutionFailed: Execution of '/usr/bin/kinit -kt /etc/security/keytabs/smokeuser.headless.keytab ambari-qa-aaa@TTBIGDATA.COM; env JAVA_HOME=/opt/modules/jdk1.8.0_202 /var/lib/ambari-agent/tmp/hcatSmoke.sh hcatsmokeid000ac363_date330925 prepare true' returned 64. SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/bigtop/3.2.0/usr/lib/hadoop/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/bigtop/3.2.0/usr/lib/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]
25/11/09 19:33:56 INFO conf.HiveConf: Found configuration file file:/usr/bigtop/3.2.0/etc/hive/conf.dist/hive-site.xml
25/11/09 19:33:57 WARN conf.HiveConf: HiveConf of name hive.hook.proto.base-directory does not exist
25/11/09 19:33:57 WARN conf.HiveConf: HiveConf of name hive.strict.managed.tables does not exist
25/11/09 19:33:57 WARN conf.HiveConf: HiveConf of name hive.stats.fetch.partition.stats does not exist
25/11/09 19:33:57 WARN conf.HiveConf: HiveConf of name hive.heapsize does not exist
25/11/09 19:33:58 WARN conf.HiveConf: HiveConf of name hive.hook.proto.base-directory does not exist
25/11/09 19:33:58 WARN conf.HiveConf: HiveConf of name hive.strict.managed.tables does not exist
25/11/09 19:33:58 WARN conf.HiveConf: HiveConf of name hive.stats.fetch.partition.stats does not exist
25/11/09 19:33:58 WARN conf.HiveConf: HiveConf of name hive.heapsize does not exist
Hive Session ID = 496ff2ba-73a9-4573-ab8c-089d034dd7bb
25/11/09 19:33:58 INFO SessionState: Hive Session ID = 496ff2ba-73a9-4573-ab8c-089d034dd7bb
25/11/09 19:33:58 INFO cli.HCatCli: Forcing hive.execution.engine to mr
25/11/09 19:34:00 INFO session.SessionState: Created HDFS directory: /tmp/hive/ambari-qa/496ff2ba-73a9-4573-ab8c-089d034dd7bb
25/11/09 19:34:00 INFO session.SessionState: Created local directory: /tmp/ambari-qa/496ff2ba-73a9-4573-ab8c-089d034dd7bb
25/11/09 19:34:00 INFO session.SessionState: Created HDFS directory: /tmp/hive/ambari-qa/496ff2ba-73a9-4573-ab8c-089d034dd7bb/_tmp_space.db
25/11/09 19:34:00 INFO ql.Driver: Compiling command(queryId=ambari-qa_20251109193400_b9333130-1f67-414a-b411-e481864194ca): show tables
FAILED: RuntimeException Error loading hooks(hive.exec.post.hooks): java.lang.ClassNotFoundException: org.apache.atlas.hive.hook.HiveHook
    at java.net.URLClassLoader.findClass(URLClassLoader.java:382)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    at java.lang.Class.forName0(Native Method)
    at java.lang.Class.forName(Class.java:348)
    at org.apache.hadoop.hive.ql.hooks.HookUtils.readHooksFromConf(HookUtils.java:55)
    at org.apache.hadoop.hive.ql.HookRunner.loadHooksFromConf(HookRunner.java:90)
    at org.apache.hadoop.hive.ql.HookRunner.initialize(HookRunner.java:79)
    at org.apache.hadoop.hive.ql.HookRunner.runBeforeParseHook(HookRunner.java:105)
    at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:612)
    at org.apache.hadoop.hive.ql.Driver.compileInternal(Driver.java:1826)
    at org.apache.hadoop.hive.ql.Driver.compileAndRespond(Driver.java:1773)
    at org.apache.hadoop.hive.ql.Driver.compileAndRespond(Driver.java:1768)
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.compileAndRespond(ReExecDriver.java:126)
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.run(ReExecDriver.java:214)
    at org.apache.hive.hcatalog.cli.HCatDriver.run(HCatDriver.java:49)
    at org.apache.hive.hcatalog.cli.HCatCli.processCmd(HCatCli.java:291)
    at org.apache.hive.hcatalog.cli.HCatCli.processLine(HCatCli.java:245)
    at org.apache.hive.hcatalog.cli.HCatCli.main(HCatCli.java:182)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:323)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:236)
25/11/09 19:34:00 ERROR ql.Driver: FAILED: RuntimeException Error loading hooks(hive.exec.post.hooks): java.lang.ClassNotFoundException: org.apache.atlas.hive.hook.HiveHook
    at java.net.URLClassLoader.findClass(URLClassLoader.java:382)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    at java.lang.Class.forName0(Native Method)
    at java.lang.Class.forName(Class.java:348)
    at org.apache.hadoop.hive.ql.hooks.HookUtils.readHooksFromConf(HookUtils.java:55)
    at org.apache.hadoop.hive.ql.HookRunner.loadHooksFromConf(HookRunner.java:90)
    at org.apache.hadoop.hive.ql.HookRunner.initialize(HookRunner.java:79)
    at org.apache.hadoop.hive.ql.HookRunner.runBeforeParseHook(HookRunner.java:105)
    at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:612)
    at org.apache.hadoop.hive.ql.Driver.compileInternal(Driver.java:1826)
    at org.apache.hadoop.hive.ql.Driver.compileAndRespond(Driver.java:1773)
    at org.apache.hadoop.hive.ql.Driver.compileAndRespond(Driver.java:1768)
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.compileAndRespond(ReExecDriver.java:126)
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.run(ReExecDriver.java:214)
    at org.apache.hive.hcatalog.cli.HCatDriver.run(HCatDriver.java:49)
    at org.apache.hive.hcatalog.cli.HCatCli.processCmd(HCatCli.java:291)
    at org.apache.hive.hcatalog.cli.HCatCli.processLine(HCatCli.java:245)
    at org.apache.hive.hcatalog.cli.HCatCli.main(HCatCli.java:182)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:323)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:236)
java.lang.RuntimeException: Error loading hooks(hive.exec.post.hooks): java.lang.ClassNotFoundException: org.apache.atlas.hive.hook.HiveHook
    at java.net.URLClassLoader.findClass(URLClassLoader.java:382)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    at java.lang.Class.forName0(Native Method)
    at java.lang.Class.forName(Class.java:348)
    at org.apache.hadoop.hive.ql.hooks.HookUtils.readHooksFromConf(HookUtils.java:55)
    at org.apache.hadoop.hive.ql.HookRunner.loadHooksFromConf(HookRunner.java:90)
    at org.apache.hadoop.hive.ql.HookRunner.initialize(HookRunner.java:79)
    at org.apache.hadoop.hive.ql.HookRunner.runBeforeParseHook(HookRunner.java:105)
    at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:612)
    at org.apache.hadoop.hive.ql.Driver.compileInternal(Driver.java:1826)
    at org.apache.hadoop.hive.ql.Driver.compileAndRespond(Driver.java:1773)
    at org.apache.hadoop.hive.ql.Driver.compileAndRespond(Driver.java:1768)
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.compileAndRespond(ReExecDriver.java:126)
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.run(ReExecDriver.java:214)
    at org.apache.hive.hcatalog.cli.HCatDriver.run(HCatDriver.java:49)
    at org.apache.hive.hcatalog.cli.HCatCli.processCmd(HCatCli.java:291)
    at org.apache.hive.hcatalog.cli.HCatCli.processLine(HCatCli.java:245)
    at org.apache.hive.hcatalog.cli.HCatCli.main(HCatCli.java:182)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:323)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:236)
    at org.apache.hadoop.hive.ql.HookRunner.loadHooksFromConf(HookRunner.java:93)
    at org.apache.hadoop.hive.ql.HookRunner.initialize(HookRunner.java:79)
    at org.apache.hadoop.hive.ql.HookRunner.runBeforeParseHook(HookRunner.java:105)
    at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:612)
    at org.apache.hadoop.hive.ql.Driver.compileInternal(Driver.java:1826)
    at org.apache.hadoop.hive.ql.Driver.compileAndRespond(Driver.java:1773)
    at org.apache.hadoop.hive.ql.Driver.compileAndRespond(Driver.java:1768)
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.compileAndRespond(ReExecDriver.java:126)
    at org.apache.hadoop.hive.ql.reexec.ReExecDriver.run(ReExecDriver.java:214)
    at org.apache.hive.hcatalog.cli.HCatDriver.run(HCatDriver.java:49)
    at org.apache.hive.hcatalog.cli.HCatCli.processCmd(HCatCli.java:291)
    at org.apache.hive.hcatalog.cli.HCatCli.processLine(HCatCli.java:245)
    at org.apache.hive.hcatalog.cli.HCatCli.main(HCatCli.java:182)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:323)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:236)
Caused by: java.lang.ClassNotFoundException: org.apache.atlas.hive.hook.HiveHook
    at java.net.URLClassLoader.findClass(URLClassLoader.java:382)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
    at java.lang.Class.forName0(Native Method)
    at java.lang.Class.forName(Class.java:348)
    at org.apache.hadoop.hive.ql.hooks.HookUtils.readHooksFromConf(HookUtils.java:55)
    at org.apache.hadoop.hive.ql.HookRunner.loadHooksFromConf(HookRunner.java:90)
    ... 18 more
```

2. Core conclusions

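- Trigger: Ambari's Hive Service Check runs `hcatSmoke.sh`. When the HCatalog CLI compiles its first statement (`show tables`), Hive's `HookRunner` loads the Atlas `HiveHook` class listed in `hive.exec.post.hooks`.
- Root cause: at runtime `HIVE_AUX_JARS_PATH` does not include the `atlas-hive-plugin` JARs, which produces `ClassNotFoundException: org.apache.atlas.hive.hook.HiveHook`.
- Symptom: `kinit` succeeds and the Hive session starts, but hook initialization fails → the SQL compile stage errors out → the smoke script returns 64 and the Service Check fails.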
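Before changing any configuration, you can confirm this root cause on a host by checking whether any JAR on `HIVE_AUX_JARS_PATH` actually contains the class Hive failed to load. Below is a minimal sketch of such a check. `find_hook_jars` is a hypothetical helper, not part of Hive, Atlas or Ambari. It relies only on the fact that a JAR is a ZIP archive that stores each class under its package path. As an assumption of this sketch, the variable is split on both `:` and `,`.

```python
import os
import zipfile

# Class named in the ClassNotFoundException; inside a JAR it is stored
# under its package path with a .class suffix.
HOOK_CLASS = "org.apache.atlas.hive.hook.HiveHook"
HOOK_ENTRY = HOOK_CLASS.replace(".", "/") + ".class"


def find_hook_jars(aux_jars_path, entry=HOOK_ENTRY):
    """Return the JAR files on aux_jars_path that contain `entry`.

    The path is split on ':' and ','; empty items, directories, missing
    files and files that are not valid ZIP archives are skipped.
    """
    hits = []
    for item in aux_jars_path.replace(",", ":").split(":"):
        if not item or not os.path.isfile(item):
            continue
        try:
            with zipfile.ZipFile(item) as jar:
                if entry in jar.namelist():
                    hits.append(item)
        except (zipfile.BadZipFile, OSError):
            continue
    return hits


if __name__ == "__main__":
    jars = find_hook_jars(os.environ.get("HIVE_AUX_JARS_PATH", ""))
    if jars:
        print("HiveHook found in:")
        for jar in jars:
            print("  " + jar)
    else:
        print("HiveHook not found on HIVE_AUX_JARS_PATH (expect ClassNotFoundException)")
```

Run it in the same shell environment that launches Hive. An empty result corresponds to the `ClassNotFoundException` in the trace above.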

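III. Fix overview

- Step 1: inject the Atlas Hook dependencies into Hive (fix `HIVE_AUX_JARS_PATH`)
- Step 2: harden the hcat service-check script (explicitly inject the hook into the Service Check execution environment)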

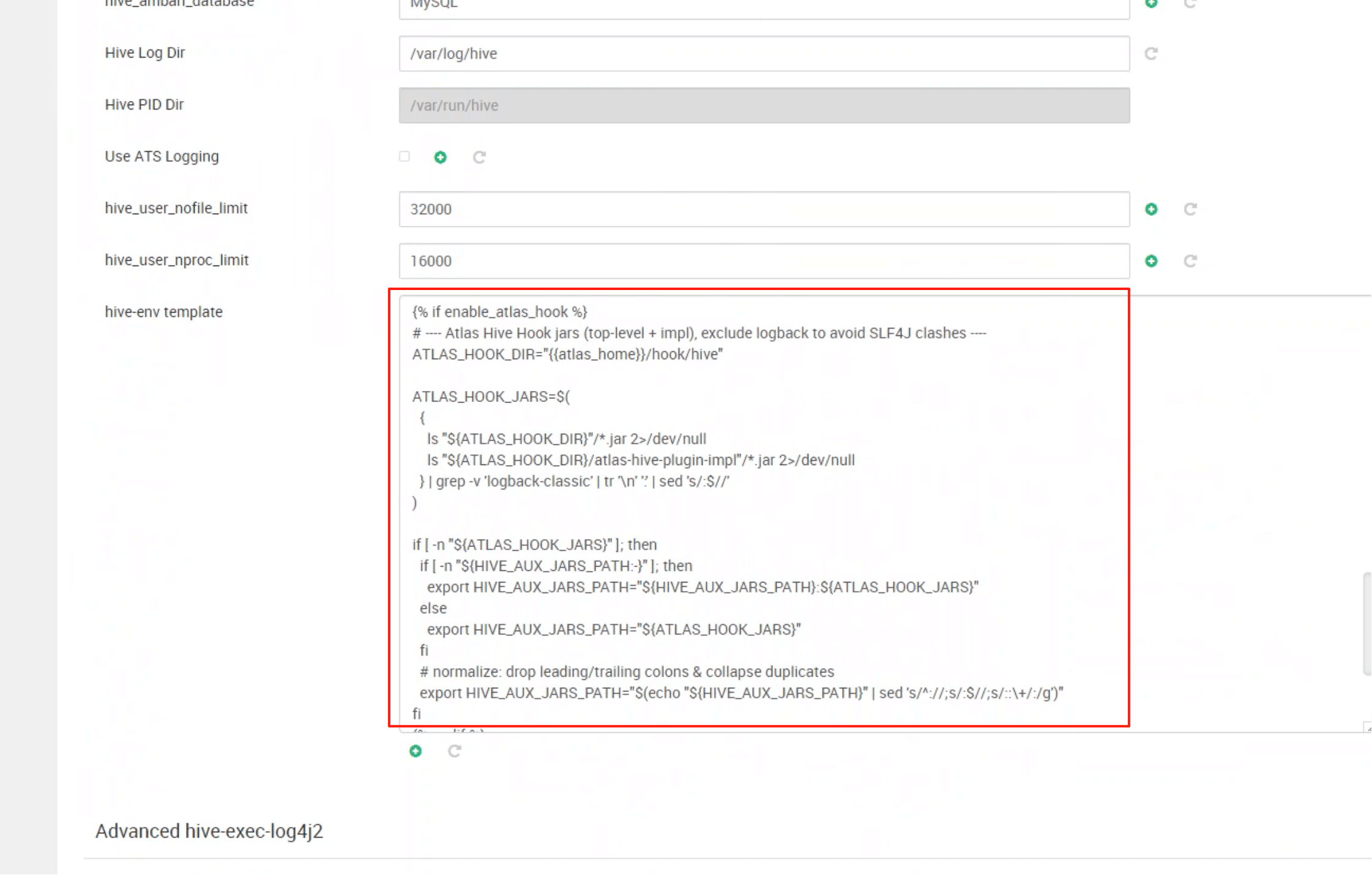

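IV. Step 1: Inject the Atlas Hook JARs into Hive

Goal: ensure that the `atlas-hive-plugin` dependencies can always be resolved in the runtime environment of the Hive CLI and Hive services.

Reference snippet from the rendered `hive-env.sh`: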

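```bash
ATLAS_HOOK_DIR="/usr/bigtop/current/atlas-server/hook/hive"

# Collect the hook JARs (top level + atlas-hive-plugin-impl/), drop
# logback-classic, and join the list with ':'
ATLAS_HOOK_JARS=$(
  {
    ls "${ATLAS_HOOK_DIR}"/*.jar 2>/dev/null
    ls "${ATLAS_HOOK_DIR}/atlas-hive-plugin-impl"/*.jar 2>/dev/null
  } | grep -v 'logback-classic' | tr '\n' ':' | sed 's/:$//'
)

if [ -n "${ATLAS_HOOK_JARS}" ]; then
  # Append to an existing HIVE_AUX_JARS_PATH, or initialize it
  if [ -n "${HIVE_AUX_JARS_PATH:-}" ]; then
    export HIVE_AUX_JARS_PATH="${HIVE_AUX_JARS_PATH}:${ATLAS_HOOK_JARS}"
  else
    export HIVE_AUX_JARS_PATH="${ATLAS_HOOK_JARS}"
  fi
  # Normalize: strip leading/trailing colons and collapse consecutive colons
  # (\+ in a basic regex is a GNU sed extension)
  export HIVE_AUX_JARS_PATH="$(echo "${HIVE_AUX_JARS_PATH}" | sed 's/^://;s/:$//;s/::\+/:/g')"
fi
```

Notes:

- Scans the Atlas Hook directory automatically and joins the JARs into `HIVE_AUX_JARS_PATH`;
- Filters out `logback-classic` so it does not add yet another SLF4J logging binding;
- Normalizes the path so stray colons do not break classpath parsing.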
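If you want to unit-test the assembly logic outside a shell, the minimal Python sketch below mirrors the `hive-env.sh` snippet above. It assumes the same directory layout: `*.jar` directly under the hook directory, plus `atlas-hive-plugin-impl/*.jar`. `build_aux_jars_path` is a hypothetical name. There is one deliberate difference from the shell version. Besides removing the empty entries that the `sed` normalization handles, it also drops duplicate entries, so evaluating it twice does not grow the path.

```python
import glob
import os


def build_aux_jars_path(hook_dir, existing=""):
    """Collect Atlas hook JARs under hook_dir and merge them into `existing`.

    Layout assumption (as in the article): JARs directly under hook_dir plus
    an atlas-hive-plugin-impl/ subdirectory.
    """
    jars = sorted(glob.glob(os.path.join(hook_dir, "*.jar")))
    jars += sorted(glob.glob(os.path.join(hook_dir, "atlas-hive-plugin-impl", "*.jar")))
    # Same filter as `grep -v 'logback-classic'` (substring match on the path).
    jars = [j for j in jars if "logback-classic" not in j]
    if not jars:
        # The shell version also leaves HIVE_AUX_JARS_PATH untouched here.
        return existing
    result, seen = [], set()
    for entry in existing.split(":") + jars:
        # Empty entries come from leading, trailing or doubled colons;
        # unlike the sed expression, repeated entries are dropped as well.
        if entry and entry not in seen:
            seen.add(entry)
            result.append(entry)
    return ":".join(result)
```

For example, `build_aux_jars_path("/usr/bigtop/current/atlas-server/hook/hive", os.environ.get("HIVE_AUX_JARS_PATH", ""))` returns the value the snippet would export, without the duplicates.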

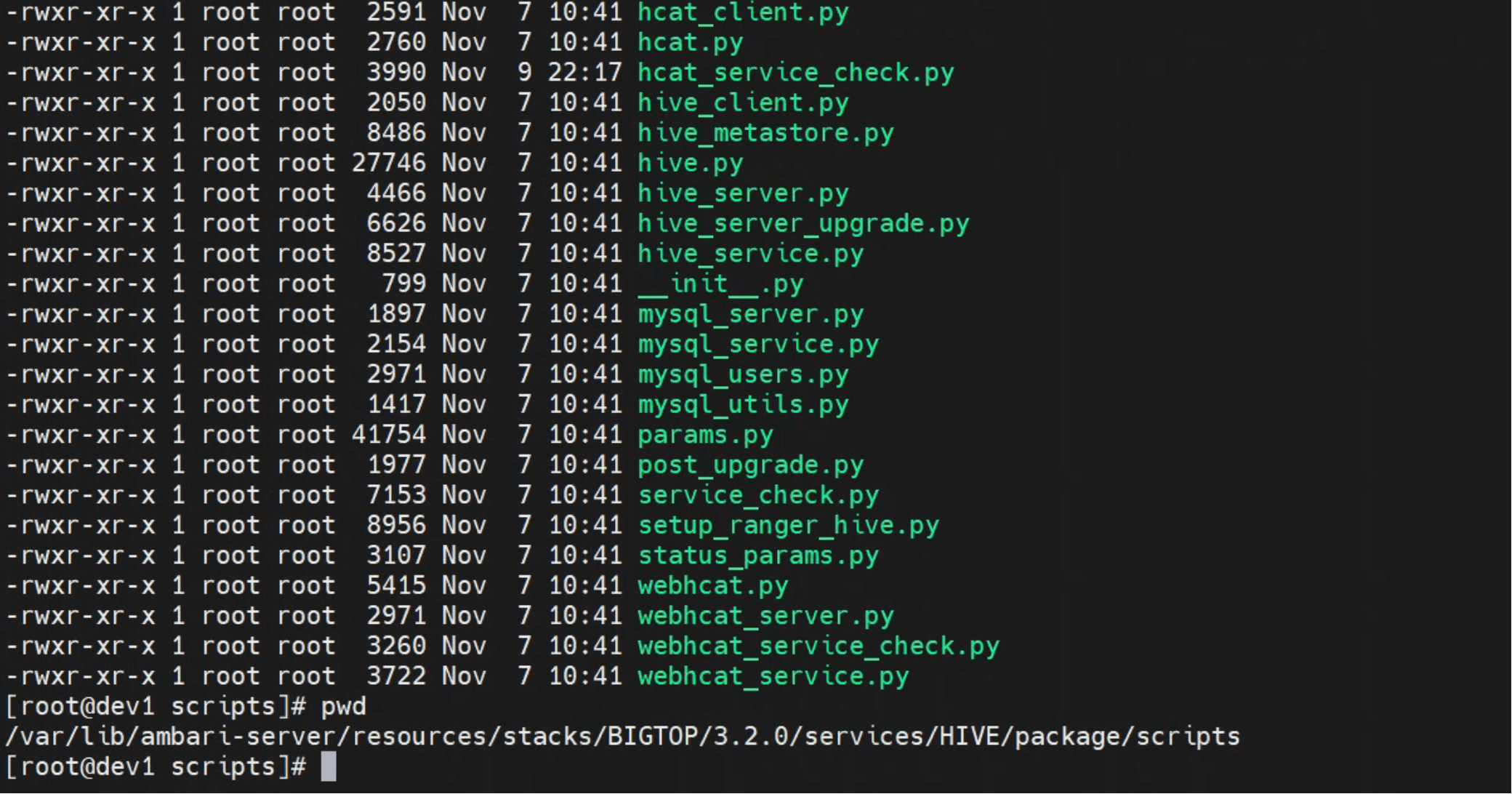

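V. Step 2: Harden the hcat service-check script (explicit hook injection)

Goal: make the Service Check execution environment match the real Hive runtime environment, so that the smoke script does not "bypass" the injection logic we added to `hive-env`.

Directory containing the script to modify:

```bash
/var/lib/ambari-server/resources/stacks/BIGTOP/3.2.0/services/HIVE/package/scripts
```

In `hcat_service_check.py` (or the corresponding script), apply the key fragments of the enhanced version below. They are already adapted to the `enable_atlas_hook` condition and the Ambari parameters: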

```python
# --- Atlas Hook environment injection ---
# r''' keeps \n and \+ literal for the shell. Doubled braces ({{ }}) are
# format() escapes that render as single braces, so ${...} reaches bash
# unchanged while {atlas_home} is substituted.
if params.enable_atlas_hook:
    atlas_hook_env_cmd = format(r'''
ATLAS_HOOK_DIR="{atlas_home}/hook/hive"
ATLAS_HOOK_JARS=$(
  {{
    ls "${{ATLAS_HOOK_DIR}}"/*.jar 2>/dev/null
    ls "${{ATLAS_HOOK_DIR}}/atlas-hive-plugin-impl"/*.jar 2>/dev/null
  }} | grep -v 'logback-classic' | tr '\n' ':' | sed 's/:$//'
)
if [ -n "${{ATLAS_HOOK_JARS}}" ]; then
  if [ -n "${{HIVE_AUX_JARS_PATH:-}}" ]; then
    export HIVE_AUX_JARS_PATH="${{HIVE_AUX_JARS_PATH}}:${{ATLAS_HOOK_JARS}}"
  else
    export HIVE_AUX_JARS_PATH="${{ATLAS_HOOK_JARS}}"
  fi
  export HIVE_AUX_JARS_PATH="$(echo "${{HIVE_AUX_JARS_PATH}}" | sed 's/^://;s/:$//;s/::\+/:/g')"
fi
''')
else:
    atlas_hook_env_cmd = ''
```

Prepend `atlas_hook_env_cmd` to both the prepare and cleanup commands so that the smoke flow loads the hook as well:

```python
prepare_cmd = format(
    "{kinit_cmd}{atlas_hook_env_cmd}\n"
    "env JAVA_HOME={java64_home} {tmp_dir}/hcatSmoke.sh hcatsmoke{unique} prepare {purge_tables}"
)
...
cleanup_cmd = format(
    "{kinit_cmd}{atlas_hook_env_cmd}\n"
    " {tmp_dir}/hcatSmoke.sh hcatsmoke{unique} cleanup {purge_tables}"
)
```

After replacing the script, restart ambari-server for the change to take effect.
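The doubled braces in the Python fragment are what keep the shell expansions intact through two rounds of formatting. The sketch below illustrates that two-stage rendering with plain `str.format`. Here `str.format` stands in for Ambari's `format()` helper, which looks names up in `params` and the caller's scope rather than taking explicit keyword arguments. Treating the two as equivalent for brace handling is an assumption of this sketch. `render_prepare_cmd` and the shortened template are illustrative only.

```python
# Shortened, illustrative template: {atlas_home} is a placeholder, while
# {{ }} are escapes that render as the literal braces of ${ATLAS_HOOK_DIR}.
HOOK_TEMPLATE = r'''
ATLAS_HOOK_DIR="{atlas_home}/hook/hive"
ATLAS_HOOK_JARS=$(ls "${{ATLAS_HOOK_DIR}}"/*.jar 2>/dev/null | tr '\n' ':')
'''


def render_prepare_cmd(kinit_cmd, atlas_home, java64_home, tmp_dir, unique, purge_tables):
    """Render the snippet first, then splice it into the prepare command."""
    atlas_hook_env_cmd = HOOK_TEMPLATE.format(atlas_home=atlas_home)
    # str.format does not re-parse substituted values, so the ${...} inside
    # atlas_hook_env_cmd survives this second formatting pass unchanged.
    template = ("{kinit_cmd}{atlas_hook_env_cmd}\n"
                "env JAVA_HOME={java64_home} {tmp_dir}/hcatSmoke.sh "
                "hcatsmoke{unique} prepare {purge_tables}")
    return template.format(kinit_cmd=kinit_cmd,
                           atlas_hook_env_cmd=atlas_hook_env_cmd,
                           java64_home=java64_home, tmp_dir=tmp_dir,
                           unique=unique, purge_tables=purge_tables)
```

Printing `render_prepare_cmd(...)` with the values from the log excerpts gives a script whose first lines are the kinit command and the hook snippet, followed by the `hcatSmoke.sh ... prepare` call.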

VI. Verification loop (kinit → smoke → hook resolution)

```bash
kinit -kt /etc/security/keytabs/smokeuser.headless.keytab ambari-qa-aaa@TTBIGDATA.COM
klist
```

```bash
env JAVA_HOME=/opt/modules/jdk1.8.0_202 \
  /var/lib/ambari-agent/tmp/hcatSmoke.sh hcatsmokeid000ac563_date360925 prepare true
```

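```sql
-- Run after connecting with Beeline
show tables;
```

The HiveHook `ClassNotFoundException` should no longer appear, and the post hooks should initialize normally. The execution log should show the Atlas Hook injection we added (the echoed commands and environment variables) and should no longer report:

`java.lang.ClassNotFoundException: org.apache.atlas.hive.hook.HiveHook`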
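To make this pass/fail check repeatable, the captured Service Check output can be scanned for the strings from the failing run in section II. Below is a minimal sketch; the function names are hypothetical. One assumption: Ambari echoes the `Execute[...]` command into the task log (as in the excerpt that follows), so the injected snippet shows up as text that mentions `HIVE_AUX_JARS_PATH`.

```python
# Strings taken from the failing run shown in section II.
HOOK_CNFE = "java.lang.ClassNotFoundException: org.apache.atlas.hive.hook.HiveHook"
HOOK_LOAD_ERROR = "Error loading hooks("
# Assumption: the echoed Execute[...] command contains the injected snippet.
INJECTION_MARKER = "HIVE_AUX_JARS_PATH"


def check_smoke_output(text):
    """Flag the symptoms of the missing hook in captured Service Check output."""
    return {
        "hook_class_missing": HOOK_CNFE in text,
        "hook_load_error": HOOK_LOAD_ERROR in text,
        "env_injected": INJECTION_MARKER in text,
    }


def smoke_passed(text):
    """True when neither hook-loading symptom appears in the output."""
    result = check_smoke_output(text)
    return not (result["hook_class_missing"] or result["hook_load_error"])
```

For a saved task log, `check_smoke_output(open(path).read())` returns the three flags (`path` is whatever file you saved it to). A clean result only shows that the hook class loaded. It does not prove that lineage actually reached Atlas.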

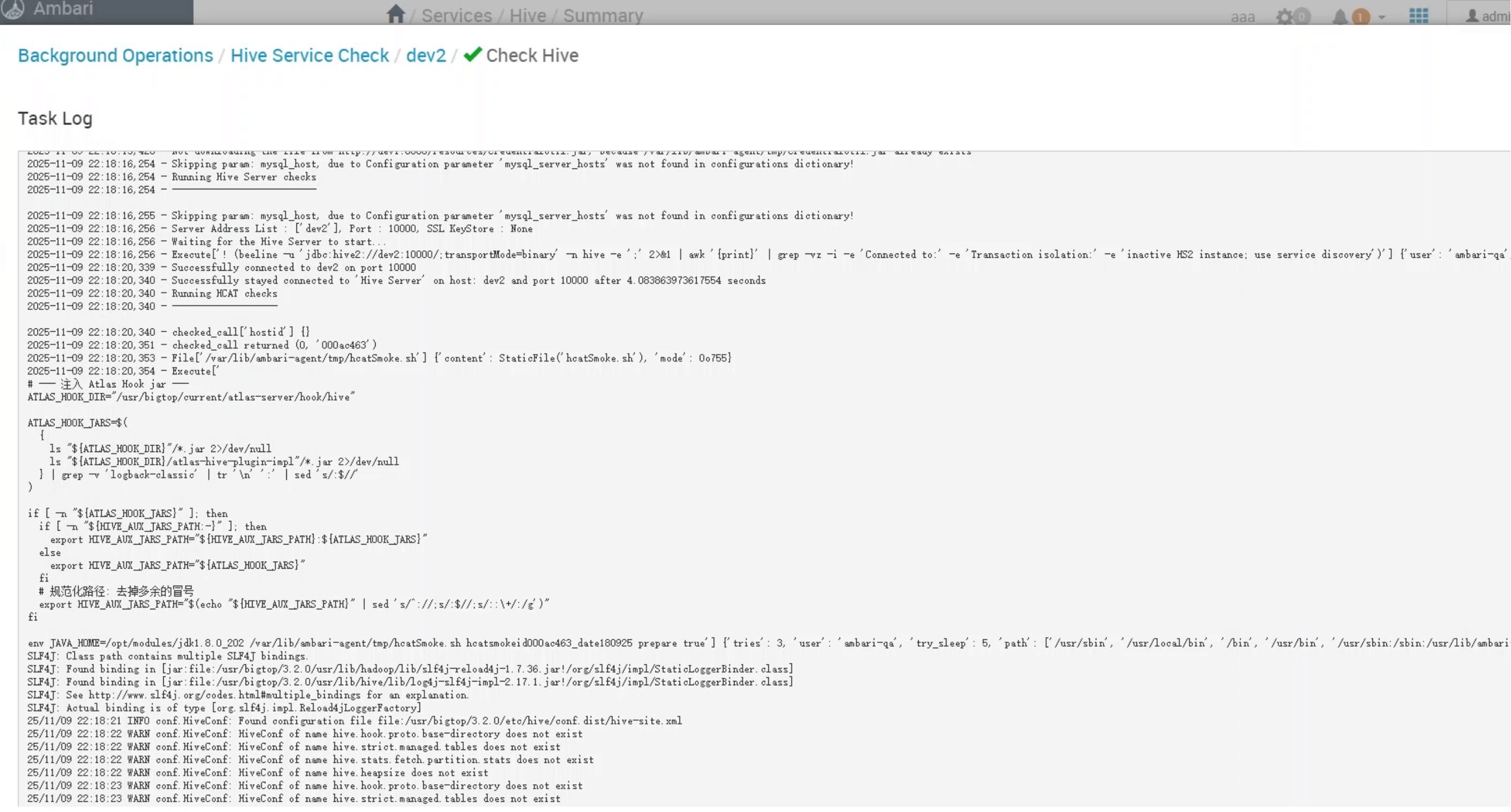

Example excerpt (fix in effect):

```text
2025-11-09 22:18:20,340 - checked_call['hostid'] {}
2025-11-09 22:18:20,351 - checked_call returned (0, '000ac463')
2025-11-09 22:18:20,353 - File['/var/lib/ambari-agent/tmp/hcatSmoke.sh'] {'content': StaticFile('hcatSmoke.sh'), 'mode': 0o755}
2025-11-09 22:18:20,354 - Execute['
# --- Inject Atlas Hook JARs ---
ATLAS_HOOK_DIR="/usr/bigtop/current/atlas-server/hook/hive"
ATLAS_HOOK_JARS=$(
{
ls "${ATLAS_HOOK_DIR}"/*.jar 2>/dev/null
ls "${ATLAS_HOOK_DIR}/atlas-hive-plugin-impl"/*.jar 2>/dev/null
} | grep -v 'logback-classic' | tr '\n' ':' | sed 's/:$//'
)
if [ -n "${ATLAS_HOOK_JARS}" ]; then
if [ -n "${HIVE_AUX_JARS_PATH:-}" ]; then
export HIVE_AUX_JARS_PATH="${HIVE_AUX_JARS_PATH}:${ATLAS_HOOK_JARS}"
else
export HIVE_AUX_JARS_PATH="${ATLAS_HOOK_JARS}"
fi
# Normalize the path: strip redundant colons
export HIVE_AUX_JARS_PATH="$(echo "${HIVE_AUX_JARS_PATH}" | sed 's/^://;s/:$//;s/::\+/:/g')"
fi
env JAVA_HOME=/opt/modules/jdk1.8.0_202 /var/lib/ambari-agent/tmp/hcatSmoke.sh hcatsmokeid000ac463_date180925 prepare true'] {'tries': 3, 'user': 'ambari-qa', 'try_sleep': 5, 'path': ['/usr/sbin', '/usr/local/bin', '/bin', '/usr/bin', '/usr/sbin:/sbin:/usr/lib/ambari-server/*:/usr/sbin:/sbin:/usr/lib/ambari-server/*:/opt/modules/jdk1.8.0_202/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/var/lib/ambari-agent:/var/lib/ambari-agent:/usr/bigtop/3.2.0/usr/bin:/usr/bigtop/current/hive-client/bin'], 'logoutput': True}
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/usr/bigtop/3.2.0/usr/lib/hadoop/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/usr/bigtop/3.2.0/usr/lib/hive/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]
25/11/09 22:18:21 INFO conf.HiveConf: Found configuration file file:/usr/bigtop/3.2.0/etc/hive/conf.dist/hive-site.xml
```

Restart the service:

```bash
ambari-server restart
```