1. Upload the Spark installation package

spark-3.2.0-bin-hadoop3.2.tgz

Upload this file to the Linux server.

Then extract it. In this course it is extracted (installed) into /export/server:

tar -zxvf spark-3.2.0-bin-hadoop3.2.tgz -C /export/server/

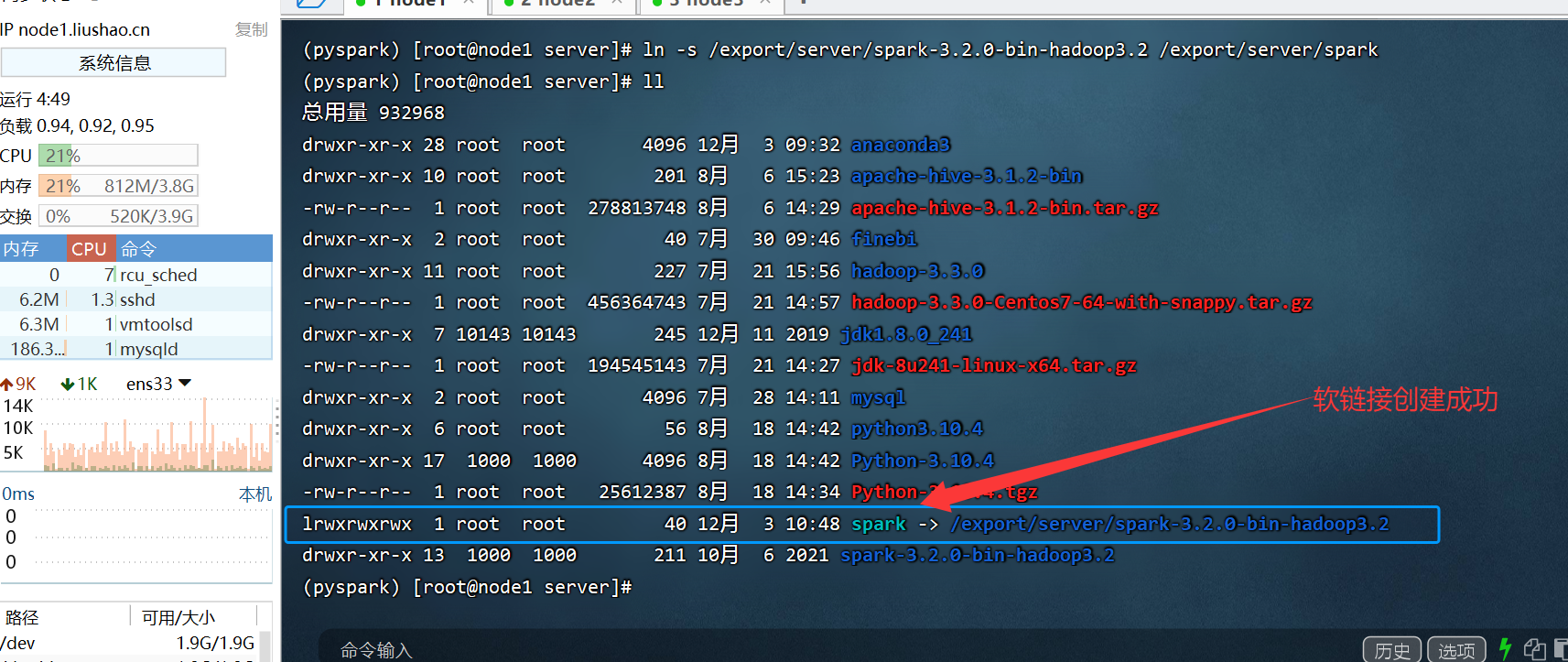
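The extraction step can also be scripted. The following is a minimal sketch, assuming Python 3 is available on the server. `extract_archive` is a hypothetical helper name introduced here for illustration, not part of Spark; it is meant to do the same job as the `tar -zxvf ... -C ...` command above and should only be used on archives you trust.

```python
import os
import tarfile


def extract_archive(archive_path, dest_dir):
    """Extract a gzip-compressed tar archive into dest_dir.

    Returns the sorted top-level names found in the archive,
    e.g. ["spark-3.2.0-bin-hadoop3.2"] for the Spark package.
    """
    os.makedirs(dest_dir, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as tar:
        # The first path component of every member is the top-level entry.
        top_level = sorted({member.name.split("/")[0] for member in tar.getmembers()})
        tar.extractall(dest_dir)
    return top_level
```

With the paths used in this course, the call would be `extract_archive("spark-3.2.0-bin-hadoop3.2.tgz", "/export/server/")`.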

Because the Spark directory name is long, create a symbolic link to it:

ln -s /export/server/spark-3.2.0-bin-hadoop3.2 /export/server/spark

For the detailed procedure, see article No. 99.
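If the link needs to be created or verified from a script, something like the sketch below could be used. It assumes Python 3 on the server; `ensure_symlink` is a hypothetical helper name, and refusing to overwrite a link that points elsewhere is a design choice of this sketch rather than anything Spark requires.

```python
import os


def ensure_symlink(target, link_path):
    """Create link_path -> target unless it already points there.

    Returns the fully resolved path behind link_path.
    Raises FileExistsError if link_path is taken by something else.
    """
    if os.path.islink(link_path):
        current = os.readlink(link_path)
        if current != target:
            raise FileExistsError(f"{link_path} already points to {current}")
    else:
        # os.symlink itself raises FileExistsError if a regular file
        # or directory already occupies link_path.
        os.symlink(target, link_path)
    return os.path.realpath(link_path)
```

For this installation the call would be `ensure_symlink("/export/server/spark-3.2.0-bin-hadoop3.2", "/export/server/spark")`. Calling it a second time leaves the existing link untouched.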

2. Configure environment variables


(pyspark) [root@node1 server]# vim /etc/profile

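To find the value for PYSPARK_PYTHON, follow the steps below. -- Start

Right-click the node1 session and duplicate the connection.

Connection succeeded.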

Last login: Wed Dec 3 13:47:45 2025

(base) [root@node1 ~]# sudo su -

Last login: Wed Dec 3 13:50:03 CST 2025 from 192.168.88.1 on pts/3

(base) [root@node1 ~]# ll

total 1220048

-rw-r--r-- 1 root root 4 Jul 23 09:20 1.txt

-rw-r--r-- 1 root root 4 Jul 24 10:27 666.txt

-rw-------. 1 root root 1340 Sep 11 2020 anaconda-ks.cfg

-rw-r--r-- 1 root root 34 Jul 23 09:56 hello.txt

-rwxr-xr-x 1 root root 1218872666 Jul 30 10:17 linux_unix_FineBI6_0-CN.sh

-rw------- 1 root root 4813091 Aug 18 11:22 nohup.out

-rw-r--r-- 1 root root 25612387 Mar 24 2022 Python-3.10.4.tgz

(base) [root@node1 ~]# cd /export/server/anaconda3/

(base) [root@node1 anaconda3]# ll

total 220

drwxr-xr-x 2 root root 12288 Dec 3 09:31 bin

drwxr-xr-x 2 root root 30 Dec 3 09:31 compiler_compat

drwxr-xr-x 2 root root 19 Dec 3 09:31 condabin

drwxr-xr-x 2 root root 20480 Dec 3 09:32 conda-meta

drwxr-xr-x 3 root root 20 Dec 3 09:31 doc

drwxr-xr-x 3 root root 49 Dec 3 10:36 envs

drwxr-xr-x 7 root root 77 Dec 3 09:31 etc

drwxr-xr-x 41 root root 12288 Dec 3 09:31 include

drwxr-xr-x 3 root root 25 Dec 3 09:31 info

drwxr-xr-x 23 root root 36864 Dec 3 09:31 lib

drwxr-xr-x 4 root root 97 Dec 3 09:31 libexec

-rw-r--r-- 1 root root 10588 May 14 2021 LICENSE.txt

drwxr-xr-x 3 root root 22 Dec 3 09:31 licensing

drwxr-xr-x 3 root root 18 Dec 3 09:31 man

drwxr-xr-x 65 root root 4096 Dec 3 09:31 mkspecs

drwxr-xr-x 2 root root 252 Dec 3 09:31 phrasebooks

drwxr-xr-x 498 root root 45056 Dec 3 10:36 pkgs

drwxr-xr-x 27 root root 4096 Dec 3 09:31 plugins

drwxr-xr-x 25 root root 4096 Dec 3 09:31 qml

drwxr-xr-x 2 root root 175 Dec 3 09:31 resources

drwxr-xr-x 2 root root 203 Dec 3 09:31 sbin

drwxr-xr-x 30 root root 4096 Dec 3 09:31 share

drwxr-xr-x 3 root root 22 Dec 3 09:31 shell

drwxr-xr-x 3 root root 146 Dec 3 09:31 ssl

drwxr-xr-x 3 root root 12288 Dec 3 09:31 translations

drwxr-xr-x 3 root root 19 Dec 3 09:31 var

drwxr-xr-x 3 root root 21 Dec 3 09:31 x86_64-conda_cos6-linux-gnu

(base) [root@node1 anaconda3]# cd envs/

(base) [root@node1 envs]# ll

total 0

drwxr-xr-x 11 root root 173 Dec 3 10:36 pyspark

(base) [root@node1 envs]# cd pyspark/

(base) [root@node1 pyspark]# ll

total 24

drwxr-xr-x 2 root root 4096 Dec 3 10:36 bin

drwxr-xr-x 2 root root 30 Dec 3 10:36 compiler_compat

drwxr-xr-x 2 root root 4096 Dec 3 10:36 conda-meta

drwxr-xr-x 11 root root 4096 Dec 3 10:36 include

drwxr-xr-x 17 root root 8192 Dec 3 10:36 lib

drwxr-xr-x 11 root root 128 Dec 3 10:36 share

drwxr-xr-x 3 root root 146 Dec 3 10:36 ssl

drwxr-xr-x 3 root root 17 Dec 3 10:36 x86_64-conda_cos7-linux-gnu

drwxr-xr-x 3 root root 17 Dec 3 10:36 x86_64-conda-linux-gnu

(base) [root@node1 pyspark]# cd bin

(base) [root@node1 bin]# ll

total 20152

lrwxrwxrwx 1 root root 8 Dec 3 10:36 2to3 -> 2to3-3.8

-rwxrwxr-x 1 root root 128 Dec 3 10:36 2to3-3.8

lrwxrwxrwx 1 root root 3 Dec 3 10:36 captoinfo -> tic

-rwxrwxr-x 2 root root 14312 Jul 31 15:38 clear

-rwxrwxr-x 1 root root 6975 Dec 3 10:36 c_rehash

lrwxrwxrwx 1 root root 7 Dec 3 10:36 idle3 -> idle3.8

-rwxrwxr-x 1 root root 126 Dec 3 10:36 idle3.8

-rwxrwxr-x 2 root root 63536 Jul 31 15:38 infocmp

lrwxrwxrwx 1 root root 3 Dec 3 10:36 infotocap -> tic

lrwxrwxrwx 1 root root 2 Dec 3 10:36 lzcat -> xz

lrwxrwxrwx 1 root root 6 Dec 3 10:36 lzcmp -> xzdiff

lrwxrwxrwx 1 root root 6 Dec 3 10:36 lzdiff -> xzdiff

lrwxrwxrwx 1 root root 6 Dec 3 10:36 lzegrep -> xzgrep

lrwxrwxrwx 1 root root 6 Dec 3 10:36 lzfgrep -> xzgrep

lrwxrwxrwx 1 root root 6 Dec 3 10:36 lzgrep -> xzgrep

lrwxrwxrwx 1 root root 6 Dec 3 10:36 lzless -> xzless

lrwxrwxrwx 1 root root 2 Dec 3 10:36 lzma -> xz

-rwxrwxr-x 2 root root 17176 Feb 14 2025 lzmadec

-rwxrwxr-x 1 root root 16968 Dec 3 10:36 lzmainfo

lrwxrwxrwx 1 root root 6 Dec 3 10:36 lzmore -> xzmore

-rwxrwxr-x 1 root root 9028 Dec 3 10:36 ncursesw6-config

-rwxrwxr-x 2 root root 975800 Jul 22 17:31 openssl

-rwxrwxr-x 1 root root 257 Dec 3 10:36 pip

-rwxrwxr-x 1 root root 257 Dec 3 10:36 pip3

lrwxrwxrwx 1 root root 8 Dec 3 10:36 pydoc -> pydoc3.8

lrwxrwxrwx 1 root root 8 Dec 3 10:36 pydoc3 -> pydoc3.8

-rwxrwxr-x 1 root root 111 Dec 3 10:36 pydoc3.8

lrwxrwxrwx 1 root root 9 Dec 3 10:36 python -> python3.8

lrwxrwxrwx 1 root root 9 Dec 3 10:36 python3 -> python3.8

-rwxrwxr-x 1 root root 15160256 Dec 3 10:36 python3.8

-rwxrwxr-x 1 root root 3524 Dec 3 10:36 python3.8-config

lrwxrwxrwx 1 root root 16 Dec 3 10:36 python3-config -> python3.8-config

lrwxrwxrwx 1 root root 4 Dec 3 10:36 reset -> tset

-rwxrwxr-x 2 root root 1691728 Jul 18 01:33 sqlite3

-rwxrwxr-x 2 root root 30392 Aug 15 15:44 sqlite3_analyzer

-rwxrwxr-x 2 root root 22424 Jul 31 15:38 tabs

lrwxrwxrwx 1 root root 8 Dec 3 10:36 tclsh -> tclsh8.6

-rwxrwxr-x 2 root root 15984 Aug 15 15:45 tclsh8.6

-rwxrwxr-x 2 root root 92248 Jul 31 15:38 tic

-rwxrwxr-x 2 root root 22424 Jul 31 15:38 toe

-rwxrwxr-x 2 root root 26624 Jul 31 15:38 tput

-rwxrwxr-x 2 root root 30696 Jul 31 15:38 tset

lrwxrwxrwx 1 root root 2 Dec 3 10:36 unlzma -> xz

lrwxrwxrwx 1 root root 2 Dec 3 10:36 unxz -> xz

-rwxrwxr-x 1 root root 244 Dec 3 10:36 wheel

lrwxrwxrwx 1 root root 7 Dec 3 10:36 wish -> wish8.6

-rwxrwxr-x 2 root root 16136 Aug 15 15:45 wish8.6

lrwxrwxrwx 1 root root 25 Dec 3 10:36 x86_64-conda_cos7-linux-gnu-ld -> x86_64-conda-linux-gnu-ld

-rwxrwxr-x 2 root root 2195376 Sep 26 2024 x86_64-conda-linux-gnu-ld

-rwxrwxr-x 1 root root 108336 Dec 3 10:36 xz

lrwxrwxrwx 1 root root 2 Dec 3 10:36 xzcat -> xz

lrwxrwxrwx 1 root root 6 Dec 3 10:36 xzcmp -> xzdiff

-rwxrwxr-x 2 root root 17176 Feb 14 2025 xzdec

-rwxrwxr-x 2 root root 7588 Feb 14 2025 xzdiff

lrwxrwxrwx 1 root root 6 Dec 3 10:36 xzegrep -> xzgrep

lrwxrwxrwx 1 root root 6 Dec 3 10:36 xzfgrep -> xzgrep

-rwxrwxr-x 2 root root 10413 Feb 14 2025 xzgrep

-rwxrwxr-x 2 root root 2383 Feb 14 2025 xzless

-rwxrwxr-x 2 root root 2234 Feb 14 2025 xzmore

(base) [root@node1 bin]# pwd

/export/server/anaconda3/envs/pyspark/bin

(base) [root@node1 bin]#

The resulting directory is:

/export/server/anaconda3/envs/pyspark/bin

PYSPARK_PYTHON should point to the python3.8 executable in this directory.

-- End
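A shorter way to find the same path is to ask the interpreter itself, assuming the `pyspark` conda environment is currently activated. The sketch below uses only the standard library; `interpreter_paths` is a hypothetical helper name. Because `python` in that `bin` directory is a symbolic link to `python3.8` (see the listing above), the resolved path is the one to use for PYSPARK_PYTHON.

```python
import os
import sys


def interpreter_paths():
    """Return (path used to start this interpreter, same path with symlinks resolved)."""
    launched = sys.executable
    resolved = os.path.realpath(launched)
    return launched, resolved


launched, resolved = interpreter_paths()
print("launched as:", launched)
print("resolves to:", resolved)
```

Run with the environment's `python`, the second line would be expected to show a path ending in `envs/pyspark/bin/python3.8`.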


(pyspark) [root@node1 server]# vim /etc/profile

Add the following lines:

export SPARK_HOME=/export/server/spark

export PYSPARK_PYTHON=/export/server/anaconda3/envs/pyspark/bin/python3.8

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop


PYSPARK_PYTHON and JAVA_HOME must also be set in `/root/.bashrc`:

(pyspark) [root@node1 server]# vim /root/.bashrc

Add the following configuration:

export JAVA_HOME=/export/server/jdk1.8.0_241

export PYSPARK_PYTHON=/export/server/anaconda3/envs/pyspark/bin/python3.8
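Before starting Spark, it can help to confirm that the variables are visible and point to real paths. The following is a rough sketch, assuming the four variable names used in this section; `check_environment` and `REQUIRED_VARS` are hypothetical names introduced here. Edits to `/etc/profile` only take effect in a new login shell or after running `source /etc/profile`, so the check should be run from such a shell.

```python
import os

# Variable names taken from the export lines in this section.
REQUIRED_VARS = ("SPARK_HOME", "PYSPARK_PYTHON", "HADOOP_CONF_DIR", "JAVA_HOME")


def check_environment(env=None, required=REQUIRED_VARS):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    env = os.environ if env is None else env
    problems = []
    for name in required:
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not os.path.exists(value):
            problems.append(f"{name} points to a missing path: {value}")
    return problems
```

Calling `check_environment()` with no arguments inspects the current process environment. Passing a dictionary instead makes it possible to test a planned configuration before editing any files.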


(pyspark) [root@node1 server]# vim /etc/profile

(pyspark) [root@node1 server]# vim /root/.bashrc

(pyspark) [root@node1 server]# cd /export/server/spark

(pyspark) [root@node1 spark]# ll

total 124

drwxr-xr-x 2 1000 1000 4096 Oct 6 2021 bin

drwxr-xr-x 2 1000 1000 197 Oct 6 2021 conf

drwxr-xr-x 5 1000 1000 50 Oct 6 2021 data

drwxr-xr-x 4 1000 1000 29 Oct 6 2021 examples

drwxr-xr-x 2 1000 1000 12288 Oct 6 2021 jars

drwxr-xr-x 4 1000 1000 38 Oct 6 2021 kubernetes

-rw-r--r-- 1 1000 1000 22878 Oct 6 2021 LICENSE

drwxr-xr-x 2 1000 1000 4096 Oct 6 2021 licenses

-rw-r--r-- 1 1000 1000 57677 Oct 6 2021 NOTICE

drwxr-xr-x 9 1000 1000 327 Oct 6 2021 python

drwxr-xr-x 3 1000 1000 17 Oct 6 2021 R

-rw-r--r-- 1 1000 1000 4512 Oct 6 2021 README.md

-rw-r--r-- 1 1000 1000 167 Oct 6 2021 RELEASE

drwxr-xr-x 2 1000 1000 4096 Oct 6 2021 sbin

drwxr-xr-x 2 1000 1000 42 Oct 6 2021 yarn

(pyspark) [root@node1 spark]# cd bin

(pyspark) [root@node1 bin]# ll

total 116

-rwxr-xr-x 1 1000 1000 1089 Oct 6 2021 beeline

-rw-r--r-- 1 1000 1000 1064 Oct 6 2021 beeline.cmd

-rwxr-xr-x 1 1000 1000 10965 Oct 6 2021 docker-image-tool.sh

-rwxr-xr-x 1 1000 1000 1935 Oct 6 2021 find-spark-home

-rw-r--r-- 1 1000 1000 2685 Oct 6 2021 find-spark-home.cmd

-rw-r--r-- 1 1000 1000 2337 Oct 6 2021 load-spark-env.cmd

-rw-r--r-- 1 1000 1000 2435 Oct 6 2021 load-spark-env.sh

-rwxr-xr-x 1 1000 1000 2636 Oct 6 2021 pyspark

-rw-r--r-- 1 1000 1000 1542 Oct 6 2021 pyspark2.cmd

-rw-r--r-- 1 1000 1000 1170 Oct 6 2021 pyspark.cmd

-rwxr-xr-x 1 1000 1000 1030 Oct 6 2021 run-example

-rw-r--r-- 1 1000 1000 1223 Oct 6 2021 run-example.cmd

-rwxr-xr-x 1 1000 1000 3539 Oct 6 2021 spark-class

-rwxr-xr-x 1 1000 1000 2812 Oct 6 2021 spark-class2.cmd

-rw-r--r-- 1 1000 1000 1180 Oct 6 2021 spark-class.cmd

-rwxr-xr-x 1 1000 1000 1039 Oct 6 2021 sparkR

-rw-r--r-- 1 1000 1000 1097 Oct 6 2021 sparkR2.cmd

-rw-r--r-- 1 1000 1000 1168 Oct 6 2021 sparkR.cmd

-rwxr-xr-x 1 1000 1000 3122 Oct 6 2021 spark-shell

-rw-r--r-- 1 1000 1000 1818 Oct 6 2021 spark-shell2.cmd

-rw-r--r-- 1 1000 1000 1178 Oct 6 2021 spark-shell.cmd

-rwxr-xr-x 1 1000 1000 1065 Oct 6 2021 spark-sql

-rw-r--r-- 1 1000 1000 1118 Oct 6 2021 spark-sql2.cmd

-rw-r--r-- 1 1000 1000 1173 Oct 6 2021 spark-sql.cmd

-rwxr-xr-x 1 1000 1000 1040 Oct 6 2021 spark-submit

-rw-r--r-- 1 1000 1000 1155 Oct 6 2021 spark-submit2.cmd

-rw-r--r-- 1 1000 1000 1180 Oct 6 2021 spark-submit.cmd

(pyspark) [root@node1 bin]# python

Python 3.8.20 (default, Oct 3 2024, 15:24:27)

[GCC 11.2.0] :: Anaconda, Inc. on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> print("hehe")

hehe

>>> exit()

(pyspark) [root@node1 bin]#

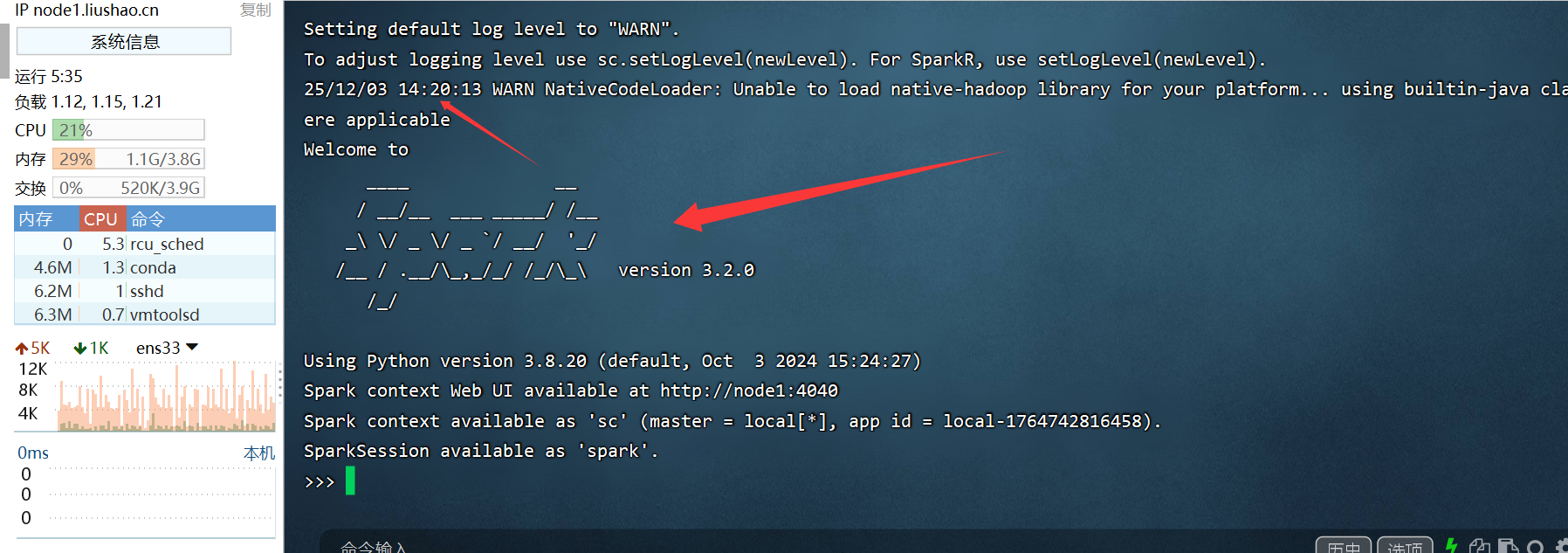

(pyspark) [root@node1 bin]# ./pyspark

Python 3.8.20 (default, Oct 3 2024, 15:24:27)

[GCC 11.2.0] :: Anaconda, Inc. on linux

Type "help", "copyright", "credits" or "license" for more information.

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

25/12/03 14:20:13 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 3.2.0
      /_/

Using Python version 3.8.20 (default, Oct 3 2024 15:24:27)

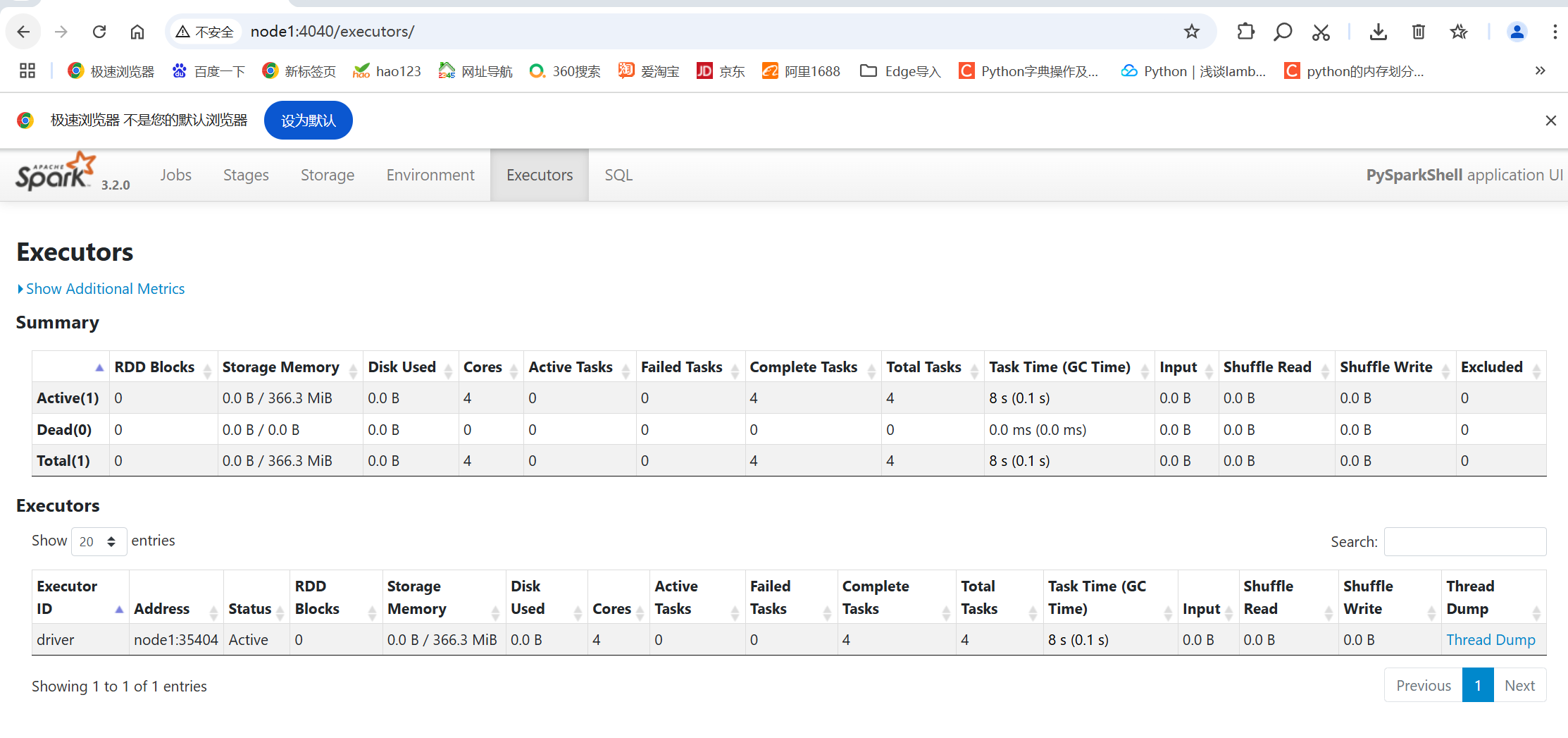

Spark context Web UI available at http://node1:4040

Spark context available as 'sc' (master = local[*], app id = local-1764742816458).

SparkSession available as 'spark'.

>>> sc.parallelize([1,2,3,4,5,6]).map(lambda x: x*10).collect()

[10, 20, 30, 40, 50, 60]

The test succeeded.
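The same check can be kept as a standalone script instead of being typed into the interactive shell. The sketch below assumes the installation described above; `times_ten`, `run_local_check` and the application name `local-install-check` are names chosen for this example. The `pyspark` import sits inside the function so that the file can be loaded even by an interpreter that does not have Spark's Python libraries on its path.

```python
def times_ten(x):
    """The same transformation as the lambda used in the shell test."""
    return x * 10


def run_local_check(data=(1, 2, 3, 4, 5, 6)):
    """Start a local SparkSession, run the map job, and return the collected list."""
    # Imported lazily: pyspark is only needed when the check actually runs.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[*]")               # local mode, using all available cores
        .appName("local-install-check")   # arbitrary name chosen for this sketch
        .getOrCreate()
    )
    try:
        rdd = spark.sparkContext.parallelize(list(data))
        return rdd.map(times_ten).collect()
    finally:
        # Always release the local Spark resources, even if the job fails.
        spark.stop()
```

To try it, add `print(run_local_check())` at the end of the file and launch it with `/export/server/spark/bin/spark-submit` followed by the file name. It should print the same list as the interactive test above.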