本节补充高级优化策略、故障排查、前沿技术应用,并提供完整的工业级项目示例,帮助你从 "会用" 到 "用好" PyTorch。

一:高级优化策略

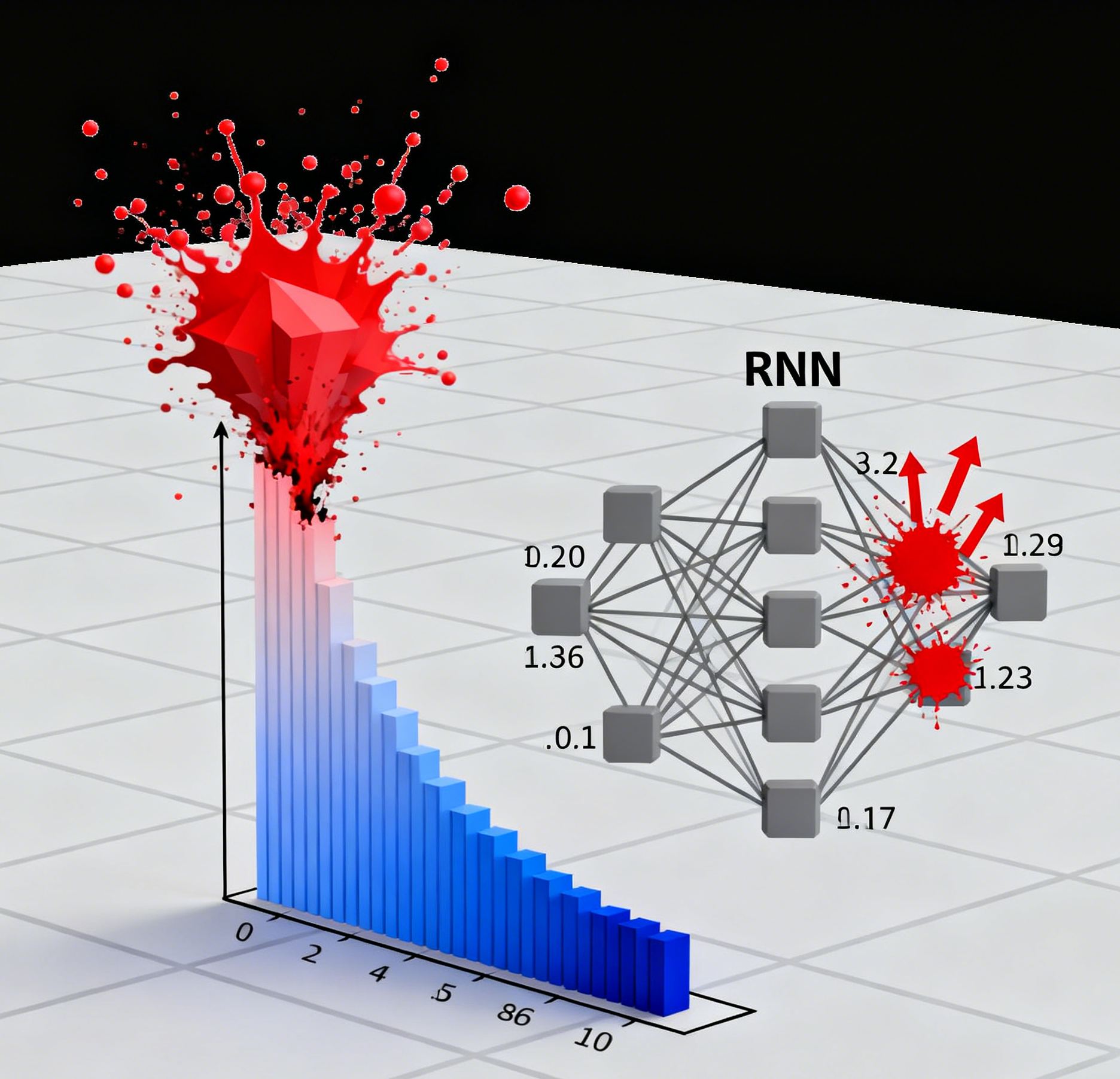

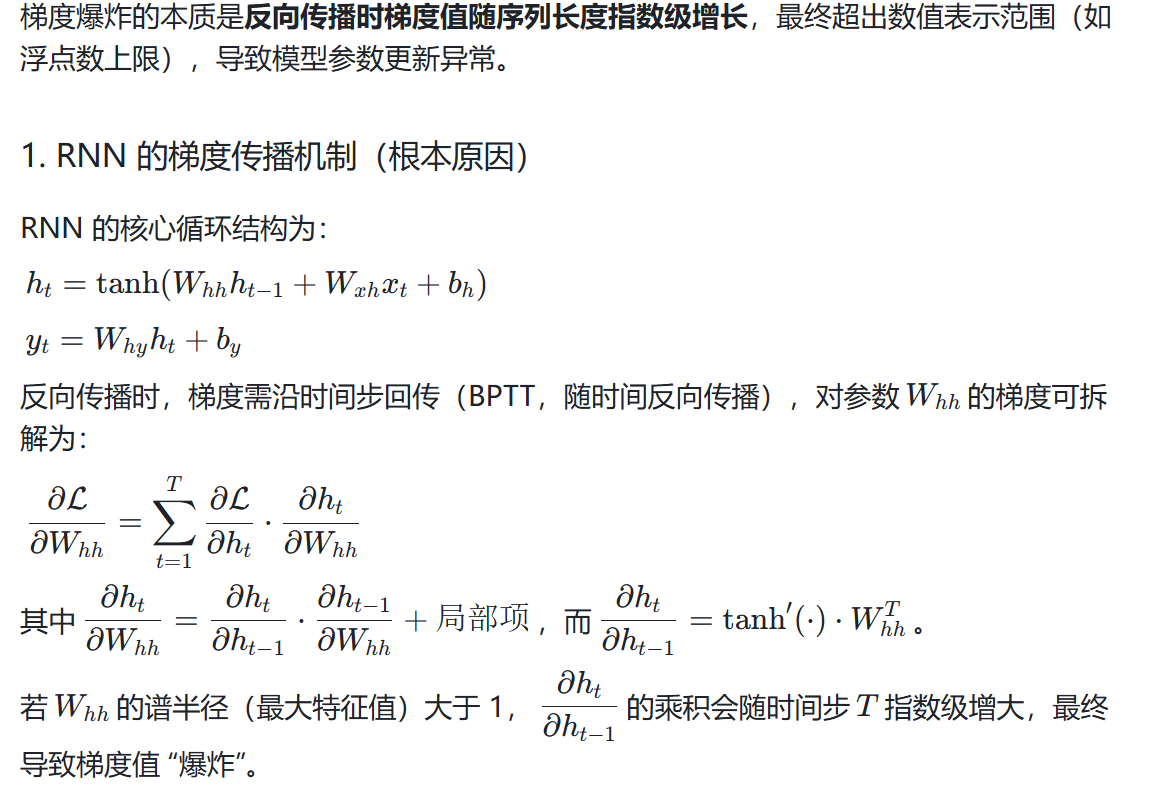

1.1 梯度裁剪(解决梯度爆炸)

在 RNN/LSTM 等序列模型中,梯度爆炸是常见问题,出现的原因:

- 初始化不当:参数初始值过大,直接放大梯度传播的基数;

- 激活函数选择:如 ReLU 在正区间梯度恒为 1,若叠加权重放大,易加剧爆炸;

- 序列过长:时间步T越大,梯度累积的指数效应越明显;

- 数据未归一化:输入 / 隐藏层数值范围过大,梯度计算时基数偏高。

梯度裁剪是最直接的解决方案,配合参数初始化、层归一化、合理的激活函数可大幅降低风险。梯度裁剪可限制梯度的最大范数:

# 训练循环中添加梯度裁剪

loss.backward()

# 裁剪梯度,max_norm为最大范数阈值

torch.nn.utils.clip_grad_norm_(net.parameters(), max_norm=1.0)

optimizer.step()1.2 早停(Early Stopping)

避免过拟合,当验证集损失不再下降时停止训练:

import numpy as np

# 初始化早停参数

patience = 5 # 连续5个epoch无提升则停止

best_val_loss = np.inf

early_stop_count = 0

for epoch in range(100):

train(epoch)

val_loss = test()

# 保存最优模型

if val_loss < best_val_loss:

best_val_loss = val_loss

torch.save(net.state_dict(), "best_model.pth")

early_stop_count = 0

else:

early_stop_count += 1

# 早停判断

if early_stop_count >= patience:

print(f"早停触发,最优验证损失:{best_val_loss:.4f}")

break1.3 混合数据增强(Albumentations)

比 torchvision 更强大的数据增强库,支持多类型变换且速度更快(适用于 CV 任务):

import albumentations as A

from albumentations.pytorch import ToTensorV2

import cv2

# 定义增强策略

train_transform = A.Compose([

A.RandomResizedCrop(28, 28, scale=(0.8, 1.0)), # 随机裁剪缩放

A.HorizontalFlip(p=0.5), # 水平翻转

A.RandomRotation(degrees=10), # 随机旋转

A.Normalize(mean=(0.1307,), std=(0.3081,)), # 归一化

ToTensorV2() # 转为张量

])

# 自定义数据集适配Albumentations

class MNISTAlbumentationsDataset(Dataset):

def __init__(self, dataset, transform=None):

self.dataset = dataset

self.transform = transform

def __len__(self):

return len(self.dataset)

def __getitem__(self, idx):

img, label = self.dataset[idx]

img = np.array(img).astype(np.uint8).squeeze() # 转为numpy数组

if self.transform:

augmented = self.transform(image=img)

img = augmented["image"]

return img, label

# 加载数据

train_dataset = datasets.MNIST(root='./data', train=True, download=True)

train_aug_dataset = MNISTAlbumentationsDataset(train_dataset, train_transform)

train_loader = DataLoader(train_aug_dataset, batch_size=64, shuffle=True)二:常见故障排查与调优

2.1 显存不足问题

| 问题原因 | 解决方案 |

|---|---|

| 批次尺寸过大 | 减小 batch_size,使用梯度累积(模拟大批次) |

| 模型参数过多 | 使用模型量化、剪枝,或改用轻量级模型 |

| 未禁用梯度计算(评估 / 推理) | 推理时加with torch.no_grad() |

| 数据加载占用显存 | num_workers适当增大,pin_memory=True |

| 中间变量未释放 | 手动删除无用变量(del var),调用torch.cuda.empty_cache() |

梯度累积示例(模拟大批次)

accumulation_steps = 4 # 累积4个小批次,等价于batch_size*4

optimizer.zero_grad()

for batch_idx, (data, target) in enumerate(train_loader):

data, target = data.to(device), target.to(device)

output = net(data)

loss = criterion(output, target)

loss = loss / accumulation_steps # 损失归一化

# 累积梯度

loss.backward()

# 每accumulation_steps步更新一次参数

if (batch_idx + 1) % accumulation_steps == 0:

optimizer.step()

optimizer.zero_grad()2.2 模型过拟合问题

| 解决方案 | 代码示例 |

|---|---|

| 数据增强 | 见 1.3 节 Albumentations 示例 |

| Dropout 层 | nn.Dropout(0.5)/nn.Dropout2d(0.2) |

| 权重衰减(L2) | optimizer = optim.Adam(net.parameters(), lr=0.001, weight_decay=1e-4) |

| 早停 | 见 1.2 节早停示例 |

| 批量归一化(BatchNorm) | nn.BatchNorm2d(16)(CNN)/nn.BatchNorm1d(256)(全连接) |

| 模型剪枝 | 见下方示例 |

模型剪枝(减少参数数量)

import torch.nn.utils.prune as prune

# 对卷积层进行剪枝(移除50%的权重)

conv1 = net.conv1

prune.l1_unstructured(conv1, name="weight", amount=0.5) # L1范数剪枝

prune.remove(conv1, "weight") # 永久移除剪枝参数

# 对全连接层剪枝

fc1 = net.fc1

prune.random_unstructured(fc1, name="weight", amount=0.3) # 随机剪枝

prune.remove(fc1, "weight")

# 验证剪枝后模型精度

test(net)2.3 训练速度慢问题

| 优化方向 | 具体措施 |

|---|---|

| 硬件层面 | 使用 GPU / 多 GPU(DDP)、开启 CUDA_LAUNCH_BLOCKING=0 |

| 数据加载 | num_workers>0、pin_memory=True、数据预加载到内存 |

| 混合精度 | 使用 AMP(见 3.2 节) |

| 编译优化 | 使用torch.compile(net)(PyTorch 2.0+) |

| 优化器选择 | 优先使用 AdamW/SGD + 动量,避免低效优化器 |

PyTorch 2.0+ 编译优化(提速 30%-100%)

# 直接编译模型,自动优化计算图

net = torch.compile(net, mode="max-autotune") # max-autotune:最优性能,首次编译稍慢

# 训练逻辑不变

for epoch in range(5):

train(epoch)

test()三:前沿技术应用(PyTorch 2.x+)

3.1 LoRA 微调(低秩适配,大模型轻量化微调)

适用于大语言模型(LLM)、视觉大模型(如 ViT)的轻量化微调,仅训练低秩矩阵:

from peft import LoraConfig, get_peft_model, LoraModel

# 定义LoRA配置

lora_config = LoraConfig(

r=8, # 低秩矩阵的秩

lora_alpha=32, # 缩放因子

target_modules=["conv1", "fc1"], # 目标微调层

lora_dropout=0.1,

bias="none",

task_type="CLASSIFICATION"

)

# 包装模型为LoRA模型

net = get_peft_model(net, lora_config)

net.print_trainable_parameters() # 输出可训练参数占比(通常<1%)

# 训练逻辑不变(仅训练LoRA参数)

optimizer = optim.Adam(net.parameters(), lr=0.001)

for epoch in range(5):

train(epoch)

test()

# 保存/加载LoRA权重

net.save_pretrained("lora_model")

net = LoraModel.from_pretrained(net, "lora_model")3.2 扩散模型(Diffusion Model)极简实现

基于 PyTorch 实现简单的扩散模型(图像生成):

import torch

import torch.nn as nn

import torch.optim as optim

from torch.utils.data import DataLoader

from torchvision import datasets, transforms

import matplotlib.pyplot as plt

# 1. 扩散过程定义(前向加噪)

def forward_diffusion(x, t, betas):

"""

x: 原始图像

t: 时间步

betas: 噪声系数

"""

alpha = 1 - betas

alpha_bar = torch.cumprod(alpha, dim=0)

alpha_bar_t = alpha_bar[t].reshape(-1, 1, 1, 1)

# 加噪:x_t = sqrt(alpha_bar_t)*x + sqrt(1-alpha_bar_t)*噪声

noise = torch.randn_like(x)

x_t = torch.sqrt(alpha_bar_t) * x + torch.sqrt(1 - alpha_bar_t) * noise

return x_t, noise

# 2. 简单UNet模型(去噪网络)

class SimpleUNet(nn.Module):

def __init__(self):

super().__init__()

self.down = nn.Sequential(

nn.Conv2d(1, 64, 3, 1, 1),

nn.ReLU(),

nn.MaxPool2d(2),

nn.Conv2d(64, 128, 3, 1, 1),

nn.ReLU(),

nn.MaxPool2d(2)

)

self.up = nn.Sequential(

nn.Upsample(scale_factor=2),

nn.Conv2d(128, 64, 3, 1, 1),

nn.ReLU(),

nn.Upsample(scale_factor=2),

nn.Conv2d(64, 1, 3, 1, 1)

)

self.time_emb = nn.Embedding(1000, 128) # 时间步嵌入

def forward(self, x, t):

# 时间步嵌入

t_emb = self.time_emb(t).reshape(-1, 128, 1, 1)

# 下采样

x = self.down(x)

# 融合时间步信息

x = x + t_emb

# 上采样

x = self.up(x)

return x

# 3. 训练扩散模型

# 数据加载

transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (0.5,))])

train_dataset = datasets.MNIST(root='./data', train=True, download=True, transform=transform)

train_loader = DataLoader(train_dataset, batch_size=64, shuffle=True)

# 初始化参数

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

betas = torch.linspace(1e-4, 0.02, 1000).to(device) # 1000个时间步

net = SimpleUNet().to(device)

criterion = nn.MSELoss()

optimizer = optim.Adam(net.parameters(), lr=0.001)

# 训练循环

epochs = 5

for epoch in range(epochs):

net.train()

total_loss = 0.0

for x, _ in train_loader:

x = x.to(device)

# 随机采样时间步

t = torch.randint(0, 1000, (x.shape[0],)).to(device)

# 前向加噪

x_t, noise = forward_diffusion(x, t, betas)

# 预测噪声

pred_noise = net(x_t, t)

# 损失:预测噪声与真实噪声的MSE

loss = criterion(pred_noise, noise)

optimizer.zero_grad()

loss.backward()

optimizer.step()

total_loss += loss.item()

print(f"Epoch {epoch+1}, Loss: {total_loss/len(train_loader):.4f}")

# 4. 反向扩散(图像生成)

def reverse_diffusion(net, betas, steps=1000):

"""反向扩散生成图像"""

alpha = 1 - betas

alpha_bar = torch.cumprod(alpha, dim=0)

# 初始噪声

x = torch.randn(1, 1, 28, 28).to(device)

net.eval()

with torch.no_grad():

for t in reversed(range(steps)):

t_tensor = torch.tensor([t]).to(device)

# 预测噪声

pred_noise = net(x, t_tensor)

# 反向去噪

alpha_t = alpha[t]

alpha_bar_t = alpha_bar[t]

if t > 0:

noise = torch.randn_like(x)

else:

noise = torch.zeros_like(x)

x = (1/torch.sqrt(alpha_t)) * (x - (1-alpha_t)/torch.sqrt(1-alpha_bar_t)*pred_noise) + torch.sqrt(1-alpha_t)*noise

return x

# 生成图像

generated_img = reverse_diffusion(net, betas)

plt.imshow(generated_img.squeeze().cpu().numpy(), cmap='gray')

plt.title("Diffusion Model Generated Image")

plt.show()四:工业级项目完整示例(图像分类)

以下是一个可直接落地的图像分类项目,包含配置管理、日志、监控、部署全流程:

项目结构

plaintext

image_classification/

├── config/

│ └── train.yaml # 配置文件

├── data/

│ ├── train/ # 训练集(按类别分文件夹)

│ └── val/ # 验证集

├── logs/

│ ├── tensorboard/ # TensorBoard日志

│ └── train.log # 文本日志

├── models/

│ └── resnet.py # 模型定义

├── scripts/

│ ├── train.py # 训练脚本

│ ├── test.py # 测试脚本

│ └── deploy.py # 部署脚本

├── utils/

│ ├── config.py # 配置解析

│ ├── logger.py # 日志工具

│ └── metrics.py # 评估指标

└── requirements.txt核心文件示例

1. config/train.yaml

model:

name: "resnet18"

num_classes: 10

pretrained: true

train:

batch_size: 32

epochs: 20

lr: 0.001

weight_decay: 1e-4

patience: 5

device: "cuda"

data:

train_path: "./data/train"

val_path: "./data/val"

img_size: 224

log:

tensorboard_path: "./logs/tensorboard"

log_file: "./logs/train.log"2. utils/config.py

import yaml

def load_config(config_path):

with open(config_path, 'r', encoding='utf-8') as f:

config = yaml.safe_load(f)

return config3. utils/logger.py

import logging

from torch.utils.tensorboard import SummaryWriter

def setup_logger(log_file):

logging.basicConfig(

level=logging.INFO,

format='%(asctime)s - %(levelname)s - %(message)s',

handlers=[

logging.FileHandler(log_file),

logging.StreamHandler()

]

)

return logging.getLogger(__name__)

def setup_tensorboard(log_path):

return SummaryWriter(log_path)4. scripts/train.py

import os

import torch

import torch.nn as nn

import torch.optim as optim

from torchvision import datasets, transforms, models

from torch.utils.data import DataLoader

from utils.config import load_config

from utils.logger import setup_logger, setup_tensorboard

from utils.metrics import calculate_accuracy

# 加载配置

config = load_config("./config/train.yaml")

# 初始化日志

logger = setup_logger(config["log"]["log_file"])

tb_writer = setup_tensorboard(config["log"]["tensorboard_path"])

# 设备配置

device = torch.device(config["train"]["device"] if torch.cuda.is_available() else "cpu")

logger.info(f"使用设备:{device}")

# 数据加载

train_transform = transforms.Compose([

transforms.Resize((config["data"]["img_size"], config["data"]["img_size"])),

transforms.RandomHorizontalFlip(),

transforms.RandomRotation(10),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

val_transform = transforms.Compose([

transforms.Resize((config["data"]["img_size"], config["data"]["img_size"])),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

train_dataset = datasets.ImageFolder(config["data"]["train_path"], transform=train_transform)

val_dataset = datasets.ImageFolder(config["data"]["val_path"], transform=val_transform)

train_loader = DataLoader(train_dataset, batch_size=config["train"]["batch_size"], shuffle=True, num_workers=4)

val_loader = DataLoader(val_dataset, batch_size=config["train"]["batch_size"], shuffle=False, num_workers=4)

# 模型初始化

if config["model"]["name"] == "resnet18":

model = models.resnet18(pretrained=config["model"]["pretrained"])

model.fc = nn.Linear(model.fc.in_features, config["model"]["num_classes"])

model = model.to(device)

# 优化器与损失函数

criterion = nn.CrossEntropyLoss()

optimizer = optim.AdamW(model.parameters(), lr=config["train"]["lr"], weight_decay=config["train"]["weight_decay"])

scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode='max', factor=0.1, patience=3)

# 早停初始化

best_acc = 0.0

early_stop_count = 0

# 训练循环

for epoch in range(config["train"]["epochs"]):

# 训练阶段

model.train()

train_loss = 0.0

train_acc = 0.0

for imgs, labels in train_loader:

imgs, labels = imgs.to(device), labels.to(device)

optimizer.zero_grad()

outputs = model(imgs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

train_loss += loss.item() * imgs.size(0)

train_acc += calculate_accuracy(outputs, labels) * imgs.size(0)

train_loss /= len(train_loader.dataset)

train_acc /= len(train_loader.dataset)

# 验证阶段

model.eval()

val_loss = 0.0

val_acc = 0.0

with torch.no_grad():

for imgs, labels in val_loader:

imgs, labels = imgs.to(device), labels.to(device)

outputs = model(imgs)

loss = criterion(outputs, labels)

val_loss += loss.item() * imgs.size(0)

val_acc += calculate_accuracy(outputs, labels) * imgs.size(0)

val_loss /= len(val_loader.dataset)

val_acc /= len(val_loader.dataset)

# 学习率调度

scheduler.step(val_acc)

# 日志记录

logger.info(f"Epoch [{epoch+1}/{config['train']['epochs']}]")

logger.info(f"Train Loss: {train_loss:.4f}, Train Acc: {train_acc:.4f}")

logger.info(f"Val Loss: {val_loss:.4f}, Val Acc: {val_acc:.4f}")

tb_writer.add_scalar("Loss/Train", train_loss, epoch)

tb_writer.add_scalar("Loss/Val", val_loss, epoch)

tb_writer.add_scalar("Accuracy/Train", train_acc, epoch)

tb_writer.add_scalar("Accuracy/Val", val_acc, epoch)

tb_writer.add_scalar("LR", optimizer.param_groups[0]['lr'], epoch)

# 早停与模型保存

if val_acc > best_acc:

best_acc = val_acc

torch.save({

'epoch': epoch,

'model_state_dict': model.state_dict(),

'optimizer_state_dict': optimizer.state_dict(),

'best_acc': best_acc

}, "best_model.pth")

logger.info(f"保存最优模型,验证精度:{best_acc:.4f}")

early_stop_count = 0

else:

early_stop_count += 1

if early_stop_count >= config["train"]["patience"]:

logger.info(f"早停触发,最优验证精度:{best_acc:.4f}")

break

# 关闭日志

tb_writer.close()

logger.info("训练完成")学习与进阶建议

1. 循序渐进的练习路径

- 基础巩固:复现经典模型(ResNet、LSTM、Transformer),理解每一层的作用。

- 小项目实战 :

- CV:MNIST/CIFAR-10 分类、猫狗识别、目标检测(YOLO+PyTorch)。

- NLP:文本分类、情感分析、文本生成(LSTM/GPT 极简版)。

- 大模型微调:基于 HuggingFace Transformers 微调 BERT/ViT,掌握 LoRA/QLoRA。

- 工程化落地:将模型部署到 TorchServe/TensorRT,编写 API 接口。

2. 避坑指南

- 不要忽视数据:数据质量 > 模型复杂度,优先做好数据清洗、标注、增强。

- 不要盲目调参:先固定模型结构,再调学习率 / 批次,最后尝试复杂优化策略。

- 不要忽略工程细节:日志、监控、版本管理(Git)、代码注释是工业级项目的核心。

3. 进阶方向

- 多模态学习:CLIP、BLIP 等多模态模型的 PyTorch 实现。

- 强化学习:结合 PyTorch 实现 DQN/PPO,落地游戏 AI / 机器人控制。

- 量化与部署:深入 TensorRT/ONNX Runtime,优化模型推理速度。

- 分布式训练:掌握 DeepSpeed/FairScale,训练千亿参数大模型。