Ubuntu datasophon 1.2.1 Secondary Development, Part 3: Fixing the HDFS Startup Failure After Installation (sudo: unknown user hdfs)

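Background

After installing the monitoring components last time, I moved on to ZooKeeper (ZK), which went smoothly. Next came Hadoop. In datasophon, Hadoop is split into several parts, such as HDFS and YARN. I started with HDFS, and the ordeal began again.

Problem

Hadoop is a complex component, so errors during installation were to be expected. Sure enough, the installation ran into a "user does not exist" problem. The detailed error output is as follows: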


2025-12-14 09:18:21 [pool-4875-thread-1] ERROR TaskLogLogger-ZOOKEEPER-ZkServer -

2025-12-14 09:18:21 [pool-4876-thread-1] ERROR TaskLogLogger-ZOOKEEPER-ZkServer -

2025-12-14 09:18:22 [pool-4877-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:18:22 [pool-4878-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:18:22 [pool-4879-thread-1] ERROR TaskLogLogger-ZOOKEEPER-ZkServer -

2025-12-14 09:18:24 [pool-4880-thread-1] ERROR TaskLogLogger-ZOOKEEPER-ZkServer -

2025-12-14 09:18:24 [pool-4881-thread-1] ERROR TaskLogLogger-ZOOKEEPER-ZkServer -

2025-12-14 09:42:00 [pool-4976-thread-1] ERROR TaskLogLogger-HDFS-JournalNode -

2025-12-14 09:42:11 [pool-4978-thread-1] ERROR TaskLogLogger-HDFS-JournalNode -

2025-12-14 09:42:12 [pool-4980-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:42:12 [pool-4981-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:42:12 [pool-4982-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:42:12 [pool-4983-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:42:12 [pool-4984-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:42:12 [pool-4985-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:42:12 [pool-4986-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:42:12 [pool-4987-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:42:12 [pool-4988-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:42:12 [pool-4989-thread-1] ERROR com.datasophon.common.utils.ShellUtils -

2025-12-14 09:42:12 [pool-4990-thread-1] ERROR TaskLogLogger-HDFS-JournalNode -

2025-12-14 09:42:12 [pool-4991-thread-1] ERROR TaskLogLogger-HDFS-JournalNode -

=================================

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/sls_cluster_vcores.png

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/h5.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/icon_error_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/icon_success_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/expanded.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/external.png

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/sls_JVM.png

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/sls_track_job.png

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/icon_info_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/logo_apache.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/bg.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/newwindow.png

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/sls_track_queue.png

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/sls_running_apps_containers.png

hadoop-3.3.3/share/doc/hadoop/hadoop-sls/images/h3.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/project-reports.html

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/dependency-analysis.html

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/css/

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/css/maven-base.css

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/css/print.css

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/css/maven-theme.css

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/css/site.css

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/HadoopArchives.html

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/breadcrumbs.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/apache-maven-project-2.png

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/maven-logo-2.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/collapsed.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/logo_maven.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/icon_warning_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/logos/

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/logos/build-by-maven-black.png

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/logos/maven-feather.png

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/logos/build-by-maven-white.png

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/banner.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/h5.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/icon_error_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/icon_success_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/expanded.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/external.png

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/icon_info_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/logo_apache.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/bg.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/newwindow.png

hadoop-3.3.3/share/doc/hadoop/hadoop-archives/images/h3.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/project-reports.html

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/dependency-analysis.html

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/css/

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/css/maven-base.css

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/css/print.css

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/css/maven-theme.css

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/css/site.css

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/breadcrumbs.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/apache-maven-project-2.png

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/maven-logo-2.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/collapsed.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/logo_maven.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/icon_warning_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/logos/

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/logos/build-by-maven-black.png

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/logos/maven-feather.png

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/logos/build-by-maven-white.png

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/banner.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/h5.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/icon_error_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/icon_success_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/expanded.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/external.png

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/icon_info_sml.gif

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/logo_apache.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/bg.jpg

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/newwindow.png

hadoop-3.3.3/share/doc/hadoop/hadoop-hdfs-nfs/images/h3.jpg

hadoop-3.3.3/jmx/

hadoop-3.3.3/jmx/jmx_prometheus_javaagent-0.16.1.jar

hadoop-3.3.3/jmx/prometheus_config.yml

hadoop-3.3.3/logs/

hadoop-3.3.3/logs/userlogs/

hadoop-3.3.3/control_hadoop.sh

hadoop-3.3.3/ranger-hdfs-plugin/

hadoop-3.3.3/ranger-hdfs-plugin/enable-hdfs-plugin.sh

hadoop-3.3.3/ranger-hdfs-plugin/upgrade-hdfs-plugin.sh

hadoop-3.3.3/ranger-hdfs-plugin/upgrade-plugin.py

hadoop-3.3.3/ranger-hdfs-plugin/ranger_credential_helper.py

hadoop-3.3.3/ranger-hdfs-plugin/disable-hdfs-plugin.sh

hadoop-3.3.3/ranger-hdfs-plugin/install/

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/enable/

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/enable/hdfs-site-changes.cfg

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/enable/ranger-hdfs-audit-changes.cfg

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/enable/ranger-hdfs-audit.xml

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/enable/ranger-hdfs-security-changes.cfg

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/enable/ranger-hdfs-security.xml

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/enable/ranger-policymgr-ssl-changes.cfg

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/enable/ranger-policymgr-ssl.xml

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/disable/

hadoop-3.3.3/ranger-hdfs-plugin/install/conf.templates/disable/hdfs-site-changes.cfg

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/ranger-plugins-installer-2.1.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/credentialbuilder-2.1.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/slf4j-api-1.7.25.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/commons-collections-3.2.2.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/commons-lang-2.6.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/commons-cli-1.2.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/commons-configuration2-2.1.1.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/commons-logging-1.2.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/guava-25.1-jre.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/hadoop-common-3.1.1.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/commons-io-2.5.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/hadoop-auth-3.1.1.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/htrace-core4-4.1.0-incubating.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/stax2-api-3.1.4.jar

hadoop-3.3.3/ranger-hdfs-plugin/install/lib/woodstox-core-5.0.3.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-plugin-classloader-2.1.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-shim-2.1.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/ranger-plugins-cred-2.1.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/ranger-plugins-audit-2.1.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/ranger-plugins-common-2.1.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/ranger-hdfs-plugin-2.1.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/commons-lang-2.6.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/httpclient-4.5.6.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/httpcore-4.4.6.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/eclipselink-2.5.2.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/javax.persistence-2.1.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/solr-solrj-7.7.1.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/httpmime-4.5.6.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/noggit-0.8.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/elasticsearch-7.6.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/elasticsearch-core-7.6.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/elasticsearch-x-content-7.6.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/lucene-core-8.4.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/hppc-0.8.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/joda-time-2.10.6.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/elasticsearch-rest-client-7.6.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/httpasyncclient-4.1.3.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/httpcore-nio-4.4.6.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/elasticsearch-rest-high-level-client-7.6.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/rank-eval-client-7.6.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/lang-mustache-client-7.6.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/jna-5.2.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/jna-platform-5.2.0.jar

hadoop-3.3.3/ranger-hdfs-plugin/lib/ranger-hdfs-plugin-impl/gethostname4j-0.0.2.jar

hadoop-3.3.3/ranger-hdfs-plugin/install.properties

hadoop-3.3.3/pid/

[ERROR] 2025-12-14 09:42:11 TaskLogLogger-HDFS-JournalNode:[197] -

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[236] - Initial files: 0, Final files: 18, Extracted files: 18

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[240] - Decompression successful, extracted 18 files to /opt/datasophon/hadoop-3.3.3

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[145] - Verifying installation in: /opt/datasophon/hadoop-3.3.3

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[161] - control.sh exists: false, binary exists: false

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[82] - Start to configure service role JournalNode

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[117] - Convert boolean and integer to string

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[117] - Convert boolean and integer to string

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[181] - configure success

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[263] - size is :1

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[266] - config set value to /data/dfs/nn

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[272] - create file path /data/dfs/nn

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[272] - create file path /data/dfs/snn

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[117] - Convert boolean and integer to string

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[263] - size is :1

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[266] - config set value to /data/dfs/dn

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[272] - create file path /data/dfs/dn

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[272] - create file path /data/dfs/jn

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[117] - Convert boolean and integer to string

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[117] - Convert boolean and integer to string

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[117] - Convert boolean and integer to string

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[181] - configure success

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[272] - create file path /data/tmp/hadoop

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[117] - Convert boolean and integer to string

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[263] - size is :4

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[266] - config set value to RULE:[2:$1/$2@$0]([ndj]n\/.*@HADOOP\.COM)s/.*/hdfs/

RULE:[2:$1/$2@$0]([rn]m\/.*@HADOOP\.COM)s/.*/yarn/

RULE:[2:$1/$2@$0](jhs\/.*@HADOOP\.COM)s/.*/mapred/

DEFAULT

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[181] - configure success

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[181] - configure success

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[141] - DEBUG: Constants.INSTALL_PATH = /opt/datasophon

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[142] - DEBUG: PropertyUtils.getString("install.path") = /opt/datasophon

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[177] - execute shell command : [bash, control_hadoop.sh, status, journalnode]

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[178] - DEBUG: Work directory would be: /opt/datasophon/hadoop-3.3.3

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[179] - DEBUG: Expected control.sh location: /opt/datasophon/hadoop-3.3.3/control.sh

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[182] - .

journalnode pid file is not exists

[ERROR] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[197] -

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[141] - DEBUG: Constants.INSTALL_PATH = /opt/datasophon

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[142] - DEBUG: PropertyUtils.getString("install.path") = /opt/datasophon

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[177] - execute shell command : [sudo, -u, hdfs, bash, control_hadoop.sh, start, journalnode]

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[178] - DEBUG: Work directory would be: /opt/datasophon/hadoop-3.3.3

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[179] - DEBUG: Expected control.sh location: /opt/datasophon/hadoop-3.3.3/control.sh

[INFO] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[182] - sudo: unknown user hdfs

sudo: error initializing audit plugin sudoers_audit

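[ERROR] 2025-12-14 09:42:12 TaskLogLogger-HDFS-JournalNode:[197] -

The log reports that the user hdfs is unknown. I wondered why datasophon does not simply create it along the way. From what I found, other similar frameworks (CDH, HDP, Apache Bigtop, Huawei FusionInsight, and CDP) can all create it automatically or offer to create it.

So I decided to try adding code to make datasophon create it.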
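Before changing any code, it can help to confirm on the failing host that the account is really missing. The following is a minimal sketch, not part of datasophon: the class name UserLookup and the method hasUser are hypothetical, and it only inspects local accounts in /etc/passwd, so users provided by LDAP or similar directory services would not be seen.

```java
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper (not part of datasophon): checks local accounts only.
public class UserLookup {

    // Returns true if the passwd-style lines contain an entry for the given user.
    static boolean hasUser(List<String> passwdLines, String user) {
        for (String line : passwdLines) {
            // Each entry looks like name:x:uid:gid:comment:home:shell
            int colon = line.indexOf(':');
            if (colon > 0 && line.substring(0, colon).equals(user)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) throws Exception {
        List<String> lines = Files.readAllLines(Paths.get("/etc/passwd"));
        // The accounts that the Hadoop services are started as
        for (String user : Arrays.asList("hdfs", "yarn", "mapred")) {
            System.out.println(user + " exists: " + hasUser(lines, user));
        }
    }
}
```

If hdfs is reported as missing, any command of the form sudo -u hdfs is expected to fail in the way shown in the log.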

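Solution

Putting this idea into practice took longer than expected: it was seven days before it finally worked.

My first idea was to create the users and groups directly from the datasophon-worker code, but running it failed with:

can't lock /etc/group

I then tried doing it through a script, but that did not work either; creation still failed.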
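One possible explanation, which is an inference and not something the author confirms: the datasophon-worker unit generated in StartWorkerHandler.java sets ProtectSystem=full, and systemd documents that this setting mounts /etc read-only for the service's processes. A groupadd started from inside the worker would then be unable to create its lock file under /etc. The sketch below is a hypothetical diagnostic (the class EtcMountCheck is not part of datasophon) that could be run from the same context as the worker to see whether /etc is read-only there.

```java
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

// Hypothetical diagnostic (not part of datasophon).
public class EtcMountCheck {

    // Returns true if the last /proc/self/mountinfo entry for mountPoint has the "ro" option.
    static boolean isReadOnly(List<String> mountInfoLines, String mountPoint) {
        boolean readOnly = false;
        for (String line : mountInfoLines) {
            String[] f = line.split(" ");
            // 1-based: field 5 is the mount point, field 6 holds the per-mount options
            if (f.length > 5 && f[4].equals(mountPoint)) {
                readOnly = Arrays.asList(f[5].split(",")).contains("ro");
            }
        }
        return readOnly;
    }

    public static void main(String[] args) throws Exception {
        List<String> lines = Files.readAllLines(Paths.get("/proc/self/mountinfo"));
        System.out.println("/etc mounted read-only: " + isReadOnly(lines, "/etc"));
        System.out.println("/etc writable for this process: " + Files.isWritable(Paths.get("/etc")));
    }
}
```

If both lines indicate a read-only /etc inside the worker but not in a normal root shell, that would be consistent with the standalone program and the separate service succeeding where the worker failed.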

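After repeated failures I was tempted to give up and just create the users by hand. But I was not willing to accept that: why can other similar products do it? I then wrote a small standalone program and found that it could create the users. Calling that program from a newly created Ubuntu service also succeeded. Finally, having the datasophon-worker program start that service succeeded as well.

With the users created, startup still failed. It turned out that the Hadoop data directories specified during installation had not been granted to the hdfs user, so it could not write to them.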

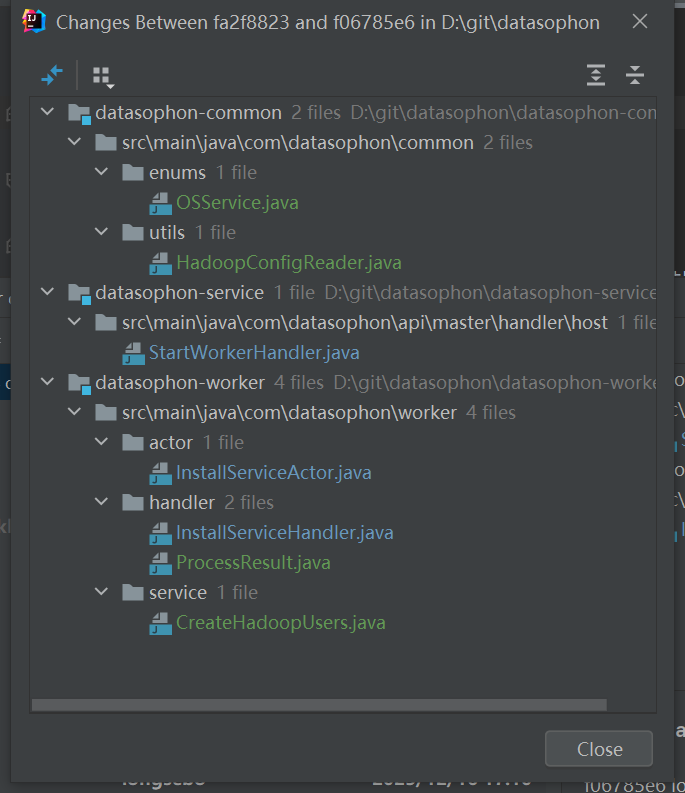
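The ownership fix can be sketched as follows. This is an illustration under assumptions, not the author's code: the directory list is taken from the "create file path" lines in the install log above, the owner hdfs with group hadoop mirrors the primary group used in CreateHadoopUsers, and the class name DataDirOwnership and the --apply switch are hypothetical. It must run as root to have any effect.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper (not part of datasophon).
public class DataDirOwnership {

    // Builds the argument list for: chown -R owner:group dir1 dir2 ...
    static List<String> chownCommand(String owner, String group, List<String> dirs) {
        List<String> cmd = new ArrayList<>();
        cmd.add("chown");
        cmd.add("-R");
        cmd.add(owner + ":" + group);
        cmd.addAll(dirs);
        return cmd;
    }

    public static void main(String[] args) throws Exception {
        // Paths as they appear in the install log; adjust to the actual configuration
        List<String> dirs = Arrays.asList(
                "/data/dfs/nn", "/data/dfs/snn", "/data/dfs/dn", "/data/dfs/jn", "/data/tmp/hadoop");
        List<String> cmd = chownCommand("hdfs", "hadoop", dirs);
        System.out.println(String.join(" ", cmd));
        // Only execute when explicitly requested, since this changes ownership recursively
        if (args.length > 0 && "--apply".equals(args[0])) {
            Process p = new ProcessBuilder(cmd).inheritIO().start();
            System.exit(p.waitFor());
        }
    }
}
```

Run without arguments, it only prints the command, which makes it easy to review before applying.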

Following this approach, the code involved is shown below.

The following files are the most important ones.

StartWorkerHandler.java


/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package com.datasophon.api.master.handler.host;

import com.datasophon.common.enums.OSService;

import com.datasophon.api.load.ConfigBean;

import com.datasophon.api.utils.CommonUtils;

import com.datasophon.api.utils.MessageResolverUtils;

import com.datasophon.api.utils.MinaUtils;

import com.datasophon.api.utils.SpringTool;

import com.datasophon.common.Constants;

import com.datasophon.common.enums.InstallState;

import com.datasophon.common.model.HostInfo;

import com.datasophon.common.utils.HadoopConfigReader;

import org.apache.commons.lang3.StringUtils;

import org.apache.sshd.client.session.ClientSession;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.nio.charset.StandardCharsets;

import java.nio.file.Files;

import java.nio.file.Path;

import java.nio.file.Paths;

import java.net.InetAddress;

import java.net.UnknownHostException;

import java.util.Date;

public class StartWorkerHandler implements DispatcherWorkerHandler {

private static final Logger logger = LoggerFactory.getLogger(StartWorkerHandler.class);

private Integer clusterId;

private String clusterFrame;

// Static fields provide a process-wide cache; be careful about concurrency

private static volatile String cachedServiceFilePath = null;

private static volatile String cachedUsersServiceFilePath = null;

private static final Object SERVICE_FILE_LOCK = new Object();

private static final Object USERS_FILE_LOCK = new Object();

public StartWorkerHandler(Integer clusterId, String clusterFrame) {

this.clusterId = clusterId;

this.clusterFrame = clusterFrame;

}

@Override

public boolean handle(ClientSession session, HostInfo hostInfo) throws UnknownHostException {

ConfigBean configBean = SpringTool.getApplicationContext().getBean(ConfigBean.class);

String installPath = Constants.INSTALL_PATH;

String localHostName = InetAddress.getLocalHost().getHostName();

String command = Constants.UPDATE_COMMON_CMD +

localHostName +

Constants.SPACE +

configBean.getServerPort() +

Constants.SPACE +

this.clusterFrame +

Constants.SPACE +

this.clusterId +

Constants.SPACE +

Constants.INSTALL_PATH;

String updateCommonPropertiesResult = MinaUtils.execCmdWithResult(session, command);

if (StringUtils.isBlank(updateCommonPropertiesResult) || "failed".equals(updateCommonPropertiesResult)) {

logger.error("common.properties update failed, command: {}", command);

hostInfo.setErrMsg("common.properties update failed");

hostInfo.setMessage(MessageResolverUtils.getMessage("modify.configuration.file.fail"));

CommonUtils.updateInstallState(InstallState.FAILED, hostInfo);

return false;

}

try {

// Initialize environment

MinaUtils.execCmdWithResult(session, "systemctl stop datasophon-worker.service");

MinaUtils.execCmdWithResult(session, "systemctl stop "+OSService.CREATE_HADOOP_USERS.getServiceName());

MinaUtils.execCmdWithResult(session, "ulimit -n 102400");

MinaUtils.execCmdWithResult(session, "sysctl -w vm.max_map_count=2000000");

// Set startup and self start

MinaUtils.execCmdWithResult(session,

"\\cp " + installPath + "/datasophon-worker/script/datasophon-worker /etc/init.d/");

MinaUtils.execCmdWithResult(session, "chmod +x /etc/init.d/datasophon-worker");

// Create and upload datasophon-worker.service

String workerServiceFile = createWorkerServiceFile();

boolean uploadSuccess = MinaUtils.uploadFile(session, "/usr/lib/systemd/system", workerServiceFile);

if (!uploadSuccess) {

logger.error("upload datasophon-worker.service failed");

hostInfo.setErrMsg("upload datasophon-worker.service failed");

hostInfo.setMessage(MessageResolverUtils.getMessage("upload.file.fail"));

CommonUtils.updateInstallState(InstallState.FAILED, hostInfo);

return false;

}

logger.info("datasophon-worker.service uploaded successfully");

// Create and upload the Hadoop users service

String hadoopUsersServiceFile = createHadoopUsersServiceFile();

uploadSuccess = MinaUtils.uploadFile(session, "/etc/systemd/system/", hadoopUsersServiceFile);

if (!uploadSuccess) {

logger.error("upload create-hadoop-users.service failed");

hostInfo.setErrMsg("upload create-hadoop-users.service failed");

hostInfo.setMessage(MessageResolverUtils.getMessage("upload.file.fail"));

CommonUtils.updateInstallState(InstallState.FAILED, hostInfo);

return false;

}

logger.info("create-hadoop-users.service uploaded successfully");

// Continue with the remaining commands

MinaUtils.execCmdWithResult(session,

"\\cp " + installPath + "/datasophon-worker/script/datasophon-env.sh /etc/profile.d/");

MinaUtils.execCmdWithResult(session, "source /etc/profile.d/datasophon-env.sh");

hostInfo.setMessage(MessageResolverUtils.getMessage("start.host.management.agent"));

String cleanupCmd = "rm -f /etc/init.d/datasophon-worker 2>/dev/null";

MinaUtils.execCmdWithResult(session, cleanupCmd);

MinaUtils.execCmdWithResult(session, "mkdir -p /opt/datasophon/datasophon-worker/logs");

MinaUtils.execCmdWithResult(session, "systemctl daemon-reload");

MinaUtils.execCmdWithResult(session, "systemctl enable datasophon-worker.service");

MinaUtils.execCmdWithResult(session, "systemctl start datasophon-worker.service");

hostInfo.setProgress(75);

hostInfo.setCreateTime(new Date());

logger.info("end dispatcher host agent :{}", hostInfo.getHostname());

return true;

} catch (Exception e) {

logger.error("Failed to handle worker startup for host: {}", hostInfo.getHostname(), e);

hostInfo.setErrMsg("Worker startup failed: " + e.getMessage());

hostInfo.setMessage(MessageResolverUtils.getMessage("start.host.management.agent.fail"));

CommonUtils.updateInstallState(InstallState.FAILED, hostInfo);

return false;

}

}

/**

* Create the temporary file for the worker service

*/

private String createWorkerServiceFile() {

return createTempServiceFile(

"datasophon-worker.service",

cachedServiceFilePath,

SERVICE_FILE_LOCK,

"[Unit]\n" +

"Description=DataSophon Worker Service (Ubuntu)\n" +

"After=network.target network-online.target\n" +

"Wants=network-online.target\n" +

"\n" +

"[Service]\n" +

"Type=forking\n" +

"Environment=JAVA_HOME=/usr/local/jdk1.8.0_333\n" +

"Environment=PATH=/usr/local/jdk1.8.0_333/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin\n" +

"Environment=LC_ALL=C.UTF-8\n" +

"WorkingDirectory=/opt/datasophon/datasophon-worker\n" +

"ExecStart=/opt/datasophon/datasophon-worker/bin/datasophon-worker.sh start worker\n" +

"ExecStop=/opt/datasophon/datasophon-worker/bin/datasophon-worker.sh stop worker\n" +

"ExecReload=/opt/datasophon/datasophon-worker/bin/datasophon-worker.sh restart worker\n" +

"User=root\n" +

"Group=root\n" +

"TimeoutStopSec=300\n" +

"KillMode=process\n" +

"Restart=on-failure\n" +

"RestartSec=10\n" +

"StandardOutput=journal\n" +

"StandardError=journal\n" +

"SyslogIdentifier=datasophon-worker\n" +

"\n" +

"ProtectSystem=full\n" +

"ReadWritePaths=/opt/datasophon/datasophon-worker/logs\n" +

"\n" +

"[Install]\n" +

"WantedBy=multi-user.target",

"datasophon-worker"

);

}

/**

* Create the temporary file for the Hadoop users service

*/

private String createHadoopUsersServiceFile() {

return createTempServiceFile(

"create-hadoop-users.service",

cachedUsersServiceFilePath,

USERS_FILE_LOCK,

"[Unit]\n" +

"Description=Create Hadoop Users (One-time Execution)\n" +

"After=network.target\n" +

"\n" +

"[Service]\n" +

"Type=oneshot\n" +

"User=root\n" +

"WorkingDirectory=/opt/datasophon/hadoop-3.3.3\n" +

"Environment=\"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin\"\n" +

"Environment=\"JAVA_HOME=/usr/local/jdk1.8.0_333\"\n" +

"ExecStart=/usr/local/jdk1.8.0_333/bin/java -cp \"/opt/datasophon/hadoop-3.3.3\" com.datasophon.worker.service.CreateHadoopUsers\n" +

"\n" +

"SuccessExitStatus=0 9\n" +

"\n" +

"StandardOutput=journal\n" +

"StandardError=journal\n" +

"SyslogIdentifier=create-hadoop-users\n" +

"\n" +

"[Install]\n" +

"WantedBy=multi-user.target",

"hadoop-users"

);

}

/**

* Generic helper that creates a temporary service file

*/

private String createTempServiceFile(String defaultFileName,

String cachedFilePath,

Object lockObj,

String serviceContent,

String serviceName) {

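// First check (without the lock)

String localCache = cachedFilePath;

if (localCache != null && Files.exists(Paths.get(localCache))) {

logger.info("Using cached {} service file: {}", serviceName, localCache);

return localCache;

}

synchronized (lockObj) {

// Second check (under the lock): re-read the static field, because the

// cachedFilePath parameter is only a snapshot taken before the lock was acquired

localCache = serviceName.contains("datasophon-worker") ? cachedServiceFilePath : cachedUsersServiceFilePath;

if (localCache != null && Files.exists(Paths.get(localCache))) {

logger.info("Using cached {} service file inside the synchronized block: {}", serviceName, localCache);

return localCache;

}

logger.info("Start creating the {} service file", serviceName);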

try {

// Get the temporary directory

String tempDir = System.getProperty("java.io.tmpdir");

if (StringUtils.isBlank(tempDir)) {

throw new RuntimeException("java.io.tmpdir system property is null or empty");

}

logger.info("Temporary directory: {}", tempDir);

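// Determine the file name. The worker service keeps its default name; only the

// Hadoop users service takes its name from OSService, so the two files do not collide

String fileName = defaultFileName;

if (!serviceName.contains("datasophon-worker")) {

try {

// Try to get the file name from OSService

OSService service = OSService.CREATE_HADOOP_USERS;

if (service != null && StringUtils.isNotBlank(service.getServiceName())) {

fileName = service.getServiceName();

}

} catch (Exception e) {

logger.warn("Could not get the file name from OSService, using the default: {}", defaultFileName);

}

}

logger.info("Service file name: {}", fileName);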

// Build the file path

Path tempDirPath = Paths.get(tempDir);

Path tempFilePath = tempDirPath.resolve(fileName);

logger.info("Target file path: {}", tempFilePath.toAbsolutePath());

// Make sure the directory exists

if (!Files.exists(tempDirPath)) {

Files.createDirectories(tempDirPath);

logger.info("Created the temporary directory");

}

// Check write permission

if (!Files.isWritable(tempDirPath)) {

throw new RuntimeException("No write permission to temp directory: " + tempDirPath);

}

// Delete the file if it already exists

if (Files.exists(tempFilePath)) {

try {

Files.delete(tempFilePath);

logger.info("Deleted the existing file");

} catch (Exception e) {

logger.warn("Failed to delete the old file, will try to overwrite it", e);

}

}

// Write the file

Files.write(tempFilePath, serviceContent.getBytes(StandardCharsets.UTF_8));

logger.info("File written");

// Verify the file

if (!Files.exists(tempFilePath)) {

throw new RuntimeException("File not created after write operation");

}

long fileSize = Files.size(tempFilePath);

if (fileSize == 0) {

throw new RuntimeException("Created file is empty");

}

String filePath = tempFilePath.toString();

// Update the cache. cachedFilePath is a parameter, so assigning to it would not

// change the static field; pick the field to update based on the service name

if (serviceName.contains("datasophon-worker")) {

cachedServiceFilePath = filePath;

} else {

cachedUsersServiceFilePath = filePath;

}

logger.info("Created the {} service file, path: {}", serviceName, filePath);

return filePath;

} catch (Exception e) {

logger.error("Failed to create the {} service file", serviceName, e);

throw new RuntimeException("Failed to create " + serviceName + " service file: " + e.getMessage(), e);

}

}

}

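}

This class mainly sets up the service that creates the users and groups Hadoop needs: hdfs, yarn, mapred, and hadoop. It is integrated with the original code that creates the datasophon-worker service. The unit file of the new service contains the line /usr/local/jdk1.8.0_333/bin/java -cp "/opt/datasophon/hadoop-3.3.3" com.datasophon.worker.service.CreateHadoopUsers, which invokes CreateHadoopUsers. The source of that class is listed below under CreateHadoopUsers.java.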
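One detail of the generated unit is worth a short sketch before the listing. The unit sets SuccessExitStatus=0 9, and 9 is the exit code that useradd and groupadd return when the requested name already exists. Whether that was the author's intent is an assumption. The hypothetical class below (AccountExitCodes is not part of datasophon) shows how a caller could tell "already exists" apart from a real failure, so that an existing account can be left untouched.

```java
// Hypothetical helper (not part of datasophon).
public class AccountExitCodes {

    enum Outcome { CREATED, ALREADY_EXISTS, FAILED }

    // useradd and groupadd exit with 9 when the user or group name is already in use.
    static Outcome classify(int exitCode) {
        if (exitCode == 0) {
            return Outcome.CREATED;
        }
        if (exitCode == 9) {
            return Outcome.ALREADY_EXISTS;
        }
        return Outcome.FAILED;
    }

    // Runs the given command and classifies its exit code.
    static Outcome run(String... command) throws Exception {
        Process p = new ProcessBuilder(command).inheritIO().start();
        return classify(p.waitFor());
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.out.println("usage: java AccountExitCodes <command> [args...]");
            return;
        }
        // Example (as root): java AccountExitCodes groupadd hadoop
        System.out.println(String.join(" ", args) + " -> " + run(args));
    }
}
```

Treating ALREADY_EXISTS as a normal outcome keeps repeated runs idempotent without touching accounts that are already in place.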

CreateHadoopUsers.java


package com.datasophon.worker.service;

import java.io.*;

import java.time.LocalDateTime;

import java.time.format.DateTimeFormatter;

import java.util.ArrayList;

import java.util.List;

public class CreateHadoopUsers {

private static final DateTimeFormatter dtf = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

public static void main(String[] args) {

System.out.println("=== Creating Hadoop users (complete, correct configuration) ===");

try {

// Clean up first (optional)

// cleanupExisting();

// Create all required groups

createGroup("hadoop");

createGroup("hdfs");

createGroup("yarn");

createGroup("mapred");

// Create the users (configured correctly in one pass)

createHadoopUser("hdfs", "hadoop", "hdfs");

createHadoopUser("yarn", "hadoop", "yarn");

createHadoopUser("mapred", "hadoop", "mapred");

// Detailed verification

verifyCompleteSetup();

log("=== Hadoop users created ===");

} catch (Exception e) {

logError("Program error: " + e.getMessage());

e.printStackTrace();

}

}

private static void log(String message) {

System.out.println("[" + LocalDateTime.now().format(dtf) + "] " + message);

}

private static void logError(String message) {

System.err.println("[" + LocalDateTime.now().format(dtf) + "] ERROR: " + message);

}

private static boolean executeCommand(List<String> command) throws Exception {

log("Executing: " + String.join(" ", command));

ProcessBuilder pb = new ProcessBuilder(command);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

BufferedReader errorReader = new BufferedReader(new InputStreamReader(process.getErrorStream()));

StringBuilder output = new StringBuilder();

StringBuilder errorOutput = new StringBuilder();

String line;

while ((line = reader.readLine()) != null) {

output.append(line).append("\n");

}

while ((line = errorReader.readLine()) != null) {

errorOutput.append(line).append("\n");

}

int exitCode = process.waitFor();

if (!output.toString().isEmpty()) {

log("Output: " + output.toString().trim());

}

if (!errorOutput.toString().isEmpty()) {

log("Error output: " + errorOutput.toString().trim());

}

return exitCode == 0;

}

private static void cleanupExisting() throws Exception {

log("=== Cleaning up existing configuration ===");

// Delete the users

String[] users = {"hdfs", "yarn", "mapred"};

for (String user : users) {

log("Removing user: " + user);

List<String> command = new ArrayList<>();

command.add("userdel");

command.add("-r");

command.add("-f");

command.add(user);

executeCommand(command);

}

// Delete the groups (they are recreated later)

String[] groups = {"hdfs", "yarn", "mapred", "hadoop"};

for (String group : groups) {

log("Removing group: " + group);

List<String> command = new ArrayList<>();

command.add("groupdel");

command.add(group);

executeCommand(command);

}

}

private static void createGroup(String groupName) throws Exception {

log("Creating group: " + groupName);

List<String> command = new ArrayList<>();

command.add("groupadd");

command.add(groupName);

if (executeCommand(command)) {

log("✓ Group created: " + groupName);

} else {

log("Note: group " + groupName + " may already exist");

}

}

private static void createHadoopUser(String userName, String primaryGroup, String secondaryGroup) throws Exception {

log("Creating user: " + userName + " (primary group: " + primaryGroup + ", supplementary group: " + secondaryGroup + ")");

List<String> command = new ArrayList<>();

command.add("useradd");

command.add("-r"); // system account

command.add("-m"); // create the home directory

command.add("-s"); // login shell

command.add("/bin/bash");

command.add("-g"); // primary group

command.add(primaryGroup);

command.add("-G"); // supplementary group(s); accepts a comma-separated list

command.add(secondaryGroup);

command.add(userName);

if (executeCommand(command)) {

log("✓ User created: " + userName);

showUserDetails(userName);

} else {

// If the user already exists, delete it and retry

log("User may already exist; deleting and recreating");

// Delete the existing user

List<String> delCommand = new ArrayList<>();

delCommand.add("userdel");

delCommand.add("-r");

delCommand.add("-f");

delCommand.add(userName);

executeCommand(delCommand);

// Recreate

if (executeCommand(command)) {

log("✓ User recreated: " + userName);

showUserDetails(userName);

} else {

logError("Failed to create user: " + userName);

}

}

}

private static void showUserDetails(String userName) throws Exception {

List<String> command = new ArrayList<>();

command.add("id");

command.add(userName);

ProcessBuilder pb = new ProcessBuilder(command);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String info = reader.readLine();

process.waitFor();

if (info != null) {

log("User info: " + info);

}

// Show the home directory

command.clear();

command.add("ls");

command.add("-ld");

command.add("/home/" + userName);

pb = new ProcessBuilder(command);

process = pb.start();

reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String homeDir = reader.readLine();

process.waitFor();

if (homeDir != null) {

log("Home directory: " + homeDir);

}

}

private static void verifyCompleteSetup() throws Exception {

log("=== Full configuration check ===");

String[][] userConfigs = {

{"hdfs", "hadoop", "hdfs"},

{"yarn", "hadoop", "yarn"},

{"mapred", "hadoop", "mapred"}

};

boolean allCorrect = true;

for (String[] config : userConfigs) {

String userName = config[0];

String expectedPrimary = config[1];

String expectedSecondary = config[2];

log("Verifying user: " + userName);

// Check whether the user exists

List<String> checkCmd = new ArrayList<>();

checkCmd.add("id");

checkCmd.add(userName);

ProcessBuilder pb = new ProcessBuilder(checkCmd);

Process process = pb.start();

int exitCode = process.waitFor();

if (exitCode != 0) {

logError("✗ User does not exist: " + userName);

allCorrect = false;

continue;

}

// Read the primary group name, then the full group list

List<String> groupCmd = new ArrayList<>();

groupCmd.add("id");

groupCmd.add("-gn");

groupCmd.add(userName);

pb = new ProcessBuilder(groupCmd);

process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String primaryGroup = reader.readLine();

process.waitFor();

groupCmd.set(1, "-Gn");

pb = new ProcessBuilder(groupCmd);

process = pb.start();

reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String allGroups = reader.readLine();

process.waitFor();

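// Verify

boolean primaryOk = expectedPrimary.equals(primaryGroup);

// Match whole group names; a substring test would accept e.g. "hdfs2" for "hdfs"

boolean secondaryOk = allGroups != null && java.util.Arrays.asList(allGroups.trim().split("\\s+")).contains(expectedSecondary);

if (primaryOk && secondaryOk) {

log("✓ Configuration correct: primary group=" + primaryGroup + ", member of=" + expectedSecondary);

} else {

logError("✗ Configuration error:");

if (!primaryOk) {

logError(" Expected primary group: " + expectedPrimary + ", actual: " + primaryGroup);

}

if (!secondaryOk) {

logError(" Expected membership in: " + expectedSecondary + ", actual groups: " + allGroups);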

}

allCorrect = false;

}

}

// Verify all groups

log("Verifying all groups...");

String[] groups = {"hadoop", "hdfs", "yarn", "mapred"};

for (String group : groups) {

List<String> cmd = new ArrayList<>();

cmd.add("getent");

cmd.add("group");

cmd.add(group);

ProcessBuilder pb = new ProcessBuilder(cmd);

Process process = pb.start();

int exitCode = process.waitFor();

if (exitCode == 0) {

log("✓ Group exists: " + group);

} else {

logError("✗ Group does not exist: " + group);

allCorrect = false;

}

}

if (allCorrect) {

log("✓ All configuration checks passed");

} else {

logError("Configuration problems found; please review the errors above");

}

}

private static void showFinalSummary() throws Exception {

log("=== Final configuration summary ===");

// User information

log("Users:");

String[] users = {"hdfs", "yarn", "mapred"};

for (String user : users) {

List<String> cmd = new ArrayList<>();

cmd.add("id");

cmd.add(user);

ProcessBuilder pb = new ProcessBuilder(cmd);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String info = reader.readLine();

process.waitFor();

if (info != null) {

log(" " + info);

}

}

// Group information

log("Groups:");

String[] groups = {"hadoop", "hdfs", "yarn", "mapred"};

for (String group : groups) {

List<String> cmd = new ArrayList<>();

cmd.add("getent");

cmd.add("group");

cmd.add(group);

ProcessBuilder pb = new ProcessBuilder(cmd);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String info = reader.readLine();

process.waitFor();

if (info != null) {

log(" " + info);

}

}

// Home directories

log("Home directories:");

for (String user : users) {

List<String> cmd = new ArrayList<>();

cmd.add("ls");

cmd.add("-ld");

cmd.add("/home/" + user);

ProcessBuilder pb = new ProcessBuilder(cmd);

Process process = pb.start();

BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()));

String info = reader.readLine();

process.waitFor();

if (info != null) {

log(" " + info);

}

}

}

}

This class creates the Hadoop-related users and groups. It is compiled to a .class file and run by the service described above.
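To make the `useradd` invocation and the group check easier to see in isolation, here is a minimal sketch. `UserSetupSketch`, `buildUseraddCommand`, and `inGroup` are hypothetical names for illustration only and are not part of datasophon. The sketch only builds the argument list and parses text that is assumed to come from `id -Gn`; it runs no commands and needs no root privileges.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class UserSetupSketch {

    /** Builds the same kind of argument list that createHadoopUser hands to ProcessBuilder. */
    public static List<String> buildUseraddCommand(String user, String primaryGroup, String secondaryGroup) {
        List<String> cmd = new ArrayList<>();
        cmd.add("useradd");
        cmd.add("-r");            // system account
        cmd.add("-m");            // create the home directory
        cmd.add("-s");            // login shell
        cmd.add("/bin/bash");
        cmd.add("-g");            // primary group
        cmd.add(primaryGroup);
        cmd.add("-G");            // supplementary group(s)
        cmd.add(secondaryGroup);
        cmd.add(user);
        return cmd;
    }

    /** Exact-name membership test against space-separated text such as the output of `id -Gn <user>`. */
    public static boolean inGroup(String idGnOutput, String group) {
        if (idGnOutput == null || idGnOutput.trim().isEmpty()) {
            return false;
        }
        return Arrays.asList(idGnOutput.trim().split("\\s+")).contains(group);
    }

    public static void main(String[] args) {
        System.out.println(String.join(" ", buildUseraddCommand("hdfs", "hadoop", "hdfs")));
        System.out.println(inGroup("hadoop hdfs", "hdfs"));
        System.out.println(inGroup("hadoop hdfs2", "hdfs"));
    }
}
```

Splitting the group list on whitespace and comparing whole names avoids the false positive a plain substring test would give for a group such as `hdfs2`.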

InstallServiceHandler.java

java

/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package com.datasophon.worker.handler;

import cn.hutool.core.io.FileUtil;

import cn.hutool.core.io.StreamProgress;

import cn.hutool.core.lang.Console;

import cn.hutool.http.HttpUtil;

import com.datasophon.common.Constants;

import com.datasophon.common.cache.CacheUtils;

import com.datasophon.common.command.InstallServiceRoleCommand;

import com.datasophon.common.enums.OSService;

import com.datasophon.common.model.RunAs;

import com.datasophon.common.utils.*;

import com.datasophon.worker.utils.TaskConstants;

import lombok.Data;

import org.apache.commons.lang.StringUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.io.*;

import java.nio.channels.FileChannel;

import java.nio.channels.FileLock;

import java.nio.channels.OverlappingFileLockException;

import java.nio.file.Files;

import java.util.ArrayList;

import java.util.List;

import java.util.Objects;

import java.util.concurrent.TimeUnit;

@Data

public class InstallServiceHandler {

private static final String HADOOP = "hadoop";

private String serviceName;

private String serviceRoleName;

private Logger logger;

public InstallServiceHandler(String serviceName, String serviceRoleName) {

this.serviceName = serviceName;

this.serviceRoleName = serviceRoleName;

String loggerName = String.format("%s-%s-%s", TaskConstants.TASK_LOG_LOGGER_NAME, serviceName, serviceRoleName);

logger = LoggerFactory.getLogger(loggerName);

}

public ExecResult install(InstallServiceRoleCommand command) {

ExecResult execResult = new ExecResult();

try {

String destDir = Constants.INSTALL_PATH + Constants.SLASH + "DDP/packages" + Constants.SLASH;

String packageName = command.getPackageName();

String packagePath = destDir + packageName;

Boolean needDownLoad = !Objects.equals(PropertyUtils.getString(Constants.MASTER_HOST), CacheUtils.get(Constants.HOSTNAME))

&& isNeedDownloadPkg(packagePath, command.getPackageMd5());

if (Boolean.TRUE.equals(needDownLoad)) {

downloadPkg(packageName, packagePath);

}

boolean result = decompressPkg(command.getDecompressPackageName(), command.getRunAs(), packagePath);

execResult.setExecResult(result);

} catch (Exception e) {

execResult.setExecOut(e.getMessage());

logger.error("Failed to install package {}", command.getPackageName(), e);

}

return execResult;

}

private Boolean isNeedDownloadPkg(String packagePath, String packageMd5) {

Boolean needDownLoad = true;

logger.info("Remote package md5 is {}", packageMd5);

if (FileUtil.exist(packagePath)) {

// check md5

String md5 = FileUtils.md5(new File(packagePath));

logger.info("Local md5 is {}", md5);

if (StringUtils.isNotBlank(md5) && packageMd5.trim().equals(md5.trim())) {

needDownLoad = false;

}

}

return needDownLoad;

}

private void downloadPkg(String packageName, String packagePath) {

String masterHost = PropertyUtils.getString(Constants.MASTER_HOST);

String masterPort = PropertyUtils.getString(Constants.MASTER_WEB_PORT);

String downloadUrl = "http://" + masterHost + ":" + masterPort

+ "/ddh/service/install/downloadPackage?packageName=" + packageName;

logger.info("download url is {}", downloadUrl);

HttpUtil.downloadFile(downloadUrl, FileUtil.file(packagePath), new StreamProgress() {

@Override

public void start() {

Console.log("start to install...");

}

@Override

public void progress(long progressSize, long l1) {

Console.log("installed:{}", FileUtil.readableFileSize(progressSize));

}

@Override

public void finish() {

Console.log("install success!");

}

});

logger.info("download package {} success", packageName);

}

private boolean decompressPkg(String decompressPackageName, RunAs runAs, String packagePath) {

String installPath = Constants.INSTALL_PATH;

String targetDir = installPath + Constants.SLASH + decompressPackageName;

logger.info("Target directory for decompression: {}", targetDir);

// Make sure the parent directory exists

File parentDir = new File(installPath);

if (!parentDir.exists()) {

parentDir.mkdirs();

}

Boolean decompressResult = decompressTarGz(packagePath, targetDir); // extract directly into the target directory

if (Boolean.TRUE.equals(decompressResult)) {

// Verify the extraction result

if (FileUtil.exist(targetDir)) {

logger.info("Verifying installation in: {}", targetDir);

File[] files = new File(targetDir).listFiles();

boolean hasControlSh = false;

boolean hasBinary = false;

if (files != null) {

for (File file : files) {

if (file.getName().equals("control.sh")) {

hasControlSh = true;

}

if (file.getName().equals(decompressPackageName.split("-")[0])) {

hasBinary = true;

}

}

}

logger.info("control.sh exists: {}, binary exists: {}", hasControlSh, hasBinary);

if (Objects.nonNull(runAs)) {

ShellUtils.exceShell(" chown -R " + runAs.getUser() + ":" + runAs.getGroup() + " " + targetDir);

}

ShellUtils.exceShell(" chmod -R 775 " + targetDir);

if (decompressPackageName.contains(HADOOP)) {

changeHadoopInstallPathPerm(decompressPackageName);

} else {

logger.info("package name: {}", decompressPackageName);

}

return true;

}

}

return false;

}

public Boolean decompressTarGz(String sourceTarGzFile, String targetDir) {

logger.info("Start to use tar -zxvf to decompress {} to {}", sourceTarGzFile, targetDir);

// Added: create the target directory if it does not exist (hardened version)

File targetDirFile = new File(targetDir);

if (!targetDirFile.exists()) {

logger.info("Target directory does not exist, creating: {}", targetDir);

// Try to create the directory

boolean created = targetDirFile.mkdirs();

if (!created) {

logger.error("Failed to create target directory: {}", targetDir);

// Log extra diagnostics

File parentDir = targetDirFile.getParentFile();

if (parentDir != null) {

logger.error("Parent directory exists: {}, writable: {}",

parentDir.exists(), parentDir.canWrite());

}

// Log the current user to help spot permission problems

logger.error("Current user: {}", System.getProperty("user.name"));

return false;

}

logger.info("Successfully created target directory: {}", targetDir);

}

// 1. First count the entries in the tar archive

int tarFileCount = getTarFileCount(sourceTarGzFile);

logger.info("Tar file contains {} files/directories", tarFileCount);

// 3. Extract (with --strip-components=1)

ArrayList<String> command = new ArrayList<>();

command.add("tar");

command.add("-zxvf");

command.add(sourceTarGzFile);

command.add("-C");

command.add(targetDir);

command.add("--strip-components=1");

ExecResult execResult = ShellUtils.execWithStatus(targetDir, command, 120, logger);

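// 4. Verify the extraction result

if (execResult.getExecResult()) {

// Give the filesystem a moment to settle

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

// Restore the interrupt flag instead of swallowing it

Thread.currentThread().interrupt();

}

// Count the entries in the target directory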

int finalFileCount = targetDirFile.exists() ?

(targetDirFile.listFiles() != null ? targetDirFile.listFiles().length : 0) : 0;

// tarFileCount comes from listing the archive, while finalFileCount counts only the

// top-level entries of targetDir, so subtracting one from the other is not meaningful.

logger.info("Archive entries: {}, top-level entries in target: {}", tarFileCount, finalFileCount);

if (finalFileCount > 0) {

logger.info("Decompression successful, {} top-level entries in {}", finalFileCount, targetDir);

// List the first few files as a sanity check

File[] files = targetDirFile.listFiles();

if (files != null) {

int limit = Math.min(files.length, 5);

for (int i = 0; i < limit; i++) {

logger.debug("Extracted file: {}", files[i].getName());

}

}

// Check for key files

if (sourceTarGzFile.contains("alertmanager")) {

File controlSh = new File(targetDir + Constants.SLASH + "control.sh");

File binary = new File(targetDir + Constants.SLASH + "alertmanager");

if (!controlSh.exists() || !binary.exists()) {

logger.error("Missing key files after decompression: control.sh={}, alertmanager={}",

controlSh.exists(), binary.exists());

return false;

}

} else if (sourceTarGzFile.contains("prometheus")) {

File controlSh = new File(targetDir + Constants.SLASH + "control.sh");

File binary = new File(targetDir + Constants.SLASH + "prometheus");

if (!controlSh.exists() || !binary.exists()) {

logger.error("Missing key files after decompression: control.sh={}, prometheus={}",

controlSh.exists(), binary.exists());

return false;

}

}

return true;

} else {

logger.error("No files extracted! Something went wrong with decompression.");

return false;

}

}

logger.error("Decompression command failed: {}", execResult.getExecOut());

return false;

}

/**

* Returns the number of entries in the tar archive

*/

private int getTarFileCount(String tarFile) {

try {

ArrayList<String> command = new ArrayList<>();

command.add("tar");

command.add("-tzf");

command.add(tarFile);

ExecResult execResult = ShellUtils.execWithStatus(".", command, 30, logger);

if (execResult.getExecResult() && execResult.getExecOut() != null) {

// Split by line and count the non-empty lines

String[] lines = execResult.getExecOut().split("\n");

int count = 0;

for (String line : lines) {

if (line != null && !line.trim().isEmpty()) {

count++;

}

}

return count;

}

} catch (Exception e) {

logger.warn("Failed to count tar files: {}", e.getMessage());

}

return -1; // unknown

}

private void changeHadoopInstallPathPerm(String decompressPackageName) {

logger.info("enter changeHadoopInstallPathPerm,package:"+decompressPackageName);

String hadoopHome = Constants.INSTALL_PATH + Constants.SLASH + decompressPackageName;

// 1. Create the required users and groups first

createHadoopSystemUsers(hadoopHome);

// 2. Apply the ownership used by a conventional install (hdfs:hdfs)

// The main directory tree belongs to hdfs

ShellUtils.exceShell(" chown -R hdfs:hdfs " + hadoopHome);

ShellUtils.exceShell(" chmod -R 755 " + hadoopHome);

List<String> paths = HadoopConfigReader.getAllHadoopDirectories(hadoopHome);

for (String path : paths) {

logger.info("Granting directory {} to hdfs", path);

ShellUtils.exceShell("chown -R hdfs:hadoop " + path);

ShellUtils.exceShell("chmod -R 755 " + path);

}

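// 3. Some files and directories need different permissions (original logic kept, owners adjusted)

// container-executor needs special permissions (normally owned by root)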

ShellUtils.exceShell(" chown root:hadoop " + hadoopHome + "/bin/container-executor");

ShellUtils.exceShell(" chmod 6050 " + hadoopHome + "/bin/container-executor");

// Access to container-executor.cfg is restricted to root

ShellUtils.exceShell(" chown root:root " + hadoopHome + "/etc/hadoop/container-executor.cfg");

ShellUtils.exceShell(" chmod 400 " + hadoopHome + "/etc/hadoop/container-executor.cfg");

// 4. The logs/userlogs directory goes to the yarn user (unchanged)

ShellUtils.exceShell(" chown -R yarn:hadoop " + hadoopHome + "/logs/userlogs");

ShellUtils.exceShell(" chmod 775 " + hadoopHome + "/logs/userlogs");

// 5. Set ACLs (in case system directories need to be accessed)

setupAclPermissions();

logger.info("Hadoop permissions set successfully in traditional way");

}

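/**

* Creates the system users Hadoop needs by starting the systemd service named by

* OSService.CREATE_HADOOP_USERS.

*

* @param hadoopHome Hadoop install directory (not used by the current implementation)

*/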

private void createHadoopSystemUsers(String hadoopHome) {

logger.info("enter createHadoopSystemUsers");

// Delegate user creation to a separate systemd service

// Run systemctl from Java

ProcessBuilder pb = new ProcessBuilder("systemctl", "start", OSService.CREATE_HADOOP_USERS.getServiceName());

Process p = null;

try {

p = pb.start();

boolean execResult = p.waitFor(10, TimeUnit.SECONDS);

if (execResult && p.exitValue() == 0) {

logger.info("call create-hadoop-users.service success");

} else {

logger.info("call create-hadoop-users.service fail");

}

} catch (IOException | InterruptedException e) {

if (e instanceof InterruptedException) {

Thread.currentThread().interrupt();

}

logger.error("call create-hadoop-users.service fail", e);

}

}

/**

* Sets ACL permissions (similar to the setfacl commands used in a manual install)

*/

private void setupAclPermissions() {

try {

// 1. Prefer the JAVA_HOME environment variable

String javaHome = System.getenv("JAVA_HOME");

List<String> javaPaths = new ArrayList<>();

if (javaHome != null && !javaHome.trim().isEmpty()) {

javaPaths.add(javaHome);

logger.info("Using JAVA_HOME from environment: " + javaHome);

}

// 2. Add common Java install locations as fallbacks

String[] commonJavaPaths = {

"/usr/java",

"/usr/lib/jvm",

"/opt/java",

"/usr/local/java",

System.getProperty("java.home") // java.home of the running JVM

};

for (String path : commonJavaPaths) {

if (path != null && !javaPaths.contains(path)) {

javaPaths.add(path);

}

}

// 3. Set the ACLs

for (String javaPath : javaPaths) {

File javaDir = new File(javaPath);

if (javaDir.exists() && javaDir.isDirectory()) {

// Grant the hdfs user r-x

ShellUtils.exceShell("setfacl -R -m u:hdfs:rx " + javaPath);

// Grant the yarn user r-x

ShellUtils.exceShell("setfacl -R -m u:yarn:rx " + javaPath);

logger.info("Set ACL permissions for Java directory: " + javaPath);

break; // one valid Java directory is enough

}

}

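// 4. Set ACLs under the install directory. Note: Constants.INSTALL_PATH is the install root, not the Hadoop home built in changeHadoopInstallPathPerm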

String hadoopHome = Constants.INSTALL_PATH;

ShellUtils.exceShell("setfacl -R -m u:yarn:rwx " + hadoopHome + "/logs");

ShellUtils.exceShell("setfacl -R -m u:mapred:rx " + hadoopHome);

} catch (Exception e) {

logger.warn("Failed to set ACL permissions: " + e.getMessage());

// ACLs are optional; fall back to the traditional permissions

}

}

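}

This class grants the Hadoop user hdfs ownership of the relevant data directories (configured in the yml); the logic lives in changeHadoopInstallPathPerm. It also calls the service that creates the Hadoop users and groups.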
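The octal modes passed to `chmod` in changeHadoopInstallPathPerm (6050, 400, 775) are easier to check in the symbolic form that `ls -l` prints. The following is a small illustrative sketch; `ModeSketch` and `toSymbolic` are hypothetical names that do not exist in datasophon or Hadoop, and the code only does bit arithmetic without touching any files.

```java
public class ModeSketch {

    /** Renders a four-digit octal mode (for example 06050) as the 9-character string shown by ls -l. */
    public static String toSymbolic(int mode) {
        String rwx = "rwxrwxrwx";
        char[] out = new char[9];
        for (int i = 0; i < 9; i++) {
            // Bit 8 is owner-read, bit 0 is other-execute
            boolean set = (mode & (1 << (8 - i))) != 0;
            out[i] = set ? rwx.charAt(i) : '-';
        }
        // setuid (04000), setgid (02000) and sticky (01000) are shown in the execute slots
        if ((mode & 04000) != 0) {
            out[2] = (out[2] == 'x') ? 's' : 'S';
        }
        if ((mode & 02000) != 0) {
            out[5] = (out[5] == 'x') ? 's' : 'S';
        }
        if ((mode & 01000) != 0) {
            out[8] = (out[8] == 'x') ? 't' : 'T';
        }
        return new String(out);
    }

    public static void main(String[] args) {
        System.out.println(toSymbolic(06050)); // container-executor
        System.out.println(toSymbolic(0400));  // container-executor.cfg
        System.out.println(toSymbolic(0775));  // logs/userlogs
    }
}
```

Read this way, 6050 on container-executor means setuid and setgid are set, the owner (root) has no ordinary permission bits, and only members of the hadoop group can read and execute the binary.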

最后

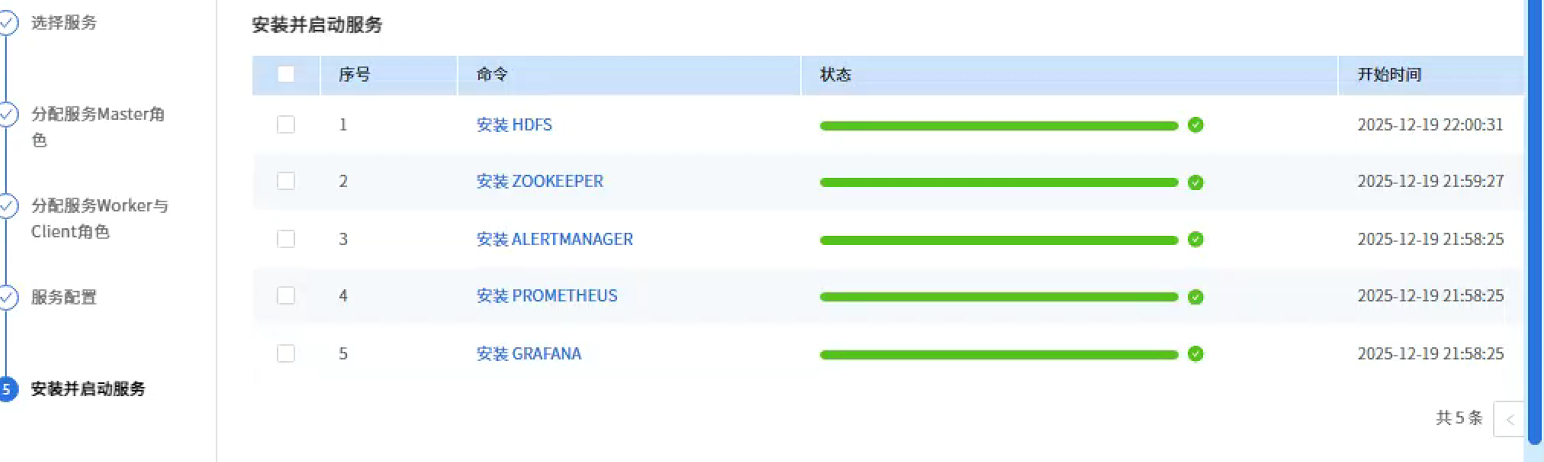

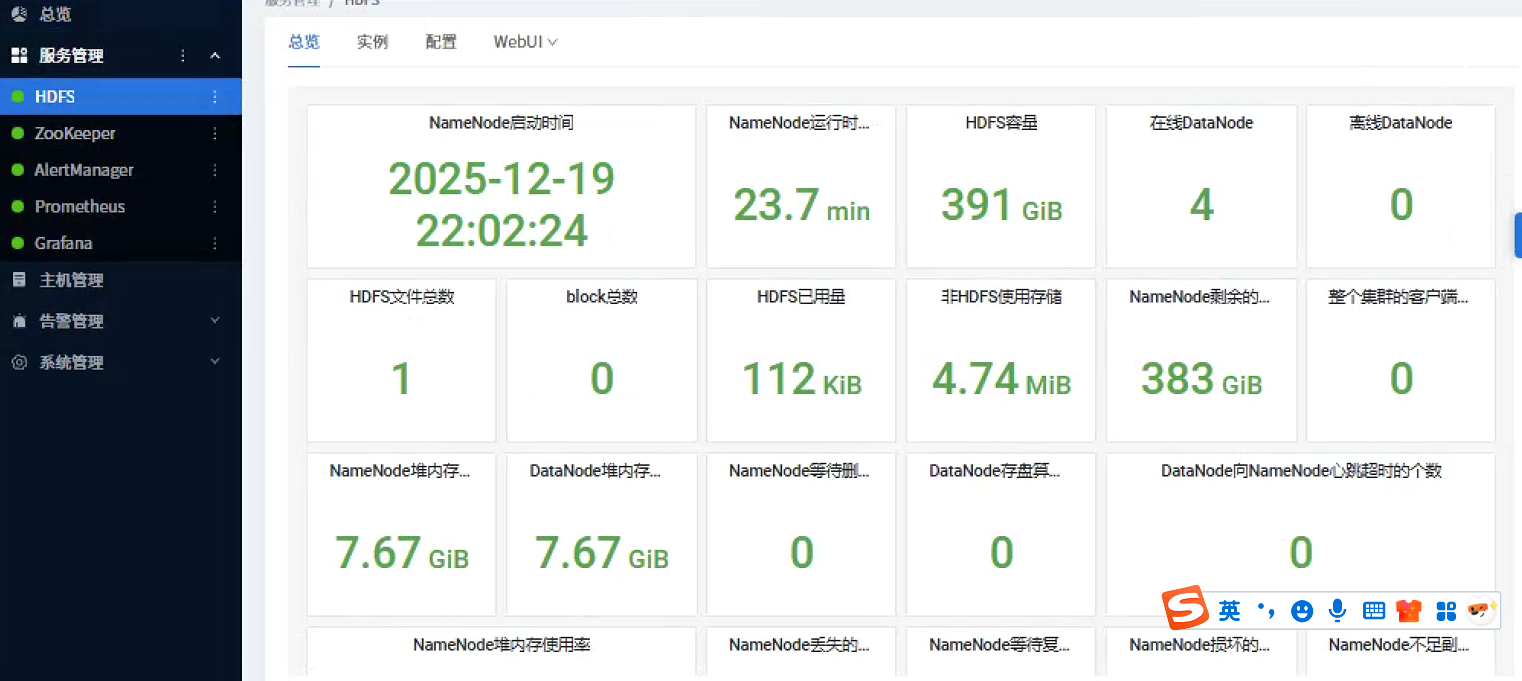

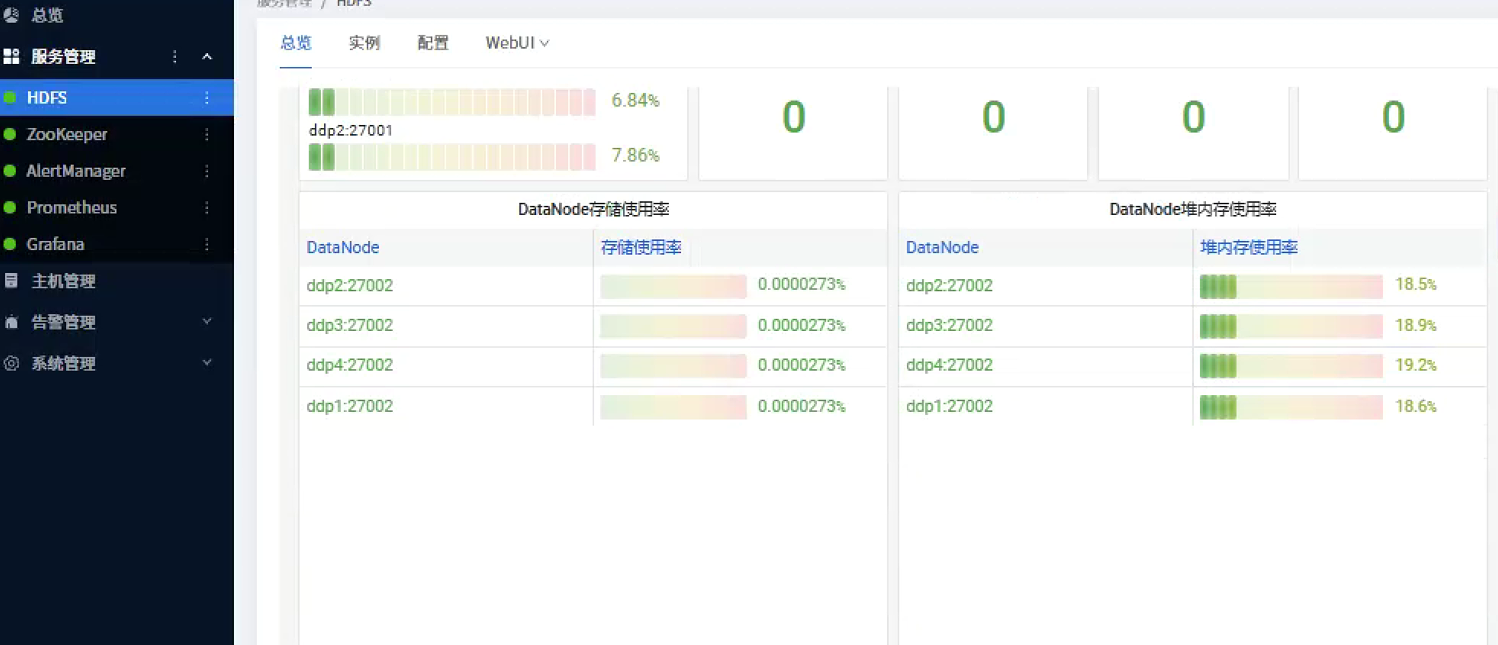

整个过程,有点曲折折磨。有点怀疑人生。幸好,最后总算弄出来了,下面是截图:

To get in touch: lita2lz