Table of Contents

[1. ChatSDK Introduction](#1. ChatSDK介绍)

[2. Getting ChatSDK](#2. ChatSDK获取)

[3. Usage](#3. 使用说明)

[3.1 Class Relationships](#3.1 类关系)

[3.2 Using ChatSDK](#3.2 ChatSDK使用介绍)

[3.3 Quick Start](#3.3 快速上手)

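1. ChatSDK Introduction

ChatSDK is a C++ library for accessing large language models (LLMs). It currently provides:

- Integration with the deepseek-chat, gpt-4o-mini and gemini-2.0-flash models

- Local access to the deepseek-r1:1.5b model through Ollama

- Multi-turn chat, with both complete (non-streaming) and streaming responses

- Session management: listing past sessions and retrieving the messages of a past session

- Persistent storage of session data in SQLite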
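Chat-completion APIs such as DeepSeek's are stateless: the model only sees what is sent in the current request. Multi-turn chat is therefore usually built by keeping a per-session message history and resending it with every request. The sketch below illustrates that idea only; `Message` and `ChatHistory` are hypothetical names, not ChatSDK's API (its real data structures are in `include/common.h`), and how ChatSDK stores history internally is assumed here, not confirmed.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical message type: a role ("system", "user" or "assistant") plus its text.
struct Message {
    std::string role;
    std::string content;
};

// Hypothetical per-session history. A stateless chat API sees only what is sent,
// so every request carries the whole conversation so far.
class ChatHistory {
public:
    // Append the user's turn and return the full message list to send in the request.
    std::vector<Message> buildRequest(const std::string& userText) {
        messages_.push_back({"user", userText});
        return messages_;
    }

    // Record the model's reply so that the next request includes it.
    void addReply(const std::string& assistantText) {
        messages_.push_back({"assistant", assistantText});
    }

    std::size_t size() const { return messages_.size(); }

private:
    std::vector<Message> messages_;
};
```

After one user turn, one reply and a second user turn, `buildRequest` returns three messages (user, assistant, user). Each request grows with the conversation, which is why long sessions consume more tokens.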

2. Getting ChatSDK

```bash
# Private repository (not publicly accessible)
git clone https://gitee.com/zhibite-edu/ai-model-acess-dev.git

# Public repository
git clone https://gitee.com/zhibite-edu/ai-model-acess-tech.git

# Public repository
git clone https://gitee.com/Axurea/chat-sdk.git
```

Directory structure:

```text
ChatSDK/
├── include
│   ├── ChatGPTProvider.h
│   ├── ChatSDK.h
│   ├── common.h
│   ├── DataManager.h
│   ├── DeepSeekProvider.h
│   ├── GeminiProvider.h
│   ├── LLMManager.h
│   ├── LLMProvider.h
│   ├── OllamaLLMProvider.h
│   ├── SessionManager.h
│   └── util
│       └── myLog.h
└── src
    ├── ChatGPTProvider.cpp
    ├── ChatSDK.cpp
    ├── DataManager.cpp
    ├── DeepSeekProvider.cpp
    ├── GeminiProvider.cpp
    ├── LLMManager.cpp
    ├── OllamaLLMProvider.cpp
    ├── SessionManager.cpp
    └── util
        └── myLog.cpp
```

Enter the `sdk` directory and run the following commands:

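```bash
mkdir build && cd build   # create the build directory and enter it
cmake ..                  # configure the project and generate the Makefile
make                      # compile ChatSDK into the static library libai_chat_sdk.a
sudo make install         # install the static library and header files under /usr/local
```

The complete terminal session, including output: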

```bash
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk$ mkdir build && cd build
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk/build$ cmake ..
CMake Warning (dev) in CMakeLists.txt:
  No project() command is present.  The top-level CMakeLists.txt file must
  contain a literal, direct call to the project() command.  Add a line of
  code such as

    project(ProjectName)

  near the top of the file, but after cmake_minimum_required().

  CMake is pretending there is a "project(Project)" command on the first
  line.
This warning is for project developers.  Use -Wno-dev to suppress it.

-- The C compiler identification is GNU 11.4.0
-- The CXX compiler identification is GNU 11.4.0
-- Detecting C compiler ABI info
-- Detecting C compiler ABI info - done
-- Check for working C compiler: /usr/bin/cc - skipped
-- Detecting C compile features
-- Detecting C compile features - done
-- Detecting CXX compiler ABI info
-- Detecting CXX compiler ABI info - done
-- Check for working CXX compiler: /usr/bin/c++ - skipped
-- Detecting CXX compile features
-- Detecting CXX compile features - done
-- Found OpenSSL: /usr/lib/x86_64-linux-gnu/libcrypto.so (found version "3.0.2")
-- Configuring done
-- Generating done
-- Build files have been written to: /home/ubuntu/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk/build
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk/build$ make
[ 10%] Building CXX object CMakeFiles/ai_chat_sdk.dir/src/ChatSDK.cpp.o
[ 20%] Building CXX object CMakeFiles/ai_chat_sdk.dir/src/DataManager.cpp.o
[ 30%] Building CXX object CMakeFiles/ai_chat_sdk.dir/src/DeepSeekProvider.cpp.o
[ 40%] Building CXX object CMakeFiles/ai_chat_sdk.dir/src/GeminiProvider.cpp.o
[ 50%] Building CXX object CMakeFiles/ai_chat_sdk.dir/src/LLMManager.cpp.o
[ 60%] Building CXX object CMakeFiles/ai_chat_sdk.dir/src/OllamaLLMProvider.cpp.o
[ 70%] Building CXX object CMakeFiles/ai_chat_sdk.dir/src/SessionManager.cpp.o
[ 80%] Building CXX object CMakeFiles/ai_chat_sdk.dir/src/util/myLog.cpp.o
[ 90%] Linking CXX static library libai_chat_sdk.a
[100%] Built target ai_chat_sdk
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk/build$ ls
CMakeCache.txt  CMakeFiles  cmake_install.cmake  libai_chat_sdk.a  Makefile
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk/build$ sudo make install
Consolidate compiler generated dependencies of target ai_chat_sdk
[100%] Built target ai_chat_sdk
Install the project...
-- Install configuration: "Debug"
-- Installing: /usr/local/lib/libai_chat_sdk.a
-- Installing: /usr/local/include/ai_chat_sdk
-- Installing: /usr/local/include/ai_chat_sdk/SessionManager.h
-- Installing: /usr/local/include/ai_chat_sdk/util
-- Installing: /usr/local/include/ai_chat_sdk/util/myLog.h
-- Installing: /usr/local/include/ai_chat_sdk/DataManager.h
-- Installing: /usr/local/include/ai_chat_sdk/DeepSeekProvider.h
-- Installing: /usr/local/include/ai_chat_sdk/common.h
-- Installing: /usr/local/include/ai_chat_sdk/OllamaLLMProvider.h
-- Installing: /usr/local/include/ai_chat_sdk/ChatGPTProvider.h
-- Installing: /usr/local/include/ai_chat_sdk/GeminiProvider.h
-- Installing: /usr/local/include/ai_chat_sdk/LLMProvider.h
-- Installing: /usr/local/include/ai_chat_sdk/ChatSDK.h
-- Installing: /usr/local/include/ai_chat_sdk/LLMManager.h
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk/build$ ls /usr/local/
bin  etc  games  include  lib  man  qcloud  sbin  share  src
# include/ holds the header files; lib/ holds the static library
# After the SDK is built, make install copies its headers into /usr/local/include/ai_chat_sdk
# and the static library into /usr/local/lib
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk/build$ ls /usr/local/include
ai_chat_sdk
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk/build$ ls /usr/local/include/ai_chat_sdk/
ChatGPTProvider.h  common.h       DeepSeekProvider.h  LLMManager.h   OllamaLLMProvider.h  util
ChatSDK.h          DataManager.h  GeminiProvider.h    LLMProvider.h  SessionManager.h
# Static library
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk/build$ ls /usr/local/lib/
libai_chat_sdk.a  python3.10
```

How does `make install` know which files to install, and where to put them? This is specified in the SDK's `CMakeLists.txt`:

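```bash
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk/build$ cd ..
ubuntu@VM-12-14-ubuntu:~/ChatSDK/SDK-source/ai-model-acess-tech/AIModelAcessTech/sdk$ cat CMakeLists.txt
# Minimum required CMake version
cmake_minimum_required(VERSION 3.10)
# Set the SDK name
set(SDK_NAME "ai_chat_sdk")
# Set the C++ standard
set(CMAKE_CXX_STANDARD 17)
set(CMAKE_CXX_STANDARD_REQUIRED ON)
# Set the build type to Debug
set(CMAKE_BUILD_TYPE Debug)
# Collect the source files
file(GLOB_RECURSE SDK_SOURCES "src/*.cpp")
file(GLOB_RECURSE SDK_HEADERS "include/*.h")
# Build a static library
add_library(${SDK_NAME} STATIC ${SDK_SOURCES} ${SDK_HEADERS})
# Set the output directory
set(EXECUTABLE_OUTPUT_PATH ${CMAKE_CURRENT_SOURCE_DIR}/build)
# Define httplib's CPPHTTPLIB_OPENSSL_SUPPORT macro
target_compile_definitions(${SDK_NAME} PUBLIC CPPHTTPLIB_OPENSSL_SUPPORT)
target_include_directories(${SDK_NAME} PUBLIC ${CMAKE_CURRENT_SOURCE_DIR}/include)
# Set the library search directory
link_directories(/usr/local/lib)
find_package(OpenSSL REQUIRED)
include_directories(${OPENSSL_INCLUDE_DIR})
# Add the CPPHTTPLIB_OPENSSL_SUPPORT definition
target_compile_definitions(${SDK_NAME} PRIVATE CPPHTTPLIB_OPENSSL_SUPPORT)
# Link libraries
target_link_libraries(${SDK_NAME} PUBLIC jsoncpp fmt spdlog sqlite3 OpenSSL::SSL OpenSSL::Crypto)
# Install rule: copy the static library to the system directory /usr/local/lib
install(TARGETS ${SDK_NAME}
    ARCHIVE DESTINATION lib
)
# Install rule: copy the header files to the system directory /usr/local/include/ai_chat_sdk
install(DIRECTORY ${CMAKE_CURRENT_SOURCE_DIR}/include/
    DESTINATION include/ai_chat_sdk
    FILES_MATCHING PATTERN "*.h"
)
```

Both `install()` rules use relative destinations, which CMake resolves against `CMAKE_INSTALL_PREFIX` (`/usr/local` by default on Linux); this is why the library ends up in `/usr/local/lib` and the headers in `/usr/local/include/ai_chat_sdk`. The file also lacks a `project()` call, which is what causes the `No project() command is present` warning during `cmake ..`; as the warning suggests, adding a line such as `project(ai_chat_sdk)` right after `cmake_minimum_required()` removes it.

3. Usage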

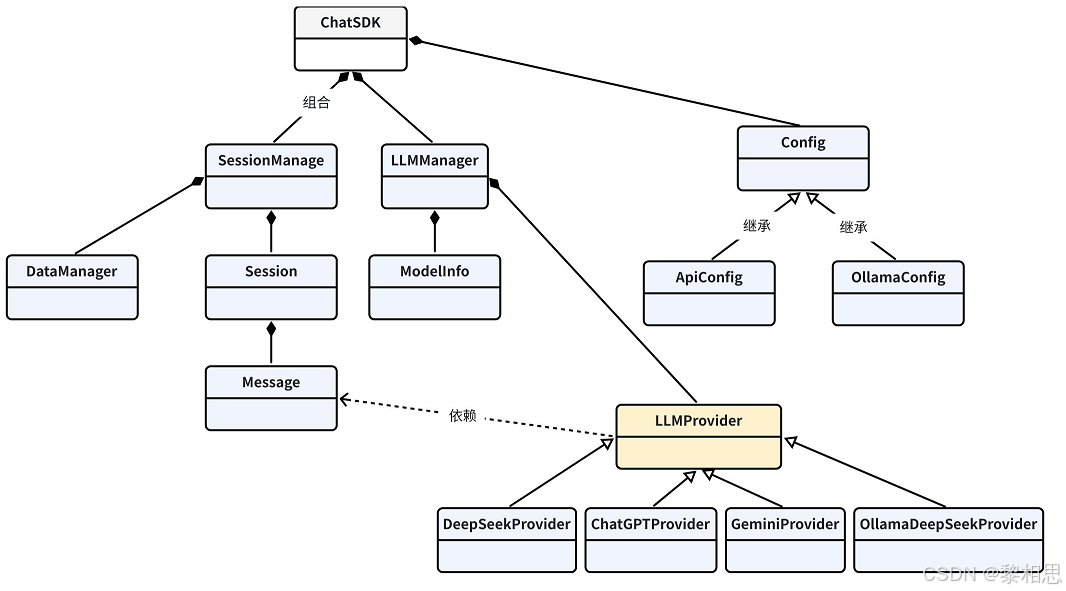

3.1 Class Relationships
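Judging only from the header names in `include/` (`LLMProvider.h`, `DeepSeekProvider.h`, `ChatGPTProvider.h`, `GeminiProvider.h`, `OllamaLLMProvider.h`, `LLMManager.h`), the SDK appears to use a provider abstraction: each model backend implements a common interface, and a manager routes each request to the backend registered for a model name, with `SessionManager` and `DataManager` presumably handling sessions and SQLite persistence. The sketch below shows that pattern under this assumption; `LLMProviderSketch`, `EchoProvider` and `ProviderRegistry` are hypothetical names, and the real classes' members may differ.

```cpp
#include <map>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical interface that every model backend would implement.
class LLMProviderSketch {
public:
    virtual ~LLMProviderSketch() = default;
    virtual std::string modelName() const = 0;
    virtual std::string sendMessage(const std::string& prompt) = 0;
};

// Hypothetical stand-in backend that echoes the prompt (no network access).
class EchoProvider : public LLMProviderSketch {
public:
    explicit EchoProvider(std::string name) : name_(std::move(name)) {}
    std::string modelName() const override { return name_; }
    std::string sendMessage(const std::string& prompt) override {
        return "[" + name_ + "] " + prompt;
    }

private:
    std::string name_;
};

// Hypothetical manager: owns the providers and routes each request by model name.
class ProviderRegistry {
public:
    void registerProvider(std::unique_ptr<LLMProviderSketch> provider) {
        const std::string name = provider->modelName();
        providers_[name] = std::move(provider);
    }

    bool hasModel(const std::string& name) const { return providers_.count(name) > 0; }

    std::string send(const std::string& model, const std::string& prompt) {
        auto it = providers_.find(model);
        if (it == providers_.end()) {
            throw std::invalid_argument("unknown model: " + model);
        }
        return it->second->sendMessage(prompt);
    }

private:
    std::map<std::string, std::unique_ptr<LLMProviderSketch>> providers_;
};
```

In this pattern, supporting another backend means writing one more subclass and registering it; code that calls the manager does not need to change.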

3.2 Using ChatSDK

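Programs use the ChatSDK library mainly through the `ChatSDK` class. Its members are described below.

```text
Header : #include <ai_chat_sdk/ChatSDK.h>
CMake  : target_link_libraries({ProjName} PRIVATE ai_chat_sdk)
```

Because only the static library `libai_chat_sdk.a` is installed (without exported CMake targets), a consuming project must also link the SDK's dependencies (jsoncpp, fmt, spdlog, sqlite3 and OpenSSL), as the demo `CMakeLists.txt` in section 3.3 does.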

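```cpp
// Public member functions of ai_chat_sdk::ChatSDK

bool initModels(const std::vector<std::shared_ptr<Config>>& configs)
// Purpose:   initialize the supported models
// Parameter: configs - configuration parameters for every model to be enabled
// Returns:   true on success, false otherwise

std::string createSession(const std::string& modelName)
// Purpose:   create a session for the model modelName
// Parameter: modelName - model name
// Returns:   the session ID

std::shared_ptr<Session> getSession(const std::string& sessionId)
// Purpose:   get the session identified by sessionId
// Parameter: sessionId - session ID
// Returns:   the session information for sessionId

std::vector<std::string> getSessionList()
// Purpose:   get all sessions
// Returns:   the list of all sessions

std::vector<ModelInfo> getAvailableModels()
// Purpose:   get all supported models
// Returns:   information on every supported model

bool deleteSession(const std::string& sessionId)
// Purpose:   delete the session identified by sessionId
// Parameter: sessionId - session ID
// Returns:   true on success, false otherwise

std::string sendMessage(const std::string sessionId, const std::string& message)
// Purpose:   send a message to the model; the complete reply is returned at once,
//            after the model has finished generating it
// Parameters:
//   sessionId - session ID
//   message   - content of the message sent to the model
// Returns:   the model's complete reply

std::string sendMessageStream(const std::string sessionId, const std::string& message,
                              std::function<void(const std::string&, bool)> callback)
// Purpose:   send a message to the model and receive the reply piece by piece
//            as it is generated (streaming response)
// Parameters:
//   sessionId - session ID
//   message   - content of the message sent to the model
//   callback  - user callback that handles each piece of the reply; its bool argument
//               is true once the reply is complete
// Returns:   the model's complete reply
```

For the related data structures, see `sdk/include/common.h`.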
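According to the descriptions above, `sendMessageStream` invokes the callback repeatedly while the reply is being generated and also returns the complete reply. The sketch below simulates that contract offline: the hypothetical `fakeStream` function cuts a canned reply into fixed-size pieces, passes each piece to a callback with the same `std::function<void(const std::string&, bool)>` signature, sets the `bool` to `true` on the last piece, and returns the full text. That ChatSDK passes incremental pieces (rather than the text accumulated so far) is an assumption.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <string>

// Same callback shape as in the sendMessageStream signature: (piece, done).
using StreamCallback = std::function<void(const std::string&, bool)>;

// Hypothetical stand-in for a streaming request: delivers `reply` in pieces of
// `chunkSize` bytes, sets done == true on the last piece, then returns the full reply.
// It splits by bytes for simplicity; a real stream delivers whole tokens, so this
// could cut a multi-byte UTF-8 character in half.
std::string fakeStream(const std::string& reply, std::size_t chunkSize,
                       const StreamCallback& callback) {
    if (chunkSize == 0) {
        chunkSize = 1;  // guard against an infinite loop
    }
    if (reply.empty()) {
        callback("", true);  // still signal completion for an empty reply
        return reply;
    }
    for (std::size_t pos = 0; pos < reply.size(); pos += chunkSize) {
        const std::size_t len = std::min(chunkSize, reply.size() - pos);
        const bool done = (pos + len == reply.size());
        callback(reply.substr(pos, len), done);
    }
    return reply;
}
```

A caller typically appends each piece to a buffer or prints it immediately, as the quick-start callback does, and treats `done == true` as the end of the reply.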

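3.3 Quick Start

```cpp
#include <cstdlib>
#include <iostream>
#include <limits>
#include <memory>
#include <string>
#include <vector>

#include <ai_chat_sdk/ChatSDK.h>
#include <ai_chat_sdk/util/myLog.h>

// Read one line from the user and send it to the model as a streaming request.
void sendMessageStream(ai_chat_sdk::ChatSDK &chatSDK, const std::string &sessionId)
{
    std::cout << "--------------Send message--------------" << std::endl;
    std::cout << "user> ";
    std::string message;
    std::getline(std::cin, message);
    std::cout << "--------------Message sent--------------" << std::endl;

    // The callback is invoked as the reply is generated; done == true marks the end.
    chatSDK.sendMessageStream(sessionId, message, [](const std::string &response, bool done)
    {
        std::cout << "assistant: " << response << std::endl;
        if (done) {
            std::cout << "--------------Reply received--------------" << std::endl;
        }
    });
}

int main()
{
    aurora::Logger::initLogger("aiChatServer", "stdout", spdlog::level::info);
    ai_chat_sdk::ChatSDK chatSDK;

    // Configure the deepseek model; the API key comes from the deepseek_apikey
    // environment variable. std::getenv returns nullptr if it is unset.
    const char *apiKey = std::getenv("deepseek_apikey");
    if (apiKey == nullptr) {
        std::cerr << "Environment variable deepseek_apikey is not set" << std::endl;
        return 1;
    }
    ai_chat_sdk::APIConfig deepseekConfig;
    deepseekConfig._apikey = apiKey;
    deepseekConfig._temperature = 0.7;
    deepseekConfig._maxTokens = 2048;
    deepseekConfig._modelName = "deepseek-chat";

    std::vector<std::shared_ptr<ai_chat_sdk::Config>> configs;
    configs.push_back(std::make_shared<ai_chat_sdk::APIConfig>(deepseekConfig));

    // Initialize the models; initModels returns false on failure.
    if (!chatSDK.initModels(configs)) {
        std::cerr << "Failed to initialize models" << std::endl;
        return 1;
    }

    std::cout << "--------------Create session--------------" << std::endl;
    std::string sessionId = chatSDK.createSession("deepseek-chat");
    std::cout << "Session created, session ID: " << sessionId << std::endl;

    int userOP = 1;
    while (true)
    {
        std::cout << "-------1. send message 0. exit-----------------" << std::endl;
        // Exit on "0", on non-numeric input or on end of input.
        if (!(std::cin >> userOP) || userOP == 0)
        {
            break;
        }
        // Discard the rest of the line (including '\n') so getline() reads the next message.
        std::cin.ignore(std::numeric_limits<std::streamsize>::max(), '\n');
        sendMessageStream(chatSDK, sessionId);
    }

    std::cout << "--------------Program exit--------------" << std::endl;
    return 0;
}
```

In the source directory, create a `CMakeLists.txt` file with the following build rules: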

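```cmake
# Minimum required CMake version (same as the SDK)
cmake_minimum_required(VERSION 3.10)
# The project is named AIChatDemo
project(AIChatDemo)
# Compile with C++17; REQUIRED makes configuration fail if the compiler does not support C++17
set(CMAKE_CXX_STANDARD 17)
set(CMAKE_CXX_STANDARD_REQUIRED ON)
# Set the build type to Debug
set(CMAKE_BUILD_TYPE Debug)
# Library search directory where the linker looks for the ai_chat_sdk library.
# link_directories() only affects targets created after it, so it comes before add_executable().
link_directories(/usr/local/lib)
# Add the executable
add_executable(AIChatDemo main.cpp)
# Find the OpenSSL package
find_package(OpenSSL REQUIRED)
# Add the OpenSSL header directory to the compiler's search path
include_directories(${OPENSSL_INCLUDE_DIR})
# Link the SDK and its dependencies
target_link_libraries(AIChatDemo PRIVATE
    ai_chat_sdk
    fmt
    jsoncpp
    OpenSSL::SSL
    OpenSSL::Crypto
    gflags
    spdlog
    sqlite3
)
```

Build commands: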

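```bash
# Create the build directory and enter it
mkdir build && cd build
# Generate the build system files
cmake ..
# Compile the program
make
# Run the demo; it reads the DeepSeek API key from the deepseek_apikey environment variable
export deepseek_apikey="your-api-key"
./AIChatDemo
```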