1 Environment setup

YOLO repository

https://gitee.com/monkeycc/ultralytics#https://gitee.com/link?target=https%3A%2F%2Fgithub.com%2Fultralytics%2Fassets%2Freleases%2Fdownload%2Fv8.3.0%2Fyolo11s.pt

Check versions

bash

C:\Users\Administrator>python -V

Python 3.10.4

C:\Users\Administrator>pip show torch

Name: torch

Version: 1.13.1

Summary: Tensors and Dynamic neural networks in Python with strong GPU acceleration

Home-page: https://pytorch.org/

Author: PyTorch Team

Author-email: packages@pytorch.org

License: BSD-3

Location: e:\2019_software\python\install_file\lib\site-packages

Requires: typing-extensions

Required-by: thop, torchvision, ultralytics, ultralytics-thop
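The same version check can be scripted, which is handy when comparing machines. The sketch below uses only the standard library; the helper names `installed_version` and `report` are illustrative and not part of any package, and the default package list simply mirrors the `pip show` output above.

```python
import sys
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a package, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def report(packages=("torch", "torchvision", "ultralytics")):
    """Build one line for the interpreter and one line per package."""
    v = sys.version_info
    lines = [f"Python {v.major}.{v.minor}.{v.micro}"]
    for name in packages:
        version = installed_version(name)
        lines.append(f"{name}: {version if version else 'not installed'}")
    return lines


if __name__ == "__main__":
    print("\n".join(report()))
```

Run it with the same interpreter that will run the training script, since each Python installation has its own site-packages.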

Upgrade pip

bash

python -m pip install --upgrade pip

Install

bash

pip install ultralytics --target F:\test\yolo\package

Download the model

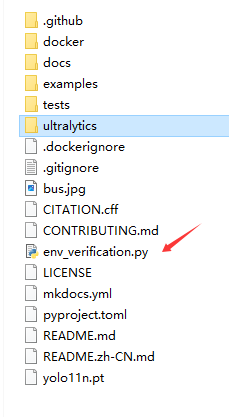

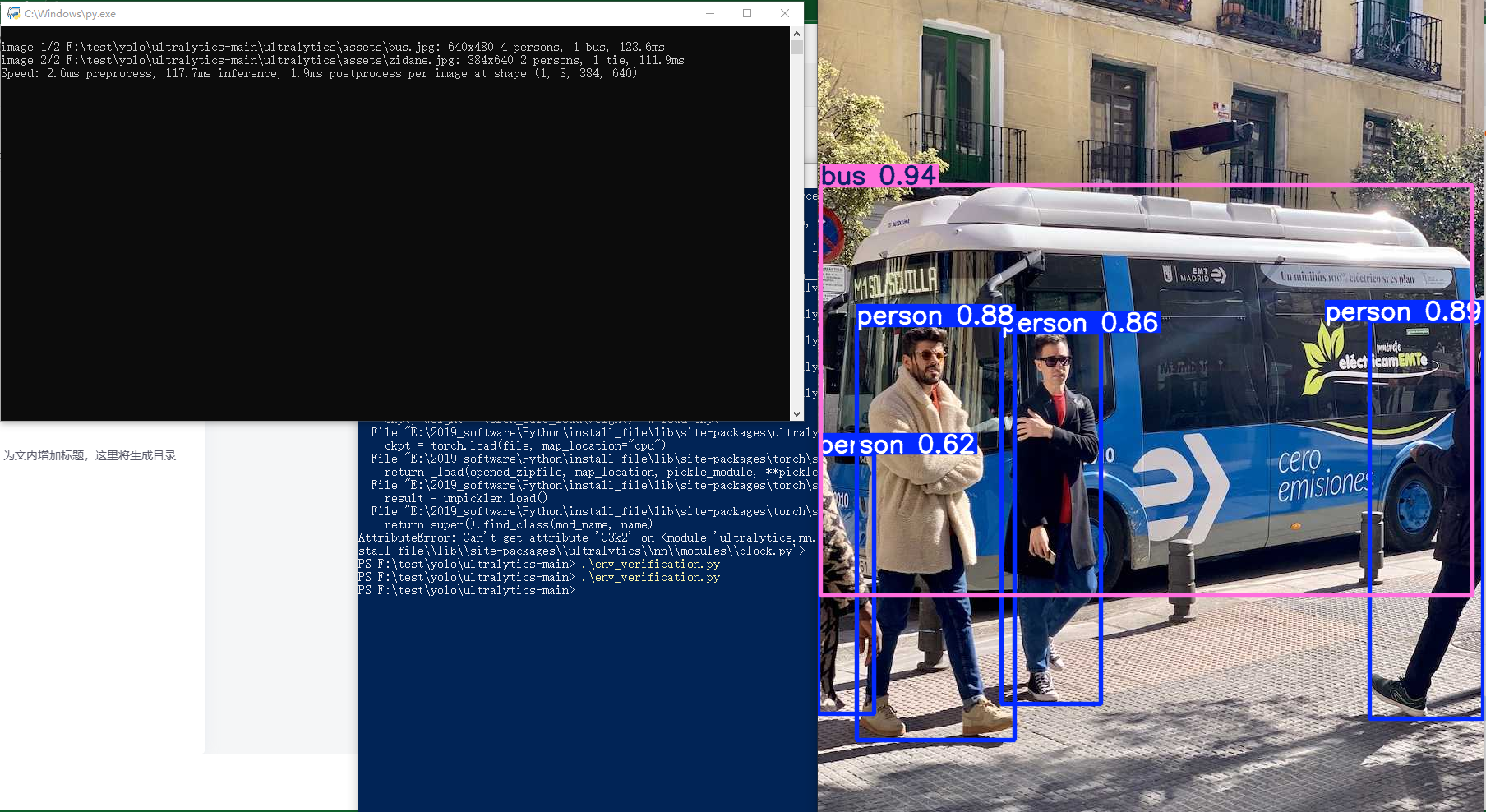
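If the weights are fetched by hand, the Gitee redirect link shown at the top of this section can be resolved to the underlying GitHub release URL. The following is a minimal sketch using only the standard library; `resolve_target` and `download_weights` are illustrative names, and the link is the redirect part of the URL quoted earlier. As far as I know, `YOLO("yolo11n.pt")` also downloads missing weights on first use, so a manual download is mainly useful when that automatic download fails.

```python
from urllib.parse import parse_qs, urlsplit
from urllib.request import urlretrieve

# Redirect link taken from the URL at the top of this section.
GITEE_LINK = (
    "https://gitee.com/link?target=https%3A%2F%2Fgithub.com%2Fultralytics"
    "%2Fassets%2Freleases%2Fdownload%2Fv8.3.0%2Fyolo11s.pt"
)


def resolve_target(link):
    """Extract and percent-decode the 'target' query parameter of a redirect link."""
    query = parse_qs(urlsplit(link).query)
    return query["target"][0]


def download_weights(link, dest=None):
    """Download the file behind the redirect link; returns the local file name."""
    url = resolve_target(link)
    dest = dest or url.rsplit("/", 1)[-1]  # default to the file name in the URL
    urlretrieve(url, dest)
    return dest


if __name__ == "__main__":
    # Only prints the resolved URL; call download_weights(GITEE_LINK) to fetch the file.
    print(resolve_target(GITEE_LINK))
```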

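In the ultralytics-main directory, create a Python file named env_verification.py. If you create it in a different directory, adjust the image path accordingly; the bundled sample directory is used here.

Add the following code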

python

from ultralytics import YOLO
import cv2

# Load a pretrained YOLO11n model
model = YOLO("yolo11n.pt")

# Run object detection on every image in the bundled sample directory
results = model("ultralytics/assets/")

# Show the results one image at a time
for result in results:
    annotated_frame = result.plot()  # image with boxes and labels drawn on it

    # Display with OpenCV
    cv2.imshow("YOLOv11 Result", annotated_frame)
    cv2.waitKey(0)  # wait for a key press; 0 means wait indefinitely

cv2.destroyAllWindows()

Then run it from that directory

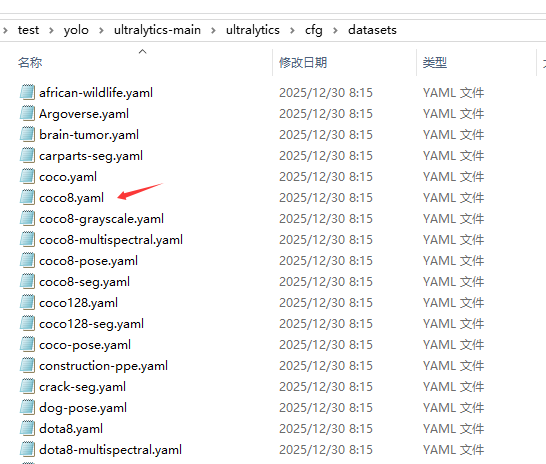

2 Training

Copy the dataset configuration file (coco8.yaml) to the main project path

Modify the file

yaml

# Ultralytics 🚀 AGPL-3.0 License - https://ultralytics.com/license

# COCO8 dataset (first 8 images from COCO train2017) by Ultralytics

# Documentation: https://docs.ultralytics.com/datasets/detect/coco8/

# Example usage: yolo train data=coco8.yaml

# parent
# ├── ultralytics
# └── datasets
#     └── coco8 ← downloads here (1 MB)

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]
path: F:/test/yolo/ultralytics-main/datasets # dataset root directory; an absolute path is recommended
train: images/train # directory containing the training images
val: images/val # directory containing the validation images
test: # test images (optional)

# Classes
names:
  0: apple

# Download script/URL (optional)
download: https://github.com/ultralytics/assets/releases/download/v0.0.0/coco8.zip

Pick a folder and create the following directory layout in it

python

│ │ ├── images/

│ │ │ ├── train/

│ │ │ └── val/

│ │ └── labels/

│ │ ├── train/

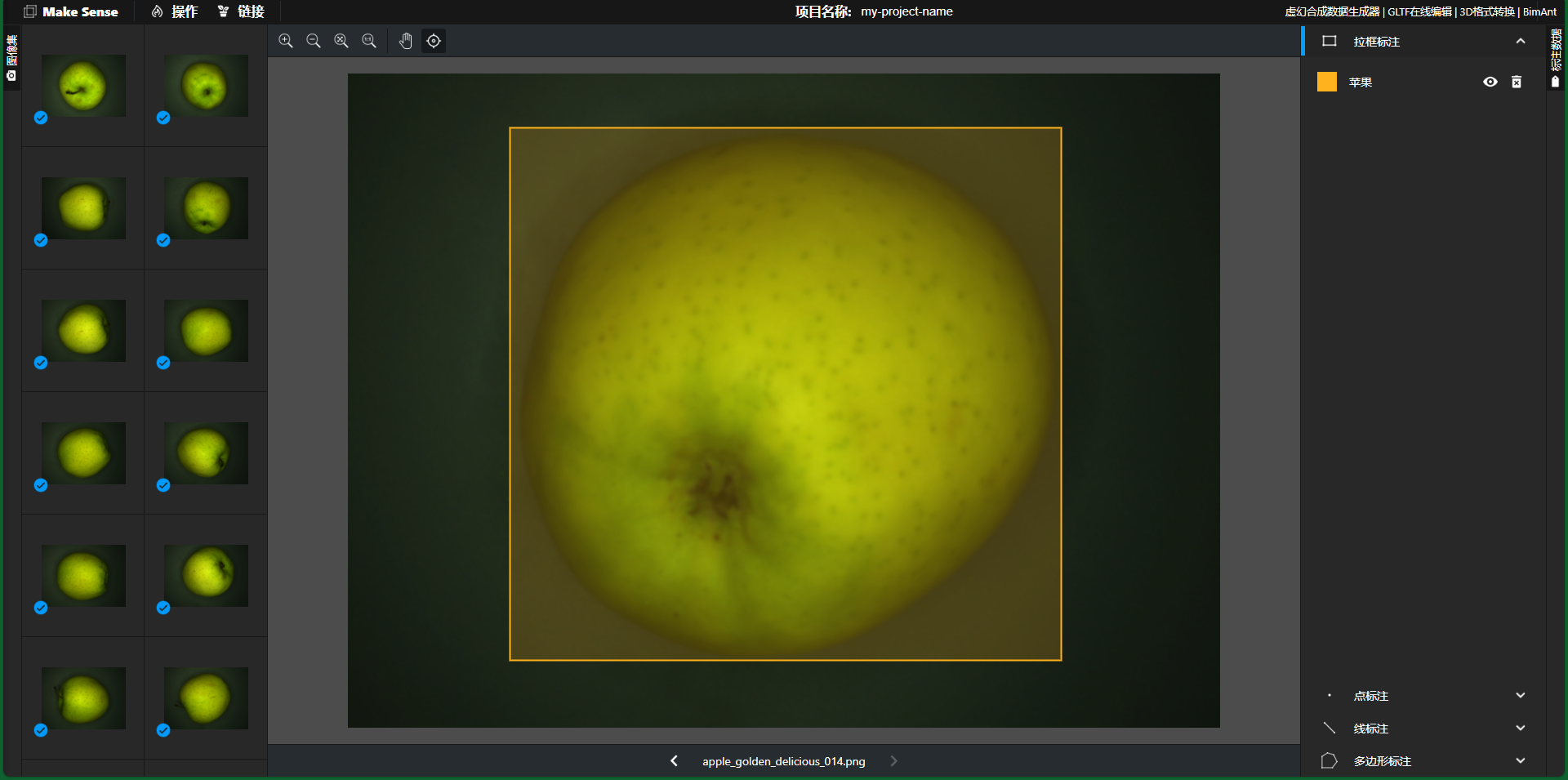

│ │ └── val/进入在线标注网站

https://makesense.bimant.com/

Annotate the images

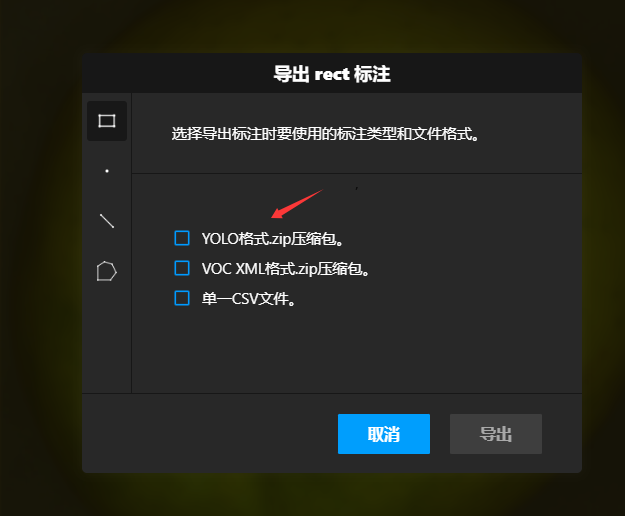

Export the labels

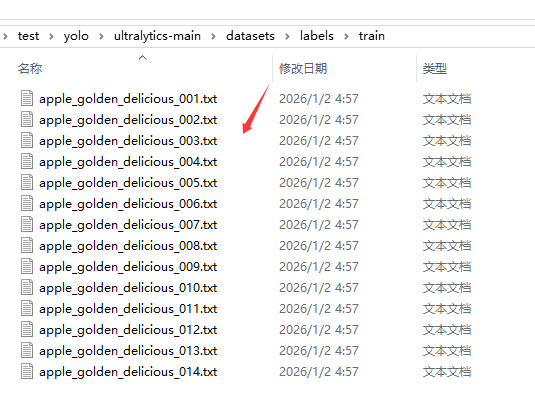

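After unzipping, place the label files in the labels directory of the dataset (labels/train and labels/val), next to the matching images directories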
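Before training, it can help to check that the folder layout and the exported labels line up. The sketch below assumes the standard YOLO detection label format (one `class x_center y_center width height` line per box, with coordinates normalized to the range 0 to 1) and a single class, as in the YAML above. The function names are illustrative and not part of ultralytics.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def ensure_layout(root):
    """Create images/{train,val} and labels/{train,val} under root if they are missing."""
    root = Path(root)
    for kind in ("images", "labels"):
        for split in ("train", "val"):
            (root / kind / split).mkdir(parents=True, exist_ok=True)
    return root


def check_label_line(line, num_classes):
    """Return an error message for a malformed label line, or None if the line is fine."""
    parts = line.split()
    if len(parts) != 5:
        return f"expected 5 fields, got {len(parts)}"
    try:
        class_id = int(parts[0])
        coords = [float(p) for p in parts[1:]]
    except ValueError:
        return "fields must be an integer class id followed by four numbers"
    if not 0 <= class_id < num_classes:
        return f"class id {class_id} out of range"
    if any(not 0.0 <= c <= 1.0 for c in coords):
        return "coordinates must be normalized to [0, 1]"
    return None


def check_split(root, split, num_classes=1):
    """List problems for one split: images without a label file and malformed label lines."""
    root = Path(root)
    problems = []
    for image in sorted((root / "images" / split).iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        label = root / "labels" / split / (image.stem + ".txt")
        if not label.exists():
            problems.append(f"{image.name}: no label file")
            continue
        for n, line in enumerate(label.read_text().splitlines(), start=1):
            if not line.strip():
                continue  # ignore blank lines
            error = check_label_line(line, num_classes)
            if error:
                problems.append(f"{label.name}:{n}: {error}")
    return problems
```

A typical call would be `check_split("F:/test/yolo/ultralytics-main/datasets", "train")`. An image without a label file is not necessarily an error, since it can serve as a background image, but it is reported so that a forgotten annotation is easy to spot.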

运行训练程序

python

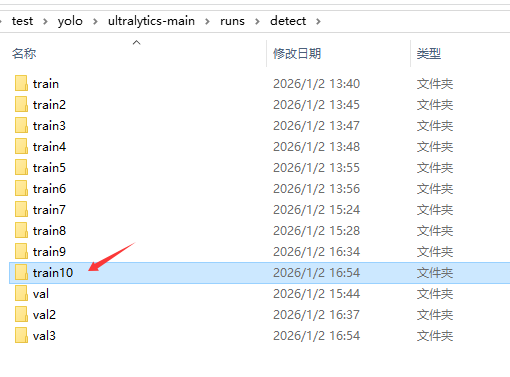

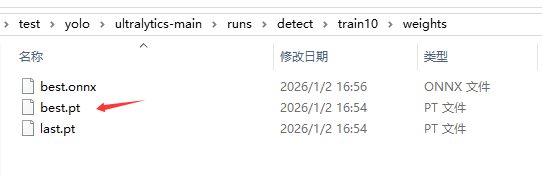

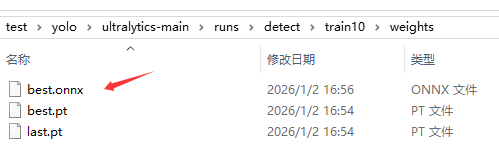

.\train2.py训练完成会在runs\detect生成一个文件夹

这个就是模型文件
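The contents of train2.py are not shown in this walkthrough. The following is a minimal sketch of what such a script might look like, not the author's actual file: the YAML file name `coco8.yaml`, the base weights `yolo11n.pt`, and the `epochs` and `imgsz` values are all assumptions to adjust.

```python
from pathlib import Path

DATA_YAML = Path("coco8.yaml")  # assumed name of the dataset YAML copied to the project root
BASE_WEIGHTS = "yolo11n.pt"     # assumed pretrained weights to fine-tune from


def train(data_yaml=DATA_YAML, epochs=100, imgsz=640):
    """Train a detection model on the dataset described by data_yaml.

    Returns None without training if the YAML file does not exist.
    """
    data_yaml = Path(data_yaml)
    if not data_yaml.is_file():
        print(f"Dataset config not found: {data_yaml}")
        return None

    # Imported here so the path check above works even without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(BASE_WEIGHTS)
    return model.train(data=str(data_yaml), epochs=epochs, imgsz=imgsz)


if __name__ == "__main__":
    # The __main__ guard matters on Windows, where data-loader worker
    # processes re-import this module.
    train()
```

Each run writes its outputs to a new numbered folder under runs\detect, which is why the export step later refers to a path such as train10.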

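To use the model from C++, it has to be converted first: the trained model is a .pt file, which cannot be loaded directly there, so it must be exported to ONNX format.

First install the dependencies

bash

pip install onnx==1.17.0 # my setup required 'onnx>=1.12.0,<1.18.0'; if the export script reports an error, check which version it asks for
pip install onnxslim
pip install onnxruntime

In the project root, create a file named pt_to_onnx.py with the following conversion code: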

python

from ultralytics import YOLO

# Load a trained model
model = YOLO("runs/detect/train10/weights/best.pt")

path = model.export(format="onnx")  # returns the path of the exported model
print(f"Exported to: {path}")

Then run the script; it generates the file shown below

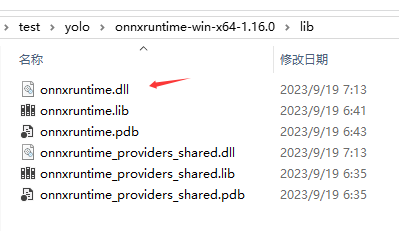
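Before moving to C++, the exported file can be sanity-checked from Python with the onnxruntime package installed above. This is a sketch under assumptions: the path `runs/detect/train10/weights/best.onnx` assumes the export landed next to `best.pt`, and `describe_onnx` is an illustrative helper name.

```python
from pathlib import Path


def describe_onnx(model_path):
    """Return the input and output names and shapes of an ONNX model, or None if the file is missing."""
    model_path = Path(model_path)
    if not model_path.is_file():
        return None

    # Imported here so the missing-file case works without onnxruntime installed.
    import onnxruntime as ort

    session = ort.InferenceSession(str(model_path), providers=["CPUExecutionProvider"])
    return {
        "inputs": [(i.name, i.shape) for i in session.get_inputs()],
        "outputs": [(o.name, o.shape) for o in session.get_outputs()],
    }


if __name__ == "__main__":
    info = describe_onnx("runs/detect/train10/weights/best.onnx")
    print(info if info else "ONNX file not found; run the export first")
```

The reported input shape should agree with the image size configured on the C++ side later (640 x 640 in this walkthrough).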

3 Qt推理测试

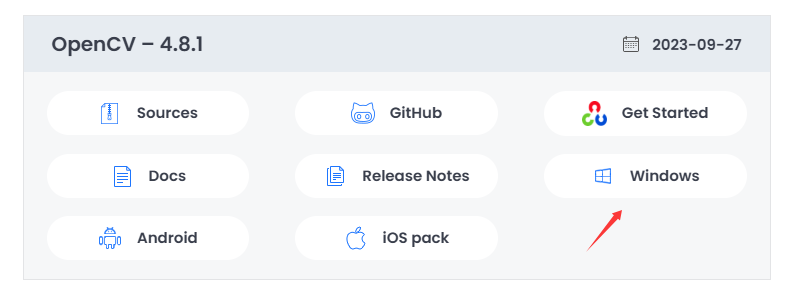

下载OPENCV

下载

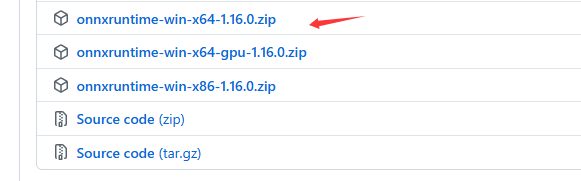

cpp

https://github.com/microsoft/onnxruntime/releases/tag/v1.16.0

下载解压得到库

pro配置路径

python

INCLUDEPATH += F:/2022_software/OpenCV/4_8_1/src1/build/install/include/

INCLUDEPATH += F:/2022_software/OpenCV/4_8_1/src1/build/install/include/opencv2/

LIBS += -LF:/2022_software/OpenCV/4_8_1/src1/build/install/x64/vc16/lib -lopencv_world481

INCLUDEPATH += F:\test\yolo\onnxruntime-win-x64-1.16.0\include

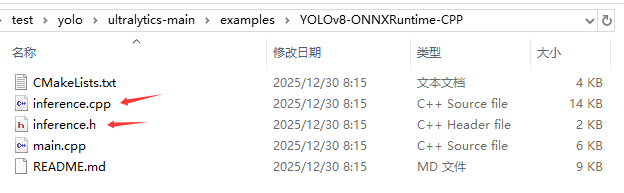

LIBS += -LF:/test/yolo/onnxruntime-win-x64-1.16.0/lib -lonnxruntime将这个两个文件复制并添加到项目中

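Make the following change in inference.h

cpp

std::vector<std::string> classes{"apple"};

Then add a new function

cpp

// Return the class name for a class id, or "Unknown" if the id is out of range.
// The method must also be declared in the YOLO_V8 class in inference.h,
// since mainwindow.cpp calls it through a YOLO_V8 pointer.
std::string YOLO_V8::getClassName(int class_id) const {
    if (class_id >= 0 && class_id < static_cast<int>(classes.size())) {
        return classes[class_id];
    }
    return "Unknown";
}

mainwindow.h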

cpp

#ifndef MAINWINDOW_H
#define MAINWINDOW_H

#include <QMainWindow>
#include "inference.h"

QT_BEGIN_NAMESPACE
namespace Ui { class MainWindow; }
QT_END_NAMESPACE

class MainWindow : public QMainWindow
{
    Q_OBJECT

public:
    MainWindow(QWidget *parent = nullptr);
    ~MainWindow();

private slots:
    void on_pushButton_clicked(bool checked);

private:
    Ui::MainWindow *ui;
    YOLO_V8 *Inf_;
};

#endif // MAINWINDOW_H

mainwindow.cpp

cpp

#include "mainwindow.h"

#include "ui_mainwindow.h"

#include <QMessageBox>

#include <qfiledialog.h>

MainWindow::MainWindow(QWidget *parent)

: QMainWindow(parent)

, ui(new Ui::MainWindow)

{

ui->setupUi(this);

try {

Inf_ = new YOLO_V8;

DL_INIT_PARAM params;

params.rectConfidenceThreshold = 0.1;

params.iouThreshold = 0.5;

params.modelPath = "best.onnx";

params.imgSize = { 640, 640 };

#ifdef USE_CUDA

params.cudaEnable = true;

// GPU FP32 inference

params.modelType = YOLO_DETECT_V8;

// GPU FP16 inference

//Note: change fp16 onnx model

//params.modelType = YOLO_DETECT_V8_HALF;

#else

// CPU inference

params.modelType = YOLO_DETECT_V8;

params.cudaEnable = false;

#endif

Inf_->CreateSession(params);

}

catch (const std::exception& e) {

std::cerr << "创建 Inference 实例时发生异常: " << e.what() << std::endl;

QMessageBox::critical(this, "异常", QString("模型加载失败: %1").arg(e.what()));

}

catch (...) {

std::cerr << "创建 Inference 实例时发生未知异常。" << std::endl;

QMessageBox::critical(this, "异常", "发生未知错误,无法初始化模型。");

}

}

MainWindow::~MainWindow()

{

delete ui;

delete Inf_;

}

void MainWindow::on_pushButton_clicked(bool checked)

{

QString imagePath = QFileDialog::getOpenFileName(

this,

"选择图片",

"",

"图像文件 (*.png *.jpg *.jpeg *.bmp *.gif)"

);

if (!imagePath.isEmpty()) {

QPixmap pixmap(imagePath);

if (pixmap.isNull()) {

QMessageBox::warning(this, "错误", "无法加载图片!");

return;

}

ui->original_image_label->setPixmap(pixmap.scaled(

ui->original_image_label->size(),

Qt::KeepAspectRatio,

Qt::SmoothTransformation

));

std::string stdStr = imagePath.toUtf8().constData();

cv::Mat img_mat = cv::imread(stdStr, cv::IMREAD_COLOR);

if (img_mat.empty()) {

qDebug() << "图像读取失败,路径:" << imagePath;

}

// 执行检测任务

std::vector<DL_RESULT> res;

Inf_->RunSession(img_mat, res);

QString resultText = "类别统计结果:\n";

for (auto& re : res)

{

cv::RNG rng(cv::getTickCount());

cv::Scalar color(rng.uniform(0, 256), rng.uniform(0, 256), rng.uniform(0, 256));

const cv::Rect& box = re.box;

std::string className = Inf_->getClassName(re.classId);

float confidence = re.confidence;

// 绘制框

cv::rectangle(img_mat, box, color, 2);

// 构建标签文字

std::string label = className + ' ' + std::to_string(confidence).substr(0, 4);

cv::Size textSize = cv::getTextSize(label, cv::FONT_HERSHEY_PLAIN, 1, 1, 0);

cv::Rect textBox(box.x-1, box.y - textSize.height*2, textSize.width + 10, textSize.height*2);

// 绘制背景框和文字

cv::rectangle(img_mat, textBox, color, cv::FILLED);

cv::putText(img_mat, label, cv::Point(box.x + 5, box.y - 5), cv::FONT_HERSHEY_PLAIN, 1, cv::Scalar(0, 0, 0), 1, 0);

QString line = QString("%1\n").arg(QString::fromStdString(label));

resultText += line;

}

// 将结果显示到 QLabel 上

ui->result_label->setText(resultText);

// 将 cv::Mat 转换为 QImage,再显示在 QLabel 上

QImage qimg(img_mat.data,

img_mat.cols,

img_mat.rows,

static_cast<int>(img_mat.step),

QImage::Format_BGR888); // 注意格式要匹配 OpenCV 的 BGR

// 设置 QLabel 显示图像(自动缩放至 label 尺寸)

ui->detect_imag_label->setPixmap(QPixmap::fromImage(qimg).scaled(

ui->detect_imag_label->size(),

Qt::KeepAspectRatio,

Qt::SmoothTransformation));

}

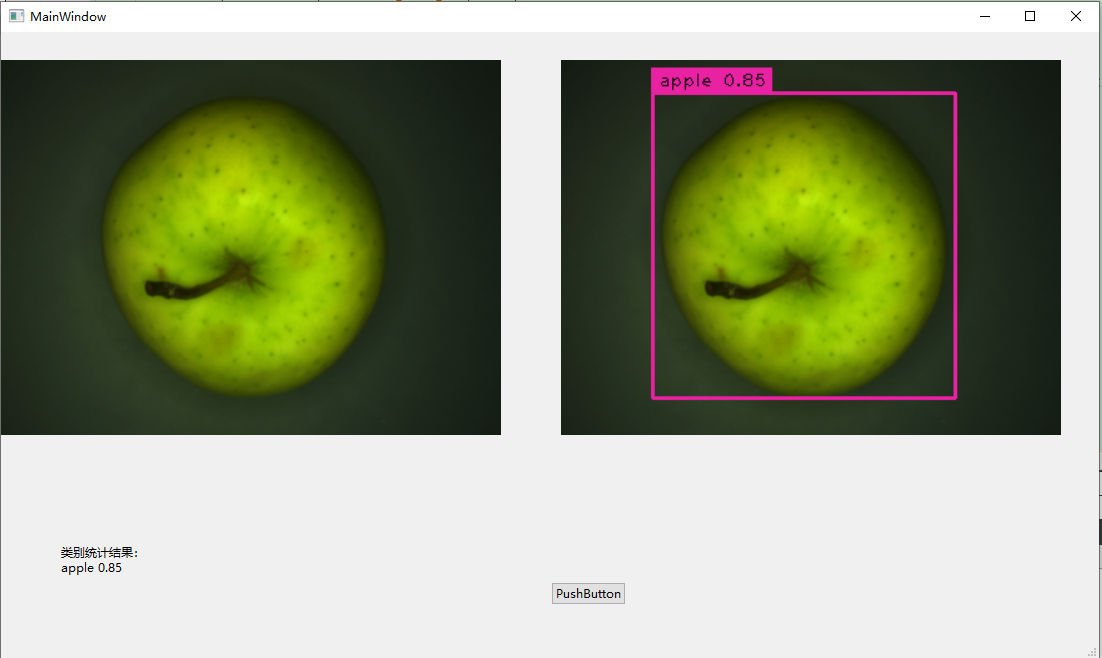

}最后运行

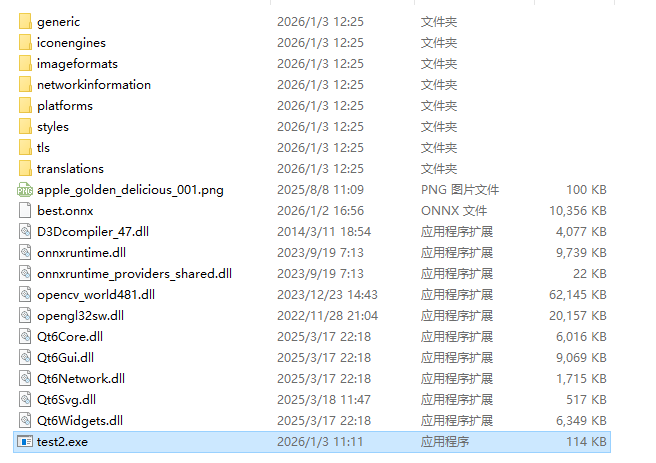

Finally, deploy

Run windeployqt xxx.exe to do the initial packaging, then copy opencv_world481.dll, onnxruntime.dll, and onnxruntime_xxx_shared.dll into the directory that contains the packaged exe.
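The manual copy step can be scripted so that it is repeatable after each rebuild. This is a minimal standard-library sketch; `copy_runtime_dlls` is an illustrative name, and the DLL locations passed on the command line depend on where OpenCV and ONNX Runtime were unpacked on your machine.

```python
import shutil
import sys
from pathlib import Path


def copy_runtime_dlls(dll_paths, deploy_dir):
    """Copy each existing DLL into deploy_dir.

    Returns (copied_names, missing_paths) so that missing files are visible to the caller.
    """
    deploy_dir = Path(deploy_dir)
    deploy_dir.mkdir(parents=True, exist_ok=True)
    copied, missing = [], []
    for src in map(Path, dll_paths):
        if src.is_file():
            shutil.copy2(src, deploy_dir / src.name)
            copied.append(src.name)
        else:
            missing.append(str(src))
    return copied, missing


if __name__ == "__main__":
    if len(sys.argv) < 3:
        print("usage: python copy_dlls.py <deploy_dir> <dll> [<dll> ...]")
    else:
        copied, missing = copy_runtime_dlls(sys.argv[2:], sys.argv[1])
        print("copied:", ", ".join(copied) or "none")
        if missing:
            print("missing:", ", ".join(missing))
```

Reporting missing files instead of raising an error makes it easier to see which DLL path needs correcting before the packaged exe is tested on another machine.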