Spring AI RAG: Reading Files

- [1 Dependencies](#1-dependencies)

- [2 Configuration](#2-configuration)

- [3 Code](#3-code)

- [4 Chinese Text Splitting](#4-chinese-text-splitting)

1 Dependencies

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.5.9</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.xu</groupId>
    <artifactId>spring-openai-onnx</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>spring-openai-onnx</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>25</java.version>
        <spring-ai.version>1.1.2</spring-ai.version>
    </properties>
    <dependencies>
        <!-- Spring Boot web: handles HTTP requests from the front end -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <!-- Redis vector store for RAG -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-starter-vector-store-redis</artifactId>
        </dependency>
        <!-- Document parsing for RAG -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-tika-document-reader</artifactId>
        </dependency>
        <!-- hutool -->
        <dependency>
            <groupId>cn.hutool</groupId>
            <artifactId>hutool-all</artifactId>
            <version>5.8.42</version>
        </dependency>
        <!-- devtools -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-devtools</artifactId>
            <scope>runtime</scope>
            <optional>true</optional>
        </dependency>
        <!-- lombok -->
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <!-- test -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-bom</artifactId>
                <version>${spring-ai.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>
    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <annotationProcessorPaths>
                        <path>
                            <groupId>org.projectlombok</groupId>
                            <artifactId>lombok</artifactId>
                        </path>
                    </annotationProcessorPaths>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <configuration>
                    <excludes>
                        <exclude>
                            <groupId>org.projectlombok</groupId>
                            <artifactId>lombok</artifactId>
                        </exclude>
                    </excludes>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>
```

2 Configuration

yml

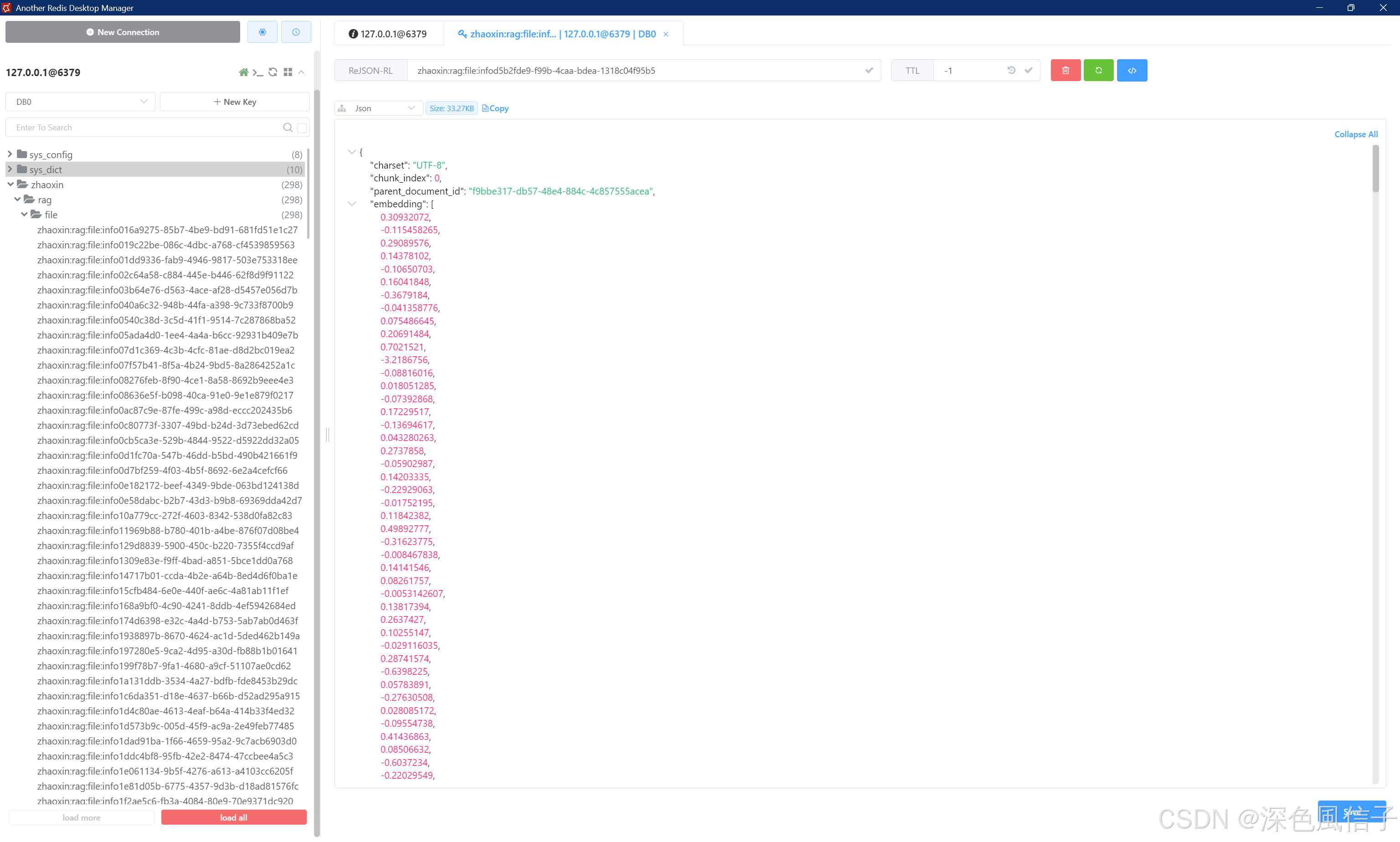

spring:

data:

redis:

host: 127.0.0.1

port: 6379

password:

application:

name: spring-openai-onnx

ai:

vectorstore:

redis:

initialize-schema: true # 是否初始化所需的模式

index-name: zhaoxin-rag # 用于存储向量的索引名称

prefix: 'zhaoxin:rag:file:info' # redis 键的前缀3 代码

```java
package com.xu;

import com.xu.conf.ZhaoXinTextSplitter;
import org.junit.jupiter.api.Test;
import org.springframework.ai.document.Document;
import org.springframework.ai.reader.TextReader;
import org.springframework.ai.reader.tika.TikaDocumentReader;
import org.springframework.ai.transformer.splitter.TextSplitter;
import org.springframework.ai.vectorstore.redis.RedisVectorStore;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.core.io.FileSystemResource;
import org.springframework.core.io.Resource;

import java.util.List;

@SpringBootTest
class RagTest {

    @Autowired
    private RedisVectorStore redisVectorStore;

    @Test
    void file() {
        Resource pdf = new FileSystemResource("D:\\SourceCode\\简历\\个人简历.pdf");
        // Pass doc or excel to TikaDocumentReader instead of pdf to read those formats.
        Resource doc = new FileSystemResource("D:\\SourceCode\\简历\\个人简历.docx");
        Resource excel = new FileSystemResource("D:\\SourceCode\\简历\\个人简历.xlsx");

        TikaDocumentReader reader = new TikaDocumentReader(pdf);
        List<Document> origin = reader.get();

        // Split the text on Chinese sentence boundaries
        TextSplitter splitter = new ZhaoXinTextSplitter();
        List<Document> documents = splitter.split(origin);

        // Store the chunks in the Redis vector store
        redisVectorStore.add(documents);
    }

    @Test
    void text() {
        Resource resource = new FileSystemResource("D:\\SourceCode\\简历\\a.txt");
        TextReader reader = new TextReader(resource);
        List<Document> origin = reader.get();

        // Split the text on Chinese sentence boundaries
        TextSplitter splitter = new ZhaoXinTextSplitter();
        List<Document> documents = splitter.split(origin);

        // Store the chunks in the Redis vector store
        redisVectorStore.add(documents);
    }

}
```

4 Chinese Text Splitting

```java
package com.xu.conf;

import org.springframework.ai.document.Document;
import org.springframework.ai.transformer.splitter.TextSplitter;

import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * A text splitter tuned for Chinese.
 * Splits on Chinese semantic boundaries so that chunks do not break mid-sentence.
 *
 * @author hyacinth
 */
public class ZhaoXinTextSplitter extends TextSplitter {

    // Minimum number of characters per chunk; shorter pieces are merged with their neighbours
    private static final int MIN_CHUNK_LENGTH = 100;

    // Split points: the full-width marks 。?!;:, or a run of line breaks.
    // Every match is a boundary; pieces that end up too short are merged again afterwards.
    private static final Pattern CHINESE_SEPARATOR_PATTERN = Pattern.compile("[。?!;:,]|\\n+");

    // Runs of horizontal whitespace (spaces, tabs, form feeds).
    // Line breaks are excluded so the separator pattern can still see them.
    private static final Pattern BLANK_PATTERN = Pattern.compile("[ \\t\\x0B\\f]+");

    // Matches chunks that consist only of punctuation and whitespace
    private static final Pattern ONLY_PUNCTUATION_PATTERN = Pattern.compile("[。?!;:,、\\s]+");

    @Override
    protected List<String> splitText(String text) {
        // Step 1: normalize whitespace so it does not cause spurious splits
        String processedText = preprocessText(text);
        // Step 2: split on Chinese semantic boundaries
        List<String> initialSplits = splitByChineseSemantic(processedText);
        // Step 3: drop invalid chunks and merge chunks that are too short
        return filterAndOptimizeChunks(initialSplits);
    }

    /**
     * Preprocessing: normalize spaces and line endings, collapse repeated whitespace.
     */
    private String preprocessText(String text) {
        if (text == null || text.isBlank()) {
            return "";
        }
        // 1. Replace the full-width (ideographic) space U+3000 with an ASCII space
        String result = text.replace('\u3000', ' ');
        // 2. Normalize Windows and old Mac line endings to \n
        result = result.replaceAll("\\r\\n?", "\n");
        // 3. Collapse runs of spaces and tabs into a single space
        result = BLANK_PATTERN.matcher(result).replaceAll(" ");
        // 4. Strip leading and trailing whitespace
        return result.trim();
    }

    /**
     * Core step: split on Chinese semantic boundaries and keep each punctuation mark
     * attached to the text before it, since the mark is part of the meaning.
     */
    private List<String> splitByChineseSemantic(String text) {
        List<String> chunks = new ArrayList<>();
        Matcher matcher = CHINESE_SEPARATOR_PATTERN.matcher(text);
        int start = 0;
        while (matcher.find()) {
            // Take the text up to and including the separator; trim() drops line breaks
            String chunk = text.substring(start, matcher.end()).trim();
            if (!chunk.isEmpty()) {
                chunks.add(chunk);
            }
            start = matcher.end();
        }
        // Remaining text after the last separator
        String tail = text.substring(start).trim();
        if (!tail.isEmpty()) {
            chunks.add(tail);
        }
        return chunks;
    }

    /**
     * Drop empty and punctuation-only chunks, then merge chunks that are too short.
     */
    private List<String> filterAndOptimizeChunks(List<String> initialSplits) {
        List<String> validChunks = initialSplits.stream()
                .filter(chunk -> chunk != null && !chunk.isBlank() && !isOnlyPunctuation(chunk))
                .toList();
        return mergeShortChunks(validChunks, MIN_CHUNK_LENGTH);
    }

    /**
     * Returns true if the chunk contains nothing but punctuation and whitespace.
     */
    private boolean isOnlyPunctuation(String chunk) {
        return ONLY_PUNCTUATION_PATTERN.matcher(chunk).matches();
    }

    /**
     * Merge short chunks so that a chunk is never just a word or a few characters.
     *
     * @param validChunks    list of valid chunks
     * @param minChunkLength minimum number of characters per chunk
     * @return the merged chunks; only the last one may be shorter than minChunkLength
     */
    private List<String> mergeShortChunks(List<String> validChunks, int minChunkLength) {
        List<String> mergedChunks = new ArrayList<>();
        StringBuilder currentChunk = new StringBuilder();
        for (String chunk : validChunks) {
            // Keep appending until the buffer reaches the minimum length, then emit it
            currentChunk.append(chunk);
            if (currentChunk.length() >= minChunkLength) {
                mergedChunks.add(currentChunk.toString().trim());
                currentChunk.setLength(0);
            }
        }
        // Emit whatever is left in the buffer
        if (!currentChunk.isEmpty()) {
            mergedChunks.add(currentChunk.toString().trim());
        }
        return mergedChunks;
    }

    // Optional: override the entry point used to split Spring AI Document objects
    @Override
    public List<Document> split(List<Document> documents) {
        return super.split(documents);
    }

}
```
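To try the split-and-merge idea without starting Spring or Redis, a minimal standalone sketch may help. It is not the class above: the name `ChineseSplitSketch`, its two helper methods, and the sample sentence are made up for illustration. It also swaps the `Matcher` loop for a lookbehind in `String.split`, which keeps each punctuation mark attached to the text before it.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo class, not part of the project above.
public class ChineseSplitSketch {

    // Split right after each full-width punctuation mark.
    // The lookbehind is zero-width, so the mark stays at the end of its piece.
    public static List<String> splitKeepingPunctuation(String text) {
        List<String> parts = new ArrayList<>();
        for (String part : text.split("(?<=[。?!;:,])")) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                parts.add(trimmed);
            }
        }
        return parts;
    }

    // Append pieces to a buffer and emit it once it reaches minLength characters.
    // Only the final chunk can end up shorter than minLength.
    public static List<String> mergeShort(List<String> parts, int minLength) {
        List<String> merged = new ArrayList<>();
        StringBuilder buffer = new StringBuilder();
        for (String part : parts) {
            buffer.append(part);
            if (buffer.length() >= minLength) {
                merged.add(buffer.toString());
                buffer.setLength(0);
            }
        }
        if (buffer.length() > 0) {
            merged.add(buffer.toString());
        }
        return merged;
    }

    public static void main(String[] args) {
        // Sample sentence invented for this sketch
        String text = "我叫张三。我是工程师,熟悉Java!";
        List<String> parts = splitKeepingPunctuation(text);
        System.out.println(parts);
        // A small minimum length is used here so the merge is visible on a short input
        System.out.println(mergeShort(parts, 8));
    }
}
```

With a minimum length of 8, the first two pieces are merged into one chunk and the last piece is emitted on its own. In the real splitter the minimum is 100 characters, so most sentences in a résumé-sized document would be grouped several at a time.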