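In Kubernetes clusters, Ingress used to be the default choice for north-south traffic management. As business complexity grew, its limitations became apparent. Advanced features such as weighted traffic splitting and TLS configuration depend on vendor-specific annotations. Migrating across vendors is difficult. Responsibility boundaries are blurred, because infrastructure and application configuration are mixed together.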

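Kubernetes SIG-Network then introduced Gateway-API (gateway.networking.k8s.io), and that changed the picture. Gateway-API is the officially defined "next-generation traffic management standard", and its design is role-oriented, standardized, and extensible. Its modular resources, including GatewayClass, Gateway and HTTPRoute, decouple infrastructure configuration from application routing rules. It natively supports HTTPS, weighted traffic splitting, multi-protocol routing and other advanced features. It is implemented by mainstream controllers such as Envoy Gateway, Traefik and Istio, which removes vendor lock-in.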

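I recently rolled out Gateway-API end to end on an Envoy Gateway + MetalLB environment. In the test environment that meant self-signed certificates and end-to-end HTTPS verification. For production it meant adapting to Alibaba Cloud domains and certificates and forcing an 80→443 redirect. Along the way I hit pitfalls around version compatibility (v1 vs v1beta1), MetalLB exposure and backend TLS connections. In the end I delivered the core requirement, an 80%:20% weighted traffic split.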

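This article does not dwell on Gateway-API's theoretical definitions; it focuses on what can actually be deployed. It breaks down the design logic of the official core resources. It then walks through the full migration from the test environment to production: certificate configuration, MetalLB integration, weighted-split verification, and a guide to common pitfalls. Finally, it extends to implementation ideas for advanced features such as circuit breaking and traffic mirroring.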

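You may want to replace legacy Ingress configuration, standardize cluster traffic management, or deliver HTTPS plus canary releases in production. In each case, this hands-on guide should help you avoid detours and get started with Gateway-API quickly.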

I. Gateway-API

1. What Gateway-API Is

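Gateway-API is a project maintained by Kubernetes SIG-Network. It focuses on L4 (transport layer) and L7 (application layer) routing in Kubernetes clusters, and it is the next-generation API standard for Ingress, load balancing and service mesh. Its core positioning:

- Design philosophy: generic, expressive, and role-oriented;
- Core capability: supports both north-south traffic (Ingress, from outside the cluster to inside) and east-west traffic (service mesh, between services inside the cluster), giving unified traffic management;
- Core value: it addresses three weaknesses of traditional Ingress: limited functionality, extensions that rely on custom annotations, and difficult porting across vendors. In their place it offers a standardized, extensible traffic-management solution.

At its core, Gateway-API is a set of CRD (Custom Resource Definition) specifications plus the accompanying API rules. It is not a gateway component you can run directly.

Gateway API only defines the language: resources, intent, constraints and status. It is not a proxy or load balancer. A concrete gateway implementation is needed to turn this "intent" into actual L4/L7 behavior and integrate it with the underlying network, for example Envoy Gateway, Istio, Traefik, Kong, or a cloud-provider LB controller.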

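2. Gateway-API Core Resources

| Resource | Purpose | Managed by |
|---|---|---|
| GatewayClass | Gateway class: defines the gateway type and implementation (e.g. Istio, Kong, Traefik); cluster-scoped, so each name is unique in the cluster | Cluster operators |
| Gateway | Gateway instance: the actual traffic entry point (e.g. load balancer IP/port), bound to a GatewayClass | Cluster operators |
| HTTPRoute/TCPRoute | Routing rules: request-matching conditions and forwarding targets (e.g. path, hostname, backend Service) | Application developers |

- Hierarchy: GatewayClass → Gateway → Route → backend (Service, etc.);
- Scenario differences:
  - Ingress: the Route attaches to a Gateway, which receives traffic from outside the cluster and forwards it;
  - Service mesh (GAMMA initiative): no Gateway/GatewayClass is needed; the Route attaches directly to a Service and manages service-to-service traffic inside the cluster.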

3. Deploying Gateway-API

```bash
[root@k8s-master ~]# kubectl apply -f https://github.com/kubernetes-sigs/gateway-api/releases/download/v1.4.0/standard-install.yaml
[root@k8s-master ~]# kubectl get -f https://github.com/kubernetes-sigs/gateway-api/releases/download/v1.4.0/standard-install.yaml
NAME                                           CREATED AT
backendtlspolicies.gateway.networking.k8s.io   2026-01-08T07:30:40Z
gatewayclasses.gateway.networking.k8s.io       2026-01-08T07:30:40Z
gateways.gateway.networking.k8s.io             2026-01-08T07:30:40Z
grpcroutes.gateway.networking.k8s.io           2026-01-08T07:30:40Z
httproutes.gateway.networking.k8s.io           2026-01-08T07:30:40Z
referencegrants.gateway.networking.k8s.io      2026-01-08T07:30:40Z
```

II. Envoy Gateway

Because the Gateway API installed above is v1.4.0, the matching Envoy Gateway release is v1.6, according to the compatibility matrix:

| Envoy Gateway version | Envoy Proxy version | Rate Limit version | Gateway API version | Kubernetes version | End of Life |
|---|---|---|---|---|---|
| latest | dev-latest | master | v1.4.1 | v1.32, v1.33, v1.34, v1.35 | n/a |
| v1.6 | distroless-v1.36.2 | 99d85510 | v1.4.0 | v1.30, v1.31, v1.32, v1.33 | 2026/05/13 |
| v1.5 | distroless-v1.35.0 | a90e0e5d | v1.3.0 | v1.30, v1.31, v1.32, v1.33 | 2026/02/13 |
| v1.4 | distroless-v1.34.1 | 3e085e5b | v1.3.0 | v1.30, v1.31, v1.32, v1.33 | 2025/11/13 |
| v1.3 | distroless-v1.33.0 | 60d8e81b | v1.2.1 | v1.29, v1.30, v1.31, v1.32 | 2025/07/30 |
| v1.2 | distroless-v1.32.1 | 28b1629a | v1.2.0 | v1.28, v1.29, v1.30, v1.31 | 2025/05/06 |
| v1.1 | distroless-v1.31.0 | 91484c59 | v1.1.0 | v1.27, v1.28, v1.29, v1.30 | 2025/01/22 |
| v1.0 | distroless-v1.29.2 | 19f2079f | v1.0.0 | v1.26, v1.27, v1.28, v1.29 | 2024/09/13 |
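To keep the two installs in step, the table can be encoded in a small helper that an install script checks before applying Envoy Gateway. This is a sketch, not part of either project. The function names are hypothetical, the mapping only knows the rows of the table above, and the CRD lookup assumes the Gateway API CRDs carry a `gateway.networking.k8s.io/bundle-version` annotation. Verify that with `kubectl get crd gateways.gateway.networking.k8s.io -o yaml` before relying on it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: map a Gateway API bundle version to the Envoy Gateway
# minor version(s) listed for it in the compatibility table above.
eg_version_for_gwapi() {
  case "$1" in
    v1.4.1) echo "latest" ;;
    v1.4.0) echo "v1.6" ;;
    v1.3.0) echo "v1.5 v1.4" ;;
    v1.2.1) echo "v1.3" ;;
    v1.2.0) echo "v1.2" ;;
    v1.1.0) echo "v1.1" ;;
    v1.0.0) echo "v1.0" ;;
    *) echo "unknown"; return 1 ;;
  esac
}

# Read the bundle version from the installed Gateway CRD.
# ASSUMPTION: the CRD is annotated with gateway.networking.k8s.io/bundle-version.
installed_gwapi_version() {
  kubectl get crd gateways.gateway.networking.k8s.io \
    -o jsonpath='{.metadata.annotations.gateway\.networking\.k8s\.io/bundle-version}'
}
```

On the cluster above, `eg_version_for_gwapi "$(installed_gwapi_version)"` should print `v1.6`, because v1.4.0 was installed.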

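1. Envoy Gateway: Concept and Advantages

Envoy Gateway is an open-source gateway controller built on the Envoy proxy that follows the Gateway-API standard. It is developed under the Envoy project, and Envoy itself is a CNCF graduated project. Its core job is to translate Gateway-API declarative configuration (e.g. Gateway, HTTPRoute) into Envoy runtime configuration. That is how it handles traffic ingress, routing and governance for the cluster.

Put simply, Gateway-API is the standardized interface for traffic management, and Envoy Gateway is one implementer of that interface. On one side it watches the Gateway-API CRD resources. On the other it drives Envoy's proxy capabilities. Developers can therefore deliver HTTPS, weighted splitting, circuit breaking and other advanced features through Gateway-API without touching Envoy's complex configuration directly.

- Advantages
  - Standard, no lock-in: conforms to the Gateway-API standard, so configuration is portable across implementations;
  - Native capabilities: inherits Envoy's traffic management; HTTPS, weighting and circuit breaking work out of the box;
  - Ecosystem-friendly: works with MetalLB and Kubernetes TLS Secrets (e.g. Alibaba Cloud certificates);
  - Minimal configuration: no annotations; the Envoy data plane and LoadBalancer resources are generated automatically;
  - Lightweight and highly available: a stateless control plane that scales horizontally, suitable for production.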

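2. Deploying Envoy Gateway

```bash
[root@k8s-master ~]# kubectl apply --server-side -f https://github.com/envoyproxy/gateway/releases/download/v1.6.1/install.yaml
[root@k8s-master ~]# kubectl get po,svc -n envoy-gateway-system
NAME                                READY   STATUS    RESTARTS   AGE
pod/envoy-gateway-757bb4947-s5bvr   1/1     Running   0          53m

NAME                                         TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                                            AGE
service/envoy-gateway                        ClusterIP      10.96.122.237   <none>        18000/TCP,18001/TCP,18002/TCP,19001/TCP,9443/TCP   53m
service/envoy-gateway-api-gateway-85d663be   LoadBalancer   10.111.107.73   10.0.0.151    80:31876/TCP                                       50m
```

The envoy-gateway-api-gateway-85d663be Service in this output is the data plane for the Gateway created in section III, which already existed when the output was captured. Immediately after installation, only the envoy-gateway controller Service is present.

III. Gateway-API in Practice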

1. HTTP Traffic Access

1.1 Create the test workload Pods

```bash
[root@k8s-master ~/GatewayApi]# cat 1-deply.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gatewayapi
  labels:
    app: gatewayapi-pod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: gatewayapi-pod
  template:
    metadata:
      labels:
        app: gatewayapi-pod
    spec:
      containers:
      - name: myweb
        image: myweb:v1
        resources:
          requests:
            cpu: 300m
            memory: 500Mi
          limits:
            cpu: 500m
            memory: 600Mi
```

Then expose it with a Service. If your environment has no LoadBalancer, use NodePort or a similar type instead.

```bash
kubectl expose deployment gatewayapi --name=gatewayapi-svc --port=80 --target-port=80 --type=LoadBalancer
```

Check the resource status:

```bash
[root@k8s-master ~/GatewayApi]# kubectl get po,svc
NAME                              READY   STATUS    RESTARTS   AGE
pod/gatewayapi-5db9676fcf-tnqsm   1/1     Running   0          52m
pod/gatewayapi-5db9676fcf-z2z6n   1/1     Running   0          52m

NAME                     TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
service/gatewayapi-svc   LoadBalancer   10.96.121.221   10.0.0.150    80:30980/TCP   5h4m
service/kubernetes       ClusterIP      10.96.0.1       <none>        443/TCP        66d
```

1.2 Create the GatewayClass

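Define a GatewayClass named envoy. It binds the Gateway instances in the cluster to a concrete gateway implementation (Envoy Gateway); in other words, it selects the gateway's underlying engine.

```bash
[root@k8s-master ~/GatewayApi]# cat eg-class.yaml
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  # Name this GatewayClass "envoy"; Gateways reference it by this name
  name: envoy
spec:
  # The controller name that identifies the Envoy Gateway controller:
  # every Gateway bound to this GatewayClass is handled by Envoy Gateway
  controllerName: gateway.envoyproxy.io/gatewayclass-controller
```

GatewayClass is a cluster-scoped resource (it has no namespace), so its name is unique across the cluster. It does not handle traffic itself. Instead, it defines which gateway stack will handle the traffic. Several GatewayClasses may point at the same controller, for example with different parametersRef settings.

Here, the envoy class specifies that the Envoy Gateway stack (controller + Envoy proxy) sits underneath.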

1.3 Create the Gateway and HTTPRoute

Gateway: creates an HTTP entry point backed by Envoy Gateway (listening on port 80) and lets routes from all namespaces attach to it;

HTTPRoute: defines the concrete routing rule, which forwards every request for www.mywebv1.com to the gatewayapi-svc:80 Service in the default namespace.

```bash
[root@k8s-master ~/GatewayApi]# kubectl create ns gateway-api
[root@k8s-master ~/GatewayApi]# cat gateway-httproute.yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: gateway           # Gateway name; the HTTPRoute references it by this name
  namespace: gateway-api  # Keeping Gateway and HTTPRoute in one namespace is recommended; cross-namespace also works
spec:
  # Core binding: must match the GatewayClass name in eg-class.yaml (envoy) exactly,
  # so this Gateway is managed by Envoy Gateway and served by an Envoy proxy
  gatewayClassName: envoy
  # Listeners: the ports/protocols this Gateway exposes
  listeners:
  - name: http
    port: 80
    protocol: HTTP
    # Which routes may attach (security control)
    allowedRoutes:
      namespaces:
        from: All  # HTTPRoutes in any namespace may attach (common in dev/test)
        # In production use Same (same namespace only) or Selector (selected namespaces)
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: myweb
  namespace: gateway-api
spec:
  parentRefs:
  - name: gateway  # Must match the Gateway name above
    # If the Gateway lives in another namespace, add: namespace: xxx
  hostnames:
  - www.mywebv1.com  # Hostnames to match (several allowed, e.g. - api.mywebv1.com)
  # Rules: "which requests match" -> "where they are forwarded"
  rules:
  - matches:  # Only requests meeting these conditions are forwarded
    - path:
        type: PathPrefix  # Prefix match (most common; "/" matches /, /index, /api, ...)
        # Other types: Exact, RegularExpression (implementation-specific)
        value: /          # Every path starts with /, so this matches all requests
    backendRefs:  # Where matching requests are sent
    - name: gatewayapi-svc  # Target Service (in the default namespace)
      namespace: default    # Required for a cross-namespace target, which also needs a ReferenceGrant (see 1.4)
      port: 80              # Target Service port
```

About allowedRoutes.namespaces

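Why are Same or Selector recommended for production?

allowedRoutes.namespaces.from is the Gateway's route admission rule: it limits which namespaces' HTTPRoutes can attach to this Gateway.

from: All: an HTTPRoute in any namespace can attach to this Gateway;

from: Same: only HTTPRoutes in the Gateway's own namespace can attach;

from: Selector: only HTTPRoutes in namespaces whose labels match a label selector can attach.

Avoiding All in production and preferring Same follows two core Kubernetes security principles: least privilege and fault isolation. With All, anyone who can create an HTTPRoute in any namespace can attach it to the shared Gateway and claim hostnames or paths that belong to another team.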

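1.4 ReferenceGrant

ReferenceGrant is Gateway API's authorization for cross-namespace references. It exists because, by default, a Route may not reference a backend resource in another namespace.

The ReferenceGrant below is a core security-control resource in Gateway API. It explicitly allows HTTPRoute resources in the gateway-api namespace to reference Service resources in the default namespace.

In the configuration so far, the HTTPRoute (in gateway-api) forwards traffic to gatewayapi-svc in default. Gateway API rejects this cross-namespace reference by default for security reasons, and the ReferenceGrant is the permission that allows it.

```yaml
# 1. API version: ReferenceGrant is served as v1beta1 (included in the standard channel)
apiVersion: gateway.networking.k8s.io/v1beta1
# 2. Kind: ReferenceGrant (reference authorization)
kind: ReferenceGrant
# 3. Metadata: the namespace MUST be that of the referenced resource (the Service in default)
metadata:
  name: allow-gateway-to-default  # Any unique name
  namespace: default              # [Key] same namespace as the backend Service (gatewayapi-svc)
# 4. Spec: who may reference, and what may be referenced
spec:
  # from: the resources that make the reference
  from:
  - group: gateway.networking.k8s.io  # API group of the referencing resource (HTTPRoute)
    kind: HTTPRoute                   # Kind of the referencing resource
    namespace: gateway-api            # Namespace where the HTTPRoute lives
  # to: the resources that may be referenced
  to:
  - group: ""      # Empty string = the core API group (Service belongs to it)
    kind: Service  # Kind of the referenced resource
    # Optional: name restricts the grant to one Service; without it every Service in this namespace may be referenced
    # name: gatewayapi-svc  # Recommended in production to narrow the grant
```

What ReferenceGrant does:

- Lifts the default restriction on cross-namespace references, explicitly and per resource kind;
- Enables precise, least-privilege authorization;
- Covers a gap that the Gateway's allowedRoutes leaves open: allowedRoutes decides which Routes may attach to a Gateway, while ReferenceGrant decides which backends in other namespaces a Route may point to.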

After creating the resources in turn, check their status:

```bash
[root@k8s-master ~/GatewayApi]# kubectl get po,svc
NAME                              READY   STATUS    RESTARTS      AGE
pod/gatewayapi-5db9676fcf-tnqsm   1/1     Running   1 (11m ago)   17h
pod/gatewayapi-5db9676fcf-z2z6n   1/1     Running   1 (11m ago)   17h

NAME                     TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
service/gatewayapi-svc   LoadBalancer   10.96.121.221   10.0.0.150    80:30980/TCP   21h
service/kubernetes       ClusterIP      10.96.0.1       <none>        443/TCP        66d
[root@k8s-master ~/GatewayApi]# kubectl get gateway,httproute -n gateway-api
NAME                                        CLASS   ADDRESS      PROGRAMMED   AGE
gateway.gateway.networking.k8s.io/gateway   envoy   10.0.0.151   True         17h

NAME                                        HOSTNAMES             AGE
httproute.gateway.networking.k8s.io/myweb   ["www.mywebv1.com"]   17h
[root@k8s-master ~/GatewayApi]# kubectl get po,svc -n envoy-gateway-system
NAME                                                     READY   STATUS    RESTARTS      AGE
pod/envoy-gateway-757bb4947-s5bvr                        1/1     Running   1 (11m ago)   17h
pod/envoy-gateway-api-gateway-85d663be-d59c54954-rbrdn   2/2     Running   2 (11m ago)   17h

NAME                                         TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                                            AGE
service/envoy-gateway                        ClusterIP      10.96.122.237   <none>        18000/TCP,18001/TCP,18002/TCP,19001/TCP,9443/TCP   17h
service/envoy-gateway-api-gateway-85d663be   LoadBalancer   10.111.107.73   10.0.0.151    80:31876/TCP                                       17h
```

The envoy-gateway-api-gateway-xxx Pod and Service are created automatically by the Envoy Gateway controller, and the Gateway resource you created is what triggers them;

These resources are the data plane behind the Gateway's rules: they do the actual traffic forwarding and port exposure;

The name follows the pattern envoy-<namespace>-<name>-<hash>, built from the Gateway's namespace and name plus a hash suffix (here gateway-api, gateway and 85d663be), so each Gateway gets its own set of data-plane resources;

The controller manages the lifecycle of these resources (create/update/delete); you only maintain the Gateway/HTTPRoute rules.
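Copying the hashed Pod name, as the log command in 1.5 does, breaks whenever the proxy Pod is recreated. A label selector avoids that. The sketch below assumes Envoy Gateway labels its proxy Pods with `gateway.envoyproxy.io/owning-gateway-namespace` and `gateway.envoyproxy.io/owning-gateway-name`. Confirm the labels with `kubectl -n envoy-gateway-system get pod --show-labels` before relying on them. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: label selector for the Envoy proxy Pods of one Gateway.
# ASSUMPTION: Envoy Gateway sets the owning-gateway-* labels used below.
eg_proxy_selector() {
  local gw_ns="$1" gw_name="$2"
  echo "gateway.envoyproxy.io/owning-gateway-namespace=${gw_ns},gateway.envoyproxy.io/owning-gateway-name=${gw_name}"
}

# Follow the access log (container "envoy") of the proxy Pods of that Gateway.
tail_proxy_log() {
  kubectl -n envoy-gateway-system logs -f -c envoy \
    -l "$(eg_proxy_selector "$1" "$2")"
}
```

For example, `tail_proxy_log gateway-api gateway` follows the proxy of the Gateway from 1.3 without needing the `85d663be-d59c54954-rbrdn` suffix.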

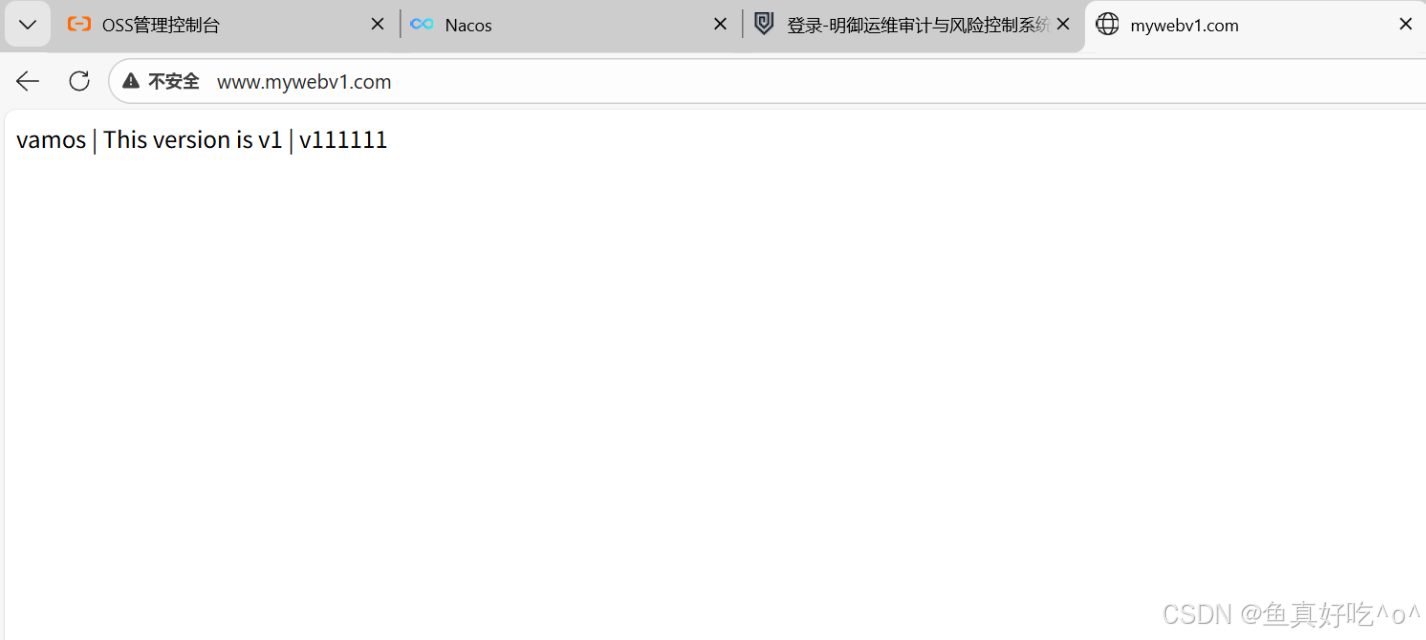

1.5 Verification

The Envoy proxy's access log confirms that requests for www.mywebv1.com match the route and reach a backend Pod (upstream_host). The 404 is the backend's own answer for /favicon.ico (response_code_details is via_upstream). The 304 for / is a normal cached-page response:

```bash
[root@k8s-master ~/GatewayApi]# kubectl -n envoy-gateway-system logs -f envoy-gateway-api-gateway-85d663be-d59c54954-rbrdn
Defaulted container "envoy" out of: envoy, shutdown-manager
{":authority":"www.mywebv1.com","bytes_received":0,"bytes_sent":555,"connection_termination_details":null,"downstream_local_address":"10.200.36.93:10080","downstream_remote_address":"10.0.0.1:63396","duration":7,"method":"GET","protocol":"HTTP/1.1","requested_server_name":null,"response_code":404,"response_code_details":"via_upstream","response_flags":"-","route_name":"httproute/gateway-api/myweb/rule/0/match/0/www_mywebv1_com","start_time":"2026-01-09T01:19:30.784Z","upstream_cluster":"httproute/gateway-api/myweb/rule/0","upstream_host":"10.200.169.170:80","upstream_local_address":"10.200.36.93:39200","upstream_transport_failure_reason":null,"user-agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/143.0.0.0 Safari/537.36 Edg/143.0.0.0","x-envoy-origin-path":"/favicon.ico","x-envoy-upstream-service-time":null,"x-forwarded-for":"10.0.0.1","x-request-id":"72c1e2ff-5d1f-4912-9eb1-d62427d60215"}
{":authority":"www.mywebv1.com","bytes_received":0,"bytes_sent":0,"connection_termination_details":null,"downstream_local_address":"10.200.36.93:10080","downstream_remote_address":"10.0.0.1:63396","duration":3,"method":"GET","protocol":"HTTP/1.1","requested_server_name":null,"response_code":304,"response_code_details":"via_upstream","response_flags":"-","route_name":"httproute/gateway-api/myweb/rule/0/match/0/www_mywebv1_com","start_time":"2026-01-09T01:19:49.159Z","upstream_cluster":"httproute/gateway-api/myweb/rule/0","upstream_host":"10.200.169.170:80","upstream_local_address":"10.200.36.93:39200","upstream_transport_failure_reason":null,"user-agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/143.0.0.0 Safari/537.36 Edg/143.0.0.0","x-envoy-origin-path":"/","x-envoy-upstream-service-time":null,"x-forwarded-for":"10.0.0.1","x-request-id":"c9036f80-0706-49d0-b1bf-7fd9b7774365"}
```

2. Weighted Traffic Splitting

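- Core purpose: canary releases, so new versions can be rolled out safely without the risk of a full-rollout failure;
- In production: traffic is split by the configured ratio (e.g. 80% to the old svcv1-https and 20% to the new svcv2-https). While the split is running, monitor the new version's error rate and latency. If all is well, raise its weight step by step to 100%; if problems appear, switch back to the old version quickly.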

2.1 Create Pods for two versions

```bash
[root@k8s-master ~/GatewayApi/SpecifiedTraffic]# cat v1-v2-deploy-svc.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: podv1
  labels:
    app: podv1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: v1
  template:
    metadata:
      labels:
        app: v1
    spec:
      containers:
      - name: myweb
        image: myweb:v1
        resources:
          requests:
            cpu: 300m
            memory: 500Mi
          limits:
            cpu: 500m
            memory: 600Mi
        ports:
        - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: podv2
  labels:
    app: podv2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: v2
  template:
    metadata:
      labels:
        app: v2
    spec:
      containers:
      - name: myweb2
        image: myweb:v2
        resources:
          requests:
            cpu: 300m
            memory: 500Mi
          limits:
            cpu: 500m
            memory: 600Mi
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svcv1
  labels:
    app: svcv1
spec:
  selector:
    app: v1
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  name: svcv2
  labels:
    app: svcv2
spec:
  selector:
    app: v2
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  type: ClusterIP
```

Because the HTTPRoute and the Services are in the same namespace, no ReferenceGrant is needed, which keeps the configuration simple;

Key fix: the HTTPRoute's parentRefs.name must equal the Gateway name (mywebgty);

Once deployed, this configuration gives an 80%:20% weighted split and follows the production namespace-isolation principle (from: Same).

2.2 Create the Gateway and HTTPRoute

```bash
[root@k8s-master ~/GatewayApi/SpecifiedTraffic]# cat Gateway.yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: mywebgty
  namespace: default
spec:
  gatewayClassName: envoy
  listeners:
  - name: http
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: Same
---
# HTTPRoute for the weighted split
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: myweb-weight
  namespace: default
spec:
  parentRefs:
  - name: mywebgty
  hostnames:
  - www.myweb.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    # Core: backend Services and their traffic weights
    backendRefs:
    - name: svcv1         # v1 Service
      namespace: default  # svcv1 is in the default namespace
      port: 80
      weight: 80          # 80% of traffic goes to svcv1
    - name: svcv2         # v2 Service
      namespace: default  # svcv2 is in the default namespace
      port: 80
      weight: 20          # 20% of traffic goes to svcv2
```

2.3 Create the resources and verify

```bash
[root@k8s-master ~/GatewayApi/SpecifiedTraffic]# kubectl get po,svc
NAME                         READY   STATUS    RESTARTS   AGE
pod/podv1-595475f94f-pfm4j   1/1     Running   0          64m
pod/podv2-8647fd4c55-sf5zn   1/1     Running   0          64m

NAME                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   67d
service/svcv1        ClusterIP   10.107.225.59   <none>        80/TCP    64m
service/svcv2        ClusterIP   10.107.137.76   <none>        80/TCP    64m
[root@k8s-master ~/GatewayApi/SpecifiedTraffic]# kubectl get gateway,httproute
NAME                                         CLASS   ADDRESS      PROGRAMMED   AGE
gateway.gateway.networking.k8s.io/mywebgty   envoy   10.0.0.150   True         35m

NAME                                               HOSTNAMES           AGE
httproute.gateway.networking.k8s.io/myweb-weight   ["www.myweb.com"]   35m
[root@k8s-master ~/GatewayApi/SpecifiedTraffic]# kubectl get po,svc -n envoy-gateway-system
NAME                                                   READY   STATUS    RESTARTS       AGE
pod/envoy-default-mywebgty-8bf49184-7cb9f884b9-mbt62   2/2     Running   0              36m
pod/envoy-gateway-757bb4947-s5bvr                      1/1     Running   1 (141m ago)   19h
pod/envoy-gateway-757bb4947-vnrdw                      1/1     Running   0              118m

NAME                                      TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                                            AGE
service/envoy-default-mywebgty-8bf49184   LoadBalancer   10.104.178.72   10.0.0.150    80:32633/TCP                                       36m
service/envoy-gateway                     ClusterIP      10.96.122.237   <none>        18000/TCP,18001/TCP,18002/TCP,19001/TCP,9443/TCP   19h
```

Requests to www.myweb.com now return a mix of v1 and v2 pages, roughly 80% v1 and 20% v2 over many requests. This behavior is what makes canary releases and A/B testing possible.
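Clicking through a browser only gives an impression of the split, so a rough count over many requests is more convincing. For 100 requests with weights 80/20, the v1 count is binomial with mean 80 and standard deviation sqrt(100 × 0.8 × 0.2) = 4. Anything from about 72 to 88 is therefore consistent with the configuration. The sketch below assumes that each myweb:v1 / myweb:v2 page contains the string `v1` or `v2`, since the page content is not shown in this article. `split_stats` and `sample_split` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read one version marker per line ("v1" or "v2")
# and print the observed split. Any other line is ignored.
split_stats() {
  awk '
    $0 == "v1" { v1++ }
    $0 == "v2" { v2++ }
    END {
      total = v1 + v2
      if (total == 0) { print "no v1/v2 responses"; exit 1 }
      printf "v1=%d (%.0f%%) v2=%d (%.0f%%)\n", v1, 100 * v1 / total, v2, 100 * v2 / total
    }'
}

# Send n requests through the Gateway and keep the first v1/v2 marker of each body.
# ASSUMPTION: page bodies contain "v1" or "v2"; 10.0.0.150 is the Gateway address from 2.3.
sample_split() {
  local n="${1:-100}" i
  for i in $(seq "$n"); do
    curl -s -H "Host: www.myweb.com" http://10.0.0.150/ | grep -o 'v[12]' | head -n 1
  done | split_stats
}
```

Running `sample_split 100` from a machine that can reach 10.0.0.150 prints a line such as `v1=… (…%) v2=… (…%)`.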

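3. HTTPS Traffic Access

3.1 Goals

- Frontend (external): Envoy Gateway exposes port 443 (HTTPS) for www.myweb.com, and MetalLB assigns the IP 10.0.0.150;
- Backend (internal): the v1/v2 Pods run Nginx with HTTPS on port 443, hostname backend.myweb.com;
- Traffic split: end-to-end HTTPS, v1 80%, v2 20%;
- No plaintext on any hop; self-signed certificates are used for testing (in production, swap in certificates issued by a real CA).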

3.2 Generate test certificates

Generate the frontend certificate (for `www.myweb.com`, exposed externally):

```bash
# 1. Create a working directory (keeps the files together)
mkdir -p ~/GatewayApi/CompleteE2E && cd ~/GatewayApi/CompleteE2E
# 2. Generate the frontend private key (2048-bit RSA)
openssl genrsa -out frontend-tls.key 2048
# 3. Generate the frontend CSR; the CN matches the hostname in the HTTPRoute
openssl req -new -key frontend-tls.key -out frontend-tls.csr \
  -subj "/C=CN/ST=Beijing/L=Beijing/O=MyOrg/OU=MyTeam/CN=www.myweb.com"
# 4. Self-sign the frontend certificate (valid for 365 days)
openssl x509 -req -days 365 -in frontend-tls.csr -signkey frontend-tls.key -out frontend-tls.crt
```

Generate the backend certificate (for `backend.myweb.com`, used for internal encryption):

```bash
# 1. Generate the backend private key
openssl genrsa -out backend-tls.key 2048
# 2. Generate the backend CSR; the CN matches the server_name in the backend Nginx config
openssl req -new -key backend-tls.key -out backend-tls.csr \
  -subj "/C=CN/ST=Beijing/L=Beijing/O=MyOrg/OU=MyTeam/CN=backend.myweb.com"
# 3. Self-sign the backend certificate (valid for 365 days)
openssl x509 -req -days 365 -in backend-tls.csr -signkey backend-tls.key -out backend-tls.crt
```

Resulting files:

```bash
-rw-r--r-- 1 root root 1289 Jan 9 13:59 backend-tls.crt
-rw-r--r-- 1 root root 1013 Jan 9 13:59 backend-tls.csr
-rw------- 1 root root 1704 Jan 9 13:59 backend-tls.key
-rw-r--r-- 1 root root 1277 Jan 9 13:58 frontend-tls.crt
-rw-r--r-- 1 root root 1009 Jan 9 13:58 frontend-tls.csr
-rw------- 1 root root 1704 Jan 9 13:58 frontend-tls.key
```

Store the generated certificates as Kubernetes Secrets of type kubernetes.io/tls, so Envoy Gateway can use them and the backend Pods can mount them.
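One caveat before creating the Secrets: the certificates above only set the CN. Browsers and Envoy's SAN matching check the subjectAltName (SAN) extension rather than the CN. In addition, the BackendTLSPolicy in 3.5 verifies the backend against the system CA bundle, and that bundle can never contain a self-signed certificate. The sketch below regenerates the backend pair with a SAN and reproduces offline what Envoy will see: verification against the system store fails, while verification that uses the certificate itself as the trust anchor, plus a hostname check, succeeds. It assumes OpenSSL 1.1.1 or newer for `-addext`, and `make_san_cert` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: regenerate a self-signed certificate that carries a DNS SAN,
# then check it the way a verifying TLS client would. Needs OpenSSL >= 1.1.1.
set -euo pipefail

# Hypothetical helper: writes <name>.key and <name>.crt for the given host.
make_san_cert() {
  local name="$1" host="$2"
  openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
    -keyout "${name}.key" -out "${name}.crt" \
    -subj "/C=CN/ST=Beijing/L=Beijing/O=MyOrg/OU=MyTeam/CN=${host}" \
    -addext "subjectAltName=DNS:${host}"
}

make_san_cert backend-tls backend.myweb.com

# The SAN is now part of the certificate.
openssl x509 -in backend-tls.crt -noout -text | grep 'DNS:'

# Against the default (system) trust store only: fails for a self-signed
# certificate, just like wellKnownCACertificates: "System" in Envoy.
openssl verify backend-tls.crt || echo "not trusted by the system store (expected)"

# With the certificate itself as trust anchor plus a hostname check,
# which is what caCertificateRefs + hostname: backend.myweb.com amount to.
openssl verify -CAfile backend-tls.crt -verify_hostname backend.myweb.com backend-tls.crt
```

After regenerating, take three further steps. First, recreate the Secret with `kubectl create secret tls backend-myweb-tls-secret -n default --cert=backend-tls.crt --key=backend-tls.key --dry-run=client -o yaml | kubectl apply -f -` and restart the backend Pods so Nginx loads the new certificate. Second, store the certificate as a trust anchor with `kubectl create configmap backend-myweb-ca -n default --from-file=ca.crt=backend-tls.crt`; the ConfigMap name is hypothetical. Third, in the BackendTLSPolicy, replace `wellKnownCACertificates` with `caCertificateRefs` pointing at that ConfigMap (`group: ""`, `kind: ConfigMap`, `name: backend-myweb-ca`). `make_san_cert frontend-tls www.myweb.com` does the same for the frontend certificate.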

3.3 Create the Secrets

Create the frontend certificate Secret (in the `default` namespace):

```bash
kubectl create secret tls frontend-myweb-tls-secret \
  --namespace default \
  --cert=frontend-tls.crt \
  --key=frontend-tls.key
```

Create the backend certificate Secret (in the `default` namespace):

```bash
kubectl create secret tls backend-myweb-tls-secret \
  --namespace default \
  --cert=backend-tls.crt \
  --key=backend-tls.key
```

Verify the Secrets (it is enough that they exist):

```bash
[root@k8s-master ~/GatewayApi/CompleteE2E]# kubectl get secret -n default | grep myweb-tls-secret
backend-myweb-tls-secret    kubernetes.io/tls   2      28m
frontend-myweb-tls-secret   kubernetes.io/tls   2      28m
```

3.4 Create the HTTPS backend application

Create the backend Nginx HTTPS configuration as a ConfigMap (key point: enable SSL on port 443).

The custom Nginx configuration explicitly sets `listen 443 ssl;` (the switch that turns on backend HTTPS) and references the backend certificate.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-https-config
  namespace: default
data:
  # HTTPS configuration for the v1 backend
  myweb-v1-https.conf: |
    server {
      # Key point: listen on 443 with SSL (the backend HTTPS switch)
      listen 443 ssl;
      server_name backend.myweb.com;
      # Backend TLS certificate mounted from the Secret (a TLS Secret always yields tls.crt/tls.key)
      ssl_certificate /etc/nginx/tls/tls.crt;
      ssl_certificate_key /etc/nginx/tls/tls.key;
      # Return a v1 marker (used later to verify the weights)
      location / {
        default_type text/plain;
        return 200 "This is v1 HTTPS backend (80% traffic)\n";
      }
    }
  # HTTPS configuration for the v2 backend (only the response differs from v1)
  myweb-v2-https.conf: |
    server {
      listen 443 ssl;
      server_name backend.myweb.com;
      ssl_certificate /etc/nginx/tls/tls.crt;
      ssl_certificate_key /etc/nginx/tls/tls.key;
      # Return a v2 marker (used later to verify the weights)
      location / {
        default_type text/plain;
        return 200 "This is v2 HTTPS backend (20% traffic)\n";
      }
    }
```

```bash
[root@k8s-master ~/GatewayApi/CompleteE2E]# kubectl get configmap nginx-https-config -n default
NAME                 DATA   AGE
nginx-https-config   2      28m
```

Create the backend v1/v2 Deployments + Services (HTTPS, port 443)

Deploy the backend Pods (based on nginx:alpine), mount the ConfigMap (HTTPS configuration) and the Secret (backend certificate), and create Services that expose port 443.

```bash
[root@k8s-master ~/GatewayApi/CompleteE2E]# cat backend-https-deploy-svc.yaml
# v1 backend HTTPS Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: podv1-https
  labels:
    app: v1-https
spec:
  replicas: 1
  selector:
    matchLabels:
      app: v1-https
  template:
    metadata:
      labels:
        app: v1-https
    spec:
      containers:
      - name: myweb-v1-https
        image: nginx:alpine  # Lightweight Nginx image; configuration is mounted in
        resources:
          requests:
            cpu: 300m
            memory: 500Mi
          limits:
            cpu: 500m
            memory: 600Mi
        ports:
        - containerPort: 443  # The container listens on 443 (HTTPS)
        # Mounts: 1. v1 Nginx HTTPS config  2. backend TLS certificate
        volumeMounts:
        - name: nginx-https-v1-conf
          mountPath: /etc/nginx/conf.d/  # Nginx config directory (replaces the image's default.conf)
        - name: backend-tls-cert
          mountPath: /etc/nginx/tls/     # Backend certificate directory (read-only)
          readOnly: true
      # Volumes
      volumes:
      - name: nginx-https-v1-conf
        configMap:
          name: nginx-https-config
          items:  # Mount only the v1 config file
          - key: myweb-v1-https.conf
            path: myweb-v1-https.conf
      - name: backend-tls-cert
        secret:
          secretName: backend-myweb-tls-secret  # Backend certificate Secret
---
# v2 backend HTTPS Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: podv2-https
  labels:
    app: v2-https
spec:
  replicas: 1
  selector:
    matchLabels:
      app: v2-https
  template:
    metadata:
      labels:
        app: v2-https
    spec:
      containers:
      - name: myweb-v2-https
        image: nginx:alpine
        resources:
          requests:
            cpu: 300m
            memory: 500Mi
          limits:
            cpu: 500m
            memory: 600Mi
        ports:
        - containerPort: 443
        volumeMounts:
        - name: nginx-https-v2-conf
          mountPath: /etc/nginx/conf.d/
        - name: backend-tls-cert
          mountPath: /etc/nginx/tls/
          readOnly: true
      volumes:
      - name: nginx-https-v2-conf
        configMap:
          name: nginx-https-config
          items:  # Mount only the v2 config file
          - key: myweb-v2-https.conf
            path: myweb-v2-https.conf
      - name: backend-tls-cert
        secret:
          secretName: backend-myweb-tls-secret
---
# v1 backend HTTPS Service (ClusterIP, reachable only inside the cluster)
apiVersion: v1
kind: Service
metadata:
  name: svcv1-https
  labels:
    app: svcv1-https
spec:
  selector:
    app: v1-https    # Selects the v1 Pods
  ports:
  - port: 443        # Service port
    targetPort: 443  # Pod port 443 (HTTPS)
    protocol: TCP
  type: ClusterIP
---
# v2 backend HTTPS Service (ClusterIP, reachable only inside the cluster)
apiVersion: v1
kind: Service
metadata:
  name: svcv2-https
  labels:
    app: svcv2-https
spec:
  selector:
    app: v2-https  # Selects the v2 Pods
  ports:
  - port: 443
    targetPort: 443
    protocol: TCP
  type: ClusterIP
```

```bash
# 1. Check the Deployments
kubectl get deploy podv1-https podv2-https -n default
# 2. Check the Pods (READY 1/1, Running)
kubectl get pod -n default | grep -E "podv1-https|podv2-https"
# 3. Check the Services (ClusterIP assigned)
kubectl get svc svcv1-https svcv2-https -n default
```

Output:

```bash
NAME          READY   UP-TO-DATE   AVAILABLE   AGE
podv1-https   1/1     1            1           23m
podv2-https   1/1     1            1           23m

podv1-https-7d9d487cdf-vbdb5   1/1   Running   0     21m
podv2-https-7888fb78f-zr8bs    1/1   Running   0     21m

NAME          TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
svcv1-https   ClusterIP   10.111.155.187   <none>        443/TCP   23m
svcv2-https   ClusterIP   10.105.106.6     <none>        443/TCP   23m
```

3.5 Create the Gateway and HTTPRoute

```bash
[root@k8s-master ~/GatewayApi/CompleteE2E]# cat gateway-e2e-tls.yaml
# Gateway: HTTPS listener on 443, TLS terminated with the frontend certificate
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: mywebgty-e2e-tls
  namespace: default
spec:
  gatewayClassName: envoy
  listeners:
  - name: https-external
    port: 443
    protocol: HTTPS
    allowedRoutes:
      namespaces:
        from: Same
    tls:
      mode: Terminate
      certificateRefs:
      - name: frontend-myweb-tls-secret
        kind: Secret
        group: ""
---
# HTTPRoute: backendRefs have no tls field; only port 443 + weight.
# TLS towards the backend is configured by the BackendTLSPolicy below.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: myweb-weight-e2e-tls
  namespace: default
spec:
  parentRefs:
  - name: mywebgty-e2e-tls
  hostnames:
  - www.myweb.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /
    backendRefs:
    - name: svcv1-https
      port: 443
      weight: 80
    - name: svcv2-https
      port: 443
      weight: 20
---
# BackendTLSPolicy: how the Gateway opens TLS to the backend (SNI + CA used for verification)
# Note: a BackendTLSPolicy must be in the same namespace as the Services it targets
apiVersion: gateway.networking.k8s.io/v1
kind: BackendTLSPolicy
metadata:
  name: myweb-backend-tls
  namespace: default
spec:
  targetRefs:
  - kind: Service
    name: svcv1-https
    group: ""
  - kind: Service
    name: svcv2-https
    group: ""
  validation:
    # "System" = the system CA bundle, which cannot verify a self-signed backend certificate.
    # For self-signed/private-CA backends, use caCertificateRefs (a ConfigMap with key ca.crt) instead.
    wellKnownCACertificates: "System"
    hostname: backend.myweb.com  # Sent as SNI and checked against the backend certificate
```

3.6 Verification

bash

[root@k8s-master ~/GatewayApi/CompleteE2E]# kubectl get gateway,httproute,BackendTLSPolicy

NAME CLASS ADDRESS PROGRAMMED AGE

gateway.gateway.networking.k8s.io/mywebgty-e2e-tls envoy 10.0.0.150 True 13m

NAME HOSTNAMES AGE

httproute.gateway.networking.k8s.io/myweb-weight-e2e-tls ["www.myweb.com"] 13m

NAME AGE

backendtlspolicy.gateway.networking.k8s.io/myweb-backend-tls 13m

[root@k8s-master ~/GatewayApi/CompleteE2E]# kubectl get po,svc -n envoy-gateway-system

NAME READY STATUS RESTARTS AGE

pod/envoy-default-mywebgty-e2e-tls-5716b838-58c6976d47-wkp4g 2/2 Running 0 13m

pod/envoy-gateway-757bb4947-s5bvr 1/1 Running 2 (51m ago) 23h

pod/envoy-gateway-757bb4947-vnrdw 1/1 Running 1 (50m ago) 5h3m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/envoy-default-mywebgty-e2e-tls-5716b838 LoadBalancer 10.101.237.78 10.0.0.150 443:30764/TCP 13m

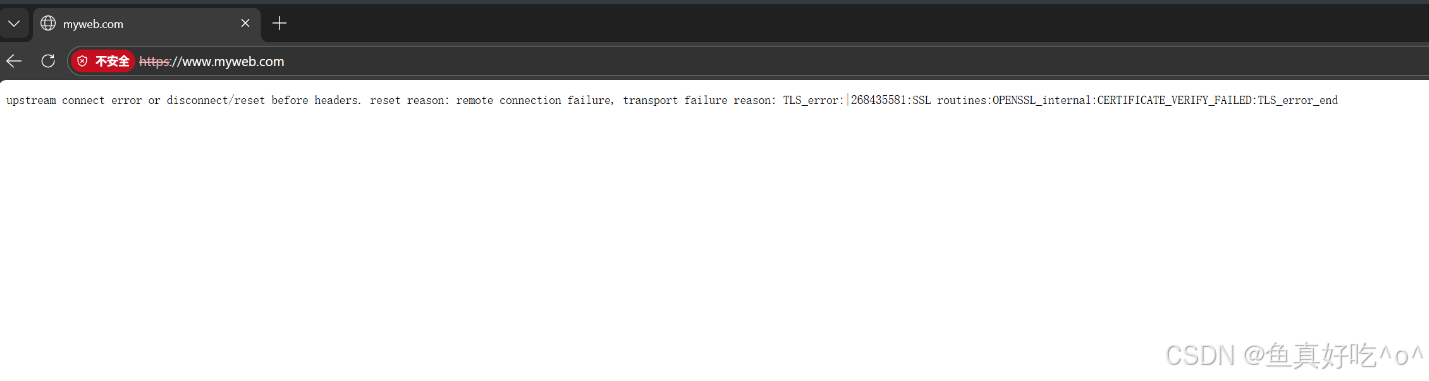

service/envoy-gateway ClusterIP 10.96.122.237 <none> 18000/TCP,18001/TCP,18002/TCP,19001/TCP,9443/TCP 23h浏览器内置了「可信 CA 列表」(比如阿里云 / Let's Encrypt 这类正规 CA 都在列表里),但你测试环境用的是自签证书 (不是正规 CA 签发的),不在浏览器的可信列表里,所以浏览器会判定 "证书不可信",并提示 "不安全",但流量转发的链路本身是通的。

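```bash
[root@k8s-master ~/GatewayApi/CompleteE2E]# kubectl -n envoy-gateway-system logs -f --tail=10 envoy-default-mywebgty-e2e-tls-5716b838-58c6976d47-wkp4g
Defaulted container "envoy" out of: envoy, shutdown-manager
{":authority":"www.myweb.com","bytes_received":0,"bytes_sent":216,"connection_termination_details":null,"downstream_local_address":"10.200.36.107:10443","downstream_remote_address":"10.0.0.1:62868","duration":1,"method":"GET","protocol":"HTTP/2","requested_server_name":null,"response_code":503,"response_code_details":"upstream_reset_before_response_started{remote_connection_failure|TLS_error:|268435581:SSL_routines:OPENSSL_internal:CERTIFICATE_VERIFY_FAILED:TLS_error_end}","response_flags":"UF","route_name":"httproute/default/myweb-weight-e2e-tls/rule/0/match/0/www_myweb_com","start_time":"2026-01-09T06:22:00.814Z","upstream_cluster":"httproute/default/myweb-weight-e2e-tls/rule/0","upstream_host":"10.200.36.105:443","upstream_local_address":null,"upstream_transport_failure_reason":"TLS_error:|268435581:SSL_routines:OPENSSL_internal:CERTIFICATE_VERIFY_FAILED:TLS_error_end","user-agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/143.0.0.0 Safari/537.36 Edg/143.0.0.0","x-envoy-origin-path":"/","x-envoy-upstream-service-time":null,"x-forwarded-for":"10.0.0.1","x-request-id":"d74349a4-7257-4574-861e-8cecec7712e8"}
```

This log line shows that the request did not reach the backend. Envoy returned 503 with response_flags UF (upstream connection failure), and upstream_transport_failure_reason is CERTIFICATE_VERIFY_FAILED. The cause is the BackendTLSPolicy. `wellKnownCACertificates: "System"` tells Envoy to verify the backend against the system CA bundle, and that bundle does not contain the self-signed backend.myweb.com certificate. For self-signed or private-CA backends, put the CA certificate in a ConfigMap (key ca.crt) and reference it through validation.caCertificateRefs instead. The backend certificate should also list backend.myweb.com as a subjectAltName, because Envoy's hostname check is based on SAN entries.

3.7 Notes for production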

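In production, Pod-to-Pod traffic inside the same cluster usually does not need its own HTTPS layer. NetworkPolicies can isolate Pods, and Calico's network encryption can protect in-cluster traffic. Both are easier to maintain and cheaper than hand-configured backend HTTPS.

We therefore simplify the backend Deployments + Services back to plaintext on port 80 and drop the test-only HTTPS configuration (no backend certificate, no Nginx SSL configuration).

The Envoy Gateway + HTTPRoute configuration then does three things: it references a properly issued Alibaba Cloud SSL certificate, it redirects port 80 to 443 (forced HTTPS), and it keeps the 80%:20% split. The configuration follows the stable Gateway API v1 specification and carries none of the test-only extras.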
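The Gateway below references a Secret named `aliyun-myweb-tls-secret`, whose creation is not shown. Before creating it, check that the downloaded certificate and key belong together and that the certificate covers www.myweb.com. A mismatched pair or a missing hostname otherwise only shows up as a failed TLS handshake. This is a minimal sketch: the helper names are hypothetical, and the file names used in the note after the code (`www.myweb.com.pem` / `www.myweb.com.key`, as in an Nginx-format download) are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: sanity checks for a certificate/key pair before it goes into a TLS Secret.

# Succeeds when the certificate's public key equals the one derived from the private key.
tls_pair_matches() {
  local crt="$1" key="$2" crt_pub key_pub
  crt_pub=$(openssl x509 -in "$crt" -noout -pubkey | openssl sha256)
  key_pub=$(openssl pkey -in "$key" -pubout | openssl sha256)
  [ "$crt_pub" = "$key_pub" ]
}

# Succeeds when the certificate is valid for the given hostname (OpenSSL -checkhost).
cert_covers_host() {
  local crt="$1" host="$2"
  openssl x509 -in "$crt" -noout -checkhost "$host" | grep -q 'does match'
}
```

For example: `tls_pair_matches www.myweb.com.pem www.myweb.com.key && cert_covers_host www.myweb.com.pem www.myweb.com && kubectl create secret tls aliyun-myweb-tls-secret -n default --cert=www.myweb.com.pem --key=www.myweb.com.key`. If the .pem file does not already include the intermediate certificates, append them so that Envoy serves the full chain.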

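```yaml
# Production Gateway: HTTPS on 443 + HTTP on 80 (redirected) + Alibaba Cloud certificate
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: mywebgty-prod-https
  namespace: default
spec:
  gatewayClassName: envoy
  listeners:
  # Port 80: only serves the redirect route below (forced HTTPS, required in production).
  # A listener has no redirect field; redirects are expressed as HTTPRoute filters.
  - name: http-redirect
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: Same
  # Port 443: TLS terminated at Envoy Gateway with the Alibaba Cloud certificate Secret.
  # HTTP/2 is negotiated via ALPN on HTTPS listeners by default (the 3.6 log shows "protocol":"HTTP/2").
  - name: https-main
    port: 443
    protocol: HTTPS
    allowedRoutes:
      namespaces:
        from: Same
    tls:
      mode: Terminate  # Terminate TLS at Envoy Gateway (the most common production setup)
      certificateRefs:
      - name: aliyun-myweb-tls-secret  # Secret holding the Alibaba Cloud certificate
        kind: Secret
        group: ""
---
# Redirect route: attached only to the port-80 listener; answers every request with 301 -> https
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: myweb-http-redirect
  namespace: default
spec:
  parentRefs:
  - name: mywebgty-prod-https
    sectionName: http-redirect  # Bind to the HTTP listener only
  hostnames:
  - www.myweb.com
  rules:
  - filters:
    - type: RequestRedirect
      requestRedirect:
        scheme: https
        statusCode: 301  # Permanent redirect, good for SEO and browser caching
---
# Production HTTPRoute: 80%:20% split to plaintext backend Services on port 80
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: myweb-weight-prod-https
  namespace: default
spec:
  parentRefs:
  - name: mywebgty-prod-https  # Production Gateway
    sectionName: https-main     # Bind to the HTTPS listener only, so port 80 keeps redirecting
  # Hostname covered by the Alibaba Cloud certificate (must match its CN/SAN)
  hostnames:
  - www.myweb.com
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /  # Match every path
    # Core: 80%:20% split to the plaintext Services on port 80
    backendRefs:
    - name: svcv1-prod
      port: 80
      weight: 80  # v1 gets 80% of traffic
    - name: svcv2-prod
      port: 80
      weight: 20  # v2 gets 20% of traffic
```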

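Redirecting 80 to 443: every HTTP request is turned into HTTPS, which avoids plaintext transmission and improves both security and user experience;

HTTP/2: compared with HTTP/1.1 it improves concurrency and transfer efficiency. Envoy Gateway negotiates it natively via ALPN on HTTPS listeners, so no extra listener option is required;

No BackendTLSPolicy: the backends speak plaintext inside the cluster, which simplifies configuration and lowers maintenance cost;

Trusted out of the box: the Alibaba Cloud certificate is issued by a trusted CA, so clients (browsers, curl) trust it directly, without the -k flag.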
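Once the production Gateway is up, the redirect can be checked from any machine that reaches it. The helper below is a hypothetical sketch that parses the headers printed by `curl -sI`. `GATEWAY_IP` stands for the address MetalLB assigns to mywebgty-prod-https, which this article does not show.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if the response headers on stdin are a 301
# redirect whose Location points at an https:// URL.
check_https_redirect() {
  awk '
    { sub(/\r$/, "") }                            # curl prints CRLF line endings
    NR == 1 { status = $2 }                       # e.g. "HTTP/1.1 301 Moved Permanently"
    tolower($1) == "location:" { location = $2 }
    END {
      if (status == "301" && location ~ /^https:\/\//) { print "ok: " location; exit 0 }
      print "unexpected: status=" status " location=" location
      exit 1
    }'
}
```

Usage: `curl -sI -H "Host: www.myweb.com" "http://${GATEWAY_IP}/" | check_https_redirect` should print a line starting with `ok: https://`. The HTTP/2 claim can be checked in a similar way with `curl -s -o /dev/null -w '%{http_version}\n' --resolve www.myweb.com:443:${GATEWAY_IP} https://www.myweb.com/`, which prints `2` when HTTP/2 was negotiated.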

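4. Circuit Breaking and Fault Isolation

- Core purpose: protect backend services from being crushed by excess traffic and prevent cascading failures (one failed service taking others down with it);
- In production: when concurrent connections/requests to a backend reach the configured thresholds (e.g. 2 concurrent), Envoy immediately rejects additional requests with 503. As soon as in-flight requests drop back below the thresholds, new requests are forwarded again, with no manual intervention.

Circuit breaking

Prevents cascades: a failing (slow or erroring) target service does not drag down its callers, and the failure does not spread along the call chain. For C→B→A, if A fails, circuit breaking keeps B up, and therefore C as well;

Protects resources: callers do not exhaust threads, CPU and other resources waiting on or retrying the failing service, so they keep serving normally.

This section configures circuit breaking with a BackendTrafficPolicy. Under concurrent slow requests, Envoy rejects the excess requests directly at the gateway (503), which keeps the backend service from being overwhelmed.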

4.1 Create the test workload

```bash
[root@k8s-master ~/GatewayApi/cb]# cat demo-cb.yaml
# 1. Namespace
apiVersion: v1
kind: Namespace
metadata:
  name: gateway-cb-demo
---
# 2. httpbin Deployment (backend that can produce delays/errors)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
  namespace: gateway-cb-demo
  labels:
    app: httpbin
spec:
  replicas: 3
  selector:
    matchLabels:
      app: httpbin
  template:
    metadata:
      labels:
        app: httpbin
    spec:
      containers:
      - name: httpbin
        # go-httpbin image (a Go port of httpbin; supports paths such as /delay/:n)
        image: docker.io/mccutchen/go-httpbin:v2.15.0
        ports:
        - containerPort: 8080
        # Adjust resource limits as needed
        resources: {}
---
# 3. Service exposing httpbin
apiVersion: v1
kind: Service
metadata:
  name: httpbin
  namespace: gateway-cb-demo
spec:
  selector:
    app: httpbin
  ports:
  - port: 80
    targetPort: 8080
    protocol: TCP
```

4.2 Create the Gateway and HTTPRoute

```yaml
# 4. Gateway (HTTP listener on 80)
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: demo-gateway
  namespace: gateway-cb-demo
spec:
  gatewayClassName: envoy
  listeners:
  - name: http
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: Same
---
# 5. HTTPRoute (routes the host to the httpbin Service)
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: httpbin-route
  namespace: gateway-cb-demo
spec:
  parentRefs:
  - name: demo-gateway
  hostnames:
  - "www.example.com"
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: "/"
    backendRefs:
    - name: httpbin
      port: 80
---
# 6. BackendTrafficPolicy (very low thresholds for the backend above, so the breaker trips easily)
# An Envoy Gateway extension CRD (API version v1alpha1) for backend traffic management; here it configures circuit breaking
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: httpbin-backend-policy  # Any name identifying this policy
  namespace: gateway-cb-demo    # Must be the namespace of the target HTTPRoute (httpbin-route), otherwise it cannot attach
spec:
  # Target: the resource this policy applies to (an HTTPRoute here)
  targetRefs:
  - group: gateway.networking.k8s.io  # API group of the target (HTTPRoute)
    kind: HTTPRoute                   # Target kind
    name: httpbin-route               # Target name
  # Circuit-breaker thresholds: deliberately tiny so the breaker trips quickly (demo/test only)
  circuitBreaker:
    maxConnections: 2       # At most 2 concurrent connections to the backend; extra requests must queue
    maxPendingRequests: 1   # At most 1 queued request; further requests are rejected (503)
    maxParallelRequests: 2  # At most 2 requests in flight to the backend; further requests are rejected (503)
```

```bash
[root@k8s-master ~/GatewayApi/cb]# kubectl -n gateway-cb-demo get po,svc,BackendTrafficPolicy,HTTPRoute,gateway
NAME                          READY   STATUS    RESTARTS   AGE
pod/httpbin-b8b86ff46-lpzdd   1/1     Running   0          37s
pod/httpbin-b8b86ff46-mthb4   1/1     Running   0          37s
pod/httpbin-b8b86ff46-xt9zm   1/1     Running   0          37s

NAME              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
service/httpbin   ClusterIP   10.96.159.130   <none>        80/TCP    37s

NAME                                                                AGE
backendtrafficpolicy.gateway.envoyproxy.io/httpbin-backend-policy   36s

NAME                                                HOSTNAMES             AGE
httproute.gateway.networking.k8s.io/httpbin-route   ["www.example.com"]   36s

NAME                                             CLASS   ADDRESS      PROGRAMMED   AGE
gateway.gateway.networking.k8s.io/demo-gateway   envoy   10.0.0.150   True         36s
[root@k8s-master ~/GatewayApi/cb]# kubectl get po,svc -n envoy-gateway-system
NAME                                                              READY   STATUS    RESTARTS      AGE
pod/envoy-gateway-757bb4947-s5bvr                                 1/1     Running   2 (81m ago)   23h
pod/envoy-gateway-757bb4947-vnrdw                                 1/1     Running   1 (80m ago)   5h33m
pod/envoy-gateway-cb-demo-demo-gateway-14f87157-5fcd5474cd-h4ftz  2/2     Running   0             41s

NAME                                                  TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                                            AGE
service/envoy-gateway                                 ClusterIP      10.96.122.237   <none>        18000/TCP,18001/TCP,18002/TCP,19001/TCP,9443/TCP   23h
service/envoy-gateway-cb-demo-demo-gateway-14f87157   LoadBalancer   10.109.39.213   10.0.0.150    80:32508/TCP                                       41s
```

```bash
[root@k8s-master ~/GatewayApi/cb]# kubectl -n gateway-cb-demo describe backendtrafficpolicy httpbin-backend-policy
Name:         httpbin-backend-policy
Namespace:    gateway-cb-demo
Labels:       <none>
Annotations:  <none>
API Version:  gateway.envoyproxy.io/v1alpha1
Kind:         BackendTrafficPolicy
Metadata:
  Creation Timestamp:  2026-01-09T07:02:04Z
  Generation:          1
  Resource Version:    97031
  UID:                 7008ee99-27ab-4f8b-bd92-dc07f21fc71a
Spec:
  Circuit Breaker:
    Max Connections:        2
    Max Parallel Requests:  2
    Max Parallel Retries:   1024
    Max Pending Requests:   1
  Target Refs:
    Group:  gateway.networking.k8s.io
    Kind:   HTTPRoute
    Name:   httpbin-route
Status:
  Ancestors:
    Ancestor Ref:
      Group:      gateway.networking.k8s.io
      Kind:       Gateway
      Name:       demo-gateway
      Namespace:  gateway-cb-demo
    Conditions:
      Last Transition Time:  2026-01-09T07:02:04Z
      Message:               Policy has been accepted.
      Observed Generation:   1
      Reason:                Accepted
      Status:                True
      Type:                  Accepted
    Controller Name:         gateway.envoyproxy.io/gatewayclass-controller
Events:                      <none>
```

```bash
[root@k8s-node1 ~]# curl -v -H "Host: www.example.com" http://10.0.0.150/ -I
* Trying 10.0.0.150:80...
* Connected to 10.0.0.150 (10.0.0.150) port 80
* using HTTP/1.x
> HEAD / HTTP/1.1
> Host: www.example.com
> User-Agent: curl/8.14.1
> Accept: */*
>
* Request completely sent off
< HTTP/1.1 200 OK
HTTP/1.1 200 OK
< access-control-allow-credentials: true
access-control-allow-credentials: true
< access-control-allow-origin: *
access-control-allow-origin: *
< content-security-policy: default-src 'self'; style-src 'self' 'unsafe-inline'; img-src 'self' camo.githubusercontent.com
content-security-policy: default-src 'self'; style-src 'self' 'unsafe-inline'; img-src 'self' camo.githubusercontent.com
< content-type: text/html; charset=utf-8
content-type: text/html; charset=utf-8
< date: Fri, 09 Jan 2026 07:10:03 GMT
date: Fri, 09 Jan 2026 07:10:03 GMT
< transfer-encoding: chunked
transfer-encoding: chunked
<
* Connection #0 to host 10.0.0.150 left intact
```

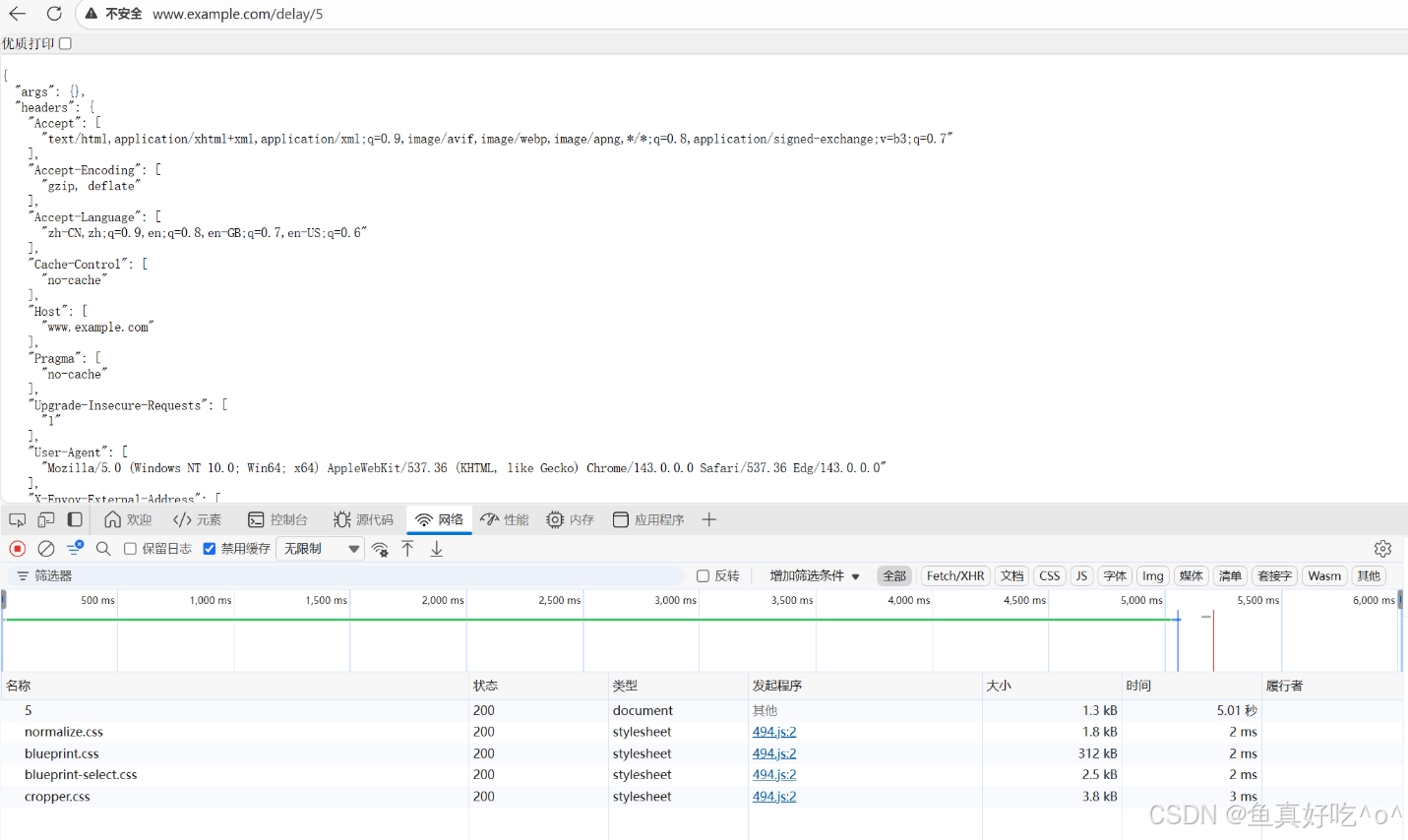

Trigger the circuit breaker with concurrent slow requests:

```bash
[root@k8s-node1 ~]# for i in {1..10}; do curl -s -o /dev/null -w "%{http_code}\n" -H "Host: www.example.com" http://10.0.0.150/delay/5 & done
[root@k8s-node1 ~]# 503
200
200
503
503
503
503
503
503
503
```

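How it works:

- Envoy Gateway translates the BackendTrafficPolicy into circuit-breaker thresholds on the corresponding Envoy cluster.
- Once concurrent connections or requests exceed those thresholds, Envoy stops sending requests to the backend and immediately returns HTTP 503. The circuit breaking happens at the gateway layer (L7), not in the backend Pods.
- The thresholds are enforced by each Envoy proxy instance. With several Envoy replicas, the effective limit across the whole gateway grows with the replica count.
- In this MetalLB L2 environment, the LoadBalancer IP was only reachable from the announcing node, so the test had to be run on that node.

In Envoy Gateway, BackendTrafficPolicy turns an abstract policy attached to Gateway API resources into Envoy's native cluster circuit breakers. This gives fail-fast behavior and backend protection at the gateway layer, and it is a foundational capability for enterprise-grade traffic management.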
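The burst test can also be scripted so that the shell waits for every background request and then tallies the status codes. In the loop above, results interleave with the prompt instead. Below is a minimal bash sketch. It assumes the same LoadBalancer IP, Host header and /delay/5 endpoint as the test above; `burst` and `tally_codes` are hypothetical helper names, not part of any tool.

```bash
#!/usr/bin/env bash
# Sketch: send N concurrent slow requests through the gateway and tally the status codes.
# Assumption: IP, Host header and /delay/5 path are the ones used in the test above.

# burst N URL HOST: fire N concurrent requests, print one HTTP status code per line
burst() {
  local n="$1" url="$2" host="$3" i
  for ((i = 0; i < n; i++)); do
    curl -s -o /dev/null -w '%{http_code}\n' -H "Host: ${host}" "${url}" &
  done
  wait  # do not return until every background curl has finished
}

# tally_codes: read status codes on stdin, print "<code> <count>" ordered by code
tally_codes() {
  sort | uniq -c | awk '{ print $2, $1 }'
}

# Usage (on a node that can reach the LoadBalancer IP):
#   burst 10 http://10.0.0.150/delay/5 www.example.com | tally_codes
```

With the tiny thresholds configured above, a burst of 10 should mostly yield 503s and only a couple of 200s, as in the run shown earlier. The exact split depends on timing.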

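4.3 Fault Isolation Example

Fault isolation automatically takes unhealthy backend instances (Pods) out of rotation, so that traffic is not forwarded to failing endpoints. It relies mainly on health checks combined with routing failover.

Upstream Gateway API v1 does not define a `BackendHealthPolicy` resource. With Envoy Gateway, active health checks are configured through the `healthCheck` field of its BackendTrafficPolicy extension. That policy attaches to a Gateway or route rather than directly to a Service.

Implementation steps (configure backend health checks to isolate failing Pods)

Create a BackendTrafficPolicy with the health-check rules:

```yaml
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: myweb-backend-health
  namespace: default
spec:
  targetRefs:
    # Assumption: "myweb-route" is a placeholder for the HTTPRoute that sends traffic
    # to svcv1-https and svcv2-https; replace it with your actual route name.
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: myweb-route
  healthCheck:
    active:
      type: HTTP
      http:
        path: /health          # health endpoint exposed by the backend Pods
        expectedStatuses:
          - 200
      interval: 5s             # probe every 5 seconds
      timeout: 2s              # a probe slower than 2 s counts as a failure
      unhealthyThreshold: 3    # eject an endpoint after 3 consecutive failures
      healthyThreshold: 2      # re-admit it after 2 consecutive successes
```

By default Envoy probes each endpoint at the same address and port it sends traffic to. The health path must therefore be served on the port the Service targets.

In one sentence: with this policy, Envoy Gateway uses active health checks to remove unhealthy backend endpoints from the load-balancing pool and transparently add them back once they recover. The result is automatic fault isolation and self-healing at L7.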
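The `unhealthyThreshold` / `healthyThreshold` pair in the health-check configuration behaves like a small state machine over consecutive probe results. The bash sketch below models only that counting rule and assumes an endpoint starts out healthy. It is an illustration, not Envoy's implementation, and `hc_states` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch of threshold-based health state transitions (illustrative, not Envoy's code).
# Assumption: the endpoint starts in the healthy state.

# hc_states UNHEALTHY_THRESHOLD HEALTHY_THRESHOLD RESULT...
# Each RESULT is "ok" or "fail"; prints the endpoint state after every probe.
hc_states() {
  local unhealthy_th="$1" healthy_th="$2"
  shift 2
  local state="healthy" fails=0 oks=0 r
  for r in "$@"; do
    if [[ "$r" == "ok" ]]; then
      fails=0
      oks=$((oks + 1))
      # an ejected endpoint needs healthy_th consecutive successes to come back
      if [[ "$state" == "unhealthy" && "$oks" -ge "$healthy_th" ]]; then
        state="healthy"
      fi
    else
      oks=0
      fails=$((fails + 1))
      # a serving endpoint is ejected after unhealthy_th consecutive failures
      if [[ "$state" == "healthy" && "$fails" -ge "$unhealthy_th" ]]; then
        state="unhealthy"
      fi
    fi
    echo "$state"
  done
}
```

For example, `hc_states 3 2 ok fail fail fail ok ok` prints the state after each probe. The endpoint is ejected on the third consecutive failure and returns after two consecutive successes. Alternating results such as `fail ok fail ok` never eject it. With `interval: 5s`, three consecutive failures take roughly 10 to 15 seconds to accumulate, which bounds how quickly a failing Pod is isolated.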

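5. Traffic Mirroring

- Purpose: test new features or versions against real traffic without users noticing, validate their behavior and reduce release risk;
- In production: primary traffic is forwarded to the production backend as usual (e.g. svcv1-https), while a copy of all or part of the traffic is sent to a test backend. The gateway discards the mirror's responses, so users notice nothing, and the test environment still receives real request data (parameters, response times) for verifying the new version's compatibility.

5.1 Create the Sample Workloads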

```bash
[root@k8s-master ~/GatewayApi/mirrors]# cat mirror-demo.yaml
# Namespace
apiVersion: v1
kind: Namespace
metadata:
  name: gateway-mirror-demo
---
# Primary Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin-primary
  namespace: gateway-mirror-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: httpbin-primary
  template:
    metadata:
      labels:
        app: httpbin-primary
    spec:
      containers:
        - name: httpbin
          image: mccutchen/go-httpbin:v2.15.0
          ports:
            - containerPort: 8080
---
# Primary Service
apiVersion: v1
kind: Service
metadata:
  name: httpbin-primary
  namespace: gateway-mirror-demo
spec:
  selector:
    app: httpbin-primary
  ports:
    - port: 80
      targetPort: 8080
---
# Mirror Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin-mirror
  namespace: gateway-mirror-demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: httpbin-mirror
  template:
    metadata:
      labels:
        app: httpbin-mirror
    spec:
      containers:
        - name: httpbin
          image: mccutchen/go-httpbin:v2.15.0
          ports:
            - containerPort: 8080
---
# Mirror Service
apiVersion: v1
kind: Service
metadata:
  name: httpbin-mirror
  namespace: gateway-mirror-demo
spec:
  selector:
    app: httpbin-mirror
  ports:
    - port: 80
      targetPort: 8080
```

5.2 Create the Gateway and HTTPRoute

```yaml
# Gateway
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: demo-gateway
  namespace: gateway-mirror-demo
spec:
  gatewayClassName: envoy
  listeners:
    - name: http
      protocol: HTTP
      port: 80
      allowedRoutes:
        namespaces:
          from: Same
---
# HTTPRoute with Traffic Mirror
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: mirror-route
  namespace: gateway-mirror-demo
spec:
  parentRefs:
    - name: demo-gateway
  hostnames:
    - "www.example.com"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: httpbin-primary
          port: 80
      filters:
        - type: RequestMirror
          requestMirror:
            backendRef:
              name: httpbin-mirror
              port: 80
```

5.3 Verification

```bash
[root@k8s-master ~/GatewayApi/mirrors]# kubectl -n gateway-mirror-demo get deploy,svc,gateway,httproute -o wide
NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR
deployment.apps/httpbin-mirror 1/1 1 1 28s httpbin mccutchen/go-httpbin:v2.15.0 app=httpbin-mirror
deployment.apps/httpbin-primary 2/2 2 2 28s httpbin mccutchen/go-httpbin:v2.15.0 app=httpbin-primary

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
service/httpbin-mirror ClusterIP 10.97.1.72 <none> 80/TCP 28s app=httpbin-mirror
service/httpbin-primary ClusterIP 10.106.106.231 <none> 80/TCP 28s app=httpbin-primary

NAME CLASS ADDRESS PROGRAMMED AGE
gateway.gateway.networking.k8s.io/demo-gateway envoy 10.0.0.150 True 28s

NAME HOSTNAMES AGE
httproute.gateway.networking.k8s.io/mirror-route ["www.example.com"] 28s
[root@k8s-master ~/GatewayApi/mirrors]# kubectl get po,svc -n envoy-gateway-system
NAME READY STATUS RESTARTS AGE
pod/envoy-gateway-757bb4947-s5bvr 1/1 Running 2 (99m ago) 23h
pod/envoy-gateway-757bb4947-vnrdw 1/1 Running 1 (99m ago) 5h51m
pod/envoy-gateway-mirror-demo-demo-gateway-3aaef86d-8459958875lz5dp 2/2 Running 0 34s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/envoy-gateway ClusterIP 10.96.122.237 <none> 18000/TCP,18001/TCP,18002/TCP,19001/TCP,9443/TCP 23h
service/envoy-gateway-mirror-demo-demo-gateway-3aaef86d LoadBalancer 10.101.128.175 10.0.0.150 80:30600/TCP 34s
```

Send test requests:

```bash
[root@k8s-node1 ~]# while true; do curl -v -H "Host: www.example.com" http://10.0.0.150/get; sleep 2; done
```

Check the primary and mirror logs:

```bash
[root@k8s-master ~/GatewayApi/mirrors]# kubectl -n gateway-mirror-demo logs -f pod/httpbin-primary-5db4444b6d-bktcz
time=2026-01-09T07:20:33.024Z level=INFO msg="go-httpbin listening on http://0.0.0.0:8080"
time=2026-01-09T07:22:30.966Z level=INFO msg="200 GET /get 0.2ms" status=200 method=GET uri=/get size_bytes=479 duration_ms=0.211166 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:22:41.809Z level=INFO msg="200 GET /get 0.3ms" status=200 method=GET uri=/get size_bytes=479 duration_ms=0.32533300000000004 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:23:14.251Z level=INFO msg="200 GET /get 0.0ms" status=200 method=GET uri=/get size_bytes=479 duration_ms=0.046897999999999995 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:23:20.305Z level=INFO msg="200 GET /get 0.1ms" status=200 method=GET uri=/get size_bytes=479 duration_ms=0.050535000000000004 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:23:22.321Z level=INFO msg="200 GET /get 0.0ms" status=200 method=GET uri=/get size_bytes=479 duration_ms=0.030468000000000002 user_agent=curl/8.14.1 client_ip=10.0.0.150
```

```bash
[root@k8s-master ~]# kubectl -n gateway-mirror-demo logs -f pod/httpbin-mirror-659b9695d9-wtxwr
time=2026-01-09T07:20:33.065Z level=INFO msg="go-httpbin listening on http://0.0.0.0:8080"
time=2026-01-09T07:22:30.966Z level=INFO msg="200 GET /get 0.3ms" status=200 method=GET uri=/get size_bytes=539 duration_ms=0.26111 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:22:39.190Z level=INFO msg="200 GET /get 0.1ms" status=200 method=GET uri=/get size_bytes=539 duration_ms=0.080862 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:22:41.185Z level=INFO msg="200 GET /get 0.2ms" status=200 method=GET uri=/get size_bytes=539 duration_ms=0.246092 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:22:41.808Z level=INFO msg="200 GET /get 0.0ms" status=200 method=GET uri=/get size_bytes=539 duration_ms=0.028673 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:23:12.232Z level=INFO msg="200 GET /get 0.0ms" status=200 method=GET uri=/get size_bytes=539 duration_ms=0.043802 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:23:14.252Z level=INFO msg="200 GET /get 1.0ms" status=200 method=GET uri=/get size_bytes=539 duration_ms=0.9694159999999999 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:23:16.269Z level=INFO msg="200 GET /get 0.0ms" status=200 method=GET uri=/get size_bytes=539 duration_ms=0.030237 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:23:18.287Z level=INFO msg="200 GET /get 0.0ms" status=200 method=GET uri=/get size_bytes=539 duration_ms=0.037601 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:23:20.305Z level=INFO msg="200 GET /get 0.1ms" status=200 method=GET uri=/get size_bytes=539 duration_ms=0.051086 user_agent=curl/8.14.1 client_ip=10.0.0.150
time=2026-01-09T07:23:22.321Z level=INFO msg="200 GET /get 0.0ms" status=200 method=GET uri=/get size_bytes=539 duration_ms=0.0211 user_agent=curl/8.14.1 client_ip=10.0.0.150
```

Both the primary and the mirror backend log the requests, so mirroring works. For example, the request at 07:22:30.966 appears in both logs. The mirror Pod shows more entries than the primary Pod for a simple reason. The primary Deployment has two replicas that share the traffic, while the single mirror replica receives a copy of every request.
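A fairer check than comparing one primary Pod with the mirror Pod is to count requests across all Pods of each Deployment. Below is a minimal bash sketch. It assumes the namespace and `app` labels from mirror-demo.yaml and relies on go-httpbin's `uri=<path>` log field. Note that `kubectl logs` with a label selector shows only the last 10 lines per container by default, hence `--tail=-1`. `count_requests` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: count how many requests for a given path the primary and mirror backends logged.
# Assumptions: namespace/labels from mirror-demo.yaml; go-httpbin access logs contain "uri=<path> ".

# count_requests PATH: count access-log lines on stdin whose uri field equals PATH
count_requests() {
  local path="$1"
  grep -c -- "uri=${path} " || true  # grep -c prints 0 but exits 1 when nothing matches
}

# Usage against the cluster:
#   kubectl -n gateway-mirror-demo logs -l app=httpbin-primary --tail=-1 | count_requests /get
#   kubectl -n gateway-mirror-demo logs -l app=httpbin-mirror  --tail=-1 | count_requests /get
```

If every request is mirrored, the two totals over the same time window should be roughly equal. The gateway discards the mirror's responses, so its logs are the main place to observe mirrored traffic.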

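6. Fault Injection

- Purpose: deliberately simulate real failures to verify the system's fault tolerance ahead of time, instead of scrambling when an outage actually happens;
- In production: configure simulated failures such as 503 errors or a 3-second delay, and observe whether fault isolation (health checks) and circuit breaking kick in. Reserve it for testing or chaos drills, scoped so that normal business traffic is not affected, and disable it quickly once the test is over.

Envoy Gateway's fault injection capability can add delays or error responses to the traffic of an HTTPRoute without modifying the backend service. This makes it possible to verify that client timeouts, retries, circuit breaking and fault isolation actually work:

- no changes to backend code;
- faults are injected by the gateway;
- clients genuinely experience the failures or delays.

| Resource | Purpose |
|---|---|
| Namespace | Isolates the experiment |
| Deployment (httpbin) | Backend service |
| Service | Exposes the backend |
| Gateway | Entry point |
| HTTPRoute | Routing |
| BackendTrafficPolicy (Envoy Gateway extension) | Fault injection |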

6.1 Create the Backend Service

```yaml
# backend.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: gateway-fault-demo
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin
  namespace: gateway-fault-demo
  labels:
    app: httpbin
spec:
  replicas: 2
  selector:
    matchLabels:
      app: httpbin
  template:
    metadata:
      labels:
        app: httpbin
    spec:
      containers:
        - name: httpbin
          image: docker.io/mccutchen/go-httpbin:v2.15.0
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
  namespace: gateway-fault-demo
spec:
  selector:
    app: httpbin
  ports:
    - port: 80
      targetPort: 8080
      protocol: TCP
  type: ClusterIP
```

- Namespace: the Gateway and HTTPRoute created next live in the same namespace (gateway-fault-demo). For the HTTPRoute this is required, because the listener uses `allowedRoutes.namespaces.from: Same`. Keeping the Service there too lets the route reference it without a ReferenceGrant.
- Deployment: `containerPort` matches `Service.targetPort` (8080), and the Service exposes port 80 (HTTP).
- Image: `mccutchen/go-httpbin` provides test endpoints such as `/delay/:n` and `/get`.

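6.2 Gateway + HTTPRoute + BackendTrafficPolicy

```yaml
# gateway-fault.yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: fault-gateway
  namespace: gateway-fault-demo
spec:
  gatewayClassName: envoy
  listeners:
    - name: http
      protocol: HTTP
      port: 80
      allowedRoutes:
        namespaces:
          from: Same
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: httpbin-fault-delay
  namespace: gateway-fault-demo
spec:
  parentRefs:
    - name: fault-gateway
  hostnames:
    - "fault.example.com"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: httpbin
          port: 80
---
# Fault injection is an Envoy Gateway extension (BackendTrafficPolicy), not a standard HTTPRoute filter
apiVersion: gateway.envoyproxy.io/v1alpha1
kind: BackendTrafficPolicy
metadata:
  name: httpbin-fault-delay      # policy name (chosen here to match the route)
  namespace: gateway-fault-demo  # must be the namespace of the target HTTPRoute
spec:
  targetRefs:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: httpbin-fault-delay
  faultInjection:
    delay:
      percentage: 50   # delay about 50% of requests
      fixedDelay: 5s   # by 5 seconds each
```

- The Gateway is in the same namespace, gateway-fault-demo, with an HTTP listener on port 80. `allowedRoutes.namespaces.from: Same` works together with the HTTPRoute in that namespace.
- The HTTPRoute filter types defined by Gateway API (such as RequestHeaderModifier, ResponseHeaderModifier, RequestMirror, RequestRedirect, URLRewrite and ExtensionRef) do not include `FaultInjection`. An HTTPRoute with `type: FaultInjection` is therefore expected to be rejected by the CRD validation. Envoy Gateway exposes fault injection through the `faultInjection` field of its BackendTrafficPolicy instead. Once attached to the HTTPRoute, the policy is translated into Envoy's HTTP fault filter for that route.
- The shorthand `backendRefs` form (name + port) works within the same namespace and points at the `httpbin` Service.

If you would rather inject errors (abort) than delays, replace the `faultInjection` section of the BackendTrafficPolicy with:

```yaml
  faultInjection:
    abort:
      percentage: 30   # abort roughly 30% of requests
      httpStatus: 503  # respond with HTTP 503
```

6.3 Testing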

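Option A: if the Gateway has been assigned a LoadBalancer IP (MetalLB), run the tests on the announcing node against that IP (10.0.0.150 in this example).

Option B: if there is no LB, or the LB IP is not reachable from the current node, use `kubectl -n envoy-gateway-system port-forward svc/<envoy-service> 8080:80` for local testing. Then send the requests to http://127.0.0.1:8080 instead.

Single request (sanity check):

```bash
curl -v -H "Host: fault.example.com" http://10.0.0.150/get
```

→ 200. Because the delay applies to 50% of requests, any single request may return immediately or only after about 5 s.

Measure the delay distribution in bulk (50% of requests × 5 s delay):

```bash
for i in {1..20}; do
  curl -s -o /dev/null -w "%{time_total}\n" -H "Host: fault.example.com" http://10.0.0.150/get &
done
wait
```

→ Roughly half the requests show time_total ≈ 5 s, while the rest complete at normal (millisecond-level) latency.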
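You can also bucket the `time_total` values against a cutoff instead of reading 20 raw numbers. Below is a minimal bash sketch. The 4-second cutoff (just under the 5 s `fixedDelay`) and the helper name `split_latencies` are assumptions for illustration.

```bash
#!/usr/bin/env bash
# Sketch: bucket curl time_total values (seconds, one per line on stdin) into fast vs delayed.
# Assumption: anything at or above CUTOFF seconds is treated as a fault-injected delay.

# split_latencies [CUTOFF]: print "fast=<n> delayed=<m>" (CUTOFF defaults to 4)
split_latencies() {
  local cutoff="${1:-4}"
  awk -v c="$cutoff" '
    NF == 0 { next }                              # ignore empty lines
    { if ($1 + 0 >= c + 0) slow++; else fast++ }  # numeric comparison against the cutoff
    END { printf "fast=%d delayed=%d\n", fast, slow }
  '
}

# Usage:
#   { for i in {1..20}; do
#       curl -s -o /dev/null -w "%{time_total}\n" -H "Host: fault.example.com" http://10.0.0.150/get &
#     done; wait; } | split_latencies 4
```

With a 50% delay, the two counts should come out close to each other, though a sample of 20 will rarely split exactly 10/10.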

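7. TCPRoute

In production, the core role of TCPRoute is to bring entry-point forwarding for non-HTTP traffic into the Gateway model in a standardized way that can be governed and evolved.

Goal of this demo: attach a TCPRoute to a TCP listener (port 10086) on a Gateway and forward the connections to two backend Services. Then verify that TCP traffic reaches the backends and is echoed back, and check the TCP routing in Envoy's access log.

7.1 Create All Resource Manifests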

```bash
[root@k8s-master ~/GatewayApi/TCPRoute]# cat tcproute-demo.yaml
# tcproute-demo.yaml
# 1) Namespace + TCP echo backends (using the istio tcp-echo-server image)
apiVersion: v1
kind: Namespace
metadata:
  name: gateway-tcp-demo
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tcp-echo-1
  namespace: gateway-tcp-demo
  labels:
    app: tcp-echo-1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tcp-echo-1
  template:
    metadata:
      labels:
        app: tcp-echo-1
    spec:
      containers:
        - name: tcp-echo
          image: docker.io/istio/tcp-echo-server:1.3
          args: ["9000", "hello"]  # listen on port 9000, prefix replies with "hello"
          ports:
            - containerPort: 9000
---
apiVersion: v1
kind: Service
metadata:
  name: tcp-echo-1
  namespace: gateway-tcp-demo
spec:
  selector:
    app: tcp-echo-1
  ports:
    - port: 9000
      targetPort: 9000
      protocol: TCP
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tcp-echo-2
  namespace: gateway-tcp-demo
  labels:
    app: tcp-echo-2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tcp-echo-2
  template:
    metadata:
      labels:
        app: tcp-echo-2
    spec:
      containers:
        - name: tcp-echo
          image: docker.io/istio/tcp-echo-server:1.3
          args: ["9001", "world"]  # listen on port 9001, prefix replies with "world"
          ports:
            - containerPort: 9001  # informational, but should match the port the server listens on
---
apiVersion: v1
kind: Service
metadata:
  name: tcp-echo-2
  namespace: gateway-tcp-demo
spec:
  selector:
    app: tcp-echo-2
  ports:
    - port: 9001
      targetPort: 9001
      protocol: TCP
  type: ClusterIP
---
# 2) Gateway (TCP listener)
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: tcp-gateway
  namespace: gateway-tcp-demo
spec:
  gatewayClassName: envoy
  listeners:
    - name: echo-a
      protocol: TCP
      port: 10086
      allowedRoutes:
        kinds:
          - kind: TCPRoute
---
# 3) TCPRoute attached to the echo-a listener; connections are spread across both backendRefs
# Note: TCPRoute is experimental in Gateway API. Its CRD ships in the experimental channel
# (experimental-install.yaml), not in standard-install.yaml; the API version follows the spec's experimental examples.
apiVersion: gateway.networking.k8s.io/v1alpha2
kind: TCPRoute
metadata:
  name: tcp-echo-a
  namespace: gateway-tcp-demo
spec:
  parentRefs:
    - name: tcp-gateway
      sectionName: echo-a
  rules:
    - backendRefs:
        - name: tcp-echo-1
          port: 9000
        - name: tcp-echo-2
          port: 9001
```

7.2 Check Resource Status

```bash
[root@k8s-master ~/GatewayApi/TCPRoute]# kubectl get po,svc -n envoy-gateway-system
NAME READY STATUS RESTARTS AGE
pod/envoy-gateway-757bb4947-s5bvr 1/1 Running 3 (77m ago) 3d18h
pod/envoy-gateway-757bb4947-vnrdw 1/1 Running 2 (77m ago) 3d
pod/envoy-gateway-mirror-demo-demo-gateway-3aaef86d-8459958875lz5dp 2/2 Running 2 (77m ago) 2d18h
pod/envoy-gateway-tcp-demo-tcp-gateway-ed645b8b-7f8fdf785f-h62nd 2/2 Running 0 26m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/envoy-gateway ClusterIP 10.96.122.237 <none> 18000/TCP,18001/TCP,18002/TCP,19001/TCP,9443/TCP 3d18h
service/envoy-gateway-mirror-demo-demo-gateway-3aaef86d LoadBalancer 10.101.128.175 10.0.0.150 80:30600/TCP 2d18h
service/envoy-gateway-tcp-demo-tcp-gateway-ed645b8b LoadBalancer 10.106.60.191 10.0.0.151 10086:32649/TCP 26m
[root@k8s-master ~/GatewayApi/TCPRoute]# kubectl get po,svc,gateway,tcproutes.gateway.networking.k8s.io -n gateway-tcp-demo
NAME READY STATUS RESTARTS AGE
pod/tcp-echo-1-7f4889b658-69pct 1/1 Running 0 26m
pod/tcp-echo-2-7cb4b9d48-nzz85 1/1 Running 0 26m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/tcp-echo-1 ClusterIP 10.111.88.73 <none> 9000/TCP 26m
service/tcp-echo-2 ClusterIP 10.96.5.188 <none> 9001/TCP 26m

NAME CLASS ADDRESS PROGRAMMED AGE
gateway.gateway.networking.k8s.io/tcp-gateway envoy 10.0.0.151 True 26m

NAME AGE
tcproute.gateway.networking.k8s.io/tcp-echo-a 26m
```

7.3 Verification

```bash
[root@k8s-master ~/GatewayApi/TCPRoute]# kubectl -n envoy-gateway-system get svc envoy-gateway-tcp-demo-tcp-gateway-ed645b8b -o yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    metallb.io/ip-allocated-from-pool: metalb
  creationTimestamp: "2026-01-12T01:28:29Z"
....
spec:
  allocateLoadBalancerNodePorts: true
  clusterIP: 10.106.60.191
  clusterIPs:
  - 10.106.60.191
  externalTrafficPolicy: Local   ## note: Local
```

`externalTrafficPolicy` is `Local` here. When a LoadBalancer Service uses `externalTrafficPolicy: Local`, only nodes that host a backend Pod of that Service actually accept and handle traffic sent to the external IP. In this MetalLB (L2) + Envoy Gateway setup, only the node running the Envoy Pod (node2) can connect successfully.
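The single TCPRoute lists two backendRefs, so successive connections to port 10086 should land on either tcp-echo-1 (reply prefix `hello`) or tcp-echo-2 (reply prefix `world`). The bash sketch below sends one line per connection over bash's built-in `/dev/tcp` and reports which prefix answered. It assumes the echo server replies with `<prefix> <line>`, as in the nc session in this section. `tcp_echo_once` and `backend_of` are hypothetical helpers. Run it on a node that can reach 10.0.0.151 (node2 here).

```bash
#!/usr/bin/env bash
# Sketch: probe a TCP listener several times and report which echo backend answered.
# Assumptions: host/port from the demo above; backends prefix replies with "hello" or "world".

# backend_of REPLY: map an echo reply to the backend that produced it
backend_of() {
  case "${1%% *}" in  # first word of the reply is the backend's prefix
    hello) echo "tcp-echo-1" ;;
    world) echo "tcp-echo-2" ;;
    *)     echo "unknown" ;;
  esac
}

# tcp_echo_once HOST PORT: open a connection, send one line, print the echoed line
tcp_echo_once() {
  local host="$1" port="$2" reply=""
  exec 3<>"/dev/tcp/${host}/${port}" || return 1
  printf 'ping\n' >&3
  read -r -t 3 reply <&3  # wait up to 3 s for the echoed line
  exec 3>&-               # close the connection
  printf '%s\n' "$reply"
}

# Usage:
#   for i in {1..6}; do backend_of "$(tcp_echo_once 10.0.0.151 10086)"; done | sort | uniq -c
```

Each call opens a new TCP connection. The count per backend therefore reflects how Envoy spreads connections, not individual messages, across the two backendRefs.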

```bash
[root@k8s-node2 ~]# telnet 10.0.0.151 10086
Trying 10.0.0.151...
Connected to 10.0.0.151.
Escape character is '^]'.
telnet> quit
Connection closed.
[root@k8s-node2 ~]# nc 10.0.0.151 10086
hello
hello hello
hello
hello hello
```

Check the Envoy logs:

```bash
[root@k8s-master ~/GatewayApi/TCPRoute]# kubectl -n envoy-gateway-system logs -f envoy-gateway-tcp-demo-tcp-gateway-ed645b8b-7f8fdf785f-h62nd
Defaulted container "envoy" out of: envoy, shutdown-manager
{":authority":null,"bytes_received":0,"bytes_sent":0,"connection_termination_details":null,"downstream_local_address":"10.200.169.143:10086","downstream_remote_address":"10.0.0.151:48318","duration":6628,"method":null,"protocol":null,"requested_server_name":null,"response_code":0,"response_code_details":null,"response_flags":"-","route_name":null,"start_time":"2026-01-12T01:34:14.292Z","upstream_cluster":"tcproute/gateway-tcp-demo/tcp-echo-a/rule/-1","upstream_host":"10.200.36.71:9001","upstream_local_address":"10.200.169.143:54814","upstream_transport_failure_reason":null,"user-agent":null,"x-envoy-origin-path":null,"x-envoy-upstream-service-time":null,"x-forwarded-for":null,"x-request-id":null}
{":authority":null,"bytes_received":6,"bytes_sent":12,"connection_termination_details":null,"downstream_local_address":"10.200.169.143:10086","downstream_remote_address":"10.0.0.151:45542","duration":9876,"method":null,"protocol":null,"requested_server_name":null,"response_code":0,"response_code_details":null,"response_flags":"-","route_name":null,"start_time":"2026-01-12T01:34:30.948Z","upstream_cluster":"tcproute/gateway-tcp-demo/tcp-echo-a/rule/-1","upstream_host":"10.200.36.75:9000","upstream_local_address":"10.200.169.143:48598","upstream_transport_failure_reason":null,"user-agent":null,"x-envoy-origin-path":null,"x-envoy-upstream-service-time":null,"x-forwarded-for":null,"x-request-id":null}
```

Both entries belong to the same upstream cluster (`tcproute/gateway-tcp-demo/tcp-echo-a/rule/-1`) but went to different upstream hosts: port 9001 (tcp-echo-2) and port 9000 (tcp-echo-1). This confirms that connections on the single listener are spread across both backendRefs.

IV. Summary

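This article centered on standardized traffic management with the K8s Gateway-API. Moving from theory to practice and from basics to advanced features, it built a complete traffic-management setup on Gateway-API + EnvoyGateway.

Walking through Gateway-API's definition, core resources and deployment clarified its core value as Kubernetes' official next-generation traffic-management standard. Being role-oriented, standardized and extensible, it removes the annotation fragmentation and vendor lock-in of traditional Ingress. EnvoyGateway is a native Gateway-API implementation: it needs no annotations, fits cleanly into the ecosystem and inherits Envoy's capabilities directly. Together, these substantially lower the barrier to adopting standardized traffic management.

On the practical side, we progressed step by step:

- Basic capabilities: standardized ingress for HTTP/HTTPS traffic. Through the layered cooperation of GatewayClass, Gateway and HTTPRoute, the entry-point definition, routing rules and TLS encryption are all configured consistently. Production can directly reuse certificates issued through providers such as Alibaba Cloud to meet security and compliance requirements;
- Core business support: the native `weight` field of HTTPRoute provides the weighted traffic split needed for canary releases, with no third-party plugins and a concise, portable configuration;
- Advanced governance: combining EnvoyGateway extension resources with standard Gateway-API resources, we implemented production-grade features such as circuit breaking, fault isolation, traffic mirroring and fault injection. Together they cover failure prevention, failure testing and self-healing, meeting the stability needs of complex services.

The key strengths of the overall setup come down to three points:

- Standardization: the core routing rules (Gateway, HTTPRoute, weights, mirroring) use official Gateway-API fields and can move across controllers (EnvoyGateway/Traefik/Istio) without vendor lock-in. The circuit-breaking, health-check and fault-injection settings, however, depend on EnvoyGateway's BackendTrafficPolicy extension and would have to be re-expressed on another controller;
- Full coverage: from basic HTTP/HTTPS access to canary releases, circuit breaking, mirroring and other advanced needs, traffic management is covered end to end, from test to production;
- Maintainability: resource layering (GatewayClass for infrastructure, Gateway for entry points, HTTPRoute for business routing) separates roles, so operations and development teams each own their part and coordination costs drop.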

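V. Outlook

Gateway-API is the next-generation traffic-management standard championed by Kubernetes SIG-Network, and its ecosystem is evolving quickly. It is likely to advance in the following directions:

1. Continued enhancement of Gateway-API itself

Gateway-API v1 already covers the core governance needs for north-south HTTP/HTTPS traffic. Two areas are expected to mature next: east-west traffic management (the service-mesh scenario, GAMMA initiative) and richer standardized policy extensions (for example unified authentication and rate limiting). More complex scenarios could then be covered with standard resources instead of third-party extensions, giving truly unified, standardized management of both north-south and east-west traffic.

2. Deeper ecosystem integration for EnvoyGateway

EnvoyGateway is developed within the Envoy project, a CNCF graduated project, and will keep strengthening its integration with the cloud-native ecosystem. On one hand, tighter integration with tools such as cert-manager, Prometheus and Grafana will support operational automation: automatic certificate renewal, traffic-metric visualization and alerting. On the other hand, its production high-availability features will keep improving, such as multi-cluster gateway management, automatic failover and dynamic scaling, to meet the needs of large clusters.

3. Advanced directions for production

For enterprise users, the architecture in this article can be extended with:

- Automated operations: use GitOps tools (such as ArgoCD) for declarative deployment and version control of Gateway-API resources, so every change to traffic rules is traceable and can be rolled back;
- Multi-cluster governance: combine Gateway-API with cluster interconnect solutions (such as Submariner) to provide a unified entry point and routing across clusters for distributed architectures;
- Zero-trust security: building on Gateway-API TLS configuration and extension policies, integrate mTLS mutual authentication, JWT authentication and similar capabilities for end-to-end zero-trust protection from the entry point to the backend services.

4. Industry trend: standardization replacing fragmentation

As the Gateway-API ecosystem matures, it is likely to gradually replace traditional Ingress as the mainstream way to manage traffic in Kubernetes clusters. Whether in cloud-managed or self-built clusters, Gateway-API is becoming the common language of traffic management. Teams will no longer need to rework traffic configuration for each environment and can move toward "configure once, reuse everywhere".

In short, Gateway-API is not just a technical standard but the direction in which Kubernetes traffic management is heading. With the practices in this article as a foundation, teams can adopt standardized traffic management quickly and keep extending its capabilities as the ecosystem evolves. This provides a solid traffic layer for stable and efficient cloud-native services.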