6. Control Plane High Availability (VIP Management) Deployment

6.1 Pre-deployment Environment Checks

bash

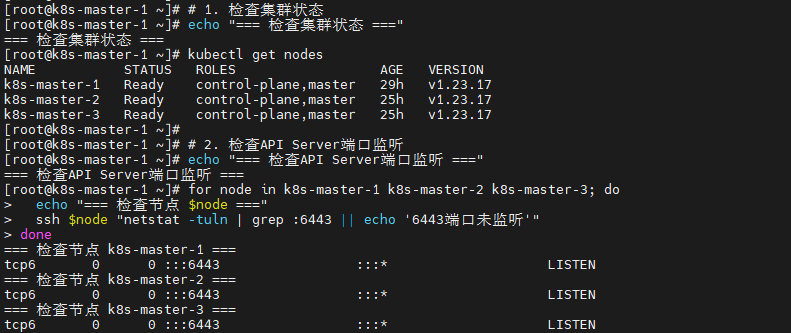

# 1. Check the cluster status
echo "=== Checking cluster status ==="
kubectl get nodes

# 2. Check that the API Server port is listening
echo "=== Checking API Server port ==="
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    echo "=== Checking node $node ==="
    ssh "$node" "netstat -tuln | grep :6443 || echo 'Port 6443 is not listening'"
done

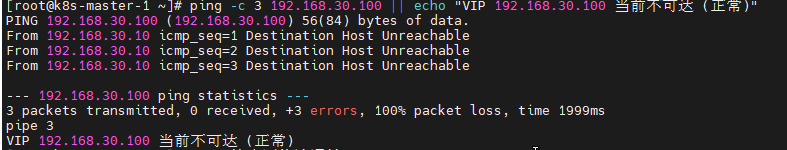

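# 3. Check the VIP 192.168.30.100 (it should be unreachable because nothing has been deployed yet;
#    a reply at this stage would mean another host already uses the address)
echo "=== Checking VIP 192.168.30.100 (before deployment) ==="
ping -c 3 192.168.30.100 || echo "VIP 192.168.30.100 is currently unreachable (expected)"

# 4. Check system resources
echo "=== Checking system resources ==="
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    echo "=== Node $node ==="
    ssh "$node" "free -h | head -2"
    ssh "$node" "df -h | head -2"
done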

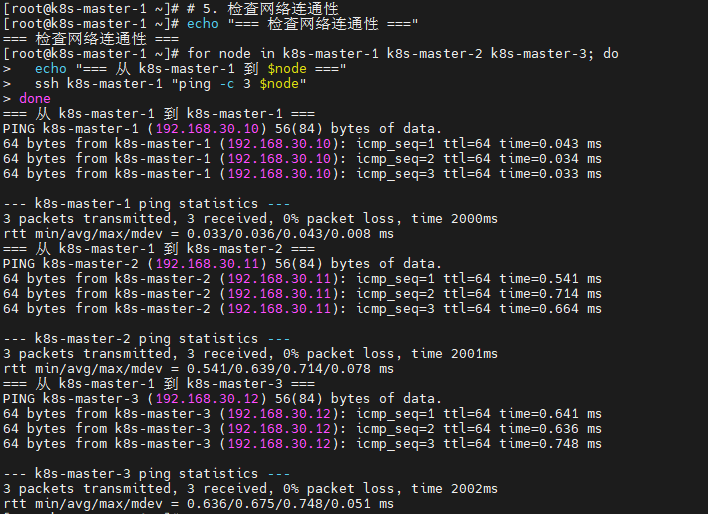

# 5. Check network connectivity
echo "=== Checking network connectivity ==="
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    echo "=== From k8s-master-1 to $node ==="
    ssh k8s-master-1 "ping -c 3 $node"
done
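
The port check in step 2 uses `grep :6443`, which also matches ports that merely start with the same digits (such as 64430) and addresses in the peer column. A stricter variant is sketched below. `port_is_listening` is a hypothetical helper name, and the sketch assumes the local address is the fourth column, as it is in `netstat -tuln` and `ss -tln` output.

```shell
# Hypothetical helper: succeeds if the listing on stdin contains a local
# address that ends in ":<port>". Assumes column 4 is the local address.
port_is_listening() {
    local port="$1"
    awk -v suffix=":${port}" '
        {
            start = length($4) - length(suffix) + 1
            if (start > 0 && substr($4, start) == suffix) found = 1
        }
        END { exit (found ? 0 : 1) }
    '
}
```

For example, `ssh "$node" "netstat -tuln" | port_is_listening 6443 || echo "Port 6443 is not listening on $node"` could replace the `grep` pipeline inside the loop.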

6.2 Install Keepalived (run on all master nodes)

bash

# 1. Install Keepalived
echo "=== Installing Keepalived ==="
yum install -y keepalived

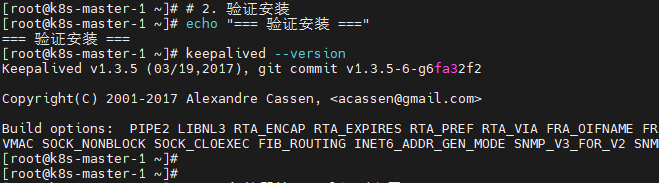

# 2. Verify the installation
echo "=== Verifying the installation ==="
keepalived --version

6.3 Configure Keepalived (run separately on each master node)

Note: Keepalived's vrrp_instance provides high availability (failover) for the VIP.
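
The three per-node configurations in this section differ only in `state` and `priority`. As a sketch of that idea, the hypothetical helper `render_keepalived_conf` below prints the configuration for a given state and priority. The interface `ens33`, the router ID 51, and the VIP are the values used in this section; the optional third and fourth arguments are assumptions added for illustration.

```shell
# Hypothetical helper: print a VRRP-only keepalived.conf.
# Usage: render_keepalived_conf STATE PRIORITY [INTERFACE] [VIP]
render_keepalived_conf() {
    local state="$1" priority="$2"
    local iface="${3:-ens33}" vip="${4:-192.168.30.100}"
    cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip}
    }
}
EOF
}
```

On k8s-master-2, for instance, `render_keepalived_conf BACKUP 90 > /etc/keepalived/keepalived.conf` would produce the same file as the heredoc in 6.3.2.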

6.3.1 Configure Keepalived on k8s-master-1 (MASTER node)

bash

# 1. Back up the original Keepalived configuration
echo "=== Backing up the original Keepalived configuration ==="
cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

# 2. Create the Keepalived configuration (MASTER node), using VRRP only for high availability
echo "=== Creating the Keepalived configuration (MASTER node, VRRP only) ==="
cat > /etc/keepalived/keepalived.conf << 'EOF'
! Configuration File for keepalived

global_defs {
    router_id LVS_DEVEL    # default name kept as-is; LVS itself is not used
}

# VRRP instance that manages the VIP
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.30.100
    }
}
EOF

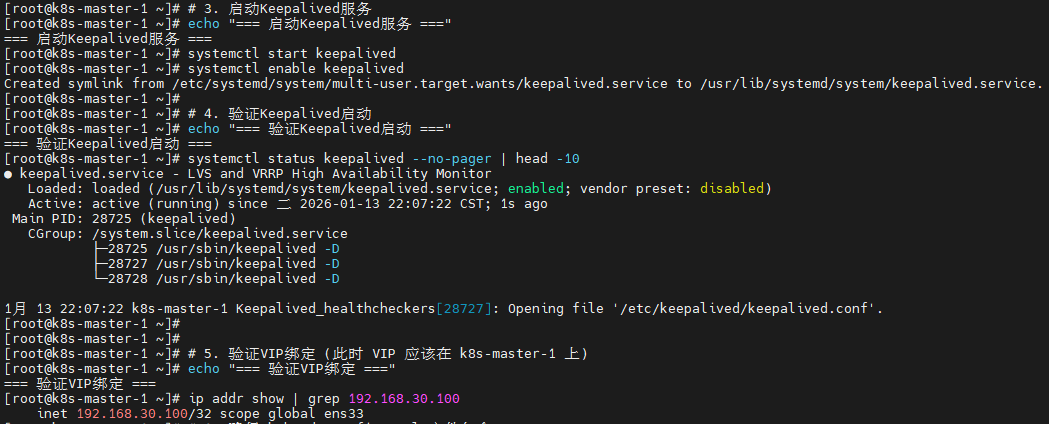

# 3. Start the Keepalived service
echo "=== Starting the Keepalived service ==="
systemctl start keepalived
systemctl enable keepalived

# 4. Verify that Keepalived started
echo "=== Verifying that Keepalived started ==="
systemctl status keepalived --no-pager | head -10

# 5. Verify the VIP binding (the VIP should now be on k8s-master-1)
echo "=== Verifying the VIP binding ==="
ip addr show | grep 192.168.30.100
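
`grep 192.168.30.100` treats the dots as wildcards and matches substrings, which is fine for a quick look. For scripted checks, an exact comparison on the address field is a little safer. A minimal sketch, assuming the one-line format printed by `ip -o -4 addr show` (address/prefix in the fourth column); `has_vip` is a hypothetical helper name.

```shell
# Hypothetical helper: succeeds if `ip -o -4 addr show` output on stdin
# lists exactly the given address (the prefix length is ignored).
has_vip() {
    local vip="$1"
    awk -v vip="$vip" '
        { split($4, parts, "/"); if (parts[1] == vip) found = 1 }
        END { exit (found ? 0 : 1) }
    '
}
```

Usage on a node: `ip -o -4 addr show | has_vip 192.168.30.100 && echo "VIP bound here"`.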

6.3.2 Configure Keepalived on k8s-master-2 (BACKUP node)

bash

# 1. Back up the original Keepalived configuration
echo "=== Backing up the original Keepalived configuration ==="
cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

# 2. Create the Keepalived configuration (BACKUP node), using VRRP only for high availability
echo "=== Creating the Keepalived configuration (BACKUP node, VRRP only) ==="
cat > /etc/keepalived/keepalived.conf << 'EOF'
! Configuration File for keepalived

global_defs {
    router_id LVS_DEVEL
}

# VRRP instance that manages the VIP
vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.30.100
    }
}
EOF

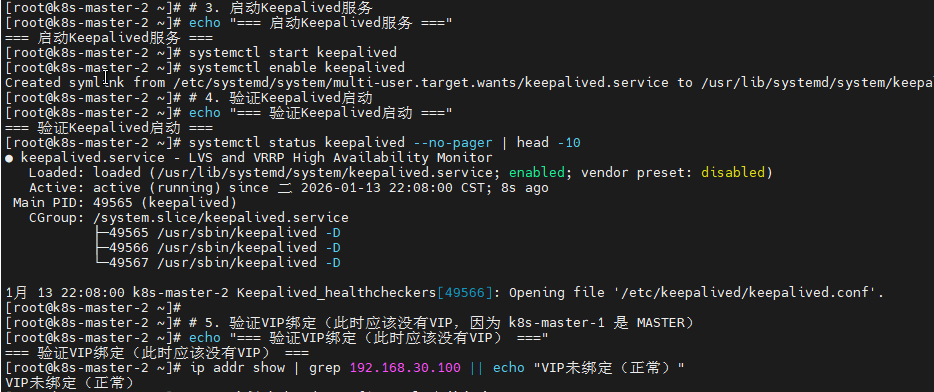

# 3. Start the Keepalived service
echo "=== Starting the Keepalived service ==="
systemctl start keepalived
systemctl enable keepalived

# 4. Verify that Keepalived started
echo "=== Verifying that Keepalived started ==="
systemctl status keepalived --no-pager | head -10

# 5. Verify the VIP binding (the VIP should not be here, because k8s-master-1 is the MASTER)
echo "=== Verifying the VIP binding (no VIP expected) ==="
ip addr show | grep 192.168.30.100 || echo "VIP not bound (expected)"

6.3.3 Configure Keepalived on k8s-master-3 (BACKUP node)

bash

# 1. Back up the original Keepalived configuration
echo "=== Backing up the original Keepalived configuration ==="
cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

# 2. Create the Keepalived configuration (BACKUP node), using VRRP only for high availability
echo "=== Creating the Keepalived configuration (BACKUP node, VRRP only) ==="
cat > /etc/keepalived/keepalived.conf << 'EOF'
! Configuration File for keepalived

global_defs {
    router_id LVS_DEVEL
}

# VRRP instance that manages the VIP
vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 80
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.30.100
    }
}
EOF

# 3. Start the Keepalived service
echo "=== Starting the Keepalived service ==="
systemctl start keepalived
systemctl enable keepalived

# 4. Verify that Keepalived started
echo "=== Verifying that Keepalived started ==="
systemctl status keepalived --no-pager | head -10

# 5. Verify the VIP binding (the VIP should not be here, because k8s-master-1 is the MASTER)
echo "=== Verifying the VIP binding (no VIP expected) ==="
ip addr show | grep 192.168.30.100 || echo "VIP not bound (expected)"
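
With VRRP alone, the VIP moves only when Keepalived itself stops or the node goes down; a node whose kube-apiserver has failed can still hold the VIP. Keepalived's `vrrp_script` and `track_script` blocks can tie the VIP to a health check. The sketch below shows the core of one possible check script. It is not part of the setup in this section: the path `/etc/keepalived/check_apiserver.sh`, the function names, and the timing values in the commented configuration fragment are all assumptions.

```shell
# Functions for a hypothetical /etc/keepalived/check_apiserver.sh

# The API Server /healthz endpoint answers with the body "ok" when healthy.
healthz_ok() {
    [ "$1" = "ok" ]
}

# Query the local API Server; fail on connection errors, timeouts,
# or any body other than "ok".
check_apiserver() {
    local url="${1:-https://127.0.0.1:6443/healthz}"
    local body
    body="$(curl -sk --max-time 3 "$url" 2>/dev/null)" || return 1
    healthz_ok "$body"
}

# Illustrative keepalived.conf fragment (all values are assumptions):
#
#   vrrp_script check_apiserver {
#       script "/etc/keepalived/check_apiserver.sh"
#       interval 3     # run the check every 3 seconds
#       fall 3         # 3 consecutive failures mark the check as failed
#       rise 2         # 2 consecutive successes mark it as healthy again
#   }
#
#   vrrp_instance VI_1 {
#       ...
#       track_script {
#           check_apiserver
#       }
#   }
```

When saved as a script, the file would end with a call to `check_apiserver` so that its exit status becomes the script's exit status. With no `weight` set on the `vrrp_script`, a failing check puts the instance into the FAULT state and the VIP is released to a backup.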

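6.4 Update the API Server Certificates (on all master nodes)

Important: After the VIP is deployed, the certificate of every API Server must be updated so that it lists the VIP 192.168.30.100 as a Subject Alternative Name (SAN). Otherwise kubectl connections through the VIP fail TLS certificate verification.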

bash

# 1. Make sure kubeadm-config.yaml contains certSANs
echo "=== Checking certSANs in kubeadm-config.yaml ==="
grep -A 10 -B 5 certSANs /root/kubeadm-config.yaml

# 2. Back up the existing PKI
echo "=== Backing up the existing PKI ==="
sudo cp -r /etc/kubernetes/pki /etc/kubernetes/pki.backup.$(date +%Y%m%d_%H%M%S)

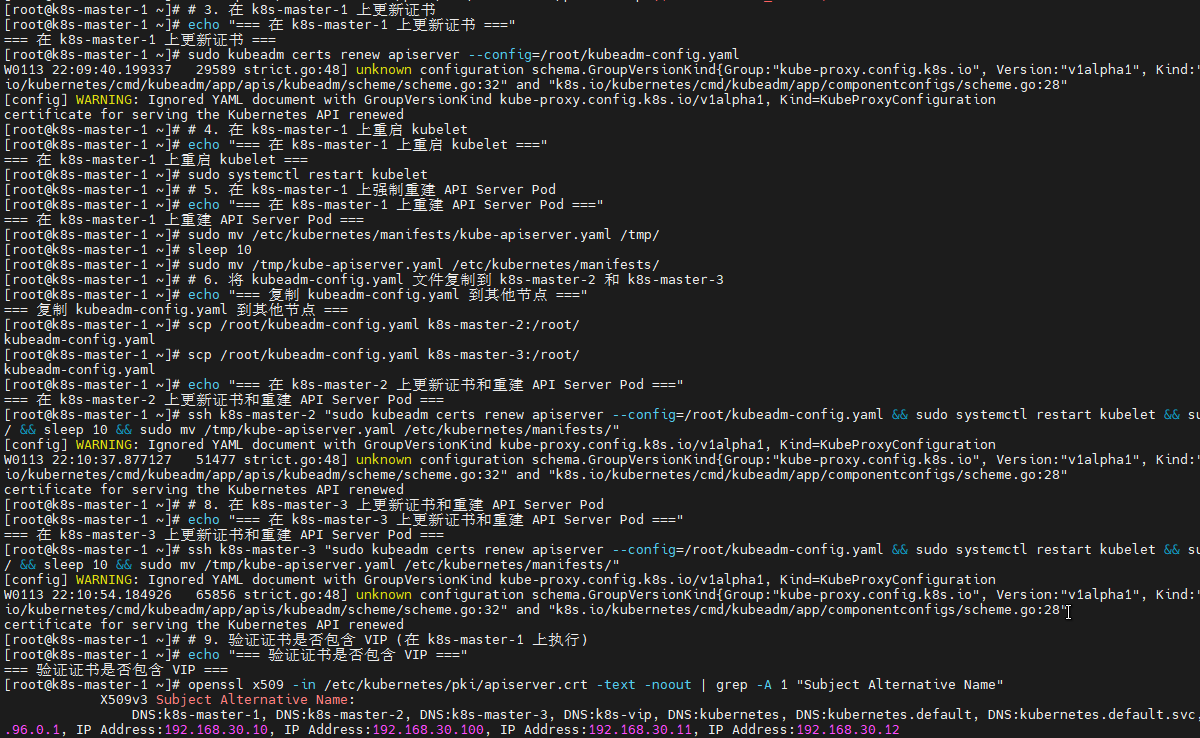

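# 3. Regenerate the API Server certificate on k8s-master-1
# Note: `kubeadm certs renew` copies the SANs from the existing certificate, so it cannot add the VIP.
# Move the old certificate and key aside and regenerate them from the config file instead.
echo "=== Regenerating the API Server certificate on k8s-master-1 ==="
sudo mv /etc/kubernetes/pki/apiserver.crt /etc/kubernetes/pki/apiserver.key /tmp/
sudo kubeadm init phase certs apiserver --config=/root/kubeadm-config.yaml

# 4. Restart kubelet on k8s-master-1
echo "=== Restarting kubelet on k8s-master-1 ==="
sudo systemctl restart kubelet

# 5. Force the API Server Pod on k8s-master-1 to be recreated
echo "=== Recreating the API Server Pod on k8s-master-1 ==="
sudo mv /etc/kubernetes/manifests/kube-apiserver.yaml /tmp/
sleep 10
sudo mv /tmp/kube-apiserver.yaml /etc/kubernetes/manifests/

# 6. Copy kubeadm-config.yaml to k8s-master-2 and k8s-master-3
# Note: if the file pins node-specific values (node name, advertise address) to k8s-master-1,
# adjust them on each node before regenerating the certificate there.
echo "=== Copying kubeadm-config.yaml to the other nodes ==="
scp /root/kubeadm-config.yaml k8s-master-2:/root/
scp /root/kubeadm-config.yaml k8s-master-3:/root/

# 7. Regenerate the certificate and recreate the API Server Pod on k8s-master-2
echo "=== Regenerating the certificate and recreating the API Server Pod on k8s-master-2 ==="
ssh k8s-master-2 "sudo mv /etc/kubernetes/pki/apiserver.crt /etc/kubernetes/pki/apiserver.key /tmp/ && sudo kubeadm init phase certs apiserver --config=/root/kubeadm-config.yaml && sudo systemctl restart kubelet && sudo mv /etc/kubernetes/manifests/kube-apiserver.yaml /tmp/ && sleep 10 && sudo mv /tmp/kube-apiserver.yaml /etc/kubernetes/manifests/"

# 8. Regenerate the certificate and recreate the API Server Pod on k8s-master-3
echo "=== Regenerating the certificate and recreating the API Server Pod on k8s-master-3 ==="
ssh k8s-master-3 "sudo mv /etc/kubernetes/pki/apiserver.crt /etc/kubernetes/pki/apiserver.key /tmp/ && sudo kubeadm init phase certs apiserver --config=/root/kubeadm-config.yaml && sudo systemctl restart kubelet && sudo mv /etc/kubernetes/manifests/kube-apiserver.yaml /tmp/ && sleep 10 && sudo mv /tmp/kube-apiserver.yaml /etc/kubernetes/manifests/"

# 9. Verify that the certificate contains the VIP (run on k8s-master-1)
echo "=== Verifying that the certificate contains the VIP ==="
openssl x509 -in /etc/kubernetes/pki/apiserver.crt -text -noout | grep -A 1 "Subject Alternative Name"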
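
The last step prints the SAN list for visual inspection. For a scripted pass/fail check, the list can be split on commas and compared exactly, so that 192.168.30.10 is not mistaken for 192.168.30.100. A minimal sketch, assuming the `IP Address:<addr>` entry format that `openssl x509 -text` prints; `san_contains_ip` is a hypothetical helper name.

```shell
# Hypothetical helper: succeeds if the certificate text on stdin lists
# the given IP as a SAN entry of the form "IP Address:<ip>".
san_contains_ip() {
    local ip="$1"
    tr ',' '\n' \
        | sed 's/^[[:space:]]*//; s/[[:space:]]*$//' \
        | grep -Fx "IP Address:${ip}" >/dev/null
}
```

Usage: `openssl x509 -in /etc/kubernetes/pki/apiserver.crt -text -noout | san_contains_ip 192.168.30.100 && echo "VIP present in SANs"`.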

6.5 Verify the High Availability Deployment

bash

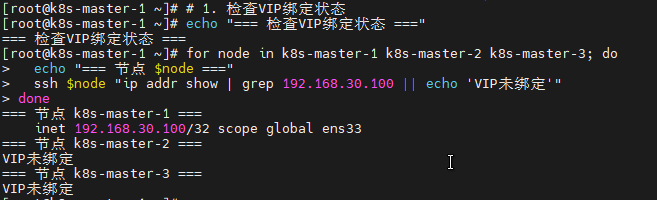

# 1. Check the VIP binding status
echo "=== Checking VIP binding status ==="
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    echo "=== Node $node ==="
    ssh "$node" "ip addr show | grep 192.168.30.100 || echo 'VIP not bound'"
done

# 2. Test VIP connectivity
echo "=== Testing VIP connectivity ==="
ping -c 3 192.168.30.100

# 3. Test the API Server health check (through the VIP)
echo "=== Testing the API Server health check (through the VIP) ==="
curl -k https://192.168.30.100:6443/healthz --connect-timeout 5

# 4. Check the Keepalived service status
echo "=== Checking Keepalived service status ==="
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    echo "=== Node $node ==="
    ssh "$node" "systemctl status keepalived --no-pager | head -5"
done

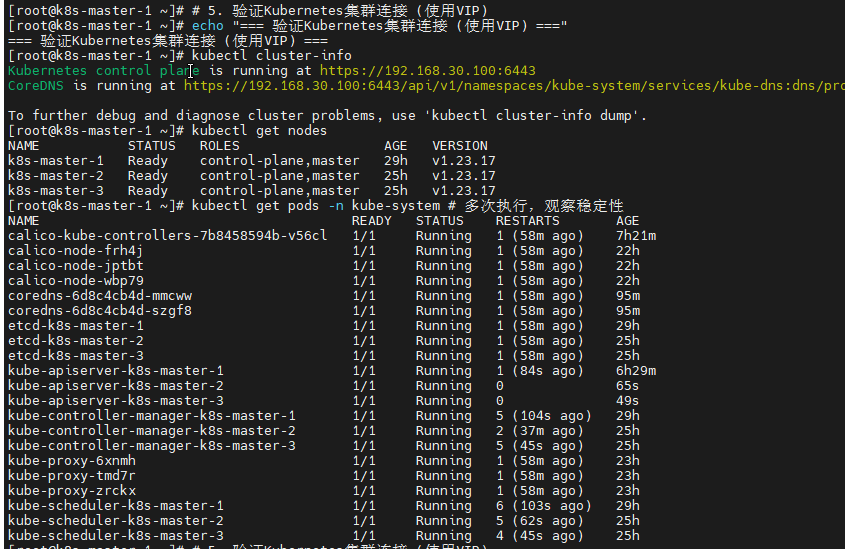

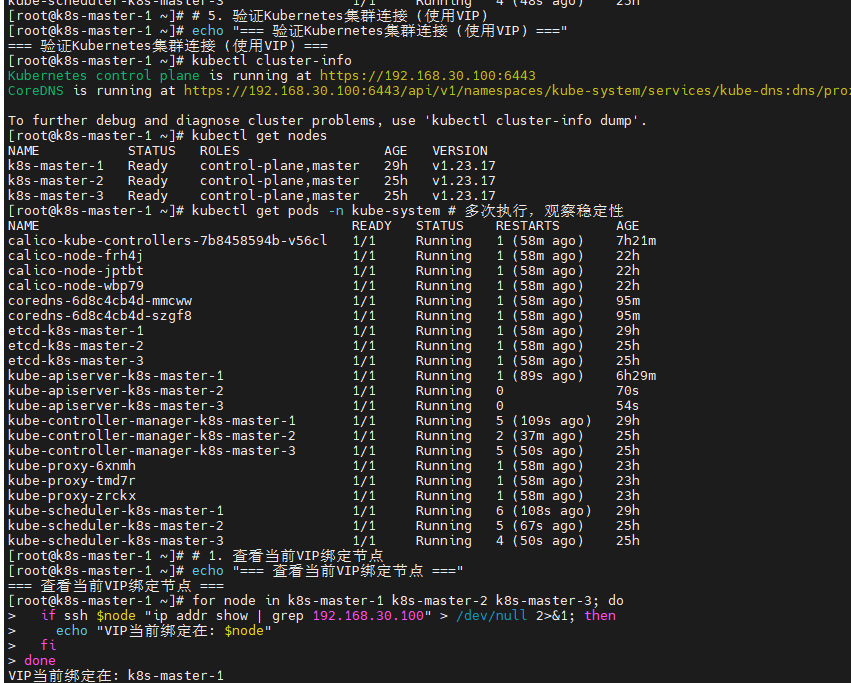

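# 5. Verify the Kubernetes cluster connection (through the VIP)
# Note: kubectl goes through the VIP only if the server field in the kubeconfig points to https://192.168.30.100:6443
echo "=== Verifying the Kubernetes cluster connection (through the VIP) ==="
kubectl cluster-info
kubectl get nodes
kubectl get pods -n kube-system    # run several times to observe stability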
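
The comment on the last command suggests running it several times to observe stability. One way to make that repeatable is to run a command a fixed number of times and count the failures. `count_failures` is a hypothetical helper, shown only as a sketch.

```shell
# Hypothetical helper: run a command N times and print how many runs failed.
# Usage: count_failures ATTEMPTS COMMAND [ARGS...]
count_failures() {
    local attempts="$1" failures=0 i=0
    shift
    while [ "$i" -lt "$attempts" ]; do
        "$@" >/dev/null 2>&1 || failures=$((failures + 1))
        i=$((i + 1))
    done
    echo "$failures"
}
```

For example, `count_failures 10 curl -fsk --connect-timeout 5 https://192.168.30.100:6443/healthz` prints 0 when all ten requests through the VIP succeed.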

6.6 VIP Failover Test

bash

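# 1. Find the node that currently holds the VIP
echo "=== Finding the node that currently holds the VIP ==="
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    if ssh "$node" "ip addr show | grep 192.168.30.100" > /dev/null 2>&1; then
        echo "VIP is currently bound on: $node"
    fi
done

# 2. Stop Keepalived on the node that currently holds the VIP (for example, k8s-master-1)
echo "=== Stopping Keepalived on the node that holds the VIP ==="
# Assumes the VIP is currently bound on k8s-master-1
ssh k8s-master-1 "systemctl stop keepalived"

# 3. Wait for the VIP to fail over (a backup takes over after at most about 3 x advert_int plus a short skew time)
echo "=== Waiting for VIP failover (under 4 seconds with advert_int 1) ==="
sleep 5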

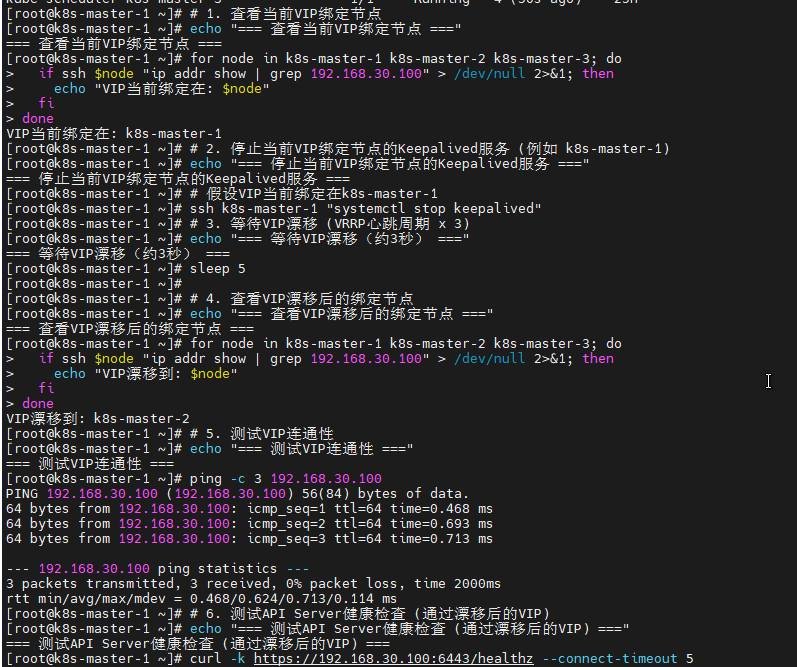

# 4. Find the node that holds the VIP after the failover
echo "=== Finding the node that holds the VIP after failover ==="
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    if ssh "$node" "ip addr show | grep 192.168.30.100" > /dev/null 2>&1; then
        echo "VIP moved to: $node"
    fi
done

# 5. Test VIP connectivity
echo "=== Testing VIP connectivity ==="
ping -c 3 192.168.30.100

# 6. Test the API Server health check (through the VIP after failover)
echo "=== Testing the API Server health check (through the VIP after failover) ==="
curl -k https://192.168.30.100:6443/healthz --connect-timeout 5

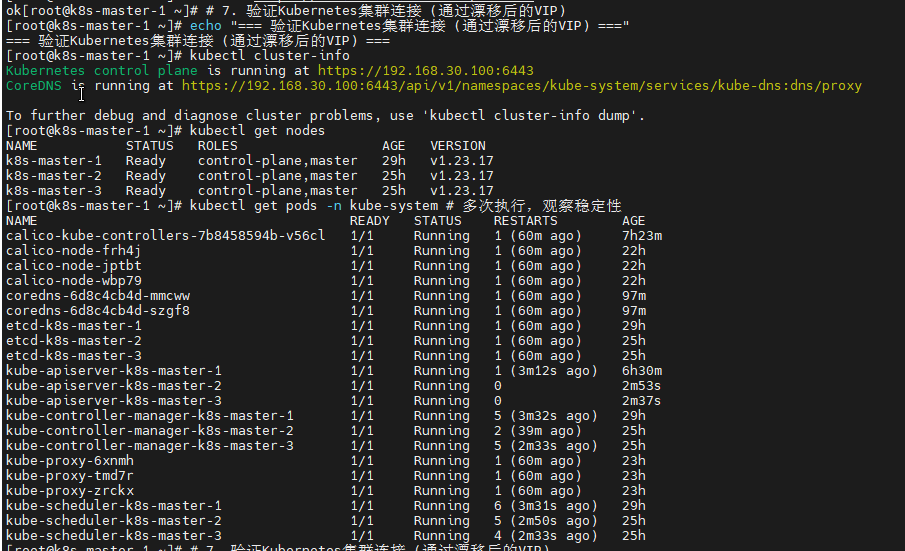

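# 7. Verify the Kubernetes cluster connection (through the VIP after failover)
echo "=== Verifying the Kubernetes cluster connection (through the VIP after failover) ==="
kubectl cluster-info
kubectl get nodes
kubectl get pods -n kube-system    # run several times to observe stability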

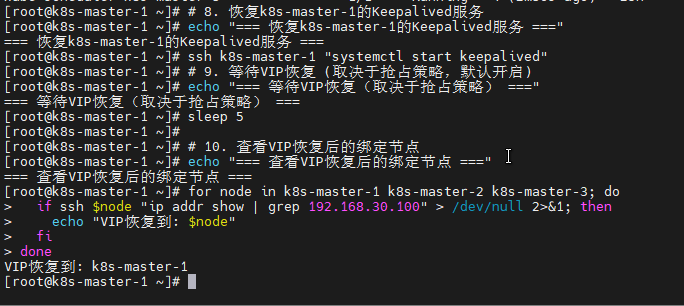

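# 8. Restore the Keepalived service on k8s-master-1
echo "=== Restoring the Keepalived service on k8s-master-1 ==="
ssh k8s-master-1 "systemctl start keepalived"

# 9. Wait for the VIP to return (depends on the preemption policy, which is enabled by default)
echo "=== Waiting for the VIP to return (depends on the preemption policy) ==="
sleep 5

# 10. Find the node that holds the VIP after recovery
echo "=== Finding the node that holds the VIP after recovery ==="
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    if ssh "$node" "ip addr show | grep 192.168.30.100" > /dev/null 2>&1; then
        echo "VIP returned to: $node"
    fi
done

As the output shows, the VIP moved to the k8s-master-2 node.

Restoring the Keepalived service on k8s-master-1: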

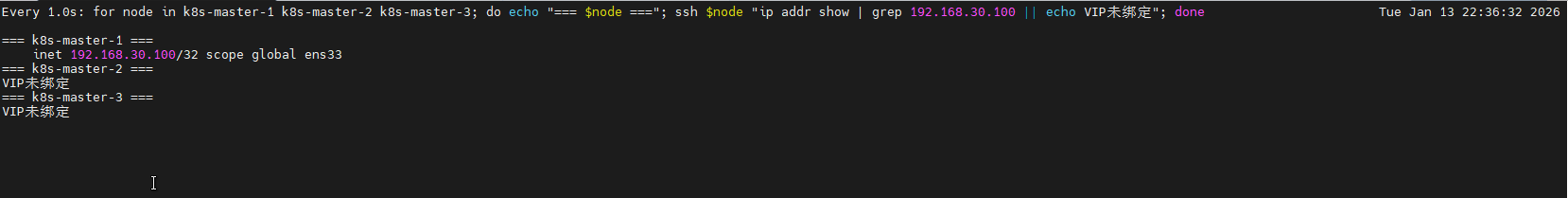
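
How long step 3 has to wait follows from the VRRP timers. In VRRPv2 (RFC 3768), which Keepalived uses unless `vrrp_version 3` is configured, a backup declares the master down after 3 × advert_int + skew_time seconds, where skew_time = (256 − priority) / 256. The sketch below evaluates that formula; `master_down_interval` is a hypothetical helper name.

```shell
# Hypothetical helper: VRRPv2 master-down interval in seconds.
# Usage: master_down_interval ADVERT_INT PRIORITY
master_down_interval() {
    local advert_int="$1" priority="$2"
    awk -v a="$advert_int" -v p="$priority" \
        'BEGIN { printf "%.3f\n", 3 * a + (256 - p) / 256 }'
}
```

For k8s-master-2 (priority 90, advert_int 1), `master_down_interval 1 90` prints 3.648. When Keepalived is stopped cleanly, as with `systemctl stop`, the VRRP specification has the master announce priority 0 so that backups wait only the skew time; the 5-second sleep covers both cases.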

6.7 Monitoring and Logs

bash

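# 1. View the Keepalived logs
echo "=== Viewing Keepalived logs ==="
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    echo "=== Node $node ==="
    # -f would follow the first node's log forever and block the loop, so print the latest entries instead
    ssh "$node" "journalctl -u keepalived -n 50 --no-pager"
done

# 2. Watch the VIP status (press Ctrl+C to stop)
echo "=== Watching VIP status ==="
watch -n 1 'for node in k8s-master-1 k8s-master-2 k8s-master-3; do echo "=== $node ==="; ssh $node "ip addr show | grep 192.168.30.100 || echo VIP not bound"; done'

# 3. View system resource usage
echo "=== Viewing system resource usage ==="
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    echo "=== Node $node ==="
    ssh "$node" "top -bn1 | head -20"
done

The watch command in step 2 keeps monitoring the VIP status until it is interrupted.

The command in step 3 shows system resource usage. It can later be combined with a monitoring tool and alerting rules to keep the cluster from running out of resources.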
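
When reading Keepalived logs after a failover test, the lines of interest are usually the VRRP state transitions. A minimal sketch of a filter, assuming the log lines contain the phrase `Entering MASTER STATE`, `Entering BACKUP STATE`, or `Entering FAULT STATE` (the exact wording may differ between Keepalived versions); `vrrp_transitions` is a hypothetical helper name.

```shell
# Hypothetical helper: keep only VRRP state-transition lines from stdin.
vrrp_transitions() {
    grep -E 'Entering (MASTER|BACKUP|FAULT) STATE'
}
```

Usage: `ssh "$node" "journalctl -u keepalived --no-pager" | vrrp_transitions`.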

6.8 Deployment Verification Summary

bash

# 1. Generate the deployment status report
echo "=== Generating the control plane HA deployment status report ==="
echo "===== Control Plane HA Deployment Status Report =====" > /tmp/ha-status.txt
echo "" >> /tmp/ha-status.txt

echo "===== VIP binding status =====" >> /tmp/ha-status.txt
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    echo "=== Node $node ===" >> /tmp/ha-status.txt
    ssh "$node" "ip addr show | grep 192.168.30.100 || echo 'VIP not bound'" >> /tmp/ha-status.txt
    echo "" >> /tmp/ha-status.txt
done

echo "===== Keepalived service status =====" >> /tmp/ha-status.txt
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    echo "=== Node $node ===" >> /tmp/ha-status.txt
    ssh "$node" "systemctl status keepalived --no-pager | head -5" >> /tmp/ha-status.txt
    echo "" >> /tmp/ha-status.txt
done

echo "===== Port listening status =====" >> /tmp/ha-status.txt
for node in k8s-master-1 k8s-master-2 k8s-master-3; do
    echo "=== Node $node ===" >> /tmp/ha-status.txt
    ssh "$node" "netstat -tuln | grep :6443" >> /tmp/ha-status.txt
    echo "" >> /tmp/ha-status.txt
done

echo "===== Kubernetes cluster status =====" >> /tmp/ha-status.txt
kubectl get nodes >> /tmp/ha-status.txt
# componentstatuses (cs) has been deprecated since Kubernetes v1.19 but is still served
kubectl get cs >> /tmp/ha-status.txt
echo "" >> /tmp/ha-status.txt

# 2. Display the report
echo "=== Control plane HA deployment status report ==="
cat /tmp/ha-status.txt

# 3. Save the report
echo "=== Deployment status report saved to /tmp/ha-status.txt ==="

# 4. Verification checklist (printed as a reminder; confirm each item against the report above)
echo "=== Control plane HA deployment verification checklist ==="
echo "✓ VIP 192.168.30.100 is bound to the MASTER node"
echo "✓ Keepalived is running on all master nodes"
echo "✓ Port 6443 is listening"
echo "✓ The VIP fails over correctly"
echo "✓ The API Server certificate contains the VIP"
echo "✓ The Kubernetes cluster is reachable and stable through the VIP"
echo ""
echo "If every item above checks out, congratulations! The control plane HA deployment succeeded."
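
The checklist prints a check mark for every item regardless of the actual state. A small wrapper that runs a command and prints ✓ or ✗ based on its exit status lets the list reflect what was really observed. `report_check` is a hypothetical helper, and the example commands in the note below are only suggestions.

```shell
# Hypothetical helper: run a command quietly and print a pass/fail line.
# Usage: report_check DESCRIPTION COMMAND [ARGS...]
report_check() {
    local description="$1"
    shift
    if "$@" >/dev/null 2>&1; then
        echo "✓ ${description}"
    else
        echo "✗ ${description}"
        return 1
    fi
}
```

For example, `report_check "VIP answers ping" ping -c 1 192.168.30.100` or `report_check "API Server healthy through the VIP" curl -fsk --connect-timeout 5 https://192.168.30.100:6443/healthz`.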