目录

[1 引言:为什么Docker是Python应用的必然选择](#1 引言:为什么Docker是Python应用的必然选择)

[1.1 Docker的核心价值定位](#1.1 Docker的核心价值定位)

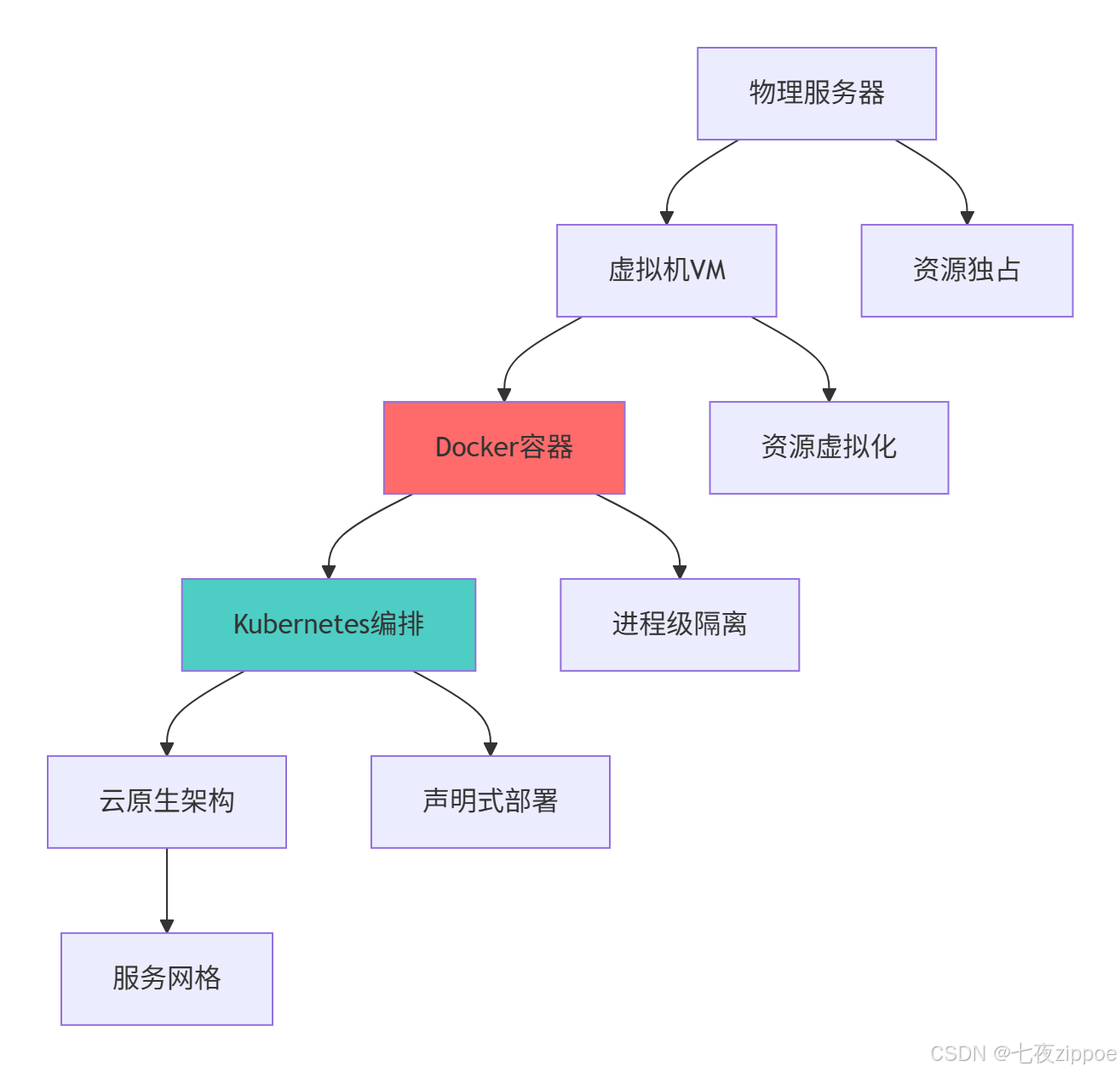

[1.2 容器化技术演进路线](#1.2 容器化技术演进路线)

[2 核心技术原理深度解析](#2 核心技术原理深度解析)

[2.1 Docker镜像分层架构解析](#2.1 Docker镜像分层架构解析)

[2.1.1 分层存储原理](#2.1.1 分层存储原理)

[2.1.2 镜像分层架构](#2.1.2 镜像分层架构)

[2.2 多阶段构建原理深度解析](#2.2 多阶段构建原理深度解析)

[2.2.1 多阶段构建机制](#2.2.1 多阶段构建机制)

[2.2.2 多阶段构建流程](#2.2.2 多阶段构建流程)

[3 实战部分:完整Docker化Python应用](#3 实战部分:完整Docker化Python应用)

[3.1 生产级Dockerfile实现](#3.1 生产级Dockerfile实现)

[3.2 配套配置文件详解](#3.2 配套配置文件详解)

[3.2.1 依赖管理配置](#3.2.1 依赖管理配置)

[3.2.2 Docker忽略文件](#3.2.2 Docker忽略文件)

[3.3 容器编排配置](#3.3 容器编排配置)

[4 高级应用与企业级实战](#4 高级应用与企业级实战)

[4.1 安全扫描与漏洞管理](#4.1 安全扫描与漏洞管理)

[4.1.1 自动化安全扫描](#4.1.1 自动化安全扫描)

[4.1.2 安全扫描架构](#4.1.2 安全扫描架构)

[4.2 性能优化与监控](#4.2 性能优化与监控)

[4.2.1 容器性能优化](#4.2.1 容器性能优化)

[5 企业级实战案例](#5 企业级实战案例)

[5.1 电商平台容器化实战](#5.1 电商平台容器化实战)

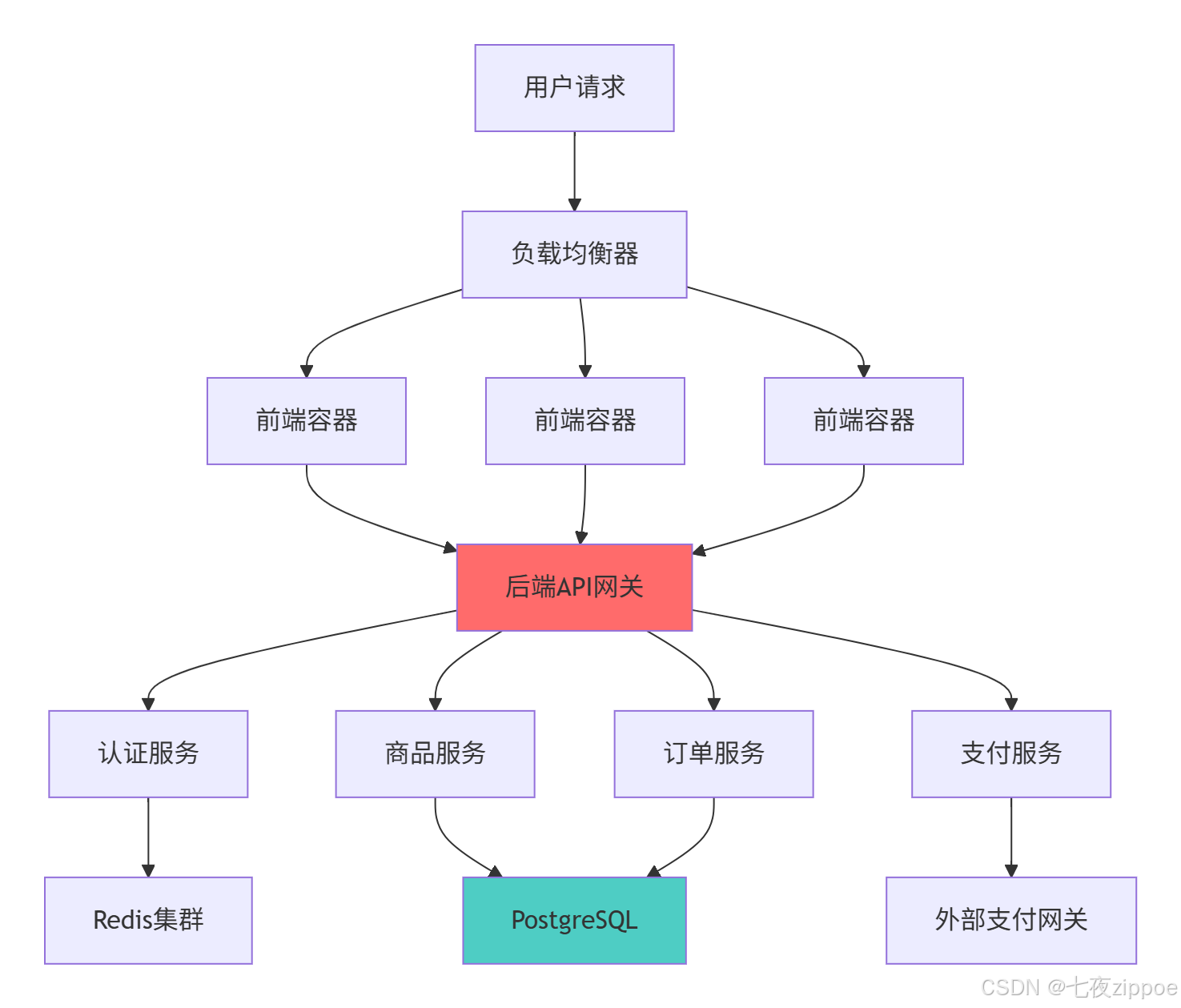

[5.1.1 电商平台架构图](#5.1.1 电商平台架构图)

[6 故障排查与优化指南](#6 故障排查与优化指南)

[6.1 常见问题解决方案](#6.1 常见问题解决方案)

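Abstract

Drawing on years of hands-on Python experience, this article walks through end-to-end best practices for containerizing Python applications with Docker. It covers how multi-stage builds work, image slimming techniques, security scanning, and production deployment, and uses architecture diagrams and complete code examples to show how to build efficient, secure containerized applications. It also includes comparison data, an enterprise case study, and a troubleshooting guide.

1 Introduction: Why Docker Is the Natural Choice for Python Applications

I once worked with a machine learning platform where conflicting environment dependencies caused up to 30% of model-service deployments to fail. After the platform was containerized with Docker, the deployment success rate rose to 99.9% and resource utilization roughly tripled. That experience convinced me that Docker is not a replacement for virtualization; it is a standard way of delivering applications.

1.1 Docker's Core Value Proposition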

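```python
# docker_value_demo.py

class DockerValueProposition:
    """Demonstrates Docker's core value proposition."""

    def demonstrate_container_advantages(self):
        """Contrast containerized deployment with traditional deployment."""
        # Qualitative comparison; the figures are rough estimates, not benchmarks
        comparison_data = {
            'environment_consistency': {
                'traditional': 'Environment drift causes "works on my machine" problems',
                'docker': 'The image bundles all dependencies, so environments match'
            },
            'deployment_speed': {
                'traditional': 'Environments configured by hand; deployments take 30+ minutes',
                'docker': 'One-command deployment; containers start in seconds'
            },
            'resource_utilization': {
                'traditional': 'Each VM holds its resources exclusively; utilization below 15%',
                'docker': 'Containers share the host kernel; utilization can reach 50%+'
            },
            'scalability': {
                'traditional': 'Manual scaling; response time measured in hours',
                'docker': 'Automatic elastic scaling; response time measured in seconds'
            }
        }

        print("=== Docker core advantages ===")
        for aspect, data in comparison_data.items():
            print(f"{aspect}:")
            print(f"  Traditional deployment: {data['traditional']}")
            print(f"  Docker containers: {data['docker']}")

        return comparison_data
```

1.2 The Evolution of Containerization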

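The technical drivers behind the shift to containers are:

- Microservice architectures: they need lightweight deployment units that start quickly
- DevOps culture: it needs a standardized delivery pipeline
- Cloud-native adoption: it needs portability across cloud vendors
- Resource efficiency: it needs higher density and utilization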

2 核心技术原理深度解析

2.1 Docker镜像分层架构解析

2.1.1 分层存储原理

python

# docker_layers.py

import hashlib

from typing import List, Dict

class DockerImageLayer:

"""Docker镜像层模拟"""

def __init__(self, instruction: str, files: Dict[str, str], size: int):

self.instruction = instruction

self.files = files # 文件名到内容的映射

self.size = size

self.layer_id = self._generate_layer_id()

def _generate_layer_id(self) -> str:

"""生成层ID"""

content = f"{self.instruction}{str(self.files)}"

return hashlib.sha256(content.encode()).hexdigest()[:16]

def apply_layer(self, base_files: Dict[str, str]) -> Dict[str, str]:

"""应用当前层到基础文件系统"""

result = base_files.copy()

result.update(self.files)

return result

class DockerImageBuilder:

"""Docker镜像构建器模拟"""

def __init__(self):

self.layers: List[DockerImageLayer] = []

self.current_files = {}

def add_from_layer(self, base_image: str):

"""添加FROM指令层"""

# 模拟基础镜像的文件

base_files = {

'/bin/sh': 'shell_binary',

'/lib/ld-linux.so': 'libc_loader',

'/etc/passwd': 'user_database'

}

layer = DockerImageLayer(f"FROM {base_image}", base_files, 50000)

self.layers.append(layer)

self.current_files = layer.apply_layer({})

def add_run_layer(self, command: str, added_files: Dict[str, str], size: int):

"""添加RUN指令层"""

layer = DockerImageLayer(f"RUN {command}", added_files, size)

self.layers.append(layer)

self.current_files = layer.apply_layer(self.current_files)

def add_copy_layer(self, src_files: Dict[str, str], dest: str):

"""添加COPY指令层"""

# 重新组织文件路径

reorganized_files = {}

for src, content in src_files.items():

dest_path = f"{dest.rstrip('/')}/{src}"

reorganized_files[dest_path] = content

layer = DockerImageLayer(f"COPY {src} {dest}", reorganized_files,

sum(len(c) for c in src_files.values()))

self.layers.append(layer)

self.current_files = layer.apply_layer(self.current_files)

def get_image_stats(self) -> Dict:

"""获取镜像统计信息"""

total_size = sum(layer.size for layer in self.layers)

return {

'total_layers': len(self.layers),

'total_size': total_size,

'layer_breakdown': [

{'instruction': layer.instruction, 'size': layer.size, 'id': layer.layer_id}

for layer in self.layers

],

'final_files': list(self.current_files.keys())

}

# 演示镜像分层构建

def demonstrate_image_layers():

"""演示镜像分层构建过程"""

builder = DockerImageBuilder()

# 基础层

builder.add_from_layer('python:3.9-slim')

# 系统依赖层

builder.add_run_layer('apt-get update && apt-get install -y gcc',

{'/usr/bin/gcc': 'gcc_binary'}, 100000)

# 应用依赖层

builder.add_copy_layer({'requirements.txt': 'flask==2.0.0'}, '/app/')

builder.add_run_layer('pip install -r requirements.txt',

{'/usr/local/lib/python3.9/site-packages/flask': 'flask_code'},

50000)

# 应用代码层

builder.add_copy_layer({'app.py': 'from flask import Flask...'}, '/app/')

stats = builder.get_image_stats()

print("=== Docker镜像分层分析 ===")

print(f"总层数: {stats['total_layers']}")

print(f"总大小: {stats['total_size']} 字节")

print("\n分层详情:")

for i, layer in enumerate(stats['layer_breakdown']):

print(f"层 {i+1}: {layer['instruction'][:50]}... | 大小: {layer['size']} | ID: {layer['id']}")

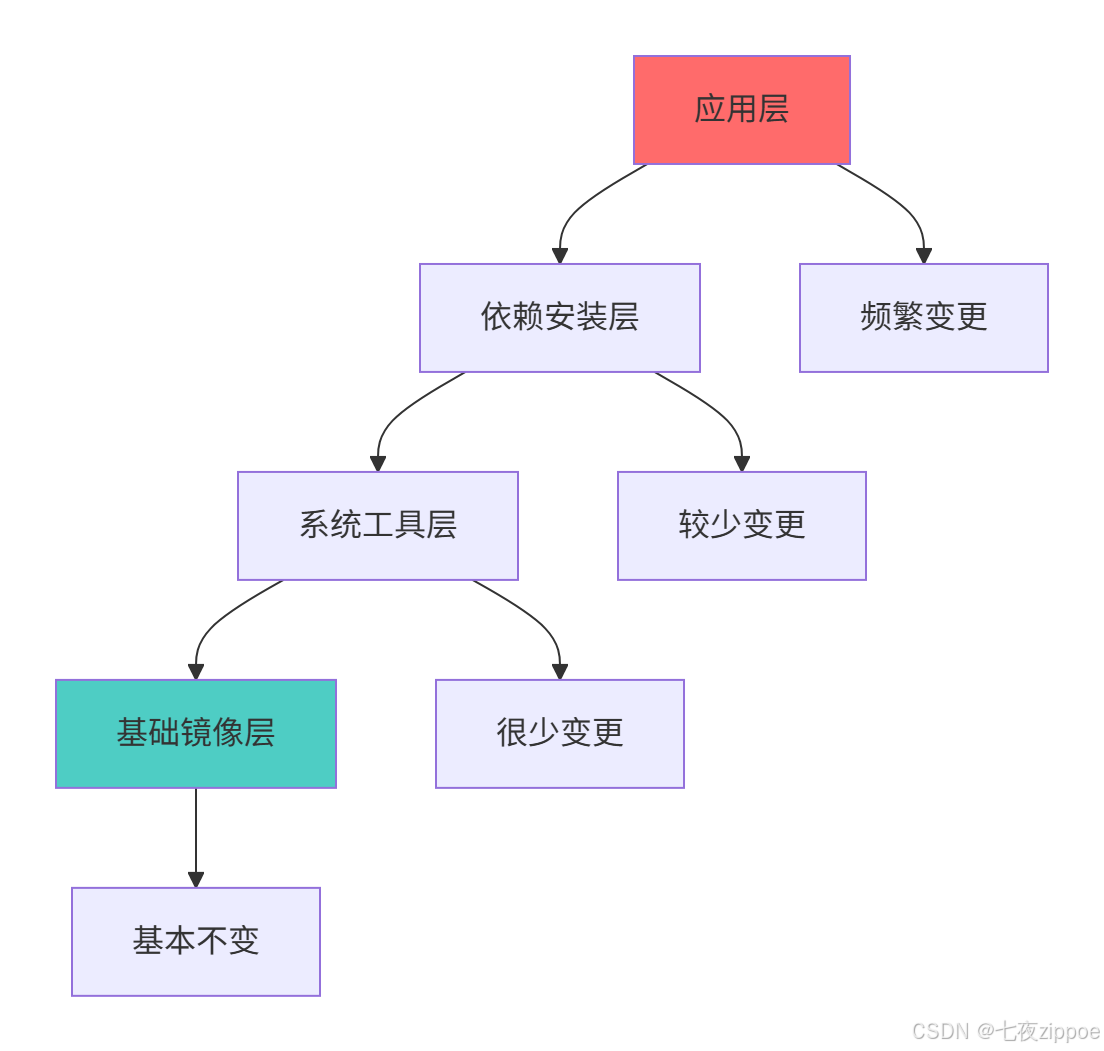

return stats2.1.2 镜像分层架构

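Key advantages of the layered architecture:

- Build cache: unchanged layers are reused, which speeds up builds
- Storage efficiency: identical layers are shared between images
- Change tracking: each layer corresponds to a specific change, which makes rollbacks easier to reason about
- Security scanning: vulnerabilities can be analyzed layer by layer to see where they were introduced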
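To make the build-cache point concrete, here is a minimal sketch of how instruction order affects cache reuse. It is a toy model, not Docker's actual cache implementation: the functions `layer_cache_keys` and `count_cache_hits` and the fingerprints such as `src-1` are hypothetical names chosen for illustration. The one property it borrows from real builds is that a layer can be reused only if every layer before it was reused too.

```python
import hashlib
from typing import List, Tuple


def layer_cache_keys(instructions: List[Tuple[str, str]]) -> List[str]:
    """Chain one cache key per instruction.

    Each key covers the parent key, the instruction text, and a fingerprint
    of the files the instruction reads (empty when it reads none).
    """
    keys = []
    parent = ""
    for text, inputs_fingerprint in instructions:
        digest = hashlib.sha256(f"{parent}|{text}|{inputs_fingerprint}".encode()).hexdigest()
        keys.append(digest[:12])
        parent = digest
    return keys


def count_cache_hits(previous: List[str], current: List[str]) -> int:
    """Count reusable layers: reuse stops at the first key that differs."""
    hits = 0
    for old, new in zip(previous, current):
        if old != new:
            break
        hits += 1
    return hits


# Hypothetical rebuild in which only the application source changed.
deps_first_v1 = [("FROM python:3.11-slim", ""),
                 ("COPY requirements.txt .", "req-a"),
                 ("RUN pip install -r requirements.txt", ""),
                 ("COPY . .", "src-1")]
deps_first_v2 = deps_first_v1[:3] + [("COPY . .", "src-2")]

code_first_v1 = [("FROM python:3.11-slim", ""),
                 ("COPY . .", "src-1"),
                 ("RUN pip install -r requirements.txt", "")]
code_first_v2 = [code_first_v1[0], ("COPY . .", "src-2"), code_first_v1[2]]

hits_deps_first = count_cache_hits(layer_cache_keys(deps_first_v1),
                                   layer_cache_keys(deps_first_v2))
hits_code_first = count_cache_hits(layer_cache_keys(code_first_v1),
                                   layer_cache_keys(code_first_v2))
print(hits_deps_first, hits_code_first)  # prints "3 1"
```

In this model, copying `requirements.txt` and installing dependencies before copying the source keeps the expensive `pip install` layer cached across code-only changes, whereas copying the source first invalidates it on every edit.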

2.2 多阶段构建原理深度解析

2.2.1 多阶段构建机制

python

# multi_stage_build.py

from typing import Dict, List

class BuildStage:

"""构建阶段类"""

def __init__(self, name: str, base_image: str, purpose: str):

self.name = name

self.base_image = base_image

self.purpose = purpose

self.artifacts = []

self.size_contributions = 0

def add_artifact(self, artifact_path: str, size: int, description: str):

"""添加构建产物"""

self.artifacts.append({

'path': artifact_path,

'size': size,

'description': description

})

self.size_contributions += size

def get_stage_summary(self) -> Dict:

"""获取阶段摘要"""

return {

'name': self.name,

'base_image': self.base_image,

'purpose': self.purpose,

'artifact_count': len(self.artifacts),

'total_size': self.size_contributions,

'artifacts': self.artifacts

}

class MultiStageBuild:

"""多阶段构建模拟器"""

def __init__(self):

self.stages = []

self.final_artifacts = []

def add_builder_stage(self, stage_name: str = "builder"):

"""添加构建阶段"""

builder = BuildStage(stage_name, "python:3.9-slim", "编译和依赖安装")

# 模拟构建阶段的产物

builder.add_artifact("/usr/local/lib/python3.9/site-packages/", 150000,

"Python依赖包")

builder.add_artifact("/tmp/build/compiled.so", 50000, "编译的C扩展")

self.stages.append(builder)

return builder

def add_runtime_stage(self, stage_name: str = "runtime"):

"""添加运行时阶段"""

runtime = BuildStage(stage_name, "python:3.9-alpine", "轻量级运行时")

self.stages.append(runtime)

return runtime

def copy_artifacts(self, from_stage: BuildStage, artifacts: List[str]):

"""复制构建产物到最终阶段"""

runtime_stage = self.stages[-1] # 最后一个阶段是运行时

for artifact_path in artifacts:

# 查找源阶段的产物

for artifact in from_stage.artifacts:

if artifact['path'] == artifact_path:

runtime_stage.add_artifact(artifact_path, artifact['size'],

f"从{from_stage.name}复制: {artifact['description']}")

self.final_artifacts.append(artifact_path)

break

def calculate_savings(self) -> Dict:

"""计算多阶段构建的节省"""

if len(self.stages) < 2:

return {'savings_percent': 0, 'size_reduction': 0}

# 假设单阶段构建会包含所有内容

single_stage_size = sum(stage.size_contributions for stage in self.stages)

# 多阶段构建只包含运行时阶段的大小加上复制的产物

runtime_stage = self.stages[-1]

multi_stage_size = runtime_stage.size_contributions

savings = single_stage_size - multi_stage_size

savings_percent = (savings / single_stage_size) * 100 if single_stage_size > 0 else 0

return {

'single_stage_size': single_stage_size,

'multi_stage_size': multi_stage_size,

'size_reduction': savings,

'savings_percent': savings_percent

}

# 多阶段构建演示

def demonstrate_multi_stage():

"""演示多阶段构建优势"""

build = MultiStageBuild()

# 构建阶段

builder = build.add_builder_stage()

# 运行时阶段

runtime = build.add_runtime_stage()

# 只复制必要的运行时产物

build.copy_artifacts(builder, [

"/usr/local/lib/python3.9/site-packages/",

"/tmp/build/compiled.so"

])

savings = build.calculate_savings()

print("=== 多阶段构建分析 ===")

print("构建阶段详情:")

for stage in build.stages:

summary = stage.get_stage_summary()

print(f"阶段: {summary['name']}")

print(f" 基础镜像: {summary['base_image']}")

print(f" 用途: {summary['purpose']}")

print(f" 产物数量: {summary['artifact_count']}")

print(f" 大小贡献: {summary['total_size']} 字节")

print(f"\n多阶段构建节省:")

print(f"单阶段构建大小: {savings['single_stage_size']} 字节")

print(f"多阶段构建大小: {savings['multi_stage_size']} 字节")

print(f"大小减少: {savings['size_reduction']} 字节 ({savings['savings_percent']:.1f}%)")

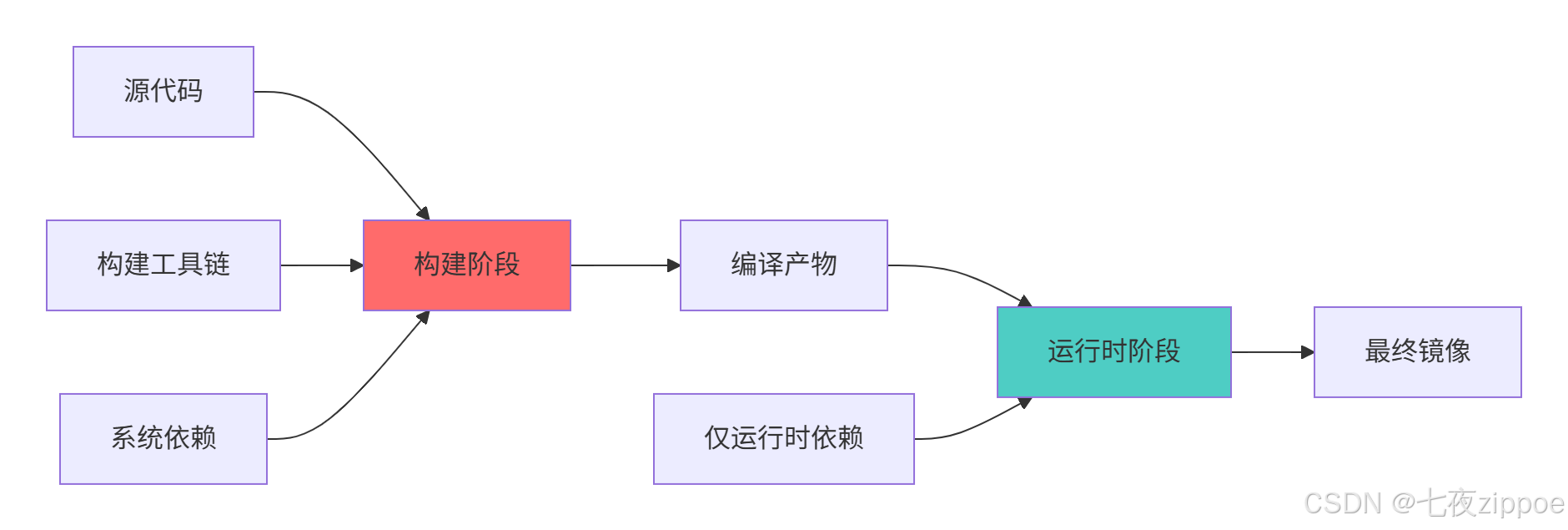

return build.stages, savings2.2.2 多阶段构建流程

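What multi-stage builds offer:

- Smaller images: build tools and intermediate files stay out of the final image
- Better security: a smaller attack surface, with no compiler toolchain in production
- Build efficiency: with BuildKit, stages that do not depend on each other can be built in parallel
- Maintainability: build concerns and runtime concerns are clearly separated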
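The separation between stages can also be seen as a small dependency graph. The following is a rough sketch, assuming a simplified Dockerfile in which every stage is named with `AS` and stages are referenced only through `FROM <stage>` or `COPY --from=<stage>`. The helper names `stage_dependencies` and `stages_needed` are hypothetical, and the regular expressions are deliberately naive; this is not how Docker parses Dockerfiles.

```python
import re
from typing import Dict, Set

FROM_RE = re.compile(r"FROM\s+(\S+)(?:\s+AS\s+(\S+))?", re.IGNORECASE)
COPY_FROM_RE = re.compile(r"COPY\s+.*--from=(\S+)", re.IGNORECASE)


def stage_dependencies(dockerfile: str) -> Dict[str, Set[str]]:
    """Map each stage to the earlier stages it reads from."""
    deps: Dict[str, Set[str]] = {}
    current = None
    for raw in dockerfile.splitlines():
        line = raw.strip()
        match = FROM_RE.match(line)
        if match:
            base, alias = match.groups()
            current = alias or f"stage-{len(deps)}"
            # A base that names an earlier stage is a dependency;
            # anything else is an external image
            deps[current] = {base} if base in deps else set()
            continue
        match = COPY_FROM_RE.match(line)
        if match and current is not None and match.group(1) in deps:
            deps[current].add(match.group(1))
    return deps


def stages_needed(deps: Dict[str, Set[str]], target: str) -> Set[str]:
    """Return the target stage plus everything it transitively depends on."""
    needed: Set[str] = set()
    stack = [target]
    while stack:
        stage = stack.pop()
        if stage not in needed:
            needed.add(stage)
            stack.extend(deps[stage])
    return needed


EXAMPLE = """
FROM python:3.11-slim AS builder
RUN pip install -r requirements.txt
FROM builder AS tests
RUN pytest
FROM python:3.11-slim AS production
COPY --from=builder /opt/venv /opt/venv
"""

deps = stage_dependencies(EXAMPLE)
needed = stages_needed(deps, "production")
skipped = sorted(set(deps) - needed)
print(sorted(needed), skipped)  # prints "['builder', 'production'] ['tests']"
```

When a build targets `production`, the `tests` stage in this example is not on its dependency path, which is why a builder that follows the graph has no reason to run it.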

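3 In Practice: Fully Dockerizing a Python Application

3.1 A Production-Grade Dockerfile

```dockerfile
# Dockerfile

# Multi-stage build: build stage
FROM python:3.11-slim AS builder

# Build arguments
ARG BUILD_ENV=production
ARG PIP_EXTRA_INDEX_URL=https://pypi.org/simple

# Environment variables
ENV PYTHONUNBUFFERED=1 \
    PYTHONDONTWRITEBYTECODE=1 \
    PIP_NO_CACHE_DIR=on \
    PIP_DISABLE_PIP_VERSION_CHECK=on

# System packages needed to build dependencies
RUN apt-get update && apt-get install -y --no-install-recommends \
        build-essential \
        curl \
        git \
    && rm -rf /var/lib/apt/lists/*

# Create a virtual environment
RUN python -m venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"

# Install Python dependencies
COPY requirements.txt .
RUN pip install --upgrade pip && \
    pip install -r requirements.txt

# Multi-stage build: production stage
FROM python:3.11-slim AS production

# ENV values do not carry over from the builder stage, so set them again
ENV PYTHONUNBUFFERED=1 \
    PYTHONDONTWRITEBYTECODE=1

# Runtime system packages (curl is used by the health check)
RUN apt-get update && apt-get install -y --no-install-recommends \
        curl \
    && rm -rf /var/lib/apt/lists/*

# Create a non-root user
RUN groupadd -r appuser && useradd -r -g appuser appuser

# Create the application directory and hand it to the non-root user
WORKDIR /app
RUN chown appuser:appuser /app

# Copy the virtual environment from the build stage
COPY --from=builder /opt/venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"

# Copy the application code with the right ownership
# (a later "chown -R" would only duplicate these files in another layer)
COPY --chown=appuser:appuser . .

USER appuser

# Health check
HEALTHCHECK --interval=30s --timeout=10s --start-period=5s --retries=3 \
    CMD curl -f http://localhost:8000/health || exit 1

# Expose the port
EXPOSE 8000

# Start command
CMD ["gunicorn", "--bind", "0.0.0.0:8000", "--workers", "4", "app:app"]
```

3.2 Supporting Configuration Files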
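One supporting file the Dockerfile in section 3.1 takes for granted is the application module itself: the `CMD` expects a WSGI callable named `app` in `app.py`, and the `HEALTHCHECK` expects it to answer `/health`. The article's stack uses Flask for this; the sketch below deliberately uses only the standard library so that it runs without extra dependencies. The response body and the `call` helper are assumptions made for illustration, not part of the original project.

```python
# app.py (hypothetical minimal WSGI application)
import json
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """Answer /health with 200 so the container HEALTHCHECK can pass."""
    if environ.get("PATH_INFO") == "/health":
        body = json.dumps({"status": "ok"}).encode()
        start_response("200 OK", [("Content-Type", "application/json"),
                                  ("Content-Length", str(len(body)))])
        return [body]

    body = b"not found"
    start_response("404 Not Found", [("Content-Type", "text/plain"),
                                     ("Content-Length", str(len(body)))])
    return [body]


def call(path):
    """Invoke the WSGI callable in-process, without starting a server."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], body


print(call("/health"))
```

Because `curl -f` treats HTTP status codes of 400 and above as failures, any route that returns a 2xx status at `/health` satisfies the check; a real endpoint would typically also verify that the database and cache are reachable.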

3.2.1 Dependency Management

```text
# requirements.txt

# Core framework
flask==3.0.0
gunicorn==21.2.0

# Database drivers
psycopg2-binary==2.9.7
redis==5.0.1

# Monitoring and logging
prometheus-client==0.17.1
structlog==23.1.0

# Security dependencies
cryptography==41.0.7
bcrypt==4.0.1

# Development tools (needed only during development)
# pytest==7.4.0
# black==23.7.0
# flake8==6.0.0
```

3.2.2 The Docker Ignore File

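```text
# Python-specific files
__pycache__/
*.py[cod]
*$py.class
*.so
.Python
.env
.venv
env/
venv/
ENV/

# Package management files
# (safe to exclude only because the image installs from requirements.txt)
Pipfile
Pipfile.lock
poetry.lock
pyproject.toml

# Test and coverage reports
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
.pytest_cache/

# Log files
*.log
logs/

# IDE settings
.vscode/
.idea/

# Git
.git
.gitignore

# Documentation
docs/_build/

# Temporary files
*.tmp
*.temp

# Files not needed in production
Dockerfile.dev
docker-compose.dev.yml
```

3.3 Container Orchestration Configuration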

```yaml
# docker-compose.yml

# Docker Compose v2 treats the top-level "version" key as obsolete and ignores it
version: '3.8'

services:
  web:
    build:
      context: .
      target: production
      args:
        BUILD_ENV: production
    image: my-python-app:${TAG:-latest}
    container_name: python-app
    ports:
      - "8000:8000"
    environment:
      - PYTHONUNBUFFERED=1
      - ENVIRONMENT=production
      # Credentials must match POSTGRES_USER / POSTGRES_PASSWORD of the db service
      - DATABASE_URL=postgresql://appuser:${DB_PASSWORD}@db:5432/app
    depends_on:
      - db
      - redis
    deploy:
      resources:
        limits:
          memory: 512M
          cpus: '1.0'
        reservations:
          memory: 256M
          cpus: '0.5'
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:8000/health"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 40s
    restart: unless-stopped
    networks:
      - app-network

  db:
    image: postgres:15-alpine
    container_name: postgres-db
    environment:
      - POSTGRES_DB=app
      - POSTGRES_USER=appuser
      - POSTGRES_PASSWORD=${DB_PASSWORD}
    volumes:
      - postgres_data:/var/lib/postgresql/data
      - ./init.sql:/docker-entrypoint-initdb.d/init.sql
    deploy:
      resources:
        limits:
          memory: 256M
          cpus: '0.5'
    restart: unless-stopped
    networks:
      - app-network

  redis:
    image: redis:7-alpine
    container_name: redis-cache
    command: redis-server --appendonly yes
    volumes:
      - redis_data:/data
    deploy:
      resources:
        limits:
          memory: 128M
          cpus: '0.25'
    restart: unless-stopped
    networks:
      - app-network

  nginx:
    image: nginx:1.24-alpine
    container_name: nginx-proxy
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - ./nginx.conf:/etc/nginx/nginx.conf
      - ./ssl:/etc/nginx/ssl
    depends_on:
      - web
    restart: unless-stopped
    networks:
      - app-network

volumes:
  postgres_data:
  redis_data:

networks:
  app-network:
    driver: bridge
```

4 Advanced Usage and Enterprise Practice

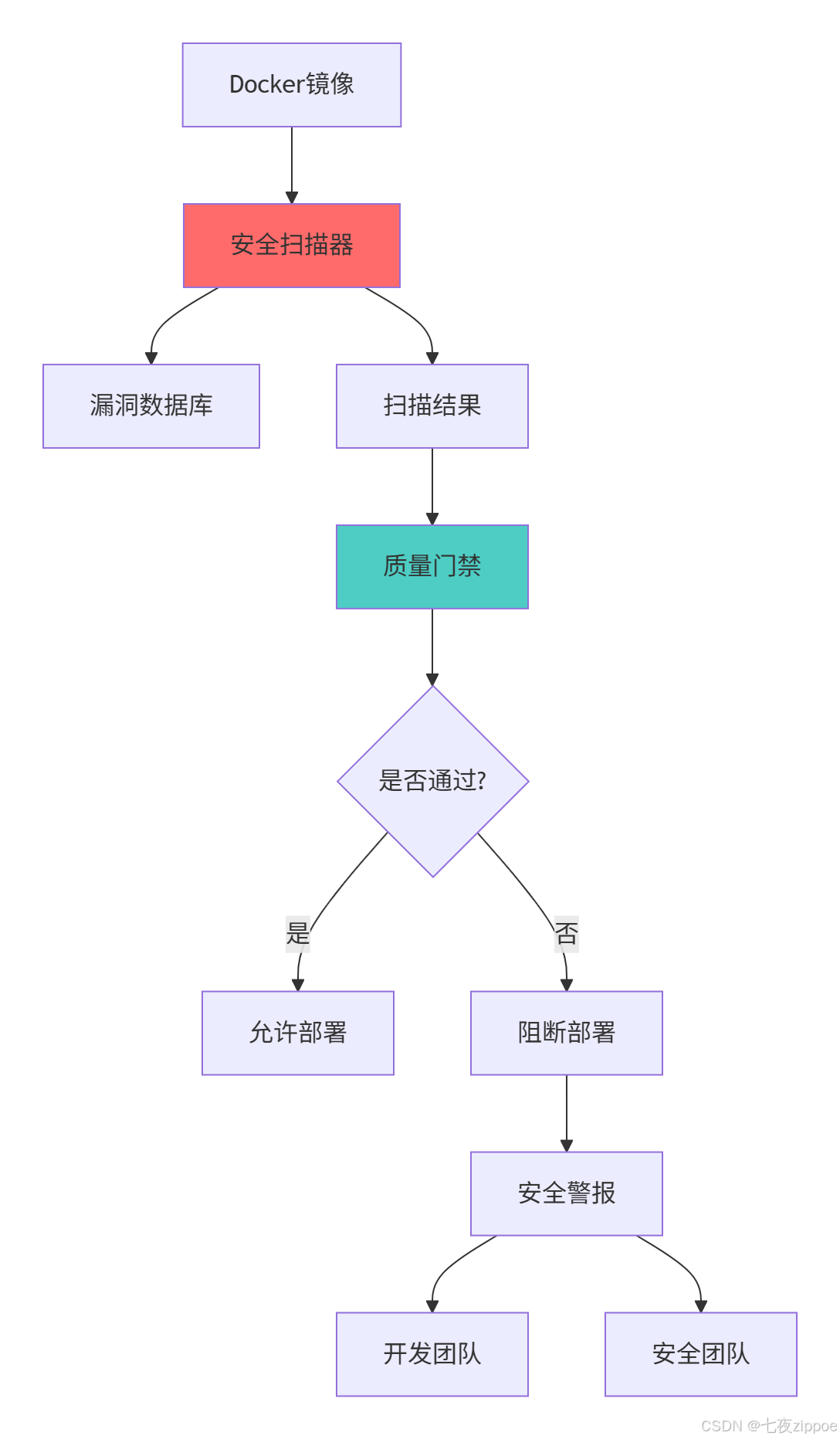

4.1 Security Scanning and Vulnerability Management

4.1.1 Automated Security Scanning

```python
# security_scanner.py
import json
import smtplib
import subprocess
import urllib.request
from email.mime.text import MIMEText
from typing import Dict, List, Optional


class DockerSecurityScanner:
    """Docker image security scanner."""

    def __init__(self, image_name: str, severity_level: str = "medium"):
        self.image_name = image_name
        self.severity_level = severity_level
        self.vulnerabilities: List[Dict] = []

    def scan_image(self) -> Dict:
        """Scan a Docker image for vulnerabilities."""
        try:
            # Scan with Docker Scout. The flags below and the JSON fields read
            # later ('findings', 'severity', 'fixAvailable', 'timestamp') are
            # this article's assumptions; check them against the Scout
            # version you run before relying on this code.
            cmd = [
                'docker', 'scout', 'quickview',
                self.image_name,
                '--format', 'json'
            ]
            result = subprocess.run(cmd, capture_output=True, text=True, timeout=300)

            if result.returncode == 0:
                scan_results = json.loads(result.stdout)
                return self._analyze_vulnerabilities(scan_results)
            else:
                return {'error': f'Scan failed: {result.stderr}'}

        except Exception as e:
            return {'error': f'Scan raised an exception: {str(e)}'}

    def _analyze_vulnerabilities(self, scan_data: Dict) -> Dict:
        """Filter and summarize the vulnerability data."""
        vulnerabilities = scan_data.get('findings', [])

        # Filter by severity
        severity_order = {'critical': 4, 'high': 3, 'medium': 2, 'low': 1}
        threshold = severity_order.get(self.severity_level, 2)

        filtered_vulns = []
        for vuln in vulnerabilities:
            vuln_severity = vuln.get('severity', 'unknown').lower()
            if severity_order.get(vuln_severity, 0) >= threshold:
                filtered_vulns.append(vuln)

        # Statistics
        stats = {
            'total': len(vulnerabilities),
            'filtered': len(filtered_vulns),
            'by_severity': {},
            'fix_available': 0
        }

        for vuln in vulnerabilities:
            # Normalize case so the quality gate can look up 'critical', 'high', ...
            severity = vuln.get('severity', 'unknown').lower()
            stats['by_severity'][severity] = stats['by_severity'].get(severity, 0) + 1
            if vuln.get('fixAvailable', False):
                stats['fix_available'] += 1

        return {
            'image': self.image_name,
            'scan_date': scan_data.get('timestamp', ''),
            'statistics': stats,
            'vulnerabilities': filtered_vulns
        }

    def generate_report(self, scan_results: Dict) -> str:
        """Build a plain-text security report."""
        if 'error' in scan_results:
            return f"Security scan failed: {scan_results['error']}"

        stats = scan_results['statistics']
        report = [
            "Docker image security scan report",
            f"Image: {scan_results['image']}",
            f"Scan time: {scan_results['scan_date']}",
            f"Total vulnerabilities: {stats['total']}",
            f"Vulnerabilities at {self.severity_level} severity or above: {stats['filtered']}",
            f"Vulnerabilities with a fix available: {stats['fix_available']}",
            "\nSeverity distribution:"
        ]

        for severity, count in stats['by_severity'].items():
            report.append(f"  {severity}: {count}")

        if scan_results['vulnerabilities']:
            report.append("\nDetails:")
            for i, vuln in enumerate(scan_results['vulnerabilities'][:10], 1):  # first 10 only
                report.append(f"{i}. {vuln.get('id', 'Unknown')} - {vuln.get('severity', 'Unknown')}")
                if vuln.get('description'):
                    report.append(f"   Description: {vuln['description'][:100]}...")

        return '\n'.join(report)


class SecurityCICD:
    """Security integration for CI/CD."""

    def __init__(self, slack_webhook: Optional[str] = None, email_config: Optional[Dict] = None):
        self.slack_webhook = slack_webhook
        self.email_config = email_config

    def check_scan_results(self, scan_results: Dict) -> bool:
        """Check whether the scan results pass the quality gate."""
        if 'error' in scan_results:
            return False

        stats = scan_results['statistics']

        # Quality gate rules
        rules = {
            'critical_max': 0,  # no critical vulnerabilities allowed
            'high_max': 0,      # no high vulnerabilities allowed
            'medium_max': 5,    # at most 5 medium vulnerabilities
            'total_max': 10     # at most 10 vulnerabilities in total
        }

        critical_count = stats['by_severity'].get('critical', 0)
        high_count = stats['by_severity'].get('high', 0)
        medium_count = stats['by_severity'].get('medium', 0)
        total_count = stats['total']

        return (critical_count <= rules['critical_max'] and
                high_count <= rules['high_max'] and
                medium_count <= rules['medium_max'] and
                total_count <= rules['total_max'])

    def send_alert(self, scan_results: Dict, passed: bool):
        """Send a security alert through every configured channel."""
        message = self._format_alert_message(scan_results, passed)

        if self.slack_webhook:
            self._send_slack_alert(message)

        if self.email_config:
            self._send_email_alert(message)

    def _format_alert_message(self, scan_results: Dict, passed: bool) -> str:
        """Format the alert message."""
        if 'error' in scan_results:
            # A failed scan has no statistics to report
            return f"Security scan quality gate: failed\n{scan_results['error']}"

        status = "passed" if passed else "failed"
        stats = scan_results['statistics']

        message = [
            f"Security scan quality gate: {status}",
            f"Image: {scan_results['image']}",
            f"Total vulnerabilities: {stats['total']}",
            f"Severity distribution: {dict(stats['by_severity'])}"
        ]

        if not passed:
            message.append("❌ The image does not meet the security standard; deployment is blocked!")
        else:
            message.append("✅ The image meets the security standard; deployment is allowed.")

        return '\n'.join(message)

    def _send_slack_alert(self, message: str) -> None:
        """POST the message to a Slack incoming webhook."""
        payload = json.dumps({'text': message}).encode('utf-8')
        request = urllib.request.Request(
            self.slack_webhook, data=payload,
            headers={'Content-Type': 'application/json'})
        urllib.request.urlopen(request, timeout=10)

    def _send_email_alert(self, message: str) -> None:
        """Send the message over SMTP (the email_config keys are assumed names)."""
        mail = MIMEText(message)
        mail['Subject'] = 'Docker image security scan'
        mail['From'] = self.email_config['from']
        mail['To'] = self.email_config['to']
        with smtplib.SMTP(self.email_config['host'], self.email_config.get('port', 25)) as smtp:
            smtp.send_message(mail)


def demonstrate_security_scan():
    """Demonstrate the security scanning flow."""
    # Note: build a test image before running this for real
    test_image = "my-python-app:latest"

    scanner = DockerSecurityScanner(test_image, "medium")
    results = scanner.scan_image()

    report = scanner.generate_report(results)
    print(report)

    # CI/CD quality gate
    cicd = SecurityCICD()
    passed = cicd.check_scan_results(results)
    cicd.send_alert(results, passed)

    return results, passed
```

4.1.2 Security Scanning Architecture
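The flow from section 4.1.1 is scan, analyze, gate, notify. In a CI pipeline the gate usually surfaces as the exit status of a step: zero lets the deployment proceed, non-zero stops it. The following is a minimal sketch of that last mile under stated assumptions: `DEFAULT_LIMITS`, `gate_violations`, and `gate_exit_code` are hypothetical names, and the input is assumed to be a severity-to-count mapping like the `by_severity` dictionary produced above.

```python
from typing import Dict, List

# Assumed thresholds, mirroring the quality gate rules in section 4.1.1
DEFAULT_LIMITS = {"critical": 0, "high": 0, "medium": 5}


def gate_violations(by_severity: Dict[str, int],
                    limits: Dict[str, int] = DEFAULT_LIMITS) -> List[str]:
    """List every severity whose count exceeds its limit."""
    counts: Dict[str, int] = {}
    for severity, count in by_severity.items():
        key = severity.lower()  # scanners differ in how they capitalize severities
        counts[key] = counts.get(key, 0) + count
    return [
        f"{severity}: {counts.get(severity, 0)} found, {limit} allowed"
        for severity, limit in limits.items()
        if counts.get(severity, 0) > limit
    ]


def gate_exit_code(by_severity: Dict[str, int]) -> int:
    """Return the process exit code a CI step would use: 0 passes, 1 blocks."""
    violations = gate_violations(by_severity)
    for violation in violations:
        print("GATE FAILED -", violation)
    return 1 if violations else 0


exit_code = gate_exit_code({"CRITICAL": 1, "medium": 2, "low": 9})
print("exit code:", exit_code)  # prints "exit code: 1"
```

A pipeline script would pass the returned value to `sys.exit`, so that a blocked image fails the job rather than relying on someone reading a report.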

4.2 Performance Optimization and Monitoring

4.2.1 Container Performance Optimization

```python
# performance_optimizer.py
import time
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ContainerMetrics:
    """Container performance metrics."""
    container_id: str
    cpu_percent: float
    memory_usage: int
    memory_limit: int
    network_io: Dict[str, int]
    timestamp: float


class ContainerPerformanceMonitor:
    """Container performance monitor."""

    def __init__(self, check_interval: int = 30):
        self.check_interval = check_interval
        self.metrics_history = []

    def get_container_metrics(self, container_id: str) -> Optional[ContainerMetrics]:
        """Fetch performance metrics for a container."""
        try:
            # Read metrics through the Docker stats API (Docker SDK for Python)
            import docker
            client = docker.from_env()
            container = client.containers.get(container_id)
            stats = container.stats(stream=False)

            # CPU usage: container delta over system delta, scaled by CPU count
            cpu_delta = stats['cpu_stats']['cpu_usage']['total_usage'] - stats['precpu_stats']['cpu_usage']['total_usage']
            system_delta = stats['cpu_stats']['system_cpu_usage'] - stats['precpu_stats']['system_cpu_usage']
            online_cpus = stats['cpu_stats'].get('online_cpus', 1)
            cpu_percent = (cpu_delta / system_delta) * online_cpus * 100 if system_delta > 0 else 0

            # Memory usage
            memory_usage = stats['memory_stats']['usage']
            memory_limit = stats['memory_stats']['limit']

            # Network I/O, summed over all interfaces (eth0 is not guaranteed to exist)
            interfaces = stats.get('networks', {}).values()
            network_io = {
                'rx_bytes': sum(iface.get('rx_bytes', 0) for iface in interfaces),
                'tx_bytes': sum(iface.get('tx_bytes', 0) for iface in interfaces)
            }

            return ContainerMetrics(
                container_id=container_id,
                cpu_percent=round(cpu_percent, 2),
                memory_usage=memory_usage,
                memory_limit=memory_limit,
                network_io=network_io,
                timestamp=time.time()
            )

        except Exception as e:
            print(f"Failed to fetch container metrics: {e}")
            return None

    @staticmethod
    def _memory_percent(metrics: ContainerMetrics) -> float:
        """Memory usage as a percentage of the limit (0 when no limit is reported)."""
        if metrics.memory_limit <= 0:
            return 0.0
        return (metrics.memory_usage / metrics.memory_limit) * 100

    def detect_performance_issues(self, metrics: ContainerMetrics) -> List[str]:
        """Detect performance problems."""
        issues = []

        # CPU usage check
        if metrics.cpu_percent > 80:
            issues.append(f"CPU usage too high: {metrics.cpu_percent}%")

        # Memory usage check
        memory_percent = self._memory_percent(metrics)
        if memory_percent > 85:
            issues.append(f"Memory usage too high: {memory_percent:.1f}%")

        # Memory limit check
        if metrics.memory_limit < 100 * 1024 * 1024:  # below 100 MB
            issues.append("Memory limit is very low and may hurt application performance")

        return issues

    def generate_optimization_recommendations(self, metrics: ContainerMetrics) -> Dict:
        """Generate optimization recommendations."""
        recommendations = {}
        memory_percent = self._memory_percent(metrics)

        # CPU recommendation
        if metrics.cpu_percent > 70:
            recommendations['cpu'] = {
                'issue': 'High CPU usage',
                'suggestion': 'Consider raising the CPU quota or optimizing the application code',
                'action': 'Increase the cpus value in docker-compose'
            }

        # Memory recommendation
        if memory_percent > 80:
            recommendations['memory'] = {
                'issue': 'High memory usage',
                'suggestion': 'Consider raising the memory limit or reducing memory use',
                'action': 'Increase the memory limit in docker-compose'
            }
        elif memory_percent < 30:
            recommendations['memory'] = {
                'issue': 'Memory over-allocated',
                'suggestion': 'The memory limit can be lowered to free resources',
                'action': 'Reduce the memory limit in docker-compose'
            }

        # Base image recommendation
        recommendations['base_image'] = {
            'issue': 'Image size',
            'suggestion': 'Use an Alpine base image or a multi-stage build',
            'action': 'Switch the Dockerfile to python:3.11-alpine (check that your compiled dependencies support musl)'
        }

        return recommendations


class ResourceOptimizer:
    """Resource optimizer."""

    @staticmethod
    def optimize_dockerfile() -> str:
        """Return the contents of an optimized Dockerfile."""
        # Raw string: keeps the trailing backslashes as Dockerfile line continuations
        optimized_dockerfile = r'''
# Use an Alpine base image to reduce size
FROM python:3.11-alpine AS builder

# Install build dependencies
RUN apk add --no-cache \
    build-base \
    linux-headers \
    pcre-dev

# Create a virtual environment
RUN python -m venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"

# Install dependencies
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Production stage
FROM python:3.11-alpine

# Install runtime dependencies
RUN apk add --no-cache pcre

# Copy the virtual environment
COPY --from=builder /opt/venv /opt/venv
ENV PATH="/opt/venv/bin:$PATH"

# Application code
WORKDIR /app
COPY . .

# Non-root user
RUN adduser -D appuser && chown -R appuser:appuser /app
USER appuser

CMD ["gunicorn", "--bind", "0.0.0.0:8000", "app:app"]
'''
        return optimized_dockerfile

    @staticmethod
    def calculate_savings(original_size: int, optimized_size: int) -> Dict:
        """Compute the size saved by an optimization (sizes in bytes)."""
        savings = original_size - optimized_size
        savings_percent = (savings / original_size) * 100 if original_size > 0 else 0

        return {
            'original_size_mb': original_size / (1024 * 1024),
            'optimized_size_mb': optimized_size / (1024 * 1024),
            'savings_mb': savings / (1024 * 1024),
            'savings_percent': savings_percent
        }
```

5 Enterprise Case Study

5.1 Containerizing an E-Commerce Platform

```python
# ecommerce_dockerization.py
from typing import Dict

import yaml  # PyYAML


class ECommerceDockerization:
    """Case study: containerizing an e-commerce platform."""

    def __init__(self):
        self.services = {
            'frontend': 'Vue.js frontend',
            'backend': 'Python Flask backend',
            'auth': 'Authentication service',
            'payment': 'Payment service',
            'inventory': 'Inventory service',
            'recommendation': 'Recommendation service'
        }

    def generate_production_config(self) -> Dict:
        """Generate the production configuration."""
        # Base configuration
        config = {
            'version': '3.8',
            'services': {},
            'volumes': {
                'postgres_data': {},
                'redis_data': {},
                'es_data': {}
            },
            'networks': {
                'ecommerce-net': {
                    'driver': 'bridge',
                    'ipam': {
                        'config': [{'subnet': '172.20.0.0/16'}]
                    }
                }
            }
        }

        # Backend service.
        # No container_name here: a fixed name cannot be combined with replicas > 1.
        config['services']['backend'] = {
            'build': {
                'context': './backend',
                'target': 'production',
                'args': {
                    'BUILD_ENV': 'production'
                }
            },
            'image': 'ecommerce/backend:${TAG:-latest}',
            'ports': ['8000:8000'],
            'environment': {
                'ENVIRONMENT': 'production',
                # User must match POSTGRES_USER of the db service
                'DATABASE_URL': 'postgresql://appuser:${DB_PASSWORD}@db:5432/ecommerce',
                # Password must match --requirepass of the redis service
                'REDIS_URL': 'redis://:${REDIS_PASSWORD}@redis:6379/0',
                'ELASTICSEARCH_URL': 'http://elasticsearch:9200',
                'JWT_SECRET': '${JWT_SECRET}'
            },
            'depends_on': {
                'db': {'condition': 'service_healthy'},
                'redis': {'condition': 'service_started'},
                'elasticsearch': {'condition': 'service_healthy'}
            },
            'deploy': {
                'resources': {
                    'limits': {'memory': '1G', 'cpus': '1.0'},
                    'reservations': {'memory': '512M', 'cpus': '0.5'}
                },
                'replicas': 3,
                'update_config': {
                    'parallelism': 1,
                    'delay': '10s',
                    'order': 'start-first'
                },
                'restart_policy': {
                    'condition': 'on-failure',
                    'delay': '5s',
                    'max_attempts': 3
                }
            },
            'healthcheck': {
                'test': ['CMD', 'curl', '-f', 'http://localhost:8000/health'],
                'interval': '30s',
                'timeout': '10s',
                'retries': 3,
                'start_period': '60s'
            },
            'networks': ['ecommerce-net'],
            'logging': {
                'driver': 'json-file',
                'options': {
                    'max-size': '10m',
                    'max-file': '3'
                }
            }
        }

        # Database service
        config['services']['db'] = {
            'image': 'postgres:15-alpine',
            'container_name': 'ecommerce-db',
            'environment': {
                'POSTGRES_DB': 'ecommerce',
                'POSTGRES_USER': 'appuser',
                'POSTGRES_PASSWORD': '${DB_PASSWORD}'
            },
            'volumes': [
                'postgres_data:/var/lib/postgresql/data',
                './backend/init.sql:/docker-entrypoint-initdb.d/init.sql'
            ],
            'healthcheck': {
                'test': ['CMD-SHELL', 'pg_isready -U appuser'],
                'interval': '10s',
                'timeout': '5s',
                'retries': 5
            },
            'deploy': {
                'resources': {
                    'limits': {'memory': '2G', 'cpus': '1.0'},
                    'reservations': {'memory': '1G', 'cpus': '0.5'}
                }
            },
            'networks': ['ecommerce-net']
        }

        # Redis cache
        config['services']['redis'] = {
            'image': 'redis:7-alpine',
            'container_name': 'ecommerce-redis',
            'command': 'redis-server --appendonly yes --requirepass ${REDIS_PASSWORD}',
            'volumes': ['redis_data:/data'],
            'healthcheck': {
                # Authenticate, since the server requires a password
                'test': ['CMD-SHELL', 'redis-cli -a "${REDIS_PASSWORD}" ping | grep -q PONG'],
                'interval': '10s',
                'timeout': '5s',
                'retries': 3
            },
            'deploy': {
                'resources': {
                    'limits': {'memory': '512M', 'cpus': '0.5'},
                    'reservations': {'memory': '256M', 'cpus': '0.25'}
                }
            },
            'networks': ['ecommerce-net']
        }

        # Elasticsearch search
        config['services']['elasticsearch'] = {
            'image': 'elasticsearch:8.8.0',
            'container_name': 'ecommerce-es',
            'environment': {
                'discovery.type': 'single-node',
                'xpack.security.enabled': 'false',
                'ES_JAVA_OPTS': '-Xms512m -Xmx512m'
            },
            'volumes': ['es_data:/usr/share/elasticsearch/data'],
            'healthcheck': {
                'test': ['CMD', 'curl', '-f', 'http://localhost:9200/_cluster/health'],
                'interval': '30s',
                'timeout': '10s',
                'retries': 3
            },
            'deploy': {
                'resources': {
                    'limits': {'memory': '1G', 'cpus': '1.0'},
                    'reservations': {'memory': '512M', 'cpus': '0.5'}
                }
            },
            'networks': ['ecommerce-net']
        }

        return config

    def generate_monitoring_config(self) -> Dict:
        """Generate the monitoring configuration."""
        # Images use the "latest" tag as in the original; pin versions in production
        return {
            'version': '3.8',
            'services': {
                'prometheus': {
                    'image': 'prom/prometheus:latest',
                    'ports': ['9090:9090'],
                    'volumes': [
                        './monitoring/prometheus.yml:/etc/prometheus/prometheus.yml',
                        'prometheus_data:/prometheus'
                    ],
                    'command': [
                        '--config.file=/etc/prometheus/prometheus.yml',
                        '--storage.tsdb.path=/prometheus',
                        '--web.console.libraries=/etc/prometheus/console_libraries',
                        '--web.console.templates=/etc/prometheus/consoles',
                        '--web.enable-lifecycle'
                    ],
                    'networks': ['ecommerce-net']
                },
                'grafana': {
                    'image': 'grafana/grafana:latest',
                    'ports': ['3000:3000'],
                    'volumes': [
                        'grafana_data:/var/lib/grafana',
                        './monitoring/dashboards:/etc/grafana/provisioning/dashboards'
                    ],
                    'environment': {
                        'GF_SECURITY_ADMIN_PASSWORD': '${GRAFANA_PASSWORD}'
                    },
                    'depends_on': ['prometheus'],
                    'networks': ['ecommerce-net']
                },
                'node-exporter': {
                    'image': 'prom/node-exporter:latest',
                    'volumes': ['/proc:/host/proc:ro', '/sys:/host/sys:ro', '/:/rootfs:ro'],
                    'command': [
                        '--path.procfs=/host/proc',
                        '--path.sysfs=/host/sys',
                        # Current name of the former --collector.filesystem.ignored-mount-points flag
                        '--collector.filesystem.mount-points-exclude='
                        '^/(sys|proc|dev|host|etc|rootfs/var/lib/docker/containers|rootfs/var/lib/docker/overlay2|rootfs/run/docker/netns|rootfs/var/lib/docker/aufs)($$|/)'
                    ],
                    'networks': ['ecommerce-net']
                }
            },
            'volumes': {
                'prometheus_data': {},
                'grafana_data': {}
            },
            'networks': {
                'ecommerce-net': {
                    'external': True
                }
            }
        }


def demonstrate_ecommerce_config():
    """Demonstrate generating the e-commerce platform configuration."""
    dockerizer = ECommerceDockerization()

    production_config = dockerizer.generate_production_config()
    monitoring_config = dockerizer.generate_monitoring_config()

    print("=== Production Docker Compose configuration ===")
    print(yaml.dump(production_config, default_flow_style=False))

    print("\n=== Monitoring stack Docker Compose configuration ===")
    print(yaml.dump(monitoring_config, default_flow_style=False))

    return production_config, monitoring_config
```

5.1.1 E-Commerce Platform Architecture Diagram
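One way to read the platform architecture is as a dependency graph between services. As a rough sketch, the start order implied by the `depends_on` entries in section 5.1 can be derived with a topological sort. The `startup_order` helper is a hypothetical name, the `services` dictionary below is a trimmed stand-in for the generated configuration, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter
from typing import Dict, List


def startup_order(services: Dict[str, dict]) -> List[str]:
    """Order services so that each one comes after everything it depends on."""
    graph = {}
    for name, spec in services.items():
        # depends_on may be a list of names or a mapping of name -> condition;
        # iterating either form yields the service names
        graph[name] = set(spec.get("depends_on", []))
    return list(TopologicalSorter(graph).static_order())


# Trimmed stand-in for the services generated in section 5.1
services = {
    "backend": {"depends_on": {"db": {"condition": "service_healthy"},
                               "redis": {"condition": "service_started"},
                               "elasticsearch": {"condition": "service_healthy"}}},
    "db": {},
    "redis": {},
    "elasticsearch": {},
}

order = startup_order(services)
print(order)  # "backend" comes last; the order of the other three may vary
```

Start order alone does not guarantee readiness, which is why the backend in section 5.1 waits on `service_healthy` for the database and Elasticsearch instead of relying on ordering. A circular dependency would raise `graphlib.CycleError` here.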

6 Troubleshooting and Optimization Guide

6.1 Solutions to Common Problems

```python
# troubleshooting.py
import json
import subprocess
from typing import Dict, List


class DockerTroubleshooter:
    """Docker troubleshooter."""

    def __init__(self):
        self.common_issues_db = self._initialize_issues_database()

    def _initialize_issues_database(self) -> Dict:
        """Build the database of common problems."""
        return {
            'container_crash': {
                # Exit code 137 = 128 + 9 (SIGKILL), typical of an out-of-memory kill
                'symptoms': ['Container restarts frequently', 'Exit Code 137', 'Out of memory'],
                'causes': ['Memory limit too low', 'Memory leak in the application', 'Host short on resources'],
                'solutions': [
                    'Raise the memory limit: docker run -m 512m',
                    'Inspect the application\'s memory usage',
                    'Monitor host resource usage'
                ]
            },
            'slow_build': {
                'symptoms': ['Build takes too long', 'Network timeout', 'Slow dependency downloads'],
                'causes': ['Network problems', 'Layer cache invalidated', 'Too many dependencies'],
                'solutions': [
                    'Use a package mirror close to the build machine',
                    'Reorder Dockerfile instructions to keep the cache valid',
                    'Use a multi-stage build'
                ]
            },
            'image_size': {
                'symptoms': ['Image too large', 'Slow push and pull', 'Running out of storage'],
                'causes': ['Unnecessary files included', 'No multi-stage build', 'Base image too large'],
                'solutions': [
                    'Use an Alpine base image',
                    'Adopt a multi-stage build',
                    'Add a .dockerignore file'
                ]
            }
        }

    def diagnose_issue(self, symptoms: List[str]) -> Dict:
        """Diagnose a Docker problem from reported symptoms."""
        matched_issues = []
        # Compare case-insensitively on both sides
        reported = ' '.join(symptoms).lower()

        for issue_id, issue_info in self.common_issues_db.items():
            # Match if any known symptom appears in the reported text
            symptom_match = any(known.lower() in reported
                                for known in issue_info['symptoms'])

            if symptom_match:
                matched_issues.append({
                    'issue_id': issue_id,
                    'symptoms': issue_info['symptoms'],
                    'causes': issue_info['causes'],
                    'solutions': issue_info['solutions']
                })

        return {
            'matched_issues': matched_issues,
            'diagnosis_summary': f"Found {len(matched_issues)} possible problem(s)"
        }

    def check_container_health(self, container_id: str) -> Dict:
        """Check a container's health status."""
        try:
            # Fetch the container details
            result = subprocess.run([
                'docker', 'inspect', container_id
            ], capture_output=True, text=True)

            if result.returncode == 0:
                container_info = json.loads(result.stdout)[0]

                # Analyze the state
                state = container_info['State']
                health = state.get('Health', {})

                return {
                    'status': state['Status'],
                    'running': state['Running'],
                    'exit_code': state.get('ExitCode', 0),
                    'health_status': health.get('Status', 'no healthcheck'),
                    # RestartCount is a top-level field, not part of State
                    'restart_count': container_info.get('RestartCount', 0),
                    'started_at': state.get('StartedAt', ''),
                    'finished_at': state.get('FinishedAt', '')
                }
            else:
                return {'error': f'Container inspection failed: {result.stderr}'}

        except Exception as e:
            return {'error': f'Container inspection raised an exception: {str(e)}'}

    def analyze_image_layers(self, image_name: str) -> Dict:
        """Analyze an image's layers."""
        try:
            # --human=false reports sizes as plain byte counts so int() can parse
            # them; "--format json" needs a reasonably recent Docker CLI
            result = subprocess.run([
                'docker', 'history', '--no-trunc', '--human=false',
                '--format', 'json', image_name
            ], capture_output=True, text=True)

            if result.returncode == 0:
                layers = [json.loads(line) for line in result.stdout.strip().split('\n') if line]

                # Analyze layer sizes
                total_size = sum(int(layer.get('Size', 0)) for layer in layers)
                largest_layers = sorted(layers, key=lambda x: int(x.get('Size', 0)), reverse=True)[:5]

                return {
                    'total_layers': len(layers),
                    'total_size': total_size,
                    'largest_layers': [
                        {
                            'created_by': layer['CreatedBy'][:100],
                            'size': int(layer['Size']),
                            'size_mb': round(int(layer['Size']) / (1024 * 1024), 2)
                        }
                        for layer in largest_layers
                    ]
                }
            else:
                return {'error': f'Image analysis failed: {result.stderr}'}

        except Exception as e:
            return {'error': f'Image analysis raised an exception: {str(e)}'}


class PerformanceOptimizer:
    """Performance optimizer."""

    @staticmethod
    def optimize_build_time() -> List[str]:
        """Suggestions for faster builds."""
        return [
            "Use the build cache: put rarely changing instructions early in the Dockerfile",
            "Use multi-stage builds: smaller final images and shorter builds",
            "Use .dockerignore: keep unnecessary files out of the build context",
            "Build in parallel: build multiple services at the same time",
            "Use faster mirrors: configure a registry or package mirror close to you"
        ]

    @staticmethod
    def optimize_runtime_performance() -> List[str]:
        """Suggestions for better runtime performance."""
        return [
            "Resource limits: set sensible CPU and memory limits",
            "Health checks: configure an appropriate health check policy",
            "Log management: use the json-file log driver and cap log size",
            "Networking: use user-defined networks and tune DNS resolution",
            "Storage: persist data in volumes"
        ]


def demonstrate_troubleshooting():
    """Demonstrate the troubleshooting flow."""
    troubleshooter = DockerTroubleshooter()

    # Diagnose from simulated symptoms
    symptoms = ['Container restarts frequently', 'Out of memory']
    diagnosis = troubleshooter.diagnose_issue(symptoms)

    print("=== Diagnosis ===")
    for issue in diagnosis['matched_issues']:
        print(f"Problem: {issue['issue_id']}")
        print(f"Symptoms: {', '.join(issue['symptoms'])}")
        print(f"Possible causes: {', '.join(issue['causes'])}")
        print("Solutions:")
        for solution in issue['solutions']:
            print(f"  - {solution}")
        print()

    # Optimization suggestions
    optimizer = PerformanceOptimizer()
    build_optimizations = optimizer.optimize_build_time()
    runtime_optimizations = optimizer.optimize_runtime_performance()

    print("=== Build performance suggestions ===")
    for i, suggestion in enumerate(build_optimizations, 1):
        print(f"{i}. {suggestion}")

    print("\n=== Runtime performance suggestions ===")
    for i, suggestion in enumerate(runtime_optimizations, 1):
        print(f"{i}. {suggestion}")

    return diagnosis, build_optimizations
```

Official Documentation and Reference Resources

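- Docker official documentation: the complete official Docker docs
- Dockerfile best practices: the official guide to writing Dockerfiles
- Docker security scanning documentation: documentation for the Docker Scout security tool
- Python containerization guide: a guide dedicated to containerizing Python applications

Having worked through this article, you should now have a grasp of the core techniques and best practices for containerizing Python applications with Docker. Docker is a standard container technology for modern application delivery, and how well it is implemented directly affects an application's reliability, security, and maintainability.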