- 🍨 This post is a study log from the 🔗365-Day Deep Learning Training Camp

- 🍖 Original author: K同学啊

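Preface

- InceptionV3 is an upgraded version of InceptionV1. It needs more computation, but at the time it outperformed VGG.

- The goal of this task is to study the InceptionV3 architecture and reproduce it;

- Feel free to bookmark and follow; I will keep posting updates.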


1. Model Overview

1. Model Features

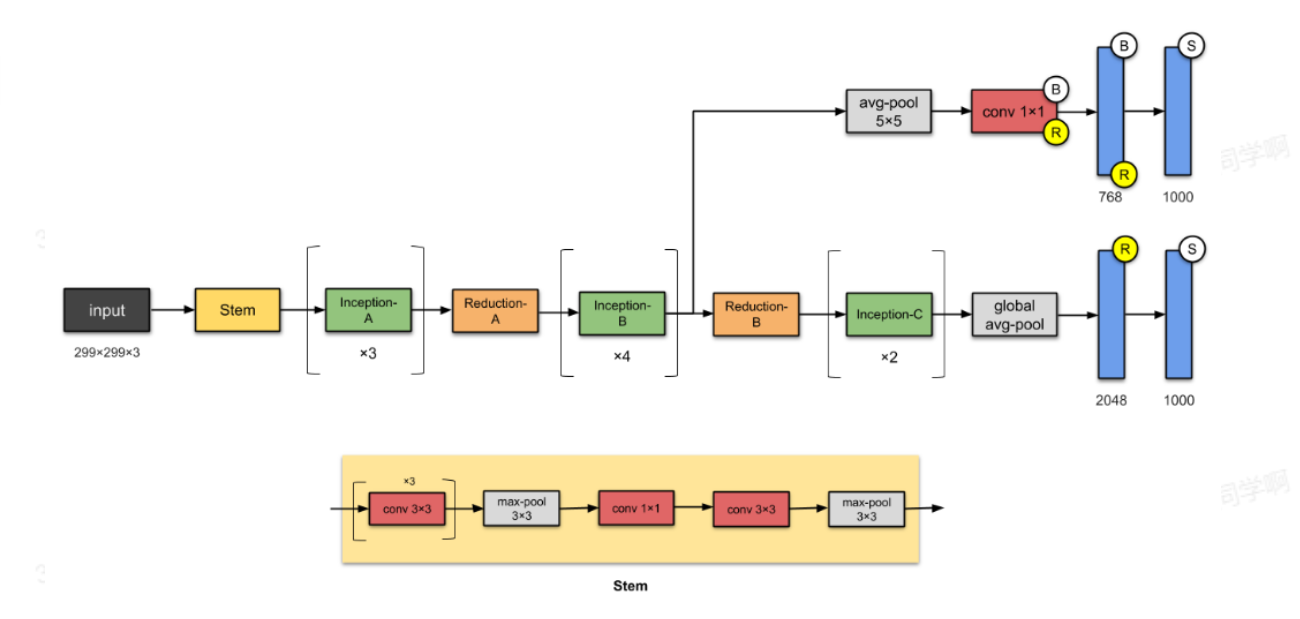

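Google proposed InceptionV3 in 2015 as an improved version of InceptionV1. Within the Inception family, it was one of the first networks of such depth that still achieved good results (ResNet had not yet been proposed). Compared with InceptionV1, its main features are: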

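- Deeper network: InceptionV3 contains 48 layers. This lets it extract more features and achieve better results;

- Factorized convolutions: large kernels are decomposed into several smaller ones. This reduces the number of parameters and the computational cost while keeping performance good;

- Batch normalization: in InceptionV3 every convolution layer is followed by a BN layer. BN normalizes each channel's activations to zero mean and unit variance, then applies a learned scale and shift. This helps mitigate vanishing and exploding gradients, speeds up convergence, and improves generalization;

- Auxiliary classifier: InceptionV3 keeps an auxiliary classifier branch, an idea already used in InceptionV1 (GoogLeNet). Its main purpose is to mitigate vanishing gradients when training the deep network and to speed up convergence. In the implementation below it consists of average pooling, two convolution layers and a fully connected layer; Softmax is applied to its output inside the loss function;

- RMSProp optimizer: RMSProp is used instead of SGD, which helps the model converge faster.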
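The sketch below is a quick way to see what the BN layer after each convolution does. In training mode, `nn.BatchNorm2d` normalizes every channel with the mean and variance of the current batch. With its default affine parameters (scale 1, shift 0), each output channel therefore ends up with mean close to 0 and variance close to 1. This is a minimal sketch; the tensor shape and the shift/scale applied to the input are arbitrary assumptions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Arbitrary batch: 8 feature maps, 32 channels, 17 x 17, shifted and scaled on purpose
x = torch.randn(8, 32, 17, 17) * 3.0 + 5.0

bn = nn.BatchNorm2d(32, eps=0.001)  # same eps as used later in BasicConv2d
bn.train()  # training mode: normalize with the statistics of this batch
y = bn(x)

# Per-channel statistics over the batch and spatial dimensions
mean = y.mean(dim=(0, 2, 3))
var = ((y - mean.view(1, -1, 1, 1)) ** 2).mean(dim=(0, 2, 3))

print(mean.abs().max().item())       # close to 0
print((var - 1).abs().max().item())  # close to 0
```

In eval mode (`bn.eval()`), the layer uses running averages collected during training instead. This is why switching between `model.train()` and `model.eval()` matters for this network.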

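📚 Factorized Convolutions

InceptionV3 factorizes convolutions to save computation. Strictly speaking, it uses spatial factorization: an n × n convolution is replaced by a 1 × n convolution followed by an n × 1 convolution (see section 2 below). A closely related idea is the depthwise separable convolution, which separates spatial filtering from cross-channel mixing. It is introduced here for comparison.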

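👁 Before introducing it, let's first look at depthwise convolution (DW):

- Unlike a regular convolution, a depthwise convolution assigns one kernel to each channel, and each channel is convolved by exactly one kernel. A regular convolution operates on all channels of the input at the same time.

- Example: for a 3-channel image, the depthwise convolution runs entirely within the 2D plane of each channel. The number of kernels matches the number of channels one to one, as shown in the figure:

- For a regular convolution, again using three channels as the example (figure borrowed from another author):

As the figure above shows, a regular convolution works across all channels at once. Each kernel convolves every input channel simultaneously and sums the results into a single output feature map.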
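The difference shows up directly in PyTorch's weight shapes. The sketch below assumes a 3-channel 12 × 12 input and 5 × 5 kernels. A regular convolution that produces one output map has a single kernel spanning all 3 channels. A depthwise convolution (`groups` equal to the number of input channels) has one single-channel kernel per channel.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 3, 12, 12)  # one 12 x 12 image with 3 channels

# Regular convolution: a single kernel that covers all 3 input channels at once
regular = nn.Conv2d(3, 1, kernel_size=5, bias=False)
# Depthwise convolution: groups=3 gives one 5 x 5 x 1 kernel per input channel
depthwise = nn.Conv2d(3, 3, kernel_size=5, groups=3, bias=False)

print(regular.weight.shape)    # torch.Size([1, 3, 5, 5])
print(depthwise.weight.shape)  # torch.Size([3, 1, 5, 5])
print(regular(x).shape)        # torch.Size([1, 1, 8, 8])
print(depthwise(x).shape)      # torch.Size([1, 3, 8, 8])
```

PyTorch stores convolution weights as `(out_channels, in_channels / groups, kH, kW)`. With `groups=3`, each kernel therefore sees only one channel.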

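👀 Now for depthwise separable convolution, which has two steps: depthwise convolution + pointwise convolution.

Take a 12 × 12 × 3 input image and a 5 × 5 kernel as an example (stride 1, no padding):

1️⃣ Step 1: Depthwise convolution

As described above, three 5 × 5 × 1 kernels each filter one of the 3 channels. Each kernel produces an 8 × 8 × 1 output (12 - 5 + 1 = 8), and stacking the three gives a final 8 × 8 × 3 feature map. ❔ Observation: DW convolution does not mix information across channels; the pointwise convolution introduced next solves this;

2️⃣ Step 2: Pointwise convolution

Pointwise convolution slides a 1 × 1 kernel over every position. Step 1 gave an 8 × 8 × 3 feature map. Applying one 3-channel 1 × 1 kernel (1 × 1 × 3) to it fuses the features of the 3 channels, as shown:

This produces an 8 × 8 × 1 output map. Applying 256 kernels of size 1 × 1 × 3 instead gives an 8 × 8 × 256 output, as shown: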
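The two steps can be put together in a small module to check the shapes and count parameters. `DepthwiseSeparableConv` is a hypothetical helper written for this illustration, not part of the InceptionV3 code below. BN and activations are omitted for brevity.

```python
import torch
import torch.nn as nn


# Hypothetical helper: depthwise convolution followed by pointwise (1 x 1) convolution
class DepthwiseSeparableConv(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size):
        super().__init__()
        # Step 1: one kernel per input channel, no mixing across channels
        self.depthwise = nn.Conv2d(in_channels, in_channels, kernel_size,
                                   groups=in_channels, bias=False)
        # Step 2: 1 x 1 convolution that fuses information across channels
        self.pointwise = nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=False)

    def forward(self, x):
        return self.pointwise(self.depthwise(x))


def num_params(module):
    return sum(p.numel() for p in module.parameters())


x = torch.randn(1, 3, 12, 12)
sep = DepthwiseSeparableConv(3, 256, kernel_size=5)
regular = nn.Conv2d(3, 256, kernel_size=5, bias=False)

print(sep.depthwise(x).shape)  # torch.Size([1, 3, 8, 8])
print(sep(x).shape)            # torch.Size([1, 256, 8, 8])
print(num_params(sep))         # 5*5*3 + 1*1*3*256 = 75 + 768 = 843
print(num_params(regular))     # 5*5*3*256 = 19200
```

Both produce an 8 × 8 × 256 output. In this example the separable version needs about 4.4% of the regular convolution's parameters (843 / 19200).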

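2. Model Structure Overview

First, recall the core block of InceptionV1:

1)

Factorize the 5 × 5 convolution into two 3 × 3 convolutions to speed things up. For the same number of channels, two 3 × 3 convolutions cost 2 × 9 = 18 multiply-adds per position versus 25 for one 5 × 5 (about 28% less), while covering the same 5 × 5 receptive field: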
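The saving can be checked with a little arithmetic. This minimal sketch assumes stride 1, "same" padding, and the same channel count C for every layer, as in the paper's argument. Under those assumptions the cost is proportional to the kernel area times C² times the map size. The sizes chosen are only illustrative.

```python
def conv_macs(kernel_h, kernel_w, c_in, c_out, h, w):
    """Multiply-accumulate count of one stride-1 'same' convolution on an h x w map."""
    return kernel_h * kernel_w * c_in * c_out * h * w


C, H, W = 64, 35, 35  # illustrative sizes (assumed)

one_5x5 = conv_macs(5, 5, C, C, H, W)
two_3x3 = 2 * conv_macs(3, 3, C, C, H, W)

print(two_3x3 / one_5x5)      # 18 / 25 = 0.72
print(1 - two_3x3 / one_5x5)  # about 0.28, i.e. roughly 28% cheaper

# Same receptive field: each stacked stride-1 k x k conv grows it by k - 1
receptive_field = 1 + 2 * (3 - 1)
print(receptive_field)        # 5
```

The ratio does not depend on C, H or W, because they cancel out. Only the kernel areas (2 × 9 vs 25) matter.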

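2)

The authors factorize an n × n convolution into a 1 × n and an n × 1 convolution. For example, a 3 × 3 convolution becomes a 1 × 3 convolution followed by a 3 × 1 convolution. They found this is 33% cheaper than a single 3 × 3 convolution (3 + 3 = 6 versus 9 weights per input/output channel pair), as shown: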
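The following sketch checks that the asymmetric pair keeps the output size of a 3 × 3 convolution with two-thirds of the parameters. The channel count and the 17 × 17 map size are assumptions. BN and ReLU between the two convolutions are omitted for brevity.

```python
import torch
import torch.nn as nn

C = 64  # illustrative channel count (assumed)
x = torch.randn(1, C, 17, 17)

# A single 3 x 3 convolution, padded so the 17 x 17 size is kept
full = nn.Conv2d(C, C, kernel_size=3, padding=1, bias=False)

# Factorized version: 1 x 3 followed by 3 x 1, each padded along its long side
factorized = nn.Sequential(
    nn.Conv2d(C, C, kernel_size=(1, 3), padding=(0, 1), bias=False),
    nn.Conv2d(C, C, kernel_size=(3, 1), padding=(1, 0), bias=False),
)

n_full = sum(p.numel() for p in full.parameters())        # 9 * 64 * 64
n_fact = sum(p.numel() for p in factorized.parameters())  # 2 * 3 * 64 * 64

print(full(x).shape, factorized(x).shape)  # both torch.Size([1, 64, 17, 17])
print(n_fact / n_full)                     # 6 / 9, about 0.667, i.e. about 33% fewer
```

As I recall, the paper reports that this factorization works well on medium-sized grids but not in early layers. In the code below it appears as 1 × 7 / 7 × 1 pairs in the 17 × 17 InceptionB stage.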

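3)

The authors also widened the InceptionV1 core block (a horizontal expansion) to avoid a representational bottleneck. In practice such a bottleneck can show up as accuracy that stops improving during training. This module mainly expands the width, as shown: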
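Widening means running the 1 × 3 and 3 × 1 convolutions in parallel and concatenating their outputs, rather than stacking them one after the other. `ExpandedBranch` is a hypothetical minimal module for illustration; the 384-channel 8 × 8 input mirrors the InceptionC stage in the code below. BN and ReLU are omitted.

```python
import torch
import torch.nn as nn


# Hypothetical minimal module: 1 x 3 and 3 x 1 applied in parallel, outputs concatenated
class ExpandedBranch(nn.Module):
    def __init__(self, in_channels, out_channels):
        super().__init__()
        self.conv_1x3 = nn.Conv2d(in_channels, out_channels, kernel_size=(1, 3), padding=(0, 1))
        self.conv_3x1 = nn.Conv2d(in_channels, out_channels, kernel_size=(3, 1), padding=(1, 0))

    def forward(self, x):
        # Concatenating along channels widens the output instead of deepening the path
        return torch.cat([self.conv_1x3(x), self.conv_3x1(x)], dim=1)


x = torch.randn(1, 384, 8, 8)  # 8 x 8 grid, 384 channels
block = ExpandedBranch(384, 384)
y = block(x)
print(y.shape)  # torch.Size([1, 768, 8, 8])
```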

Finally, assembling these modules gives the following InceptionV3 architecture:

2. Model Reproduction

Preparation

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


# Wraps Conv2d + BN + ReLU into one reusable block
class BasicConv2d(nn.Module):
    def __init__(self, in_channels, out_channels, **kwargs):
        super(BasicConv2d, self).__init__()
        # bias=False because the following BN layer already has a learned shift
        self.conv = nn.Conv2d(in_channels, out_channels, bias=False, **kwargs)
        self.bn = nn.BatchNorm2d(out_channels, eps=0.001)

    def forward(self, x):
        x = self.conv(x)
        x = self.bn(x)
        return F.relu(x, inplace=True)
```

1. InceptionA

```python
# Compared with the InceptionV1 core block, little changes here; the difference is
# that the single 3 * 3 convolution becomes two stacked 3 * 3 convolutions
class InceptionA(nn.Module):
    def __init__(self, in_channels, pool_features):
        super(InceptionA, self).__init__()
        # 1 * 1 convolution; BasicConv2d is the Conv2d + BN + ReLU wrapper defined above
        self.branch1x1 = BasicConv2d(in_channels, 64, kernel_size=1)

        # 1 * 1 + 5 * 5
        self.branch5x5_1 = BasicConv2d(in_channels, 48, kernel_size=1)
        self.branch5x5_2 = BasicConv2d(48, 64, kernel_size=5, padding=2)

        # 1 * 1 + 3 * 3 + 3 * 3
        self.branch3x3_1 = BasicConv2d(in_channels, 64, kernel_size=1)
        self.branch3x3_2 = BasicConv2d(64, 96, kernel_size=3, padding=1)
        self.branch3x3_3 = BasicConv2d(96, 96, kernel_size=3, padding=1)

        # Pooling branch: 3 * 3 average pooling followed by a 1 * 1 convolution
        self.branch_pool = BasicConv2d(in_channels, pool_features, kernel_size=1)

    def forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch5x5 = self.branch5x5_1(x)
        branch5x5 = self.branch5x5_2(branch5x5)

        branch3x3 = self.branch3x3_1(x)
        branch3x3 = self.branch3x3_2(branch3x3)
        branch3x3 = self.branch3x3_3(branch3x3)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        out = [branch1x1, branch5x5, branch3x3, branch_pool]
        # Concatenate along the channel dimension: 64 + 64 + 96 + pool_features
        return torch.cat(out, dim=1)
```
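Every branch must keep the same spatial size, or `torch.cat` along the channel dimension fails. The sketch below uses random stand-in tensors, assumed to match the shapes seen inside `Mixed_5b`. It shows why the pooling branch uses `stride=1, padding=1`, and how the channel counts add up.

```python
import torch
import torch.nn.functional as F

x = torch.randn(2, 192, 35, 35)  # shape of the input to Mixed_5b

# stride=1 with padding=1 keeps the 35 x 35 spatial size
pooled = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
print(pooled.shape)  # torch.Size([2, 192, 35, 35])

# Without padding the map shrinks to 33 x 33 and could no longer be concatenated
print(F.avg_pool2d(x, kernel_size=3, stride=1).shape)  # torch.Size([2, 192, 33, 33])

# Stand-ins for the four branch outputs of InceptionA(192, pool_features=32)
branches = [torch.randn(2, c, 35, 35) for c in (64, 64, 96, 32)]
out = torch.cat(branches, dim=1)
print(out.shape)  # torch.Size([2, 256, 35, 35])
```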

2. InceptionB

```python
# This module factorizes n * n convolutions into (1 * n, n * 1) pairs; here n = 7
class InceptionB(nn.Module):
    def __init__(self, in_channels, channels_7x7):
        super(InceptionB, self).__init__()
        self.branch1x1 = BasicConv2d(in_channels, 192, kernel_size=1)

        c7 = channels_7x7
        # 1 * 1 + 1 * 7 + 7 * 1
        self.branch7x7_1 = BasicConv2d(in_channels, c7, kernel_size=1)
        self.branch7x7_2 = BasicConv2d(c7, c7, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7_3 = BasicConv2d(c7, 192, kernel_size=(7, 1), padding=(3, 0))

        # 1 * 1 + (7 * 1 + 1 * 7) * 2
        self.branch7x7dbl_1 = BasicConv2d(in_channels, c7, kernel_size=1)
        self.branch7x7dbl_2 = BasicConv2d(c7, c7, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7dbl_3 = BasicConv2d(c7, c7, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7dbl_4 = BasicConv2d(c7, c7, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7dbl_5 = BasicConv2d(c7, 192, kernel_size=(1, 7), padding=(0, 3))

        # Pooling branch: 3 * 3 average pooling followed by a 1 * 1 convolution
        self.branch_pool = BasicConv2d(in_channels, 192, kernel_size=1)

    def forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch7x7 = self.branch7x7_1(x)
        branch7x7 = self.branch7x7_2(branch7x7)
        branch7x7 = self.branch7x7_3(branch7x7)

        branch7x7dbl = self.branch7x7dbl_1(x)
        branch7x7dbl = self.branch7x7dbl_2(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_3(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_4(branch7x7dbl)
        branch7x7dbl = self.branch7x7dbl_5(branch7x7dbl)

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        outputs = [branch1x1, branch7x7, branch7x7dbl, branch_pool]
        # 4 * 192 = 768 output channels
        return torch.cat(outputs, 1)
```

3. InceptionC

```python
# This block expands the module horizontally (in width), mainly to avoid a representational bottleneck
class InceptionC(nn.Module):
    def __init__(self, in_channels):
        super(InceptionC, self).__init__()
        # 1 * 1
        self.branch1x1 = BasicConv2d(in_channels, 320, kernel_size=1)

        # 1 * 1, then 1 * 3 and 3 * 1 in parallel (outputs concatenated)
        self.branch3x3_1 = BasicConv2d(in_channels, 384, kernel_size=1)
        self.branch3x3_2a = BasicConv2d(384, 384, kernel_size=(1, 3), padding=(0, 1))
        self.branch3x3_2b = BasicConv2d(384, 384, kernel_size=(3, 1), padding=(1, 0))

        # 1 * 1 + 3 * 3, then 1 * 3 and 3 * 1 in parallel (outputs concatenated)
        self.branch3x3b1_1 = BasicConv2d(in_channels, 448, kernel_size=1)
        self.branch3x3b1_2 = BasicConv2d(448, 384, kernel_size=3, padding=1)
        self.branch3x3b1_3a = BasicConv2d(384, 384, kernel_size=(1, 3), padding=(0, 1))
        self.branch3x3b1_3b = BasicConv2d(384, 384, kernel_size=(3, 1), padding=(1, 0))

        # Pooling branch: 3 * 3 average pooling followed by a 1 * 1 convolution
        self.branch_pool = BasicConv2d(in_channels, 192, kernel_size=1)

    def forward(self, x):
        branch1x1 = self.branch1x1(x)

        branch3x3 = self.branch3x3_1(x)
        branch3x3 = [
            self.branch3x3_2a(branch3x3),
            self.branch3x3_2b(branch3x3),
        ]
        branch3x3 = torch.cat(branch3x3, dim=1)  # concatenate: 384 + 384 = 768

        branch3x3b1 = self.branch3x3b1_1(x)
        branch3x3b1 = self.branch3x3b1_2(branch3x3b1)
        branch3x3b1 = [
            self.branch3x3b1_3a(branch3x3b1),
            self.branch3x3b1_3b(branch3x3b1),
        ]
        branch3x3b1 = torch.cat(branch3x3b1, dim=1)  # concatenate: 384 + 384 = 768

        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)

        out = [branch1x1, branch3x3, branch3x3b1, branch_pool]
        # 320 + 768 + 768 + 192 = 2048 output channels
        return torch.cat(out, dim=1)
```

4. ReductionA

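The overall architecture shows where the reduction modules sit: ReductionA follows the InceptionA blocks, and ReductionB follows the InceptionB blocks. After feature extraction they downsample the feature maps (stride 2 roughly halves the spatial size) while increasing the number of channels. The ReductionA structure is: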

```python
class ReductionA(nn.Module):
    def __init__(self, in_channels):
        super(ReductionA, self).__init__()
        # 3 * 3, stride 2
        self.branch3x3 = BasicConv2d(in_channels, 384, kernel_size=3, stride=2)

        # 1 * 1 + 3 * 3 + 3 * 3 (the last one with stride 2)
        self.branch3x3db1_1 = BasicConv2d(in_channels, 64, kernel_size=1)
        self.branch3x3db1_2 = BasicConv2d(64, 96, kernel_size=3, padding=1)
        self.branch3x3db1_3 = BasicConv2d(96, 96, kernel_size=3, stride=2)

    def forward(self, x):
        branch3x3 = self.branch3x3(x)

        branch3x3db1 = self.branch3x3db1_1(x)
        branch3x3db1 = self.branch3x3db1_2(branch3x3db1)
        branch3x3db1 = self.branch3x3db1_3(branch3x3db1)

        # Max pooling here; it keeps all in_channels
        branch_pool = F.max_pool2d(x, kernel_size=3, stride=2)

        out = [branch3x3, branch3x3db1, branch_pool]
        # 384 + 96 + in_channels output channels (768 when in_channels = 288)
        return torch.cat(out, dim=1)
```

5. ReductionB

```python
class ReductionB(nn.Module):
    def __init__(self, in_channels):
        super(ReductionB, self).__init__()
        # 1 * 1 + 3 * 3 (stride 2)
        self.branch3x3_1 = BasicConv2d(in_channels, 192, kernel_size=1)
        self.branch3x3_2 = BasicConv2d(192, 320, kernel_size=3, stride=2)

        # 1 * 1 + 1 * 7 + 7 * 1 + 3 * 3 (stride 2)
        self.branch7x7x3_1 = BasicConv2d(in_channels, 192, kernel_size=1)
        self.branch7x7x3_2 = BasicConv2d(192, 192, kernel_size=(1, 7), padding=(0, 3))
        self.branch7x7x3_3 = BasicConv2d(192, 192, kernel_size=(7, 1), padding=(3, 0))
        self.branch7x7x3_4 = BasicConv2d(192, 192, kernel_size=3, stride=2)

    def forward(self, x):
        branch3x3 = self.branch3x3_1(x)
        branch3x3 = self.branch3x3_2(branch3x3)

        branch7x7x3 = self.branch7x7x3_1(x)
        branch7x7x3 = self.branch7x7x3_2(branch7x7x3)
        branch7x7x3 = self.branch7x7x3_3(branch7x7x3)
        branch7x7x3 = self.branch7x7x3_4(branch7x7x3)

        branch_pool = F.max_pool2d(x, kernel_size=3, stride=2)

        out = [branch3x3, branch7x7x3, branch_pool]
        # 320 + 192 + in_channels output channels (1280 when in_channels = 768)
        return torch.cat(out, dim=1)
```

6. Auxiliary Branch

```python
# Auxiliary classifier
class InceptionAux(nn.Module):
    def __init__(self, in_channels, num_classes):
        super(InceptionAux, self).__init__()
        self.conv0 = BasicConv2d(in_channels, 128, kernel_size=1)
        self.conv1 = BasicConv2d(128, 768, kernel_size=5)
        # Std-dev hint for weight initialization (nothing in this file reads it)
        self.conv1.stddev = 0.01
        self.fc = nn.Linear(768, num_classes)
        # Std-dev hint for weight initialization (nothing in this file reads it)
        self.fc.stddev = 0.001

    def forward(self, x):
        # 17 x 17 x 768
        # stride=3 gives (17 - 5) // 3 + 1 = 5; stride=5 would give 3 x 3,
        # which is too small for the following 5 x 5 convolution
        x = F.avg_pool2d(x, kernel_size=5, stride=3)
        # 5 x 5 x 768
        x = self.conv0(x)
        # 5 x 5 x 128
        x = self.conv1(x)
        # 1 x 1 x 768
        x = x.view(x.size(0), -1)  # flatten
        # 768
        x = self.fc(x)
        return x
```

7. Model Assembly

```python
class InceptionV3(nn.Module):
    def __init__(self, num_classes=1000, aux_logits=False, transform_input=False):
        super(InceptionV3, self).__init__()
        # aux_logits: whether to use the auxiliary classifier
        # transform_input: whether to re-normalize the input (see forward)
        self.aux_logits = aux_logits
        self.transform_input = transform_input

        # Stem: initial processing of the input
        self.Conv2d_1a_3x3 = BasicConv2d(3, 32, kernel_size=3, stride=2)
        self.Conv2d_2a_3x3 = BasicConv2d(32, 32, kernel_size=3)
        self.Conv2d_2b_3x3 = BasicConv2d(32, 64, kernel_size=3, padding=1)
        self.Conv2d_3b_1x1 = BasicConv2d(64, 80, kernel_size=1)
        self.Conv2d_4a_3x3 = BasicConv2d(80, 192, kernel_size=3)

        # InceptionA
        self.Mixed_5b = InceptionA(192, pool_features=32)
        self.Mixed_5c = InceptionA(256, pool_features=64)
        self.Mixed_5d = InceptionA(288, pool_features=64)

        # Reduction: smaller spatial size, more channels
        self.Mixed_6a = ReductionA(288)

        # InceptionB
        self.Mixed_6b = InceptionB(768, channels_7x7=128)
        self.Mixed_6c = InceptionB(768, channels_7x7=160)
        self.Mixed_6d = InceptionB(768, channels_7x7=160)
        self.Mixed_6e = InceptionB(768, channels_7x7=192)

        # Auxiliary classifier
        if aux_logits:
            self.AuxLogits = InceptionAux(768, num_classes)

        # Reduction: smaller spatial size, more channels
        self.Mixed_7a = ReductionB(768)

        # InceptionC
        self.Mixed_7b = InceptionC(1280)
        self.Mixed_7c = InceptionC(2048)

        self.fc = nn.Linear(2048, num_classes)

    def forward(self, x):
        # Input transform: maps input normalized with the ImageNet mean/std to the
        # (x - 0.5) / 0.5 normalization, i.e. the [-1, 1] range
        if self.transform_input:
            x = x.clone()  # avoid modifying the caller's tensor in place
            x[:, 0] = x[:, 0] * (0.229 / 0.5) + (0.485 - 0.5) / 0.5
            x[:, 1] = x[:, 1] * (0.224 / 0.5) + (0.456 - 0.5) / 0.5
            x[:, 2] = x[:, 2] * (0.225 / 0.5) + (0.406 - 0.5) / 0.5
        # 299 x 299 x 3
        x = self.Conv2d_1a_3x3(x)
        # 149 x 149 x 32
        x = self.Conv2d_2a_3x3(x)
        # 147 x 147 x 32
        x = self.Conv2d_2b_3x3(x)
        # 147 x 147 x 64
        x = F.max_pool2d(x, kernel_size=3, stride=2)
        # 73 x 73 x 64
        x = self.Conv2d_3b_1x1(x)
        # 73 x 73 x 80
        x = self.Conv2d_4a_3x3(x)
        # 71 x 71 x 192
        x = F.max_pool2d(x, kernel_size=3, stride=2)
        # 35 x 35 x 192
        x = self.Mixed_5b(x)
        # 35 x 35 x 256
        x = self.Mixed_5c(x)
        # 35 x 35 x 288
        x = self.Mixed_5d(x)
        # 35 x 35 x 288
        x = self.Mixed_6a(x)
        # 17 x 17 x 768
        x = self.Mixed_6b(x)
        # 17 x 17 x 768
        x = self.Mixed_6c(x)
        # 17 x 17 x 768
        x = self.Mixed_6d(x)
        # 17 x 17 x 768
        x = self.Mixed_6e(x)
        # 17 x 17 x 768
        if self.training and self.aux_logits:  # only used in training mode
            aux = self.AuxLogits(x)
        # 17 x 17 x 768
        x = self.Mixed_7a(x)
        # 8 x 8 x 1280
        x = self.Mixed_7b(x)
        # 8 x 8 x 2048
        x = self.Mixed_7c(x)
        # 8 x 8 x 2048
        x = F.avg_pool2d(x, kernel_size=8)
        # 1 x 1 x 2048
        x = F.dropout(x, training=self.training)
        # 1 x 1 x 2048
        x = x.view(x.size(0), -1)
        # 2048
        x = self.fc(x)
        # num_classes
        if self.training and self.aux_logits:  # only used in training mode
            return x, aux
        return x
```
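The shape comments in `forward` can be double-checked without building the network. The pure-Python sketch below is my own bookkeeping, not part of the original code. It applies PyTorch's output-size formula for convolution and pooling, (size + 2·padding - kernel) // stride + 1, along the main path. It then adds up the branch channels of each block.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv/pool layer (floor mode, dilation 1), as in PyTorch."""
    return (size + 2 * padding - kernel) // stride + 1


# (layer, kernel, stride, padding) along the main path; Inception blocks keep the size
path = [
    ("Conv2d_1a_3x3", 3, 2, 0),
    ("Conv2d_2a_3x3", 3, 1, 0),
    ("Conv2d_2b_3x3", 3, 1, 1),
    ("max_pool", 3, 2, 0),
    ("Conv2d_3b_1x1", 1, 1, 0),
    ("Conv2d_4a_3x3", 3, 1, 0),
    ("max_pool", 3, 2, 0),
    ("Mixed_6a (ReductionA)", 3, 2, 0),
    ("Mixed_7a (ReductionB)", 3, 2, 0),
    ("avg_pool (final)", 8, 8, 0),
]

size = 299
sizes = []
for name, k, s, p in path:
    size = out_size(size, k, s, p)
    sizes.append(size)
    print(f"{name:24s} -> {size} x {size}")

# Channel counts: each block concatenates its branch outputs
c_5b = 64 + 64 + 96 + 32               # InceptionA(pool_features=32) -> 256
c_5c = 64 + 64 + 96 + 64               # InceptionA(pool_features=64) -> 288 (5d too)
c_6a = 384 + 96 + c_5c                 # ReductionA keeps the pooled channels -> 768
c_6b = 192 * 4                         # InceptionB: four 192-channel branches -> 768
c_7a = 320 + 192 + c_6b                # ReductionB -> 1280
c_7b = 320 + 384 * 2 + 384 * 2 + 192   # InceptionC -> 2048
print(c_5b, c_5c, c_6a, c_6b, c_7a, c_7b)
```

The printed sizes (149, 147, 147, 73, 73, 71, 35, 17, 8, 1) and channels (256, 288, 768, 768, 1280, 2048) should match the comments above.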

```python
# Test
device = "cuda" if torch.cuda.is_available() else "cpu"
model = InceptionV3().to(device)
model
```

```
InceptionV3(
  (Conv2d_1a_3x3): BasicConv2d(
    (conv): Conv2d(3, 32, kernel_size=(3, 3), stride=(2, 2), bias=False)
    (bn): BatchNorm2d(32, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
  )
  (Conv2d_2a_3x3): BasicConv2d(
    (conv): Conv2d(32, 32, kernel_size=(3, 3), stride=(1, 1), bias=False)
    (bn): BatchNorm2d(32, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
  )
  (Conv2d_2b_3x3): BasicConv2d(
    (conv): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
    (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
  )
  (Conv2d_3b_1x1): BasicConv2d(
    (conv): Conv2d(64, 80, kernel_size=(1, 1), stride=(1, 1), bias=False)
    (bn): BatchNorm2d(80, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
  )
  (Conv2d_4a_3x3): BasicConv2d(
    (conv): Conv2d(80, 192, kernel_size=(3, 3), stride=(1, 1), bias=False)
    (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
  )
  (Mixed_5b): InceptionA(
    (branch1x1): BasicConv2d(
      (conv): Conv2d(192, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch5x5_1): BasicConv2d(
      (conv): Conv2d(192, 48, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(48, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch5x5_2): BasicConv2d(
      (conv): Conv2d(48, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_1): BasicConv2d(
      (conv): Conv2d(192, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_2): BasicConv2d(
      (conv): Conv2d(64, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn): BatchNorm2d(96, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_3): BasicConv2d(
      (conv): Conv2d(96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn): BatchNorm2d(96, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch_pool): BasicConv2d(
      (conv): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(32, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (Mixed_5c): InceptionA(
    (branch1x1): BasicConv2d(
      (conv): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch5x5_1): BasicConv2d(
      (conv): Conv2d(256, 48, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(48, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch5x5_2): BasicConv2d(
      (conv): Conv2d(48, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_1): BasicConv2d(
      (conv): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_2): BasicConv2d(
      (conv): Conv2d(64, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn): BatchNorm2d(96, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_3): BasicConv2d(
      (conv): Conv2d(96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn): BatchNorm2d(96, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch_pool): BasicConv2d(
      (conv): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (Mixed_5d): InceptionA(
    (branch1x1): BasicConv2d(
      (conv): Conv2d(288, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch5x5_1): BasicConv2d(
      (conv): Conv2d(288, 48, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(48, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch5x5_2): BasicConv2d(
      (conv): Conv2d(48, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_1): BasicConv2d(
      (conv): Conv2d(288, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_2): BasicConv2d(
      (conv): Conv2d(64, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn): BatchNorm2d(96, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_3): BasicConv2d(
      (conv): Conv2d(96, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn): BatchNorm2d(96, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch_pool): BasicConv2d(
      (conv): Conv2d(288, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (Mixed_6a): ReductionA(
    (branch3x3): BasicConv2d(
      (conv): Conv2d(288, 384, kernel_size=(3, 3), stride=(2, 2), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3db1_1): BasicConv2d(
      (conv): Conv2d(288, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(64, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3db1_2): BasicConv2d(
      (conv): Conv2d(64, 96, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn): BatchNorm2d(96, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3db1_3): BasicConv2d(
      (conv): Conv2d(96, 96, kernel_size=(3, 3), stride=(2, 2), bias=False)
      (bn): BatchNorm2d(96, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (Mixed_6b): InceptionB(
    (branch1x1): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_1): BasicConv2d(
      (conv): Conv2d(768, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(128, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_2): BasicConv2d(
      (conv): Conv2d(128, 128, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(128, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_3): BasicConv2d(
      (conv): Conv2d(128, 192, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_1): BasicConv2d(
      (conv): Conv2d(768, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(128, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_2): BasicConv2d(
      (conv): Conv2d(128, 128, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(128, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_3): BasicConv2d(
      (conv): Conv2d(128, 128, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(128, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_4): BasicConv2d(
      (conv): Conv2d(128, 128, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(128, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_5): BasicConv2d(
      (conv): Conv2d(128, 192, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch_pool): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (Mixed_6c): InceptionB(
    (branch1x1): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_1): BasicConv2d(
      (conv): Conv2d(768, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_2): BasicConv2d(
      (conv): Conv2d(160, 160, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_3): BasicConv2d(
      (conv): Conv2d(160, 192, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_1): BasicConv2d(
      (conv): Conv2d(768, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_2): BasicConv2d(
      (conv): Conv2d(160, 160, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_3): BasicConv2d(
      (conv): Conv2d(160, 160, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_4): BasicConv2d(
      (conv): Conv2d(160, 160, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_5): BasicConv2d(
      (conv): Conv2d(160, 192, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch_pool): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (Mixed_6d): InceptionB(
    (branch1x1): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_1): BasicConv2d(
      (conv): Conv2d(768, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_2): BasicConv2d(
      (conv): Conv2d(160, 160, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_3): BasicConv2d(
      (conv): Conv2d(160, 192, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_1): BasicConv2d(
      (conv): Conv2d(768, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_2): BasicConv2d(
      (conv): Conv2d(160, 160, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_3): BasicConv2d(
      (conv): Conv2d(160, 160, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_4): BasicConv2d(
      (conv): Conv2d(160, 160, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_5): BasicConv2d(
      (conv): Conv2d(160, 192, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch_pool): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (Mixed_6e): InceptionB(
    (branch1x1): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_1): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_2): BasicConv2d(
      (conv): Conv2d(192, 192, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7_3): BasicConv2d(
      (conv): Conv2d(192, 192, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_1): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_2): BasicConv2d(
      (conv): Conv2d(192, 192, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_3): BasicConv2d(
      (conv): Conv2d(192, 192, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_4): BasicConv2d(
      (conv): Conv2d(192, 192, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7dbl_5): BasicConv2d(
      (conv): Conv2d(192, 192, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch_pool): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (Mixed_7a): ReductionB(
    (branch3x3_1): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_2): BasicConv2d(
      (conv): Conv2d(192, 320, kernel_size=(3, 3), stride=(2, 2), bias=False)
      (bn): BatchNorm2d(320, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7x3_1): BasicConv2d(
      (conv): Conv2d(768, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7x3_2): BasicConv2d(
      (conv): Conv2d(192, 192, kernel_size=(1, 7), stride=(1, 1), padding=(0, 3), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7x3_3): BasicConv2d(
      (conv): Conv2d(192, 192, kernel_size=(7, 1), stride=(1, 1), padding=(3, 0), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch7x7x3_4): BasicConv2d(
      (conv): Conv2d(192, 192, kernel_size=(3, 3), stride=(2, 2), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (Mixed_7b): InceptionC(
    (branch1x1): BasicConv2d(
      (conv): Conv2d(1280, 320, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(320, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_1): BasicConv2d(
      (conv): Conv2d(1280, 384, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_2a): BasicConv2d(
      (conv): Conv2d(384, 384, kernel_size=(1, 3), stride=(1, 1), padding=(0, 1), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_2b): BasicConv2d(
      (conv): Conv2d(384, 384, kernel_size=(3, 1), stride=(1, 1), padding=(1, 0), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3b1_1): BasicConv2d(
      (conv): Conv2d(1280, 448, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(448, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3b1_2): BasicConv2d(
      (conv): Conv2d(448, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3b1_3a): BasicConv2d(
      (conv): Conv2d(384, 384, kernel_size=(1, 3), stride=(1, 1), padding=(0, 1), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3b1_3b): BasicConv2d(
      (conv): Conv2d(384, 384, kernel_size=(3, 1), stride=(1, 1), padding=(1, 0), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch_pool): BasicConv2d(
      (conv): Conv2d(1280, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (Mixed_7c): InceptionC(
    (branch1x1): BasicConv2d(
      (conv): Conv2d(2048, 320, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(320, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_1): BasicConv2d(
      (conv): Conv2d(2048, 384, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_2a): BasicConv2d(
      (conv): Conv2d(384, 384, kernel_size=(1, 3), stride=(1, 1), padding=(0, 1), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3_2b): BasicConv2d(
      (conv): Conv2d(384, 384, kernel_size=(3, 1), stride=(1, 1), padding=(1, 0), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3b1_1): BasicConv2d(
      (conv): Conv2d(2048, 448, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(448, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3b1_2): BasicConv2d(
      (conv): Conv2d(448, 384, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3b1_3a): BasicConv2d(
      (conv): Conv2d(384, 384, kernel_size=(1, 3), stride=(1, 1), padding=(0, 1), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch3x3b1_3b): BasicConv2d(
      (conv): Conv2d(384, 384, kernel_size=(3, 1), stride=(1, 1), padding=(1, 0), bias=False)
      (bn): BatchNorm2d(384, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
    (branch_pool): BasicConv2d(
      (conv): Conv2d(2048, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)
      (bn): BatchNorm2d(192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (fc): Linear(in_features=2048, out_features=1000, bias=True)
)
```
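With `aux_logits=True`, the model above returns a `(logits, aux)` tuple in training mode but a single tensor in eval mode. The training loss therefore has to handle both cases. This is a sketch under stated assumptions: the helper name `inception_loss` is hypothetical, and the auxiliary weight of 0.4 is an assumed, commonly used value that this post does not specify. Random tensors stand in for real model outputs.

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()  # applies log-softmax internally, so the model outputs raw logits


def inception_loss(outputs, target, aux_weight=0.4):
    """Hypothetical helper: main loss plus a down-weighted auxiliary loss when present."""
    if isinstance(outputs, tuple):  # training mode with aux_logits=True
        logits, aux = outputs
        return criterion(logits, target) + aux_weight * criterion(aux, target)
    return criterion(outputs, target)  # eval mode, or aux_logits=False


torch.manual_seed(0)
target = torch.randint(0, 1000, (4,))
logits, aux = torch.randn(4, 1000), torch.randn(4, 1000)

main_only = inception_loss(logits, target)
with_aux = inception_loss((logits, aux), target)
print(main_only.item(), with_aux.item())
```

This is also where the "Softmax" of the auxiliary classifier lives in practice: it is folded into `nn.CrossEntropyLoss` rather than being a layer in `InceptionAux`.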

```python
model(torch.randn(32, 3, 299, 299).to(device)).shape
```

```
torch.Size([32, 1000])
```

8. Viewing Model Details

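```python
import torchsummary as summary

# torchsummary defaults to device="cuda"; pass the device explicitly so it also runs on CPU
summary.summary(model, (3, 299, 299), device=device)
```

----------------------------------------------------------------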

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 32, 149, 149] 864

BatchNorm2d-2 [-1, 32, 149, 149] 64

BasicConv2d-3 [-1, 32, 149, 149] 0

Conv2d-4 [-1, 32, 147, 147] 9,216

BatchNorm2d-5 [-1, 32, 147, 147] 64

BasicConv2d-6 [-1, 32, 147, 147] 0

Conv2d-7 [-1, 64, 147, 147] 18,432

BatchNorm2d-8 [-1, 64, 147, 147] 128

BasicConv2d-9 [-1, 64, 147, 147] 0

Conv2d-10 [-1, 80, 73, 73] 5,120

BatchNorm2d-11 [-1, 80, 73, 73] 160

BasicConv2d-12 [-1, 80, 73, 73] 0

Conv2d-13 [-1, 192, 71, 71] 138,240

BatchNorm2d-14 [-1, 192, 71, 71] 384

BasicConv2d-15 [-1, 192, 71, 71] 0

Conv2d-16 [-1, 64, 35, 35] 12,288

BatchNorm2d-17 [-1, 64, 35, 35] 128

BasicConv2d-18 [-1, 64, 35, 35] 0

Conv2d-19 [-1, 48, 35, 35] 9,216

BatchNorm2d-20 [-1, 48, 35, 35] 96

BasicConv2d-21 [-1, 48, 35, 35] 0

Conv2d-22 [-1, 64, 35, 35] 76,800

BatchNorm2d-23 [-1, 64, 35, 35] 128

BasicConv2d-24 [-1, 64, 35, 35] 0

Conv2d-25 [-1, 64, 35, 35] 12,288

BatchNorm2d-26 [-1, 64, 35, 35] 128

BasicConv2d-27 [-1, 64, 35, 35] 0

Conv2d-28 [-1, 96, 35, 35] 55,296

BatchNorm2d-29 [-1, 96, 35, 35] 192

BasicConv2d-30 [-1, 96, 35, 35] 0

Conv2d-31 [-1, 96, 35, 35] 82,944

BatchNorm2d-32 [-1, 96, 35, 35] 192

BasicConv2d-33 [-1, 96, 35, 35] 0

Conv2d-34 [-1, 32, 35, 35] 6,144

BatchNorm2d-35 [-1, 32, 35, 35] 64

BasicConv2d-36 [-1, 32, 35, 35] 0

InceptionA-37 [-1, 256, 35, 35] 0

Conv2d-38 [-1, 64, 35, 35] 16,384

BatchNorm2d-39 [-1, 64, 35, 35] 128

BasicConv2d-40 [-1, 64, 35, 35] 0

Conv2d-41 [-1, 48, 35, 35] 12,288

BatchNorm2d-42 [-1, 48, 35, 35] 96

BasicConv2d-43 [-1, 48, 35, 35] 0

Conv2d-44 [-1, 64, 35, 35] 76,800

BatchNorm2d-45 [-1, 64, 35, 35] 128

BasicConv2d-46 [-1, 64, 35, 35] 0

Conv2d-47 [-1, 64, 35, 35] 16,384

BatchNorm2d-48 [-1, 64, 35, 35] 128

BasicConv2d-49 [-1, 64, 35, 35] 0

Conv2d-50 [-1, 96, 35, 35] 55,296

BatchNorm2d-51 [-1, 96, 35, 35] 192

BasicConv2d-52 [-1, 96, 35, 35] 0

Conv2d-53 [-1, 96, 35, 35] 82,944

BatchNorm2d-54 [-1, 96, 35, 35] 192

BasicConv2d-55 [-1, 96, 35, 35] 0

Conv2d-56 [-1, 64, 35, 35] 16,384

BatchNorm2d-57 [-1, 64, 35, 35] 128

BasicConv2d-58 [-1, 64, 35, 35] 0

InceptionA-59 [-1, 288, 35, 35] 0

Conv2d-60 [-1, 64, 35, 35] 18,432

BatchNorm2d-61 [-1, 64, 35, 35] 128

BasicConv2d-62 [-1, 64, 35, 35] 0

Conv2d-63 [-1, 48, 35, 35] 13,824

BatchNorm2d-64 [-1, 48, 35, 35] 96

BasicConv2d-65 [-1, 48, 35, 35] 0

Conv2d-66 [-1, 64, 35, 35] 76,800

BatchNorm2d-67 [-1, 64, 35, 35] 128

BasicConv2d-68 [-1, 64, 35, 35] 0

Conv2d-69 [-1, 64, 35, 35] 18,432

BatchNorm2d-70 [-1, 64, 35, 35] 128

BasicConv2d-71 [-1, 64, 35, 35] 0

Conv2d-72 [-1, 96, 35, 35] 55,296

BatchNorm2d-73 [-1, 96, 35, 35] 192

BasicConv2d-74 [-1, 96, 35, 35] 0

Conv2d-75 [-1, 96, 35, 35] 82,944

BatchNorm2d-76 [-1, 96, 35, 35] 192

BasicConv2d-77 [-1, 96, 35, 35] 0

Conv2d-78 [-1, 64, 35, 35] 18,432

BatchNorm2d-79 [-1, 64, 35, 35] 128

BasicConv2d-80 [-1, 64, 35, 35] 0

InceptionA-81 [-1, 288, 35, 35] 0

Conv2d-82 [-1, 384, 17, 17] 995,328

BatchNorm2d-83 [-1, 384, 17, 17] 768

BasicConv2d-84 [-1, 384, 17, 17] 0

Conv2d-85 [-1, 64, 35, 35] 18,432

BatchNorm2d-86 [-1, 64, 35, 35] 128

BasicConv2d-87 [-1, 64, 35, 35] 0

Conv2d-88 [-1, 96, 35, 35] 55,296

BatchNorm2d-89 [-1, 96, 35, 35] 192

BasicConv2d-90 [-1, 96, 35, 35] 0

Conv2d-91 [-1, 96, 17, 17] 82,944

BatchNorm2d-92 [-1, 96, 17, 17] 192

BasicConv2d-93 [-1, 96, 17, 17] 0

ReductionA-94 [-1, 768, 17, 17] 0

Conv2d-95 [-1, 192, 17, 17] 147,456

BatchNorm2d-96 [-1, 192, 17, 17] 384

BasicConv2d-97 [-1, 192, 17, 17] 0

Conv2d-98 [-1, 128, 17, 17] 98,304

BatchNorm2d-99 [-1, 128, 17, 17] 256

BasicConv2d-100 [-1, 128, 17, 17] 0

Conv2d-101 [-1, 128, 17, 17] 114,688

BatchNorm2d-102 [-1, 128, 17, 17] 256

BasicConv2d-103 [-1, 128, 17, 17] 0

Conv2d-104 [-1, 192, 17, 17] 172,032

BatchNorm2d-105 [-1, 192, 17, 17] 384

BasicConv2d-106 [-1, 192, 17, 17] 0

Conv2d-107 [-1, 128, 17, 17] 98,304

BatchNorm2d-108 [-1, 128, 17, 17] 256

BasicConv2d-109 [-1, 128, 17, 17] 0

Conv2d-110 [-1, 128, 17, 17] 114,688

BatchNorm2d-111 [-1, 128, 17, 17] 256

BasicConv2d-112 [-1, 128, 17, 17] 0

Conv2d-113 [-1, 128, 17, 17] 114,688

BatchNorm2d-114 [-1, 128, 17, 17] 256

BasicConv2d-115 [-1, 128, 17, 17] 0

Conv2d-116 [-1, 128, 17, 17] 114,688

BatchNorm2d-117 [-1, 128, 17, 17] 256

BasicConv2d-118 [-1, 128, 17, 17] 0

Conv2d-119 [-1, 192, 17, 17] 172,032

BatchNorm2d-120 [-1, 192, 17, 17] 384

BasicConv2d-121 [-1, 192, 17, 17] 0

Conv2d-122 [-1, 192, 17, 17] 147,456

BatchNorm2d-123 [-1, 192, 17, 17] 384

BasicConv2d-124 [-1, 192, 17, 17] 0

InceptionB-125 [-1, 768, 17, 17] 0

Conv2d-126 [-1, 192, 17, 17] 147,456

BatchNorm2d-127 [-1, 192, 17, 17] 384

BasicConv2d-128 [-1, 192, 17, 17] 0

Conv2d-129 [-1, 160, 17, 17] 122,880

BatchNorm2d-130 [-1, 160, 17, 17] 320

BasicConv2d-131 [-1, 160, 17, 17] 0

Conv2d-132 [-1, 160, 17, 17] 179,200

BatchNorm2d-133 [-1, 160, 17, 17] 320

BasicConv2d-134 [-1, 160, 17, 17] 0

Conv2d-135 [-1, 192, 17, 17] 215,040

BatchNorm2d-136 [-1, 192, 17, 17] 384

BasicConv2d-137 [-1, 192, 17, 17] 0

Conv2d-138 [-1, 160, 17, 17] 122,880

BatchNorm2d-139 [-1, 160, 17, 17] 320

BasicConv2d-140 [-1, 160, 17, 17] 0

Conv2d-141 [-1, 160, 17, 17] 179,200

BatchNorm2d-142 [-1, 160, 17, 17] 320

BasicConv2d-143 [-1, 160, 17, 17] 0

Conv2d-144 [-1, 160, 17, 17] 179,200

BatchNorm2d-145 [-1, 160, 17, 17] 320

BasicConv2d-146 [-1, 160, 17, 17] 0

Conv2d-147 [-1, 160, 17, 17] 179,200

BatchNorm2d-148 [-1, 160, 17, 17] 320

BasicConv2d-149 [-1, 160, 17, 17] 0

Conv2d-150 [-1, 192, 17, 17] 215,040

BatchNorm2d-151 [-1, 192, 17, 17] 384

BasicConv2d-152 [-1, 192, 17, 17] 0

Conv2d-153 [-1, 192, 17, 17] 147,456

BatchNorm2d-154 [-1, 192, 17, 17] 384

BasicConv2d-155 [-1, 192, 17, 17] 0

InceptionB-156 [-1, 768, 17, 17] 0

Conv2d-157 [-1, 192, 17, 17] 147,456

BatchNorm2d-158 [-1, 192, 17, 17] 384

BasicConv2d-159 [-1, 192, 17, 17] 0

Conv2d-160 [-1, 160, 17, 17] 122,880

BatchNorm2d-161 [-1, 160, 17, 17] 320

BasicConv2d-162 [-1, 160, 17, 17] 0

Conv2d-163 [-1, 160, 17, 17] 179,200

BatchNorm2d-164 [-1, 160, 17, 17] 320

BasicConv2d-165 [-1, 160, 17, 17] 0

Conv2d-166 [-1, 192, 17, 17] 215,040

BatchNorm2d-167 [-1, 192, 17, 17] 384

BasicConv2d-168 [-1, 192, 17, 17] 0

Conv2d-169 [-1, 160, 17, 17] 122,880

BatchNorm2d-170 [-1, 160, 17, 17] 320

BasicConv2d-171 [-1, 160, 17, 17] 0

Conv2d-172 [-1, 160, 17, 17] 179,200

BatchNorm2d-173 [-1, 160, 17, 17] 320

BasicConv2d-174 [-1, 160, 17, 17] 0

Conv2d-175 [-1, 160, 17, 17] 179,200

BatchNorm2d-176 [-1, 160, 17, 17] 320

BasicConv2d-177 [-1, 160, 17, 17] 0

Conv2d-178 [-1, 160, 17, 17] 179,200

BatchNorm2d-179 [-1, 160, 17, 17] 320

BasicConv2d-180 [-1, 160, 17, 17] 0

Conv2d-181 [-1, 192, 17, 17] 215,040

BatchNorm2d-182 [-1, 192, 17, 17] 384

BasicConv2d-183 [-1, 192, 17, 17] 0

Conv2d-184 [-1, 192, 17, 17] 147,456

BatchNorm2d-185 [-1, 192, 17, 17] 384

BasicConv2d-186 [-1, 192, 17, 17] 0

InceptionB-187 [-1, 768, 17, 17] 0

Conv2d-188 [-1, 192, 17, 17] 147,456

BatchNorm2d-189 [-1, 192, 17, 17] 384

BasicConv2d-190 [-1, 192, 17, 17] 0

Conv2d-191 [-1, 192, 17, 17] 147,456

BatchNorm2d-192 [-1, 192, 17, 17] 384

BasicConv2d-193 [-1, 192, 17, 17] 0

Conv2d-194 [-1, 192, 17, 17] 258,048

BatchNorm2d-195 [-1, 192, 17, 17] 384

BasicConv2d-196 [-1, 192, 17, 17] 0

Conv2d-197 [-1, 192, 17, 17] 258,048

BatchNorm2d-198 [-1, 192, 17, 17] 384

BasicConv2d-199 [-1, 192, 17, 17] 0

Conv2d-200 [-1, 192, 17, 17] 147,456

BatchNorm2d-201 [-1, 192, 17, 17] 384

BasicConv2d-202 [-1, 192, 17, 17] 0

Conv2d-203 [-1, 192, 17, 17] 258,048

BatchNorm2d-204 [-1, 192, 17, 17] 384

BasicConv2d-205 [-1, 192, 17, 17] 0

Conv2d-206 [-1, 192, 17, 17] 258,048

BatchNorm2d-207 [-1, 192, 17, 17] 384

BasicConv2d-208 [-1, 192, 17, 17] 0

Conv2d-209 [-1, 192, 17, 17] 258,048

BatchNorm2d-210 [-1, 192, 17, 17] 384

BasicConv2d-211 [-1, 192, 17, 17] 0

Conv2d-212 [-1, 192, 17, 17] 258,048

BatchNorm2d-213 [-1, 192, 17, 17] 384

BasicConv2d-214 [-1, 192, 17, 17] 0

Conv2d-215 [-1, 192, 17, 17] 147,456

BatchNorm2d-216 [-1, 192, 17, 17] 384

BasicConv2d-217 [-1, 192, 17, 17] 0

InceptionB-218 [-1, 768, 17, 17] 0

Conv2d-219 [-1, 192, 17, 17] 147,456

BatchNorm2d-220 [-1, 192, 17, 17] 384

BasicConv2d-221 [-1, 192, 17, 17] 0

Conv2d-222 [-1, 320, 8, 8] 552,960

BatchNorm2d-223 [-1, 320, 8, 8] 640

BasicConv2d-224 [-1, 320, 8, 8] 0

Conv2d-225 [-1, 192, 17, 17] 147,456

BatchNorm2d-226 [-1, 192, 17, 17] 384

BasicConv2d-227 [-1, 192, 17, 17] 0

Conv2d-228 [-1, 192, 17, 17] 258,048

BatchNorm2d-229 [-1, 192, 17, 17] 384

BasicConv2d-230 [-1, 192, 17, 17] 0

Conv2d-231 [-1, 192, 17, 17] 258,048

BatchNorm2d-232 [-1, 192, 17, 17] 384

BasicConv2d-233 [-1, 192, 17, 17] 0

Conv2d-234 [-1, 192, 8, 8] 331,776

BatchNorm2d-235 [-1, 192, 8, 8] 384

BasicConv2d-236 [-1, 192, 8, 8] 0

ReductionB-237 [-1, 1280, 8, 8] 0

Conv2d-238 [-1, 320, 8, 8] 409,600

BatchNorm2d-239 [-1, 320, 8, 8] 640

BasicConv2d-240 [-1, 320, 8, 8] 0

Conv2d-241 [-1, 384, 8, 8] 491,520

BatchNorm2d-242 [-1, 384, 8, 8] 768

BasicConv2d-243 [-1, 384, 8, 8] 0

Conv2d-244 [-1, 384, 8, 8] 442,368

BatchNorm2d-245 [-1, 384, 8, 8] 768

BasicConv2d-246 [-1, 384, 8, 8] 0

Conv2d-247 [-1, 384, 8, 8] 442,368

BatchNorm2d-248 [-1, 384, 8, 8] 768

BasicConv2d-249 [-1, 384, 8, 8] 0

Conv2d-250 [-1, 448, 8, 8] 573,440

BatchNorm2d-251 [-1, 448, 8, 8] 896

BasicConv2d-252 [-1, 448, 8, 8] 0

Conv2d-253 [-1, 384, 8, 8] 1,548,288

BatchNorm2d-254 [-1, 384, 8, 8] 768

BasicConv2d-255 [-1, 384, 8, 8] 0

Conv2d-256 [-1, 384, 8, 8] 442,368

BatchNorm2d-257 [-1, 384, 8, 8] 768

BasicConv2d-258 [-1, 384, 8, 8] 0

Conv2d-259 [-1, 384, 8, 8] 442,368

BatchNorm2d-260 [-1, 384, 8, 8] 768

BasicConv2d-261 [-1, 384, 8, 8] 0

Conv2d-262 [-1, 192, 8, 8] 245,760

BatchNorm2d-263 [-1, 192, 8, 8] 384

BasicConv2d-264 [-1, 192, 8, 8] 0

InceptionC-265 [-1, 2048, 8, 8] 0

Conv2d-266 [-1, 320, 8, 8] 655,360

BatchNorm2d-267 [-1, 320, 8, 8] 640

BasicConv2d-268 [-1, 320, 8, 8] 0

Conv2d-269 [-1, 384, 8, 8] 786,432

BatchNorm2d-270 [-1, 384, 8, 8] 768

BasicConv2d-271 [-1, 384, 8, 8] 0

Conv2d-272 [-1, 384, 8, 8] 442,368

BatchNorm2d-273 [-1, 384, 8, 8] 768

BasicConv2d-274 [-1, 384, 8, 8] 0

Conv2d-275 [-1, 384, 8, 8] 442,368

BatchNorm2d-276 [-1, 384, 8, 8] 768

BasicConv2d-277 [-1, 384, 8, 8] 0

Conv2d-278 [-1, 448, 8, 8] 917,504

BatchNorm2d-279 [-1, 448, 8, 8] 896

BasicConv2d-280 [-1, 448, 8, 8] 0

Conv2d-281 [-1, 384, 8, 8] 1,548,288

BatchNorm2d-282 [-1, 384, 8, 8] 768

BasicConv2d-283 [-1, 384, 8, 8] 0

Conv2d-284 [-1, 384, 8, 8] 442,368

BatchNorm2d-285 [-1, 384, 8, 8] 768

BasicConv2d-286 [-1, 384, 8, 8] 0

Conv2d-287 [-1, 384, 8, 8] 442,368

BatchNorm2d-288 [-1, 384, 8, 8] 768

BasicConv2d-289 [-1, 384, 8, 8] 0

Conv2d-290 [-1, 192, 8, 8] 393,216

BatchNorm2d-291 [-1, 192, 8, 8] 384

BasicConv2d-292 [-1, 192, 8, 8] 0

InceptionC-293 [-1, 2048, 8, 8] 0

Linear-294 [-1, 1000] 2,049,000

================================================================

Total params: 23,834,568

Trainable params: 23,834,568

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 1.02

Forward/backward pass size (MB): 224.12

Params size (MB): 90.92

Estimated Total Size (MB): 316.07

----------------------------------------------------------------
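A few formulas account for the figures in the table, so you can sanity-check the summary by hand. The sketch below is plain Python with hypothetical helper names. It assumes the following:

- Convolutions are bias-free, as in BasicConv2d.
- BatchNorm2d's running statistics are buffers rather than parameters, which is why BatchNorm2d-2 shows 64 = 2 x 32.
- torchsummary counts 4 bytes per float32 value.
- The pooling layers are presumably called inside `forward` rather than registered as modules, which would explain why they have no rows.

```python
# Hypothetical helper functions for checking rows of the table by hand.

def conv_params(c_in, c_out, kh, kw):
    """Weights of a bias-free Conv2d (BasicConv2d uses bias=False)."""
    return c_in * c_out * kh * kw

def bn_params(c):
    """BatchNorm2d learns one scale and one shift per channel."""
    return 2 * c

def linear_params(n_in, n_out):
    """Weight matrix plus bias vector."""
    return n_in * n_out + n_out

def out_size(n, k, s=1, p=0):
    """Side length after a conv/pool: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * p - k) // s + 1

# Individual rows
print(conv_params(3, 32, 3, 3))     # Conv2d-1: 864
print(bn_params(32))                # BatchNorm2d-2: 64
print(conv_params(128, 128, 1, 7))  # Conv2d-101 (1x7 or 7x1): 114688
print(linear_params(2048, 1000))    # Linear-294: 2049000

# Factorized 1x7 + 7x1 pair vs. a single 7x7 with the same channels
factorized = conv_params(128, 128, 1, 7) + conv_params(128, 128, 7, 1)
full = conv_params(128, 128, 7, 7)
print(factorized, full, round(factorized / full, 3))  # 229376 802816 0.286

# Stem spatial sizes; the 147 -> 73 and 71 -> 35 drops are assumed to be
# 3x3 stride-2 max pools (they do not appear as rows in the table)
sizes = [299]
sizes.append(out_size(sizes[-1], 3, s=2))  # Conv2d-1
sizes.append(out_size(sizes[-1], 3))       # Conv2d-4
sizes.append(out_size(sizes[-1], 3, p=1))  # Conv2d-7
sizes.append(out_size(sizes[-1], 3, s=2))  # max pool
sizes.append(out_size(sizes[-1], 3))       # Conv2d-13 (Conv2d-10 is 1x1, no change)
sizes.append(out_size(sizes[-1], 3, s=2))  # max pool
sizes.append(out_size(sizes[-1], 3, s=2))  # ReductionA stride-2 layers
sizes.append(out_size(sizes[-1], 3, s=2))  # ReductionB stride-2 layers
print(sizes)  # [299, 149, 147, 147, 73, 71, 35, 17, 8]

# torchsummary's size lines, assuming 4 bytes per float32 value
MB = 1024 ** 2
print(f"{3 * 299 * 299 * 4 / MB:.2f}")  # Input size: 1.02
print(f"{23_834_568 * 4 / MB:.2f}")     # Params size: 90.92
```

The factorization line shows why InceptionB uses 1x7/7x1 pairs. The pair costs 2/7 of a full 7x7 kernel, a saving of roughly 71%. This is the same idea as the 33% saving quoted earlier for splitting a 3x3 kernel into 1x3 and 3x1.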