
I. Preface

(1) Introduction

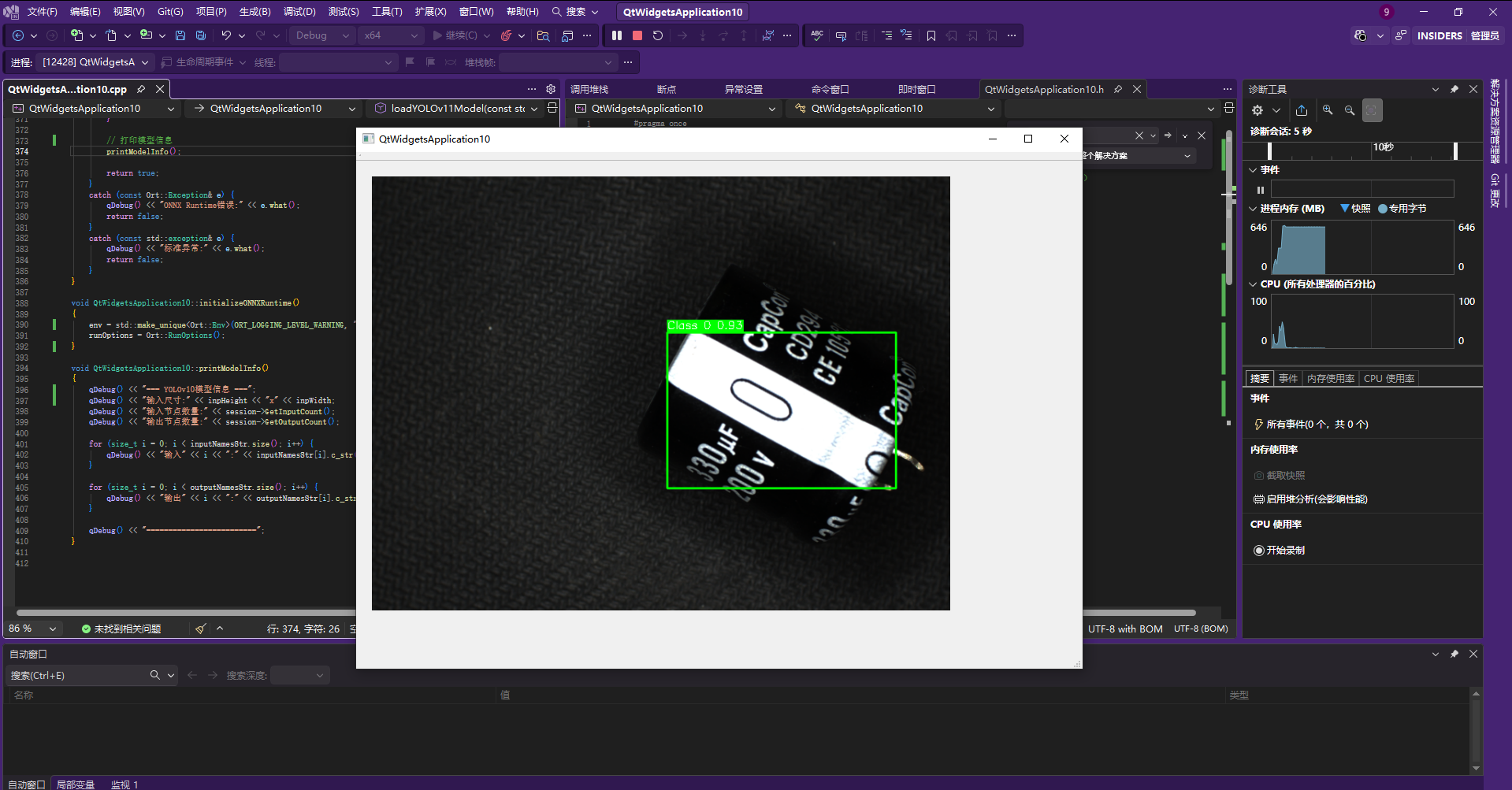

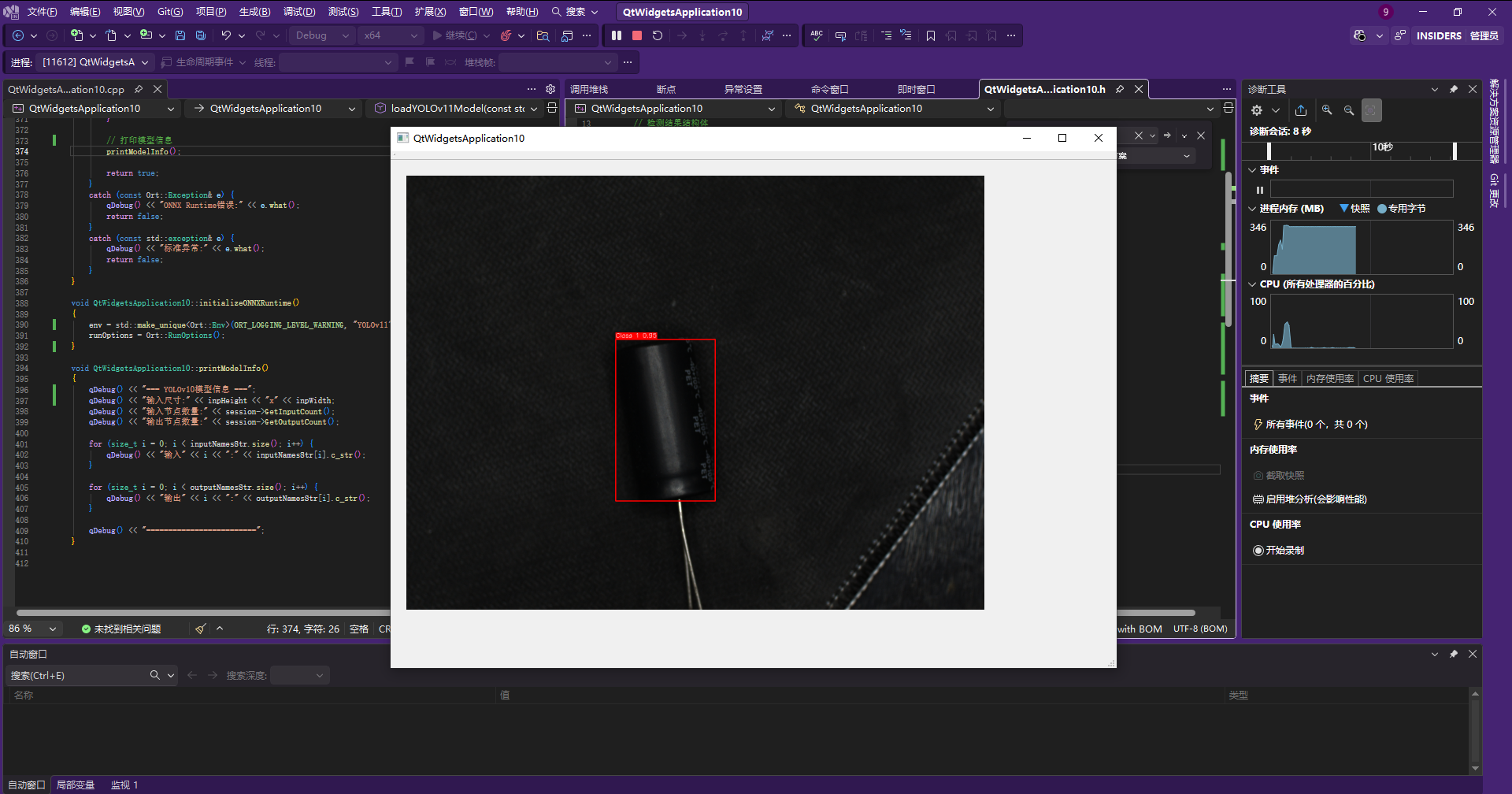

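This project uses YOLO11 for object detection. It implements model loading, image loading, ONNX Runtime environment initialization, image preprocessing and post-processing, drawing of detection boxes, and image display. The code is commented in detail.

The model was trained in Python to a .pt file and then converted to ONNX; the annotations were made with ISAT. A test model and test images are included with the project. Please use them for learning purposes only.

The model does one thing only: it detects the negative-terminal marking and the non-negative marking on capacitors. To use your own model, simply swap it in.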

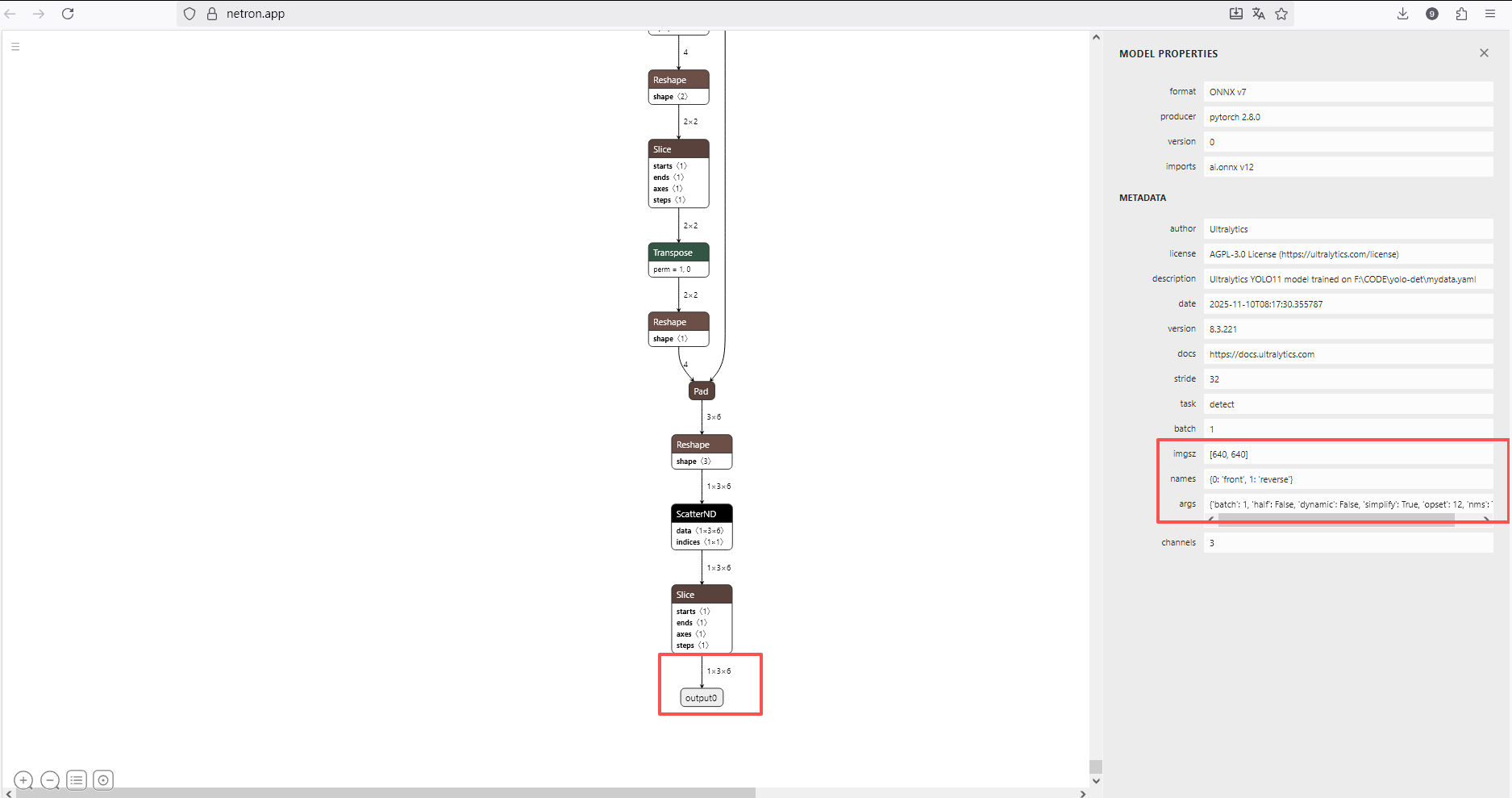

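Model details can be inspected with netron.app.

Details of the 1106_YOLO11_ONNX_FP32.onnx model used here:

Input size: 640x640

Classes: {0: 'front', 1: 'reverse'}

Export parameters: 'batch': 1, 'half': False, 'dynamic': False, 'simplify': True, 'opset': 12, 'nms': True

Output tensor: [1, 3, 6]

If you use your own model and its output tensor has a different shape, for example [1, 300, 6], adapt the code accordingly.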
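As a rough illustration of what adapting to another output shape involves, the sketch below reads the row count N from the tensor shape instead of hard-coding it. It assumes the layout the project's post-processing uses, (x1, y1, x2, y2, conf, class) per row; `RawDetection` and `parseNmsOutput` are hypothetical names for this example and are not part of the project.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical plain-data record for this sketch (not the project's Detection struct).
struct RawDetection {
    float x1, y1, x2, y2; // corner coordinates in model-input pixels
    float conf;           // confidence score
    int classId;          // class index
};

// Parse a flat float buffer of shape [1, N, 6], one row per detection,
// assumed to be laid out as (x1, y1, x2, y2, conf, class).
std::vector<RawDetection> parseNmsOutput(const float* data,
                                         const std::vector<std::int64_t>& shape,
                                         float confThreshold)
{
    assert(shape.size() == 3 && shape[0] == 1 && shape[2] == 6);
    const std::size_t n = static_cast<std::size_t>(shape[1]); // N comes from the model
    std::vector<RawDetection> out;
    for (std::size_t i = 0; i < n; ++i) {
        const float* row = data + i * 6;
        if (row[4] < confThreshold) continue; // skip low-confidence rows
        out.push_back({row[0], row[1], row[2], row[3], row[4],
                       static_cast<int>(row[5])});
    }
    return out;
}
```

Because N is taken from the shape, the same function handles [1, 3, 6] and [1, 300, 6] alike. A model exported with a different row layout would need different indexing.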

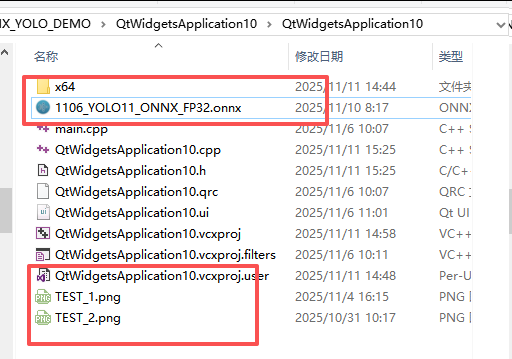

The model and the test images are in the root directory of the project archive.

(2) Project setup

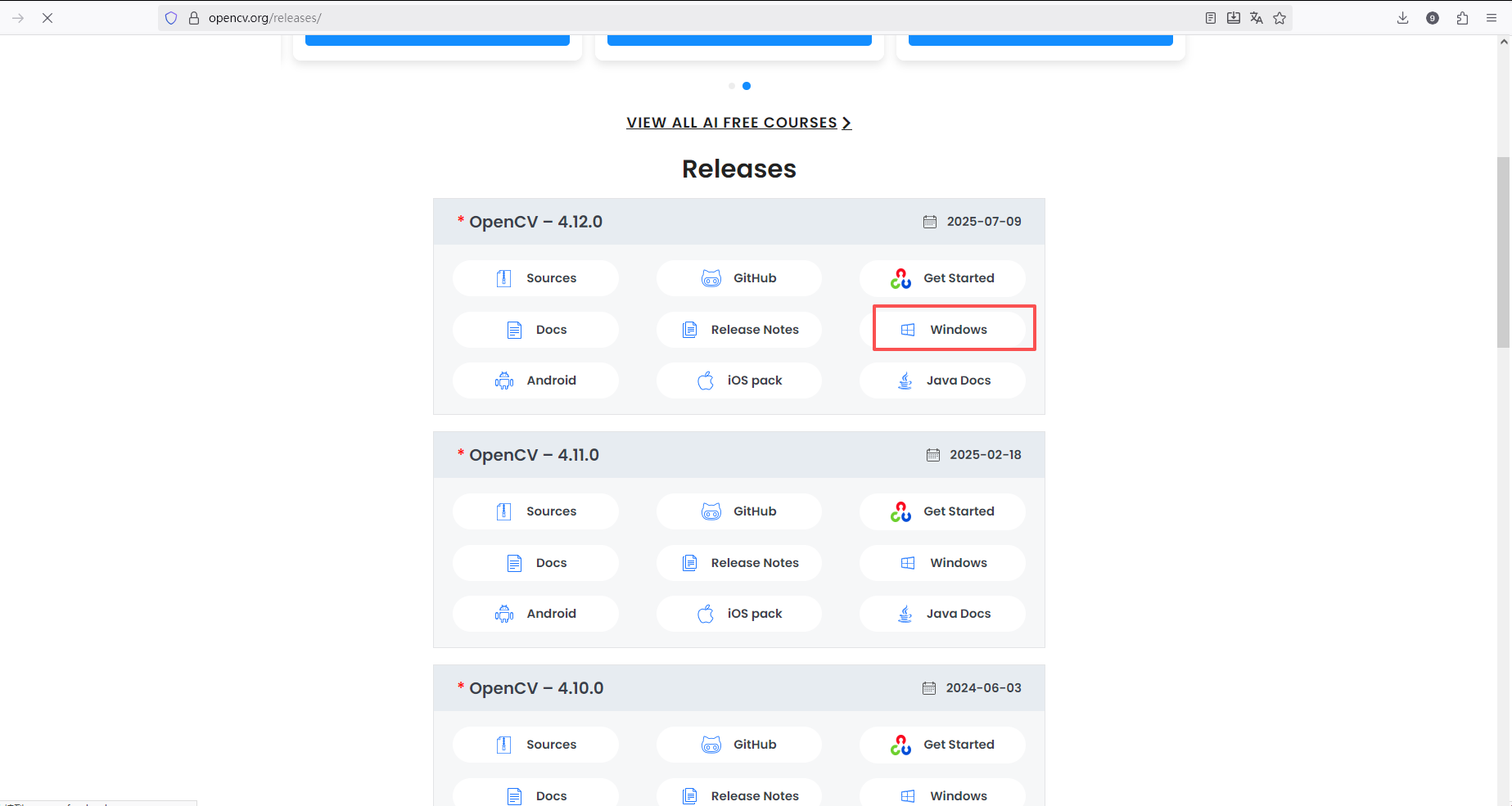

Download and install OpenCV 4.12.0 from the official OpenCV website.

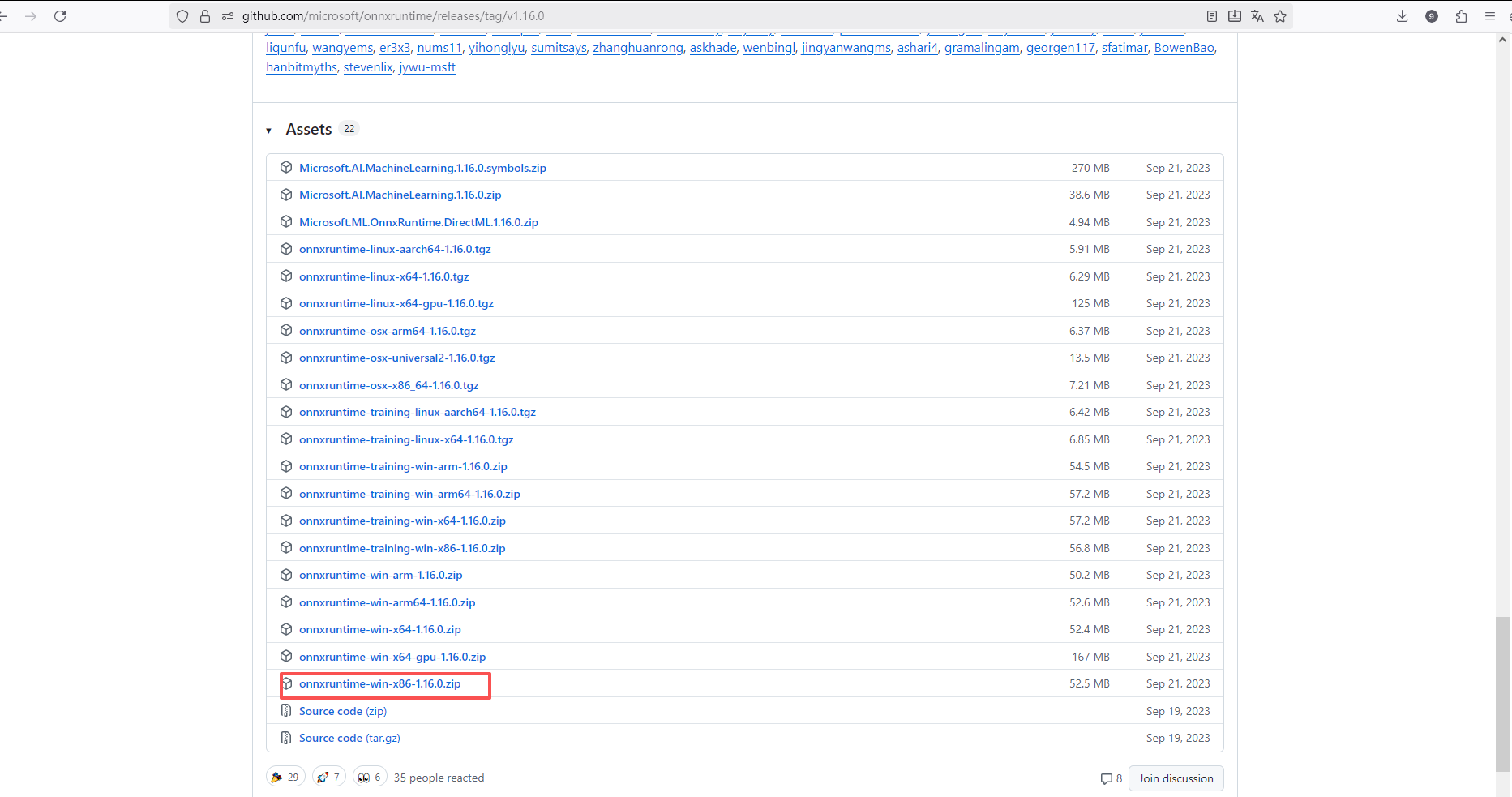

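Download the onnxruntime-win-x64-1.16.0 library: onnxruntime-win-x64-1.16.0. The CPU build has good compatibility, and the latest release, 1.23.1, should work as well. If you already have a copy of the library, try that version first.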

(2)项目中添加头文件和静态库lib和动态库dll

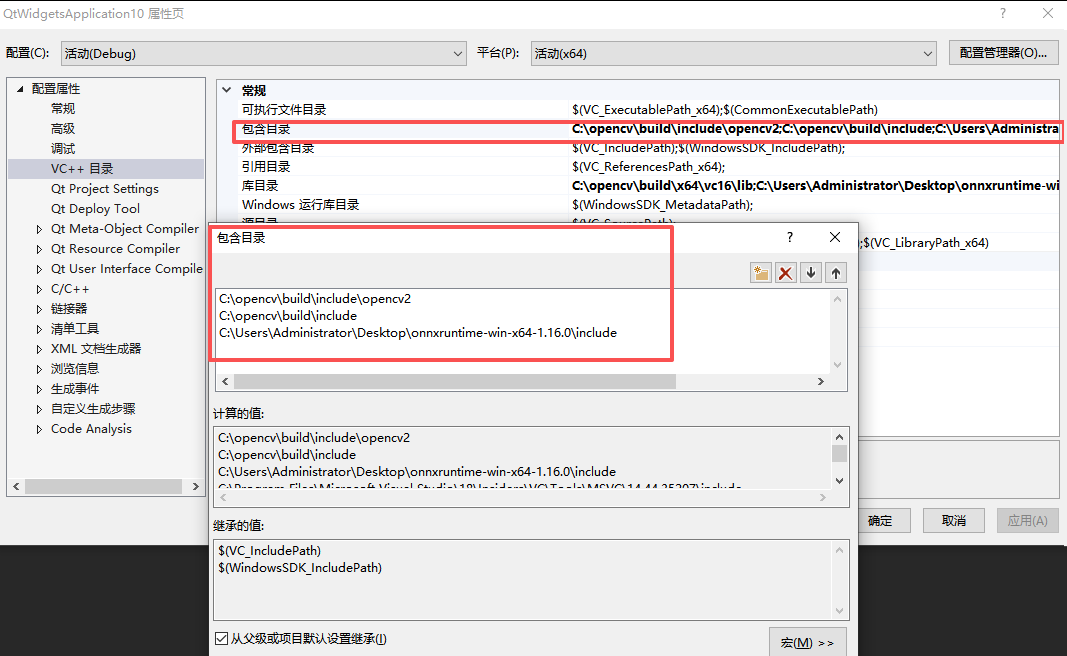

包含目录中添加:

C:\opencv\build\include\opencv2

C:\opencv\build\include

C:\Users\Administrator\Desktop\onnxruntime-win-x64-1.16.0\include

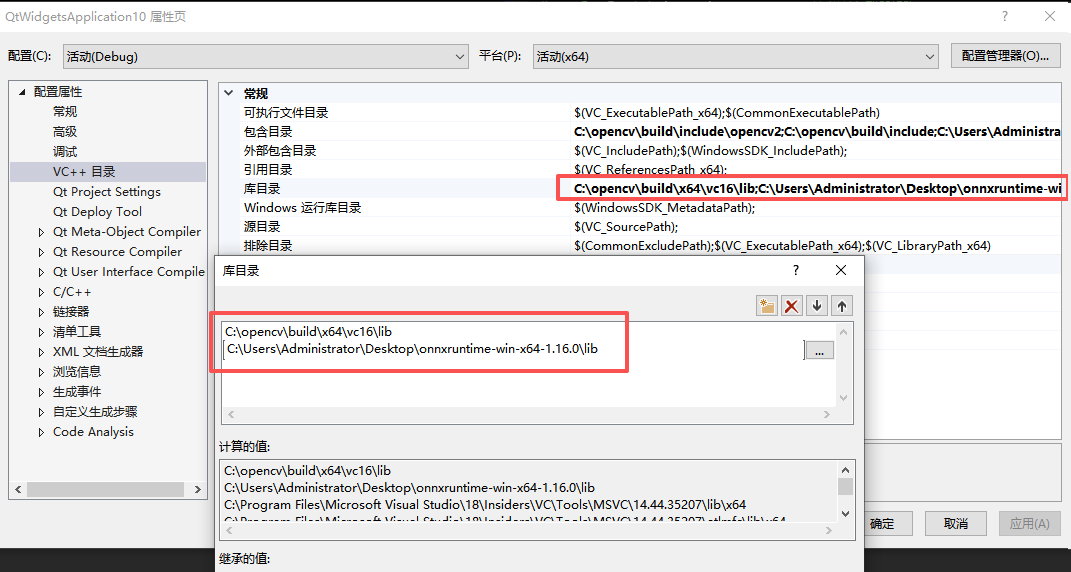

库目录中添加:

C:\opencv\build\x64\vc16\lib

C:\Users\Administrator\Desktop\onnxruntime-win-x64-1.16.0\lib

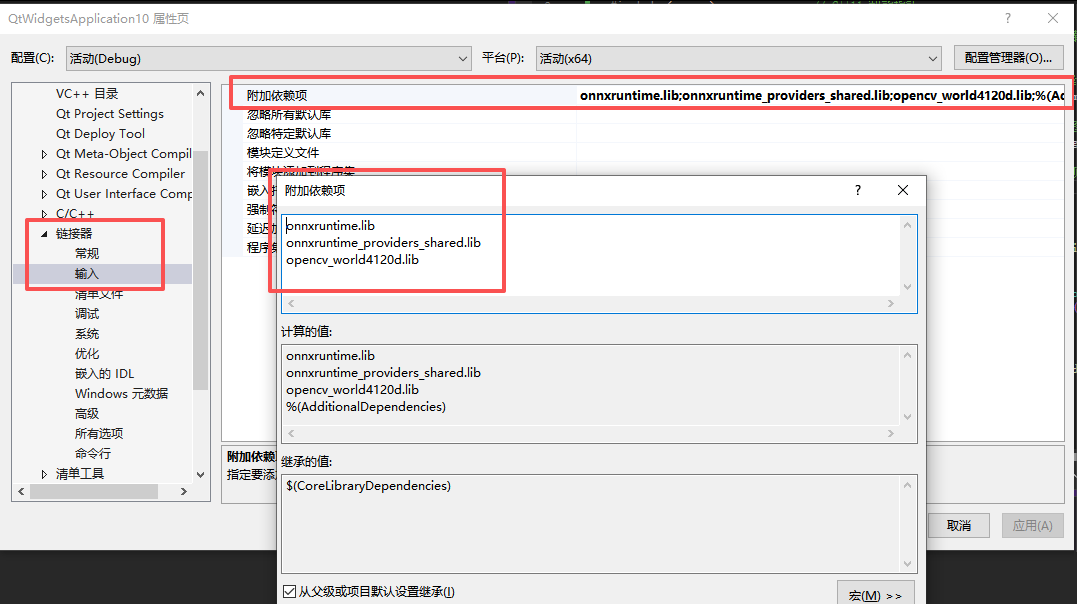

链接器中输入:

onnxruntime.lib

onnxruntime_providers_shared.lib

opencv_world4120d.lib

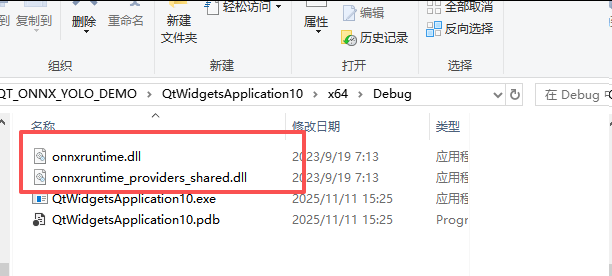

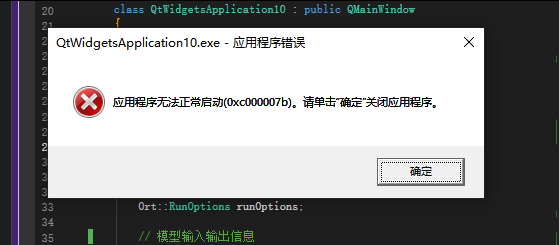

项目的x64->Debug中要添加这两个dll动态库,不然会报0xc000007b应用程序无法启动的错误

(3)YOLO11目标检测运行效果

II. Code Walkthrough

QtWidgetsApplication10.h


#pragma once

#include <QtWidgets/QMainWindow>
#include "ui_QtWidgetsApplication10.h"

#include <opencv2/opencv.hpp>    // OpenCV headers (version 4.12.0)
#include <onnxruntime_cxx_api.h> // ONNX Runtime C++ API (CPU build, onnxruntime-win-x64-1.16.0)

#include <algorithm> // std::max, std::min
#include <chrono>    // high-resolution timing
#include <cstdint>   // int64_t
#include <cstring>   // std::memcpy
#include <memory>    // std::unique_ptr
#include <string>    // std::string
#include <vector>    // std::vector

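// Detection result
struct Detection {
    cv::Rect box; // bounding box in original-image pixels
    float conf;   // confidence score
    int classId;  // class index (0: front, 1: reverse)
};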

class QtWidgetsApplication10 : public QMainWindow
{
    Q_OBJECT

public:
    QtWidgetsApplication10(QWidget* parent = nullptr);
    ~QtWidgetsApplication10();

private:
    // ONNX Runtime members
    std::unique_ptr<Ort::Env> env;
    std::unique_ptr<Ort::Session> session;
    Ort::SessionOptions sessionOptions;
    Ort::RunOptions runOptions;

    // Model input/output metadata
    std::vector<std::string> inputNamesStr;
    std::vector<std::string> outputNamesStr;
    std::vector<const char*> inputNames;  // views into inputNamesStr
    std::vector<const char*> outputNames; // views into outputNamesStr
    std::vector<std::vector<int64_t>> inputNodeDims;
    std::vector<std::vector<int64_t>> outputNodeDims;

    // Model configuration
    std::string modelPath = "1106_YOLO11_ONNX_FP32.onnx";
    std::string imagePath = "TEST_2.png";
    int inpHeight = 640;
    int inpWidth = 640;

    // Predefined per-class colors in BGR order (could also be read from a config file)
    std::vector<cv::Scalar> colors = {
        cv::Scalar(0, 255, 0), // green
        cv::Scalar(0, 0, 255), // red
    };

    // Image and detection results
    cv::Mat currentImage;
    cv::Mat displayImage;
    std::vector<Detection> currentDetections;

    // Model loading
    bool loadYOLOv11Model(const std::string& model_path);
    void initializeONNXRuntime(); // initialize the ONNX Runtime environment
    void printModelInfo();        // print model information

    // Image loading
    bool loadImage(const std::string& imagePath);

    // Image preprocessing
    cv::Mat preprocessImage(const cv::Mat& image);

    // Post-processing
    std::vector<Detection> postprocessResults(const std::vector<Ort::Value>& outputTensors,
                                              const cv::Size& originalSize,
                                              float confThreshold = 0.25f,
                                              float iouThreshold = 0.45f);

    // Object detection
    std::vector<Detection> detectObjects(const cv::Mat& image);

    // Drawing and display
    void drawDetections(cv::Mat& image, const std::vector<Detection>& detections);
    void updateDisplay(); // refresh the image shown in the QLabel
    void autoDetect();    // run detection and show the result

private:
    Ui::QtWidgetsApplication10Class ui;
};

QtWidgetsApplication10.cpp


#include "QtWidgetsApplication10.h"

#include <QMessageBox>
#include <QDebug>
#include <QFile>
#include <QImage>
#include <QPixmap>

QtWidgetsApplication10::QtWidgetsApplication10(QWidget* parent)
    : QMainWindow(parent)
{
    ui.setupUi(this);

    // Initialize ONNX Runtime and load the model
    try {
        // Set modelPath to the absolute path of your YOLO model
        if (loadYOLOv11Model(modelPath)) {
            // Set imagePath to the absolute path of your test image
            if (loadImage(imagePath)) {
                // Run object detection
                autoDetect();
            }
        }
        else {
            QMessageBox::critical(this, "Error", "Failed to load the YOLO11 model!");
        }
    }
    catch (const std::exception& e) {
        QMessageBox::critical(this, "Exception", QString("Model loading exception: %1").arg(e.what()));
    }
}

QtWidgetsApplication10::~QtWidgetsApplication10()
{
    // Release the session before the environment it was created from
    session.reset();
    env.reset();
}

// Run detection on the current image and display the result
void QtWidgetsApplication10::autoDetect()
{
    if (currentImage.empty() || !session) {
        return;
    }

    // Run object detection and time the whole call
    auto start = std::chrono::high_resolution_clock::now();
    currentDetections = detectObjects(currentImage);
    auto end = std::chrono::high_resolution_clock::now();
    auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(end - start);

    // Draw the detections on a copy of the image
    displayImage = currentImage.clone();
    drawDetections(displayImage, currentDetections);
    updateDisplay();

    // Log the result
    qDebug() << "Detection finished:" << currentDetections.size() << "object(s) found in" << duration.count() << "ms";
}

// Load an image from disk
bool QtWidgetsApplication10::loadImage(const std::string& imagePath)
{
    currentImage = cv::imread(imagePath);
    if (currentImage.empty()) {
        qDebug() << "Cannot load image file:" << imagePath.c_str();
        return false;
    }

    currentDetections.clear();

    // Create the copy used for display
    displayImage = currentImage.clone();
    updateDisplay();

    qDebug() << "Image loaded, size:" << currentImage.cols << "x" << currentImage.rows;
    return true;
}

// Object detection
std::vector<Detection> QtWidgetsApplication10::detectObjects(const cv::Mat& image)
{
    if (!session) {
        qDebug() << "Model is not loaded!";
        return {};
    }

    try {
        // Remember the original image size
        cv::Size originalSize = image.size();

        // Preprocess the image
        cv::Mat processedImage = preprocessImage(image);

        // Allocate the input tensor buffer
        size_t inputTensorSize = processedImage.total() * processedImage.channels();
        std::vector<float> inputTensorValues(inputTensorSize);

        // Copy the image into inputTensorValues, converting HWC -> CHW
        std::vector<cv::Mat> channels(3);
        cv::split(processedImage, channels);
        size_t channelSize = processedImage.rows * processedImage.cols;
        for (int i = 0; i < 3; ++i) {
            std::memcpy(inputTensorValues.data() + i * channelSize,
                        channels[i].data, channelSize * sizeof(float));
        }

        // NCHW input shape, built explicitly so that dynamic (-1) model dimensions
        // reported by the session do not break tensor creation
        std::vector<int64_t> inputShape = { 1, 3, inpHeight, inpWidth };

        Ort::MemoryInfo memoryInfo = Ort::MemoryInfo::CreateCpu(
            OrtAllocatorType::OrtArenaAllocator, OrtMemType::OrtMemTypeDefault);

        std::vector<Ort::Value> inputTensors;
        inputTensors.push_back(Ort::Value::CreateTensor<float>(
            memoryInfo,
            inputTensorValues.data(),
            inputTensorValues.size(),
            inputShape.data(),
            inputShape.size()
        ));

        // Run inference
        auto start = std::chrono::high_resolution_clock::now();
        std::vector<Ort::Value> outputTensors = session->Run(
            runOptions,
            inputNames.data(),
            inputTensors.data(),
            inputTensors.size(),
            outputNames.data(),
            outputNames.size()
        );
        auto end = std::chrono::high_resolution_clock::now();
        auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(end - start);
        qDebug() << "Inference time:" << duration.count() << "ms";

        // Post-process the results
        return postprocessResults(outputTensors, originalSize);
    }
    catch (const std::exception& e) {
        qDebug() << "Inference error:" << e.what();
        return {};
    }
}
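The HWC -> CHW step above relies on `cv::split` plus `std::memcpy`. To make the memory layout explicit, here is a minimal sketch of the same rearrangement on a plain float buffer, without OpenCV. The function name `hwcToChw` is hypothetical and only serves this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Rearrange interleaved pixels (R,G,B,R,G,B,...) into planar order
// (all R values, then all G values, then all B values).
std::vector<float> hwcToChw(const std::vector<float>& hwc,
                            std::size_t height, std::size_t width,
                            std::size_t channels)
{
    assert(hwc.size() == height * width * channels);
    const std::size_t plane = height * width; // elements per channel plane
    std::vector<float> chw(hwc.size());
    for (std::size_t p = 0; p < plane; ++p) {
        for (std::size_t c = 0; c < channels; ++c) {
            chw[c * plane + p] = hwc[p * channels + c];
        }
    }
    return chw;
}
```

For a 1x2 image with pixels (1, 2, 3) and (4, 5, 6), the planar result is 1, 4, 2, 5, 3, 6: each channel's values end up contiguous, which is the layout an NCHW input tensor expects.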

// Image preprocessing
cv::Mat QtWidgetsApplication10::preprocessImage(const cv::Mat& image)
{
    cv::Mat resized, floatImage;

    // Resize to the model input size (plain resize; the aspect ratio is not preserved)
    cv::resize(image, resized, cv::Size(inpWidth, inpHeight));

    // Convert color space BGR -> RGB
    cv::cvtColor(resized, resized, cv::COLOR_BGR2RGB);

    // Convert to float and normalize to [0, 1]
    resized.convertTo(floatImage, CV_32FC3, 1.0 / 255.0);

    return floatImage;
}
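The preprocessing above resizes straight to the model input size, which stretches non-square images. If a model turns out to be sensitive to that, one common alternative is letterboxing: scale uniformly, then pad the remainder. The sketch below only computes the geometry and is not what the project does; `LetterboxParams` and `computeLetterbox` are hypothetical names introduced for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Geometry of an aspect-preserving resize into a dstW x dstH canvas.
struct LetterboxParams {
    float scale;   // uniform scale applied to both axes
    int resizedW;  // image width after scaling
    int resizedH;  // image height after scaling
    int padLeft;   // padding on the left edge
    int padTop;    // padding on the top edge
};

LetterboxParams computeLetterbox(int srcW, int srcH, int dstW, int dstH)
{
    assert(srcW > 0 && srcH > 0 && dstW > 0 && dstH > 0);
    // Use the smaller ratio so the scaled image fits inside the canvas.
    const float scale = std::min(static_cast<float>(dstW) / srcW,
                                 static_cast<float>(dstH) / srcH);
    const int resizedW = static_cast<int>(std::round(srcW * scale));
    const int resizedH = static_cast<int>(std::round(srcH * scale));
    // Split the leftover space evenly; integer division rounds down.
    return {scale, resizedW, resizedH,
            (dstW - resizedW) / 2, (dstH - resizedH) / 2};
}
```

With this scheme, a 1280x720 image going into a 640x640 input is scaled by 0.5 to 640x360 and padded by 140 pixels at the top. Post-processing would then have to subtract the padding and divide by `scale` instead of multiplying by separate X and Y factors.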

// Post-processing
std::vector<Detection> QtWidgetsApplication10::postprocessResults(
    const std::vector<Ort::Value>& outputTensors, const cv::Size& originalSize,
    float confThreshold, float iouThreshold)
{
    // NMS is already embedded in the exported model (nms=True), so the IoU threshold is not used here
    Q_UNUSED(iouThreshold);

    std::vector<Detection> detections;

    if (outputTensors.empty()) {
        qDebug() << "Output tensor list is empty";
        return detections;
    }

    // Fetch the output data
    const float* outputData = outputTensors[0].GetTensorData<float>();
    auto outputShape = outputTensors[0].GetTensorTypeAndShapeInfo().GetShape();

    // Expected output shape: [1, 3, 6] - [batch, num_detections, (x1, y1, x2, y2, conf, class)]
    if (outputShape.size() != 3 || outputShape[2] < 6) {
        qDebug() << "Unexpected output shape; expected [1, N, 6]";
        return detections;
    }

    int numDetections = static_cast<int>(outputShape[1]);
    int detectionSize = static_cast<int>(outputShape[2]);

    qDebug() << "=== Post-processing info ===";
    qDebug() << "Output shape: [" << outputShape[0] << ", " << outputShape[1] << ", " << outputShape[2] << "]";
    qDebug() << "Number of detections:" << numDetections; // 3 for the bundled model
    qDebug() << "Values per detection:" << detectionSize; // 6
    qDebug() << "Original image size:" << originalSize.width << "x" << originalSize.height;
    qDebug() << "Model input size:" << inpWidth << "x" << inpHeight;

    // Scale factors from model-input coordinates back to the original image
    float scaleX = static_cast<float>(originalSize.width) / inpWidth;
    float scaleY = static_cast<float>(originalSize.height) / inpHeight;
    qDebug() << "Scale factors - X:" << scaleX << " Y:" << scaleY;

    int validDetections = 0;

    for (int i = 0; i < numDetections; ++i) {
        const float* detection = outputData + i * detectionSize;
        float conf = detection[4];

        // Skip low-confidence detections
        if (conf < confThreshold) continue;
        validDetections++;

        // Corner coordinates in model-input pixels
        float x1 = detection[0];
        float y1 = detection[1];
        float x2 = detection[2];
        float y2 = detection[3];
        int classId = static_cast<int>(detection[5]);

        // Log the raw coordinates
        qDebug() << "Detection" << validDetections << " - raw coordinates: ("
                 << x1 << ", " << y1 << ", " << x2 << ", " << y2
                 << ") confidence: " << conf << " class: " << classId;

        // Map the coordinates back to the original image size
        float orig_x1 = x1 * scaleX;
        float orig_y1 = y1 * scaleY;
        float orig_x2 = x2 * scaleX;
        float orig_y2 = y2 * scaleY;

        qDebug() << "Detection" << validDetections << " - mapped coordinates: ("
                 << orig_x1 << ", " << orig_y1 << ", " << orig_x2 << ", " << orig_y2 << ")";

        // Clamp the coordinates to the image bounds
        orig_x1 = std::max(0.0f, std::min(orig_x1, static_cast<float>(originalSize.width)));
        orig_y1 = std::max(0.0f, std::min(orig_y1, static_cast<float>(originalSize.height)));
        orig_x2 = std::max(0.0f, std::min(orig_x2, static_cast<float>(originalSize.width)));
        orig_y2 = std::max(0.0f, std::min(orig_y2, static_cast<float>(originalSize.height)));

        Detection det;
        det.box = cv::Rect(static_cast<int>(orig_x1), static_cast<int>(orig_y1),
                           static_cast<int>(orig_x2 - orig_x1), static_cast<int>(orig_y2 - orig_y1));
        det.conf = conf;
        det.classId = classId;
        detections.push_back(det);

        qDebug() << "Detection" << validDetections << " - final bounding box: ("
                 << det.box.x << ", " << det.box.y << ", "
                 << det.box.width << ", " << det.box.height << ")";
    }

    qDebug() << "Valid detections:" << detections.size();
    qDebug() << "=== Post-processing done ===";

    return detections;
}
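The coordinate mapping in the loop above can be checked in isolation. The following sketch reproduces the same scale-then-clamp arithmetic with plain integers and floats, so it can be exercised without OpenCV; `BoxI` and `mapToOriginal` are hypothetical names used only for this example.

```cpp
#include <algorithm>
#include <cassert>

// Plain stand-in for cv::Rect in this sketch.
struct BoxI {
    int x, y, width, height;
};

// Scale a corner-format box from model-input pixels to original-image pixels,
// clamp it to the image bounds, and convert it to (x, y, width, height).
BoxI mapToOriginal(float x1, float y1, float x2, float y2,
                   int inputW, int inputH, int origW, int origH)
{
    assert(inputW > 0 && inputH > 0);
    const float sx = static_cast<float>(origW) / inputW;
    const float sy = static_cast<float>(origH) / inputH;
    auto clampTo = [](float v, float hi) {
        return std::max(0.0f, std::min(v, hi));
    };
    const float ox1 = clampTo(x1 * sx, static_cast<float>(origW));
    const float oy1 = clampTo(y1 * sy, static_cast<float>(origH));
    const float ox2 = clampTo(x2 * sx, static_cast<float>(origW));
    const float oy2 = clampTo(y2 * sy, static_cast<float>(origH));
    return {static_cast<int>(ox1), static_cast<int>(oy1),
            static_cast<int>(ox2 - ox1), static_cast<int>(oy2 - oy1)};
}
```

For a 1280x960 source image and a 640x640 input, the factors are 2.0 horizontally and 1.5 vertically, so the box (100, 200, 300, 400) maps to x=200, y=300, width=400, height=300.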

// Draw detection results
void QtWidgetsApplication10::drawDetections(cv::Mat& image, const std::vector<Detection>& detections)
{
    for (const auto& detection : detections) {
        // Pick a color based on the class ID
        cv::Scalar color = colors[detection.classId % colors.size()];

        // Draw the bounding box
        cv::rectangle(image, detection.box, color, 2);

        // Build the label: class ID plus confidence truncated to two decimals
        std::string label = "Class " + std::to_string(detection.classId);
        label += " " + std::to_string(detection.conf).substr(0, 4);

        // Measure the label to size its background
        int baseLine;
        cv::Size labelSize = cv::getTextSize(label, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

        // Draw the label background
        cv::rectangle(image,
                      cv::Point(detection.box.x, detection.box.y - labelSize.height - baseLine),
                      cv::Point(detection.box.x + labelSize.width, detection.box.y),
                      color, cv::FILLED);

        // Draw the label text
        cv::putText(image, label,
                    cv::Point(detection.box.x, detection.box.y - baseLine),
                    cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(255, 255, 255), 1);
    }
}
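The label drawn above shows only the numeric class ID. Since the model's classes are {0: 'front', 1: 'reverse'}, a small helper could print the name instead. This is a sketch of one way to do it; `makeLabel` is a hypothetical function that does not exist in the project, and it falls back to the original "Class N" text for unknown IDs.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Build a label such as "reverse 0.87" from a class ID and a confidence score.
// The confidence is truncated to four characters, as in drawDetections.
std::string makeLabel(int classId, float conf,
                      const std::vector<std::string>& names)
{
    const bool known =
        classId >= 0 && static_cast<std::size_t>(classId) < names.size();
    const std::string name =
        known ? names[static_cast<std::size_t>(classId)]
              : "Class " + std::to_string(classId);
    return name + " " + std::to_string(conf).substr(0, 4);
}
```

Called as `makeLabel(detection.classId, detection.conf, {"front", "reverse"})`, the result could replace the `label` string built inside the loop.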

// Refresh the display
void QtWidgetsApplication10::updateDisplay()
{
    if (displayImage.empty()) return;

    // Convert the OpenCV image (BGR) to a Qt image (RGB)
    cv::Mat rgbImage;
    cv::cvtColor(displayImage, rgbImage, cv::COLOR_BGR2RGB);

    // QImage only wraps the buffer; QPixmap::fromImage copies it before rgbImage goes out of scope
    QImage qimg(rgbImage.data, rgbImage.cols, rgbImage.rows,
                rgbImage.step, QImage::Format_RGB888);
    QPixmap pixmap = QPixmap::fromImage(qimg);

    // Show the image in the QLabel, scaled to fit while keeping the aspect ratio
    ui.label->setPixmap(pixmap.scaled(ui.label->width(),
                                      ui.label->height(),
                                      Qt::KeepAspectRatio, Qt::SmoothTransformation));
}

// Model loading
bool QtWidgetsApplication10::loadYOLOv11Model(const std::string& model_path)
{
    try {
        // Initialize the ONNX Runtime environment
        initializeONNXRuntime();

        // Convert the path to a wide string via QString (ONNX Runtime takes wchar_t paths on Windows)
        QString qModelPath = QString::fromStdString(model_path);
        std::wstring wideModelPath = qModelPath.toStdWString();

        // Create the session
        session = std::make_unique<Ort::Session>(*env, wideModelPath.c_str(), sessionOptions);

        // Query the model's input and output metadata
        Ort::AllocatorWithDefaultOptions allocator;

        // Clear anything stored from a previous load
        inputNamesStr.clear();
        inputNames.clear();
        inputNodeDims.clear();
        outputNamesStr.clear();
        outputNames.clear();
        outputNodeDims.clear();

        // Collect input info
        size_t numInputNodes = session->GetInputCount();
        for (size_t i = 0; i < numInputNodes; i++) {
            auto inputName = session->GetInputNameAllocated(i, allocator);
            inputNamesStr.push_back(std::string(inputName.get()));

            Ort::TypeInfo inputTypeInfo = session->GetInputTypeInfo(i);
            auto inputTensorInfo = inputTypeInfo.GetTensorTypeAndShapeInfo();
            auto inputDims = inputTensorInfo.GetShape();
            inputNodeDims.push_back(inputDims);

            qDebug() << "Input name:" << inputNamesStr.back().c_str();
            qDebug() << "Input dimensions:";
            for (auto dim : inputDims) {
                qDebug() << dim;
            }
        }

        // Collect output info
        size_t numOutputNodes = session->GetOutputCount();
        for (size_t i = 0; i < numOutputNodes; i++) {
            auto outputName = session->GetOutputNameAllocated(i, allocator);
            outputNamesStr.push_back(std::string(outputName.get()));

            Ort::TypeInfo outputTypeInfo = session->GetOutputTypeInfo(i);
            auto outputTensorInfo = outputTypeInfo.GetTensorTypeAndShapeInfo();
            auto outputDims = outputTensorInfo.GetShape();
            outputNodeDims.push_back(outputDims);

            qDebug() << "Output name:" << outputNamesStr.back().c_str();
            qDebug() << "Output dimensions:";
            for (auto dim : outputDims) {
                qDebug() << dim;
            }
        }

        // Build the C-string views only after both name vectors are complete:
        // growing a std::vector<std::string> can move its elements and invalidate
        // c_str() pointers taken earlier
        for (const auto& name : inputNamesStr) inputNames.push_back(name.c_str());
        for (const auto& name : outputNamesStr) outputNames.push_back(name.c_str());

        // Take the actual input height and width from the NCHW input dimensions;
        // dynamic axes are reported as -1, in which case the defaults are kept
        if (!inputNodeDims.empty() && inputNodeDims[0].size() == 4
            && inputNodeDims[0][2] > 0 && inputNodeDims[0][3] > 0) {
            inpHeight = static_cast<int>(inputNodeDims[0][2]);
            inpWidth = static_cast<int>(inputNodeDims[0][3]);
            qDebug() << "Model input size:" << inpHeight << "x" << inpWidth;
        }

        // Print model info
        printModelInfo();

        return true;
    }
    catch (const Ort::Exception& e) {
        qDebug() << "ONNX Runtime error:" << e.what();
        return false;
    }
    catch (const std::exception& e) {
        qDebug() << "Standard exception:" << e.what();
        return false;
    }
}

void QtWidgetsApplication10::initializeONNXRuntime()
{
    env = std::make_unique<Ort::Env>(ORT_LOGGING_LEVEL_WARNING, "YOLOv11");
    runOptions = Ort::RunOptions();
}

void QtWidgetsApplication10::printModelInfo()
{
    qDebug() << "=== YOLO11 model info ===";
    qDebug() << "Input size:" << inpHeight << "x" << inpWidth;
    qDebug() << "Number of input nodes:" << session->GetInputCount();
    qDebug() << "Number of output nodes:" << session->GetOutputCount();

    for (size_t i = 0; i < inputNamesStr.size(); i++) {
        qDebug() << "Input" << i << ":" << inputNamesStr[i].c_str();
    }

    for (size_t i = 0; i < outputNamesStr.size(); i++) {
        qDebug() << "Output" << i << ":" << outputNamesStr[i].c_str();
    }

    qDebug() << "=========================";
}

III. Full Project Download

Shared via cloud drive: