1. Background

1.1 Current situation

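Logging is scattered across programs, and each program writes raw, uncompressed log data directly to files.

On flash storage, this write pattern produces a large number of random writes. These fragment files, shorten the service life of the flash, and slow down reads and writes.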

1.2 Resulting problems

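- Premature flash wear: at one site, an eMMC that had been in service for only a year and a half has already reached end of life. Its read/write speed dropped sharply and caused stuttering, and the only fix was an on-site maintenance visit to replace it, which cost a great deal of R&D and after-sales time as well as material.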

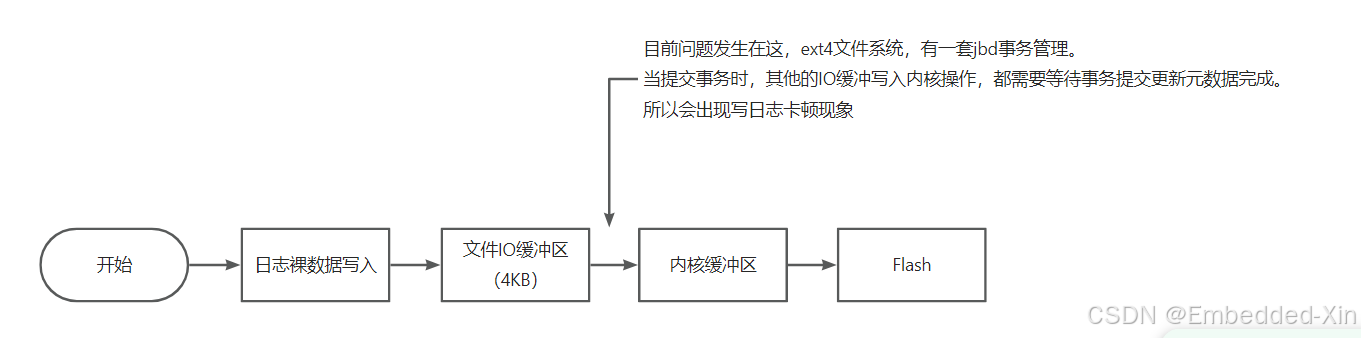

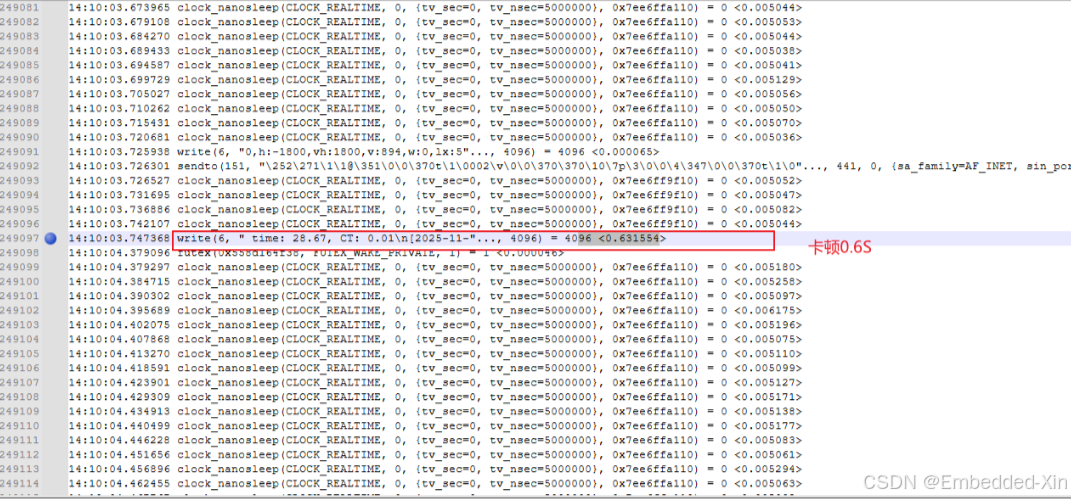

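- Under heavy fragmented writes, jbd2 (the journaling layer used by ext4) causes latency spikes. These make the planning and algorithm modules prone to stalls, which in turn leads to abnormal vehicle behavior. The problem has persisted for a long time, has gradually worsened, and has consumed a lot of R&D and on-site time.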

2. Solution

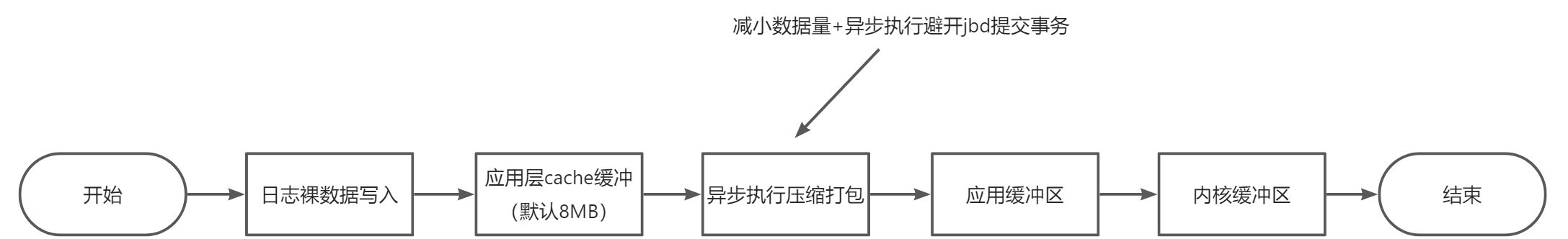

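Log write path: spdlog first writes into an in-memory buffer -> once the buffer reaches the configured capacity (8 MB by default, configurable) -> the data is handed to a thread pool that compresses it asynchronously -> when compression finishes, the archive is written to disk.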
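As a rough illustration of the first two steps (buffer in memory, hand off at capacity), here is a minimal standard-library sketch. `BatchBuffer` and its `on_batch` callback are hypothetical names used only for this example, not spdlog API; in a real sink the callback would enqueue a compression job on the thread pool.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <utility>

// Hypothetical helper (not part of spdlog): accumulates log lines and hands
// the whole batch to on_batch once at least `capacity` bytes are buffered.
class BatchBuffer {
public:
    BatchBuffer(std::size_t capacity, std::function<void(std::string)> on_batch)
        : capacity_(capacity), on_batch_(std::move(on_batch)) {
        buf_.reserve(capacity_);
    }

    void append(const std::string &line) {
        buf_ += line;
        if (buf_.size() >= capacity_) {
            flush();
        }
    }

    // Hand over whatever is buffered; also call this at shutdown.
    void flush() {
        if (buf_.empty()) {
            return;
        }
        std::string batch;
        batch.swap(buf_);          // cheap hand-off: buf_ is now empty
        buf_.reserve(capacity_);   // pre-allocate for the next batch
        on_batch_(std::move(batch));
    }

private:
    std::size_t capacity_;
    std::function<void(std::string)> on_batch_;
    std::string buf_;
};
```

Swapping the full buffer into a local keeps the logging thread's work short: the hand-off is a pointer swap, and the consumer owns its batch exclusively, so compression can run without any lock on the buffer.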

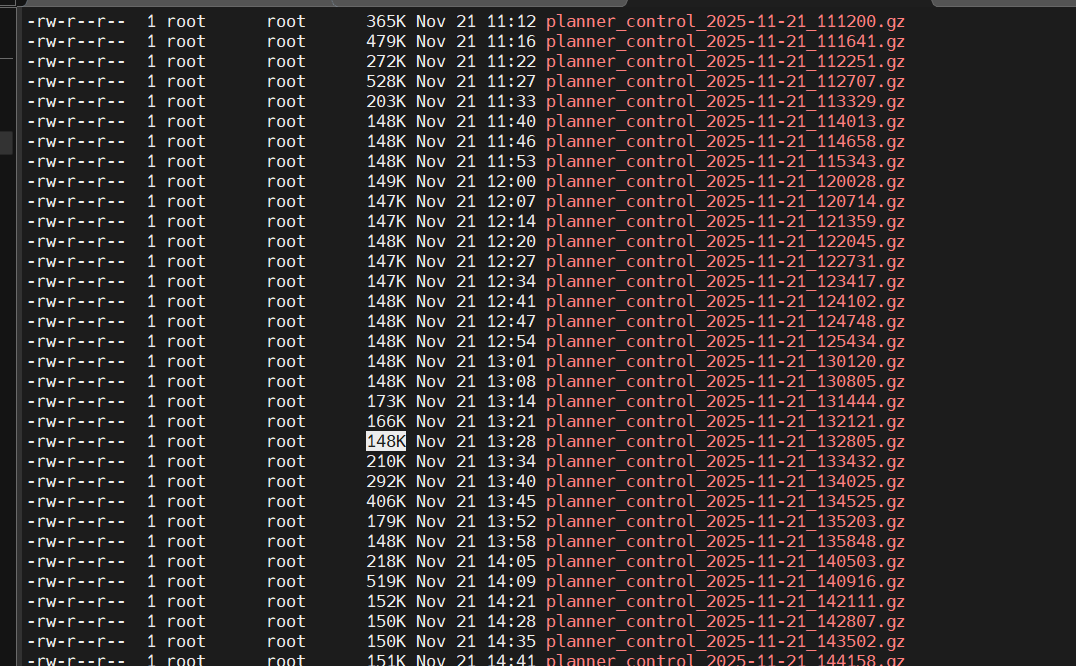

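Compression: each 8 MB of raw text becomes one archive of 148 KB to 406 KB, a measured space saving of 95% to 98.2% (each archive is about 1.8% to 5% of the original size).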

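For every 1024 MB of log data, the volume written to flash drops by roughly 1000 MB: 1024 MB is 128 batches of 8 MB, which compress to between 128 × 148 KB = 18.5 MB and 128 × 406 KB = 50.75 MB, so about 973 MB to 1005.5 MB of writes are avoided.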
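The percentages and the roughly 1000 MB figure follow directly from the measured archive sizes. A small sketch that reproduces them, taking 8 MB as 8192 KB; `saving_ratio` and `saved_write_mb` are illustrative helper names, not part of the sink.

```cpp
#include <cassert>
#include <cmath>

// Fraction of space saved by compression: 1 - compressed / raw.
// Both sizes must be in the same unit (KB here).
double saving_ratio(double raw_kb, double compressed_kb) {
    return 1.0 - compressed_kb / raw_kb;
}

// Flash writes avoided when raw_mb of log text is stored compressed.
double saved_write_mb(double raw_mb, double ratio) {
    return raw_mb * ratio;
}

// With the measured sizes:
//   saving_ratio(8192, 148)                        -> about 0.982 (98.2 %)
//   saving_ratio(8192, 406)                        -> about 0.950 (95.0 %)
//   saved_write_mb(1024, saving_ratio(8192, 148))  -> 1005.5
//   saved_write_mb(1024, saving_ratio(8192, 406))  -> 973.25
```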

3. Source code

- compress_file_sink.h

```cpp
#ifndef SPDLOG_COMPRESS_FILE_SINK_H
#define SPDLOG_COMPRESS_FILE_SINK_H

#include <spdlog/common.h>
#include <spdlog/details/file_helper.h>
#include <spdlog/details/null_mutex.h>
#include <spdlog/details/os.h>
#include <spdlog/details/synchronous_factory.h>
#include <spdlog/fmt/fmt.h>
#include <spdlog/sinks/base_sink.h>

#include <zlib.h>

#include <cstdio>
#include <ctime>
#include <future>
#include <memory>
#include <mutex>
#include <string>
#include <tuple>

#include "thread_pool.h"

namespace spdlog {
namespace sinks {

// Collects formatted log lines in memory. Once buffer_capacity bytes have
// accumulated (or on flush), the batch is gzip-compressed off the logging
// thread and written to a new timestamped .gz file.
template<typename Mutex>
class compressed_file_sink : public base_sink<Mutex>
{
public:
    explicit compressed_file_sink(const filename_t &base_filename,
                                  size_t buffer_capacity = 8 * 1024 * 1024,      // 8 MB
                                  int compression_level = Z_DEFAULT_COMPRESSION, // zlib level 0-9 or -1
                                  std::shared_ptr<ThreadPool> thread_pool = nullptr)
        : base_filename_(base_filename)
        , buffer_capacity_(buffer_capacity)
        , compression_level_(compression_level)
        , thread_pool_(std::move(thread_pool))
    {
        buffer_.reserve(buffer_capacity_);
    }

    ~compressed_file_sink() override
    {
        std::lock_guard<Mutex> lock(base_sink<Mutex>::mutex_);
        flush_(); // compress whatever is still buffered
        if (pending_.valid())
        {
            pending_.wait(); // std::async path: wait for the last batch
        }
        // Thread-pool path: queued tasks do not reference this sink, and the
        // ThreadPool destructor runs every queued task before joining.
    }

    compressed_file_sink(const compressed_file_sink &) = delete;
    compressed_file_sink &operator=(const compressed_file_sink &) = delete;

protected:
    void sink_it_(const details::log_msg &msg) override
    {
        memory_buf_t formatted;
        base_sink<Mutex>::formatter_->format(msg, formatted);
        buffer_.append(formatted.data(), formatted.data() + formatted.size());
        if (buffer_.size() >= buffer_capacity_)
        {
            compress_async();
        }
    }

    // Each flush writes a separate archive, so frequent flushing
    // (e.g. flush_on(level::debug)) defeats the batching.
    void flush_() override
    {
        compress_async();
    }

private:
    // Create a filename of the form basename_YYYY-MM-DD_HHMMSS.ext,
    // or basename_YYYY-MM-DD_HHMMSS_N.ext when index N > 0.
    static filename_t calc_filename(const filename_t &filename, const tm &now_tm, size_t index)
    {
        filename_t basename, ext;
        std::tie(basename, ext) = details::file_helper::split_by_extension(filename);
        filename_t name = fmt_lib::format(SPDLOG_FILENAME_T("{}_{:04d}-{:02d}-{:02d}_{:02d}{:02d}{:02d}"), basename,
                                          now_tm.tm_year + 1900, now_tm.tm_mon + 1, now_tm.tm_mday,
                                          now_tm.tm_hour, now_tm.tm_min, now_tm.tm_sec);
        if (index > 0)
        {
            name += fmt_lib::format(SPDLOG_FILENAME_T("_{}"), index);
        }
        return name + ext;
    }

    // Runs on a worker thread. Static on purpose: the task must not touch the
    // sink, which may already be destroyed by the time the task runs.
    static void compress(const filename_t &filename, int compression_level, const memory_buf_t &buffer)
    {
        gzFile gzfp = gzopen(filename.c_str(), "wb");
        if (!gzfp)
        {
            // An exception thrown here would be stored in a future nobody
            // reads, so report the error on stderr instead.
            std::fprintf(stderr, "compressed_file_sink: cannot create %s\n", filename.c_str());
            return;
        }
        // The signature is gzsetparams(file, level, strategy).
        if (gzsetparams(gzfp, compression_level, Z_DEFAULT_STRATEGY) != Z_OK)
        {
            std::fprintf(stderr, "compressed_file_sink: gzsetparams failed for %s\n", filename.c_str());
        }
        int len = gzwrite(gzfp, buffer.data(), static_cast<unsigned>(buffer.size()));
        if (len != static_cast<int>(buffer.size()))
        {
            std::fprintf(stderr, "compressed_file_sink: wrote %d of %zu bytes to %s\n", len, buffer.size(),
                         filename.c_str());
        }
        gzclose(gzfp); // also flushes the remaining compressed data
    }

    void compress_async()
    {
        if (buffer_.size() == 0)
        {
            return;
        }
        // Name the file here, under the sink mutex. Files are opened with "wb",
        // so batches closed within the same second get an index suffix instead
        // of overwriting each other.
        std::time_t now = log_clock::to_time_t(log_clock::now());
        same_second_index_ = (now == last_time_) ? same_second_index_ + 1 : 0;
        last_time_ = now;
        filename_t filename = calc_filename(base_filename_, details::os::localtime(now), same_second_index_);

        // Move the batch into the task; the moved-from buffer is left empty.
        auto task = [filename, level = compression_level_, buffer = std::move(buffer_)]() {
            compress(filename, level, buffer);
        };
        buffer_.clear();
        buffer_.reserve(buffer_capacity_);

        if (thread_pool_)
        {
            thread_pool_->enqueue(std::move(task));
        }
        else
        {
            // A discarded std::async future blocks in its destructor, which would
            // make this call synchronous. Keep it instead, and wait for the
            // previous batch first so at most one compression runs at a time.
            if (pending_.valid())
            {
                pending_.wait();
            }
            pending_ = std::async(std::launch::async, std::move(task));
        }
    }

    filename_t base_filename_;
    memory_buf_t buffer_;
    size_t buffer_capacity_;
    int compression_level_;
    std::shared_ptr<ThreadPool> thread_pool_;
    std::future<void> pending_;     // last std::async task (used when thread_pool_ is null)
    std::time_t last_time_ = 0;     // timestamp of the previous archive
    size_t same_second_index_ = 0;  // archives already created in that second
};

using compressed_file_sink_mt = compressed_file_sink<std::mutex>;
using compressed_file_sink_st = compressed_file_sink<details::null_mutex>;

} // namespace sinks

template<typename Factory = spdlog::synchronous_factory>
inline std::shared_ptr<logger> compressed_file_logger_mt(const std::string &logger_name, const filename_t &filename,
                                                         size_t buffer_capacity = 8 * 1024 * 1024,
                                                         int compression_level = Z_DEFAULT_COMPRESSION,
                                                         std::shared_ptr<ThreadPool> thread_pool = nullptr)
{
    return Factory::template create<sinks::compressed_file_sink_mt>(logger_name, filename, buffer_capacity,
                                                                    compression_level, std::move(thread_pool));
}

template<typename Factory = spdlog::synchronous_factory>
inline std::shared_ptr<logger> compressed_file_logger_st(const std::string &logger_name, const filename_t &filename,
                                                         size_t buffer_capacity = 8 * 1024 * 1024,
                                                         int compression_level = Z_DEFAULT_COMPRESSION,
                                                         std::shared_ptr<ThreadPool> thread_pool = nullptr)
{
    return Factory::template create<sinks::compressed_file_sink_st>(logger_name, filename, buffer_capacity,
                                                                    compression_level, std::move(thread_pool));
}

} // namespace spdlog

#endif
```

- thread_pool.h

```cpp
#ifndef THREAD_POOL_H
#define THREAD_POOL_H

#include <condition_variable>
#include <functional>
#include <future>
#include <memory>
#include <mutex>
#include <queue>
#include <stdexcept>
#include <thread>
#include <type_traits>
#include <vector>

// Fixed-size pool of worker threads consuming a shared FIFO task queue.
class ThreadPool {
public:
    ThreadPool(size_t);
    // std::result_of is deprecated in C++17 and removed in C++20;
    // use std::invoke_result<F, Args...> there.
    template<class F, class... Args>
    auto enqueue(F&& f, Args&&... args)
        -> std::future<typename std::result_of<F(Args...)>::type>;
    ~ThreadPool();

private:
    // need to keep track of threads so we can join them
    std::vector< std::thread > workers;
    // the task queue
    std::queue< std::function<void()> > tasks;

    // synchronization
    std::mutex queue_mutex;
    std::condition_variable condition;
    bool stop;
};

// the constructor just launches some amount of workers
inline ThreadPool::ThreadPool(size_t threads)
    : stop(false)
{
    for (size_t i = 0; i < threads; ++i)
        workers.emplace_back(
            [this]
            {
                for (;;)
                {
                    std::function<void()> task;
                    {
                        std::unique_lock<std::mutex> lock(this->queue_mutex);
                        this->condition.wait(lock,
                            [this]{ return this->stop || !this->tasks.empty(); });
                        // exit only once stopped AND the queue is drained
                        if (this->stop && this->tasks.empty())
                            return;
                        task = std::move(this->tasks.front());
                        this->tasks.pop();
                    }
                    task();
                }
            }
        );
}

// add new work item to the pool
template<class F, class... Args>
auto ThreadPool::enqueue(F&& f, Args&&... args)
    -> std::future<typename std::result_of<F(Args...)>::type>
{
    using return_type = typename std::result_of<F(Args...)>::type;

    auto task = std::make_shared< std::packaged_task<return_type()> >(
        std::bind(std::forward<F>(f), std::forward<Args>(args)...)
    );

    std::future<return_type> res = task->get_future();
    {
        std::unique_lock<std::mutex> lock(queue_mutex);

        // don't allow enqueueing after stopping the pool
        if (stop)
            throw std::runtime_error("enqueue on stopped ThreadPool");

        tasks.emplace([task](){ (*task)(); });
    }
    condition.notify_one();
    return res;
}

// the destructor joins all threads; workers finish every queued task first
inline ThreadPool::~ThreadPool()
{
    {
        std::unique_lock<std::mutex> lock(queue_mutex);
        stop = true;
    }
    condition.notify_all();
    for (std::thread &worker : workers)
        worker.join();
}

#endif
```

- test.cpp

```cpp
#include <cstddef>
#include <memory>

#include <spdlog/spdlog.h>

#include "compress_file_sink.h"
#include "thread_pool.h"

int main()
{
    auto pool = std::make_shared<ThreadPool>(4);

    // 8 KB buffer so this short test produces several archives;
    // the sink's default capacity is 8 MB.
    auto default_file_sink = std::make_shared<spdlog::sinks::compressed_file_sink_mt>(
        "nav_default.gz", 8192, Z_DEFAULT_COMPRESSION, pool);
    default_file_sink->set_level(spdlog::level::trace);

    spdlog::sinks_init_list default_sinks = {default_file_sink};
    auto nav_default_log = std::make_shared<spdlog::logger>("nav_default", default_sinks);
    nav_default_log->set_level(spdlog::level::trace);
    // nav_default_log->flush_on(spdlog::level::debug); // would write one archive per flush
    // spdlog::set_default_logger(nav_default_log);

    for (size_t i = 0; i < 100; i++)
    {
        nav_default_log->info("begin:beginbeginbeginbeginbeginbeginbeginbegin:::::::::::::{}", i);
        nav_default_log->info("1111111111111111111111");
        nav_default_log->info("22222222222222222222222");
        nav_default_log->info("333333");
    }

    // On return, the sink compresses the remaining buffer and the pool
    // finishes all queued compression tasks before its threads are joined.
    return 0;
}
```

The logs generated afterwards are a series of compressed archives, for example: