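Introduction

Neural network experiments usually involve writing a lot of code, tuning parameters, and plotting results by hand, which makes them hard for beginners to approach. Running them from the command line is tedious and gives little visual feedback on how an algorithm behaves. This article builds a visual GUI experiment platform on the Gradio framework that brings together classic neural networks (perceptron, BP, Hopfield) and deep learning models (AlexNet/VGG/ResNet). Every experiment can be run through the interface alone, with no code to write, which lowers the barrier to entry considerably.

The platform supports the following core experiments:

- Perceptron binary classification
- BP neural network nonlinear function fitting
- Hopfield network image recovery
- AlexNet/VGG/ResNet image classification (CIFAR-10)

All experiments live in a single GUI that supports parameter adjustment, real-time visualization, and result export, which suits classroom demonstrations, self-study, and algorithm comparison.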

一、平台核心特性

| 实验模块 | 核心功能 | 交互方式 | 可视化输出 |

|---|---|---|---|

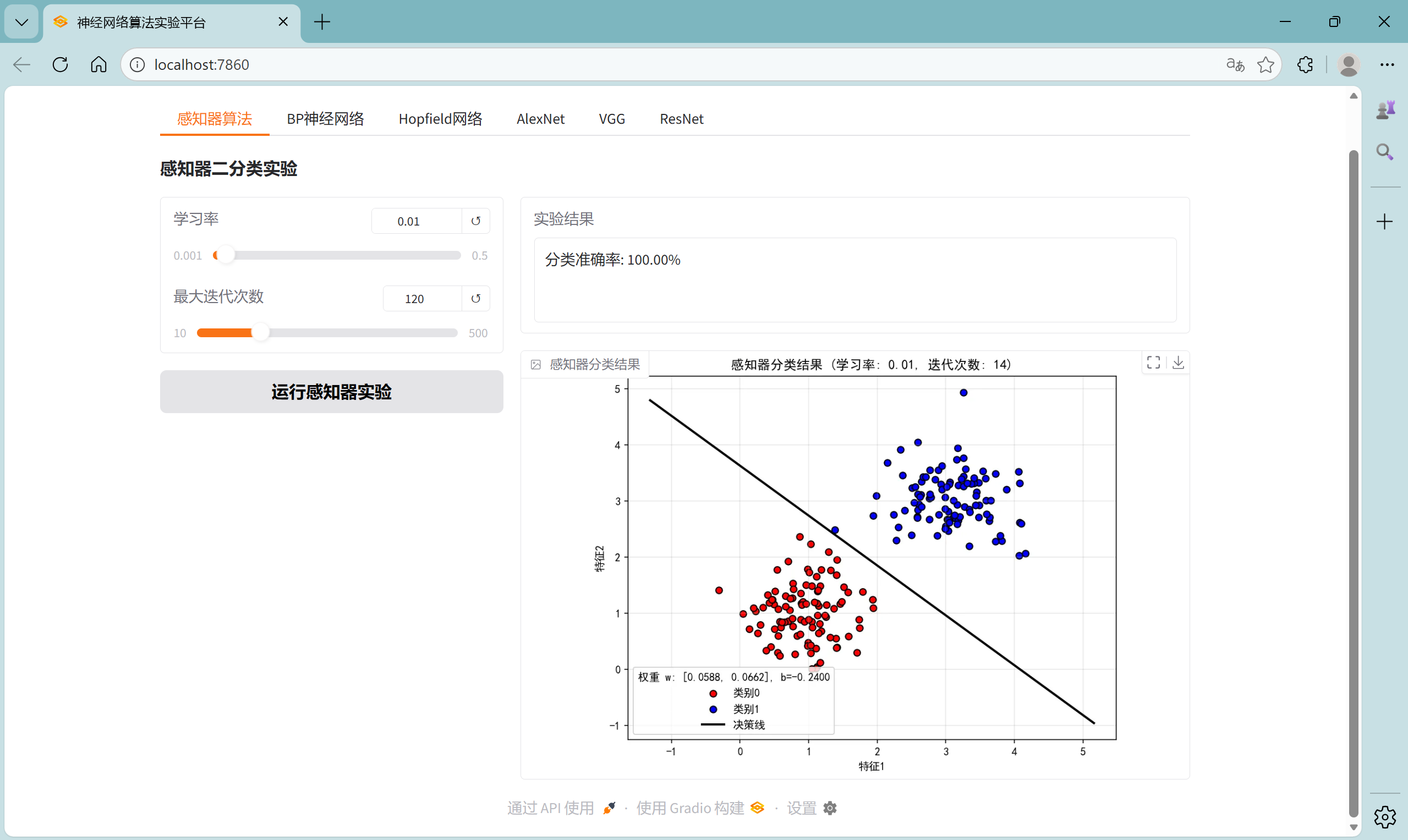

| 感知器算法 | 二分类实验,支持调节学习率、最大迭代次数 | 滑块调节参数 | 分类散点图、决策线、准确率 |

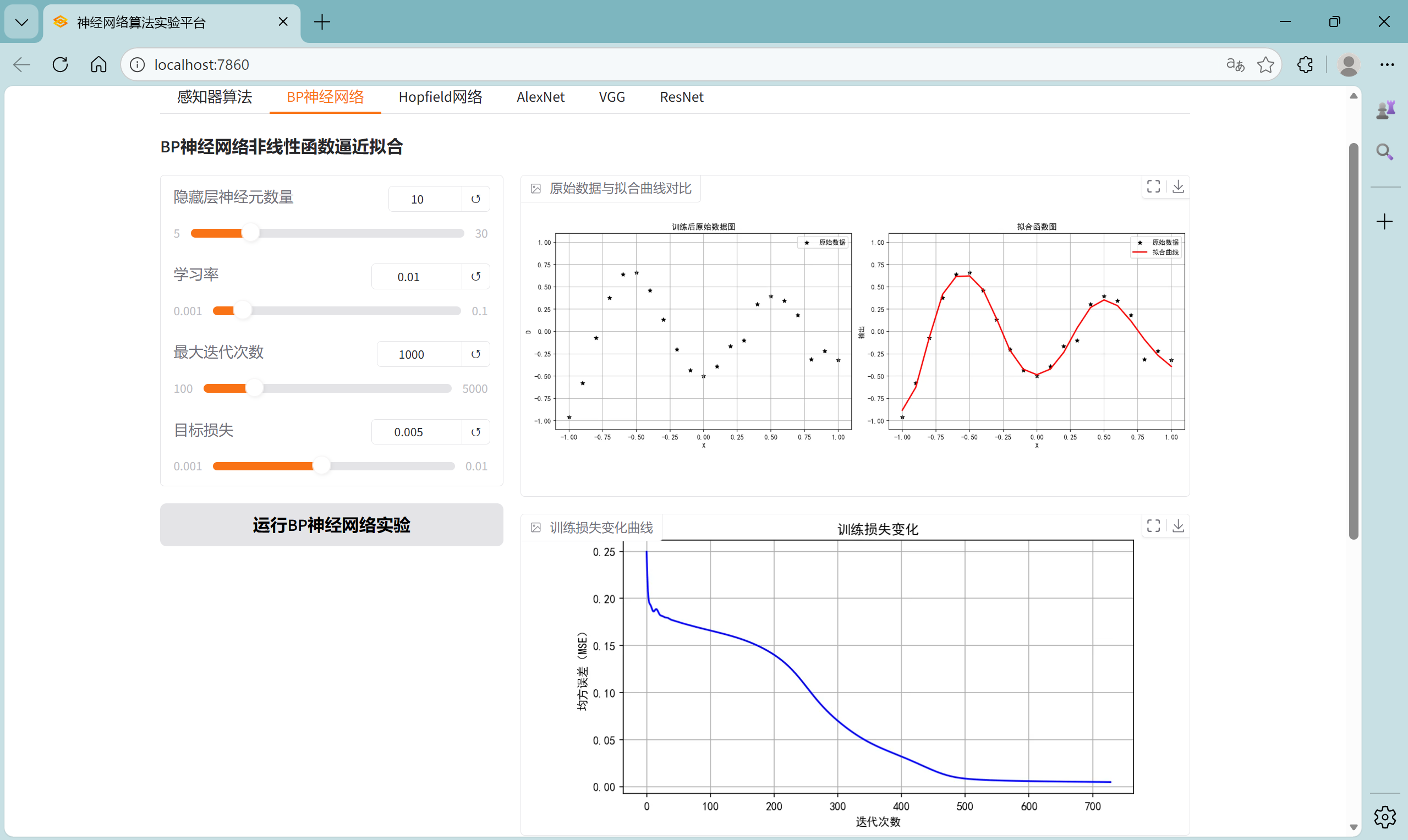

| BP 神经网络 | 非线性函数拟合,支持调节隐藏层神经元数、学习率、迭代次数、目标损失 | 多滑块参数调节 | 原始数据 / 拟合曲线对比、损失下降曲线、网络参数 |

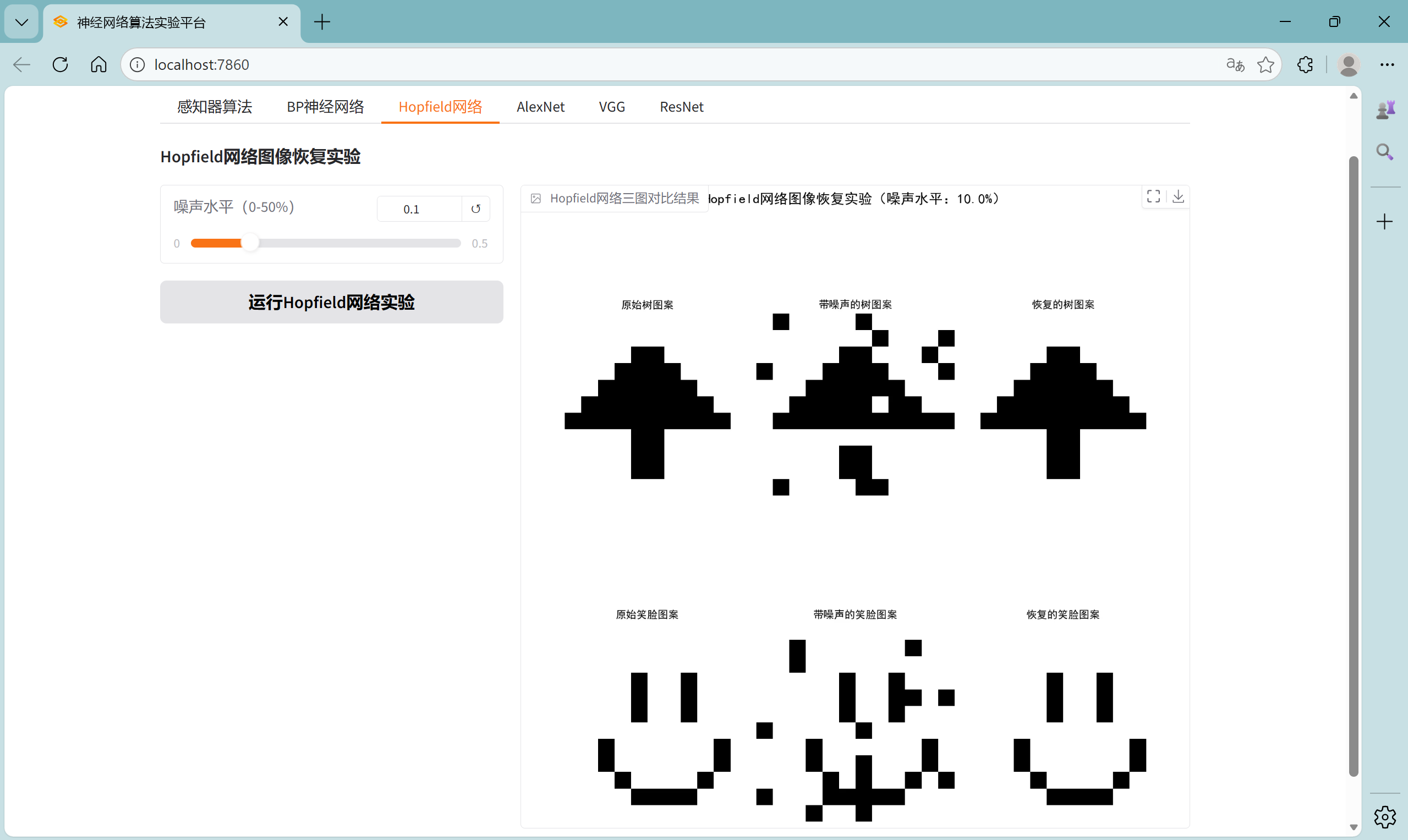

| Hopfield 网络 | 图像恢复实验,支持调节噪声水平 | 噪声滑块 | 原图 / 带噪声图 / 恢复图 |

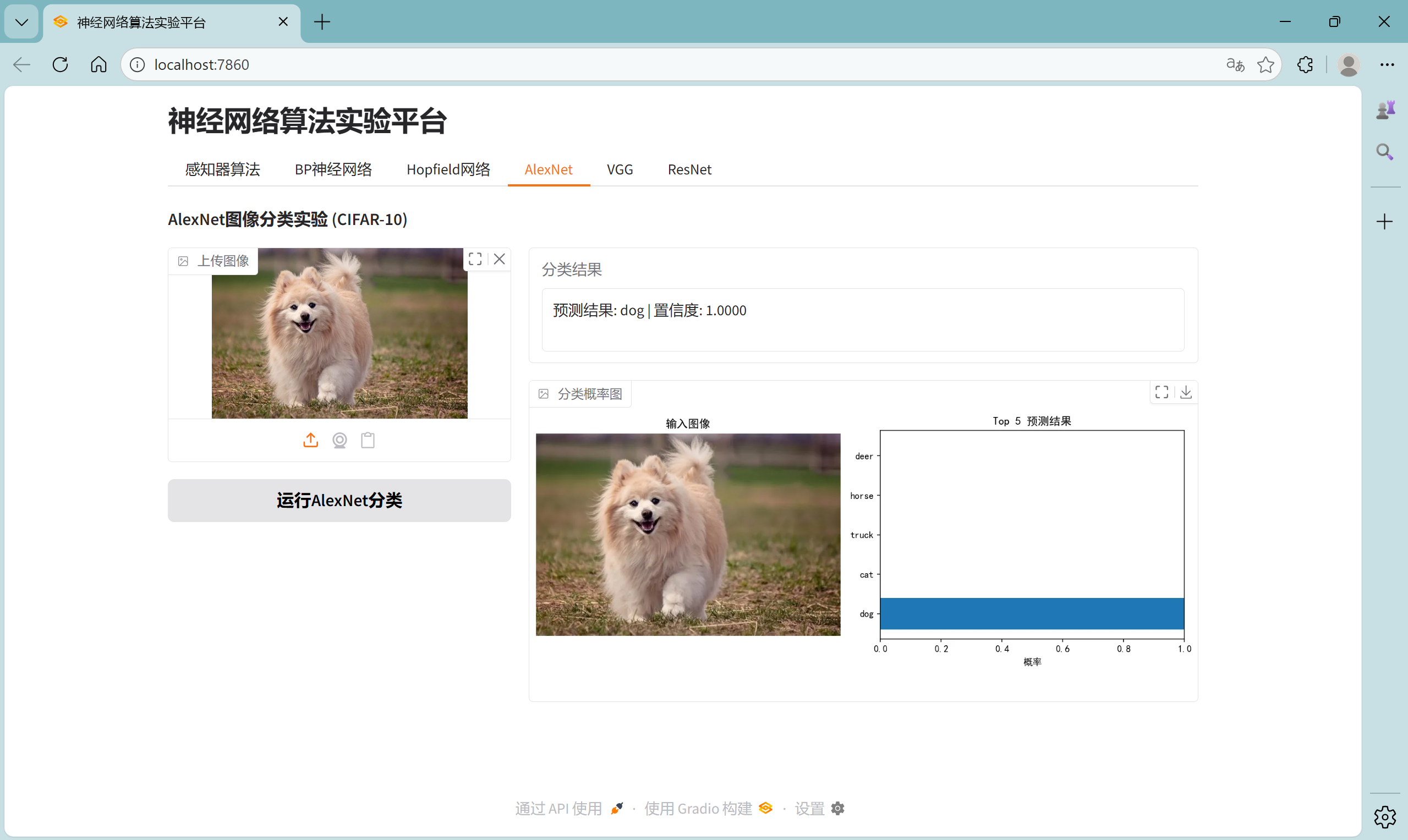

| AlexNet/VGG/ResNet | CIFAR-10 图像分类 | 上传图像 | 分类结果、Top5 概率条形图 |

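II. Tech Stack and Core Dependencies

1. Core dependencies

```text
# Base environment
python >= 3.8

# Core libraries
gradio >= 4.0        # GUI construction
torch >= 2.0         # deep learning models
torchvision >= 0.15  # model definitions (AlexNet, VGG, ResNet)
numpy >= 1.24        # numerical computation
matplotlib >= 3.7    # visualization
Pillow >= 9.0        # image processing
```

2. Installation command

```bash
pip install gradio torch torchvision numpy matplotlib pillow
```

III. Core Code Walkthrough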

1. Overall architecture

The platform uses Gradio's Blocks layout and groups the experiments into separate modules with Tabs. The core structure is:

```python
import gradio as gr


def create_interface():
    with gr.Blocks(title="神经网络算法实验平台") as demo:
        gr.Markdown("# 神经网络算法实验平台")
        with gr.Tabs():
            # Perceptron tab
            with gr.Tab("感知器算法"): ...
            # BP neural network tab
            with gr.Tab("BP神经网络"): ...
            # Hopfield network tab
            with gr.Tab("Hopfield网络"): ...
            # Deep learning model tabs (AlexNet/VGG/ResNet)
            with gr.Tab("AlexNet"): ...
            with gr.Tab("VGG"): ...
            with gr.Tab("ResNet"): ...
    return demo
```

2. Classic neural network modules

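(1) Perceptron module

The perceptron is the simplest binary classifier. The core implementation covers data generation, model training, and visualization:

```python
import numpy as np


class Perceptron:
    def __init__(self, learning_rate=0.01, max_epochs=100):
        self.lr = learning_rate
        self.max_epochs = max_epochs
        self.w = None  # weights
        self.b = 0     # bias

    def activate(self, z):
        return 1 if z >= 0 else -1  # step activation

    def predict(self, x):
        return self.activate(np.dot(x, self.w) + self.b)

    def train(self, X, y):
        y_sym = np.where(y == 0, -1, 1)  # map labels {0, 1} to {-1, +1}
        n_samples, n_features = X.shape
        self.w = np.zeros(n_features)
        for epoch in range(self.max_epochs):
            updated = False
            for i in range(n_samples):
                y_pred = self.predict(X[i])
                if y_pred != y_sym[i]:
                    # Weight update rule; (y - y_pred) is +2 or -2 on a mistake
                    self.w += self.lr * (y_sym[i] - y_pred) * X[i]
                    self.b += self.lr * (y_sym[i] - y_pred)
                    updated = True
            if not updated:  # a full pass with no update: stop early
                break
```

Interaction logic: sliders set the learning rate and the maximum number of epochs. Clicking the button triggers training, and the classification accuracy and the plot are displayed as soon as training finishes.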
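To make the update rule concrete, here is a minimal sketch in plain Python (no NumPy) that applies the same rule to a tiny hand-made dataset. The function names `train_perceptron` and `predict` and the four sample points are illustrative assumptions, not part of the platform's code.

```python
def train_perceptron(samples, labels, lr=0.1, max_epochs=100):
    """Train a 2-feature perceptron on labels in {-1, +1}.

    Returns (w, b, epochs_run).
    """
    w = [0.0, 0.0]
    b = 0.0
    for epoch in range(1, max_epochs + 1):
        updated = False
        for (x1, x2), y in zip(samples, labels):
            z = w[0] * x1 + w[1] * x2 + b
            y_pred = 1 if z >= 0 else -1
            if y_pred != y:
                step = lr * (y - y_pred)  # +2*lr or -2*lr on a mistake
                w[0] += step * x1
                w[1] += step * x2
                b += step
                updated = True
        if not updated:  # a clean pass over the data: stop early
            return w, b, epoch
    return w, b, max_epochs


def predict(w, b, point):
    z = w[0] * point[0] + w[1] * point[1] + b
    return 1 if z >= 0 else -1


# Hypothetical, linearly separable toy data
samples = [(1.0, 1.0), (1.5, 0.5), (3.0, 3.0), (3.5, 2.5)]
labels = [-1, -1, 1, 1]

w, b, epochs_run = train_perceptron(samples, labels)
predictions = [predict(w, b, p) for p in samples]
print(w, b, epochs_run, predictions)
```

Because the weights start at zero, the learning rate only rescales `w` and `b` in this sketch; it does not change which points are misclassified along the way.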

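(2) BP neural network module

The BP neural network is used for nonlinear function fitting and is implemented as a fully connected network in PyTorch. Its core structure:

```python
import torch
import torch.nn as nn


class BPNetwork(nn.Module):
    def __init__(self, hidden_size=10):
        super().__init__()
        self.hidden_layer = nn.Linear(1, hidden_size)  # input layer -> hidden layer
        self.output_layer = nn.Linear(hidden_size, 1)  # hidden layer -> output layer

    def forward(self, x):
        hidden_out = torch.tanh(self.hidden_layer(x))  # tanh activation
        # tanh on the output restricts predictions to (-1, 1), matching the target range
        output_out = torch.tanh(self.output_layer(hidden_out))
        return output_out
```

Core optimizations:

- Adam optimizer to speed up convergence
- Early stopping (training ends once the target loss is reached)
- Two-plot visualization (original data with fitted curve, and the loss curve)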
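The early-stopping idea can be sketched without PyTorch. The example below is an assumption-laden illustration: it fits a single weight with plain gradient descent (not Adam) on made-up data, and the name `fit_with_early_stopping` is hypothetical. Only the stop-when-loss-is-below-target pattern mirrors the platform.

```python
def fit_with_early_stopping(xs, ys, lr=0.1, max_epochs=1000, target_loss=1e-4):
    """Fit y ~ w * x by gradient descent on the MSE.

    Stops as soon as the loss falls below target_loss.
    Returns (w, loss_history).
    """
    w = 0.0
    loss_history = []
    for _ in range(max_epochs):
        errors = [w * x - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / len(xs)
        loss_history.append(loss)
        if loss < target_loss:  # early stop: target reached
            break
        grad = 2 * sum(e * x for e, x in zip(errors, xs)) / len(xs)
        w -= lr * grad
    return w, loss_history


# Made-up data generated from y = 2x
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [2.0 * x for x in xs]

w, loss_history = fit_with_early_stopping(xs, ys)
print(f"w = {w:.4f}, epochs used = {len(loss_history)}")
```

The length of `loss_history` is the number of epochs actually run, which is the same quantity the platform reports as the training iteration count.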

(3) Hopfield network module

The Hopfield network is a classic recurrent neural network used for associative memory and image recovery. The core implementation:

```python
import numpy as np


class HopfieldNetwork:
    def __init__(self, num_neurons):
        self.num_neurons = num_neurons
        self.weights = np.zeros((num_neurons, num_neurons))

    def train(self, patterns):
        # Hebbian learning rule: w_ij = (1/N) * sum_p x_i^p * x_j^p, with w_ii = 0
        self.weights = patterns.T @ patterns / self.num_neurons
        np.fill_diagonal(self.weights, 0)

    def predict(self, pattern, max_iter=100):
        # Asynchronous update: each neuron sees the latest state of the others
        current = pattern.copy()
        for _ in range(max_iter):
            changed = False
            for idx in np.random.permutation(self.num_neurons):
                activation = np.dot(self.weights[idx, :], current)
                new_value = 1 if activation >= 0 else -1
                if new_value != current[idx]:
                    current[idx] = new_value
                    changed = True
            if not changed:  # a full sweep with no change: stable state reached
                break
        return current
```

Highlights: the tree and smiley-face patterns are generated in code, recovery can be tested at different noise levels, and a three-panel comparison shows the recovery result at a glance.
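As a small worked example of associative recall, the sketch below stores one 8-neuron pattern with the Hebbian rule and recovers it after two entries are flipped. It uses plain Python lists and a fixed update order so the result is reproducible. The helper names `hebbian_weights` and `recall` and the pattern itself are illustrative assumptions, not the platform's code.

```python
def hebbian_weights(patterns):
    """Hebbian weights w_ij = (1/N) * sum_p x_i * x_j with a zero diagonal."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                weights[i][j] = sum(p[i] * p[j] for p in patterns) / n
    return weights


def recall(weights, state, max_sweeps=10):
    """Asynchronous recall: update neurons one at a time until nothing changes."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):  # fixed order here, for reproducibility
            activation = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if activation >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


stored = [1, -1, 1, -1, 1, 1, -1, -1]  # hypothetical 8-neuron pattern
noisy = list(stored)
noisy[0] *= -1  # flip two entries to simulate noise
noisy[5] *= -1

recovered = recall(hebbian_weights([stored]), noisy)
print(recovered == stored)
```

With a single stored pattern, every neuron's input is dominated by the six uncorrupted entries, so both flipped entries are pulled back to their stored values.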

3. Deep learning model modules

(1) Model loading and adaptation

The loading logic for AlexNet/VGG/ResNet is wrapped in one function and adapted to the CIFAR-10 classification task:

```python
import os

import torch
import torchvision

# CLASSES and MODEL_PATHS are module-level constants defined in the complete code.


def load_model(model_name):
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    if model_name == "AlexNet":
        model = torchvision.models.alexnet(weights=None)
        in_features = model.classifier[6].in_features
        model.classifier[6] = torch.nn.Linear(in_features, len(CLASSES))
    elif model_name == "ResNet":
        model = torchvision.models.resnet18(weights=None)
        in_features = model.fc.in_features
        model.fc = torch.nn.Sequential(torch.nn.Dropout(0.5),
                                       torch.nn.Linear(in_features, len(CLASSES)))
    elif model_name == "VGG":
        model = torchvision.models.vgg16(weights=None)
        in_features = model.classifier[6].in_features
        model.classifier[6] = torch.nn.Sequential(torch.nn.Dropout(0.5),
                                                  torch.nn.Linear(in_features, len(CLASSES)))
    # Load the trained weights if the file exists
    if os.path.exists(MODEL_PATHS[model_name]):
        model.load_state_dict(torch.load(MODEL_PATHS[model_name], map_location=device))
    model.to(device)
    model.eval()
    return model, device
```

(2) Prediction and visualization

After an image is uploaded, it is preprocessed automatically, and the predicted class is returned together with a top-5 probability bar chart:

```python
import io

import matplotlib.pyplot as plt
import torch
from PIL import Image

# load_model, get_transform, and CLASSES are defined in the complete code.


def predict_image(model_name, image):
    model, device = load_model(model_name)
    transform = get_transform(model_name)
    # Preprocessing
    img = Image.fromarray(image).convert("RGB")
    img_tensor = transform(img).unsqueeze(0).to(device)
    # Prediction
    with torch.no_grad():
        outputs = model(img_tensor)
        probs = torch.softmax(outputs, dim=1)
        conf, pred = torch.max(probs, 1)
    # Visualization
    fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))
    ax1.imshow(image)
    ax1.set_title("输入图像")
    ax1.axis('off')
    # Top-5 probability bar chart
    top_n = 5
    top_probs, top_indices = torch.topk(probs, top_n)
    ax2.barh([CLASSES[i] for i in top_indices.cpu().numpy()[0]],
             top_probs.cpu().numpy()[0])
    ax2.set_xlabel("概率")
    ax2.set_title(f"Top {top_n} 预测结果")
    # Render to an in-memory buffer and return
    buf = io.BytesIO()
    plt.savefig(buf, format='png', bbox_inches='tight', dpi=150)
    plt.close(fig)  # release the figure so repeated calls do not accumulate memory
    buf.seek(0)
    result_img = Image.open(buf)
    return f"预测结果: {CLASSES[pred.item()]} | 置信度: {conf.item():.4f}", result_img
```

4. Chinese text display

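The following settings fix garbled Chinese text in Matplotlib:

```python
import matplotlib.pyplot as plt

plt.rcParams["font.family"] = ["SimHei"]    # Chinese font; SimHei must be installed on the system
plt.rcParams['axes.unicode_minus'] = False  # render the minus sign correctly
```

IV. Platform Usage Guide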

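1. Environment setup

```bash
# 1. Install the dependencies
pip install gradio torch torchvision numpy matplotlib pillow

# 2. Prepare the model files (train them in advance and place them in the same directory as the script)
#    best_alexnet_cifar10.pth
#    best_resnet_cifar10.pth
#    best_vgg16_cifar10_final.pth
```

2. Launching the platform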

```python
if __name__ == "__main__":
    demo = create_interface()
    demo.launch(server_name="localhost", server_port=7860, share=False)
```

After launch, open http://localhost:7860 to reach the GUI.

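3. Using each module

The interface labels are in Chinese. They are quoted below as they appear on screen, with an English gloss.

(1) Perceptron experiment

- Adjust the "学习率" (learning rate, 0.001-0.5) and "最大迭代次数" (maximum epochs, 10-500) sliders;
- Click "运行感知器实验" (run the perceptron experiment);
- Check "实验结果" (experiment result, showing accuracy) on the right and the "感知器分类结果" (perceptron classification result) plot, which includes the decision line and the learned weights.

(2) BP neural network experiment

- Adjust "隐藏层神经元数量" (hidden neurons, 5-30), "学习率" (learning rate, 0.001-0.1), "最大迭代次数" (maximum epochs, 100-5000), and "目标损失" (target loss, 0.001-0.01);
- Click "运行BP神经网络实验" (run the BP neural network experiment);
- Check "原始数据与拟合曲线对比" (original data vs. fitted curve), "训练损失变化曲线" (training loss curve), and "网络参数与训练结果" (network parameters and training result).

(3) Hopfield network experiment

- Adjust the "噪声水平" (noise level, 0-50%) slider;
- Click "运行Hopfield网络实验" (run the Hopfield network experiment);
- Check the three-panel comparison (original / noisy / recovered).

(4) Deep learning classification experiment

- Switch to the tab of the model you want (AlexNet/VGG/ResNet);
- Use the "上传图像" (upload image) area to select a local image;
- Click "运行XXX分类" (run XXX classification, where XXX is the model name);
- Check the classification result and the top-5 probability bar chart.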
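The decision line in the plot comes from solving `w[0]*x1 + w[1]*x2 + b = 0` for `x2`, and the accuracy is the fraction of points whose predicted sign matches the label. A minimal sketch, with hypothetical helper names and made-up weights rather than values produced by the platform:

```python
def decision_line_x2(w, b, x1):
    """Return x2 on the line w[0]*x1 + w[1]*x2 + b = 0 (needs w[1] != 0)."""
    if abs(w[1]) < 1e-6:
        raise ValueError("w[1] is ~0: the decision line is vertical")
    return (-w[0] * x1 - b) / w[1]


def accuracy(w, b, points, labels):
    """Fraction of points whose predicted label (+1 / -1) matches."""
    correct = 0
    for (x1, x2), y in zip(points, labels):
        y_pred = 1 if w[0] * x1 + w[1] * x2 + b >= 0 else -1
        correct += (y_pred == y)
    return correct / len(points)


# Made-up weights: the line x1 + x2 = 4
w, b = (1.0, 1.0), -4.0
x2_at_1 = decision_line_x2(w, b, 1.0)
acc = accuracy(w, b, [(1.0, 1.0), (3.0, 3.0)], [-1, 1])
print(x2_at_1, acc)
```

When `w[1]` is close to zero the line is vertical, which is why the platform's plotting code handles that case separately.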
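The noise level is the fraction of pixels whose sign is flipped before recovery. The sketch below shows that mapping for a flat 12×12 pattern in plain Python. The seeded `random.Random` instance and the all-background pattern are assumptions made for reproducibility.

```python
import random


def add_noise(pattern, noise_level, rng):
    """Flip int(noise_level * N) distinct entries of a flat +1/-1 pattern."""
    noisy = list(pattern)
    flip_count = int(noise_level * len(noisy))
    for idx in rng.sample(range(len(noisy)), flip_count):
        noisy[idx] *= -1
    return noisy


rng = random.Random(0)   # seeded so the example is repeatable
pattern = [-1] * 144     # a 12 x 12 image, flattened
noisy = add_noise(pattern, 0.10, rng)
flipped = sum(1 for a, b in zip(pattern, noisy) if a != b)
print(flipped)
```

At the top of the slider range (50%), half of the pixels are flipped, so the noisy input is as close to the inverted pattern as to the original. Reliable recovery should not be expected there.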
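The top-5 chart is a softmax over the ten class scores followed by a sort. Here is a dependency-free sketch of that step. The logits are made-up numbers, not the output of any trained model, and `softmax` and `top_k` are illustrative helpers rather than the PyTorch functions used on the platform.

```python
import math

CLASSES = ['airplane', 'automobile', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck']


def softmax(logits):
    """Convert raw scores to probabilities (max is subtracted for stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probs, classes, k=5):
    """Return the k (class, probability) pairs with the highest probability."""
    ranked = sorted(zip(classes, probs), key=lambda cp: cp[1], reverse=True)
    return ranked[:k]


# Made-up scores for one image
logits = [0.1, 0.3, 2.0, 4.5, 1.2, 3.9, 0.0, 0.7, -0.5, 0.2]
probs = softmax(logits)
top5 = top_k(probs, CLASSES)
for name, p in top5:
    print(f"{name:<10s} {p:.4f}")
```

The first entry of `top5` corresponds to the predicted class, and its probability is the confidence shown in the result text box.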

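V. Experiment Results

1. Perceptron experiment

2. BP neural network experiment

3. Hopfield network experiment

4. AlexNet classification experiment

5. VGG classification experiment

6. ResNet classification experiment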

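VI. Code Optimizations and Extensions

1. Existing optimizations

- Cross-device support: CPU/GPU is detected automatically, and weights are mapped to the available device when a model is loaded;
- Early stopping: both the BP network and the perceptron stop early to avoid wasted iterations;
- Visualization: all figures are rendered to in-memory buffers, avoiding disk I/O;
- Chinese support: garbled Chinese text in Matplotlib is fixed, which makes the interface friendlier.

2. Possible extensions

- Model training: add online training for the deep learning models so that weight files do not have to be prepared in advance;
- More classic networks: add modules such as RBF and CNN;
- Result export: support exporting results (figures, parameters) as PDF/PNG files;
- Batch prediction: support classifying a batch of images with the deep learning models;
- Multilingual support: add an English interface option;
- Cloud deployment: use Gradio's share=True to obtain a public link for remote experiments.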

VII. Complete Code

```python
import io
import os

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: figures are only rendered to memory

import gradio as gr
import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
from PIL import Image
from torchvision import transforms

# Chinese font for plot labels (SimHei must be installed on the system)
plt.rcParams["font.family"] = ["SimHei"]
plt.rcParams['axes.unicode_minus'] = False  # render the minus sign correctly

# ====================== General configuration ======================
# Paths of the trained model files
MODEL_PATHS = {
    "AlexNet": "best_alexnet_cifar10.pth",
    "ResNet": "best_resnet_cifar10.pth",
    "VGG": "best_vgg16_cifar10_final.pth"
}

# CIFAR-10 classes
CLASSES = ['airplane', 'automobile', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck']
```

```python
# ====================== 1. Deep learning models (AlexNet/VGG/ResNet) ======================
# Input preprocessing
def get_transform(model_name):
    if model_name == "VGG":
        return transforms.Compose([
            transforms.Resize((144, 144)),
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.4914, 0.4822, 0.4465], std=[0.2470, 0.2435, 0.2616])
        ])
    else:
        return transforms.Compose([
            transforms.Resize((224, 224)),
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
        ])


# Build the model and load its weights
def load_model(model_name):
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    if model_name == "AlexNet":
        model = torchvision.models.alexnet(weights=None)
        in_features = model.classifier[6].in_features
        model.classifier[6] = torch.nn.Linear(in_features, len(CLASSES))
    elif model_name == "ResNet":
        model = torchvision.models.resnet18(weights=None)
        in_features = model.fc.in_features
        model.fc = torch.nn.Sequential(torch.nn.Dropout(0.5),
                                       torch.nn.Linear(in_features, len(CLASSES)))
    elif model_name == "VGG":
        model = torchvision.models.vgg16(weights=None)
        in_features = model.classifier[6].in_features
        model.classifier[6] = torch.nn.Sequential(torch.nn.Dropout(0.5),
                                                  torch.nn.Linear(in_features, len(CLASSES)))
    else:
        raise ValueError(f"未知模型: {model_name}")

    # Load the trained weights; fall back to random weights with a warning
    if os.path.exists(MODEL_PATHS[model_name]):
        model.load_state_dict(torch.load(MODEL_PATHS[model_name], map_location=device))
    else:
        print(f"警告: 未找到模型文件 {MODEL_PATHS[model_name]},使用随机权重")
    model.to(device)
    model.eval()
    return model, device


# Classify one uploaded image and draw the result figure
def predict_image(model_name, image):
    if image is None:
        return "请上传一张图片", None

    model, device = load_model(model_name)
    transform = get_transform(model_name)

    # Preprocess the image
    img = Image.fromarray(image).convert("RGB")
    img_tensor = transform(img).unsqueeze(0).to(device)

    # Predict
    with torch.no_grad():
        outputs = model(img_tensor)
        probs = torch.softmax(outputs, dim=1)
        conf, pred = torch.max(probs, 1)

    # Draw the result
    fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))

    # Input image
    ax1.imshow(image)
    ax1.set_title("输入图像")
    ax1.axis('off')

    # Top-5 probability bar chart
    top_n = 5
    top_probs, top_indices = torch.topk(probs, top_n)
    ax2.barh([CLASSES[i] for i in top_indices.cpu().numpy()[0]],
             top_probs.cpu().numpy()[0])
    ax2.set_xlabel("概率")
    ax2.set_title(f"Top {top_n} 预测结果")
    ax2.set_xlim(0, 1)
    plt.tight_layout()

    # Render to an in-memory buffer
    buf = io.BytesIO()
    plt.savefig(buf, format='png', bbox_inches='tight', dpi=150)
    plt.close(fig)  # release the figure so repeated calls do not accumulate memory
    buf.seek(0)
    result_img = Image.open(buf)
    return f"预测结果: {CLASSES[pred.item()]} | 置信度: {conf.item():.4f}", result_img
```

```python
# ====================== 2. Hopfield network ======================
class HopfieldNetwork:
    def __init__(self, num_neurons):
        self.num_neurons = num_neurons
        self.weights = np.zeros((num_neurons, num_neurons))

    def train(self, patterns):
        # Hebbian rule: w_ij = (1/N) * sum_p x_i^p * x_j^p, with a zero diagonal
        self.weights = patterns.T @ patterns / self.num_neurons
        np.fill_diagonal(self.weights, 0)

    def predict(self, pattern, max_iter=100):
        # Asynchronous update: each neuron sees the latest state of the others
        current = pattern.copy()
        for _ in range(max_iter):
            changed = False
            for idx in np.random.permutation(self.num_neurons):
                activation = np.dot(self.weights[idx, :], current)
                new_value = 1 if activation >= 0 else -1
                if new_value != current[idx]:
                    current[idx] = new_value
                    changed = True
            if not changed:  # a full sweep with no change: stable state reached
                break
        return current


def create_patterns(size=12):
    """Create the tree and smiley-face patterns (+1 = foreground, -1 = background)."""
    tree = np.ones((size, size)) * -1
    smile = np.ones((size, size)) * -1

    # Tree pattern
    for i in range(5):  # five rows of crown, widening downward
        tree[i + 2, (5 - i):(7 + i)] = 1  # centered horizontally
    tree[6:10, 5:7] = 1  # trunk

    # Smiley-face pattern
    smile[3:6, 5] = smile[3:6, 8] = 1  # eyes
    smile[7:9, [3, 10]] = 1            # mouth corners
    smile[9, [4, 9]] = 1
    smile[10, 5:9] = 1                 # middle of the mouth
    return tree, smile


def add_noise(pattern, noise_level):
    """Flip the sign of a given fraction of pixels, chosen at random."""
    noisy = pattern.flatten()  # flatten() returns a copy
    flip_count = int(noise_level * noisy.size)
    indices = np.random.choice(noisy.size, flip_count, replace=False)
    noisy[indices] *= -1
    return noisy.reshape(pattern.shape)


def hopfield_demo(noise_level):
    """Hopfield demo: original, noisy, and recovered images side by side."""
    img_size = 12
    tree, smile = create_patterns(img_size)

    # Train the network on both patterns
    patterns = np.array([tree.flatten(), smile.flatten()])
    net = HopfieldNetwork(img_size * img_size)
    net.train(patterns)

    # Add noise to each pattern and recover it
    noisy_tree = add_noise(tree, noise_level)
    recovered_tree = net.predict(noisy_tree.flatten()).reshape(img_size, img_size)
    noisy_smile = add_noise(smile, noise_level)
    recovered_smile = net.predict(noisy_smile.flatten()).reshape(img_size, img_size)

    # Visualize: one row per pattern, columns are original / noisy / recovered
    fig, axes = plt.subplots(2, 3, figsize=(10, 12))
    fig.suptitle(f'Hopfield网络图像恢复实验(噪声水平:{noise_level:.0%})', fontsize=16, fontweight='bold')
    rows = [
        ("树", tree, noisy_tree, recovered_tree),
        ("笑脸", smile, noisy_smile, recovered_smile),
    ]
    for r, (name, original, noisy, recovered) in enumerate(rows):
        panels = [
            (f'原始{name}图案', original),
            (f'带噪声的{name}图案', noisy),
            (f'恢复的{name}图案', recovered),
        ]
        for c, (title, img) in enumerate(panels):
            axes[r, c].imshow(img, cmap='binary')
            axes[r, c].set_title(title, fontsize=12)
            axes[r, c].axis('off')
    plt.tight_layout()

    # Render to an in-memory buffer
    buf = io.BytesIO()
    plt.savefig(buf, format='png', bbox_inches='tight', dpi=150)
    plt.close(fig)
    buf.seek(0)
    result_img = Image.open(buf)
    return result_img
```

```python
# ====================== 3. Perceptron ======================
class Perceptron:
    def __init__(self, learning_rate=0.01, max_epochs=100):
        self.lr = learning_rate
        self.max_epochs = max_epochs
        self.w = None
        self.b = 0.0
        self.final_w = None
        self.final_b = 0.0

    def activate(self, z):
        return 1 if z >= 0 else -1

    def predict(self, x):
        return self.activate(np.dot(x, self.w) + self.b)

    def train(self, X, y):
        y_sym = np.where(y == 0, -1, 1)  # map labels {0, 1} to {-1, +1}
        n_samples, n_features = X.shape
        self.w = np.zeros(n_features)
        self.b = 0.0
        epoch_params = []

        for epoch in range(self.max_epochs):
            updated = False
            for i in range(n_samples):
                y_pred = self.predict(X[i])
                if y_pred != y_sym[i]:
                    self.w += self.lr * (y_sym[i] - y_pred) * X[i]
                    self.b += self.lr * (y_sym[i] - y_pred)
                    updated = True
            # float() works whether b is a Python number or a NumPy scalar
            epoch_params.append((self.w.copy(), float(self.b)))
            if not updated:  # a full pass with no update: stop early
                break

        self.final_w, self.final_b = self.w.copy(), float(self.b)
        return epoch_params


def generate_data(n_samples=100, seed=42):
    np.random.seed(seed)
    # Two separable Gaussian clusters
    class0 = np.random.randn(n_samples, 2) * 0.5 + np.array([1, 1])
    class1 = np.random.randn(n_samples, 2) * 0.5 + np.array([3, 3])
    X = np.vstack((class0, class1))
    y = np.hstack((np.zeros(n_samples), np.ones(n_samples)))
    return X, y


def perceptron_demo(learning_rate, max_epochs):
    X, y = generate_data()
    perceptron = Perceptron(learning_rate=learning_rate, max_epochs=int(max_epochs))
    epoch_params = perceptron.train(X, y)

    # Draw the result
    fig, ax = plt.subplots(figsize=(8, 6))

    # Sample points
    ax.scatter(X[y == 0][:, 0], X[y == 0][:, 1], c='red', label='类别0', edgecolors='k')
    ax.scatter(X[y == 1][:, 0], X[y == 1][:, 1], c='blue', label='类别1', edgecolors='k')

    # Final decision line: w0*x1 + w1*x2 + b = 0
    x1 = np.linspace(X[:, 0].min() - 1, X[:, 0].max() + 1, 100)
    if abs(perceptron.final_w[1]) > 1e-6:
        x2 = (-perceptron.final_w[0] * x1 - perceptron.final_b) / perceptron.final_w[1]
    else:
        # Vertical line: w1 is ~0, so x1 is constant
        x1 = np.full(100, -perceptron.final_b / (perceptron.final_w[0] + 1e-6))
        x2 = np.linspace(X[:, 1].min() - 1, X[:, 1].max() + 1, 100)
    ax.plot(x1, x2, 'k-', linewidth=2, label='决策线')

    ax.set_xlabel('特征1')
    ax.set_ylabel('特征2')
    ax.set_title(f'感知器分类结果 (学习率: {learning_rate}, 迭代次数: {len(epoch_params)})')
    ax.legend(title=f'权重 w: [{perceptron.final_w[0]:.4f}, {perceptron.final_w[1]:.4f}], b={perceptron.final_b:.4f}')
    ax.grid(alpha=0.3)

    # Render to an in-memory buffer
    buf = io.BytesIO()
    plt.savefig(buf, format='png', bbox_inches='tight', dpi=150)
    plt.close(fig)
    buf.seek(0)
    result_img = Image.open(buf)

    # Accuracy on the training data
    y_sym = np.where(y == 0, -1, 1)
    y_pred = np.apply_along_axis(perceptron.predict, 1, X)
    accuracy = np.mean(y_pred == y_sym)
    return f"分类准确率: {accuracy:.2%}", result_img
```

```python
# ====================== 4. BP neural network ======================
class BPNetwork(nn.Module):
    def __init__(self, hidden_size=10):
        super().__init__()
        self.hidden_layer = nn.Linear(in_features=1, out_features=hidden_size)  # 1 -> hidden_size
        self.output_layer = nn.Linear(in_features=hidden_size, out_features=1)  # hidden_size -> 1

    def forward(self, x):
        hidden_out = torch.tanh(self.hidden_layer(x))           # hidden layer, tanh (tansig)
        output_out = torch.tanh(self.output_layer(hidden_out))  # output layer, tanh (tansig)
        return output_out


def train_bp_network(hidden_size, learning_rate, max_epochs, target_loss):
    # Slider values may arrive as floats; layer sizes and loop bounds must be ints
    hidden_size, max_epochs = int(hidden_size), int(max_epochs)

    # Experiment data
    X = [
        -1.0, -0.9, -0.8, -0.7, -0.6, -0.5, -0.4,
        -0.3, -0.2, -0.1, 0.0, 0.1, 0.2, 0.3,
        0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0
    ]
    D = [
        -0.9602, -0.5770, -0.0729, 0.3771, 0.6405, 0.6600, 0.4609,
        0.1336, -0.2013, -0.4344, -0.5000, -0.3930, -0.1647, -0.0988,
        0.3072, 0.3960, 0.3449, 0.1816, -0.3120, -0.2189, -0.3201
    ]

    # Convert to PyTorch tensors
    X_tensor = torch.tensor(X, dtype=torch.float32).view(-1, 1)  # 21 x 1
    D_tensor = torch.tensor(D, dtype=torch.float32).view(-1, 1)  # 21 x 1

    # Network, loss, and optimizer
    net = BPNetwork(hidden_size=hidden_size)
    criterion = nn.MSELoss()  # mean squared error
    optimizer = optim.Adam(net.parameters(), lr=learning_rate)

    # Training loop
    loss_history = []
    for epoch in range(max_epochs):
        O_tensor = net(X_tensor)              # forward pass
        loss = criterion(O_tensor, D_tensor)  # compute the loss
        loss_history.append(loss.item())

        # Backpropagation and parameter update
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Early stopping
        if loss.item() < target_loss:
            break

    # Predict with the trained network
    with torch.no_grad():
        O = net(X_tensor).numpy()

    # Draw the data and the fit
    fig1, axes = plt.subplots(1, 2, figsize=(14, 5))

    # Left: original data points
    axes[0].scatter(X, D, c='k', marker='*', label='原始数据')
    axes[0].set_xlabel('X')
    axes[0].set_ylabel('D')
    axes[0].set_title('训练后原始数据图')
    axes[0].set_xlim(-1.1, 1.1)
    axes[0].set_ylim(-1.1, 1.1)
    axes[0].grid(True)
    axes[0].legend()

    # Right: fitted curve over the data
    axes[1].scatter(X, D, c='k', marker='*', label='原始数据')
    axes[1].plot(X, O, 'r-', linewidth=2, label='拟合曲线')
    axes[1].set_xlabel('X')
    axes[1].set_ylabel('输出')
    axes[1].set_title('拟合函数图')
    axes[1].set_xlim(-1.1, 1.1)
    axes[1].set_ylim(-1.1, 1.1)
    axes[1].grid(True)
    axes[1].legend()
    plt.tight_layout()

    # Render to an in-memory buffer
    buf1 = io.BytesIO()
    fig1.savefig(buf1, format='png', bbox_inches='tight', dpi=150)
    plt.close(fig1)
    buf1.seek(0)
    result_img1 = Image.open(buf1)

    # Loss curve
    fig2, ax = plt.subplots(figsize=(8, 4))
    ax.plot(range(len(loss_history)), loss_history, 'b-')
    ax.set_xlabel('迭代次数')
    ax.set_ylabel('均方误差(MSE)')
    ax.set_title('训练损失变化')
    ax.grid(True)

    # Render to an in-memory buffer
    buf2 = io.BytesIO()
    fig2.savefig(buf2, format='png', bbox_inches='tight', dpi=150)
    plt.close(fig2)
    buf2.seek(0)
    result_img2 = Image.open(buf2)

    # Collect the network parameters
    hidden_weights = net.hidden_layer.weight.detach().numpy()
    hidden_bias = net.hidden_layer.bias.detach().numpy()
    output_weights = net.output_layer.weight.detach().numpy()
    output_bias = net.output_layer.bias.detach().numpy()

    params_text = "===== 网络参数 =====\n"
    params_text += f"输入层到隐含层权重:\n{hidden_weights.T}\n\n"
    params_text += f"隐含层阈值:\n{hidden_bias}\n\n"
    params_text += f"隐含层到输出层权重:\n{output_weights}\n\n"
    params_text += f"输出层阈值:\n{output_bias}\n\n"
    params_text += f"最终损失:{loss_history[-1]:.6f}\n"
    params_text += f"训练迭代次数:{len(loss_history)}"
    return result_img1, result_img2, params_text
```

```python
# ====================== Gradio interface ======================
def make_predictor(model_name):
    """Return a single-argument callback bound to one model name."""
    return lambda img: predict_image(model_name, img)


def create_interface():
    with gr.Blocks(title="神经网络算法实验平台") as demo:
        gr.Markdown("# 神经网络算法实验平台")

        with gr.Tabs():
            # Perceptron experiment
            with gr.Tab("感知器算法"):
                gr.Markdown("### 感知器二分类实验")
                with gr.Row():
                    with gr.Column(scale=1):
                        lr = gr.Slider(minimum=0.001, maximum=0.5, value=0.01, step=0.001, label="学习率")
                        epochs = gr.Slider(minimum=10, maximum=500, value=100, step=10, label="最大迭代次数")
                        perceptron_btn = gr.Button("运行感知器实验")
                    with gr.Column(scale=2):
                        perceptron_text = gr.Textbox(label="实验结果", lines=3)
                        perceptron_plot = gr.Image(label="感知器分类结果", height=400)
                perceptron_btn.click(
                    fn=perceptron_demo,
                    inputs=[lr, epochs],
                    outputs=[perceptron_text, perceptron_plot]
                )

            # BP neural network
            with gr.Tab("BP神经网络"):
                gr.Markdown("### BP神经网络非线性函数逼近拟合")
                with gr.Row():
                    with gr.Column(scale=1):
                        hidden_size = gr.Slider(minimum=5, maximum=30, value=10, step=1, label="隐藏层神经元数量")
                        bp_lr = gr.Slider(minimum=0.001, maximum=0.1, value=0.01, step=0.001, label="学习率")
                        bp_max_epochs = gr.Slider(minimum=100, maximum=5000, value=1000, step=100, label="最大迭代次数")
                        target_loss = gr.Slider(minimum=0.001, maximum=0.01, value=0.005, step=0.001, label="目标损失")
                        bp_btn = gr.Button("运行BP神经网络实验")
                    with gr.Column(scale=2):
                        bp_plot1 = gr.Image(label="原始数据与拟合曲线对比", height=300)
                        bp_plot2 = gr.Image(label="训练损失变化曲线", height=300)
                        bp_params = gr.Textbox(label="网络参数与训练结果", lines=10)
                bp_btn.click(
                    fn=train_bp_network,
                    inputs=[hidden_size, bp_lr, bp_max_epochs, target_loss],
                    outputs=[bp_plot1, bp_plot2, bp_params]
                )

            # Hopfield network experiment
            with gr.Tab("Hopfield网络"):
                gr.Markdown("### Hopfield网络图像恢复实验")
                with gr.Row():
                    with gr.Column(scale=1):
                        noise_level = gr.Slider(minimum=0.0, maximum=0.5, value=0.1, step=0.05, label="噪声水平(0-50%)")
                        hopfield_btn = gr.Button("运行Hopfield网络实验")
                    with gr.Column(scale=2):
                        hopfield_plot = gr.Image(label="Hopfield网络三图对比结果", height=600)
                hopfield_btn.click(
                    fn=hopfield_demo,
                    inputs=[noise_level],
                    outputs=[hopfield_plot]
                )

            # Deep learning experiments: AlexNet, VGG, and ResNet share one tab layout
            for name in ("AlexNet", "VGG", "ResNet"):
                with gr.Tab(name):
                    gr.Markdown(f"### {name}图像分类实验 (CIFAR-10)")
                    with gr.Row():
                        with gr.Column(scale=1):
                            img_input = gr.Image(type="numpy", label="上传图像", height=200)
                            run_btn = gr.Button(f"运行{name}分类")
                        with gr.Column(scale=2):
                            result_text = gr.Textbox(label="分类结果", lines=2)
                            result_plot = gr.Image(label="分类概率图", height=300)
                    run_btn.click(
                        fn=make_predictor(name),
                        inputs=[img_input],
                        outputs=[result_text, result_plot]
                    )

    return demo


# Launch the application
if __name__ == "__main__":
    demo = create_interface()
    demo.launch(server_name="localhost", server_port=7860, share=False)
```