Ubuntu + DataSophon 1.2.1 Custom Development, Part 2: Fixing the Three Monitoring Components That Fail to Start After Installation (Missing common.sh)

Background

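After successfully installing the cluster last time, I moved on to the three monitoring components: AlertManager, Prometheus and Grafana. I was already braced for the installation to fail.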

Problem

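Sure enough, after installation the components failed to start with an error saying common.sh could not be found. Why? The archive clearly contained it, and I couldn't see anything wrong with the tar command. When I asked an AI, it said a parameter was missing: `--strip-components=1`.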

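Looking into it afterwards, I learned what this option does. It removes the first path component (the top-level directory) from every entry during extraction. For example, an entry such as `top-dir/control.sh` is written as `control.sh` directly under the `-C` target directory.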
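To make the path rewrite concrete, here is a tiny model of it in plain Java, so it runs without tar. This is a simplified sketch, not GNU tar's implementation. The class name and sample paths are hypothetical, and corner cases such as absolute paths are ignored. Entries with nothing left after stripping, such as the top-level directory entry itself, are treated as skipped.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

public class StripComponentsDemo {

    // Simplified model of --strip-components=N: drop the first N path components.
    // If nothing is left (e.g. the "top-dir/" entry itself), the entry is skipped.
    static Optional<String> strip(String member, int n) {
        String[] parts = member.split("/"); // trailing empty strings are discarded
        if (parts.length <= n) {
            return Optional.empty();
        }
        return Optional.of(String.join("/", Arrays.copyOfRange(parts, n, parts.length)));
    }

    public static void main(String[] args) {
        // Hypothetical archive members, as `tar -tzf` might list them
        List<String> members = List.of("top-dir/", "top-dir/control.sh", "top-dir/bin/common.sh");
        for (String m : members) {
            System.out.println(m + " -> " + strip(m, 1).orElse("(skipped)"));
        }
    }
}
```

Without the option, the same members would land under `<target>/top-dir/...`. Any script that expects its helpers next to it would then look in the wrong place.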

Solution

Following the AI's hint, I modified InstallServiceHandler.java, mainly the decompressTarGz and decompressWithStripComponents methods.
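One caveat applies to the modified decompressTarGz: it passes `--strip-components=1` for every package. That is only safe when the archive wraps all of its files in a single top-level directory. Otherwise, entries at the archive root would be skipped, or several top-level trees would be merged. The sketch below checks that precondition against the member list printed by `tar -tzf`, the same command getTarFileCount runs. It is a hedged sketch: the class and method names are hypothetical, not part of DataSophon, and members are compared exactly as listed, with no special handling of `./` prefixes.

```java
import java.util.List;
import java.util.Optional;

public class TopLevelDirCheck {

    // Returns the single top-level directory if every member lives under it,
    // otherwise Optional.empty() (stripping one component would then be unsafe).
    static Optional<String> singleTopLevelDir(List<String> members) {
        String top = null;
        for (String raw : members) {
            String m = raw.trim();
            if (m.isEmpty()) {
                continue; // ignore blank lines in the tar output
            }
            int slash = m.indexOf('/');
            if (slash < 0) {
                return Optional.empty(); // a plain entry at the archive root
            }
            String first = m.substring(0, slash);
            if (top == null) {
                top = first;
            } else if (!top.equals(first)) {
                return Optional.empty(); // more than one top-level entry
            }
        }
        return Optional.ofNullable(top);
    }

    public static void main(String[] args) {
        System.out.println(singleTopLevelDir(List.of("pkg/", "pkg/control.sh", "pkg/bin/common.sh")));
        System.out.println(singleTopLevelDir(List.of("control.sh", "bin/common.sh")));
    }
}
```

A caller could pass `--strip-components=1` only when this returns a value, and extract as-is otherwise.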

```java
/*
 * Licensed to the Apache Software Foundation (ASF) under one or more
 * contributor license agreements. See the NOTICE file distributed with
 * this work for additional information regarding copyright ownership.
 * The ASF licenses this file to You under the Apache License, Version 2.0
 * (the "License"); you may not use this file except in compliance with
 * the License. You may obtain a copy of the License at
 *
 *    http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

package com.datasophon.worker.handler;

import cn.hutool.core.io.FileUtil;
import cn.hutool.core.io.StreamProgress;
import cn.hutool.core.lang.Console;
import cn.hutool.http.HttpUtil;
import com.datasophon.common.Constants;
import com.datasophon.common.cache.CacheUtils;
import com.datasophon.common.command.InstallServiceRoleCommand;
import com.datasophon.common.model.RunAs;
import com.datasophon.common.utils.ExecResult;
import com.datasophon.common.utils.FileUtils;
import com.datasophon.common.utils.PropertyUtils;
import com.datasophon.common.utils.ShellUtils;
import com.datasophon.worker.utils.TaskConstants;

import lombok.Data;

import org.apache.commons.lang.StringUtils;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.io.File;
import java.util.ArrayList;
import java.util.Objects;

@Data
public class InstallServiceHandler {

    private static final String HADOOP = "hadoop";

    private String serviceName;

    private String serviceRoleName;

    private Logger logger;

    public InstallServiceHandler(String serviceName, String serviceRoleName) {
        this.serviceName = serviceName;
        this.serviceRoleName = serviceRoleName;
        String loggerName = String.format("%s-%s-%s", TaskConstants.TASK_LOG_LOGGER_NAME, serviceName, serviceRoleName);
        logger = LoggerFactory.getLogger(loggerName);
    }

    public ExecResult install(InstallServiceRoleCommand command) {
        ExecResult execResult = new ExecResult();
        try {
            String destDir = Constants.INSTALL_PATH + Constants.SLASH + "DDP/packages" + Constants.SLASH;
            String packageName = command.getPackageName();
            String packagePath = destDir + packageName;
            // Non-master hosts download the package if it is missing locally or its MD5 differs
            Boolean needDownLoad = !Objects.equals(PropertyUtils.getString(Constants.MASTER_HOST), CacheUtils.get(Constants.HOSTNAME))
                    && isNeedDownloadPkg(packagePath, command.getPackageMd5());
            if (Boolean.TRUE.equals(needDownLoad)) {
                downloadPkg(packageName, packagePath);
            }
            boolean result = decompressPkg(packageName, command.getDecompressPackageName(), command.getRunAs(), packagePath);
            execResult.setExecResult(result);
        } catch (Exception e) {
            execResult.setExecOut(e.getMessage());
            e.printStackTrace();
        }
        return execResult;
    }

    private Boolean isNeedDownloadPkg(String packagePath, String packageMd5) {
        Boolean needDownLoad = true;
        logger.info("Remote package md5 is {}", packageMd5);
        if (FileUtil.exist(packagePath)) {
            // check md5
            String md5 = FileUtils.md5(new File(packagePath));
            logger.info("Local md5 is {}", md5);
            if (StringUtils.isNotBlank(md5) && packageMd5.trim().equals(md5.trim())) {
                needDownLoad = false;
            }
        }
        return needDownLoad;
    }

    private void downloadPkg(String packageName, String packagePath) {
        String masterHost = PropertyUtils.getString(Constants.MASTER_HOST);
        String masterPort = PropertyUtils.getString(Constants.MASTER_WEB_PORT);
        String downloadUrl = "http://" + masterHost + ":" + masterPort
                + "/ddh/service/install/downloadPackage?packageName=" + packageName;
        logger.info("download url is {}", downloadUrl);
        HttpUtil.downloadFile(downloadUrl, FileUtil.file(packagePath), new StreamProgress() {

            @Override
            public void start() {
                Console.log("start to install...");
            }

            @Override
            public void progress(long progressSize, long l1) {
                Console.log("installed:{}", FileUtil.readableFileSize(progressSize));
            }

            @Override
            public void finish() {
                Console.log("install success!");
            }
        });
        logger.info("download package {} success", packageName);
    }

    private boolean decompressPkg(String packageName, String decompressPackageName, RunAs runAs, String packagePath) {
        String installPath = Constants.INSTALL_PATH;
        String targetDir = installPath + Constants.SLASH + decompressPackageName;
        logger.info("Target directory for decompression: {}", targetDir);

        // Make sure the parent directory exists
        File parentDir = new File(installPath);
        if (!parentDir.exists()) {
            parentDir.mkdirs();
        }

        // Extract directly into the target directory
        Boolean decompressResult = decompressTarGz(packagePath, targetDir);
        if (Boolean.TRUE.equals(decompressResult)) {
            // Verify the extraction result
            if (FileUtil.exist(targetDir)) {
                logger.info("Verifying installation in: {}", targetDir);
                File[] files = new File(targetDir).listFiles();
                boolean hasControlSh = false;
                boolean hasBinary = false;
                if (files != null) {
                    for (File file : files) {
                        if (file.getName().equals("control.sh")) {
                            hasControlSh = true;
                        }
                        if (file.getName().equals(decompressPackageName.split("-")[0])) {
                            hasBinary = true;
                        }
                    }
                }
                logger.info("control.sh exists: {}, binary exists: {}", hasControlSh, hasBinary);

                if (Objects.nonNull(runAs)) {
                    ShellUtils.exceShell(" chown -R " + runAs.getUser() + ":" + runAs.getGroup() + " " + targetDir);
                }
                ShellUtils.exceShell(" chmod -R 775 " + targetDir);
                // Hadoop needs extra ownership/permission fixes (container-executor, userlogs);
                // without this call HADOOP and changeHadoopInstallPathPerm are never used
                if (decompressPackageName.contains(HADOOP)) {
                    changeHadoopInstallPathPerm(decompressPackageName);
                }
                return true;
            }
        }
        return false;
    }

    public Boolean decompressTarGz(String sourceTarGzFile, String targetDir) {
        logger.info("Start to use tar -zxvf to decompress {} to {}", sourceTarGzFile, targetDir);

        // New: create the target directory if it does not exist (enhanced version)
        File targetDirFile = new File(targetDir);
        if (!targetDirFile.exists()) {
            logger.info("Target directory does not exist, creating: {}", targetDir);
            // Try to create the directory
            boolean created = targetDirFile.mkdirs();
            if (!created) {
                logger.error("Failed to create target directory: {}", targetDir);
                // Log extra diagnostics
                File parentDir = targetDirFile.getParentFile();
                if (parentDir != null) {
                    logger.error("Parent directory exists: {}, writable: {}",
                            parentDir.exists(), parentDir.canWrite());
                }
                // Check for a permission problem
                logger.error("Current user: {}", System.getProperty("user.name"));
                return false;
            }
            logger.info("Successfully created target directory: {}", targetDir);
        }

        // 1. First, count the entries in the tarball
        int tarFileCount = getTarFileCount(sourceTarGzFile);
        logger.info("Tar file contains {} files/directories", tarFileCount);

        // 2. Record how many entries the target directory already has
        int initialFileCount = countEntries(targetDirFile);

        // 3. Extract, stripping the archive's top-level directory (--strip-components=1)
        ArrayList<String> command = new ArrayList<>();
        command.add("tar");
        command.add("-zxvf");
        command.add(sourceTarGzFile);
        command.add("-C");
        command.add(targetDir);
        command.add("--strip-components=1");
        ExecResult execResult = ShellUtils.execWithStatus(targetDir, command, 120, logger);

        // 4. Verify the result
        if (execResult.getExecResult()) {
            // Wait briefly before inspecting the directory
            try {
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // restore the interrupt flag instead of swallowing it
            }

            // Count entries in the target directory
            int finalFileCount = countEntries(targetDirFile);
            int extractedFileCount = finalFileCount - initialFileCount;
            logger.info("Initial files: {}, Final files: {}, Extracted files: {}",
                    initialFileCount, finalFileCount, extractedFileCount);

            // Judge success by the directory being non-empty, not by the delta:
            // on a reinstall the files already exist, so the delta can legitimately be 0
            if (finalFileCount > 0) {
                logger.info("Decompression successful, {} entries in {}", finalFileCount, targetDir);

                // Log the first few entries for verification
                File[] files = targetDirFile.listFiles();
                if (files != null) {
                    int limit = Math.min(files.length, 5);
                    for (int i = 0; i < limit; i++) {
                        logger.debug("Extracted file: {}", files[i].getName());
                    }
                }

                // Key-file check
                if (sourceTarGzFile.contains("alertmanager")) {
                    File controlSh = new File(targetDir + Constants.SLASH + "control.sh");
                    File binary = new File(targetDir + Constants.SLASH + "alertmanager");
                    if (!controlSh.exists() || !binary.exists()) {
                        logger.error("Missing key files after decompression: control.sh={}, alertmanager={}",
                                controlSh.exists(), binary.exists());
                        return false;
                    }
                } else if (sourceTarGzFile.contains("prometheus")) {
                    File controlSh = new File(targetDir + Constants.SLASH + "control.sh");
                    File binary = new File(targetDir + Constants.SLASH + "prometheus");
                    if (!controlSh.exists() || !binary.exists()) {
                        logger.error("Missing key files after decompression: control.sh={}, prometheus={}",
                                controlSh.exists(), binary.exists());
                        return false;
                    }
                }
                return true;
            } else {
                logger.error("No files found in {} after decompression! Something went wrong.", targetDir);
                return false;
            }
        }

        logger.error("Decompression command failed: {}", execResult.getExecOut());
        return false;
    }

    /**
     * Number of direct entries in dir; 0 if it does not exist or cannot be listed.
     */
    private static int countEntries(File dir) {
        File[] entries = dir.listFiles();
        return entries == null ? 0 : entries.length;
    }

    /**
     * Get the number of entries in the tarball (-1 if unknown).
     */
    private int getTarFileCount(String tarFile) {
        try {
            ArrayList<String> command = new ArrayList<>();
            command.add("tar");
            command.add("-tzf");
            command.add(tarFile);
            ExecResult execResult = ShellUtils.execWithStatus(".", command, 30, logger);
            if (execResult.getExecResult() && execResult.getExecOut() != null) {
                // Split by line and count non-empty lines
                String[] lines = execResult.getExecOut().split("\n");
                int count = 0;
                for (String line : lines) {
                    if (line != null && !line.trim().isEmpty()) {
                        count++;
                    }
                }
                return count;
            }
        } catch (Exception e) {
            logger.warn("Failed to count tar files: {}", e.getMessage());
        }
        return -1; // unknown
    }

    // Not called anywhere in this class: decompressTarGz already passes --strip-components=1 itself
    private Boolean decompressWithStripComponents(String sourceTarGzFile, String targetDir) {
        logger.info("Retrying decompression with --strip-components=1");
        ArrayList<String> command = new ArrayList<>();
        command.add("tar");
        command.add("-zxvf");
        command.add(sourceTarGzFile);
        command.add("-C");
        command.add(targetDir);
        command.add("--strip-components=1");
        ExecResult execResult = ShellUtils.execWithStatus(targetDir, command, 120, logger);
        if (execResult.getExecResult()) {
            // Verify the extracted files
            String packageName = extractPackageName(sourceTarGzFile);
            File targetDirFile = new File(targetDir);
            File[] files = targetDirFile.listFiles((dir, name) -> name.contains(packageName.split("-")[0]));
            if (files != null && files.length > 0) {
                logger.info("Decompression with --strip-components=1 successful");
                return true;
            }
        }
        return false;
    }

    private String extractPackageName(String tarFile) {
        String fileName = new File(tarFile).getName();
        // Strip the .tar.gz or .tgz suffix
        if (fileName.endsWith(".tar.gz")) {
            return fileName.substring(0, fileName.length() - 7);
        } else if (fileName.endsWith(".tgz")) {
            return fileName.substring(0, fileName.length() - 4);
        }
        return fileName;
    }

    private void changeHadoopInstallPathPerm(String decompressPackageName) {
        ShellUtils.exceShell(
                " chown -R root:hadoop " + Constants.INSTALL_PATH + Constants.SLASH + decompressPackageName);
        ShellUtils.exceShell(" chmod 755 " + Constants.INSTALL_PATH + Constants.SLASH + decompressPackageName);
        ShellUtils.exceShell(
                " chmod -R 755 " + Constants.INSTALL_PATH + Constants.SLASH + decompressPackageName + "/etc");
        ShellUtils.exceShell(" chmod 6050 " + Constants.INSTALL_PATH + Constants.SLASH + decompressPackageName
                + "/bin/container-executor");
        ShellUtils.exceShell(" chmod 400 " + Constants.INSTALL_PATH + Constants.SLASH + decompressPackageName
                + "/etc/hadoop/container-executor.cfg");
        ShellUtils.exceShell(" chown -R yarn:hadoop " + Constants.INSTALL_PATH + Constants.SLASH + decompressPackageName
                + "/logs/userlogs");
        ShellUtils.exceShell(
                " chmod 775 " + Constants.INSTALL_PATH + Constants.SLASH + decompressPackageName + "/logs/userlogs");
    }
}
```

Finally

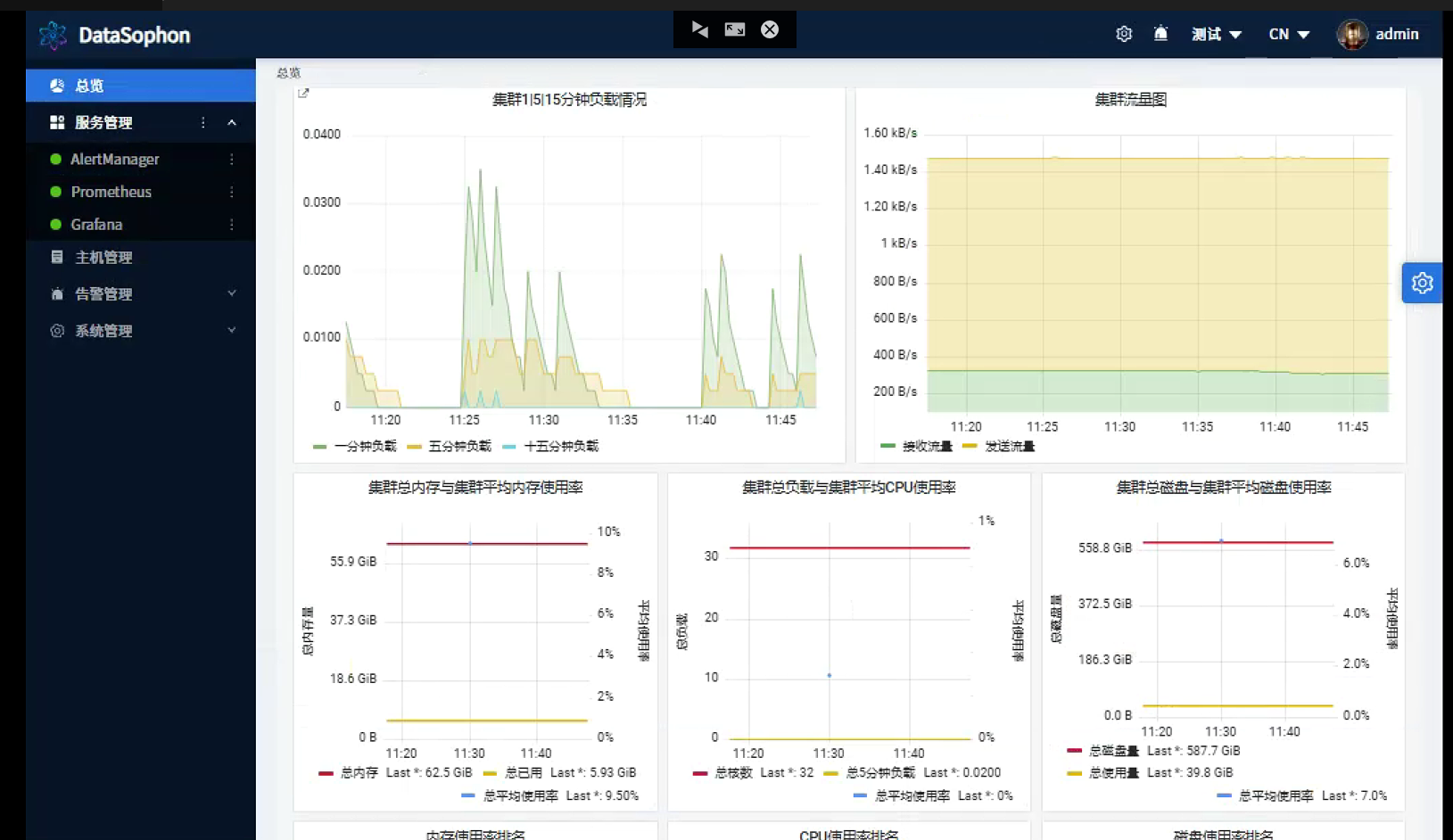

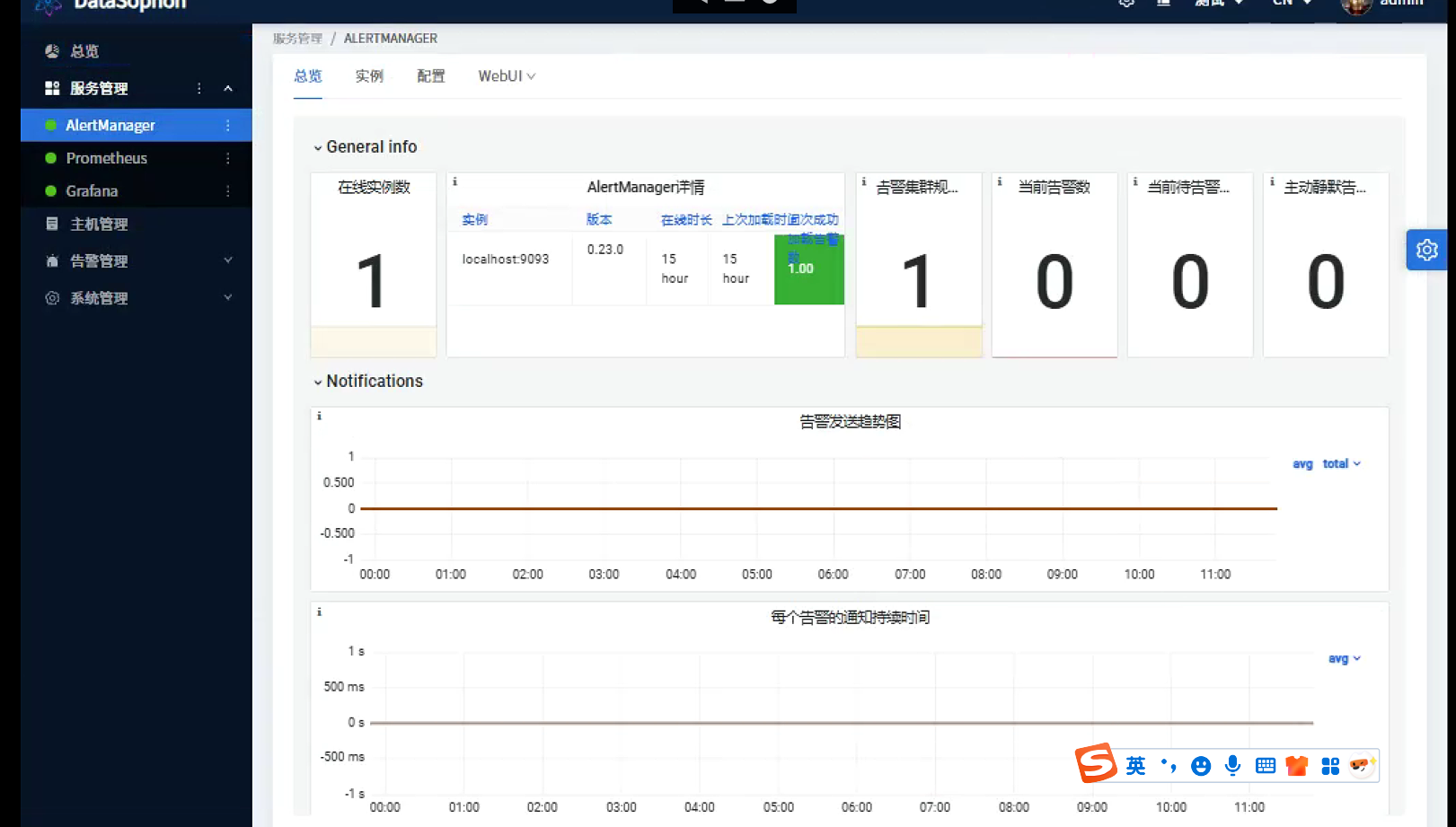

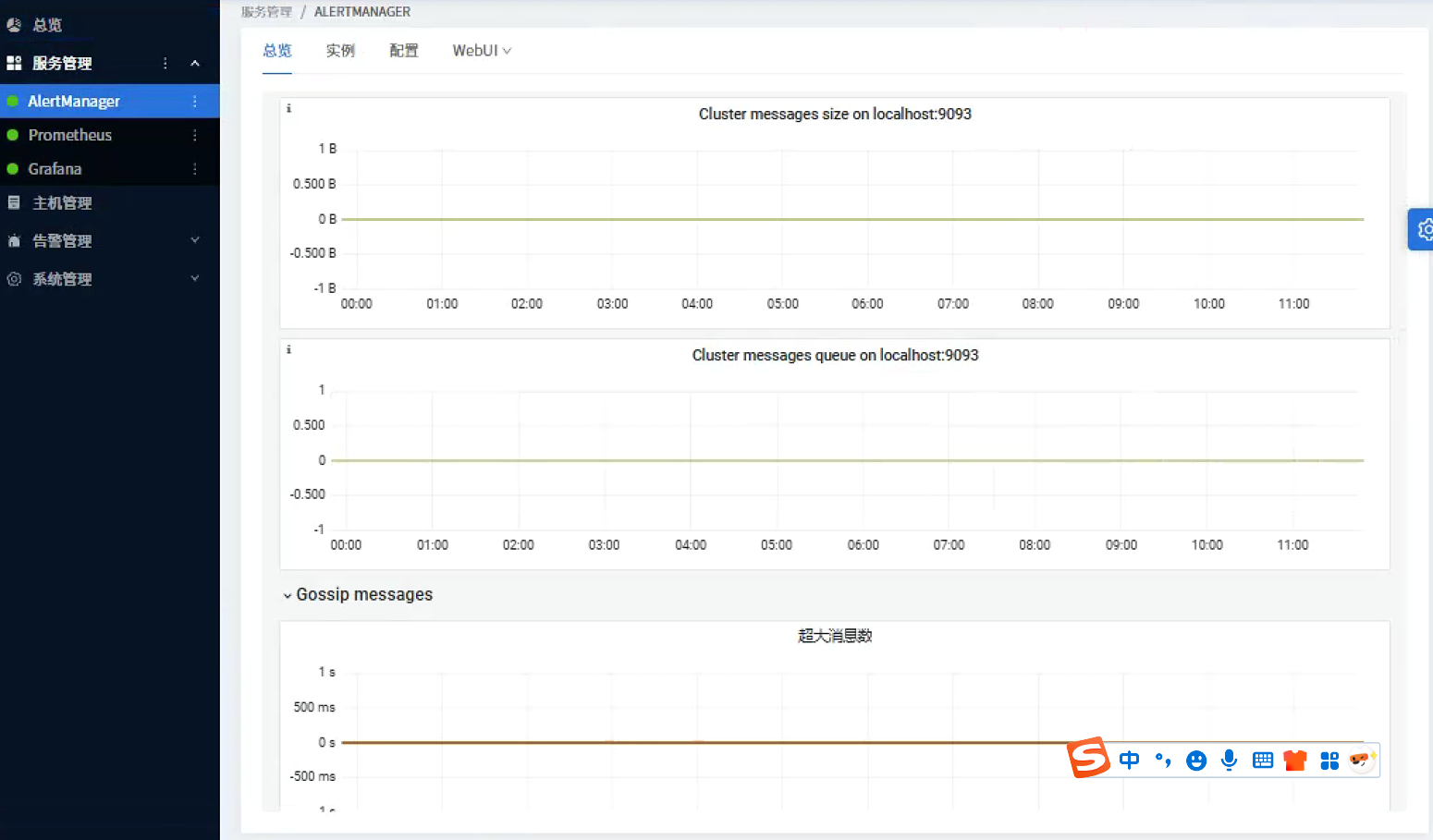

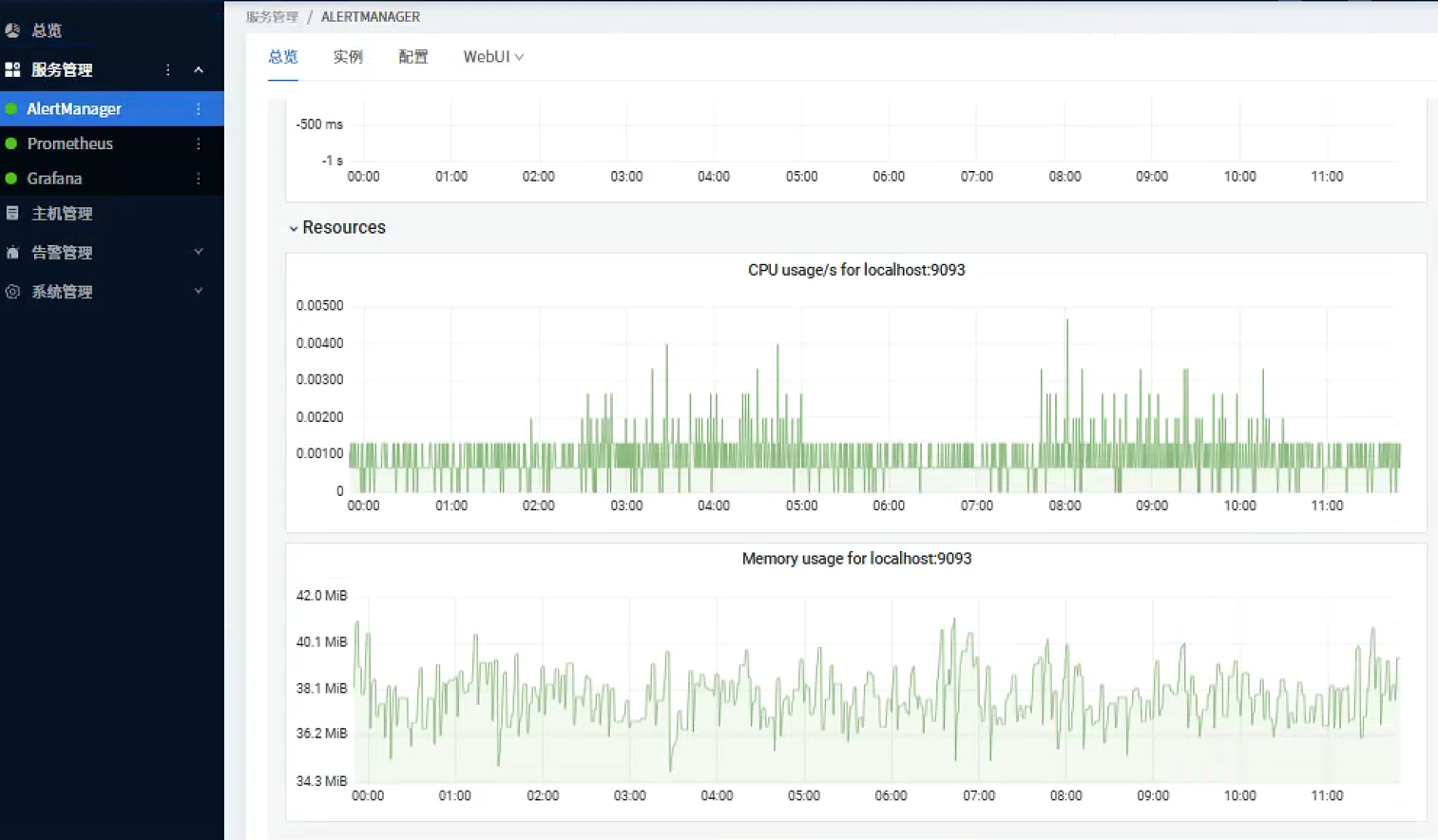

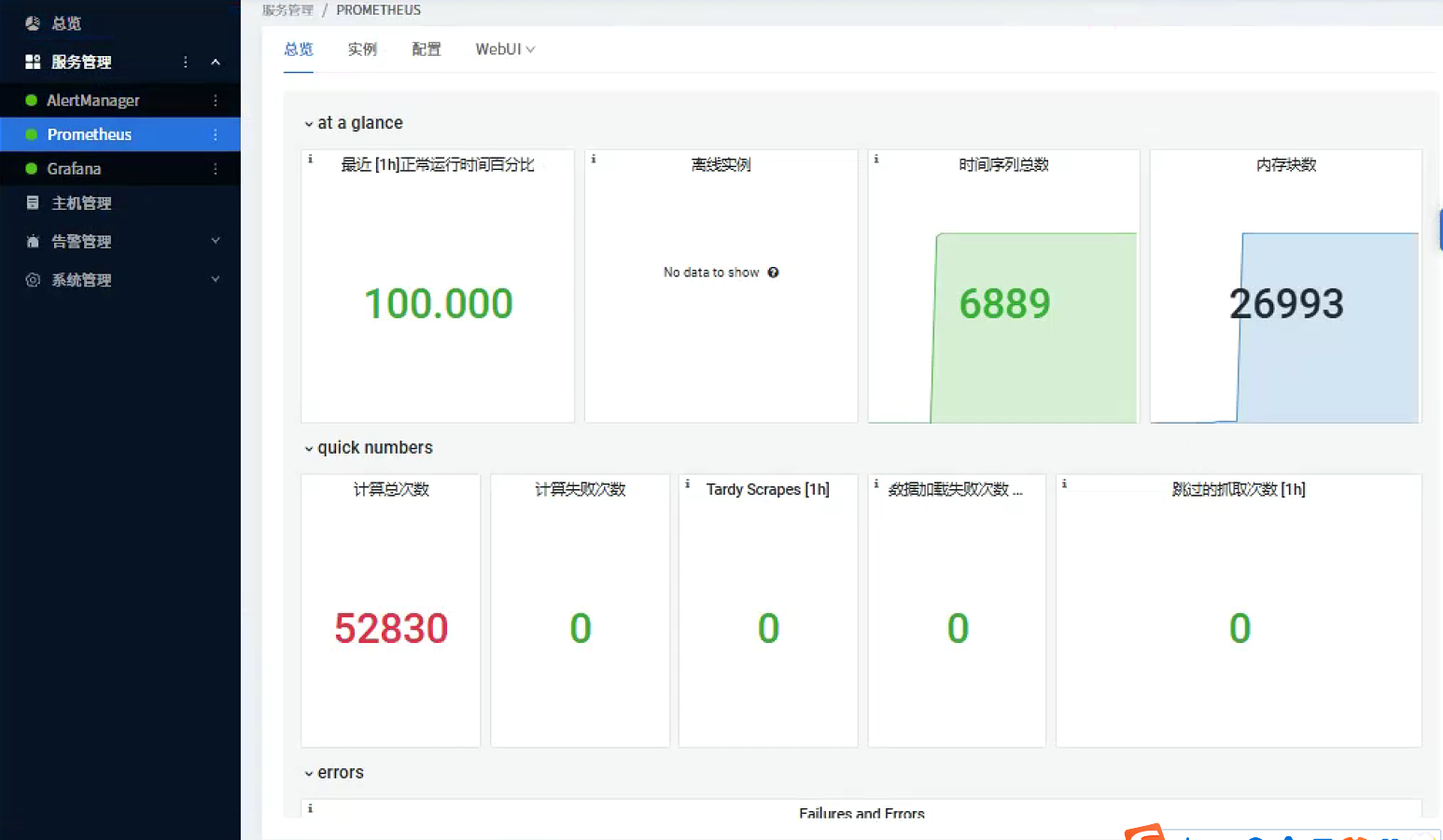

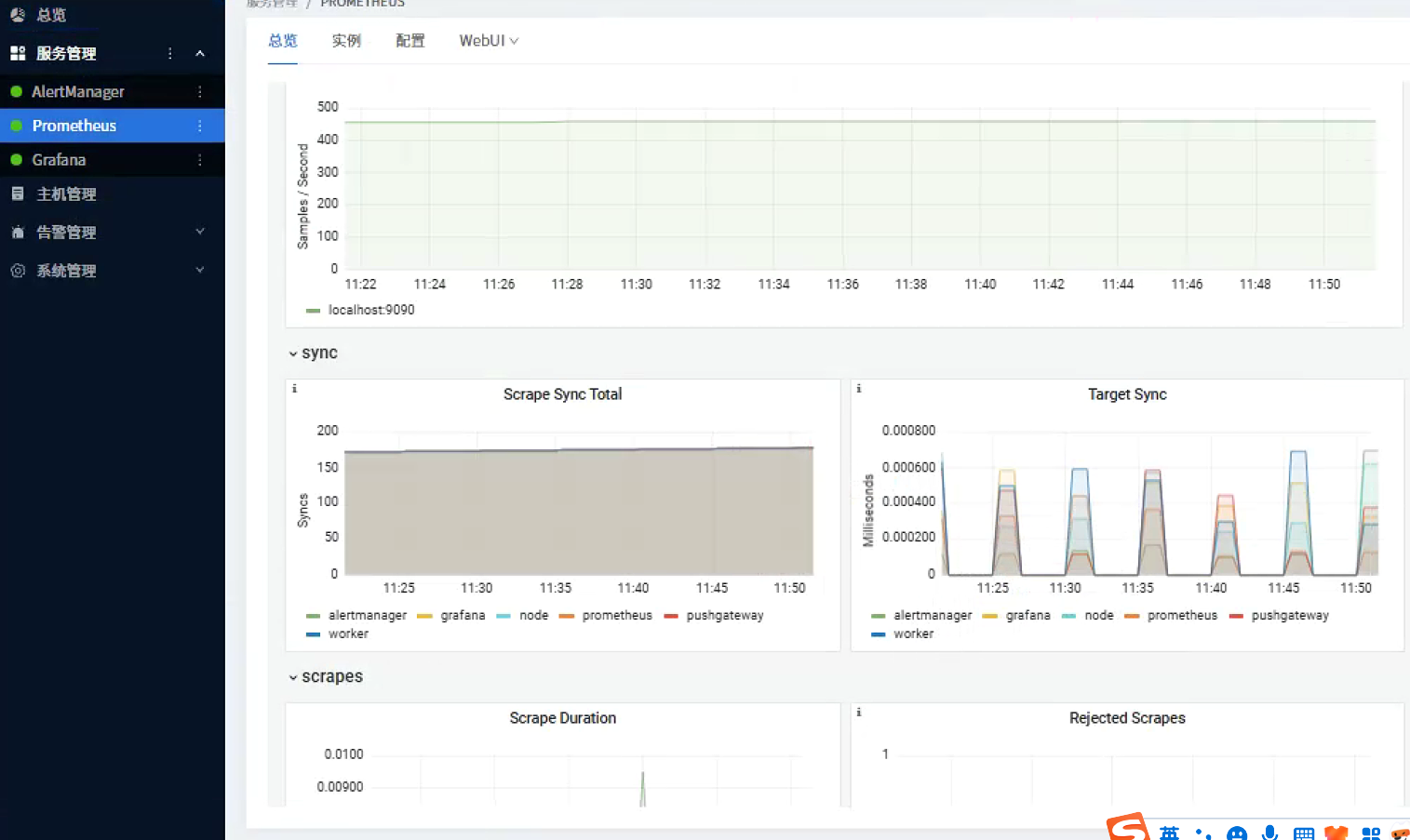

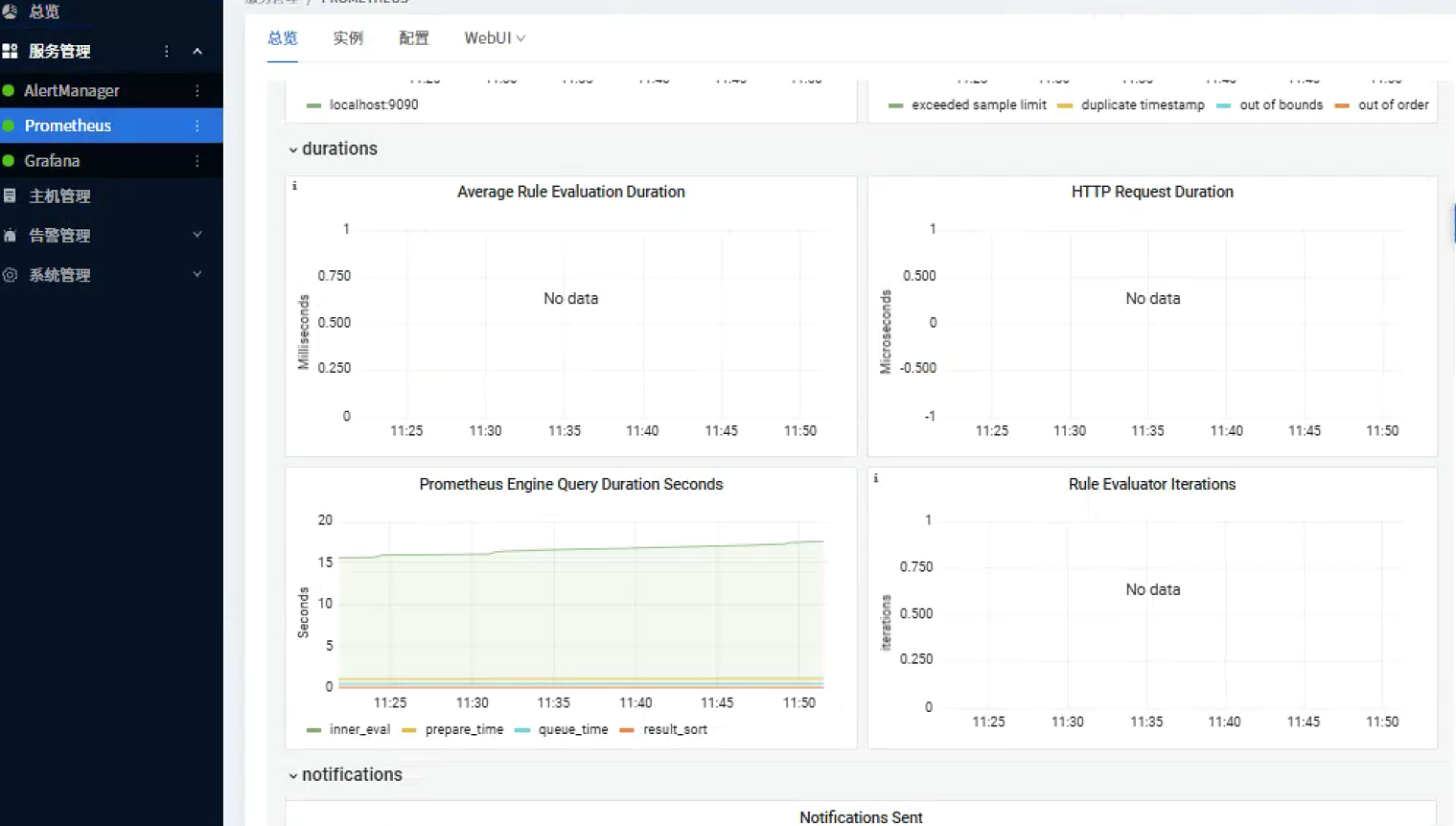

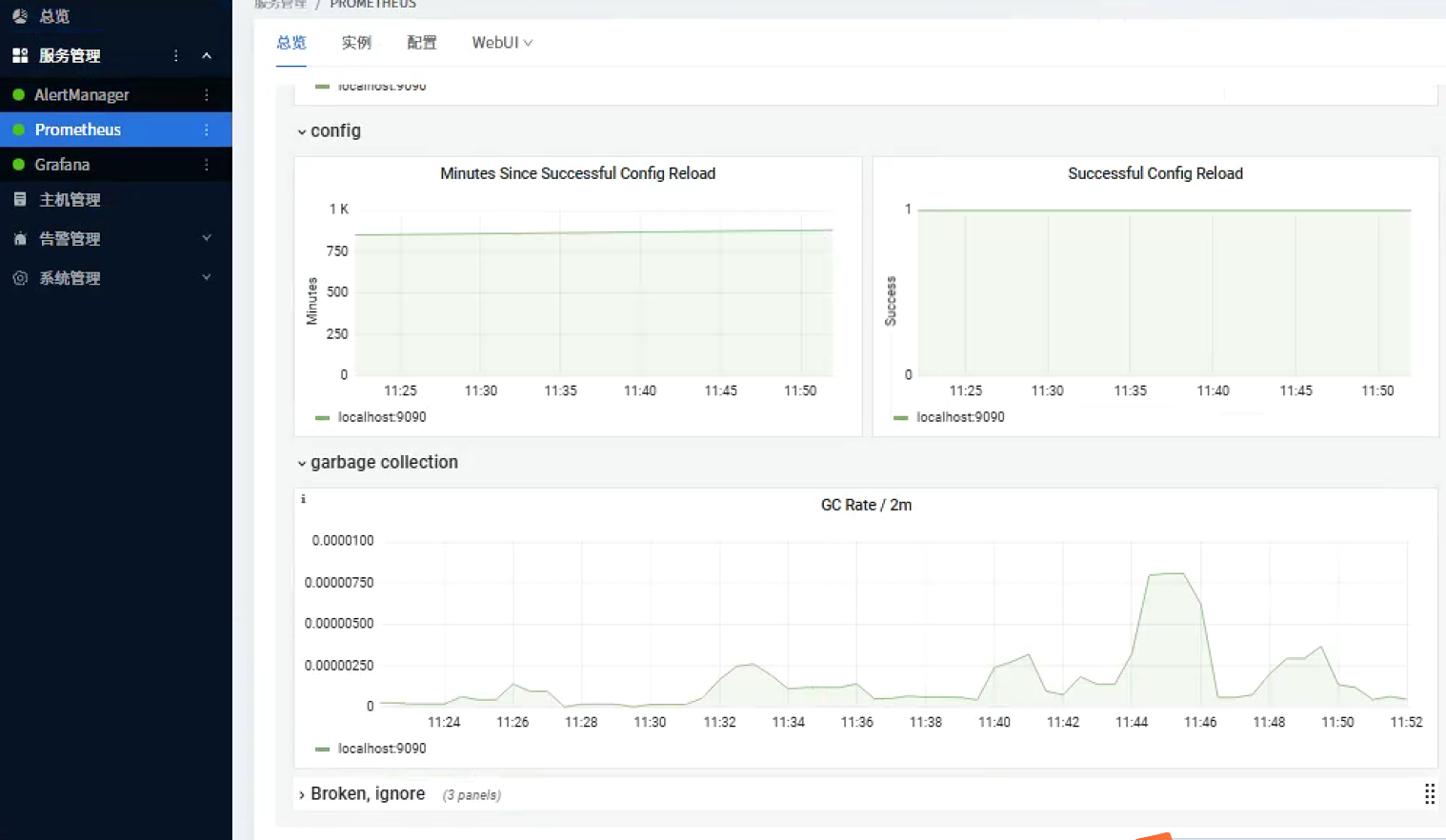

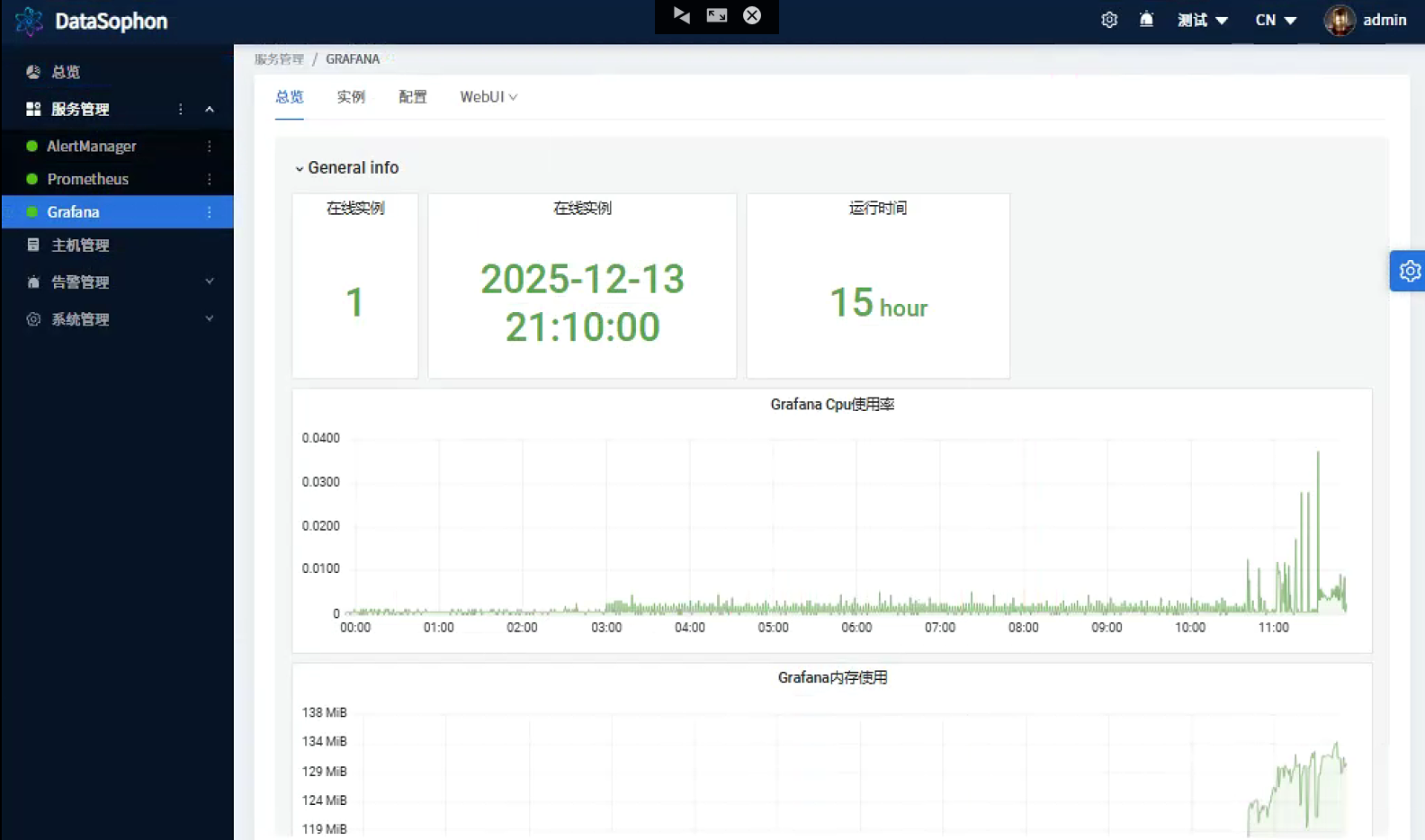

Screenshots of the UI after a successful installation:

Overview:

AlertManager:

Prometheus:

Grafana:

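The UI looks pretty cool. The overview monitors every node in the cluster, but the pages for the three components seem to cover only the localhost node (ddp1), which is a bit of a letdown. If anyone knows how to change this, feel free to get in touch and give me some pointers: lita2lz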

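Also, if you hit a snag during installation and decide to reinstall, be sure to clear the related tables first. Otherwise you may run into some unexpected "effects":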

```sql
use datasophon;
delete from t_ddh_cluster_info;
delete from t_ddh_cluster_alert_group_map;
delete from t_ddh_cluster_alert_history;
delete from t_ddh_cluster_group where cluster_id!=1;
delete from t_ddh_cluster_host;
delete from t_ddh_cluster_node_label;
delete from t_ddh_cluster_queue_capacity;
delete from t_ddh_cluster_rack;
delete from t_ddh_cluster_role_user;
delete from t_ddh_cluster_service_command;
delete from t_ddh_cluster_service_instance;
delete from t_ddh_cluster_service_instance_role_group;
delete from t_ddh_cluster_service_role_group_config;
delete from t_ddh_cluster_service_role_instance;
delete from t_ddh_cluster_user where cluster_id!=1;
delete from t_ddh_cluster_user_group where cluster_id!=1;
delete from t_ddh_cluster_variable where cluster_id!=1;
delete from t_ddh_cluster_yarn_queue;
delete from t_ddh_cluster_yarn_scheduler;
delete from t_ddh_cluster_zk;
```
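If you reinstall often, this cleanup can be scripted. The sketch below rests on a few assumptions:

- You already have a JDBC `Connection` to the `datasophon` database, for example from `DriverManager.getConnection` with the MySQL driver on the classpath.
- The tables use a transactional engine such as InnoDB, so a failed run can be rolled back.

The class name is hypothetical. The table list and the `cluster_id != 1` exceptions are copied from the SQL statements above, in the same order.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

public class DatasophonResetSketch {

    // Tables in the same order as the manual cleanup script
    static final List<String> TABLES = List.of(
            "t_ddh_cluster_info",
            "t_ddh_cluster_alert_group_map",
            "t_ddh_cluster_alert_history",
            "t_ddh_cluster_group",
            "t_ddh_cluster_host",
            "t_ddh_cluster_node_label",
            "t_ddh_cluster_queue_capacity",
            "t_ddh_cluster_rack",
            "t_ddh_cluster_role_user",
            "t_ddh_cluster_service_command",
            "t_ddh_cluster_service_instance",
            "t_ddh_cluster_service_instance_role_group",
            "t_ddh_cluster_service_role_group_config",
            "t_ddh_cluster_service_role_instance",
            "t_ddh_cluster_user",
            "t_ddh_cluster_user_group",
            "t_ddh_cluster_variable",
            "t_ddh_cluster_yarn_queue",
            "t_ddh_cluster_yarn_scheduler",
            "t_ddh_cluster_zk");

    // Tables whose rows for cluster_id = 1 are kept (the "where cluster_id!=1" lines)
    static final Set<String> KEEP_CLUSTER_1 = Set.of(
            "t_ddh_cluster_group", "t_ddh_cluster_user",
            "t_ddh_cluster_user_group", "t_ddh_cluster_variable");

    // Builds the DELETE statements in script order
    static List<String> statements() {
        List<String> sql = new ArrayList<>();
        for (String table : TABLES) {
            sql.add(KEEP_CLUSTER_1.contains(table)
                    ? "delete from " + table + " where cluster_id != 1"
                    : "delete from " + table);
        }
        return sql;
    }

    // Executes every DELETE in one transaction; on failure the changes are rolled back
    static void run(Connection conn) throws SQLException {
        boolean oldAutoCommit = conn.getAutoCommit();
        conn.setAutoCommit(false);
        try (Statement st = conn.createStatement()) {
            for (String s : statements()) {
                st.executeUpdate(s);
            }
            conn.commit();
        } catch (SQLException e) {
            conn.rollback();
            throw e;
        } finally {
            conn.setAutoCommit(oldAutoCommit);
        }
    }

    public static void main(String[] args) {
        // Dry run: print the statements instead of executing them
        statements().forEach(System.out::println);
    }
}
```

Keeping a dry-run `main` makes it easy to compare the generated statements against the manual script before pointing `run` at a real database.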