Contents

- Introduction to Informer
  - Typical use cases
- Informer components
  - Reflector
  - Delta FIFO (incremental first-in, first-out queue)
  - Indexer
  - Controller (not a custom K8s controller)
- Hands-on
  - Watching standard K8s resources with an informer (blocking version)
  - Watching standard K8s resources with an informer (goroutine version)
  - Watching CRD resources with the discovery client

Introduction to Informer

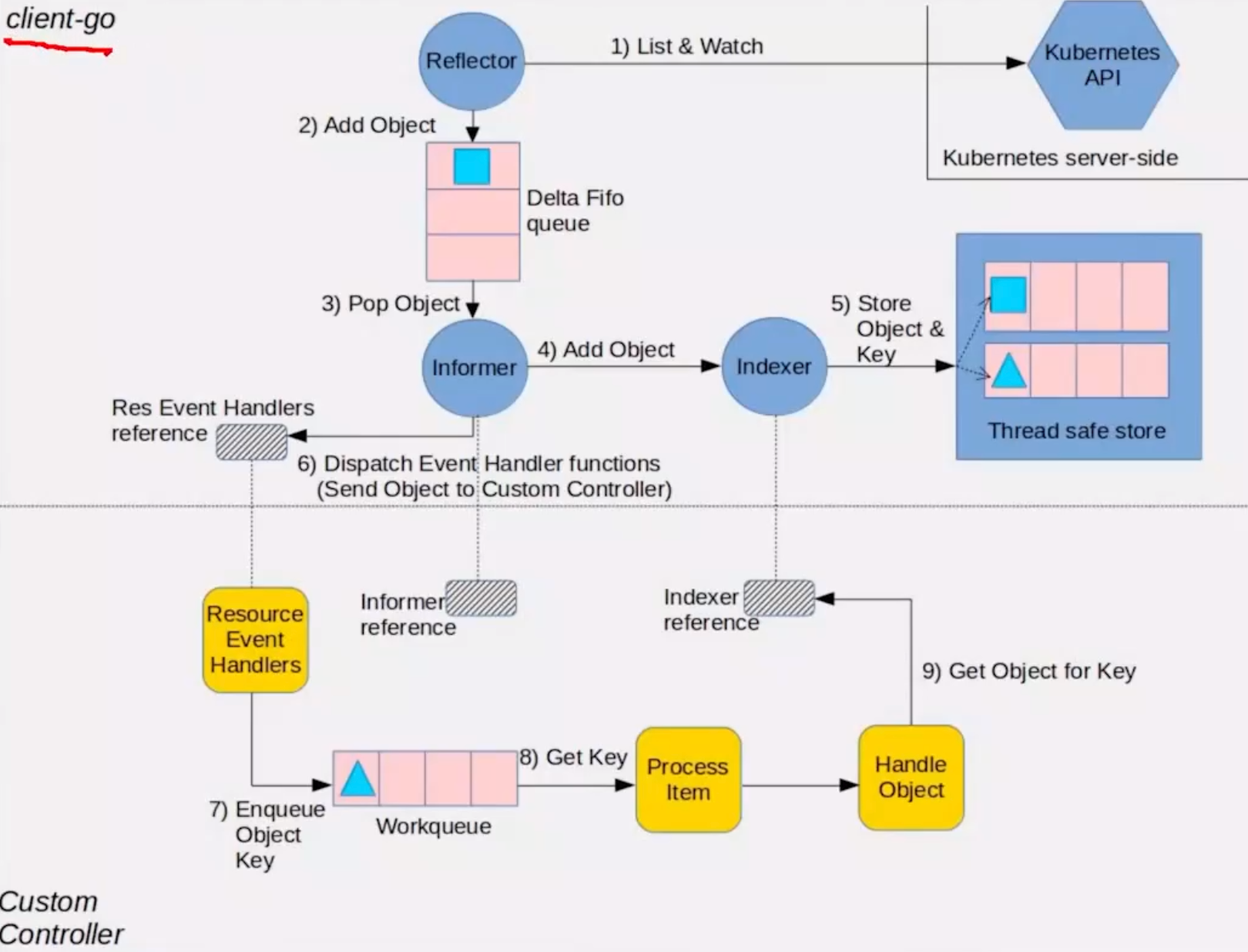

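In Kubernetes (K8s), an Informer is a core client-side tool for watching changes to resource objects in the Kubernetes API Server efficiently and reliably, syncing those changes into a local cache, and triggering custom event-handling logic. It is a core component of client-go, the K8s client toolkit, and the foundation for Operators, custom controllers, and similar components.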

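The problem it solves: watching resource changes by calling the K8s API Server directly has two key pain points:

- Performance and load: if several components watch the same kind of resource at the same time, they send many duplicate requests to the API Server and put pressure on the server.
- Reliability: the API Server's watch mechanism can be interrupted by network fluctuations or session timeouts, so reconnection and incremental data recovery have to be handled by hand.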

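Typical use cases

- Custom controllers / Operators: the core logic of an Operator is to watch changes to custom resources (CRDs) through an Informer and then carry out the corresponding business operations (such as creating Pods or configuring a database).
- Resource monitoring and alerting: watch state changes of resources such as Pods and Nodes, and trigger alerting logic when something goes wrong (for example, a Pod crashes or a Node becomes unavailable).
- Automated operations tools: tools that clean up idle resources or scale workloads automatically, for example, use an Informer to detect resource changes and act on them.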

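Informer components

An Informer addresses the two problems described in the introduction by combining a local cache, incremental synchronization, and event dispatch.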

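Reflector

- Establishes a watch connection to the API Server and listens for add, delete, and update events on the specified resource (such as Pod or Deployment).
- When the watch is interrupted, reconnects automatically and uses the resource version (resourceVersion) to resume incrementally, which avoids a full re-list in the common case (a full re-list is still needed if the server no longer retains that version).
- Sends the observed resource change events to the Delta FIFO queue.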
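The resume behaviour described above can be sketched with a toy model. This is not client-go's Reflector: `fakeServer`, `watchFrom`, `resume`, and `errTooOld` are hypothetical names, and versions are plain integers here, whereas a real resourceVersion is an opaque string. The sketch only shows the idea of resuming from the last seen version and falling back to a re-list when that version has expired.

```go
package main

import (
	"errors"
	"fmt"
)

// event is a simplified watch event; Version plays the role of resourceVersion.
type event struct {
	Version int
	Type    string // "ADDED", "MODIFIED" or "DELETED"
	Name    string
}

// errTooOld mimics the server rejecting a version it no longer retains.
var errTooOld = errors.New("resource version too old")

// fakeServer is a hypothetical stand-in for the API server's event history.
type fakeServer struct {
	log    []event // retained events, ascending by Version
	oldest int     // versions below this have been compacted away
}

// latest returns the newest version the server knows about.
func (s *fakeServer) latest() int {
	if len(s.log) == 0 {
		return s.oldest
	}
	return s.log[len(s.log)-1].Version
}

// watchFrom returns every retained event newer than rv.
func (s *fakeServer) watchFrom(rv int) ([]event, error) {
	if rv < s.oldest {
		return nil, errTooOld
	}
	var out []event
	for _, e := range s.log {
		if e.Version > rv {
			out = append(out, e)
		}
	}
	return out, nil
}

// resume replays the events after lastRV and returns the new last version.
// The bool reports whether a full re-list was needed instead.
func resume(s *fakeServer, lastRV int) ([]event, int, bool) {
	evs, err := s.watchFrom(lastRV)
	if errors.Is(err, errTooOld) {
		// Too far behind: a real client would list all objects again here
		// and continue watching from the version returned by that list.
		return nil, s.latest(), true
	}
	newRV := lastRV
	for _, e := range evs {
		newRV = e.Version
	}
	return evs, newRV, false
}

func main() {
	s := &fakeServer{
		oldest: 3,
		log: []event{
			{3, "ADDED", "nginx"},
			{4, "MODIFIED", "nginx"},
			{5, "DELETED", "nginx"},
		},
	}
	evs, rv, relisted := resume(s, 3)
	fmt.Println(len(evs), rv, relisted) // 2 5 false

	_, rv, relisted = resume(s, 1)
	fmt.Println(rv, relisted) // 5 true
}
```

The client only ever stores one number between reconnects, which is why an interrupted watch is cheap to recover from as long as the server still holds the history after that version.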

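Delta FIFO (incremental first-in, first-out queue)

- Acts as the event buffer queue, storing the incremental resource events (of types such as Added, Updated, and Deleted) that the Reflector sends.
- Keeps events in order, which avoids conflicts caused by concurrent processing.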
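A simplified model may help show what "a FIFO of deltas" means. The sketch below is an assumption-laden stand-in, not client-go's `DeltaFIFO`: the names `deltaFIFO`, `add`, and `pop` are hypothetical, there is no locking, and `pop` does not block. It keeps a FIFO of object keys plus the list of pending deltas for each key, so all changes to one object are handed out together and in arrival order.

```go
package main

import "fmt"

// delta records one change to an object.
type delta struct {
	Type string // "Added", "Updated" or "Deleted"
	Obj  string
}

// deltaFIFO is a non-concurrent model: a FIFO of keys plus pending deltas per key.
type deltaFIFO struct {
	queue []string
	items map[string][]delta
}

func newDeltaFIFO() *deltaFIFO {
	return &deltaFIFO{items: map[string][]delta{}}
}

// add appends a delta for key; a key is queued only once while it has pending deltas.
func (f *deltaFIFO) add(key string, d delta) {
	if _, pending := f.items[key]; !pending {
		f.queue = append(f.queue, key)
	}
	f.items[key] = append(f.items[key], d)
}

// pop removes the oldest key and returns its accumulated deltas in arrival order.
func (f *deltaFIFO) pop() (string, []delta, bool) {
	if len(f.queue) == 0 {
		return "", nil, false
	}
	key := f.queue[0]
	f.queue = f.queue[1:]
	ds := f.items[key]
	delete(f.items, key)
	return key, ds, true
}

func main() {
	f := newDeltaFIFO()
	f.add("default/nginx", delta{"Added", "nginx@v1"})
	f.add("default/redis", delta{"Added", "redis@v1"})
	f.add("default/nginx", delta{"Updated", "nginx@v2"})

	for {
		key, ds, ok := f.pop()
		if !ok {
			break
		}
		fmt.Println(key, len(ds))
	}
	// Prints:
	// default/nginx 2
	// default/redis 1
}
```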

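Indexer

- Maintains a local cache that stores the resource objects synced from the API Server.
- Supports efficient lookups through indexes (similar to database indexes), built by index functions over attributes such as namespace, labels, or fields, so queries do not need to go to the API Server every time.
- The cached data is eventually consistent with the API Server; this cache is the main reason Informers perform well.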
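To make the database-index analogy concrete, here is a minimal sketch of an indexed store. It is a toy under stated assumptions, not client-go's `cache.Indexer`: the `object`, `indexFunc`, and `indexer` types and the `add`/`byIndex` methods are hypothetical names, and the store is not safe for concurrent use. Each named index function maps an object to the values it should be filed under.

```go
package main

import (
	"fmt"
	"sort"
)

// object is a pared-down resource: just enough metadata to index on.
type object struct {
	Namespace, Name string
	Labels          map[string]string
}

// indexFunc returns the values an object is filed under for one index.
type indexFunc func(o object) []string

// indexer keeps objects by key plus, per index name, a value -> keys table.
type indexer struct {
	objects map[string]object
	funcs   map[string]indexFunc
	indices map[string]map[string]map[string]bool
}

func newIndexer(funcs map[string]indexFunc) *indexer {
	return &indexer{
		objects: map[string]object{},
		funcs:   funcs,
		indices: map[string]map[string]map[string]bool{},
	}
}

// add stores or replaces an object and refreshes its index entries.
func (ix *indexer) add(o object) {
	key := o.Namespace + "/" + o.Name
	if old, ok := ix.objects[key]; ok {
		// Drop stale entries so a changed label does not leave a ghost match.
		for name, fn := range ix.funcs {
			for _, v := range fn(old) {
				delete(ix.indices[name][v], key)
			}
		}
	}
	ix.objects[key] = o
	for name, fn := range ix.funcs {
		if ix.indices[name] == nil {
			ix.indices[name] = map[string]map[string]bool{}
		}
		for _, v := range fn(o) {
			if ix.indices[name][v] == nil {
				ix.indices[name][v] = map[string]bool{}
			}
			ix.indices[name][v][key] = true
		}
	}
}

// byIndex returns the sorted keys filed under value in the named index.
func (ix *indexer) byIndex(name, value string) []string {
	keys := []string{}
	for k := range ix.indices[name][value] {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	return keys
}

func main() {
	ix := newIndexer(map[string]indexFunc{
		"namespace": func(o object) []string { return []string{o.Namespace} },
		"app":       func(o object) []string { return []string{o.Labels["app"]} },
	})
	ix.add(object{"default", "nginx", map[string]string{"app": "web"}})
	ix.add(object{"default", "redis", map[string]string{"app": "cache"}})
	ix.add(object{"kube-system", "coredns", map[string]string{"app": "dns"}})

	fmt.Println(ix.byIndex("namespace", "default")) // [default/nginx default/redis]
	fmt.Println(ix.byIndex("app", "dns"))           // [kube-system/coredns]
}
```

A lookup touches only the keys filed under one value instead of scanning every cached object, and it never leaves the process.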

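Controller (not a custom K8s controller)

- Pops events from the Delta FIFO queue and triggers the corresponding event handler functions (such as OnAdd, OnUpdate, and OnDelete).
- Updates the local cache in the Indexer at the same time, so that the cache stays consistent with the events.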
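The two responsibilities above (update the cache, then notify handlers) can be sketched as a single function. This is a simplified illustration rather than client-go's code: `delta`, `handlers`, and `process` are hypothetical names, objects are plain strings, and the store is a bare map. One simplification to note: this toy skips the delete callback when the key is unknown.

```go
package main

import "fmt"

// delta is one queued change; Type is "Added", "Updated" or "Deleted".
type delta struct {
	Type, Key, Obj string
}

// handlers mirrors the three callbacks a user registers on an informer.
type handlers struct {
	OnAdd    func(obj string)
	OnUpdate func(oldObj, newObj string)
	OnDelete func(obj string)
}

// process applies a delta to the local store and then notifies the handlers.
// Whether a change is reported as an add or an update depends on whether the
// store already knows the key, not only on the delta type.
func process(store map[string]string, d delta, h handlers) {
	old, exists := store[d.Key]
	if d.Type == "Deleted" {
		delete(store, d.Key)
		if exists {
			h.OnDelete(old)
		}
		return
	}
	store[d.Key] = d.Obj
	if exists {
		h.OnUpdate(old, d.Obj)
	} else {
		h.OnAdd(d.Obj)
	}
}

func main() {
	store := map[string]string{}
	h := handlers{
		OnAdd:    func(obj string) { fmt.Println("add:", obj) },
		OnUpdate: func(oldObj, newObj string) { fmt.Println("update:", oldObj, "->", newObj) },
		OnDelete: func(obj string) { fmt.Println("delete:", obj) },
	}
	for _, d := range []delta{
		{"Added", "default/nginx", "nginx@v1"},
		{"Updated", "default/nginx", "nginx@v2"},
		{"Deleted", "default/nginx", ""},
	} {
		process(store, d, h)
	}
	fmt.Println("objects left in store:", len(store))
	// Prints:
	// add: nginx@v1
	// update: nginx@v1 -> nginx@v2
	// delete: nginx@v2
	// objects left in store: 0
}
```

Because the store is updated before the callback runs, a handler that reads the cache sees a state at least as new as the event it is handling.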

Hands-on

Watching standard K8s resources with an informer (blocking version)

go

package main

import (
	"flag"
	"fmt"
	"path/filepath"
	"time"

	v1 "k8s.io/api/apps/v1"
	v1core "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/labels"
	"k8s.io/client-go/informers"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/rest"
	"k8s.io/client-go/tools/cache"
	"k8s.io/client-go/tools/clientcmd"
	"k8s.io/client-go/util/homedir"
)

func main() {
	var err error
	var config *rest.Config

	// Register the kubeconfig flag
	var kubeconfig *string
	if home := homedir.HomeDir(); home != "" {
		kubeconfig = flag.String("kubeconfig", filepath.Join(home, ".kube", "config"), "[optional] absolute path to the kubeconfig file")
	} else {
		kubeconfig = flag.String("kubeconfig", "", "absolute path to the kubeconfig file")
	}
	// Parse the command line so that -kubeconfig actually takes effect
	flag.Parse()

	// Initialize rest.Config: try the in-cluster config first, then fall back to kubeconfig
	if config, err = rest.InClusterConfig(); err != nil {
		if config, err = clientcmd.BuildConfigFromFlags("", *kubeconfig); err != nil {
			panic(err.Error())
		}
	}

	// Create the Clientset
	clientset, err := kubernetes.NewForConfig(config)
	if err != nil {
		panic(err.Error())
	}

	// Initialize the informer factory with a 12-hour resync period
	informerFactory := informers.NewSharedInformerFactory(clientset, time.Hour*12)

	// Watch Deployments
	deployInformer := informerFactory.Apps().V1().Deployments()
	informer := deployInformer.Informer()
	// The Lister reads from the local cache: it fetches data from the Indexer
	deployLister := deployInformer.Lister()
	informer.AddEventHandler(cache.ResourceEventHandlerFuncs{
		AddFunc:    onAddDeployment,
		UpdateFunc: onUpdateDeployment,
		DeleteFunc: onDeleteDeployment,
	})

	// Watch Services
	serviceInformer := informerFactory.Core().V1().Services()
	serviceInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{
		AddFunc:    onAddService,
		UpdateFunc: onUpdateService,
		DeleteFunc: onDeleteService,
	})

	stopper := make(chan struct{})
	defer close(stopper)

	// Start the informers (List & Watch)
	informerFactory.Start(stopper)
	// Wait until the caches of all started informers have synced
	informerFactory.WaitForCacheSync(stopper)

	// List all Deployments in the default namespace from the local cache (backed by the Indexer)
	deployments, err := deployLister.Deployments("default").List(labels.Everything())
	if err != nil {
		panic(err)
	}
	for idx, deploy := range deployments {
		fmt.Printf("%d -> %s\n", idx+1, deploy.Name)
	}

	// Block the main goroutine; nothing closes stopper, so this waits until the process is killed
	<-stopper
}

func onAddDeployment(obj interface{}) {
	deploy := obj.(*v1.Deployment)
	fmt.Println("add a deployment:", deploy.Name)
}

func onUpdateDeployment(oldObj, newObj interface{}) {
	oldDeploy := oldObj.(*v1.Deployment)
	newDeploy := newObj.(*v1.Deployment)
	fmt.Println("update deployment:", oldDeploy.Name, newDeploy.Name)
}

func onDeleteDeployment(obj interface{}) {
	// A delete that was missed during a disconnect arrives wrapped in a tombstone
	if tombstone, ok := obj.(cache.DeletedFinalStateUnknown); ok {
		obj = tombstone.Obj
	}
	deploy, ok := obj.(*v1.Deployment)
	if !ok {
		return
	}
	fmt.Println("delete a deployment:", deploy.Name)
}

func onAddService(obj interface{}) {
	service := obj.(*v1core.Service)
	fmt.Println("add a service:", service.Name)
}

func onUpdateService(oldObj, newObj interface{}) {
	oldService := oldObj.(*v1core.Service)
	newService := newObj.(*v1core.Service)
	fmt.Println("update service:", oldService.Name, newService.Name)
}

func onDeleteService(obj interface{}) {
	// A delete that was missed during a disconnect arrives wrapped in a tombstone
	if tombstone, ok := obj.(cache.DeletedFinalStateUnknown); ok {
		obj = tombstone.Obj
	}
	service, ok := obj.(*v1core.Service)
	if !ok {
		return
	}
	fmt.Println("delete a service:", service.Name)
}

~ demo go run main.go

add a service: kubernetes

add a service: kube-dns

add a deployment: coredns

add a deployment: nginx

update deployment: nginx nginx

add a service: nginx

update deployment: nginx nginx

update deployment: nginx nginx

update deployment: nginx nginx

delete a deployment: nginx

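delete a service: nginx

This code watches the cluster's Services and Deployments and registers add, update, and delete handlers, so whenever a matching resource changes in the cluster, the corresponding function is called automatically.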

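Watching standard K8s resources with an informer (goroutine version)

This version follows the Controller-Workqueue pattern: a Controller manages the three core pieces together, namely the cache, the queue, and the informer. The informer only dispatches tasks (object keys) to the workqueue, and main starts a goroutine that consumes them. The informer is therefore not blocked by slow processing, because all it does is dispatch; any blocking happens in the worker goroutine.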
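One reason this pattern enqueues keys rather than whole objects is that a queue of keys can deduplicate. The sketch below models that idea with the standard library only. It is an assumption-based toy, not the `workqueue` package: `workQueue`, `add`, `get`, and `done` are hypothetical names, there is no locking, rate limiting, or blocking, and it is meant to be driven from a single goroutine.

```go
package main

import "fmt"

// workQueue is a single-goroutine model of a deduplicating work queue.
type workQueue struct {
	queue      []string
	dirty      map[string]bool // keys that need processing
	processing map[string]bool // keys a worker currently holds
}

func newWorkQueue() *workQueue {
	return &workQueue{dirty: map[string]bool{}, processing: map[string]bool{}}
}

// add enqueues key unless it is already waiting; a key that is being
// processed is only marked dirty and gets re-queued by done.
func (q *workQueue) add(key string) {
	if q.dirty[key] {
		return
	}
	q.dirty[key] = true
	if q.processing[key] {
		return
	}
	q.queue = append(q.queue, key)
}

// get hands the oldest key to a worker.
func (q *workQueue) get() (string, bool) {
	if len(q.queue) == 0 {
		return "", false
	}
	key := q.queue[0]
	q.queue = q.queue[1:]
	q.processing[key] = true
	delete(q.dirty, key)
	return key, true
}

// done releases key and re-queues it if it changed while being processed.
func (q *workQueue) done(key string) {
	delete(q.processing, key)
	if q.dirty[key] {
		q.queue = append(q.queue, key)
	}
}

func main() {
	q := newWorkQueue()
	// Three rapid updates to the same Deployment collapse into one entry.
	q.add("default/nginx")
	q.add("default/nginx")
	q.add("default/nginx")
	fmt.Println("queued:", len(q.queue)) // queued: 1

	key, _ := q.get()
	q.add(key) // an event arrives while the worker is busy
	fmt.Println("queued while processing:", len(q.queue)) // queued while processing: 0
	q.done(key)
	fmt.Println("queued after done:", len(q.queue)) // queued after done: 1
}
```

Since the worker looks the object up in the cache by key when it finally runs, it always acts on the latest state, and a burst of updates costs one reconciliation instead of many.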

go

package main

import (
	"flag"
	"fmt"
	"path/filepath"
	"time"

	v1 "k8s.io/api/apps/v1"
	"k8s.io/client-go/informers"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/rest"
	"k8s.io/client-go/tools/cache"
	"k8s.io/client-go/tools/clientcmd"
	"k8s.io/client-go/util/homedir"
	"k8s.io/client-go/util/workqueue"
)

// Define the Controller type and its constructor first
type Controller struct {
	indexer  cache.Indexer
	queue    workqueue.TypedRateLimitingInterface[string]
	informer cache.Controller
}

func NewController(queue workqueue.TypedRateLimitingInterface[string], indexer cache.Indexer, informer cache.Controller) *Controller {
	return &Controller{
		informer: informer,
		indexer:  indexer,
		queue:    queue,
	}
}

// processNextItem handles the next key in the queue; it returns false once the queue is shut down
func (c *Controller) processNextItem() bool {
	key, quit := c.queue.Get()
	if quit {
		return false
	}
	defer c.queue.Done(key)

	err := c.syncToStdout(key)
	c.handleErr(err, key)
	return true
}

// syncToStdout prints the current state of the object identified by key
func (c *Controller) syncToStdout(key string) error {
	// Fetch the full object from the indexer by key
	obj, exists, err := c.indexer.GetByKey(key)
	if err != nil {
		fmt.Printf("Fetching object with key %s from store failed with %v\n", key, err)
		return err
	}
	if !exists {
		fmt.Printf("Deployment %s does not exist anymore\n", key)
	} else {
		deployment := obj.(*v1.Deployment)
		fmt.Printf("Sync/Add/Update for Deployment %s, Replicas: %d\n", deployment.Name, *deployment.Spec.Replicas)
		// Simulate a failure for the deployment named nginx to demonstrate retries
		if deployment.Name == "nginx" {
			time.Sleep(2 * time.Second)
			return fmt.Errorf("simulated error for deployment %s", deployment.Name)
		}
	}
	return nil
}

// handleErr retries a failed key up to 5 times, then drops it
func (c *Controller) handleErr(err error, key string) {
	if err == nil {
		c.queue.Forget(key)
		return
	}
	if c.queue.NumRequeues(key) < 5 {
		fmt.Printf("Retry %d for key %s\n", c.queue.NumRequeues(key), key)
		// Re-queue with rate limiting, so the key is processed again only after a delay and is not retried too aggressively
		c.queue.AddRateLimited(key)
		return
	}
	c.queue.Forget(key)
	fmt.Printf("Dropping deployment %q out of the queue: %v\n", key, err)
}

func main() {
	var err error
	var config *rest.Config

	// Register the kubeconfig flag
	var kubeconfig *string
	if home := homedir.HomeDir(); home != "" {
		kubeconfig = flag.String("kubeconfig", filepath.Join(home, ".kube", "config"), "[optional] absolute path to the kubeconfig file")
	} else {
		kubeconfig = flag.String("kubeconfig", "", "absolute path to the kubeconfig file")
	}
	// Parse the command line so that -kubeconfig actually takes effect
	flag.Parse()

	// Initialize rest.Config: try the in-cluster config first, then fall back to kubeconfig
	if config, err = rest.InClusterConfig(); err != nil {
		if config, err = clientcmd.BuildConfigFromFlags("", *kubeconfig); err != nil {
			panic(err.Error())
		}
	}

	// Create the Clientset
	clientset, err := kubernetes.NewForConfig(config)
	if err != nil {
		panic(err.Error())
	}

	// Initialize the informer factory
	informerFactory := informers.NewSharedInformerFactory(clientset, time.Hour*12)

	// Create the rate-limited queue
	queue := workqueue.NewTypedRateLimitingQueue(workqueue.DefaultTypedControllerRateLimiter[string]())

	// Watch Deployments; the handlers only enqueue keys
	deployInformer := informerFactory.Apps().V1().Deployments()
	informer := deployInformer.Informer()
	informer.AddEventHandler(cache.ResourceEventHandlerFuncs{
		AddFunc:    func(obj interface{}) { onAddDeployment(obj, queue) },
		UpdateFunc: func(oldObj, newObj interface{}) { onUpdateDeployment(newObj, queue) },
		DeleteFunc: func(obj interface{}) { onDeleteDeployment(obj, queue) },
	})

	controller := NewController(queue, deployInformer.Informer().GetIndexer(), informer)

	stopper := make(chan struct{})
	defer close(stopper)

	// Start the informers (List & Watch) and wait for the caches to sync
	informerFactory.Start(stopper)
	informerFactory.WaitForCacheSync(stopper)

	// Process the queued events in a separate goroutine
	go func() {
		for {
			if !controller.processNextItem() {
				break
			}
		}
	}()

	// Block the main goroutine; nothing closes stopper, so this waits until the process is killed
	<-stopper
}

func onAddDeployment(obj interface{}, queue workqueue.TypedRateLimitingInterface[string]) {
	// Build the namespace/name key
	key, err := cache.MetaNamespaceKeyFunc(obj)
	if err == nil {
		queue.Add(key)
	}
}

func onUpdateDeployment(newObj interface{}, queue workqueue.TypedRateLimitingInterface[string]) {
	key, err := cache.MetaNamespaceKeyFunc(newObj)
	if err == nil {
		queue.Add(key)
	}
}

func onDeleteDeployment(obj interface{}, queue workqueue.TypedRateLimitingInterface[string]) {
	// This key function also handles DeletedFinalStateUnknown tombstones
	key, err := cache.DeletionHandlingMetaNamespaceKeyFunc(obj)
	if err == nil {
		queue.Add(key)
	}
}

Watching CRD resources with the discovery client

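The previous examples use typed informers, which only cover standard K8s resources such as Pod and Deployment. The discovery client makes it possible to convert a GVK (Group/Version/Kind, the form used in YAML manifests) into a GVR (Group/Version/Resource, the form used in API request paths), so the program knows which resource to access. The full program in this section uses that mapping to list custom resources through a dynamic client.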
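Before the full program, a small standard-library sketch may clarify why the GVR is what the client needs. The `gvk`, `gvr`, and `restMapper` types and the `listPath` helper are hypothetical names for illustration; the in-memory map stands in for what discovery would return, and only the path layout (`/api` for the core group, `/apis/<group>` otherwise) reflects the real API conventions.

```go
package main

import (
	"errors"
	"fmt"
)

// gvk identifies a type as written in a manifest (apiVersion + kind).
type gvk struct{ Group, Version, Kind string }

// gvr identifies a REST collection as addressed in a request path.
type gvr struct{ Group, Version, Resource string }

// restMapper is a hypothetical in-memory stand-in for a discovery-backed mapper.
type restMapper map[gvk]gvr

// restMapping looks up the resource registered for a kind.
func (m restMapper) restMapping(k gvk) (gvr, error) {
	r, ok := m[k]
	if !ok {
		return gvr{}, errors.New("no resource registered for kind " + k.Kind)
	}
	return r, nil
}

// listPath builds the collection path for a resource.
// The core group ("") lives under /api, every other group under /apis.
func listPath(r gvr, namespace string) string {
	prefix := "/apis/" + r.Group + "/" + r.Version
	if r.Group == "" {
		prefix = "/api/" + r.Version
	}
	if namespace != "" {
		prefix += "/namespaces/" + namespace
	}
	return prefix + "/" + r.Resource
}

func main() {
	// Entries a real mapper would learn from the API server's discovery data.
	mapper := restMapper{
		gvk{Group: "", Version: "v1", Kind: "Pod"}: gvr{Group: "", Version: "v1", Resource: "pods"},
		gvk{Group: "mygroup.example.com", Version: "v1alpha1", Kind: "MyResource"}: gvr{
			Group: "mygroup.example.com", Version: "v1alpha1", Resource: "myresources",
		},
	}

	r, err := mapper.restMapping(gvk{Group: "mygroup.example.com", Version: "v1alpha1", Kind: "MyResource"})
	if err != nil {
		panic(err)
	}
	fmt.Println(listPath(r, "default"))
	// /apis/mygroup.example.com/v1alpha1/namespaces/default/myresources

	pods, _ := mapper.restMapping(gvk{Group: "", Version: "v1", Kind: "Pod"})
	fmt.Println(listPath(pods, "default"))
	// /api/v1/namespaces/default/pods
}
```

The Kind alone does not reveal the plural resource name, which is why the lookup has to consult data published by the API server.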

go

package main

import (
	"context"
	"flag"
	"fmt"
	"os"
	"path/filepath"

	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
	"k8s.io/apimachinery/pkg/runtime/schema"
	"k8s.io/client-go/dynamic"
	"k8s.io/client-go/kubernetes"
	"k8s.io/client-go/restmapper"
	"k8s.io/client-go/tools/clientcmd"
	"k8s.io/client-go/util/homedir"
)

func main() {
	// Register and parse the kubeconfig flag
	var kubeconfig *string
	if home := homedir.HomeDir(); home != "" {
		kubeconfig = flag.String("kubeconfig", filepath.Join(home, ".kube", "config"), "(optional) absolute path to the kubeconfig file")
	} else {
		kubeconfig = flag.String("kubeconfig", "", "absolute path to the kubeconfig file")
	}
	flag.Parse()

	// Parse the positional arguments (get <resource>); using flag.Args keeps -kubeconfig usable
	args := flag.Args()
	if len(args) != 2 {
		fmt.Printf("Usage: %s get <resource>\n", os.Args[0])
		os.Exit(1)
	}
	command, kind := args[0], args[1]
	if command != "get" {
		fmt.Println("Unsupported command:", command)
		os.Exit(1)
	}

	// Load the kubeconfig
	config, err := clientcmd.BuildConfigFromFlags("", *kubeconfig)
	if err != nil {
		panic(err.Error())
	}

	// Create the dynamic client
	dynamicClient, err := dynamic.NewForConfig(config)
	if err != nil {
		panic(err)
	}

	// Create the clientset, which provides the discovery client
	clientset, err := kubernetes.NewForConfig(config)
	if err != nil {
		panic(err)
	}

	// Use the discovery client to find every API group and version the API Server supports,
	// then use restmapper.GetAPIGroupResources() to get the resource list of each group and version
	discoveryClient := clientset.Discovery()
	apiGroupResources, err := restmapper.GetAPIGroupResources(discoveryClient)
	if err != nil {
		panic(err)
	}

	// Create the RESTMapper, which maps a GVK to a GVR
	mapper := restmapper.NewDiscoveryRESTMapper(apiGroupResources)

	// Build the GVK from the Kind given on the command line.
	// Alternatively: gvk := schema.FromAPIVersionAndKind("mygroup.example.com/v1alpha1", kind)
	gvk := schema.GroupVersionKind{
		Group:   "mygroup.example.com",
		Version: "v1alpha1",
		Kind:    kind,
	}

	// Look up the GVR that corresponds to the GVK
	mapping, err := mapper.RESTMapping(gvk.GroupKind(), gvk.Version)
	if err != nil {
		panic(err)
	}

	// mapping.Resource is the GVR, which completes the GVK -> GVR conversion.
	// List the resources in the default namespace
	resourceInterface := dynamicClient.Resource(mapping.Resource).Namespace("default")
	resources, err := resourceInterface.List(context.TODO(), metav1.ListOptions{})
	if err != nil {
		panic(err)
	}

	// Print the resources
	for _, resource := range resources.Items {
		fmt.Printf("Name: %s, Namespace: %s, UID: %s\n", resource.GetName(), resource.GetNamespace(), resource.GetUID())
	}
}

# The custom CRD is defined as follows
# crd.yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: myresources.mygroup.example.com
spec:
  group: mygroup.example.com
  versions:
    - name: v1alpha1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                field1:
                  type: string
                  description: First example field
                field2:
                  type: string
                  description: Second example field
            status:
              type: object
  scope: Namespaced
  names:
    plural: myresources
    singular: myresource
    kind: MyResource
    shortNames:
      - myres

# An instance of this resource looks like this
# myresource.yaml
apiVersion: mygroup.example.com/v1alpha1
kind: MyResource
metadata:
  name: my-resource-instance
  namespace: default
spec:
  field1: "ExampleValue1"
  field2: "ExampleValue2"

➜ demo_8 kubectl apply -f crd.yaml

customresourcedefinition.apiextensions.k8s.io/myresources.mygroup.example.com created

➜ demo_8 kubectl apply -f myresource.yaml

myresource.mygroup.example.com/my-resource-instance created

➜ demo_8 ls

crd.yaml go.mod go.sum main.go myresource.yaml

➜ demo_8 go run main.go

Usage: /var/folders/qq/m9nwhyqx7_x4s66kkdptq3n40000gn/T/go-build1143860236/b001/exe/main get <resource>

exit status 1

➜ demo_8 go run main.go get MyResource

Name: my-resource-instance, Namespace: default, UID: b2615b29-19f9-47bb-9126-b67b25248923
