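This guide builds a highly available Kubernetes (v1.20.6) microservice cluster on Ubuntu 20.04, using Nginx images to stand in for the microservices. It integrates Harbor, GitLab + Jenkins + ArgoCD, Prometheus + Grafana, Alibaba Cloud SLS (Log Service), Istio, and Helm to provide highly available deployment, CI/CD automation, end-to-end monitoring, canary releases, log aggregation, and service governance.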

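Kubernetes network plan:

podSubnet (Pod CIDR): 10.244.0.0/16

serviceSubnet (Service CIDR): 10.96.0.0/12

Lab environment:

Operating system: Ubuntu 20.04.3

Per-node resources: 4 GB RAM / 2 CPU cores / 120 GB disk

Network: NAT

Architecture overview

The architecture has six layers: infrastructure (Ubuntu + Docker + K8s) → image management (Harbor) → automated delivery (GitLab + Jenkins + ArgoCD) → service governance (Istio + Helm) → microservices (Nginx as a stand-in service) → observability (Prometheus + Grafana + AlertManager + Alibaba Cloud SLS).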

Node plan

| Role | Hostname | IP address | OS | Core software |
|---|---|---|---|---|
| Load balancer (primary) | k8s-lb-master | 192.168.121.80 | Ubuntu 20.04 | haproxy, keepalived |
| Load balancer (standby) | k8s-lb-slave | 192.168.121.81 | Ubuntu 20.04 | haproxy, keepalived |
| Virtual IP (VIP) | - | 192.168.121.188 | - | - |
| NFS node | k8s-nfs | 192.168.121.70 | Ubuntu 20.04 | nfs-kernel-server, rpcbind |
| K8s master 1 | k8s-master1 | 192.168.121.100 | Ubuntu 20.04 | kubeadm, kubelet, kubectl, docker |
| K8s master 2 | k8s-master2 | 192.168.121.101 | Ubuntu 20.04 | kubeadm, kubelet, kubectl, docker |
| K8s master 3 | k8s-master3 | 192.168.121.102 | Ubuntu 20.04 | kubeadm, kubelet, kubectl, docker |
| K8s worker 1 | k8s-node1 | 192.168.121.200 | Ubuntu 20.04 | kubeadm, kubelet, docker |
| K8s worker 2 | k8s-node2 | 192.168.121.201 | Ubuntu 20.04 | kubeadm, kubelet, docker |
| K8s worker 3 | k8s-node3 | 192.168.121.202 | Ubuntu 20.04 | kubeadm, kubelet, docker |

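1 Base environment setup

1.1 Disable the firewall (ufw ships with Ubuntu but is inactive by default)

bash

# Stop and disable ufw

sudo systemctl stop ufw

sudo systemctl disable ufw

# Verify (success if the output shows "inactive")

sudo systemctl status ufw

1.2 Disable swap (required by Kubernetes)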
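The `sed` expression used in this section prepends `#` to every line containing `swap`, so running it a second time comments the entry twice. As a hedged alternative, the sketch below only touches uncommented lines whose fields include `swap`. The helper name `comment_swap_lines` and the temporary demo file are illustrative and not part of the original procedure; GNU `sed` (as shipped with Ubuntu) is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that are already commented are skipped, so reruns are harmless.
comment_swap_lines() {
  local file="$1"
  sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}

# Demo on a throwaway file instead of the real /etc/fstab.
demo_fstab="$(mktemp)"
printf '%s\n' \
  'UUID=1111-2222 / ext4 defaults 0 1' \
  '/swap.img none swap sw 0 0' > "$demo_fstab"

comment_swap_lines "$demo_fstab"
comment_swap_lines "$demo_fstab"   # second run changes nothing
cat "$demo_fstab"
```

On a real node the function would be pointed at `/etc/fstab` with root privileges; `sudo swapoff -a` turns swap off for the running system without waiting for a reboot.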

bash

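# Turn swap off immediately (lasts until the next reboot)

sudo swapoff -a

# Disable it permanently by commenting out the swap entries in /etc/fstab

sudo sed -i '/swap/s/^/#/' /etc/fstab

# Verify (the swap lines should now start with #)

grep swap /etc/fstab

1.3 Set the hostname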

bash

# On the primary load balancer node, for example

sudo hostnamectl set-hostname k8s-lb-master

# On master node 1

sudo hostnamectl set-hostname k8s-master1

# On worker node 1

sudo hostnamectl set-hostname k8s-node1

1.4 Configure /etc/hosts (on all nodes)
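Editing `/etc/hosts` by hand on ten machines is error-prone, so a scripted, repeatable variant may help. The sketch below appends an entry only when the hostname is not yet mapped; `add_host_entry` and the temporary demo file are hypothetical names introduced for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts-style file unless NAME is already mapped.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demo on a throwaway file instead of the real /etc/hosts.
demo_hosts="$(mktemp)"
echo '127.0.0.1 localhost' > "$demo_hosts"
add_host_entry "$demo_hosts" 192.168.121.100 k8s-master1
add_host_entry "$demo_hosts" 192.168.121.100 k8s-master1   # duplicate call is a no-op
add_host_entry "$demo_hosts" 192.168.121.188 vip
cat "$demo_hosts"
```

On a real node the same function could be called once per entry in the list below, with `/etc/hosts` as the target and root privileges.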

bash

vim /etc/hosts

192.168.121.80 k8s-lb-master

192.168.121.81 k8s-lb-slave

192.168.121.70 k8s-nfs

192.168.121.100 k8s-master1

192.168.121.101 k8s-master2

192.168.121.102 k8s-master3

192.168.121.200 k8s-node1

192.168.121.201 k8s-node2

192.168.121.202 k8s-node3

192.168.121.188 vip

192.168.121.200 harbor.example.com

1.5 Time synchronization (on all nodes)

bash

sudo apt update && sudo apt install -y chrony

sudo systemctl start chrony && sudo systemctl enable chrony

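# Verify

chronyc tracking

1.6 Configure kernel parameters (enable IP forwarding and bridge filtering, on all nodes)

bash

# Load the kernel modules needed for overlay storage, bridge filtering, and IPVS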

sudo tee /etc/modules-load.d/k8s.conf << EOF

overlay

br_netfilter

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

EOF

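# Load every module listed above right away (the file is only read at boot)

for m in overlay br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do sudo modprobe "$m"; done

# Configure the sysctl parameters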

sudo tee /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sudo sysctl --system # Apply the settings

2 Deploy HAProxy + Keepalived on the load balancer nodes

2.1 Install HAProxy and Keepalived (on both LB nodes)

bash

sudo apt update && sudo apt install -y haproxy keepalived

2.2 Configure HAProxy (identical on both LB nodes)
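The backend in this section lists one `server` line per API server. If the set of masters changes, those lines can be generated rather than typed. The following is a minimal sketch; the function name `gen_backend_servers` is made up for illustration, and the check parameters are copied from the configuration in this section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one HAProxy "server" line per API server address.
gen_backend_servers() {
  local i=1 ip
  for ip in "$@"; do
    printf 'server master%02d %s:6443 check inter 2000 fall 2 rise 2\n' "$i" "$ip"
    i=$((i + 1))
  done
}

backend_lines="$(gen_backend_servers 192.168.121.100 192.168.121.101 192.168.121.102)"
echo "$backend_lines"
```

After editing the real file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the configuration syntax before the service is restarted.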

bash

vim /etc/haproxy/haproxy.cfg

global

log /dev/log local0 warning

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners

stats timeout 30s

user haproxy

group haproxy

daemon

defaults

log global

mode tcp

option tcplog

option dontlognull

timeout connect 5000

timeout client 10000

timeout server 10000

# Load balancing for the Kubernetes API server

frontend k8s-apiserver

bind *:6443

mode tcp

option tcplog

default_backend k8s-apiserver

backend k8s-apiserver

mode tcp

option tcp-check

balance roundrobin

server master01 192.168.121.100:6443 check inter 2000 fall 2 rise 2

server master02 192.168.121.101:6443 check inter 2000 fall 2 rise 2

server master03 192.168.121.102:6443 check inter 2000 fall 2 rise 2

# Restart HAProxy and enable it at boot

sudo systemctl restart haproxy

sudo systemctl enable haproxy

# Verify

sudo systemctl status haproxy

2.3 Configure Keepalived (VIP failover)
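One point worth checking in the configuration of this section is the interaction between `weight 2` and the priorities 100 (primary) and 90 (standby). According to Keepalived's documented handling of `vrrp_script` weights, a positive weight is added while the check succeeds and a negative weight is applied while it fails. The sketch below works through that arithmetic; the function `effective_priority` and the alternative value `-20` are illustrative assumptions, not settings from the original.

```shell
#!/usr/bin/env bash
# Effective VRRP priority for one tracked script, following the rule:
#   weight > 0: added to the base priority while the script succeeds
#   weight < 0: added (i.e. subtracted) while the script fails
effective_priority() {
  local base="$1" weight="$2" script_ok="$3"   # script_ok: 1 = check passes, 0 = check fails
  if [ "$weight" -gt 0 ] && [ "$script_ok" -eq 1 ]; then
    echo $((base + weight))
  elif [ "$weight" -lt 0 ] && [ "$script_ok" -eq 0 ]; then
    echo $((base + weight))
  else
    echo "$base"
  fi
}

# Scenario: HAProxy has died on the primary, the standby is healthy.
echo "weight=2   primary=$(effective_priority 100 2 0) standby=$(effective_priority 90 2 1)"
echo "weight=-20 primary=$(effective_priority 100 -20 0) standby=$(effective_priority 90 -20 1)"
```

With `weight 2` the primary still wins (100 against 92), so under this reading the VIP would only move if Keepalived itself or the whole node goes down. A negative weight larger than the priority gap (for example `-20`, giving 80 against 90) would let an HAProxy failure alone trigger the failover.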

bash

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

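vrrp_script chk_haproxy {

    script "/usr/bin/pgrep haproxy" # Check whether HAProxy is running

    interval 2 # Check interval (seconds)

    weight 2 # Priority adjustment applied according to the check result

}

vrrp_instance VI_1 {

    state MASTER # MASTER on the primary node, BACKUP on the standby node

    interface ens33 # Replace with the actual NIC name (see "ip a")

    virtual_router_id 51 # Virtual router ID (1-255); must be identical on both LB nodes

    priority 100 # Priority; the primary must be higher than the standby (use 90 on the standby)

    advert_int 1 # Advertisement (heartbeat) interval in seconds

    authentication {

        auth_type PASS

        auth_pass 1111 # Authentication password; must be identical on both LB nodes

    }

    virtual_ipaddress {

        192.168.121.188/24 # VIP (same subnet as the nodes)

    }

    track_script {

        chk_haproxy # Attach the HAProxy check script

    }

}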

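# Restart Keepalived and enable it at boot

sudo systemctl restart keepalived

sudo systemctl enable keepalived

# Verify the VIP (run on the primary node; success if the VIP is listed)

ip a

3 Prepare the Kubernetes cluster nodes

3.1 Install the container runtime (Docker 19.03)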
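The kubeadm configuration in section 4.2 sets `cgroupDriver: systemd` for the kubelet, while a stock Docker 19.03 install typically defaults to the `cgroupfs` driver. If the two differ, the kubelet may fail to start. The sketch below writes a minimal `daemon.json` selecting the systemd driver. The helper name `write_daemon_json` and the temporary target path are assumptions for illustration; on a real node the file would be `/etc/docker/daemon.json`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal Docker daemon.json that selects the systemd cgroup driver.
write_daemon_json() {
  local target="$1"
  cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Demo: write to a temporary path rather than /etc/docker/daemon.json.
demo_daemon_json="$(mktemp)"
write_daemon_json "$demo_daemon_json"
cat "$demo_daemon_json"
```

After placing the file, `sudo systemctl restart docker` applies it, and `docker info | grep -i cgroup` shows which driver is active.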

bash

# Refresh the package index

sudo apt update

# Install the required dependencies

sudo apt install -y apt-transport-https ca-certificates curl software-properties-common

# Add Docker's official GPG key

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

# Add the Docker stable repository

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# Install Docker Engine

sudo apt update

sudo apt install -y docker-ce=5:19.03.15~3-0~ubuntu-focal docker-ce-cli=5:19.03.15~3-0~ubuntu-focal containerd.io

# Verify the installation

sudo docker --version

# Enable Docker at boot and start it

sudo systemctl enable docker

sudo systemctl start docker

3.2 Install kubeadm, kubelet, and kubectl (version 1.20.6)

bash

# Install the dependencies that let apt use HTTPS repositories

sudo apt install -y apt-transport-https ca-certificates curl gnupg2

# Import the signing key of the Alibaba Cloud Kubernetes mirror
# (pkgs.k8s.io only hosts v1.24 and later, so it cannot serve the 1.20 packages)

curl -fsSL https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo gpg --dearmor -o /etc/apt/trusted.gpg.d/kubernetes-aliyun.gpg

# Add the Alibaba Cloud Kubernetes apt repository (it carries the 1.20 packages)

echo "deb http://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update

sudo apt install -y --allow-downgrades --allow-change-held-packages kubelet=1.20.6-00 kubeadm=1.20.6-00 kubectl=1.20.6-00

3.3 Install Helm on the master nodes

bash

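# Download the Helm binary release

wget https://get.helm.sh/helm-v3.6.3-linux-amd64.tar.gz

# Extract it

tar -zxvf helm-v3.6.3-linux-amd64.tar.gz

# Move the binary into a directory on the PATH

sudo mv linux-amd64/helm /usr/local/bin/helm

# Verify the installation

helm version

4 Initialize the Kubernetes cluster with kubeadm

4.1 Pull the images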
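The individual `docker pull` commands in this section can also be driven from a single list, which keeps the repository and version in one place. The sketch below only prints the image names; the function name `list_images` is hypothetical, and the tags are the ones used in this section.

```shell
#!/usr/bin/env bash
# Image names and tags are copied from the pull commands in this section.
REPO="registry.aliyuncs.com/google_containers"
K8S_VERSION="v1.20.6"

list_images() {
  local component
  for component in kube-apiserver kube-controller-manager kube-scheduler kube-proxy; do
    echo "${REPO}/${component}:${K8S_VERSION}"
  done
  echo "${REPO}/etcd:3.4.13-0"
  echo "${REPO}/coredns:1.7.0"
  echo "${REPO}/pause:3.2"
}

# Print the list; on a node with Docker installed it can be piped to "xargs -n1 docker pull".
list_images
```

Once kubeadm is installed, `kubeadm config images list --kubernetes-version v1.20.6 --image-repository registry.aliyuncs.com/google_containers` prints the list kubeadm itself expects, which is a useful cross-check.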

bash

# Option 1: if you have an offline bundle, upload it to every cluster node and load it

docker load -i k8simage-1-20-6.tar.gz

# Option 2: pull from the Alibaba Cloud registry

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.20.6

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.6

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.20.6

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.6

docker pull registry.aliyuncs.com/google_containers/etcd:3.4.13-0

docker pull registry.aliyuncs.com/google_containers/coredns:1.7.0

docker pull registry.aliyuncs.com/google_containers/pause:3.2

# With internet access, kubeadm pulls the images automatically during init

4.2 Create the kubeadm configuration file

bash

vim kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterConfiguration

kubernetesVersion: 1.20.6

# Point at port 6443 on the VIP (the port HAProxy listens on)

controlPlaneEndpoint: "192.168.121.188:6443"

# Pod and Service networks (Calico is used as the network plugin and is installed later)

networking:

  podSubnet: "10.244.0.0/16"

  serviceSubnet: "10.96.0.0/12"

# Image repository (the same one used for the pulls above)

imageRepository: registry.aliyuncs.com/google_containers

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd

4.3 Initialize the cluster

bash

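# Run the initialization (--config selects the configuration file; --upload-certs uploads the control-plane certificates so that other masters can join)

sudo kubeadm init --config=kubeadm-config.yaml --upload-certs

# Configure kubectl for a regular user

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Verify

kubectl get nodes

4.4 Install the network plugin (Calico; nodes stay NotReady until a network plugin is installed)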

bash

kubectl apply -f https://docs.projectcalico.org/v3.18/manifests/calico.yaml

# Verify (success once all Pods are Running)

kubectl get pods -n kube-system

root@k8s-master1:~# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6949477b58-7zd4t 1/1 Running 0 33m

calico-node-9hcvf 1/1 Running 0 33m

calico-node-cbc6c 1/1 Running 0 33m

calico-node-fwhfr 1/1 Running 0 33m

calico-node-kbf2b 1/1 Running 0 33m

calico-node-mf2xz 1/1 Running 0 33m

calico-node-qwj7g 1/1 Running 0 33m

coredns-7f89b7bc75-fflvz 1/1 Running 0 36m

coredns-7f89b7bc75-rw7zs 1/1 Running 0 36m

etcd-k8s-master1 1/1 Running 0 37m

etcd-master2 1/1 Running 0 35m

etcd-master3 1/1 Running 0 34m

kube-apiserver-k8s-master1 1/1 Running 0 37m

kube-apiserver-master2 1/1 Running 0 35m

kube-apiserver-master3 1/1 Running 0 34m

kube-controller-manager-k8s-master1 1/1 Running 1 37m

kube-controller-manager-master2 1/1 Running 0 35m

kube-controller-manager-master3 1/1 Running 0 34m

kube-proxy-9g6bb 1/1 Running 0 34m

kube-proxy-bdm94 1/1 Running 0 34m

kube-proxy-kdw4s 1/1 Running 0 34m

kube-proxy-ngzwd 1/1 Running 0 35m

kube-proxy-s2g9m 1/1 Running 0 36m

kube-proxy-vxmhp 1/1 Running 0 34m

kube-scheduler-k8s-master1 1/1 Running 1 37m

kube-scheduler-master2 1/1 Running 0 35m

kube-scheduler-master3 1/1 Running 0 34m

4.5 Join the remaining master and worker nodes

After a successful initialization, kubeadm prints output like the following:

bash

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

------------------------------------master--------------------------------------------------------------

# Join command for additional master (control-plane) nodes

kubeadm join 192.168.121.188:6443 --token 1hgsqu.ptv6bxgrvdga5p8s \

--discovery-token-ca-cert-hash sha256:60d01e04f9cda3e7922cf2eb560a454f26e46074c7feac007f3fcfeb82bae964 \

--control-plane --certificate-key b76296e2db23364f74f69077749f189bb042de1a0c0c57d461efa0327b475c34

------------------------------------master--------------------------------------------------------------

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

------------------------------------work--------------------------------------------------------------

# Join command for worker nodes

kubeadm join 192.168.121.188:6443 --token 1hgsqu.ptv6bxgrvdga5p8s \

--discovery-token-ca-cert-hash sha256:60d01e04f9cda3e7922cf2eb560a454f26e46074c7feac007f3fcfeb82bae964

------------------------------------work--------------------------------------------------------------

# Run on each additional master node

kubeadm join 192.168.121.188:6443 --token 1hgsqu.ptv6bxgrvdga5p8s \

--discovery-token-ca-cert-hash sha256:60d01e04f9cda3e7922cf2eb560a454f26e46074c7feac007f3fcfeb82bae964 \

--control-plane --certificate-key b76296e2db23364f74f69077749f189bb042de1a0c0c57d461efa0327b475c34

# Run on each worker node

kubeadm join 192.168.121.188:6443 --token 1hgsqu.ptv6bxgrvdga5p8s \

--discovery-token-ca-cert-hash sha256:60d01e04f9cda3e7922cf2eb560a454f26e46074c7feac007f3fcfeb82bae964

4.6 Verify the cluster
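Reading the node table by eye works for six nodes, but a scripted check is handy when the verification is repeated. The sketch below counts rows whose STATUS column is anything other than `Ready`. The function name `count_not_ready` is hypothetical, and the sample text (including the `NotReady` row) is invented for the demo rather than taken from this cluster.

```shell
#!/usr/bin/env bash
# Count nodes whose STATUS column is not exactly "Ready" in `kubectl get nodes` output.
count_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Demo with made-up sample text; on a live cluster use: kubectl get nodes | count_not_ready
sample_nodes='NAME STATUS ROLES AGE VERSION
k8s-master1 Ready control-plane,master 40m v1.20.6
master2 Ready control-plane,master 39m v1.20.6
node1 NotReady <none> 1m v1.20.6'

not_ready="$(printf '%s\n' "$sample_nodes" | count_not_ready)"
echo "nodes not ready: $not_ready"
```

A result of 0 on the real cluster corresponds to the all-Ready listing shown in this section.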

bash

# 1. List all nodes (success if every node is Ready)

root@k8s-master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready control-plane,master 40m v1.20.6

master2 Ready control-plane,master 39m v1.20.6

master3 Ready control-plane,master 38m v1.20.6

node1 Ready work 38m v1.20.6

node2 Ready work 38m v1.20.6

node3 Ready work 38m v1.20.6

4.7 Shell completion

bash

# Install bash-completion

apt update && apt install -y bash-completion

# Add kubectl completion to ~/.bashrc

echo "source <(kubectl completion bash)" >> ~/.bashrc

# Apply the change

source ~/.bashrc

5 Deploy the NFS server (k8s-nfs node)

5.1 Install the core NFS packages

bash

root@k8s-nfs:~# apt update && apt install -y nfs-kernel-server rpcbind

5.2 Create the NFS shared directories

bash

# harbor

# Create the shared directory (the base path, e.g. /data/nfs, can be customized)

root@k8s-nfs:~# mkdir -p /data/nfs/harbor

# Set permissions so that the K8s nodes can read and write

root@k8s-nfs:~# chmod -R 777 /data/nfs/harbor

root@k8s-nfs:~# chown -R 1000:1000 /data/nfs/harbor

# gitlab

root@k8s-nfs:~# mkdir -p /data/nfs/gitlab/{rails,redis,postgres,registry,minio}

root@k8s-nfs:~# chown -R 1000:1000 /data/nfs/gitlab

root@k8s-nfs:~# chmod -R 777 /data/nfs/gitlab

# jenkins

# Create the Jenkins data directory

root@k8s-nfs:~# mkdir -p /data/nfs/jenkins

# Set permissions

root@k8s-nfs:~# chmod -R 777 /data/nfs/jenkins

root@k8s-nfs:~# chown -R 1000:1000 /data/nfs/jenkins

# monitoring

# Create the monitoring data directory

root@k8s-nfs:~# mkdir -p /data/nfs/monitoring

# Set permissions

root@k8s-nfs:~# chmod -R 777 /data/nfs/monitoring

root@k8s-nfs:~# chown -R 1000:1000 /data/nfs/monitoring

5.3 Configure the NFS export rules

Edit /etc/exports and add access rules for the K8s cluster nodes:

bash

root@k8s-nfs:/data/nfs# vim /etc/exports

# /etc/exports: the access control list for filesystems which may be exported

# to NFS clients. See exports(5).

#

# Example for NFSv2 and NFSv3:

# /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)

#

# Example for NFSv4:

# /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)

# /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)

#

# Allow the K8s cluster subnet

/data/nfs/harbor 192.168.121.0/24(rw,sync,no_root_squash,no_all_squash,insecure)

/data/nfs/gitlab 192.168.121.0/24(rw,sync,no_root_squash,no_all_squash)

/data/nfs/jenkins 192.168.121.0/24(rw,sync,no_root_squash,no_subtree_check)

/data/nfs/monitoring 192.168.121.0/24(rw,sync,no_root_squash,no_subtree_check,insecure)

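# Option reference:

# rw: read-write access

# sync: synchronous writes (data is committed to disk before the server replies)

# no_root_squash: do not map the client's root user to an anonymous user (avoids permission problems, at some cost in security)

# no_all_squash: do not map other client users to the anonymous user (this is the default)

# no_subtree_check: disable subtree checking

# insecure: accept requests from unprivileged client ports (useful with K8s container networking)

5.4 Start the NFS service and enable it at boot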

bash

root@k8s-nfs:/data/nfs# systemctl start rpcbind && systemctl enable rpcbind

root@k8s-nfs:/data/nfs# systemctl start nfs-server && systemctl enable nfs-server

# Reload the NFS exports (required after every change to /etc/exports)

root@k8s-nfs:/data/nfs# exportfs -rv

5.5 Verify the NFS server
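Four directories are exported in section 5.3, so it is worth confirming that all of them appear in the `showmount -e` listing rather than only the first. The sketch below reads such a listing on standard input and prints the expected paths that are missing. The helper name `missing_exports` and the sample listing are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `showmount -e` style output on stdin and print every
# expected export path (given as arguments) that does not appear in it.
missing_exports() {
  local listing path
  listing="$(cat)"
  for path in "$@"; do
    if ! printf '%s\n' "$listing" | awk -v p="$path" '$1 == p { found = 1 } END { exit !found }'; then
      echo "$path"
    fi
  done
}

# Demo with a made-up listing in which two exports are absent.
sample_listing='Export list for localhost:
/data/nfs/harbor 192.168.121.0/24
/data/nfs/gitlab 192.168.121.0/24'

missing="$(printf '%s\n' "$sample_listing" | missing_exports \
  /data/nfs/harbor /data/nfs/gitlab /data/nfs/jenkins /data/nfs/monitoring)"
echo "$missing"
```

On the NFS server the real listing can be piped in with `showmount -e localhost | missing_exports ...`; empty output means every expected export is present.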

bash

# List the exported directories

root@k8s-nfs:/data/nfs# showmount -e localhost

# Expected output: Export list for localhost:

# /data/nfs/harbor 192.168.121.0/24

# Check the NFS listening ports

root@k8s-nfs:/data/nfs# ss -tnlp | grep -E '111|2049'

LISTEN 0 4096 0.0.0.0:111 0.0.0.0:* users:(("rpcbind",pid=719,fd=4),("systemd",pid=1,fd=98))

LISTEN 0 64 0.0.0.0:2049 0.0.0.0:*

LISTEN 0 4096 [::]:111 [::]:* users:(("rpcbind",pid=719,fd=6),("systemd",pid=1,fd=100))

LISTEN 0 64 [::]:2049 [::]:*

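# Success if rpcbind (port 111) and nfs (port 2049) are listening

5.6 Install the NFS client on all K8s nodes

Every K8s node needs the NFS client in order to mount the shared directories:

bash

apt update && apt install -y nfs-common

# Verify connectivity from a client (run on any K8s node)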

root@node1:~# showmount -e 192.168.121.70

Export list for 192.168.121.70:

/data/nfs/harbor 192.168.121.0/24

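# Success if the /data/nfs/harbor export is listed

6 Deploy Harbor

Requirements

| Component | Minimum requirement |
|---|---|
| Kubernetes | 1.20.6 |
| Helm | 3.6.3 |
| Ingress controller | nginx-ingress (Harbor relies on Ingress to expose its HTTP/HTTPS services) |
| StorageClass (SC) | An available StorageClass (e.g. NFS/Ceph/RBD) that supports the ReadWriteMany access mode |
| Domain name | A domain (e.g. harbor.example.com) that resolves to the Ingress entry IP |
| TLS certificate | An HTTPS certificate for the domain (certificate tls.crt + private key tls.key) |

6.1 Deploy the Nginx Ingress controller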

bash

root@k8s-master1:~# mkdir -p yaml/ingress

root@k8s-master1:~# cd yaml/ingress/

# Download the official ingress-nginx v1.2.0 manifest

root@k8s-master1:~/yaml/ingress# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.2.0/deploy/static/provider/baremetal/deploy.yaml

root@k8s-master1:~/yaml/ingress# ls

deploy.yaml

root@k8s-master1:~/yaml/ingress# mv deploy.yaml ingress-nginx-controller-daemonset.yaml

# Edit the manifest

root@k8s-master1:~/yaml/ingress# vim ingress-nginx-controller-daemonset.yaml

-------------------------------

# Comment out the Service

#apiVersion: v1
#kind: Service
#metadata:
#  labels:
#    app.kubernetes.io/component: controller
#    app.kubernetes.io/instance: ingress-nginx
#    app.kubernetes.io/name: ingress-nginx
#    app.kubernetes.io/part-of: ingress-nginx
#    app.kubernetes.io/version: 1.2.0
#  name: ingress-nginx-controller
#  namespace: ingress-nginx
#spec:
#  ipFamilies:
#  - IPv4
#  ipFamilyPolicy: SingleStack
#  ports:
#  - appProtocol: http
#    name: http
#    port: 80
#    protocol: TCP
#    targetPort: http
#  - appProtocol: https
#    name: https
#    port: 443
#    protocol: TCP
#    targetPort: https
#  selector:
#    app.kubernetes.io/component: controller
#    app.kubernetes.io/instance: ingress-nginx
#    app.kubernetes.io/name: ingress-nginx
#  type: NodePort

# Change the resource kind to DaemonSet so the controller runs on every node, and use the host network so it can be reached at <node hostname>:<port>

apiVersion: apps/v1
#kind: Deployment
kind: DaemonSet
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.2.0
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
    spec:
      hostNetwork: true # Use the host network
      hostPID: true # Share the host PID namespace

------------------------------------

# Apply the manifest

root@k8s-master1:~/yaml/ingress# kubectl apply -f ingress-nginx-controller-daemonset.yaml

# Check the Pod status

root@k8s-master1:~/yaml/ingress# kubectl get pod -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-z76r7 0/1 Completed 0 94s 10.244.104.3 node2 <none> <none>

ingress-nginx-admission-patch-fbd6p 0/1 Completed 0 94s 10.244.166.131 node1 <none> <none>

ingress-nginx-controller-84rqq 1/1 Running 0 94s 192.168.121.200 node1 <none> <none>

ingress-nginx-controller-rb5vv 1/1 Running 0 94s 192.168.121.201 node2 <none> <none>

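ingress-nginx-controller-wrdlc 1/1 Running 0 94s 192.168.121.202 node3 <none> <none>

6.2 Deploy the NFS dynamic provisioner (NFS Provisioner) on Kubernetes

Harbor's Helm chart requests its storage through PVCs, so an NFS provisioner is needed to create the backing volumes on demand (a plain static NFS export cannot satisfy PVCs automatically).

Create nfs-provisioner.yaml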

bash

root@k8s-master1:~# mkdir /root/yaml/harbor

root@k8s-master1:~# cd /root/yaml/harbor

root@k8s-master1:~/yaml/harbor# vim nfs-provisioner.yaml

---
# 1. Create the ServiceAccount (the identity the provisioner runs as)
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: kube-system
---
# 2. Create the RBAC rules (permissions the provisioner needs)
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "delete", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
# 3. Deploy the NFS provisioner Deployment
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner-harbor # Provisioner name (referenced by the StorageClass below)
            - name: NFS_SERVER
              value: 192.168.121.70 # NFS server IP
            - name: NFS_PATH
              value: /data/nfs/harbor # NFS shared directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.121.70 # NFS server IP
            path: /data/nfs/harbor # NFS shared directory
---
# 4. Create the NFS StorageClass (the key piece, named nfs-storage-harbor)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage-harbor # Must match the storageClass in Harbor's values.yaml
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner-harbor # Must match PROVISIONER_NAME above
parameters:
  archiveOnDelete: "false" # Delete the NFS directory when the PVC is deleted (no leftovers)
reclaimPolicy: Delete # Reclaim policy (Delete/Retain)
allowVolumeExpansion: true # Allow PVC expansion

6.3 Deploy the NFS Provisioner

bash

root@k8s-master1:~/yaml/harbor# kubectl apply -f nfs-provisioner.yaml

serviceaccount/nfs-client-provisioner unchanged

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner unchanged

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner unchanged

deployment.apps/nfs-client-provisioner unchanged

storageclass.storage.k8s.io/nfs-storage unchanged

root@k8s-master1:~/yaml/harbor# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6949477b58-7zd4t 1/1 Running 1 15h

calico-node-9hcvf 1/1 Running 1 15h

calico-node-cbc6c 1/1 Running 1 15h

calico-node-fwhfr 1/1 Running 1 15h

calico-node-kbf2b 1/1 Running 1 15h

calico-node-mf2xz 1/1 Running 1 15h

calico-node-qwj7g 1/1 Running 1 15h

coredns-7f89b7bc75-fflvz 1/1 Running 1 15h

coredns-7f89b7bc75-rw7zs 1/1 Running 1 15h

etcd-k8s-master1 1/1 Running 1 15h

etcd-master2 1/1 Running 1 15h

etcd-master3 1/1 Running 1 15h

kube-apiserver-k8s-master1 1/1 Running 1 15h

kube-apiserver-master2 1/1 Running 1 15h

kube-apiserver-master3 1/1 Running 1 15h

kube-controller-manager-k8s-master1 1/1 Running 2 15h

kube-controller-manager-master2 1/1 Running 1 15h

kube-controller-manager-master3 1/1 Running 1 15h

kube-proxy-9g6bb 1/1 Running 1 15h

kube-proxy-bdm94 1/1 Running 1 15h

kube-proxy-kdw4s 1/1 Running 1 15h

kube-proxy-ngzwd 1/1 Running 1 15h

kube-proxy-s2g9m 1/1 Running 1 15h

kube-proxy-vxmhp 1/1 Running 1 15h

kube-scheduler-k8s-master1 1/1 Running 2 15h

kube-scheduler-master2 1/1 Running 1 15h

kube-scheduler-master3 1/1 Running 1 15h

nfs-client-provisioner-56cdd8f647-2qdf7 1/1 Running 0 43m

# Check the Pod status (wait for STATUS=Running)

6.4 Verify the StorageClass
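Listing the StorageClass shows that it exists, but not that provisioning works end to end. A throwaway PVC is one way to check that. The sketch below only writes the manifest to a temporary file; the claim name `nfs-test-claim` and the `default` namespace are made up for this check, and the StorageClass name is the one defined in nfs-provisioner.yaml (`nfs-storage-harbor`).

```shell
#!/usr/bin/env bash
# Write a small test PVC manifest; the claim name "nfs-test-claim" is hypothetical.
test_pvc="$(mktemp)"
cat > "$test_pvc" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-test-claim
  namespace: default
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nfs-storage-harbor
  resources:
    requests:
      storage: 1Mi
EOF

echo "manifest written to $test_pvc"
# On the cluster:
#   kubectl apply -f "$test_pvc"
#   kubectl get pvc nfs-test-claim      # STATUS should become Bound
#   kubectl delete -f "$test_pvc"
```

If the claim stays `Pending`, the provisioner Pod's logs and the `provisioner` field of the StorageClass are the first places to look.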

bash

root@k8s-master1:~/yaml/harbor# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-storage k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate true 44m

6.5 Deploy Harbor

6.5.1 Create the Harbor namespace

bash

root@k8s-master1:~/yaml/harbor# kubectl create namespace harbor

6.5.2 Create the TLS certificate Secret
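The certificate generated in this section identifies the host only through its CN. Clients built with Go 1.15 or later ignore the CN and require a subjectAltName, so a certificate that also carries a SAN is likely to be more portable. The sketch below is a hedged variant of the same `openssl req` call with `-addext` (available from OpenSSL 1.1.1); the temporary working directory is an assumption for the demo.

```shell
#!/usr/bin/env bash
# Generate a self-signed certificate that carries a subjectAltName entry.
# Requires OpenSSL 1.1.1 or newer for -addext.
workdir="$(mktemp -d)"
if command -v openssl >/dev/null 2>&1; then
  openssl req -x509 -nodes -days 3650 -newkey rsa:2048 \
    -keyout "$workdir/tls.key" -out "$workdir/tls.crt" \
    -subj "/CN=harbor.example.com/O=harbor-ca" \
    -addext "subjectAltName=DNS:harbor.example.com" 2>/dev/null
  # Show the SAN section to confirm it was embedded.
  openssl x509 -in "$workdir/tls.crt" -noout -text | grep -A1 'Subject Alternative Name'
else
  echo "openssl not found; skipping demo"
fi
```

The resulting `tls.crt` and `tls.key` can be passed to `kubectl create secret tls` exactly as in this section.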

bash

root@k8s-master1:~/yaml/harbor# mkdir harbor-ca

# Generate a self-signed certificate

root@k8s-master1:~/yaml/harbor/harbor-ca# openssl req -x509 -nodes -days 3650 -newkey rsa:2048 \

-keyout tls.key -out tls.crt \

-subj "/CN=harbor.example.com/O=harbor-ca"

Generating a RSA private key

.................+++++

........................+++++

writing new private key to 'tls.key'

-----

root@k8s-master1:~/yaml/harbor# ls

nfs-provisioner.yaml tls.crt tls.key

# Create the Secret

root@k8s-master1:~/yaml/harbor# kubectl create secret tls harbor-tls --cert=tls.crt --key=tls.key -n harbor

secret/harbor-tls created

6.5.3 Add the Harbor Helm repository

bash

# Add the official Harbor Helm repository

root@k8s-master1:~/yaml/harbor# helm repo add harbor https://helm.goharbor.io

# Refresh the repository index (to pick up the latest versions)

root@k8s-master1:~/yaml/harbor# helm repo update

# List the Harbor chart versions available for deployment

root@k8s-master1:~/yaml/harbor# helm search repo harbor/harbor --versions

6.5.4 Customize harbor-values.yaml

bash

root@k8s-master1:~/yaml/harbor# vim harbor-values.yaml

# Global settings
global:
  # StorageClass used for the persistent volumes
  storageClass: "nfs-storage-harbor" # StorageClass name
# Exposure settings
expose:
  type: ingress # Expose the service through Ingress (recommended for production)
  tls:
    enabled: true # Enable HTTPS
    secretName: "harbor-tls" # Name of the TLS Secret created earlier
    notarySecretName: "harbor-tls" # Notary uses the same certificate
  ingress:
    hosts:
      core: harbor.example.com # Harbor domain
    className: "nginx" # IngressClass name (nginx by default)
# External URL
externalURL: https://harbor.example.com
# Persistent storage settings
persistence:
  enabled: true # Enable persistence
  resourcePolicy: "keep" # Keep the PVCs when the Helm release is deleted
  persistentVolumeClaim:
    # Image registry storage
    registry:
      storageClass: "nfs-storage-harbor"
      size: 20Gi # Replace with the size you actually need
    # Storage for the other components (adjust as needed)
    chartmuseum:
      storageClass: "nfs-storage-harbor"
      size: 10Gi
    jobservice:
      storageClass: "nfs-storage-harbor"
      size: 1Gi
    database:
      storageClass: "nfs-storage-harbor"
      size: 5Gi
    redis:
      storageClass: "nfs-storage-harbor"
      size: 1Gi
    trivy:
      storageClass: "nfs-storage-harbor"
      size: 5Gi
# Administrator password (must be changed to a strong password in production)
harborAdminPassword: "123456" # Prefer a mix of upper case, lower case, digits, and symbols
# Component switches (disable the components you do not need)
components:
  registry:
    enabled: true
  core:
    enabled: true
  portal:
    enabled: true
  jobservice:
    enabled: true
  database:
    enabled: true # Built-in database (for testing); use an external PostgreSQL in production
  redis:
    enabled: true # Built-in Redis (for testing); use an external Redis in production
  trivy:
    enabled: true # Vulnerability scanning (optional; disabling it saves resources)
  notary:
    enabled: false # Image signing (optional)
  chartmuseum:
    enabled: false # Helm chart repository (optional)

6.5.5 Deploy Harbor with Helm
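The Pod listing later in this section still shows `harbor-chartmuseum` and `harbor-notary-*` running, which suggests that the `components:` block in harbor-values.yaml is not a key the chart reads. In the 1.8.x chart the per-component switches appear to live at the top level (`trivy.enabled`, `notary.enabled`, `chartmuseum.enabled`). That layout is an assumption here and should be confirmed with `helm show values harbor/harbor --version 1.8.2`. A minimal sketch of an override file under that assumption:

```shell
#!/usr/bin/env bash
# Assumed key layout for chart 1.8.x: component switches live at the top level.
# Confirm against: helm show values harbor/harbor --version 1.8.2
overrides="$(mktemp)"
cat > "$overrides" <<'EOF'
trivy:
  enabled: true
notary:
  enabled: false
chartmuseum:
  enabled: false
EOF

cat "$overrides"
# Possible use, in addition to harbor-values.yaml:
#   helm install harbor harbor/harbor -n harbor --version 1.8.2 \
#     -f harbor-values.yaml -f "$overrides"
```

Helm merges multiple `-f` files from left to right, so the override file only needs to contain the keys that differ.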

bash

# Run the Helm install (specifying the namespace and the custom values file)

root@k8s-master1:~/yaml/harbor# helm install harbor harbor/harbor -f harbor-values.yaml -n harbor --version 1.8.2

# Check the deployment

# If the Ingress is not bound to the nginx class, edit it (kubectl edit) and add ingressClassName: nginx

root@k8s-master1:~/yaml/harbor# kubectl get ingress -n harbor

NAME CLASS HOSTS ADDRESS PORTS AGE

harbor-ingress nginx harbor.example.com 192.168.121.200,192.168.121.201,192.168.121.202 80, 443 65s

harbor-ingress-notary <none> notary.harbor.domain 192.168.121.200,192.168.121.201,192.168.121.202 80, 443 65s

root@k8s-master1:~/yaml/harbor# kubectl get pod -n harbor

NAME READY STATUS RESTARTS AGE

harbor-chartmuseum-5f4c65cc4c-s6v9z 1/1 Running 0 9m28s

harbor-core-69b9745c7d-xzd4k 1/1 Running 0 9m28s

harbor-database-0 1/1 Running 0 9m17s

harbor-jobservice-d4bf7764-tjldp 1/1 Running 0 9m27s

harbor-notary-server-f784f55d9-hxbjk 1/1 Running 0 9m27s

harbor-notary-signer-5ccc446846-5jpts 1/1 Running 0 9m27s

harbor-portal-86bb5f6dd9-hpxqb 1/1 Running 0 9m27s

harbor-redis-0 1/1 Running 0 9m27s

harbor-registry-7cc497d875-flclr 2/2 Running 0 9m27s

harbor-trivy-0 1/1 Running 0 9m27s

6.5.6 Log in to the Harbor web UI

bash

# Look up the NodePort mapped to port 443 of the Ingress controller

root@k8s-master1:~/yaml/harbor# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.102.66.112 <none> 80:32106/TCP,443:31698/TCP 32m

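ingress-nginx-controller-admission ClusterIP 10.98.253.150 <none> 443/TCP 107m

On the Windows host, edit the hosts file so that the domain resolves:

192.168.121.101 harbor.example.com

Open https://harbor.example.com:31698/ in a browser.

Username: admin

Password: 123456

6.6 Configure Docker on the cluster nodes to trust the Harbor registry

Download the CA certificate from the Harbor web UI.

bash

# Upload it to the master1 node

# Create the Docker certificate directory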

root@k8s-master1:~/yaml/harbor/harbor-ca# mkdir -p /etc/docker/certs.d/harbor.example.com

root@k8s-master1:~/yaml/harbor/harbor-ca# cp ca.crt /etc/docker/certs.d/harbor.example.com/

root@k8s-master1:~/yaml/harbor/harbor-ca# systemctl restart docker

root@k8s-master1:~/yaml/harbor/harbor-ca# docker login harbor.example.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

6.7 Test image push and pull

bash

root@k8s-master1:~/yaml/harbor/harbor-ca# docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

119d43eec815: Pull complete

700146c8ad64: Pull complete

d989100b8a84: Pull complete

500799c30424: Pull complete

10b68cfefee1: Pull complete

57f0dd1befe2: Pull complete

eaf8753feae0: Pull complete

Digest: sha256:c881927c4077710ac4b1da63b83aa163937fb47457950c267d92f7e4dedf4aec

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

root@k8s-master1:~/yaml/harbor/harbor-ca# docker tag nginx:latest harbor.example.com/library/nginx:latest

root@k8s-master1:~/yaml/harbor/harbor-ca# docker push harbor.example.com/library/nginx:latest

The push refers to repository [harbor.example.com/library/nginx]

d9d3f8c27ad7: Pushed

4b53e01dba29: Pushed

3b4fce0e490d: Pushed

4c34f6878173: Pushed

547c913b4108: Pushed

e84c0e25063e: Pushed

e50a58335e13: Pushed

latest: digest: sha256:a6dd519f4cc2f69a8f049f35b56aec2e30b7ddfedee12976c9e289c07b421804 size: 1778

root@node1:~# docker pull harbor.example.com/library/nginx:latest

latest: Pulling from library/nginx

380ebb5ce8c1: Pull complete

700146c8ad64: Pull complete

d989100b8a84: Pull complete

500799c30424: Pull complete

10b68cfefee1: Pull complete

57f0dd1befe2: Pull complete

eaf8753feae0: Pull complete

Digest: sha256:a6dd519f4cc2f69a8f049f35b56aec2e30b7ddfedee12976c9e289c07b421804

Status: Downloaded newer image for harbor.example.com/library/nginx:latest

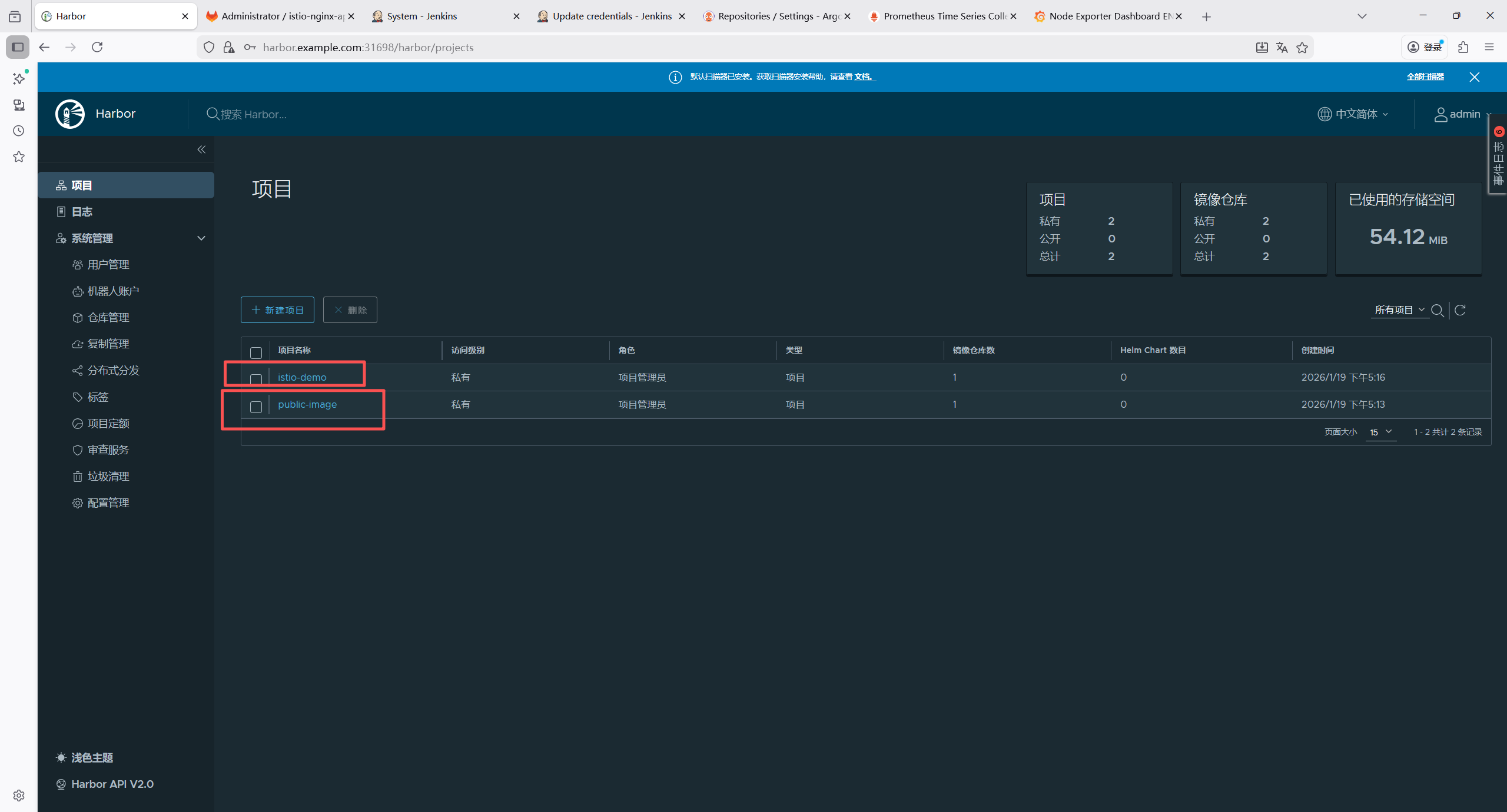

harbor.example.com/library/nginx:latest6.8 创建镜像仓库

7 部署CI/CD流水线**: GitLab, Jenkins, ArgoCD**

7.1 部署Gitlab

7.1.1 部署 NFS 动态存储供给器(NFS Provisioner)

bash

root@k8s-master1:~/yaml# mkdir gitlab && cd gitlab

root@k8s-master1:~/yaml/gitlab# vim nfs-provisioner.yaml

---
# 1. Create the ServiceAccount (the identity the provisioner runs as)
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner-gitlab
  namespace: kube-system
---
# 2. Create the RBAC rules (permissions the provisioner needs)
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-gitlab-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "delete", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner-gitlab
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner-gitlab
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-gitlab-runner
  apiGroup: rbac.authorization.k8s.io
---
# 3. Deploy the NFS provisioner Deployment
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner-gitlab
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner-gitlab
  template:
    metadata:
      labels:
        app: nfs-client-provisioner-gitlab
    spec:
      serviceAccountName: nfs-client-provisioner-gitlab
      containers:
        - name: nfs-client-provisioner-gitlab
          image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner-gitlab # Provisioner name (referenced by the StorageClass below)
            - name: NFS_SERVER
              value: 192.168.121.70 # NFS server IP
            - name: NFS_PATH
              value: /data/nfs/gitlab # NFS shared directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.121.70 # NFS server IP
            path: /data/nfs/gitlab # NFS shared directory
---
# 4. Create the NFS StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage-gitlab # Must match the storageClassName used by the GitLab PVCs
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner-gitlab # Must match PROVISIONER_NAME above
parameters:
  archiveOnDelete: "false" # Delete the NFS directory when the PVC is deleted (no leftovers)
reclaimPolicy: Delete # Reclaim policy (Delete/Retain)
allowVolumeExpansion: true # Allow PVC expansion

7.1.2 Create the GitLab PVCs

bash

root@k8s-master1:~/yaml/gitlab# vim gitlab-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gitlab-config
  namespace: gitlab
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs-storage-gitlab
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gitlab-data
  namespace: gitlab
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs-storage-gitlab
  resources:
    requests:
      storage: 20Gi # adjust to actual needs
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gitlab-logs
  namespace: gitlab
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs-storage-gitlab
  resources:
    requests:
      storage: 20Gi

7.1.3 Create the GitLab Deployment

bash

root@k8s-master1:~/yaml/gitlab# vim gitlab-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gitlab
  namespace: gitlab
spec:
  replicas: 1 # run GitLab as a single instance (multiple instances need extra configuration)
  selector:
    matchLabels:
      app: gitlab
  template:
    metadata:
      labels:
        app: gitlab
    spec:
      affinity: # node affinity
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution: # hard requirement, must be satisfied
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/hostname # match on the hostname label
                    operator: In
                    values:
                      - node2 # target node; must equal that node's kubernetes.io/hostname label (check with kubectl get nodes --show-labels)
      containers:
        - name: gitlab
          image: gitlab/gitlab-ce:16.8.1-ce.0 # pin a stable version instead of latest
          imagePullPolicy: IfNotPresent
          env:
            # Core setting: external URL of GitLab
            # Note: the official gitlab-ce image documents GITLAB_OMNIBUS_CONFIG (e.g. external_url '...') for this;
            # verify that the variable below is actually honored by your image version
            - name: GITLAB_EXTERNAL_URL
              value: "https://gitlab.example.com" # domain must match the Ingress host; HTTPS recommended
            # Time zone (optional, recommended)
            - name: TZ
              value: "Asia/Shanghai"
            # Database password (the bundled PostgreSQL is used by default; an external database needs extra configuration)
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: gitlab-postgres-secret
                  key: password
          ports:
            - containerPort: 80
              name: http
            - containerPort: 443
              name: https
            - containerPort: 22
              name: ssh
          volumeMounts:
            - name: gitlab-config
              mountPath: /etc/gitlab
            - name: gitlab-data
              mountPath: /var/opt/gitlab
            - name: gitlab-logs
              mountPath: /var/log/gitlab
      volumes:
        - name: gitlab-config
          persistentVolumeClaim:
            claimName: gitlab-config
        - name: gitlab-data
          persistentVolumeClaim:
            claimName: gitlab-data
        - name: gitlab-logs
          persistentVolumeClaim:
            claimName: gitlab-logs

7.1.4 Create the GitLab Service

bash

root@k8s-master1:~/yaml/gitlab# vim gitlab-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: gitlab
  namespace: gitlab
spec:
  selector:
    app: gitlab
  type: ClusterIP # cluster-internal only; external access goes through the Ingress
  ports:
    - name: http
      port: 80
      targetPort: 80
    - name: https
      port: 443
      targetPort: 443
    - name: ssh
      port: 22
      targetPort: 22

7.1.5 Create the Ingress (bind the domain to the Nginx controller)

bash

root@k8s-master1:~/yaml/gitlab# vim gitlab-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: gitlab
  namespace: gitlab
  annotations:
    # Use the Nginx Ingress controller
    kubernetes.io/ingress.class: "nginx"
    # Redirect HTTP to HTTPS (only takes effect once the Ingress has a tls section)
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    # Maximum client request body size (needed for GitLab uploads and pushes)
    nginx.ingress.kubernetes.io/proxy-body-size: "10g"
    # Timeouts in seconds (keeps long-running connections from being cut)
    nginx.ingress.kubernetes.io/proxy-read-timeout: "3600"
    nginx.ingress.kubernetes.io/proxy-send-timeout: "3600"
spec:
  rules:
    - host: gitlab.example.com # bound domain
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: gitlab
                port:
                  number: 80

7.1.6 Apply the manifests

bash

# 1. Create the PVCs
root@k8s-master1:~/yaml/gitlab# kubectl apply -f gitlab-pvc.yaml

# 2. Create the Deployment
root@k8s-master1:~/yaml/gitlab# kubectl apply -f gitlab-deployment.yaml

# 3. Create the Service
root@k8s-master1:~/yaml/gitlab# kubectl apply -f gitlab-service.yaml

# 4. Create the Ingress
root@k8s-master1:~/yaml/gitlab# kubectl apply -f gitlab-ingress.yaml

7.1.7 Check the deployment status

bash

# Check that the PVCs are bound (STATUS is Bound)
root@k8s-master1:~/yaml/gitlab# kubectl get pvc -n gitlab
NAME            STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS         AGE
gitlab-config   Bound    pvc-32e7e321-9ac3-4cf1-88e1-9ad008923929   10Gi       RWO            nfs-storage-gitlab   91m
gitlab-data     Bound    pvc-157268e1-468a-4d82-8311-a69fc52bb0d4   20Gi       RWO            nfs-storage-gitlab   91m
gitlab-logs     Bound    pvc-fc4b83ea-2009-44e2-bcf0-c95b37c1daf0   20Gi       RWO            nfs-storage-gitlab   91m

# Check that the Pod is running (STATUS is Running)
root@k8s-master1:~/yaml/gitlab# kubectl get pods -n gitlab
NAME                     READY   STATUS    RESTARTS   AGE
gitlab-dc87455cc-vgg4c   1/1     Running   0          12m

# Check the Service and the Ingress
root@k8s-master1:~/yaml/gitlab# kubectl get svc -n gitlab
NAME     TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                 AGE
gitlab   ClusterIP   10.110.211.47   <none>        80/TCP,443/TCP,22/TCP   91m

root@k8s-master1:~/yaml/gitlab# kubectl get ingress -n gitlab
NAME     CLASS   HOSTS                ADDRESS                                           PORTS   AGE
gitlab   nginx   gitlab.example.com   192.168.121.200,192.168.121.201,192.168.121.202   80      91m

7.1.8 Get the initial GitLab administrator password

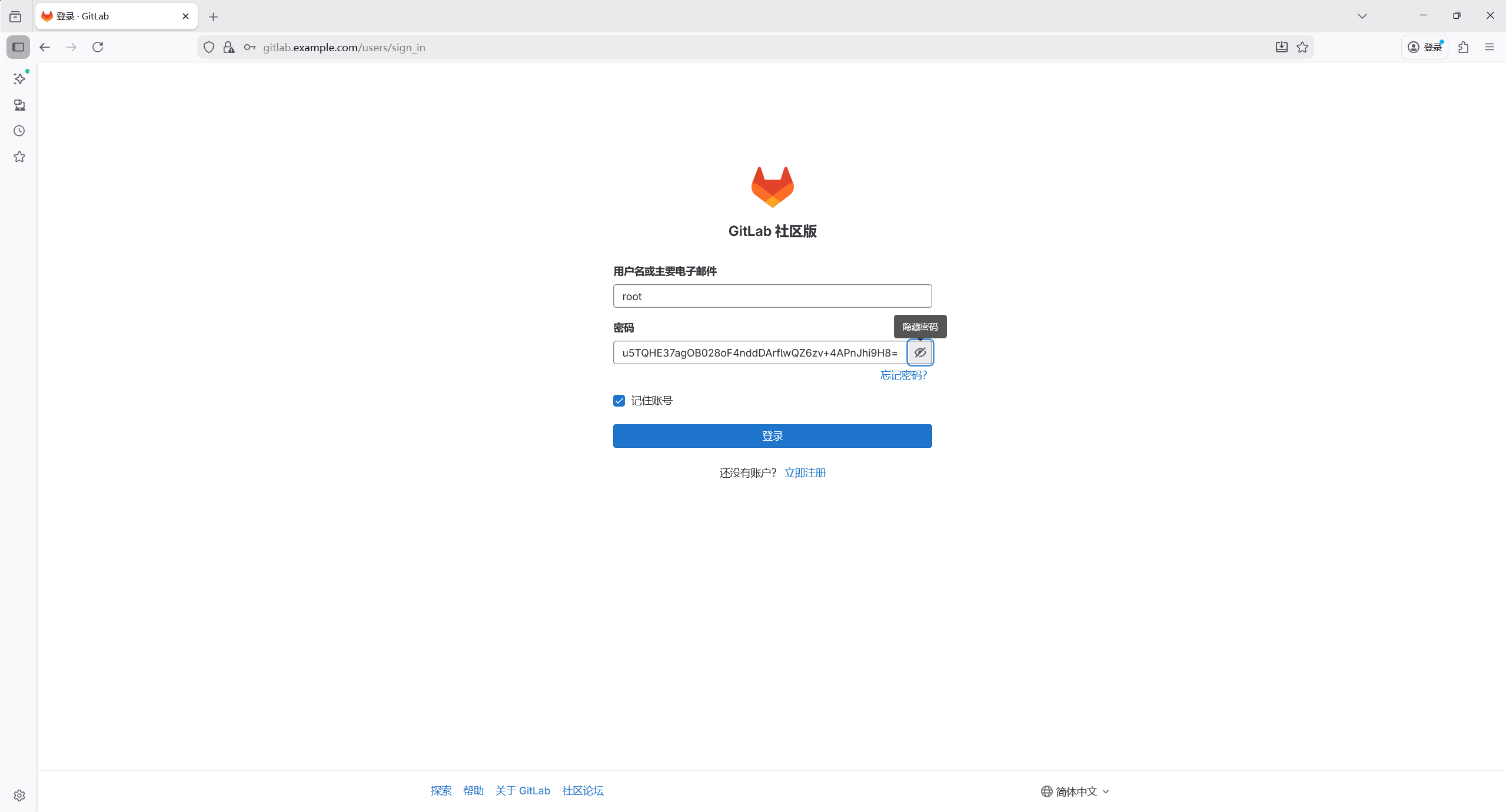

After GitLab starts, the initial root password is stored inside the Pod in /etc/gitlab/initial_root_password. Read it with the following command:

bash

root@k8s-master1:~/yaml/gitlab# kubectl exec -it gitlab-dc87455cc-vgg4c -n gitlab -- cat /etc/gitlab/initial_root_password

# WARNING: This value is valid only in the following conditions

# 1. If provided manually (either via `GITLAB_ROOT_PASSWORD` environment variable or via `gitlab_rails['initial_root_password']` setting in `gitlab.rb`, it was provided before database was seeded for the first time (usually, the first reconfigure run).

# 2. Password hasn't been changed manually, either via UI or via command line.

#

# If the password shown here doesn't work, you must reset the admin password following https://docs.gitlab.com/ee/security/reset_user_password.html#reset-your-root-password.

Password: u5TQHE37agOB028oF4nddDArflwQZ6zv+4APnJhi9H8=

# NOTE: This file will be automatically deleted in the first reconfigure run after 24 hours.

7.1.9 Access GitLab

Name resolution for gitlab.example.com has to be added to the hosts file on the Windows host.

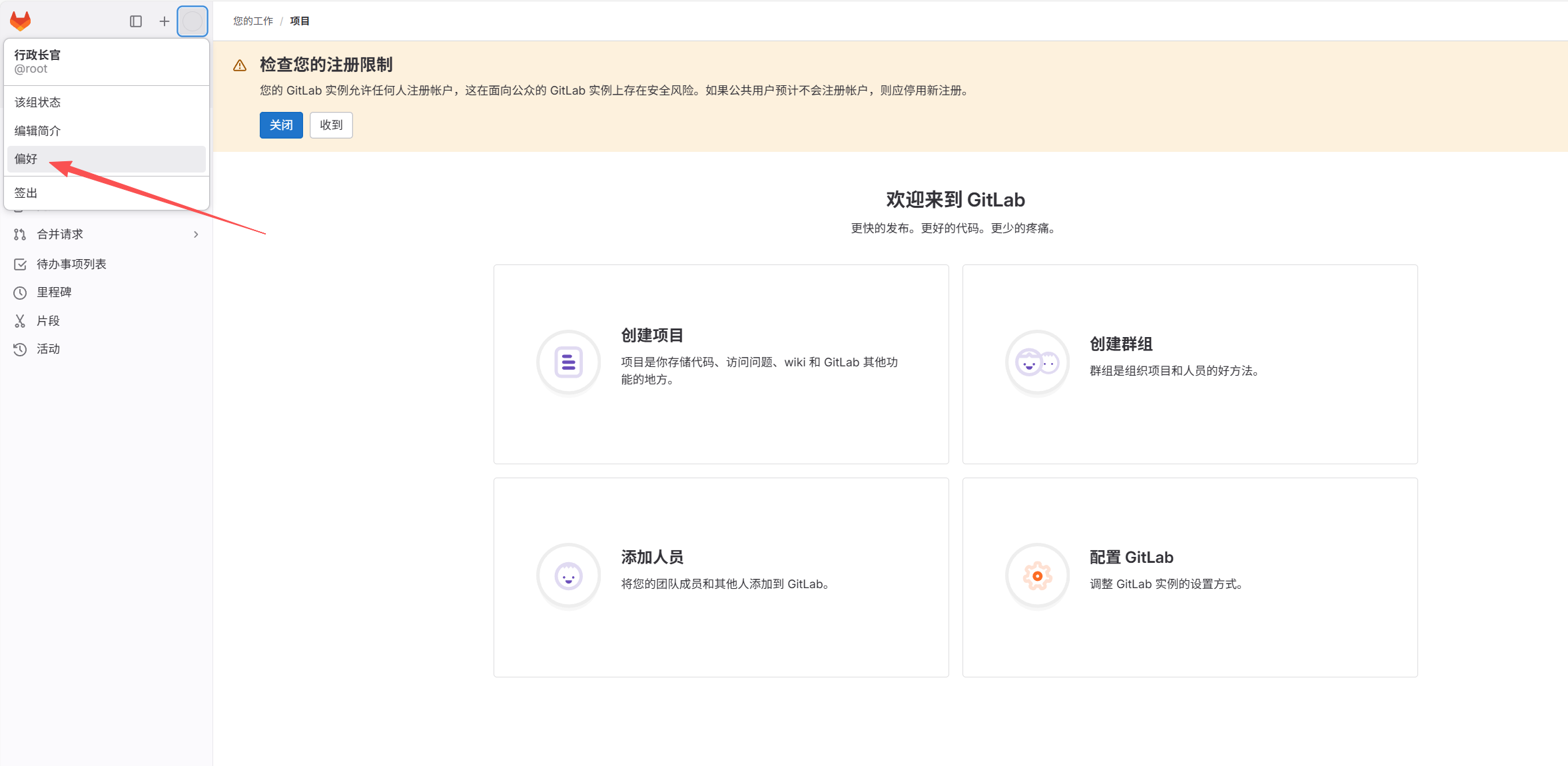

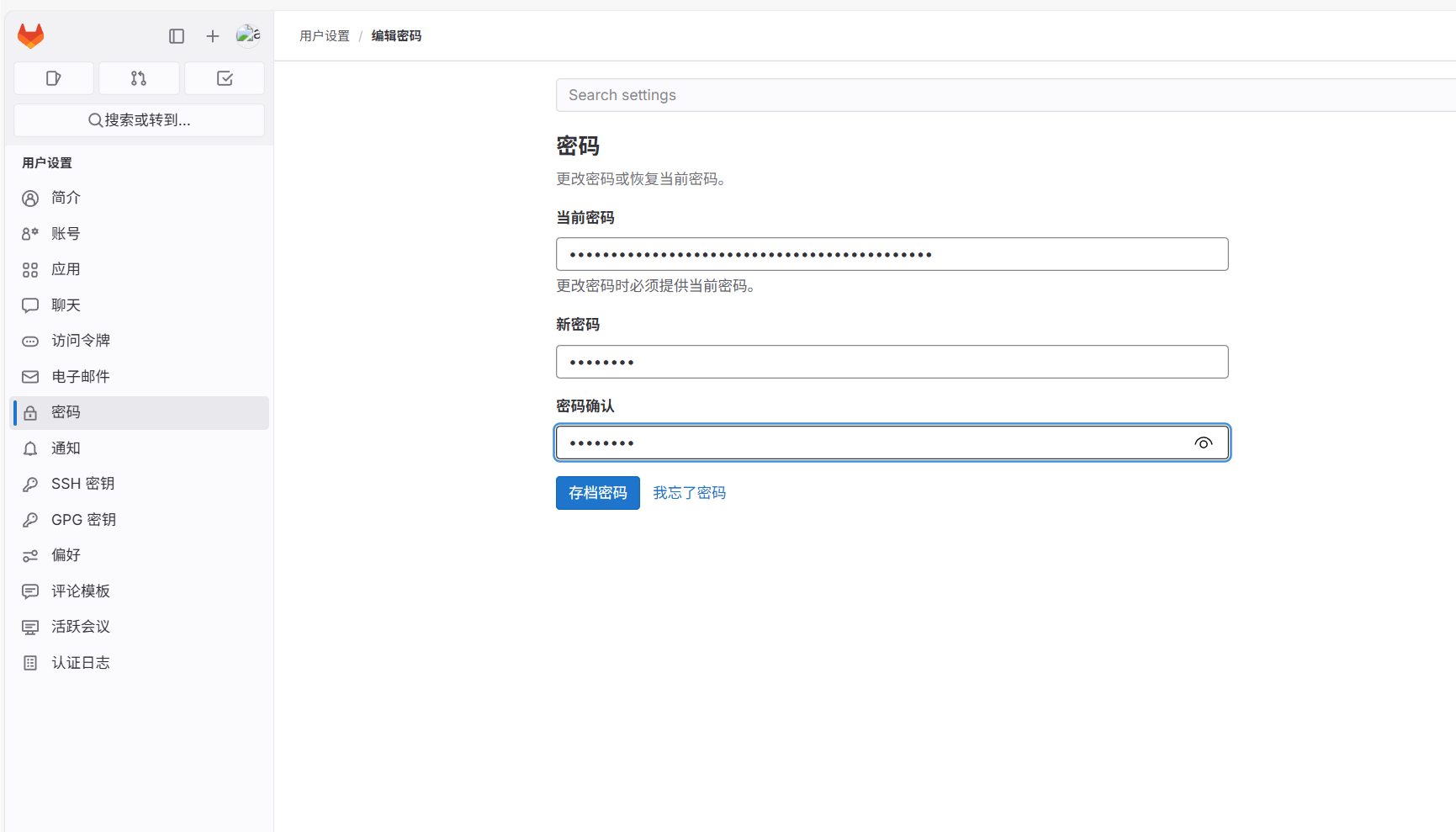

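Open gitlab.example.com in a browser and log in as root with the initial password shown above. Changing the administrator password right after the first login is recommended.

Change the password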

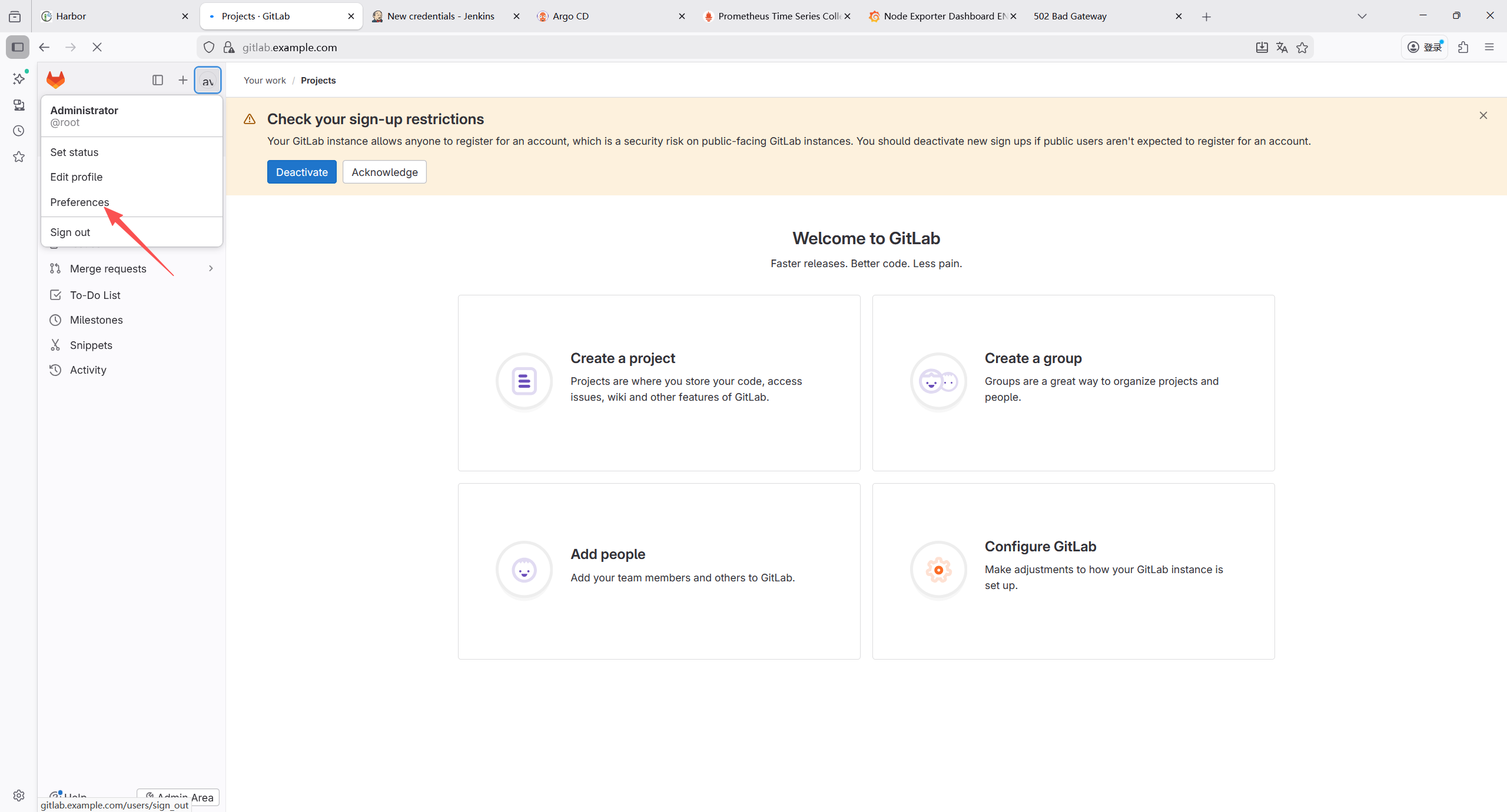

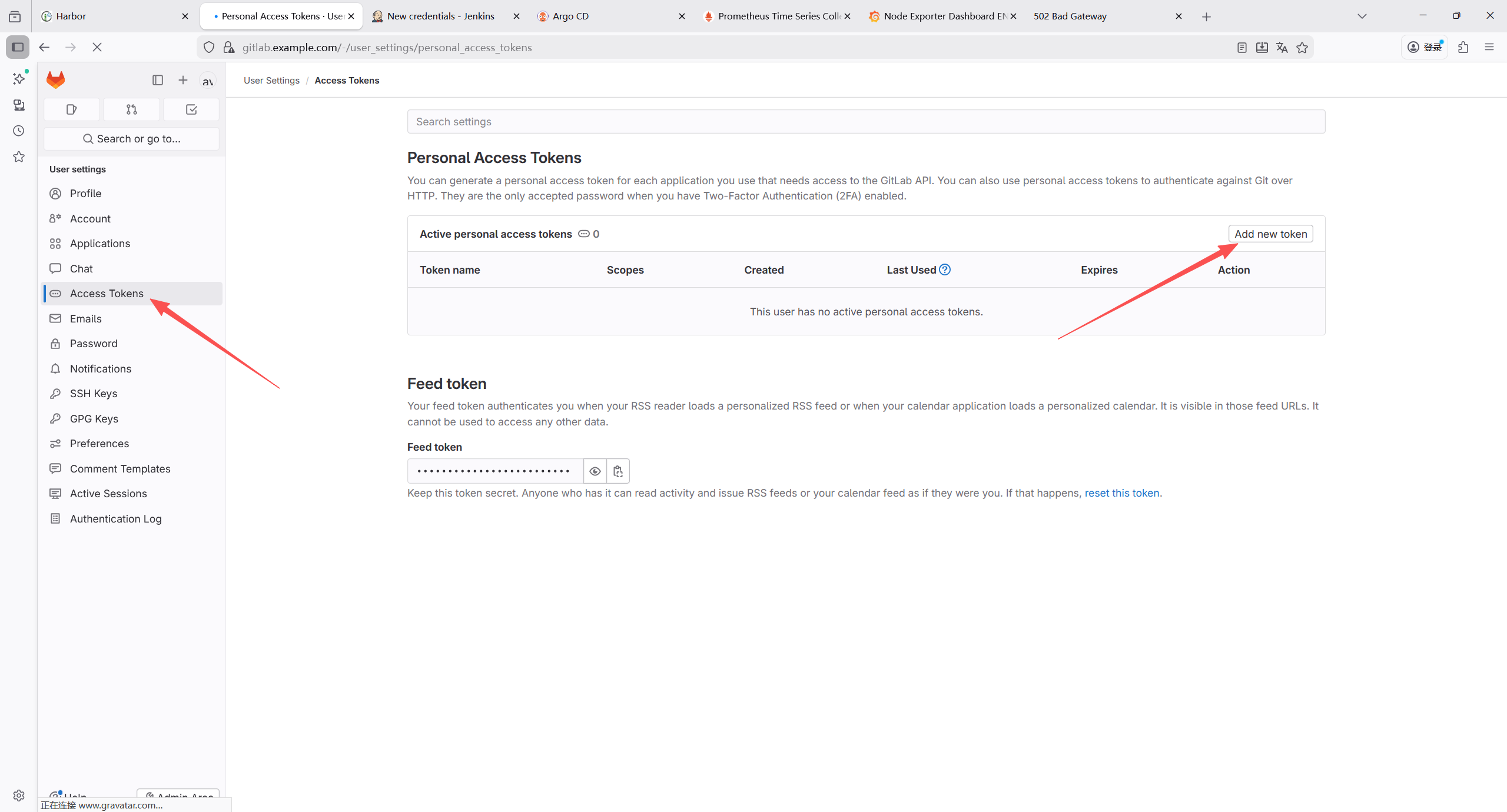

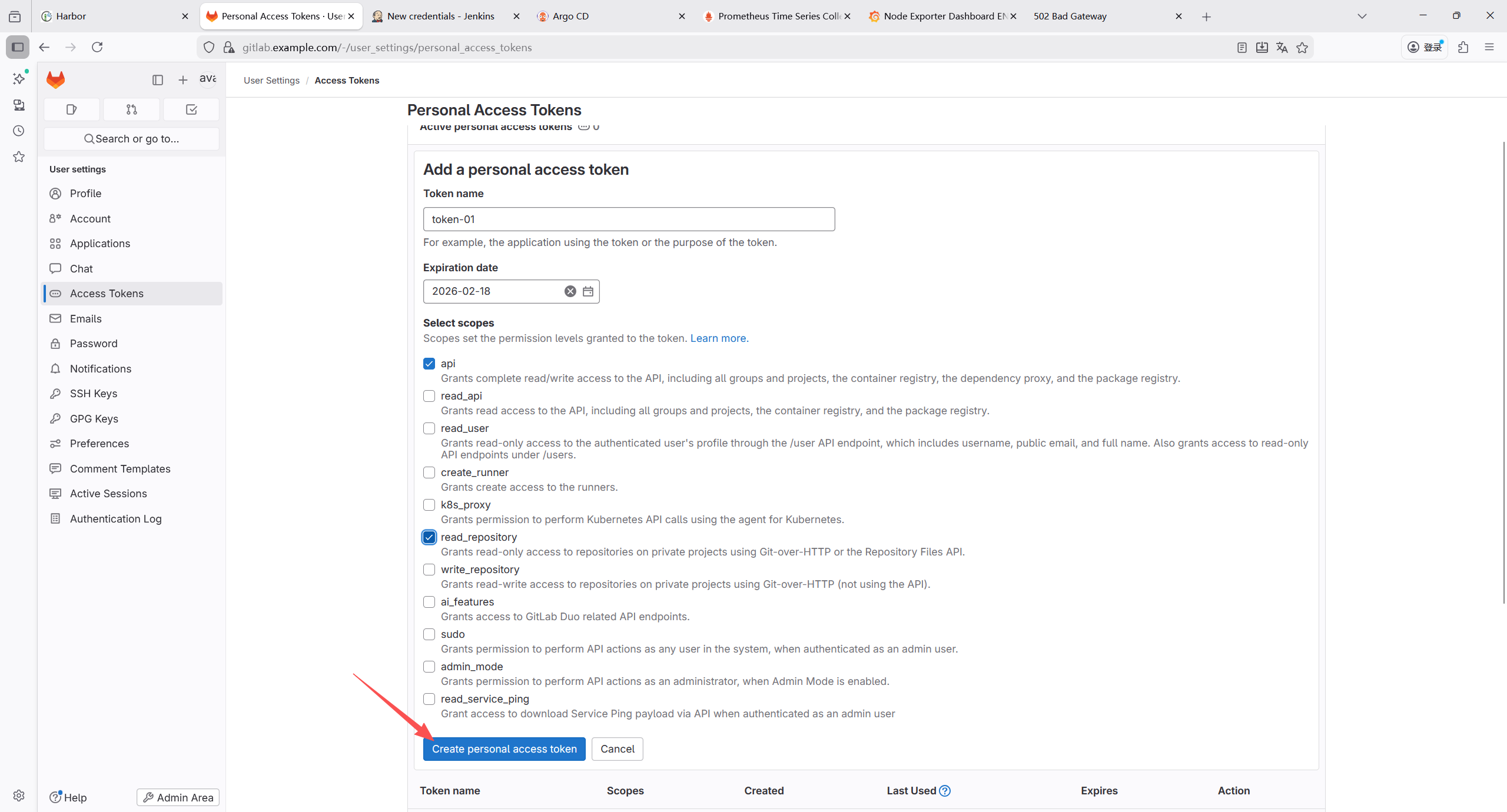

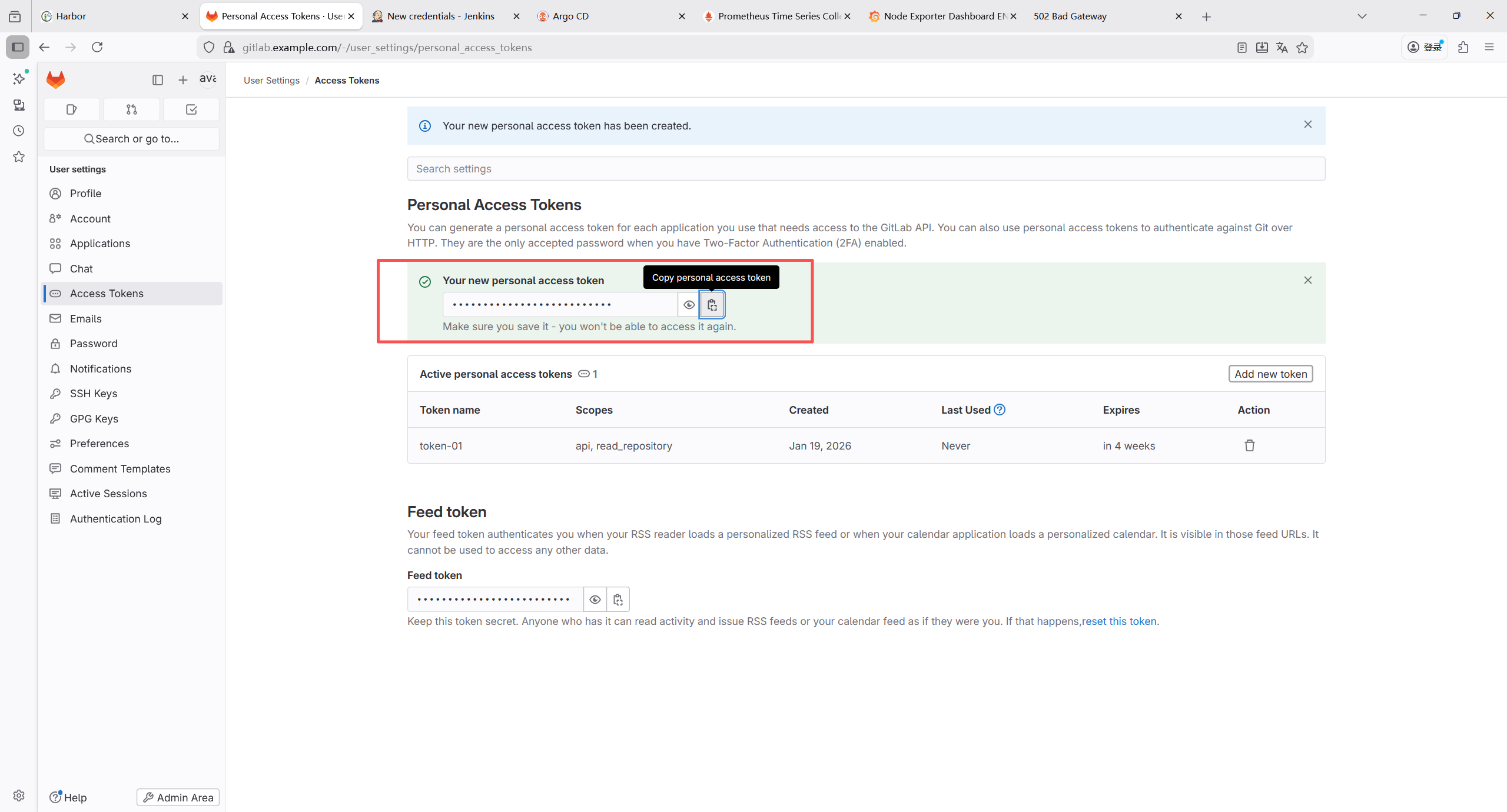

7.1.10 Generate a personal access token

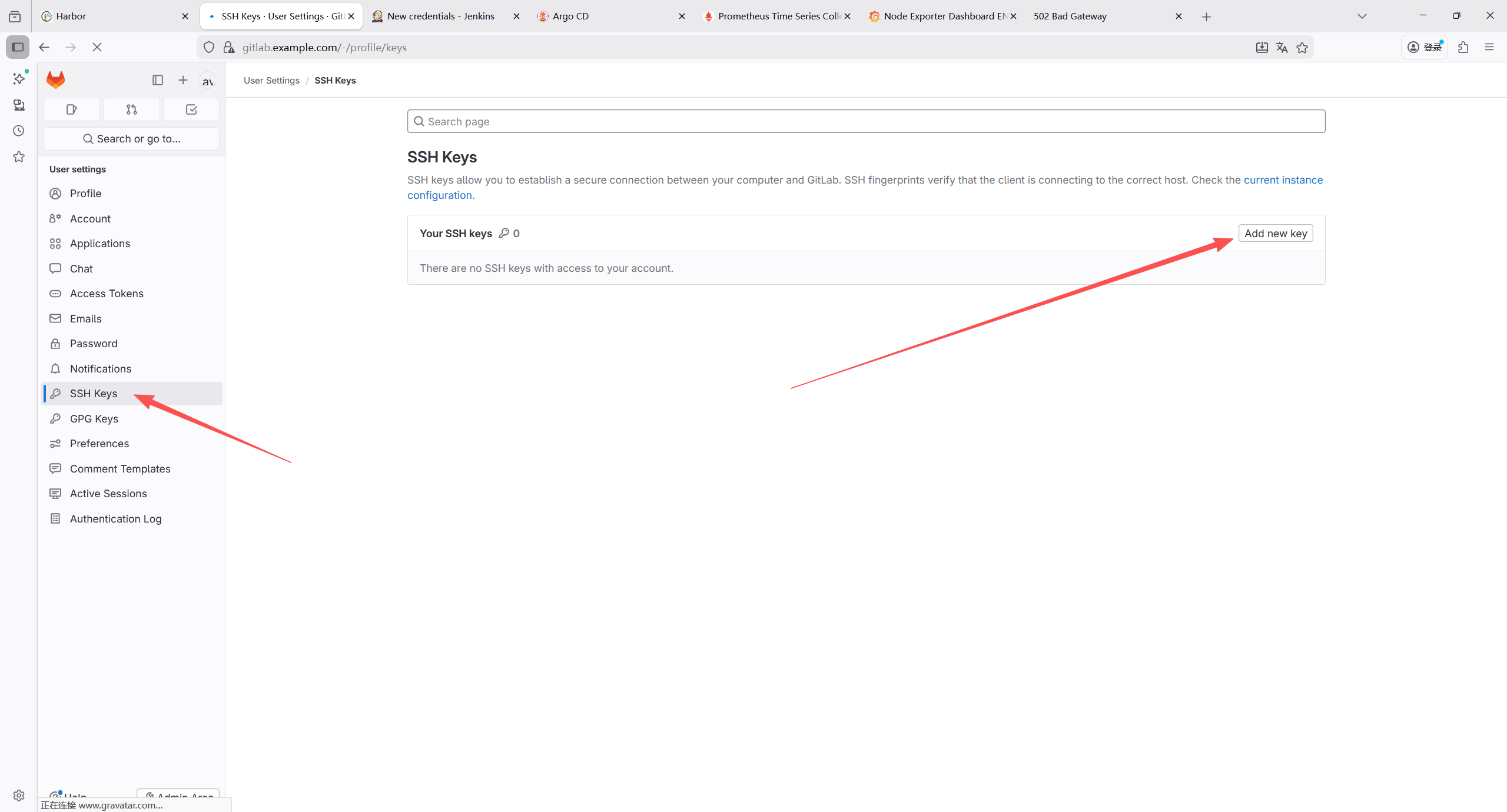

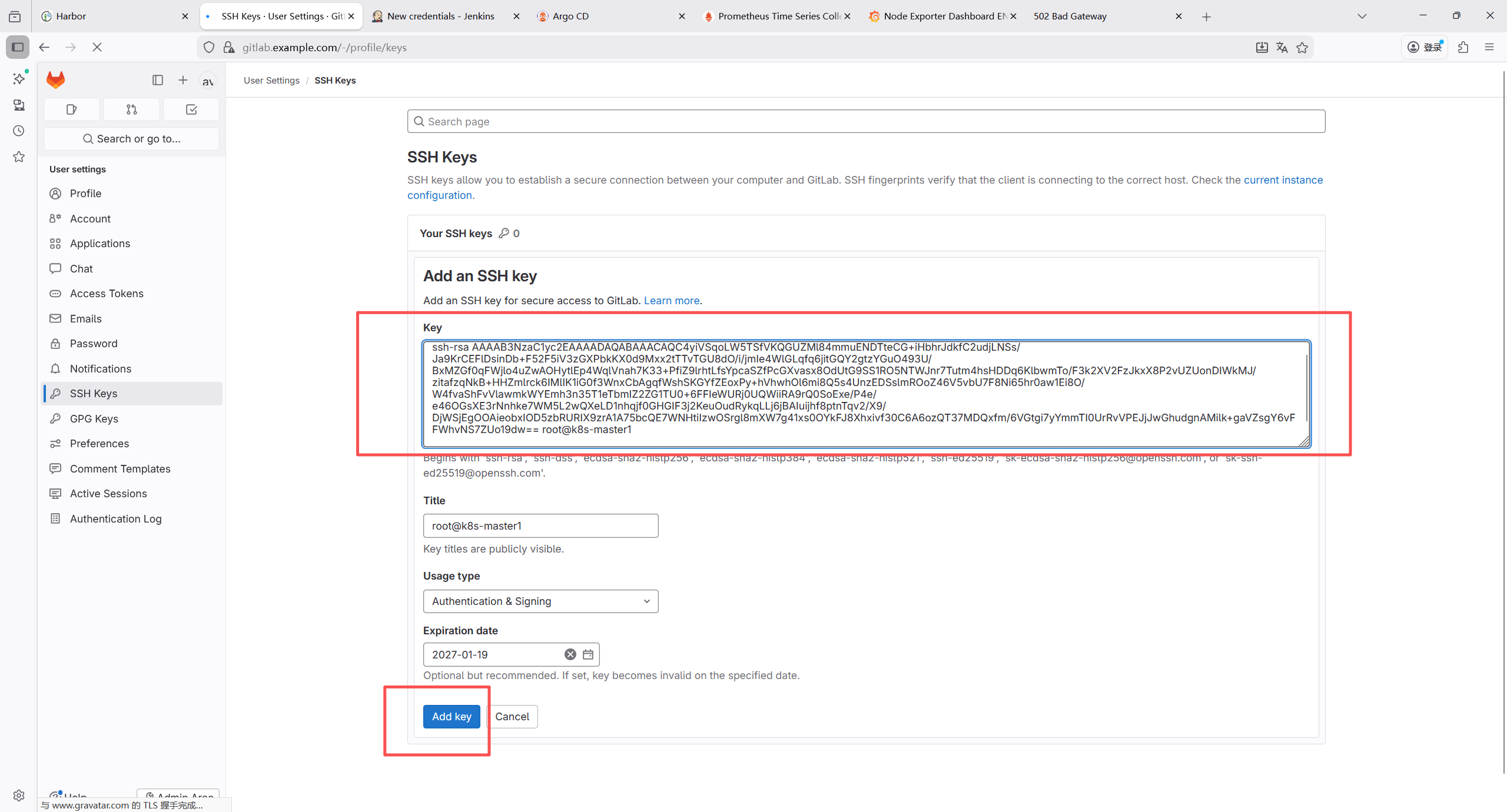

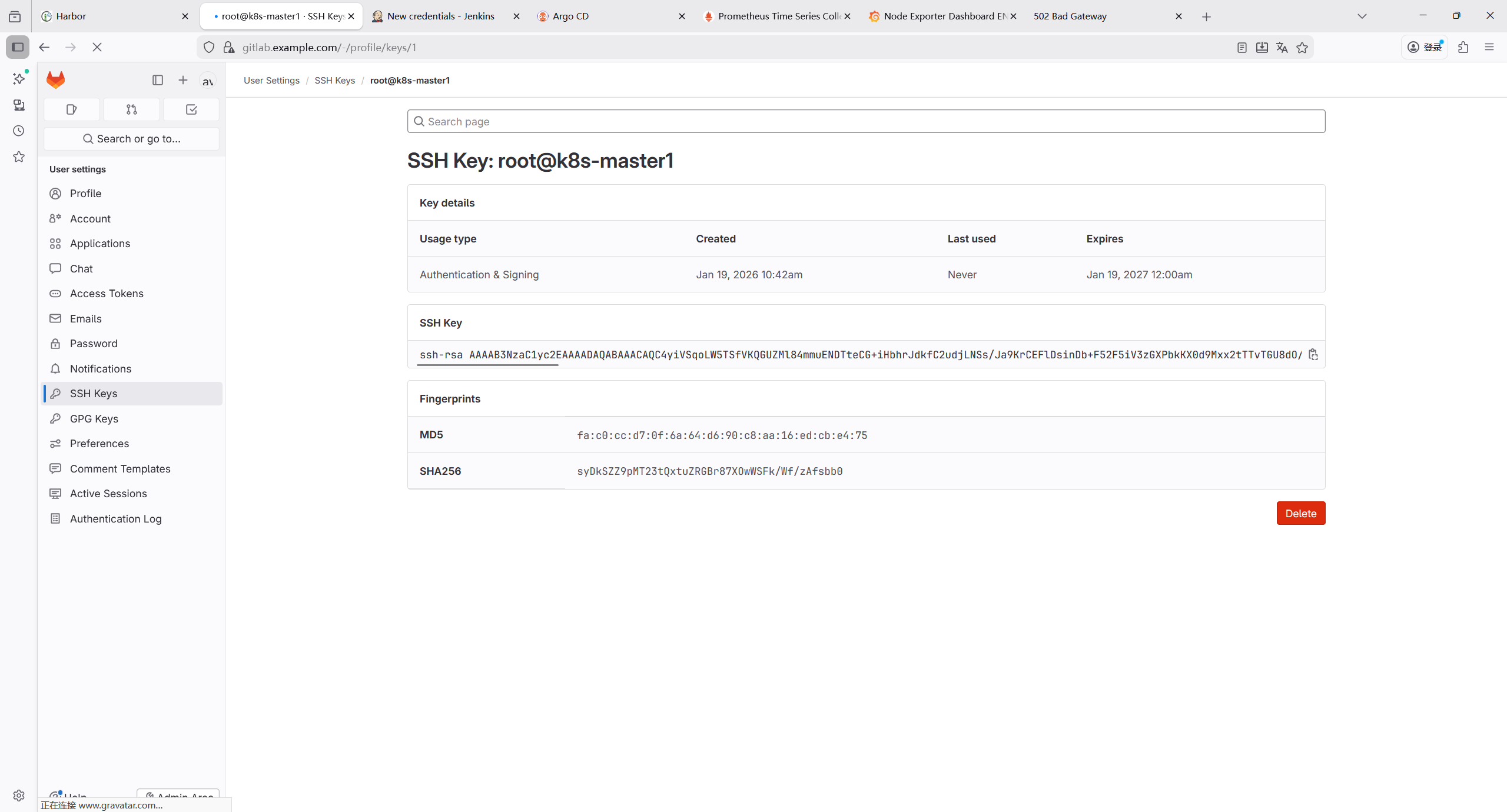

7.1.11 Configure passwordless SSH authentication on the management node

bash

root@k8s-master1:~# ssh-keygen -t rsa -b 4096

root@k8s-master1:~# cat ~/.ssh/id_rsa.pub

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQC4yiVSqoLW5TSfVKQGUZMl84mmuENDTteCG+iHbhrJdkfC2udjLNSs/Ja9KrCEFlDsinDb+F52F5iV3zGXPbkKX0d9Mxx2tTTvTGU8dO/i/jmIe4WlGLqfq6jitGQY2gtzYGuO493U/BxMZGf0qFWjlo4uZwAOHytlEp4WqlVnah7K33+PfiZ9lrhtLfsYpcaSZfPcGXvasx8OdUtG9SS1RO5NTWJnr7Tutm4hsHDDq6KlbwmTo/F3k2XV2FzJkxX8P2vUZUonDIWkMJ/zitafzqNkB+HHZmlrck6IMlIK1iG0f3WnxCbAgqfWshSKGYfZEoxPy+hVhwhOl6mi8Q5s4UnzEDSslmROoZ46V5vbU7F8Ni65hr0aw1Ei8O/W4fvaShFvVlawmkWYEmh3n35T1eTbmIZ2ZG1TU0+6FFIeWURj0UQWiiRA9rQ0SoExe/P4e/e46OGsXE3rNnhke7WM5L2wQXeLD1nhqjf0GHGIF3j2KeuOudRykqLLj6jBAIuijhf8ptnTqv2/X9/DjWSjEgOOAieobxIOD5zbRURIX9zrA1A75bcQE7WNHtiIzwOSrgl8mXW7g41xs0OYkFJ8Xhxivf30C6A6ozQT37MDQxfm/6VGtgi7yYmmTI0UrRvVPEJjJwGhudgnAMilk+gaVZsgY6vFFWhvNS7ZUo19dw== root@k8s-master1

7.2 Deploy Jenkins

7.2.1 Deploy the NFS dynamic storage provisioner (NFS Provisioner)

bash

root@k8s-master1:~/yaml# mkdir jenkins && cd jenkins

root@k8s-master1:~/yaml/jenkins# vim nfs-provisioner.yaml

---
# 1. Create the ServiceAccount (the identity the permissions are granted to)
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner-jenkins
  namespace: kube-system
---
# 2. Create the RBAC rules the provisioner needs
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-jenkins-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "delete", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner-jenkins
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner-jenkins
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-jenkins-runner
  apiGroup: rbac.authorization.k8s.io
---
# 3. Deploy the NFS provisioner Deployment
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner-jenkins
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner-jenkins
  template:
    metadata:
      labels:
        app: nfs-client-provisioner-jenkins
    spec:
      serviceAccountName: nfs-client-provisioner-jenkins
      containers:
        - name: nfs-client-provisioner-jenkins
          image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner-jenkins # provisioner name (referenced by the StorageClass below)
            - name: NFS_SERVER
              value: 192.168.121.70 # NFS server IP
            - name: NFS_PATH
              value: /data/nfs/jenkins # NFS export directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.121.70 # NFS server IP
            path: /data/nfs/jenkins # NFS export directory
---
# 4. Create the NFS StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage-jenkins # must match the storageClassName used by the Jenkins PVC
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner-jenkins # must match PROVISIONER_NAME above
parameters:
  archiveOnDelete: "false" # remove the NFS subdirectory when the PVC is deleted (no archived copy is left behind)
reclaimPolicy: Delete # reclaim policy (Delete/Retain)
allowVolumeExpansion: true # allow PVC expansion

# Apply the manifest
root@k8s-master1:~/yaml/jenkins# kubectl apply -f nfs-provisioner.yaml

7.2.2 Create the Jenkins PVC (backed by nfs-storage-jenkins)

bash

root@k8s-master1:~/yaml/jenkins# vim jenkins-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-pvc
  namespace: jenkins
spec:
  accessModes:
    - ReadWriteMany # NFS supports read-write access from multiple nodes
  resources:
    requests:
      storage: 10Gi # adjust as needed
  storageClassName: nfs-storage-jenkins # the StorageClass created above

root@k8s-master1:~/yaml/jenkins# kubectl apply -f jenkins-pvc.yaml

# Verify that the PVC is bound (STATUS is Bound)
root@k8s-master1:~/yaml/jenkins# kubectl get pvc -n jenkins
NAME          STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
jenkins-pvc   Bound    pvc-89ce598e-e60a-4968-b0b6-92cc95808ac3   10Gi       RWX            nfs-storage-jenkins   5m17s

7.2.3 Create the Jenkins Service (backend for the Ingress)

bash

root@k8s-master1:~/yaml/jenkins# vim jenkins-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: jenkins-service
  namespace: jenkins
spec:
  selector:
    app: jenkins
  ports:
    - port: 8080
      targetPort: 8080
  type: ClusterIP # cluster-internal only; exposed externally through the Ingress

root@k8s-master1:~/yaml/jenkins# kubectl apply -f jenkins-service.yaml

# Verify that the Service was created
root@k8s-master1:~/yaml/jenkins# kubectl get svc -n jenkins
NAME              TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
jenkins-service   ClusterIP   10.104.191.198   <none>        8080/TCP   5m56s

7.2.4 Create the Jenkins Deployment

bash

root@k8s-master1:~/yaml/jenkins# vim jenkins-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
  namespace: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      app: jenkins
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      securityContext:
        fsGroup: 1000 # matches the GID of the jenkins user
        supplementalGroups: [1001] # GID of the docker group on the node, needed for docker.sock access (1001 here; often 999, check with getent group docker)
      containers:
        - name: jenkins
          image: jenkins/jenkins:2.528.2
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8080 # web UI port
            - containerPort: 50000 # agent port
          #resources:
          #  limits:
          #    cpu: "2"
          #    memory: "4Gi"
          #  requests:
          #    cpu: "1"
          #    memory: "2Gi"
          volumeMounts:
            - name: jenkins-data
              mountPath: /var/jenkins_home # Jenkins data directory (the main mount point)
            - name: docker-sock
              mountPath: /var/run/docker.sock # same path as on the host
              readOnly: false # must be writable, otherwise Docker cannot be driven
            # Mount the host's docker binary
            - name: docker-bin
              mountPath: /usr/bin/docker
              readOnly: true
          env:
            - name: JAVA_OPTS # JVM heap settings, to avoid running out of memory
              value: "-Xms1g -Xmx2g"
      volumes:
        - name: jenkins-data
          persistentVolumeClaim:
            claimName: jenkins-pvc # the PVC created above
        - name: docker-sock
          hostPath:
            path: /var/run/docker.sock # docker.sock on the host
            type: Socket # a UNIX socket must already exist at this path
        # Optional: volume for the docker binary
        - name: docker-bin
          hostPath:
            path: /usr/bin/docker # docker client on the host
            type: File # a file must already exist at this path

root@k8s-master1:~/yaml/jenkins# kubectl apply -f jenkins-deployment.yaml

# Verify that the Pod is running (STATUS is Running; startup can take 1-2 minutes)
root@k8s-master1:~/yaml/jenkins# kubectl get pods -n jenkins
NAME                       READY   STATUS    RESTARTS   AGE
jenkins-69b4f8c66b-4zwhs   1/1     Running   0          5m57s

7.2.5 Configure Ingress Nginx (expose the Jenkins web UI)

bash

root@k8s-master1:~/yaml/jenkins# vim jenkins-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: jenkins-ingress
  namespace: jenkins
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: / # rewrite rule
    nginx.ingress.kubernetes.io/ssl-redirect: "false" # HTTPS redirect off for now (enable when needed)
    nginx.ingress.kubernetes.io/proxy-body-size: "100m" # allow large uploads (Jenkins plugin installation)
spec:
  ingressClassName: nginx # class name of the Ingress Nginx controller (nginx by default)
  rules:
    - host: jenkins.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: jenkins-service
                port:
                  number: 8080

root@k8s-master1:~/yaml/jenkins# kubectl apply -f jenkins-ingress.yaml

# Verify that the Ingress was created
root@k8s-master1:~/yaml/jenkins# kubectl get ingress -n jenkins
NAME              CLASS   HOSTS                 ADDRESS                                           PORTS   AGE
jenkins-ingress   nginx   jenkins.example.com   192.168.121.200,192.168.121.201,192.168.121.202   80      7m19s

7.2.6 Access and initialize Jenkins

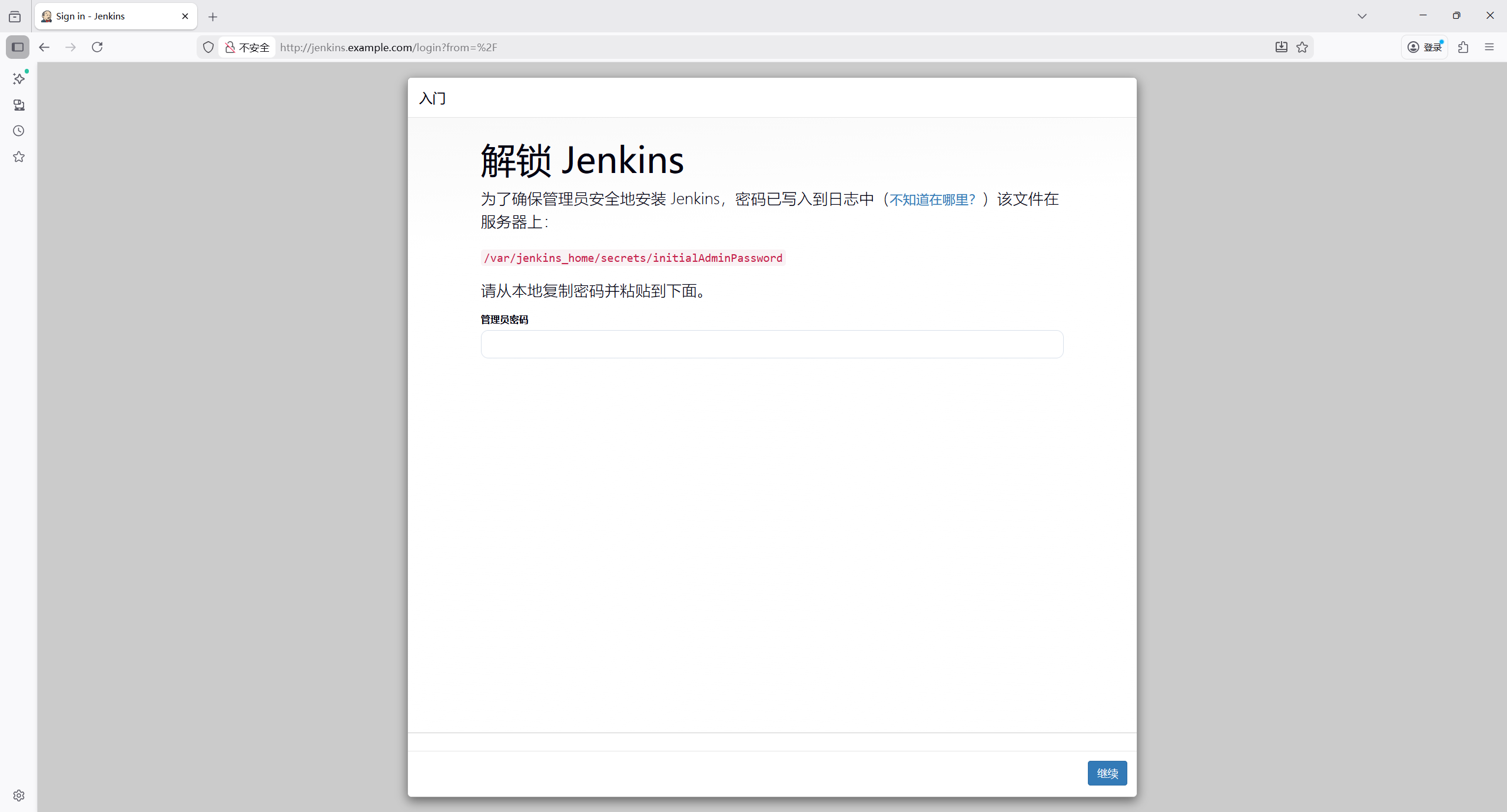

1. Get the initial Jenkins administrator password

After Jenkins starts, the initial password is stored in the Pod at /var/jenkins_home/secrets/initialAdminPassword. Read it with the following command:

bash

root@k8s-master1:~/yaml/jenkins# kubectl exec -it jenkins-69b4f8c66b-4zwhs -n jenkins -- cat /var/jenkins_home/secrets/initialAdminPassword

55db431945824038b02511a845df35942.访问 Jenkins Web 页面

宿主机访问需要在hosts文件里面配置域名解析

192.168.121.201 jenkins.example.com

打开浏览器,访问配置的 Ingress 域名(jenkins.example.com),或 Ingress IP + 端口(如192.168.1.101:80`):

- 输入第一步获取的初始密码,点击 "继续"。

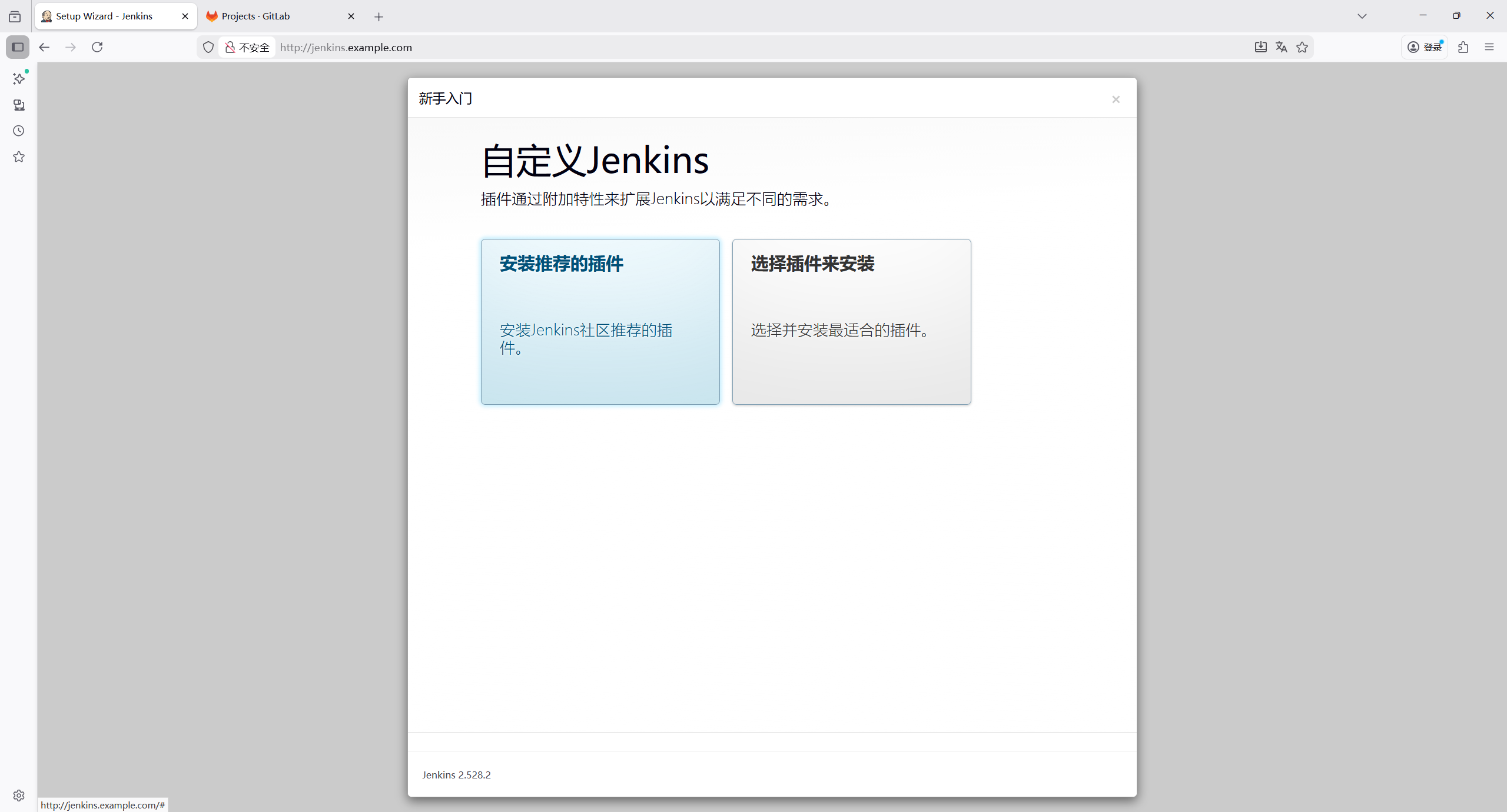

- 选择 "安装推荐的插件"(等待插件安装完成,可能需要几分钟)。

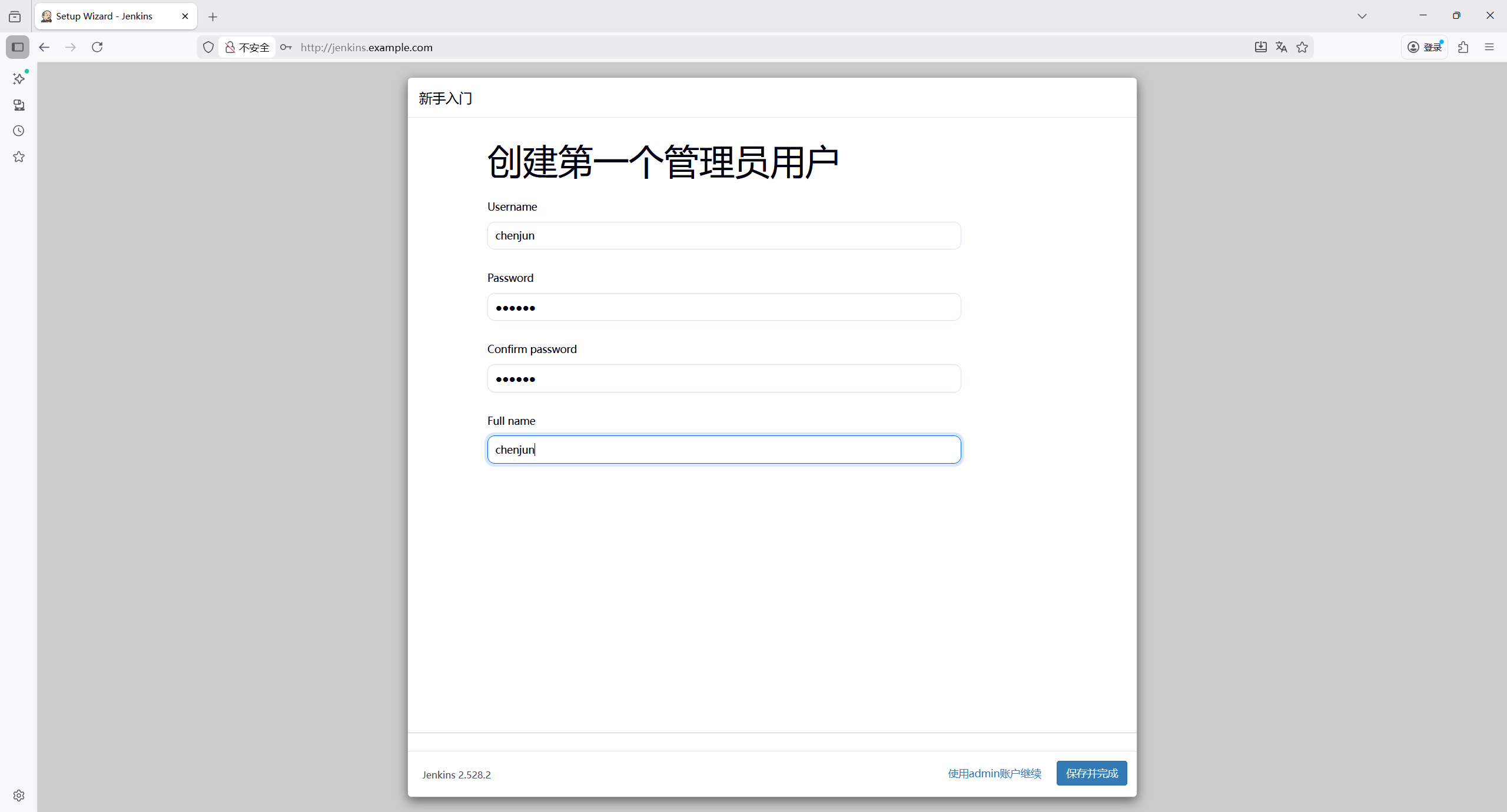

- 创建第一个管理员账户(用户名 / 密码自行设置)。

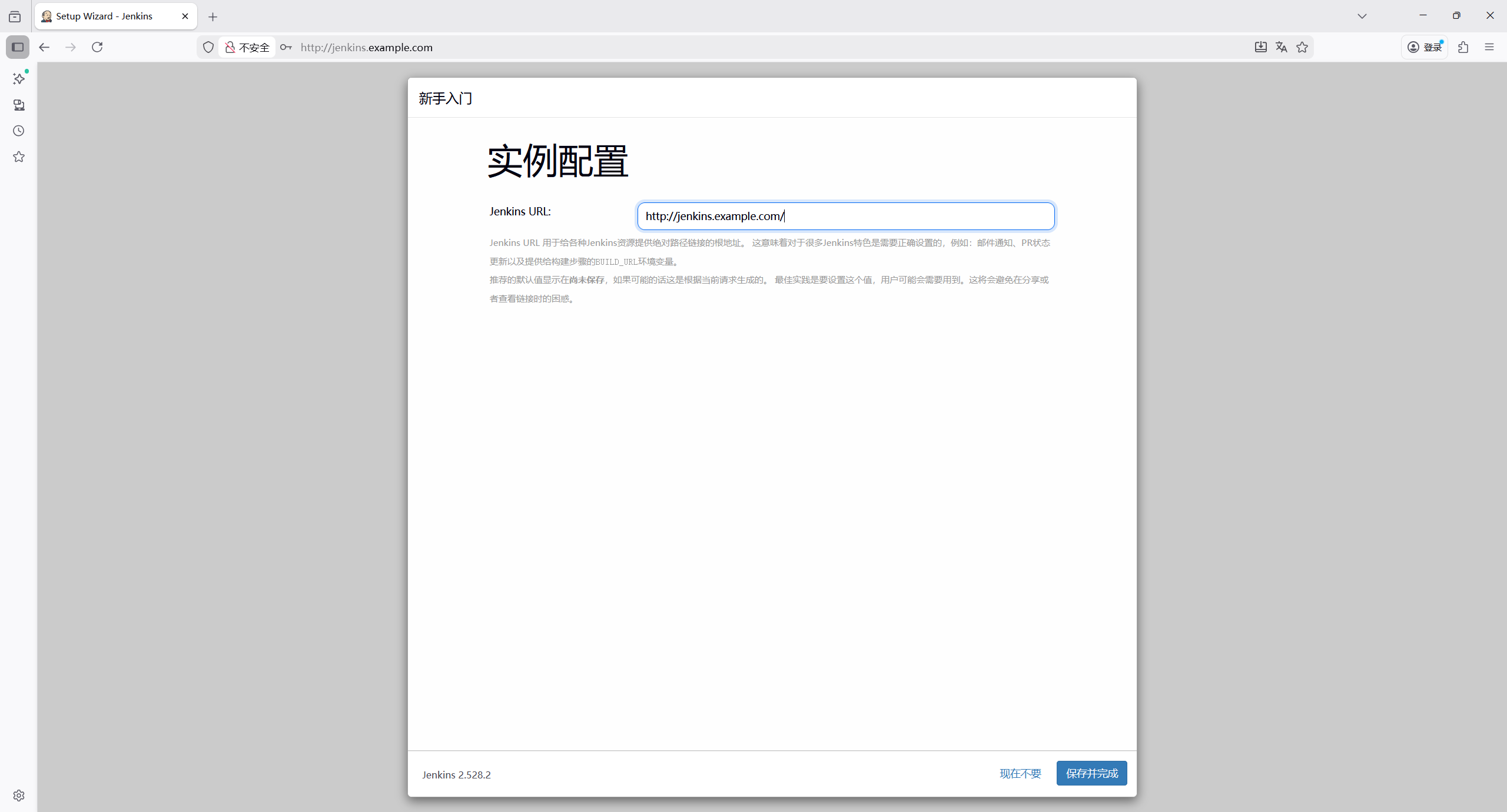

- 配置 Jenkins URL(建议使用 Ingress 的访问地址,jenkins.example.com`)。

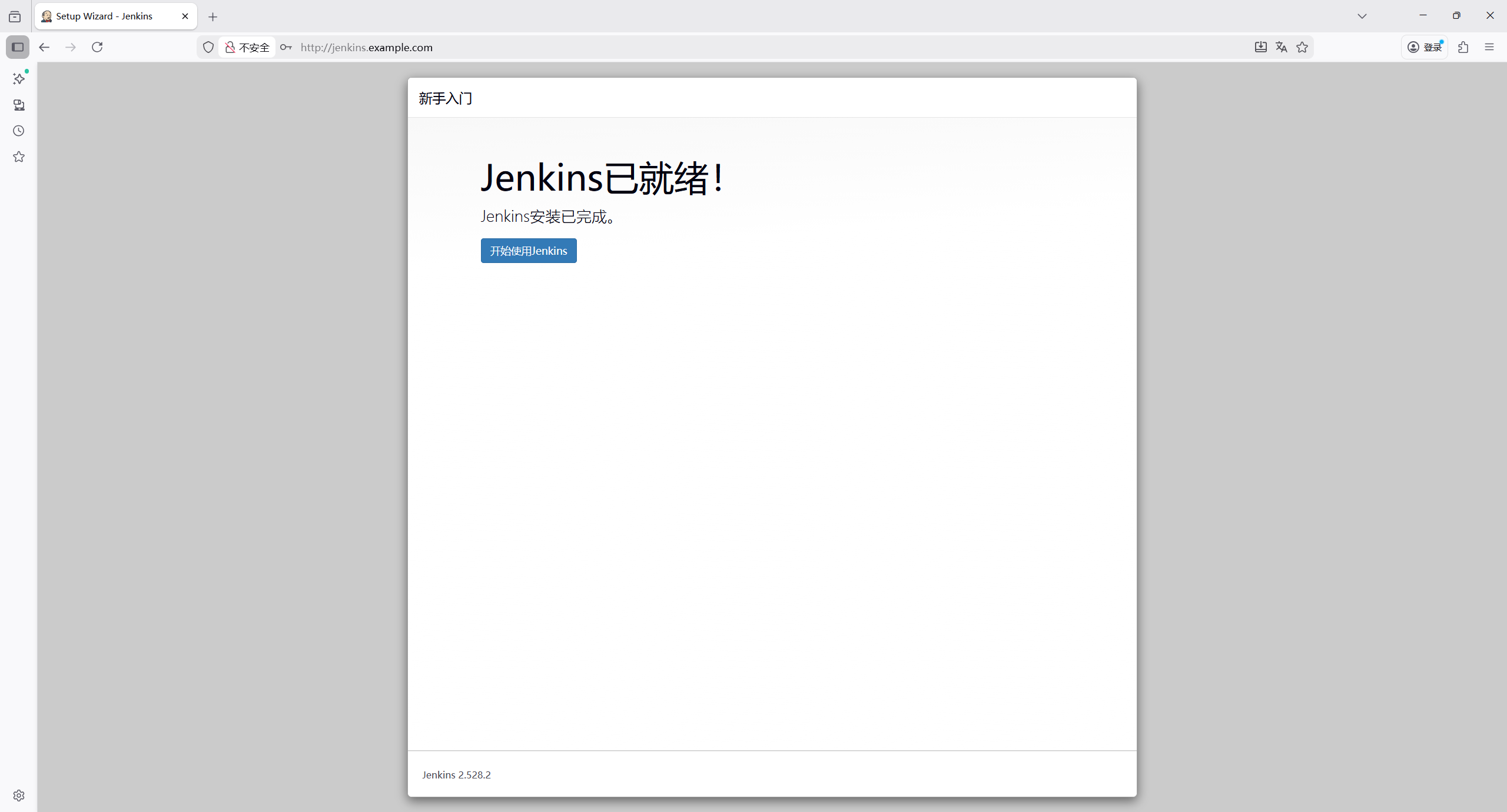

- 完成初始化,进入 Jenkins 主界面。

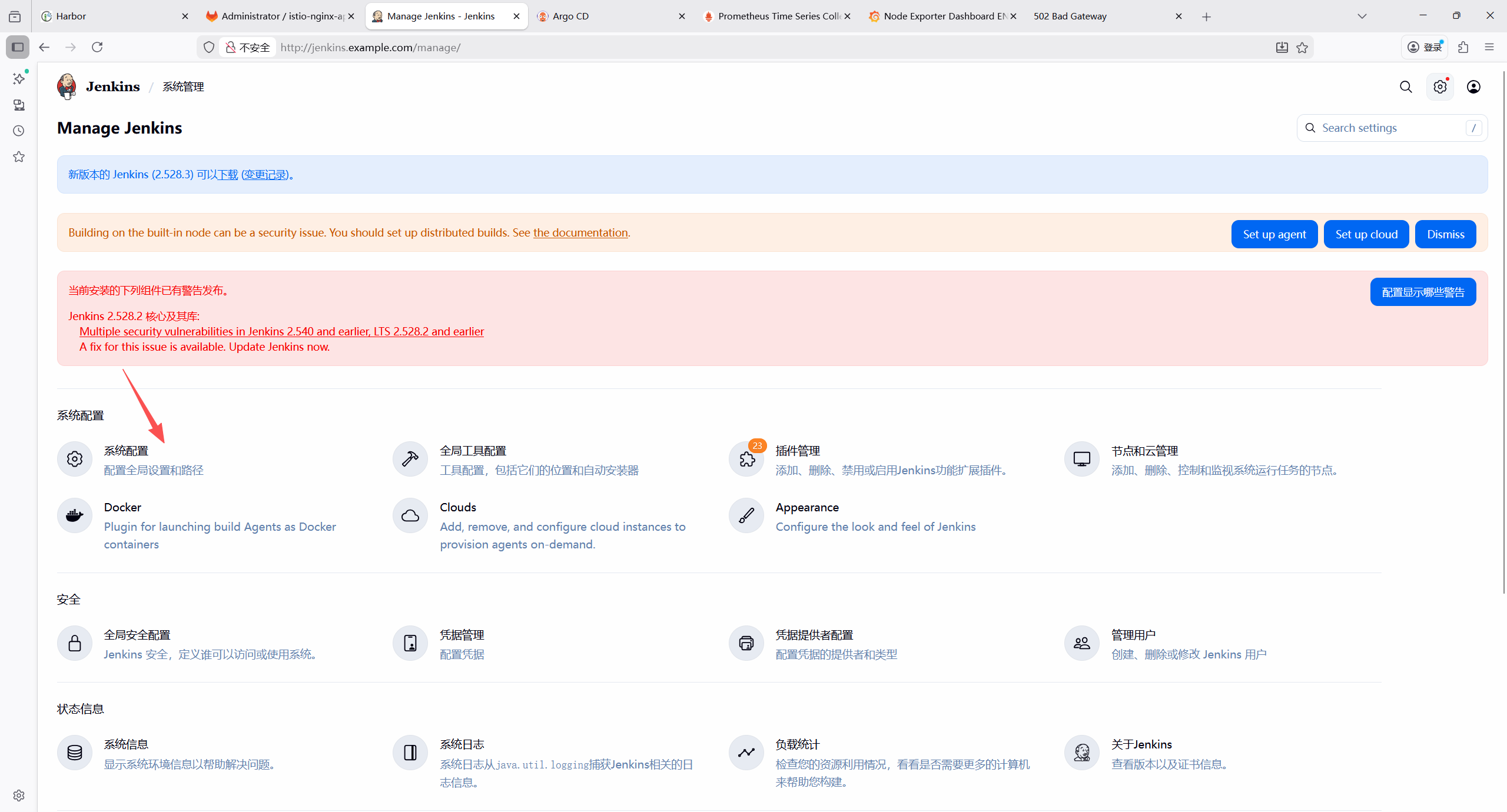

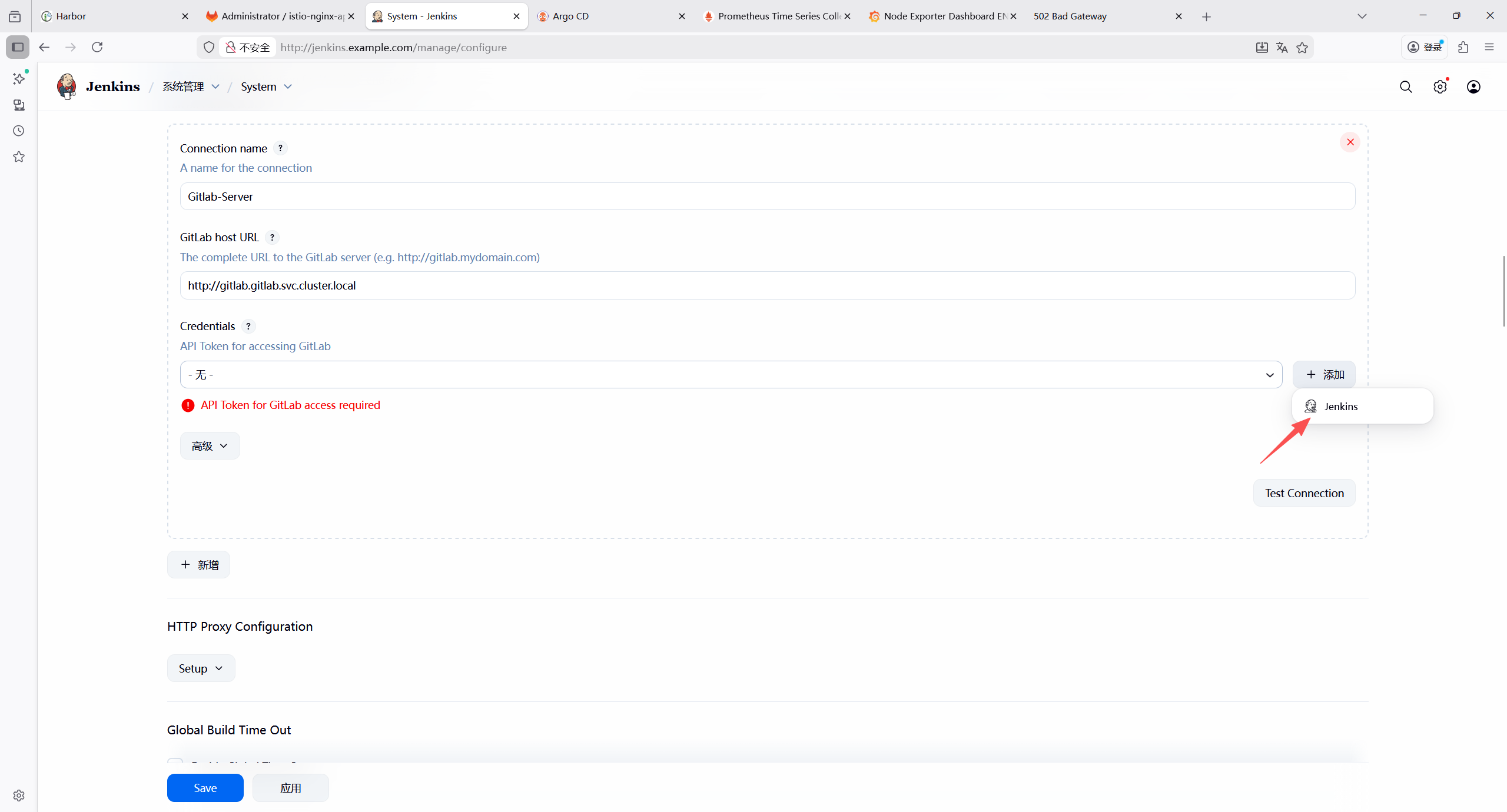

7.2.7 Install the required plugins

GitLab Plugin (triggering builds from GitLab webhooks)

Credentials Binding Plugin (credential management)

SSH Agent Plugin (pushing to GitLab over SSH)
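The same three plugins can also be listed in a form that the Jenkins plugin installer understands. The sketch below is only an illustration: it assumes the update-center IDs `gitlab-plugin`, `credentials-binding` and `ssh-agent` for the plugins named above, and `plugin_list` is a helper name made up for this example.

```shell
#!/usr/bin/env bash
# Print the plugin IDs, one per line, in the format expected by
# jenkins-plugin-cli --plugin-file (one "id" or "id:version" per line).
plugin_list() {
  printf '%s\n' \
    gitlab-plugin \
    credentials-binding \
    ssh-agent
}

# Show the list; redirect it to a file such as plugins.txt to reuse it.
plugin_list
```

One possible use is `plugin_list > plugins.txt` followed by `jenkins-plugin-cli --plugin-file plugins.txt` while building a custom Jenkins image; installing through the web UI, as in the screenshots, gives the same result.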

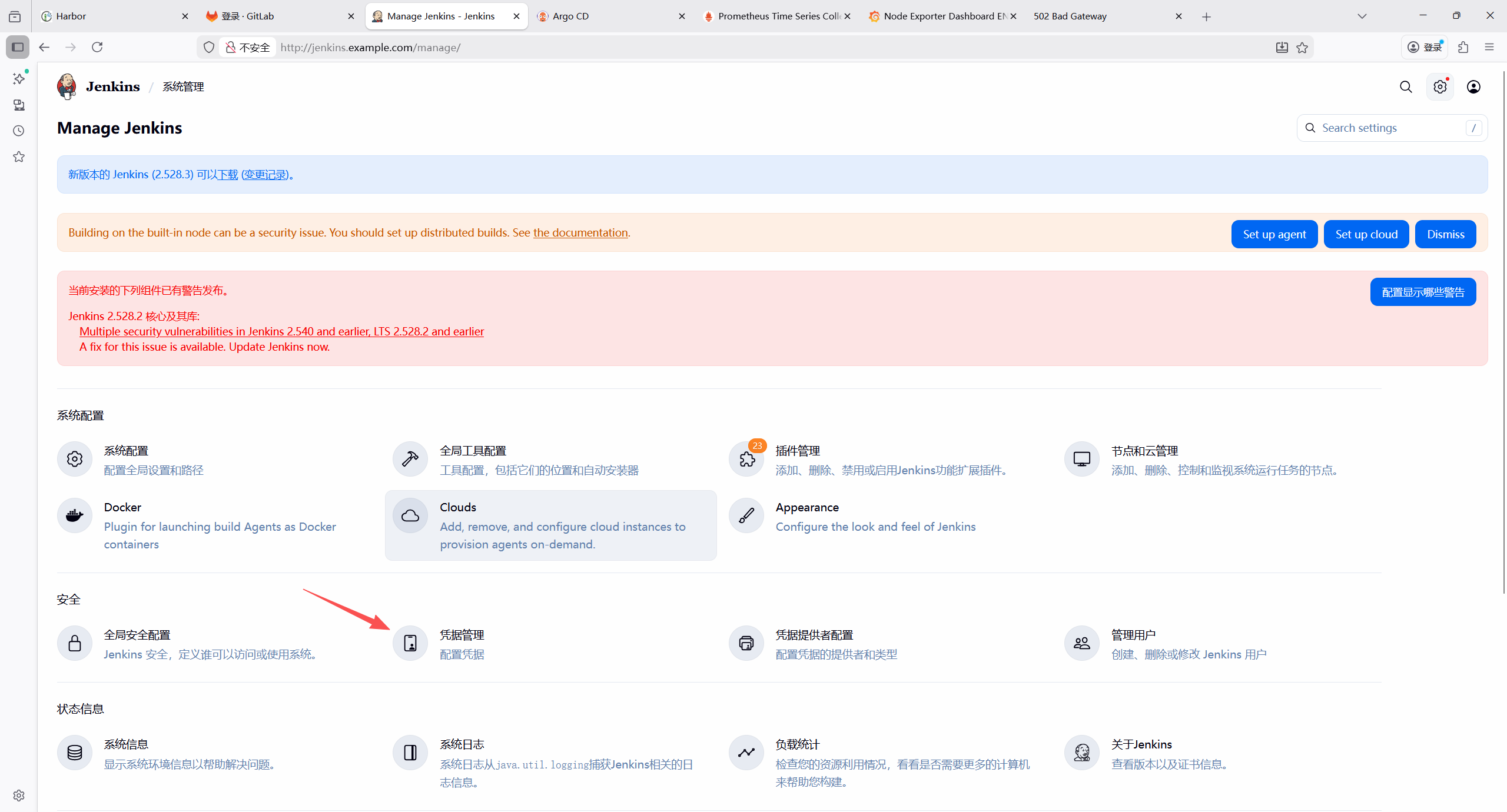

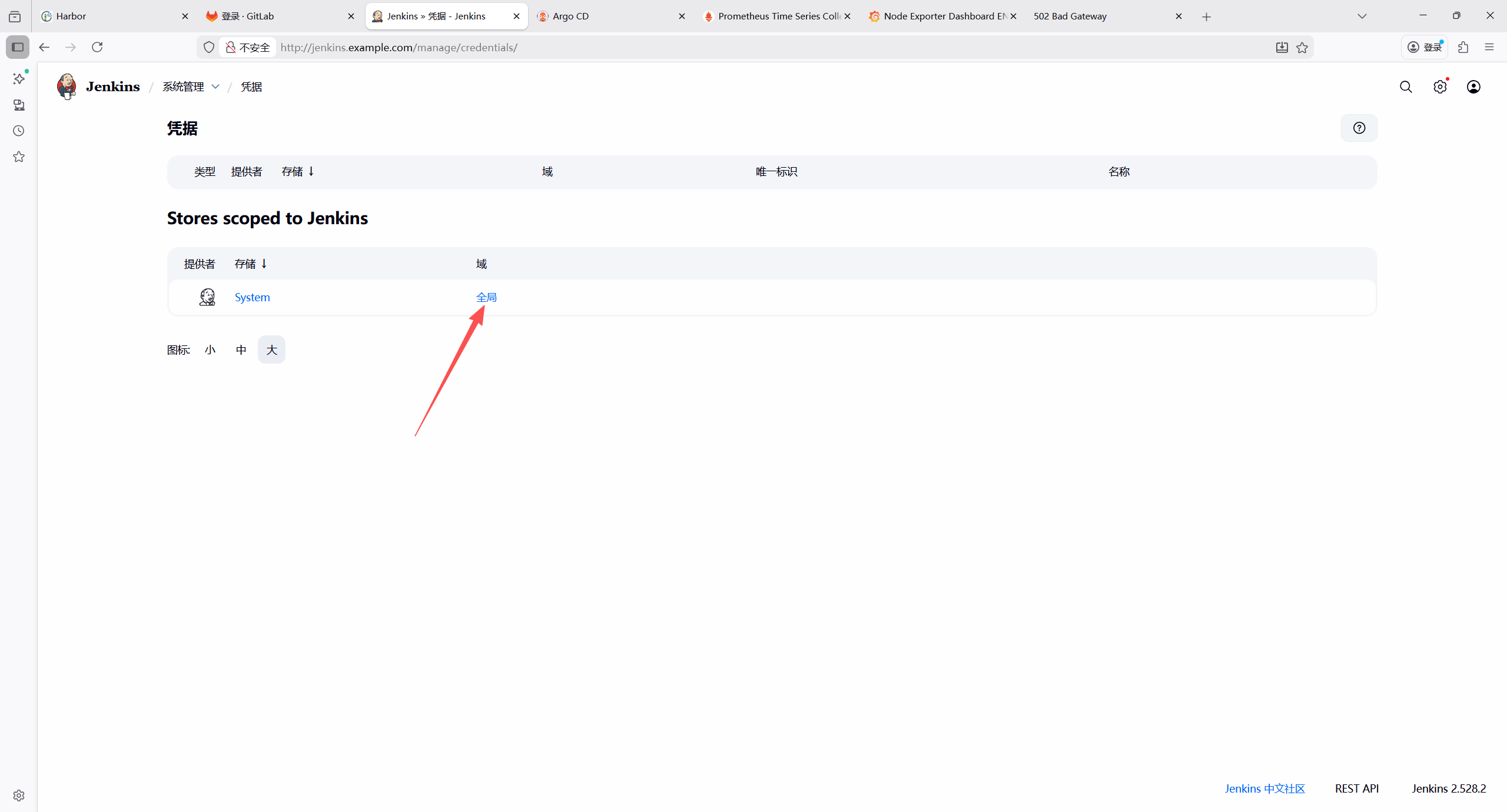

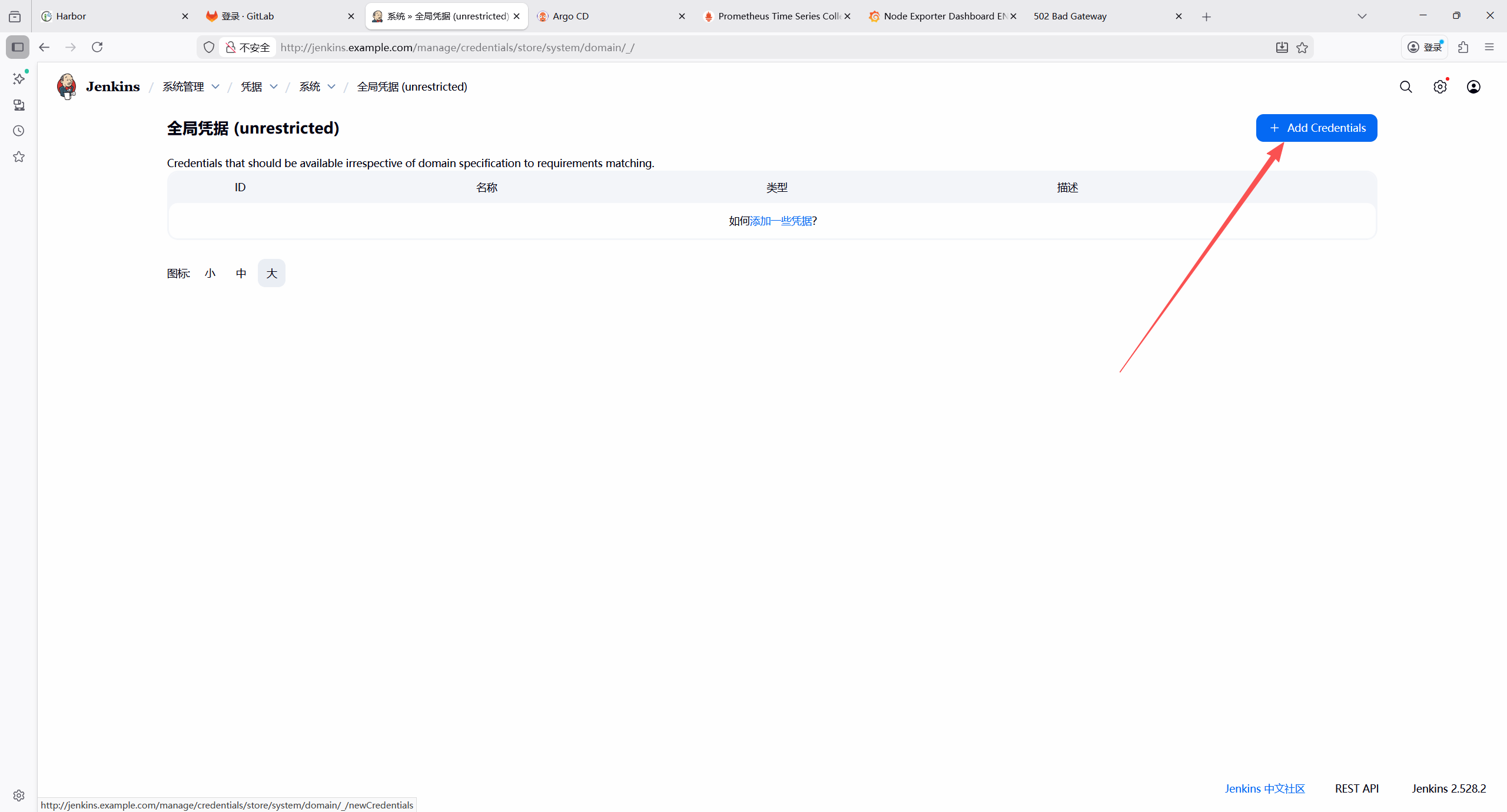

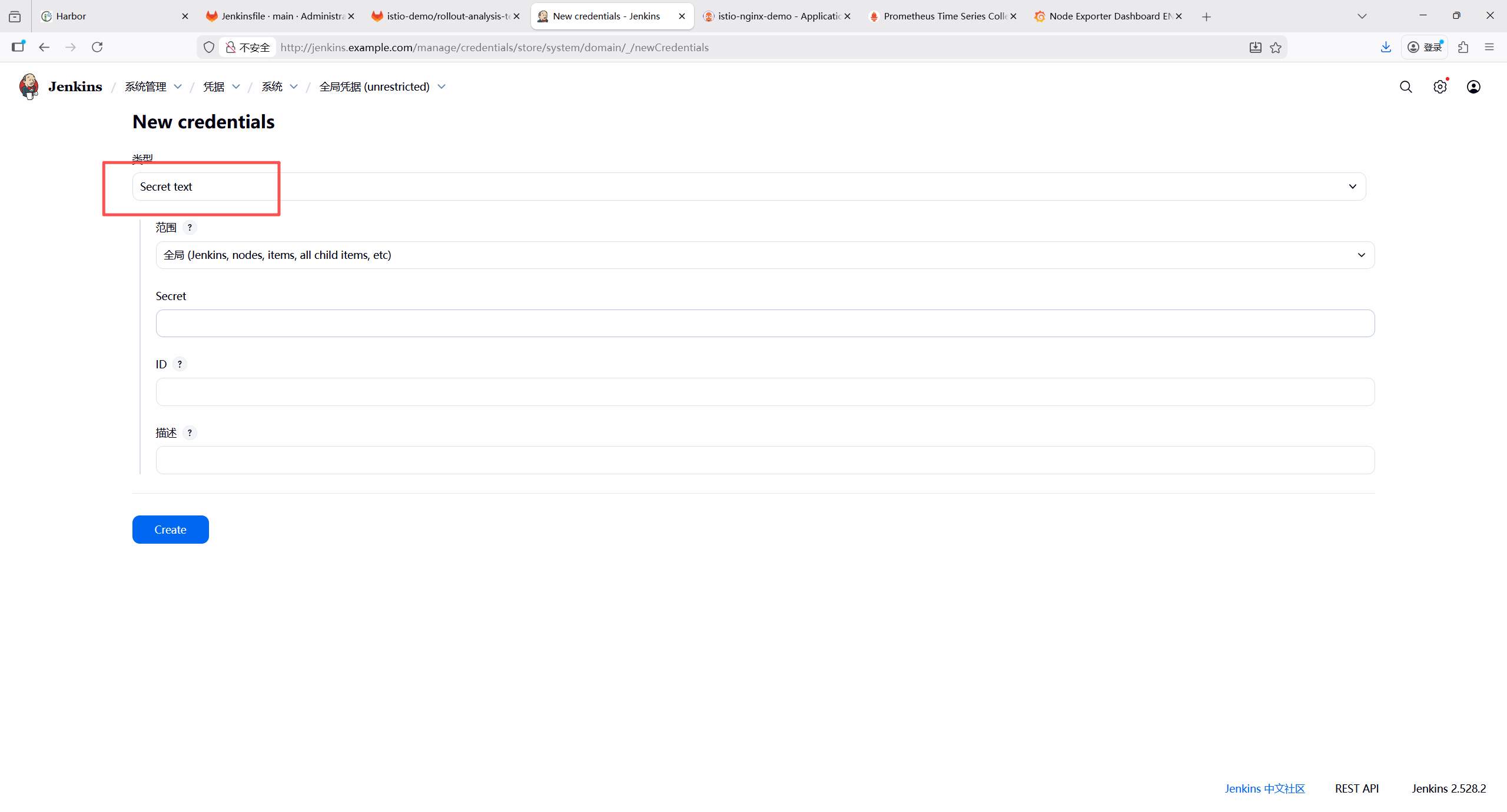

7.2.8 添加 Jenkins 凭证

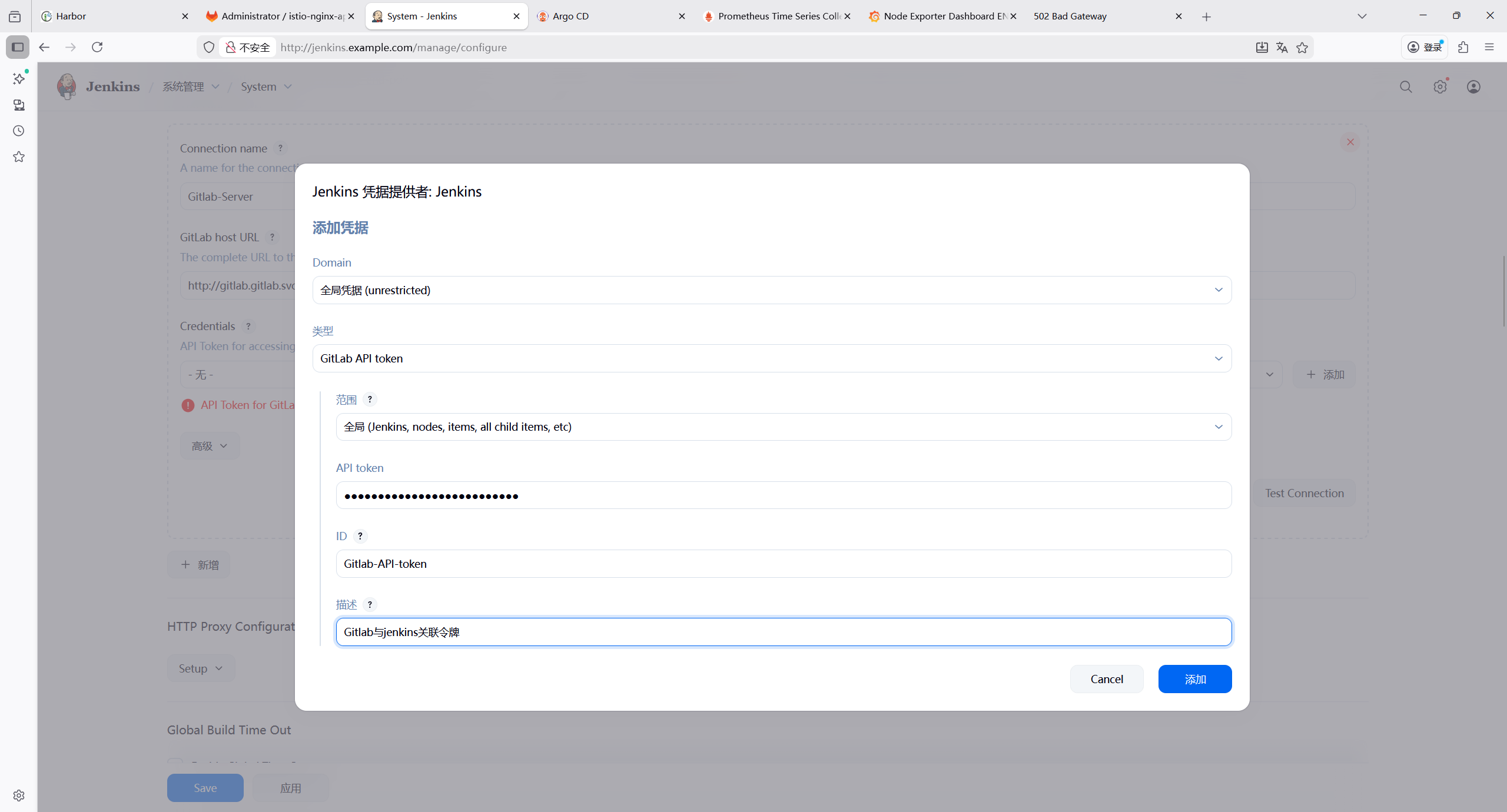

| 凭证类型 | 用途 | 配置项 |

|---|---|---|

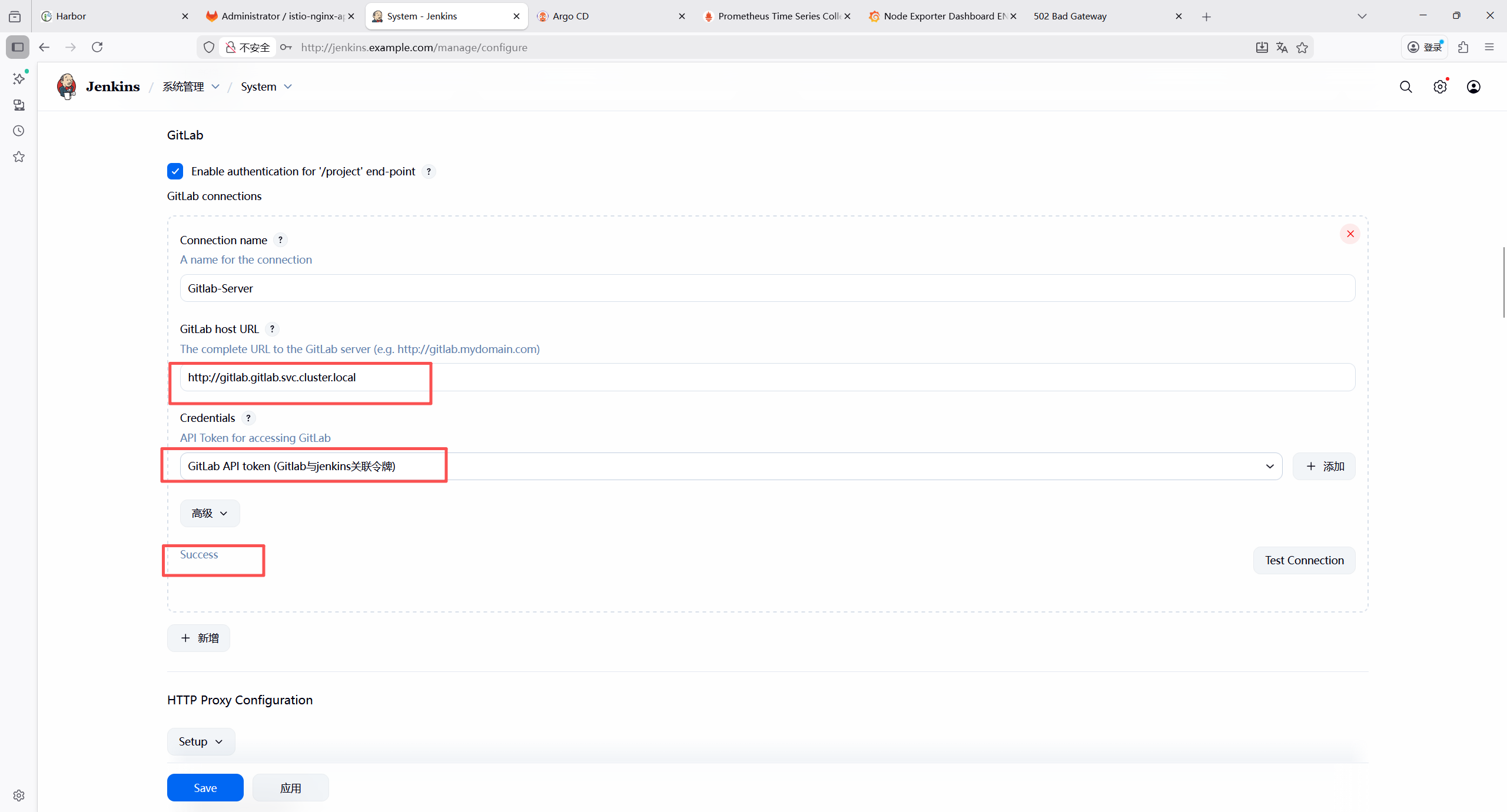

| GitLab API token | GitLab 与jenkins关联 | API token:GitLab 个人访问令牌(需仓库读写权限) |

| Secret text | GitLab 代码拉取与提交 | Secret:GitLab 个人访问令牌(需仓库读写权限) |

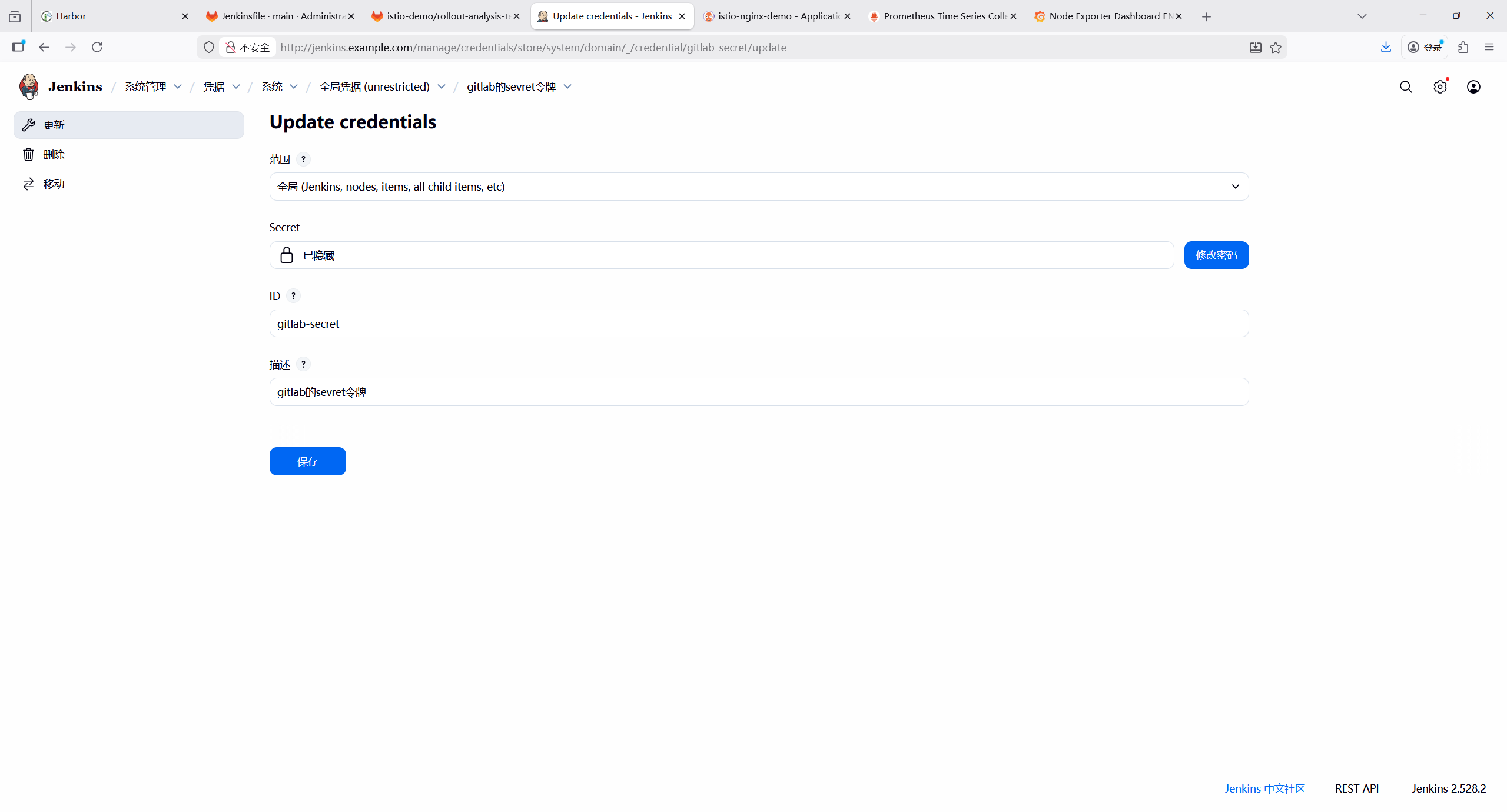

1.Gitlab凭证

Secret text类型凭证,用于后续拉取代码仓库

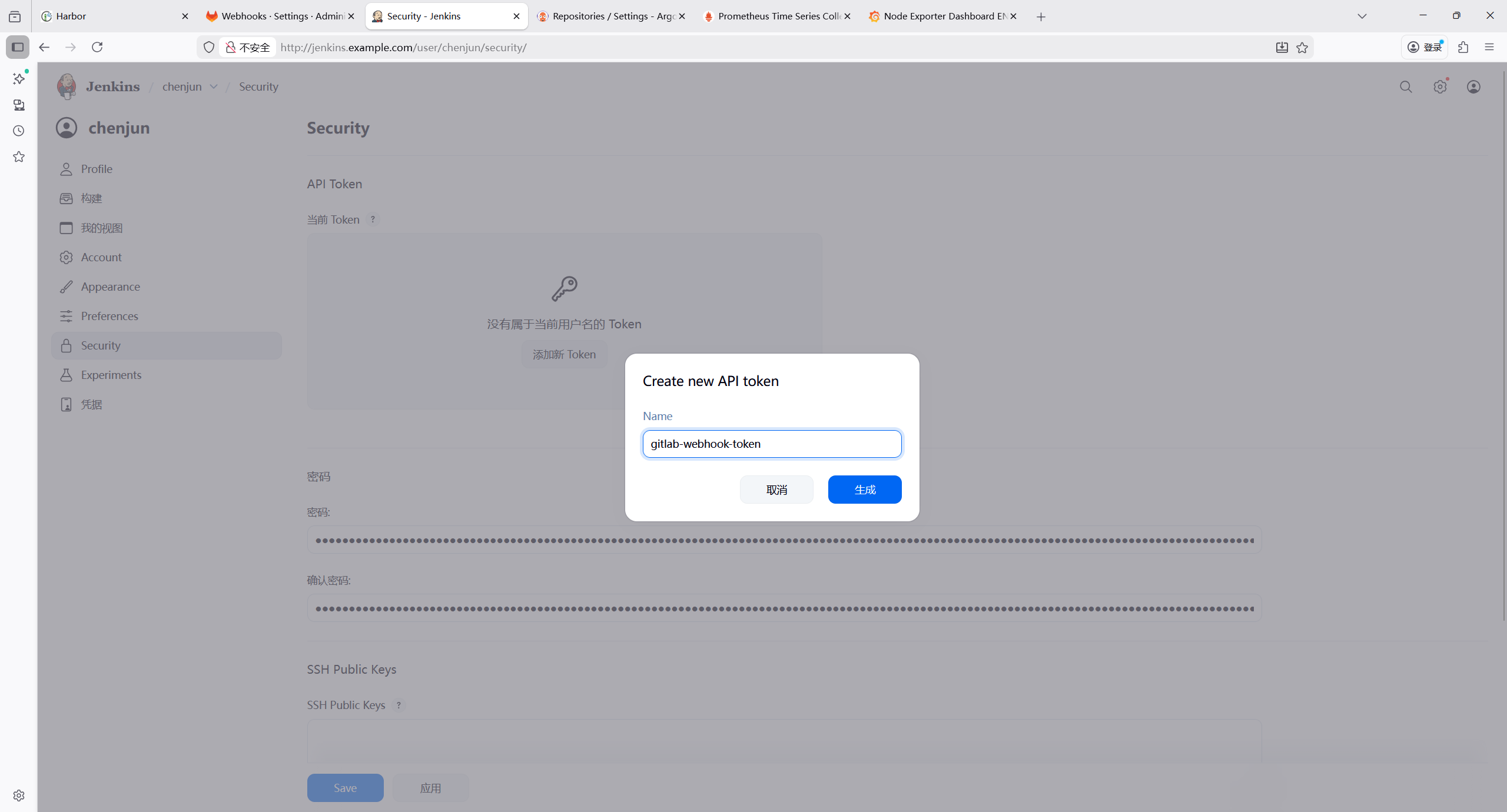

7.2.9 jenkins生成token

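7.3 Deploy ArgoCD

7.3.1 Download the ArgoCD manifest

bash

# Download the core ArgoCD manifest
root@k8s-master1:~/yaml/argocd# wget https://raw.githubusercontent.com/argoproj/argo-cd/v2.4.15/manifests/install.yaml

root@k8s-master1:~/yaml/argocd# mv install.yaml argocd-deploy.yaml

# The argocd namespace must exist before applying (skip this if it was already created)
root@k8s-master1:~/yaml/argocd# kubectl create namespace argocd

root@k8s-master1:~/yaml/argocd# kubectl apply -f argocd-deploy.yaml -n argocd

7.3.2 Create an Ingress rule to expose the web UI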

bash

root@k8s-master1:~/yaml/argocd# vim argocd-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: argocd-server-ingress
  namespace: argocd
  annotations:
    # Force HTTP to redirect to HTTPS (handled at the Ingress layer)
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    # Key setting: talk HTTPS to the backend (matches the ArgoCD server's default mode)
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
spec:
  ingressClassName: nginx
  tls:
    - hosts:
        - argocd.example.com
      secretName: argocd-tls-secret
  rules:
    - host: argocd.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: argocd-server
                port:
                  number: 443 # default HTTPS port of the ArgoCD server

root@k8s-master1:~/yaml/argocd# kubectl apply -f argocd-ingress.yaml

7.3.3 Verify the startup status

bash

root@k8s-master1:~/yaml/argocd# kubectl get pod -n argocd

NAME READY STATUS RESTARTS AGE

argocd-application-controller-0 1/1 Running 0 11m

argocd-applicationset-controller-7b4f85b695-mnhqf 1/1 Running 0 11m

argocd-dex-server-6b9cf7846f-88wp9 1/1 Running 0 11m

argocd-notifications-controller-84849bb64d-4pb2k 1/1 Running 0 11m

argocd-redis-599b855497-l4jgj 1/1 Running 0 11m

argocd-repo-server-5667fd476b-2rj4l 1/1 Running 0 11m

argocd-server-f4fb696b8-w4gff 1/1 Running 0 11m

root@k8s-master1:~/yaml/argocd# kubectl get svc -n argocd

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

argocd-applicationset-controller ClusterIP 10.101.181.22 <none> 7000/TCP,8080/TCP 11m

argocd-dex-server ClusterIP 10.97.211.53 <none> 5556/TCP,5557/TCP,5558/TCP 11m

argocd-metrics ClusterIP 10.97.90.112 <none> 8082/TCP 11m

argocd-notifications-controller-metrics ClusterIP 10.105.12.227 <none> 9001/TCP 11m

argocd-redis ClusterIP 10.110.228.29 <none> 6379/TCP 11m

argocd-repo-server ClusterIP 10.105.134.22 <none> 8081/TCP,8084/TCP 11m

argocd-server ClusterIP 10.105.126.72 <none> 80/TCP,443/TCP 11m

argocd-server-metrics ClusterIP 10.104.110.113 <none> 8083/TCP 11m

root@k8s-master1:~/yaml/argocd# kubectl get ingress -n argocd

NAME CLASS HOSTS ADDRESS PORTS AGE

argocd-server-ingress nginx argocd.example.com 192.168.121.200,192.168.121.201,192.168.121.202 80, 443 6m8s

7.3.4 Get the initial ArgoCD administrator password

bash

root@k8s-master1:~/yaml/argocd# kubectl -n argocd get secret argocd-initial-admin-secret -o jsonpath="{.data.password}" | base64 -d && echo

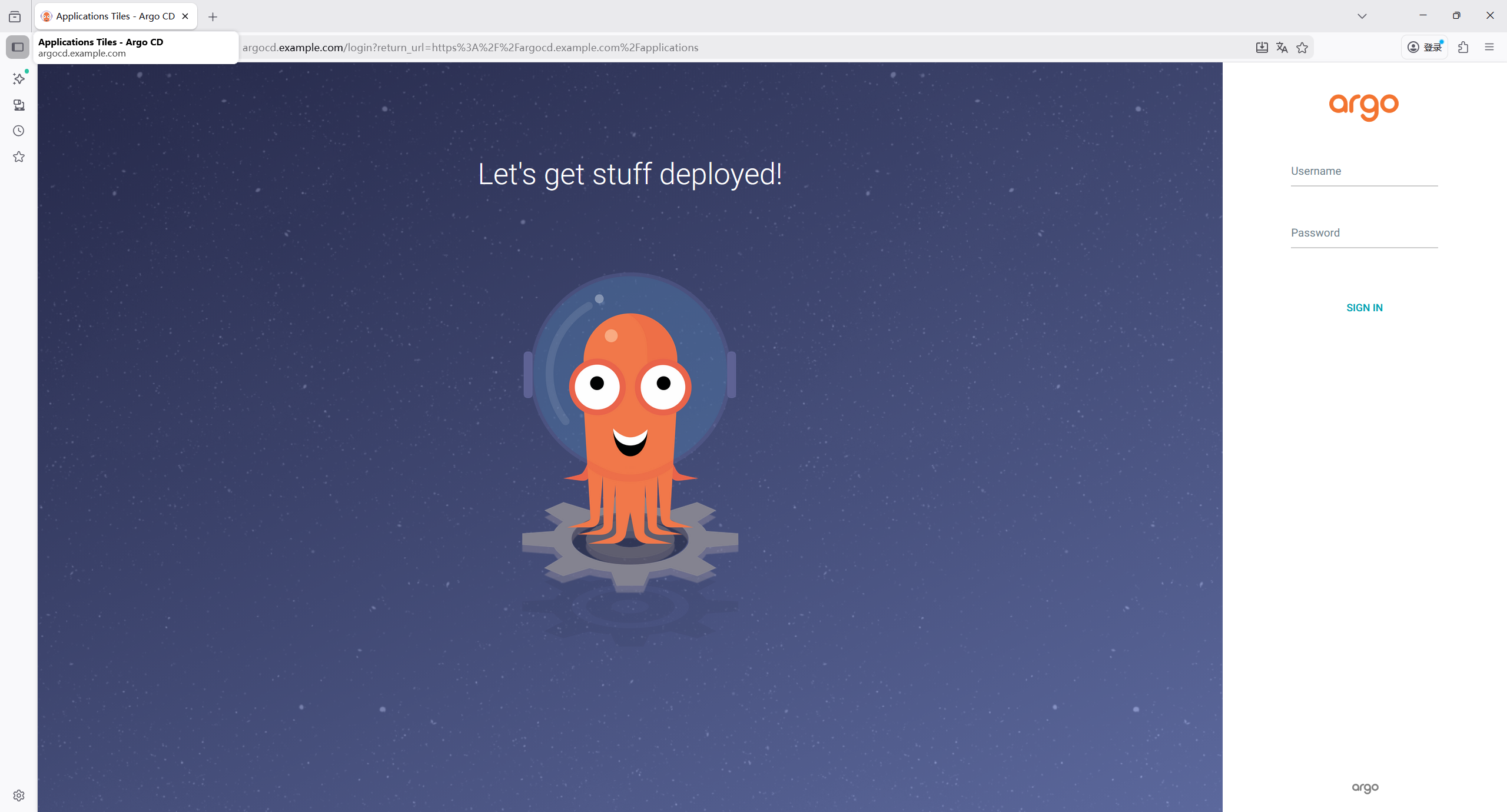

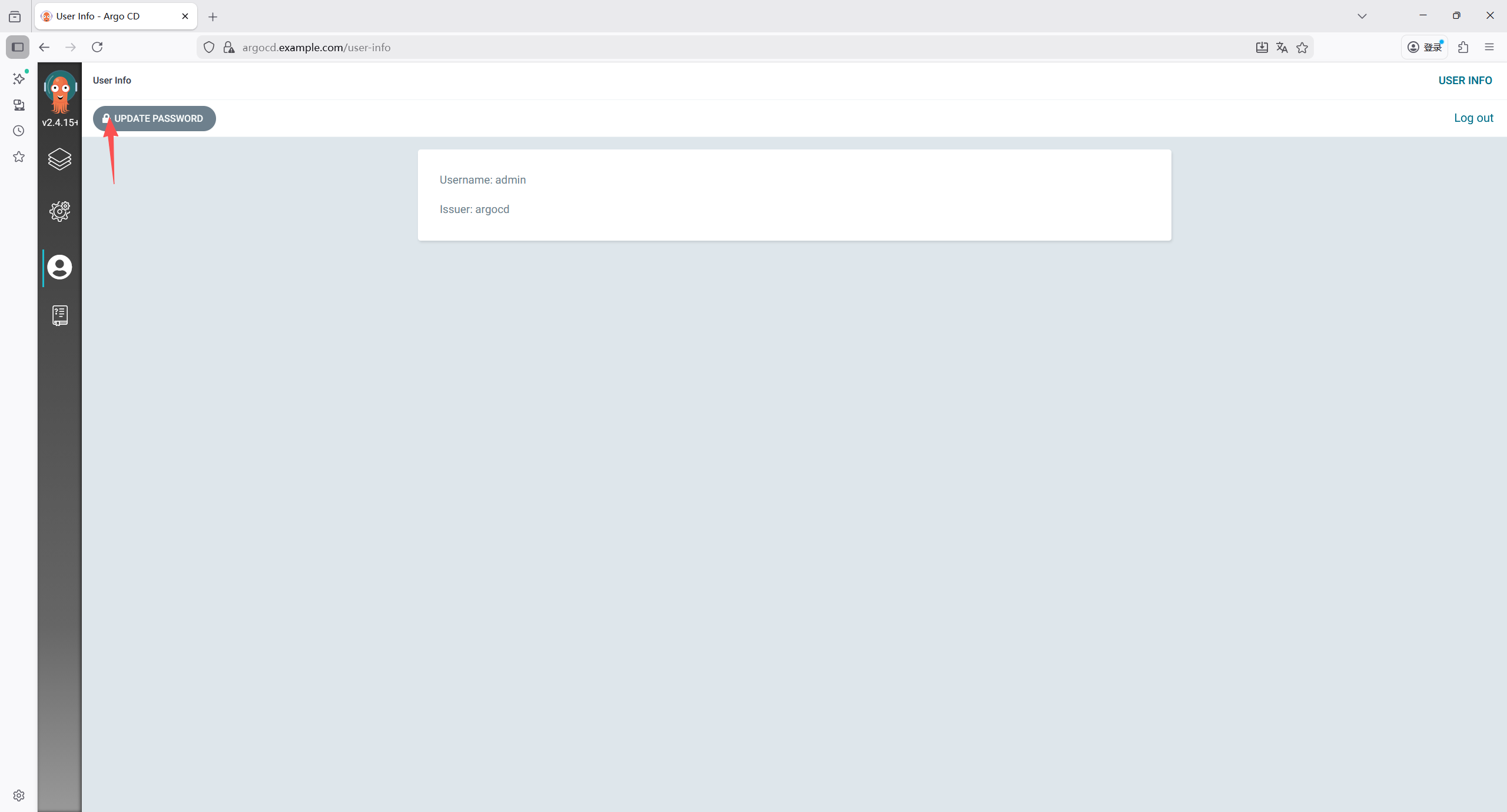

hMLMyqt2MfXmy1Hu7.3.5 登录 ArgoCD(Web UI)

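To reach ArgoCD from the host machine, add a name resolution entry to its hosts file:

192.168.121.202 argocd.example.com

The default ArgoCD administrator user name is admin.

Change the password after the first login.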
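The command in 7.3.4 pipes the `.data.password` field through `base64 -d` because Kubernetes stores Secret values base64-encoded. The round trip can be tried without a cluster; in this sketch the sample string `example-password` and the helper name `decode_secret_field` are made up for illustration.

```shell
#!/usr/bin/env bash
# Decode one base64-encoded Secret field, as returned by
# kubectl get secret ... -o jsonpath="{.data.<key>}".
decode_secret_field() {
  printf '%s' "$1" | base64 -d
}

# Encode a sample value the way the API server would store it, then decode it.
encoded=$(printf '%s' 'example-password' | base64)
decode_secret_field "$encoded"
echo
```

The trailing `echo` plays the same role as the `&& echo` in 7.3.4: the decoded value has no newline of its own.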

8 Deploy Prometheus + Grafana + AlertManager (kube-prometheus-stack)

8.1 Deploy the NFS dynamic storage provisioner (NFS Provisioner)

bash

root@k8s-master1:~/yaml# mkdir monitoring && cd monitoring

root@k8s-master1:~/yaml/monitoring# vim nfs-provisioner.yaml

---
# 1. Create the ServiceAccount (the identity the permissions are granted to)
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner-monitoring
  namespace: kube-system
---
# 2. Create the RBAC rules the provisioner needs
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-monitoring-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "delete", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner-monitoring
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner-monitoring
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-monitoring-runner
  apiGroup: rbac.authorization.k8s.io
---
# 3. Deploy the NFS provisioner Deployment
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner-monitoring
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner-monitoring
  template:
    metadata:
      labels:
        app: nfs-client-provisioner-monitoring
    spec:
      serviceAccountName: nfs-client-provisioner-monitoring
      containers:
        - name: nfs-client-provisioner-monitoring
          image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner-monitoring # provisioner name (referenced by the StorageClass below)
            - name: NFS_SERVER
              value: 192.168.121.70 # NFS server IP
            - name: NFS_PATH
              value: /data/nfs/monitoring # NFS export directory
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.121.70 # NFS server IP
            path: /data/nfs/monitoring # NFS export directory
---
# 4. Create the NFS StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage-monitoring # must match the storageClassName in the monitoring values.yaml
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner-monitoring # must match PROVISIONER_NAME above
parameters:
  archiveOnDelete: "false" # remove the NFS subdirectory when the PVC is deleted (no archived copy is left behind)
reclaimPolicy: Delete # reclaim policy (Delete/Retain)
allowVolumeExpansion: true # allow PVC expansion

8.2 Configure the Helm values file

bash

# Add the Prometheus community chart repository
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update

root@k8s-master1:~/yaml/monitoring# vim values.yaml
# values.yaml
global:
  # External access address, if the cluster uses a custom domain
  externalUrl: "http://prometheus.example.com"

# Prometheus (persistent storage via PVC + Ingress)
prometheus:
  ingress:
    ingressClassName: nginx
    enabled: true
    annotations:
      nginx.ingress.kubernetes.io/rewrite-target: /
    hosts:
      - prometheus.example.com # domain
    paths: ["/"]
  prometheusSpec:
    storageSpec:
      volumeClaimTemplate:
        spec:
          storageClassName: nfs-storage-monitoring # use the NFS StorageClass
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 20Gi # adjust as needed

# Grafana (persistence + Ingress)
grafana:
  persistence:
    enabled: true
    storageClassName: nfs-storage-monitoring
    size: 10Gi
  adminPassword: "123456"
  ingress:
    ingressClassName: nginx
    enabled: true
    annotations:
      nginx.ingress.kubernetes.io/rewrite-target: /
    hosts:
      - grafana.example.com # domain
    paths: ["/"]

# AlertManager (persistence + Ingress)
alertmanager:
  alertmanagerSpec:
    storage:
      volumeClaimTemplate:
        spec:
          storageClassName: nfs-storage-monitoring
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 10Gi
  ingress:
    ingressClassName: nginx
    enabled: true
    annotations:
      nginx.ingress.kubernetes.io/rewrite-target: /
    hosts:
      - alertmanager.example.com # domain
    paths: ["/"]

# Install
root@k8s-master1:~/yaml/monitoring# helm install prometheus prometheus-community/kube-prometheus-stack -f values.yaml --namespace monitoring --version 31.0.0

# Upgrade later when values.yaml changes
root@k8s-master1:~/yaml/monitoring# helm upgrade prometheus prometheus-community/kube-prometheus-stack -f values.yaml --namespace monitoring --version 31.0.0

8.3 Check the service status

bash

root@k8s-master1:~/yaml/monitoring# kubectl get svc -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 29m

prometheus-grafana ClusterIP 10.102.91.223 <none> 80/TCP 29m

prometheus-kube-prometheus-alertmanager ClusterIP 10.103.65.25 <none> 9093/TCP 29m

prometheus-kube-prometheus-operator ClusterIP 10.103.90.158 <none> 443/TCP 29m

prometheus-kube-prometheus-prometheus ClusterIP 10.105.216.248 <none> 9090/TCP 29m

prometheus-kube-state-metrics ClusterIP 10.107.217.252 <none> 8080/TCP 29m

prometheus-operated ClusterIP None <none> 9090/TCP 29m

prometheus-prometheus-node-exporter ClusterIP 10.110.245.180 <none> 9100/TCP 29m

root@k8s-master1:~/yaml/monitoring# kubectl get pod -n monitoring

NAME READY STATUS RESTARTS AGE

alertmanager-prometheus-kube-prometheus-alertmanager-0 2/2 Running 0 29m

prometheus-grafana-5f48d6c84d-96tjc 3/3 Running 0 29m

prometheus-kube-prometheus-operator-6cd54566dc-8lvws 1/1 Running 0 29m

prometheus-kube-state-metrics-85b8d66959-fmqgq 1/1 Running 0 29m

prometheus-prometheus-kube-prometheus-prometheus-0 2/2 Running 0 29m

prometheus-prometheus-node-exporter-455ph 1/1 Running 0 29m

prometheus-prometheus-node-exporter-8plhr 1/1 Running 0 29m

prometheus-prometheus-node-exporter-jksx5 1/1 Running 0 29m

prometheus-prometheus-node-exporter-nrjsh 1/1 Running 0 29m

prometheus-prometheus-node-exporter-qvvsg 1/1 Running 0 29m

prometheus-prometheus-node-exporter-wtcmd 1/1 Running 0 29m

root@k8s-master1:~/yaml/monitoring# kubectl get pvc -n monitoring

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

alertmanager-prometheus-kube-prometheus-alertmanager-db-alertmanager-prometheus-kube-prometheus-alertmanager-0 Bound pvc-5436f4bc-218b-4a19-9075-6d2bafd020b2 10Gi RWO nfs-storage-monitoring 29m

prometheus-grafana Bound pvc-393ba029-a7b3-4712-a49a-b613029cd15e 10Gi RWO nfs-storage-monitoring 29m

prometheus-prometheus-kube-prometheus-prometheus-db-prometheus-prometheus-kube-prometheus-prometheus-0 Bound pvc-e95ab19b-f86b-4de0-a056-14231224f04d 20Gi RWO nfs-storage-monitoring 29m

root@k8s-master1:~/yaml/monitoring# kubectl get ingress -n monitoring

NAME CLASS HOSTS ADDRESS PORTS AGE

prometheus-grafana nginx grafana.example.com 192.168.121.200,192.168.121.201,192.168.121.202 80 29m

prometheus-kube-prometheus-alertmanager nginx alertmanager.example.com 192.168.121.200,192.168.121.201,192.168.121.202 80 29m

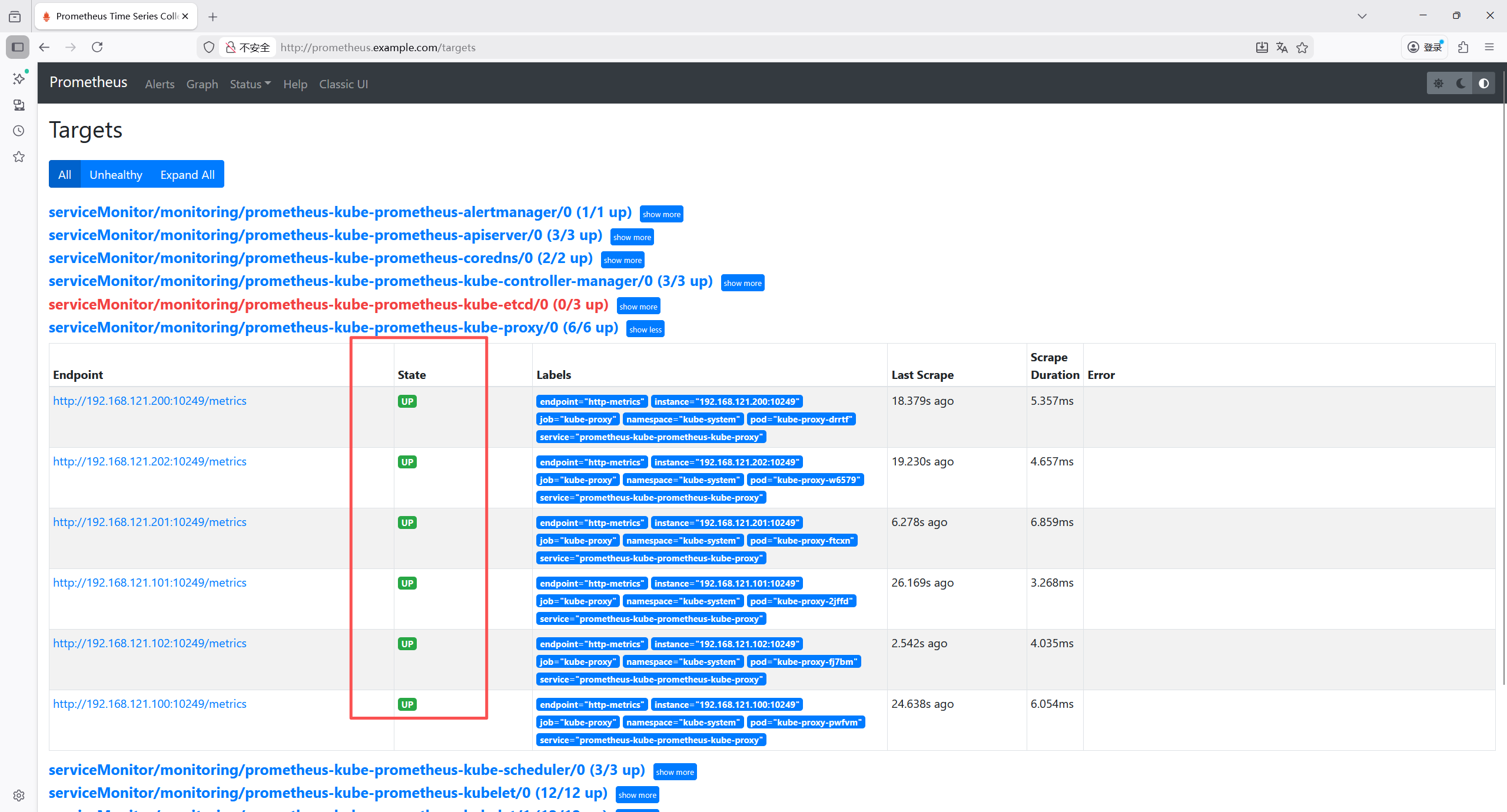

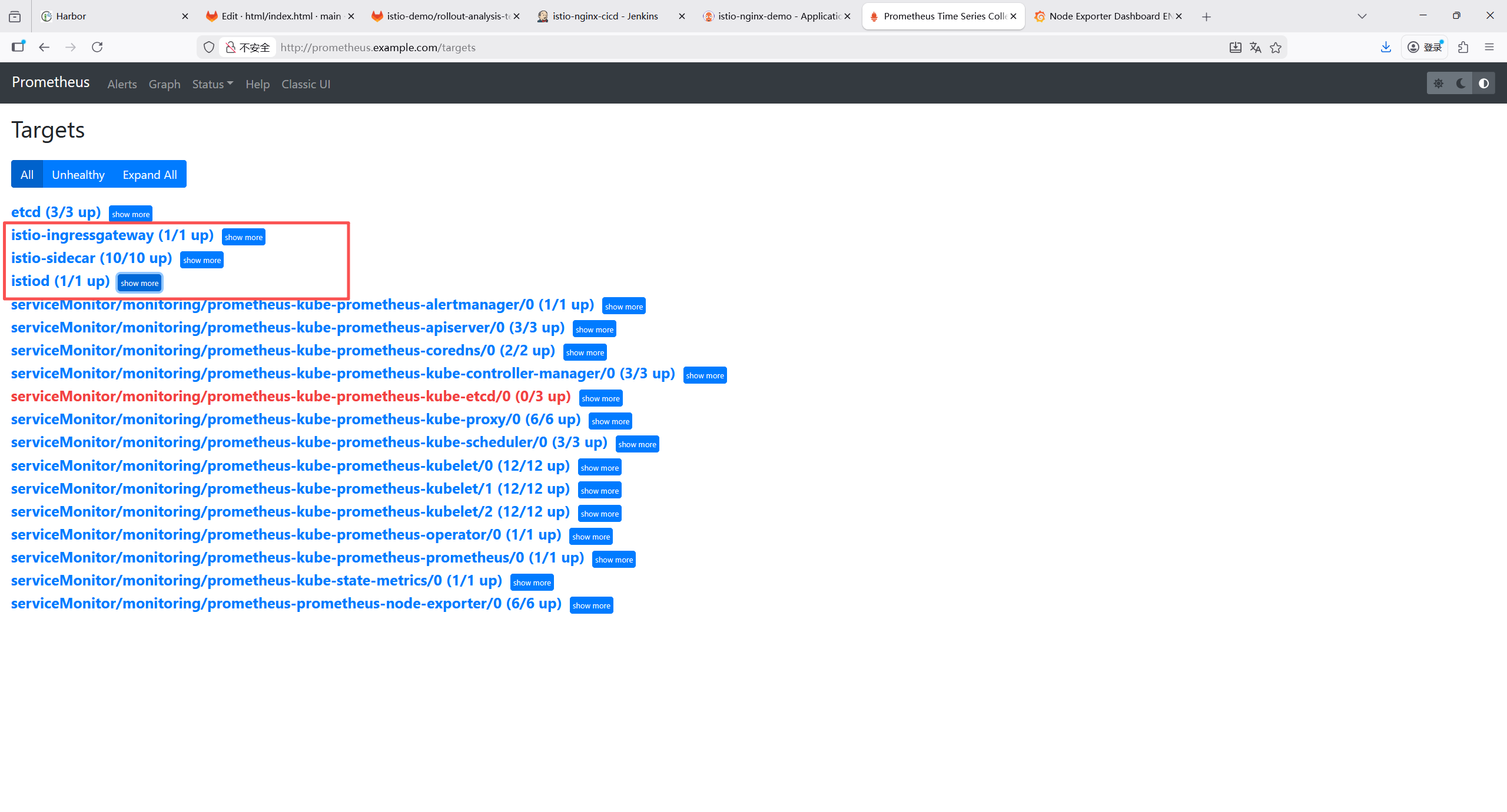

prometheus-kube-prometheus-prometheus nginx prometheus.example.com 192.168.121.200,192.168.121.201,192.168.121.202 80 29m8.4 验证监控目标

Add the following entries to the hosts file of the host machine:

192.168.121.200 prometheus.example.com

192.168.121.200 grafana.example.com

192.168.121.200 alertmanager.example.com
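On a Linux or macOS host, the three entries above could be added with a small idempotent helper instead of editing the file by hand. This is a sketch under stated assumptions: `add_host_entry` and the `HOSTS_FILE` variable are made up for illustration, and by default the demo writes to a scratch file rather than to /etc/hosts.

```shell
#!/usr/bin/env bash
# Append "IP name" to a hosts-format file unless the name is already mapped.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # Skip when some line already contains the name as a whole field.
  if grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Demo against a scratch file; set HOSTS_FILE=/etc/hosts (as root) to apply for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"
for name in prometheus grafana alertmanager; do
  add_host_entry "$HOSTS_FILE" 192.168.121.200 "${name}.example.com"
done
cat "$HOSTS_FILE"
```

Running the loop a second time leaves the file unchanged, which makes the helper safe to call from a setup script.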

浏览器输入prometheus.example.com访问web页面

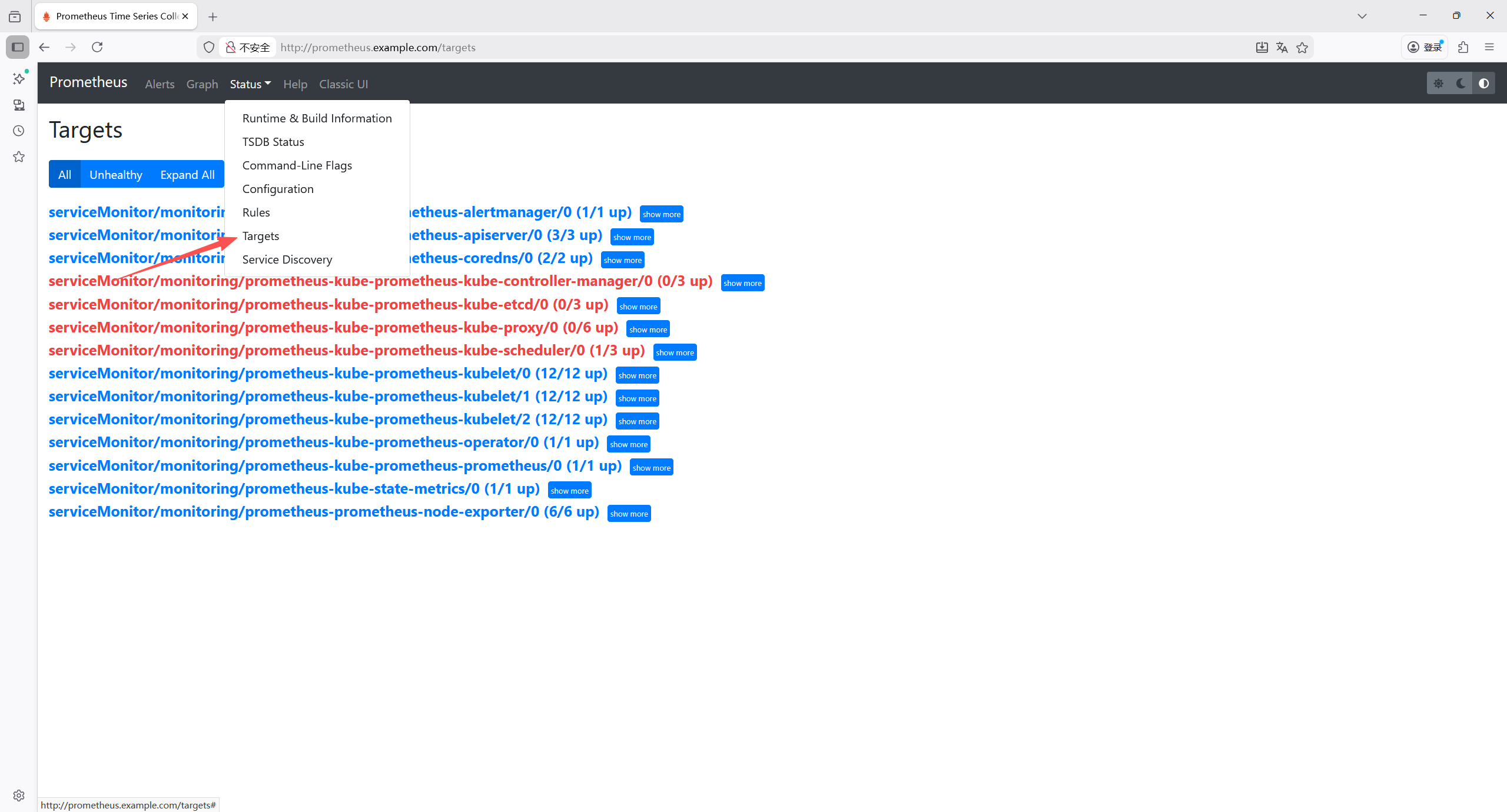

进入status ->targets,确认目前状态是否up

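Scraping of the core K8s components (kube-controller-manager, kube-etcd, kube-proxy, kube-scheduler) fails across the board with connection refused. The root cause is that these components by default listen only on the loopback address (127.0.0.1) or do not expose their metrics endpoint, so Prometheus cannot reach them through the nodes' cluster-internal IPs.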
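One way to confirm this diagnosis on a master node is to read the `--bind-address` flag from the static Pod manifests. The sketch below assumes the kubeadm default manifest directory /etc/kubernetes/manifests; `bind_address_of` is a helper name made up for this example.

```shell
#!/usr/bin/env bash
# Print the --bind-address value found in a static Pod manifest,
# or "unset" when the flag is absent or the file cannot be read.
bind_address_of() {
  local manifest="$1" value
  value=$(sed -n 's/^[[:space:]]*-[[:space:]]*--bind-address=\([^[:space:]#]*\).*/\1/p' \
            "$manifest" 2>/dev/null | head -n 1)
  printf '%s\n' "${value:-unset}"
}

# Report the current setting for the two components that use this flag.
for m in /etc/kubernetes/manifests/kube-scheduler.yaml \
         /etc/kubernetes/manifests/kube-controller-manager.yaml; do
  if [ -r "$m" ]; then
    printf '%s: %s\n' "$m" "$(bind_address_of "$m")"
  fi
done
```

A value of 127.0.0.1 matches the connection refused errors described above; on a machine without these manifests the loop simply prints nothing.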

8.5 k8s集群核心组件开放metrics 访问

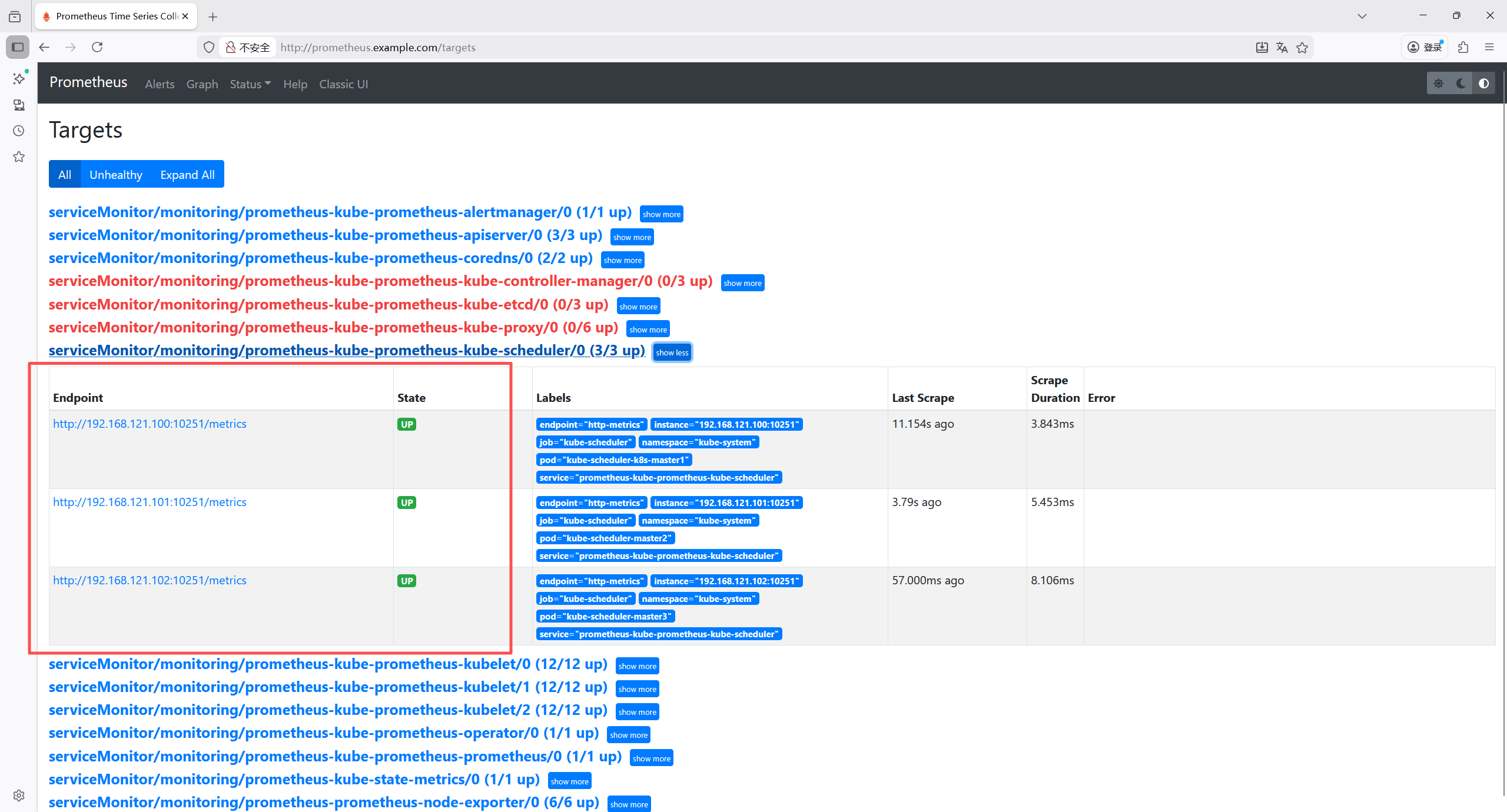

8.5.1 修复 kube-scheduler(所有master节点操作)

bash

root@k8s-master1:~/yaml/monitoring# vim /etc/kubernetes/manifests/kube-scheduler.yaml

spec:

containers:

- command:

- kube-scheduler

- --authentication-kubeconfig=/etc/kubernetes/scheduler.conf

- --authorization-kubeconfig=/etc/kubernetes/scheduler.conf

- --bind-address=0.0.0.0 # 把127.0.0.1修改成0.0.0.0

- --kubeconfig=/etc/kubernetes/scheduler.conf

- --leader-elect=true

#- --port=0 # 注释此行

# 由于是kubeadm部署的,kubelet 会在 10 秒内检测到文件变更并自动重启 Pod,无需手动执行 systemctl 命令。再去查看状态,显示up

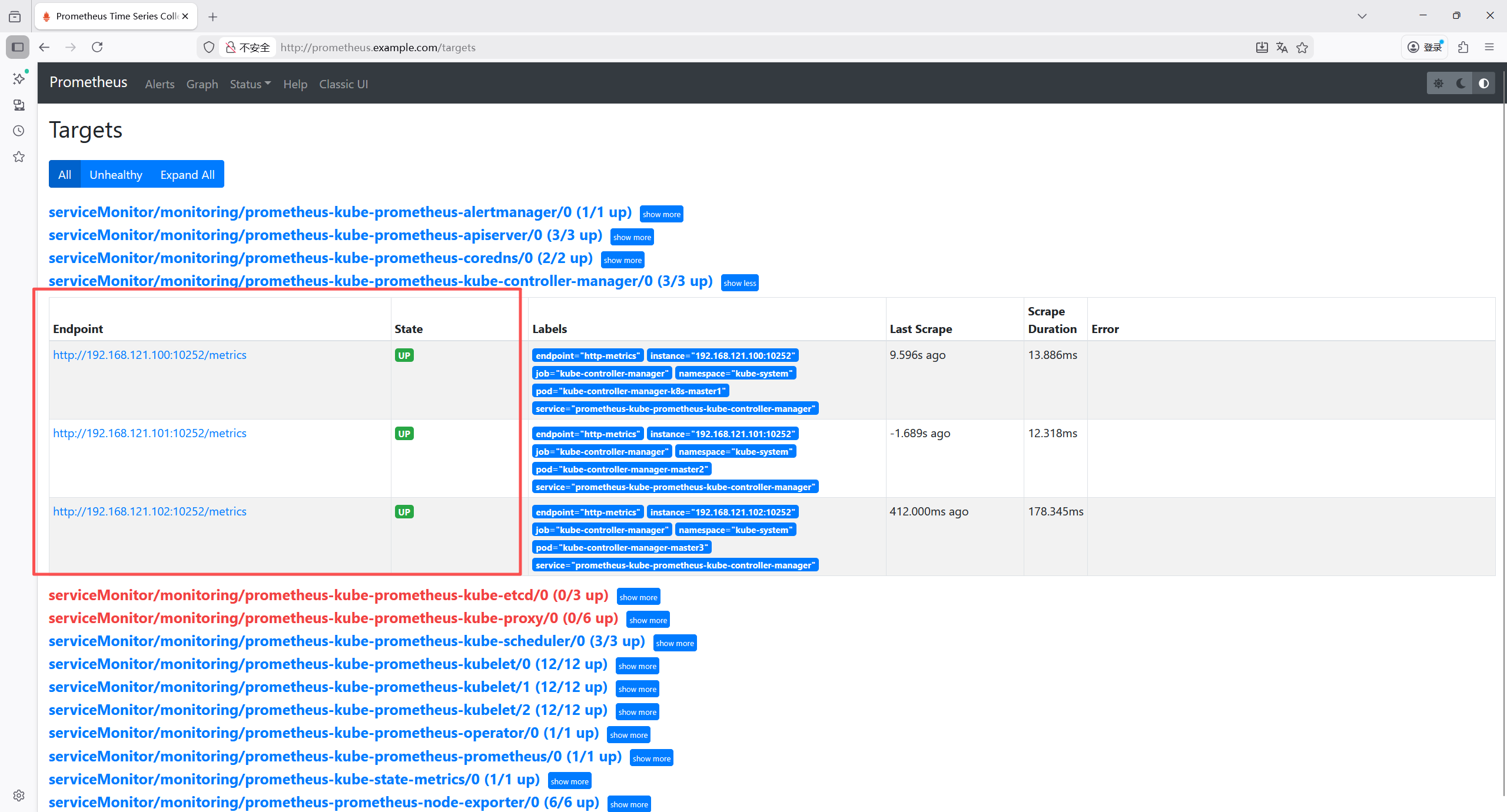

8.5.2 Fix kube-controller-manager (on every master node)

bash

root@k8s-master1:~/yaml/monitoring# vim /etc/kubernetes/manifests/kube-controller-manager.yaml
# Change --bind-address in the same way
spec:
  containers:
  - command:
    - kube-controller-manager
    - --allocate-node-cidrs=true
    - --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf
    - --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf
    - --bind-address=0.0.0.0
    #- --port=0 # comment this line out
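The two manual edits above (scheduler and controller-manager) could also be scripted. This is a minimal sketch, assuming the flags appear exactly as `--bind-address=127.0.0.1` and `- --port=0`; `open_metrics_bind` is a hypothetical helper. The backup copy goes to a separate directory because the kubelet scans every non-hidden file in the manifest directory as a Pod manifest. The demo at the end runs on a scratch copy, not on the real manifests.

```shell
#!/usr/bin/env bash
# Set --bind-address to 0.0.0.0 and comment out --port=0 in one manifest.
# $1 = manifest path, $2 = existing directory that receives a .orig backup.
open_metrics_bind() {
  local manifest="$1" backup_dir="$2" tmp
  cp "$manifest" "$backup_dir/$(basename "$manifest").orig" || return 1
  tmp=$(mktemp) || return 1
  if sed -e 's/--bind-address=127\.0\.0\.1/--bind-address=0.0.0.0/' \
         -e 's/^\([[:space:]]*\)\(-[[:space:]]*--port=0\)/\1#\2/' \
         "$manifest" > "$tmp"; then
    cat "$tmp" > "$manifest"
  fi
  rm -f "$tmp"
}

# Demo on a scratch copy.
demo=$(mktemp -d)
mkdir "$demo/backup"
printf '    - --bind-address=127.0.0.1\n    - --port=0\n' > "$demo/kube-scheduler.yaml"
open_metrics_bind "$demo/kube-scheduler.yaml" "$demo/backup"
cat "$demo/kube-scheduler.yaml"
```

On a real master the call would look like `open_metrics_bind /etc/kubernetes/manifests/kube-scheduler.yaml /root/manifest-backup`, where the backup directory is a hypothetical path that has to exist beforehand.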

8.5.3 Fix kube-proxy

bash

# Edit the ConfigMap
root@k8s-master1:~/yaml/monitoring# kubectl edit configmap kube-proxy -n kube-system
kind: KubeProxyConfiguration
metricsBindAddress: "0.0.0.0:10249" # the default is ""

# Delete all kube-proxy Pods; the DaemonSet recreates them and they read the new configuration
root@k8s-master1:~/yaml/monitoring# kubectl delete pods -n kube-system -l k8s-app=kube-proxy

8.5.4 Fix kube-etcd (on every master node)

bash

# The example below comes from the master at 192.168.121.101; every master keeps its own IP addresses
root@master1:~# vim /etc/kubernetes/manifests/etcd.yaml
spec:
  containers:
  - command:
    - etcd
    - --advertise-client-urls=https://192.168.121.101:2379
    - --cert-file=/etc/kubernetes/pki/etcd/server.crt
    - --client-cert-auth=true
    - --data-dir=/var/lib/etcd
    - --initial-advertise-peer-urls=https://192.168.121.101:2380
    - --initial-cluster=k8s-master1=https://192.168.121.100:2380,master2=https://192.168.121.101:2380
    - --initial-cluster-state=existing
    - --key-file=/etc/kubernetes/pki/etcd/server.key
    - --listen-client-urls=https://127.0.0.1:2379,https://192.168.121.101:2379
    - --listen-metrics-urls=http://0.0.0.0:2381 # changed to 0.0.0.0
    - --metrics=basic # add this line

The chart's built-in etcd target scrapes port 2379 by default, so a custom scrape job for port 2381 has to be added:

bash

root@k8s-master1:~/yaml/monitoring# vim values.yaml
prometheus: # existing top-level key
  prometheusSpec:
    # --------------- custom scrape job
    additionalScrapeConfigs:
      - job_name: 'etcd'
        static_configs:
          - targets:
              - 192.168.121.100:2381
              - 192.168.121.101:2381
              - 192.168.121.102:2381
            labels:
              cluster: 'k8s-cluster'
              job: 'etcd'
        scheme: http
        metrics_path: /metrics
        scrape_interval: 30s
        scrape_timeout: 10s
        relabel_configs:
          - source_labels: [__address__]
            target_label: instance
          - source_labels: [__address__]
            regex: '([^:]+):.*'
            replacement: '${1}'
            target_label: node
          - target_label: job
            replacement: etcd
    # ------------------------------

# Upgrade the release
root@k8s-master1:~/yaml/monitoring# helm upgrade prometheus prometheus-community/kube-prometheus-stack -f values.yaml --namespace monitoring --version 31.0.0

The chart's built-in etcd target still shows as down; it can be ignored, since the custom etcd job now collects the metrics.
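Before waiting for Prometheus to pick up the new job, the endpoints can be checked by hand from any machine that reaches the masters. A minimal sketch, assuming `curl` is installed and the three master IPs from the environment plan; the helper names `etcd_metrics_url` and `probe_etcd_metrics` are made up for illustration, and the demo loop only prints the URLs.

```shell
#!/usr/bin/env bash
# Master IPs from the environment plan.
ETCD_HOSTS="192.168.121.100 192.168.121.101 192.168.121.102"

# Build the plain-HTTP metrics URL exposed through --listen-metrics-urls.
etcd_metrics_url() {
  printf 'http://%s:2381/metrics\n' "$1"
}

# Return 0 when the endpoint answers and serves at least one etcd_ metric.
probe_etcd_metrics() {
  curl -fsS --max-time 3 "$(etcd_metrics_url "$1")" 2>/dev/null | grep -q '^etcd_'
}

# List the URLs that the custom scrape job will hit.
for host in $ETCD_HOSTS; do
  etcd_metrics_url "$host"
done
```

On a machine inside the 192.168.121.0/24 network, `probe_etcd_metrics 192.168.121.100 && echo up` performs the actual request for one master.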

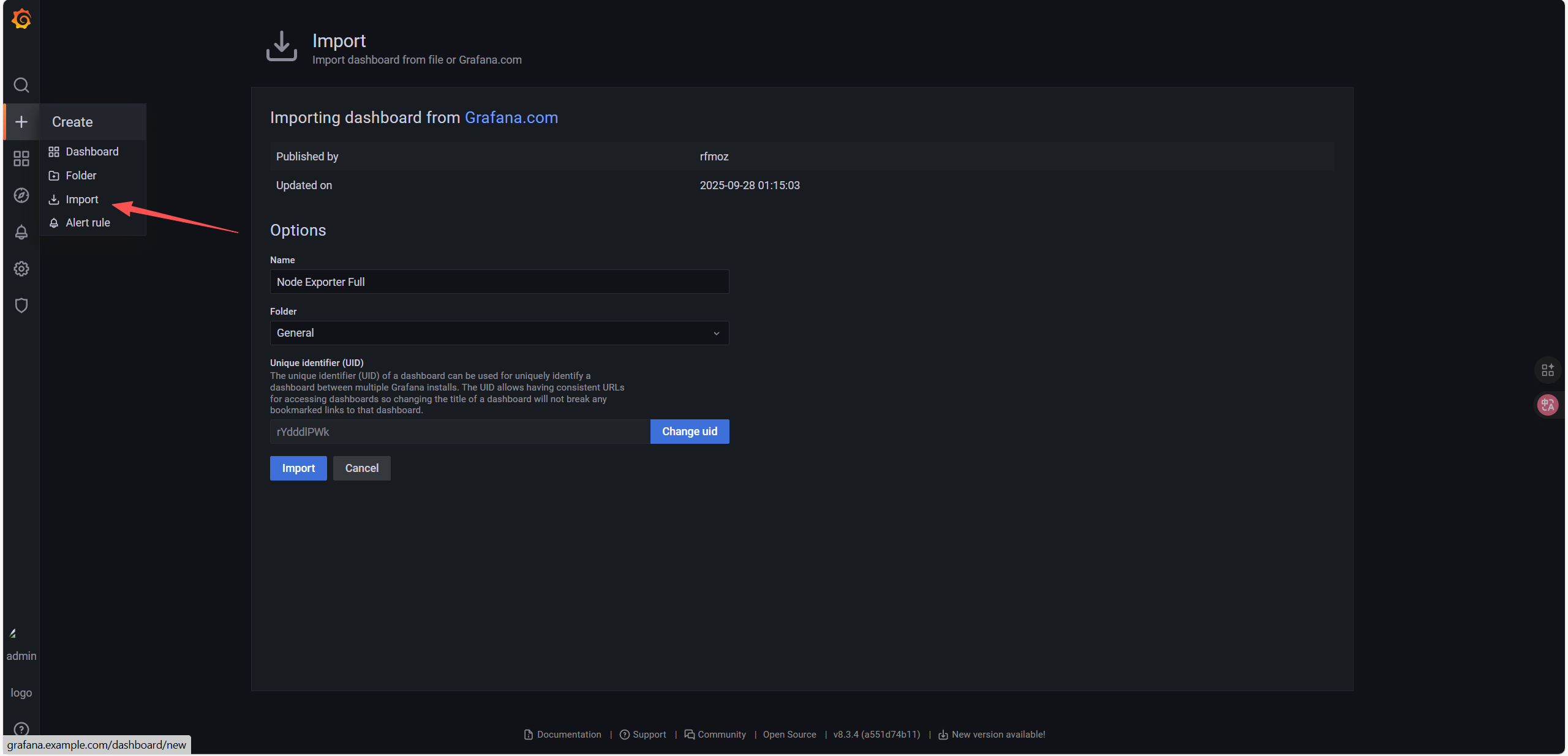

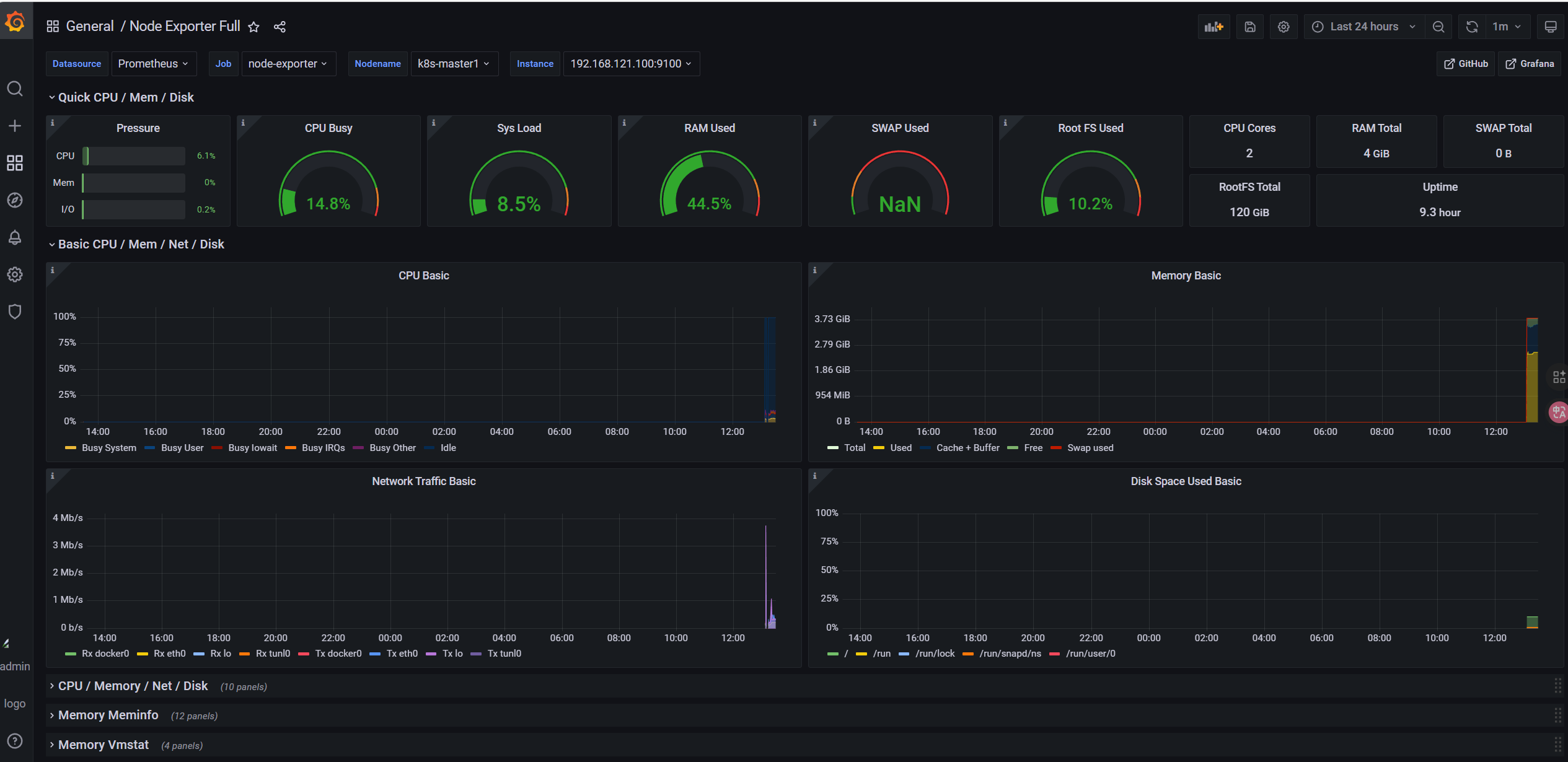

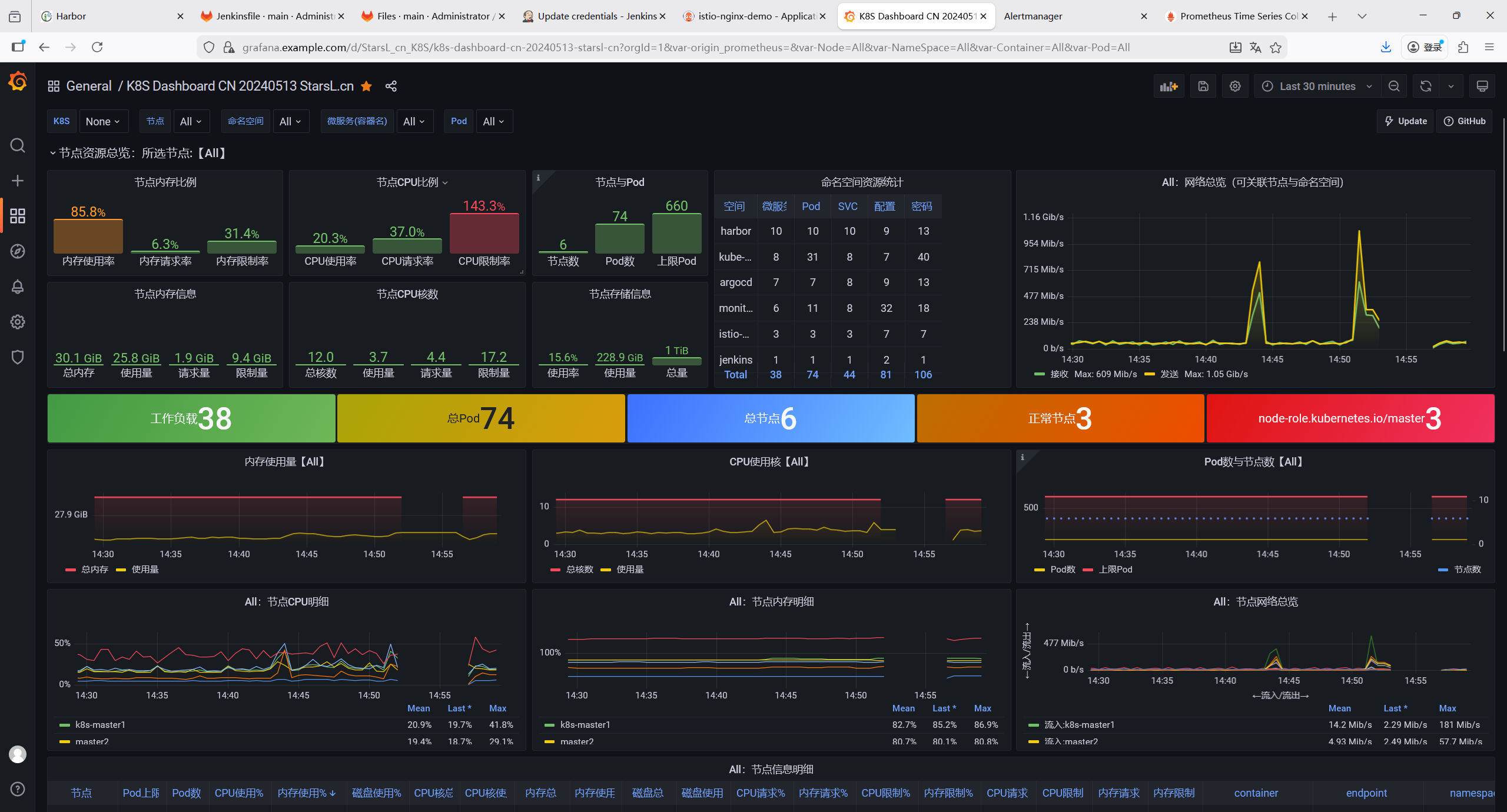

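8.6 Access Grafana

- Open http://grafana.example.com in a browser.
- Log in with user admin and password 123456.
- Import the official dashboards by ID:
  - Host status monitoring: 1860
  - K8s cluster monitoring: 13105
  - K8s etcd monitoring: 3070
  - K8s API server: 12006
  - K8s Pods monitoring: 11600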

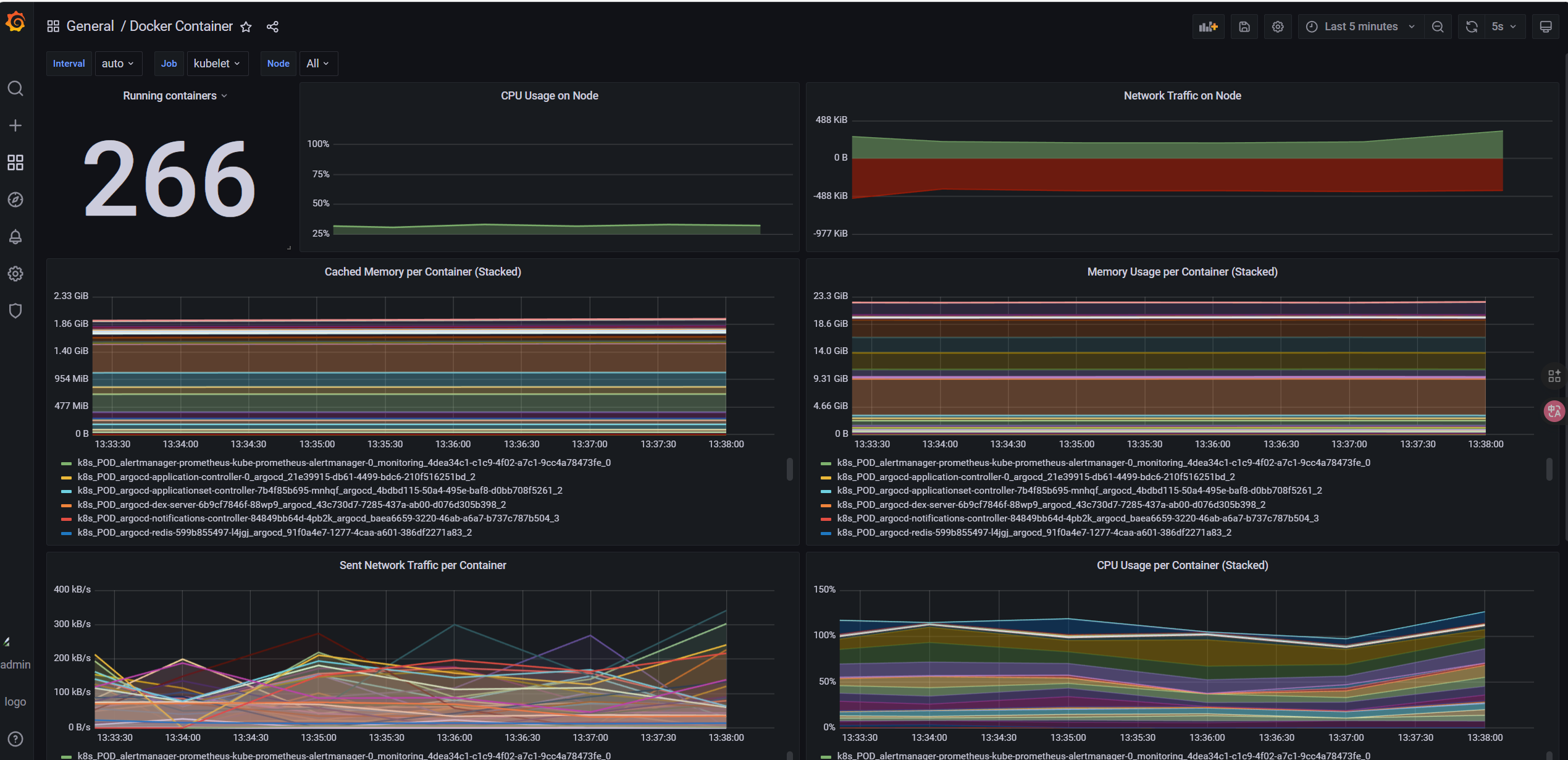

8.6.1 节点监控

8.6.2 集群监控

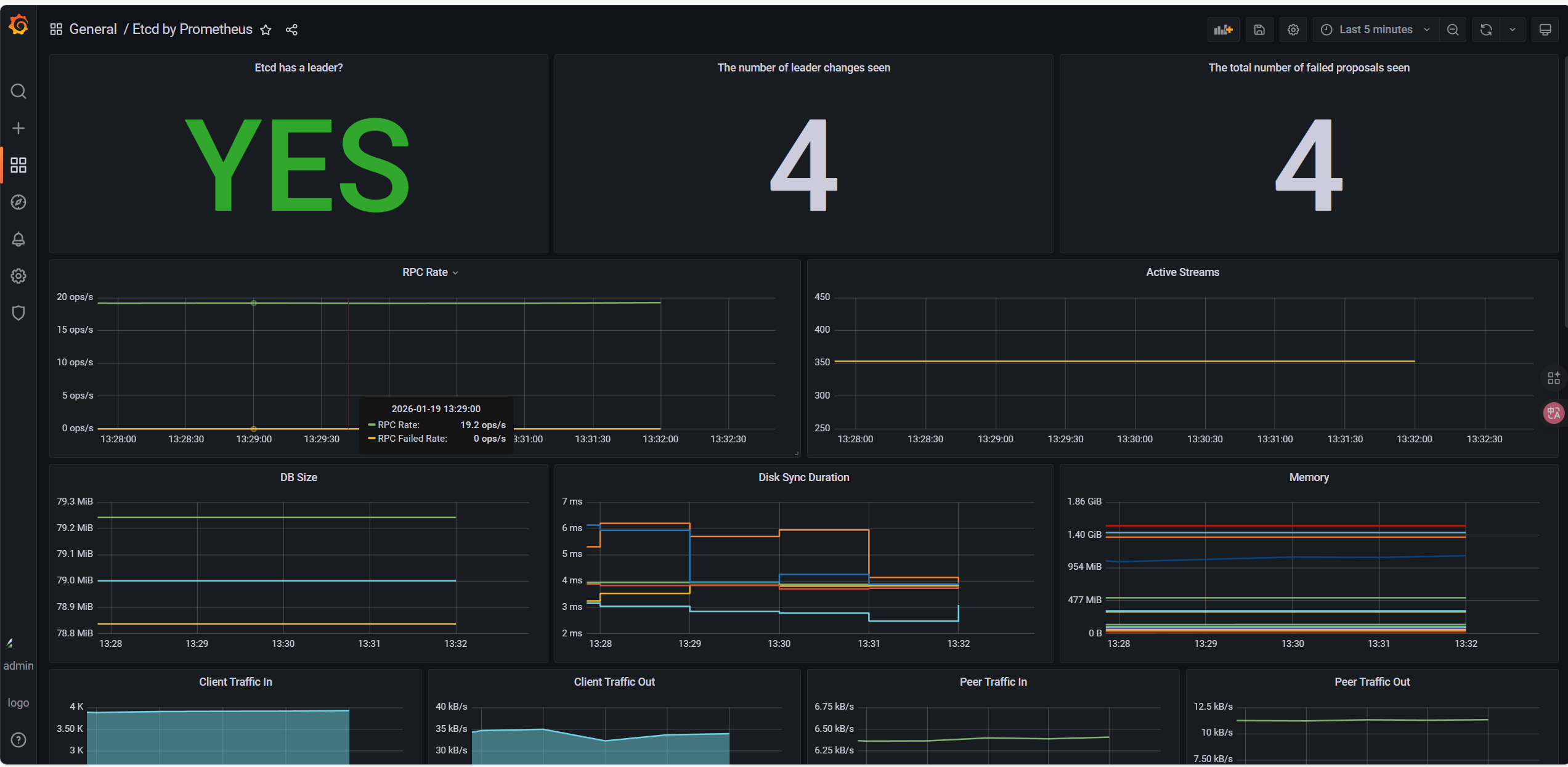

8.6.3 etcd监控

8.6.4 apiServer

8.6.5 pods监控

8.7 AlertManager邮箱告警配置

告警规则helm包里面自带了只需要配置邮箱即可

bash

# AlertManager 配置(持久化 + Ingress + 修复后模板)

alertmanager:

# 配置文件添加内容-------------------------------

config:

global:

resolve_timeout: 5m

# QQ邮箱SMTP配置

smtp_smarthost: 'smtp.qq.com:587'

smtp_from: '3127103271@qq.com'

smtp_auth_username: '3127103271@qq.com'

smtp_auth_password: 'pbinvybmgzzudecd'

smtp_require_tls: true

# 路由规则(修复接收器名称 + 补充severity分组)

route:

receiver: 'qq-email-receiver' # 修正:与接收器名称一致

group_by: ['alertname', 'cluster', 'service', 'severity'] # 补充severity,适配模板

group_wait: 10s

group_interval: 10s

repeat_interval: 1h

# Watchdog 规则:避免内置告警发送邮件

routes:

- match:

alertname: Watchdog

receiver: "null"

# 接收器配置(合并重复定义 + 模板添加默认值)

receivers:

- name: 'null'

- name: 'qq-email-receiver'

email_configs:

- to: '3386733930@qq.com'

send_resolved: true # 告警恢复时发送通知

# 抑制规则:critical级别告警触发时,抑制同组的warning级别告警

inhibit_rules:

- source_match:

severity: 'critical'

target_match:

severity: 'warning'

equal: ['alertname', 'cluster', 'service']

# 结束----------------------------------------------

alertmanagerSpec:

storage:

volumeClaimTemplate:

spec:

storageClassName: nfs-storage-monitoring

accessModes: ["ReadWriteOnce"]

resources:

requests:

storage: 10Gi

ingress:

ingressClassName: nginx

enabled: true

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /

hosts:

- alertmanager.example.com # 域名

paths: ["/"]

# 这个版本有bug必须重新部署才生效

root@k8s-master1:~/yaml/monitoring# helm uninstall prometheus -n monitoring

release "prometheus" uninstalled

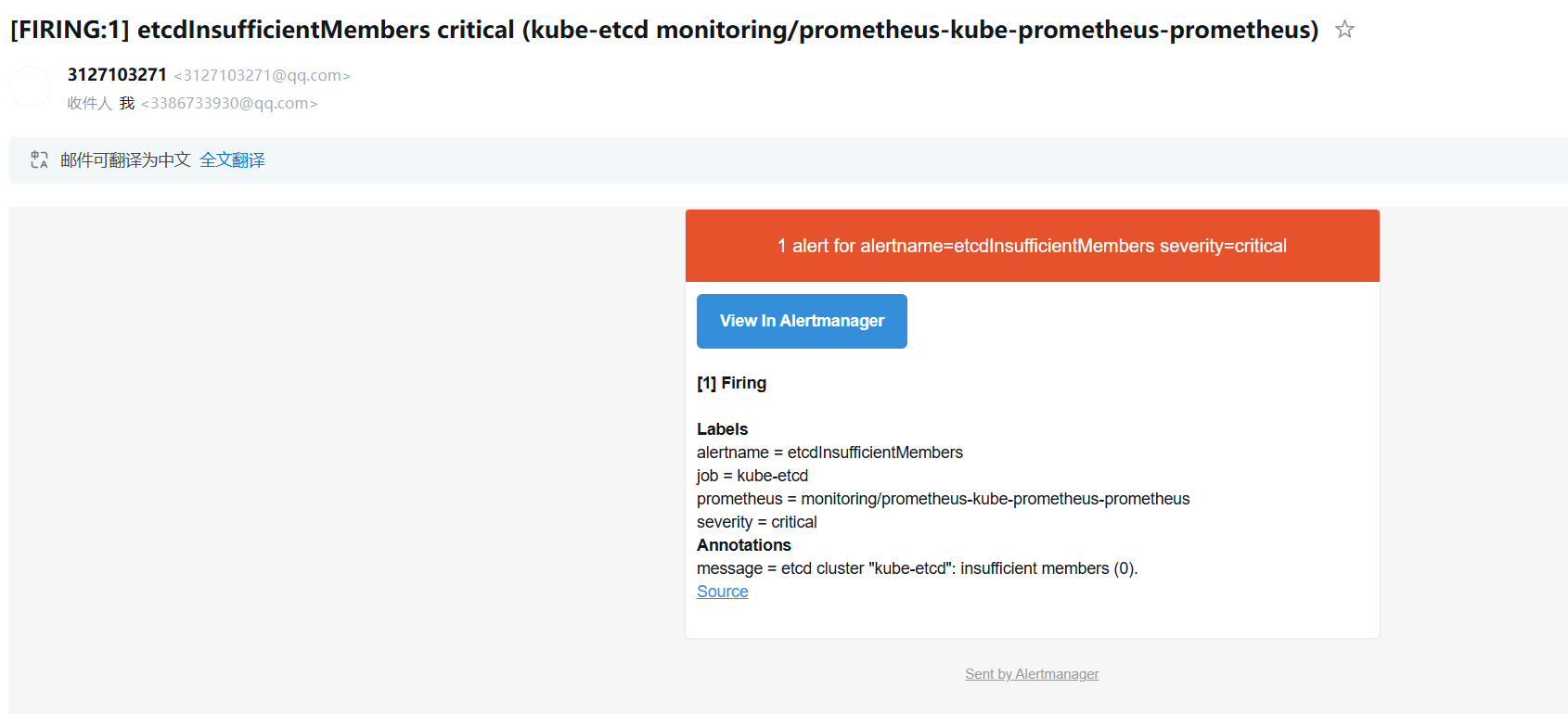

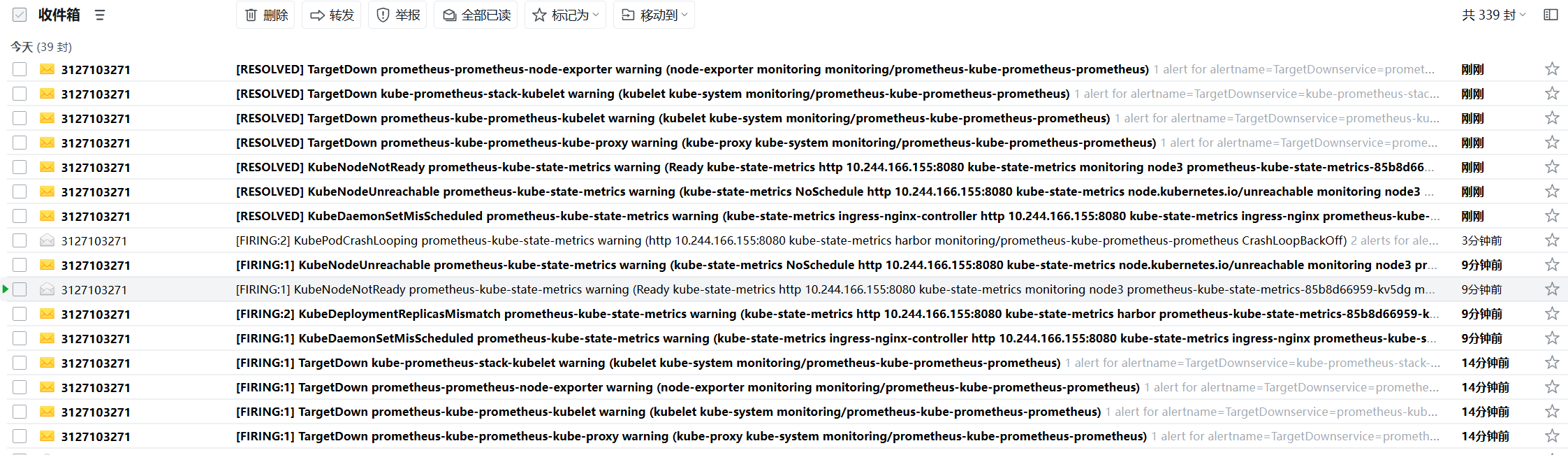

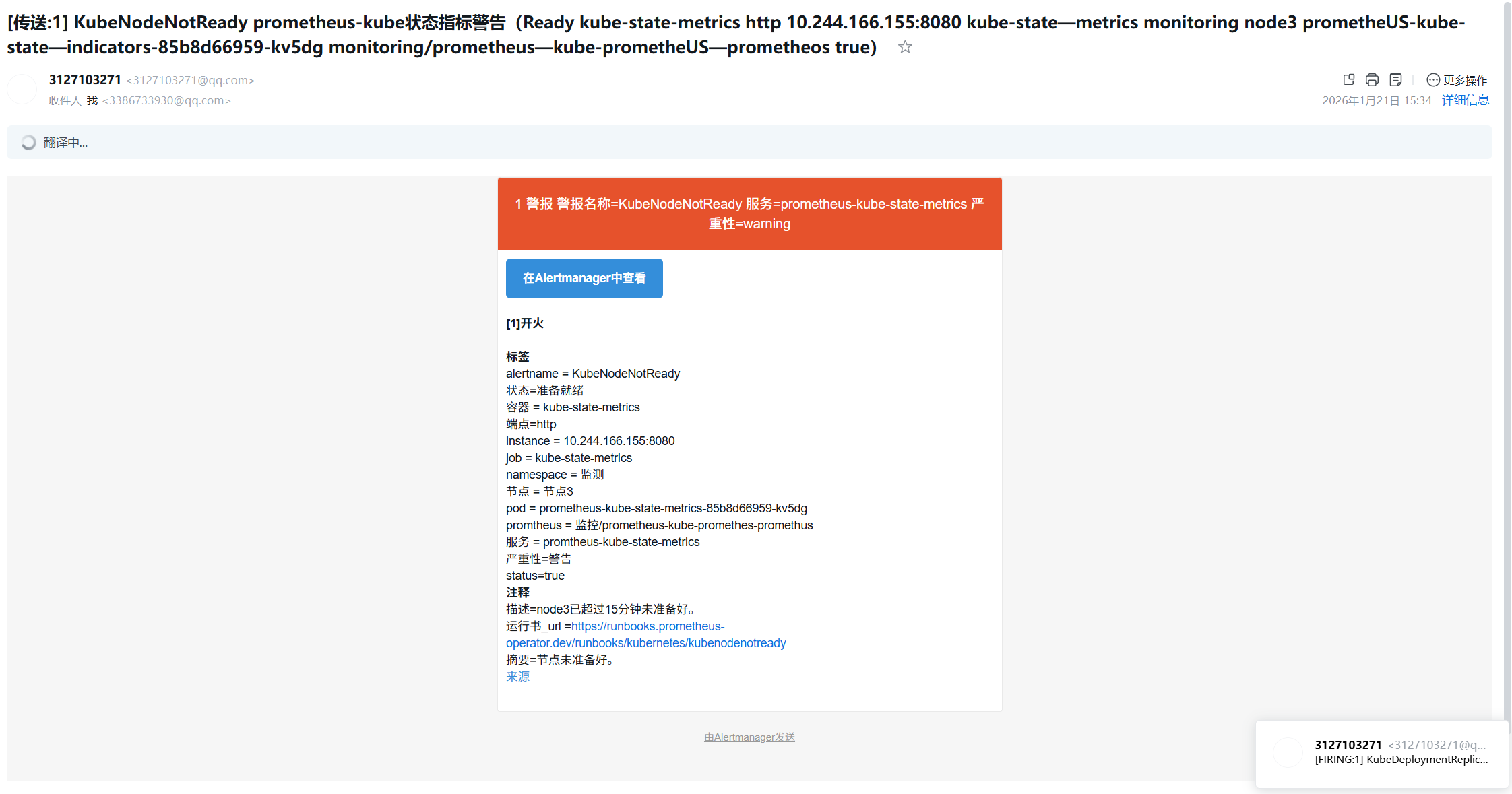

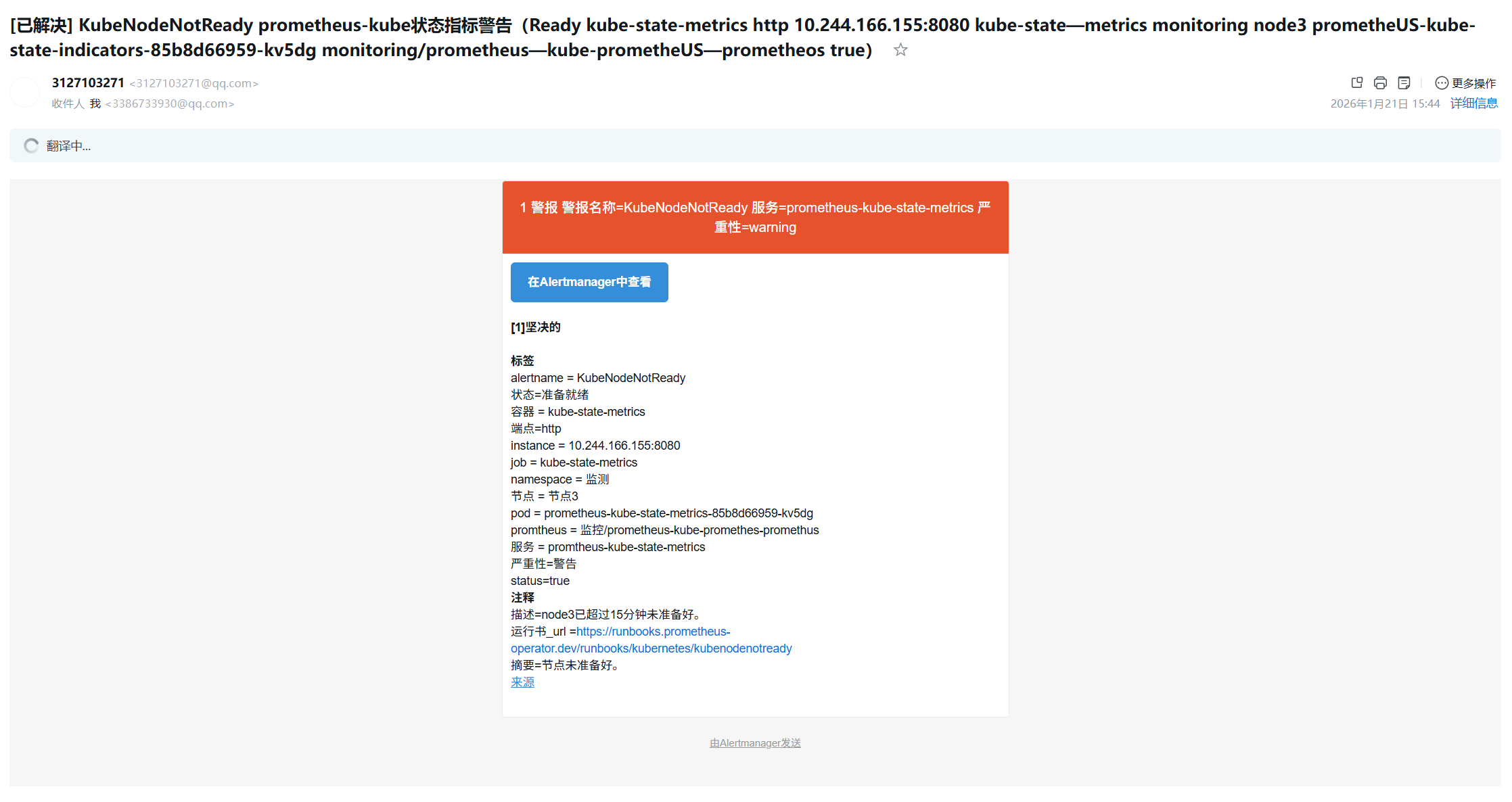

root@k8s-master1:~/yaml/monitoring# helm install prometheus prometheus-community/kube-prometheus-stack -f values.yaml --namespace monitoring --version 31.0.0之前自带的etcd采集指标的问题没有修复,就会收到邮箱告警

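Shutting down any one server to simulate an outage produces an alert that the node has not been ready for more than 15 minutes.

Once the node is back, a resolved notification follows.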
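The comment on the route in the configuration above points at a typical mistake: a `receiver:` that names something not defined under `receivers:`. A rough line-based check can catch this before a Helm upgrade. The sketch assumes the simple one-key-per-line layout used in this values.yaml (it is not a YAML parser, and any other `- name:` entry in the file counts as a defined receiver); `undefined_receivers` is a helper name made up for illustration.

```shell
#!/usr/bin/env bash
# Print every receiver referenced by a "receiver:" line that has no
# matching "- name:" entry in the same file.
undefined_receivers() {
  local file="$1" referenced defined name
  referenced=$(sed -n "s/^[[:space:]]*receiver:[[:space:]]*[\"']\{0,1\}\([^\"'#[:space:]]*\).*/\1/p" "$file" | sort -u)
  defined=$(sed -n "s/^[[:space:]]*-[[:space:]]*name:[[:space:]]*[\"']\{0,1\}\([^\"'#[:space:]]*\).*/\1/p" "$file" | sort -u)
  for name in $referenced; do
    printf '%s\n' "$defined" | grep -qx -- "$name" || printf '%s\n' "$name"
  done
}

# Demo: the route points at a receiver whose definition is misspelled.
sample=$(mktemp)
cat > "$sample" <<'EOF'
route:
  receiver: 'qq-email-receiver'
  routes:
    - match:
        alertname: Watchdog
      receiver: "null"
receivers:
  - name: 'null'
  - name: 'qq-email'
EOF
undefined_receivers "$sample"
```

Called as `undefined_receivers values.yaml`, an empty result means every referenced receiver has a definition.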

9 Istio deployment and configuration

9.1 Download and install Istio 1.11.8

bash

root@k8s-master1:~/yaml# mkdir istio && cd istio

# Download Istio 1.11.8 (compatible with k8s 1.20.6)
root@k8s-master1:~/yaml/istio# curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.11.8 TARGET_ARCH=x86_64 sh -

root@k8s-master1:~/yaml/istio# cd istio-1.11.8

# Put istioctl on the PATH
root@k8s-master1:~/yaml/istio/istio-1.11.8# cp bin/istioctl /usr/local/bin/

root@k8s-master1:~/yaml/istio/istio-1.11.8# chmod +x /usr/local/bin/istioctl

root@k8s-master1:~/yaml/istio/istio-1.11.8# source ~/.bashrc

# Install Istio (demo profile, includes all core components)
root@k8s-master1:~/yaml/istio/istio-1.11.8# istioctl install --set profile=demo -y

# Verify the Istio deployment (all Pods Running)
root@k8s-master1:~/yaml/istio/istio-1.11.8# kubectl get pods -n istio-system
NAME                                   READY   STATUS    RESTARTS   AGE
istio-egressgateway-66854b84df-zdvpv   1/1     Running   0          4h34m
istiod-7f75778f86-2ktn8                1/1     Running   0          4h34m

9.2 Enable automatic sidecar injection (microservice namespace)

Create a dedicated namespace for the microservices and enable automatic Istio sidecar injection on it. This is the core mechanism: Istio controls microservice traffic through the sidecar.

bash

# Create the namespace
root@k8s-master1:~/yaml/istio# kubectl create namespace istio-demo

# Enable automatic sidecar injection
root@k8s-master1:~/yaml/istio# kubectl label namespace istio-demo istio-injection=enabled

# Verify the label
root@k8s-master1:~/yaml/istio# kubectl get ns istio-demo --show-labels
NAME         STATUS   AGE   LABELS
istio-demo   Active   20h   istio-injection=enabled

9.3 Configure Nginx-Ingress to forward traffic to Istio

All services are accessed through nginx-ingress, so nginx-ingress must be configured to forward requests for the domain to the Istio IngressGateway:

bash

root@k8s-master1:~/yaml/istio# vim ingress-istio.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: istio-gateway-ingress
  namespace: istio-system
  annotations:
    kubernetes.io/ingress.class: "nginx"                # nginx-ingress class
    nginx.ingress.kubernetes.io/ssl-redirect: "false"   # HTTPS redirect disabled during testing
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - host: "nginx-demo.example.com"   # domain name
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: istio-ingressgateway
            port:
              number: 80

# Apply the manifest
root@k8s-master1:~/yaml/istio# kubectl apply -f ingress-istio.yaml

9.4 Delete the webhook (test environment)
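Deleting the validating webhook turns off Istio's admission-time validation of its configuration, so it may be useful to keep a copy of the object before removing it. A minimal sketch, not part of the original procedure; the function name `backup_webhook` and the output directory are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original guide): saves a
# ValidatingWebhookConfiguration to <dir>/<name>.yaml before it is deleted.
backup_webhook() {
  local name="$1" out_dir="${2:-.}"
  local file="$out_dir/$name.yaml"
  # Do not leave an empty file behind if the object cannot be read
  if ! kubectl get validatingwebhookconfiguration "$name" -o yaml > "$file"; then
    rm -f "$file"
    return 1
  fi
  echo "saved $file"
}
```

A possible invocation is `backup_webhook istiod-default-validator /root/yaml/istio`. The saved manifest is mainly a record of what was removed; server-set fields such as `resourceVersion` and `uid` would need to be stripped before it could be re-created from the file.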

bash

# Delete the Istio validating webhook
kubectl delete validatingwebhookconfiguration istiod-default-validator

# Verify the deletion
kubectl get validatingwebhookconfiguration | grep istio

10 GitLab Repository Preparation (two-repository model)

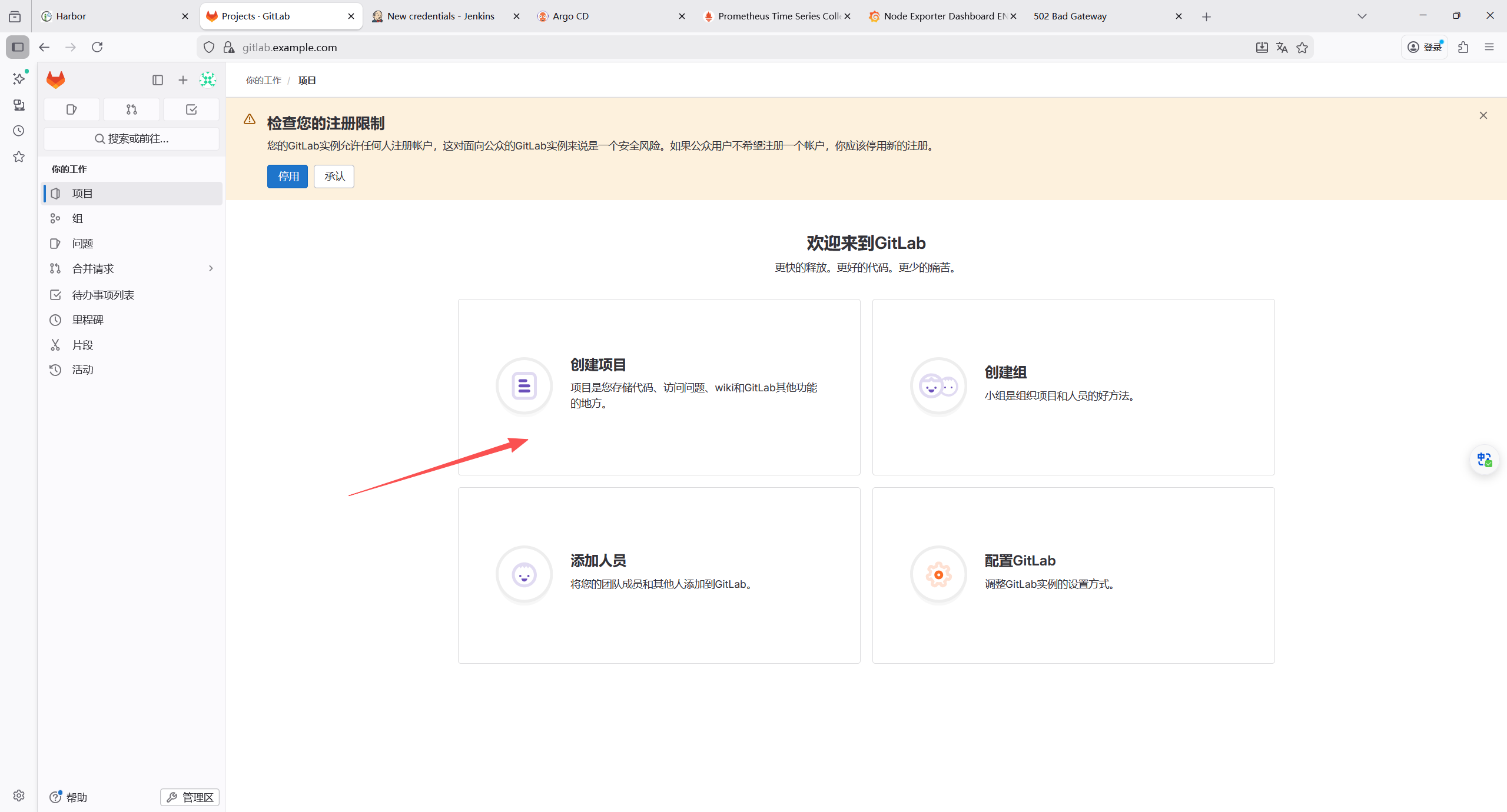

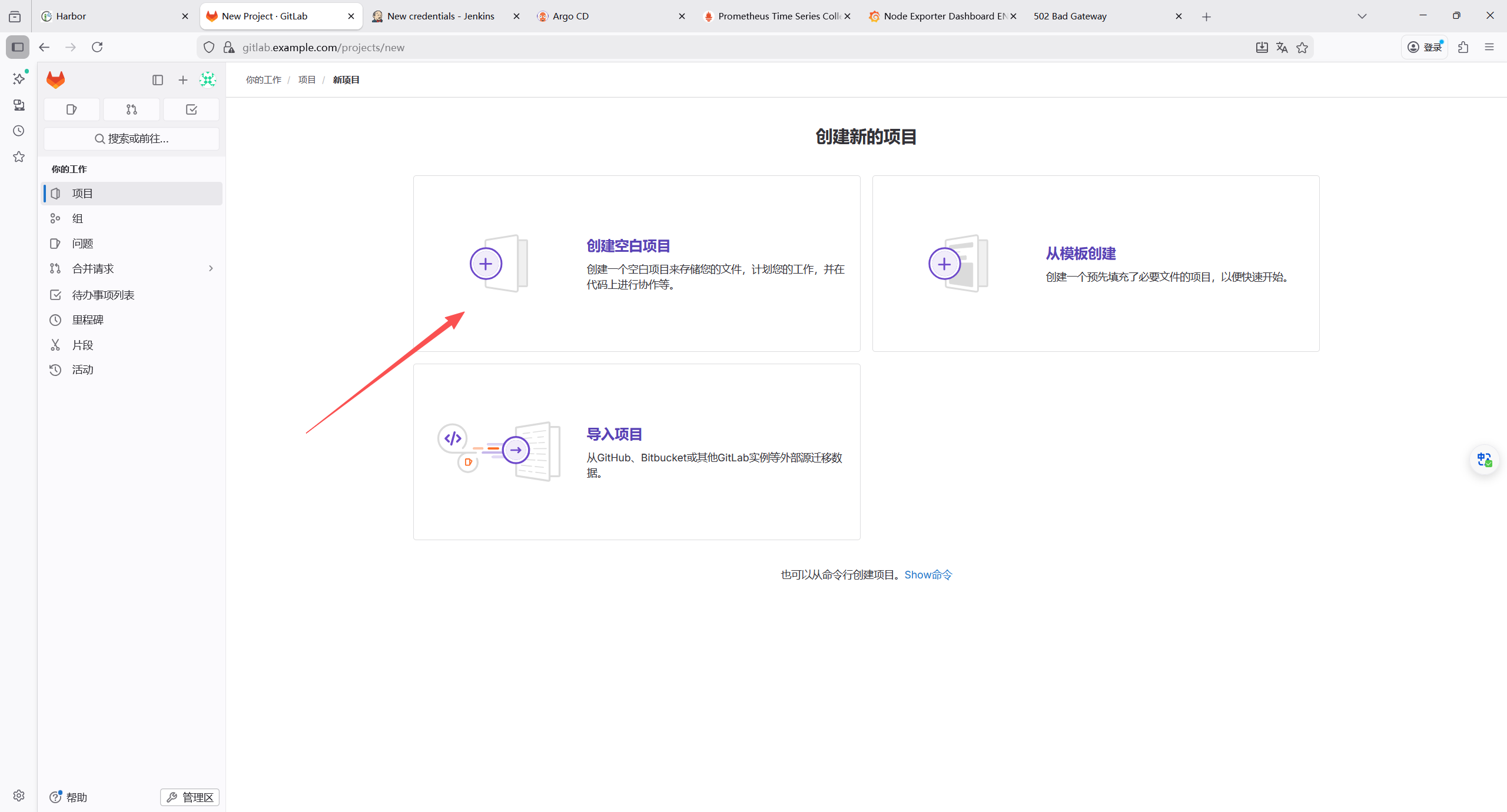

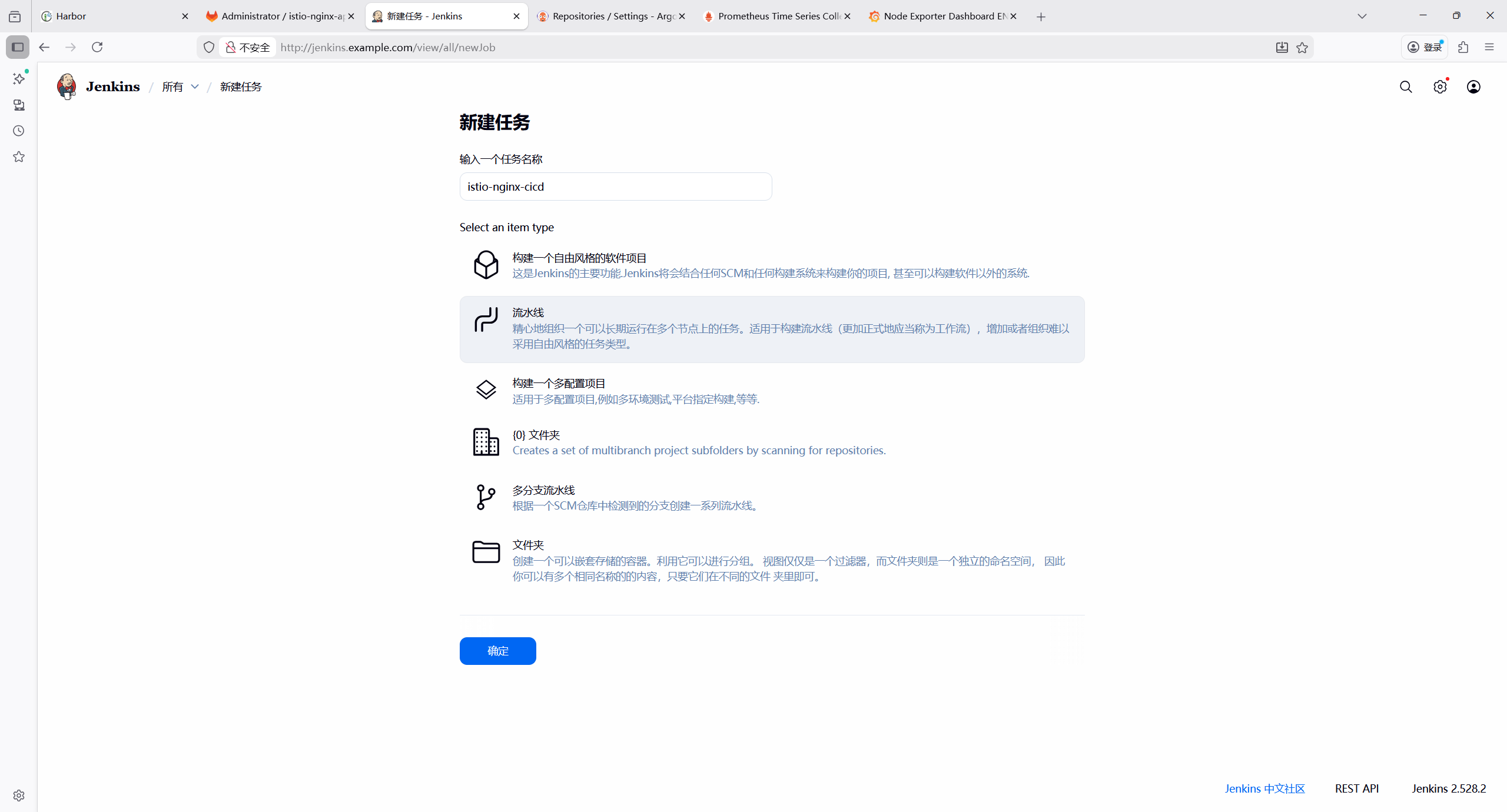

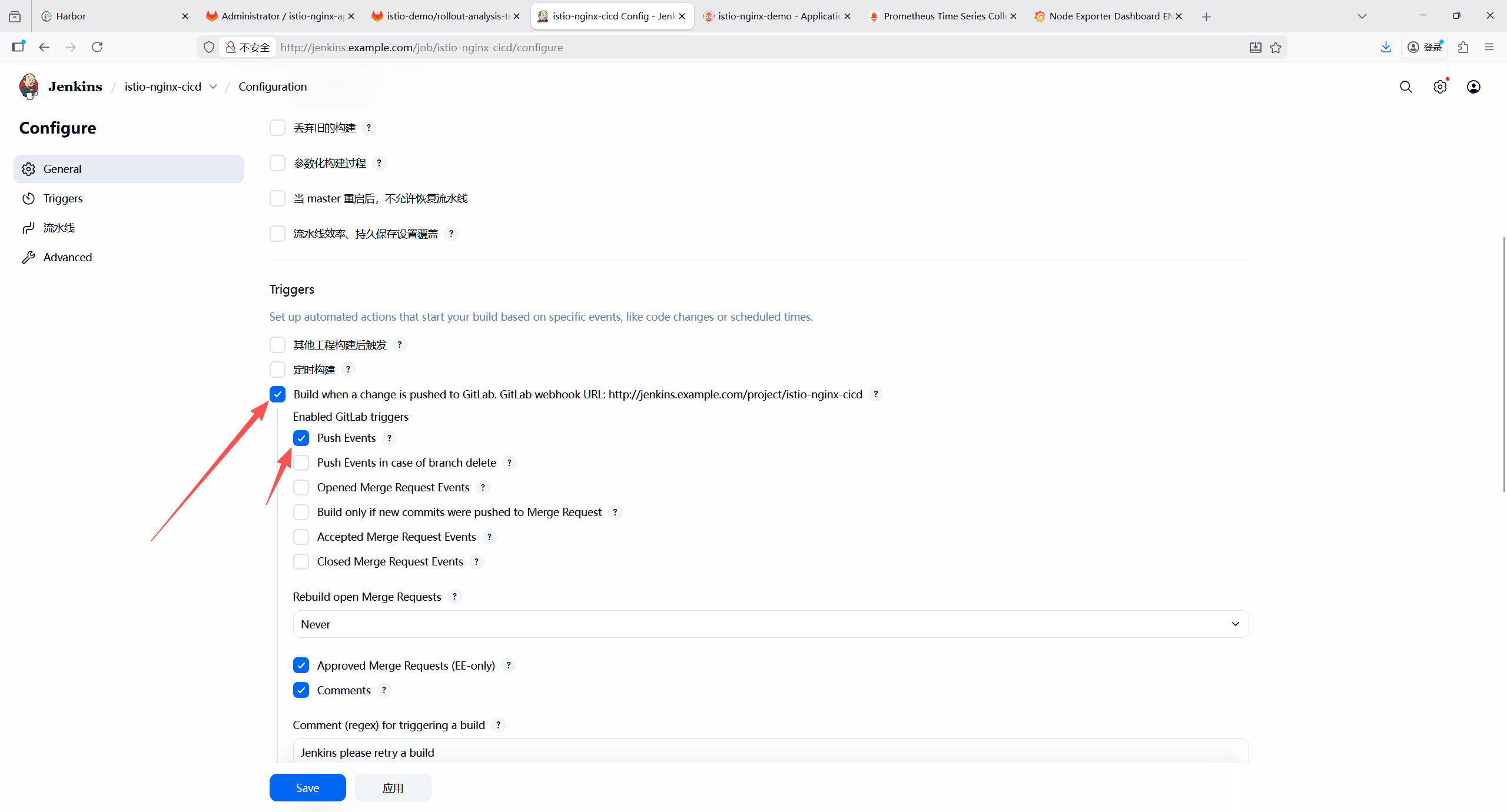

Two GitLab repositories need to be created.

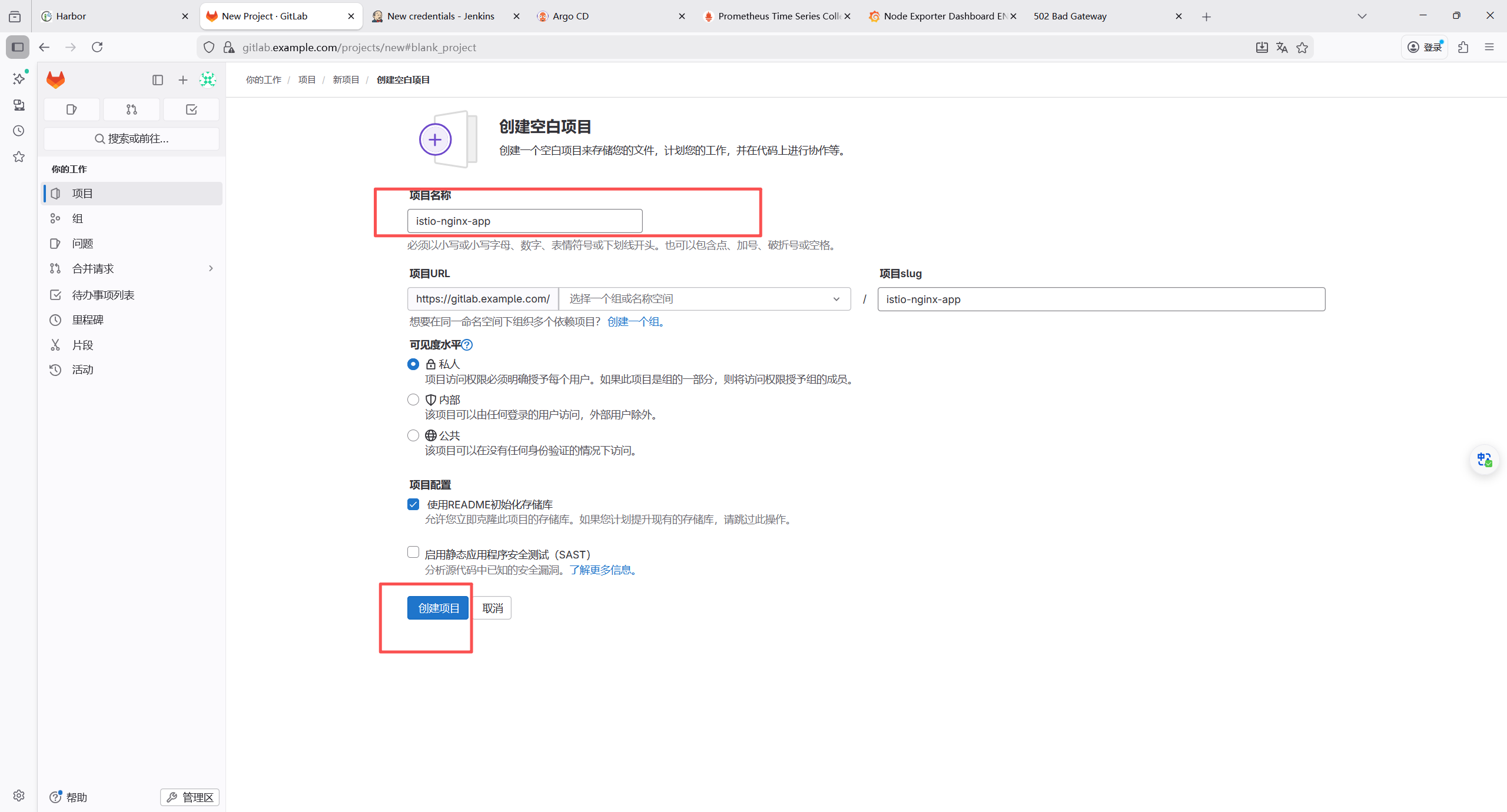

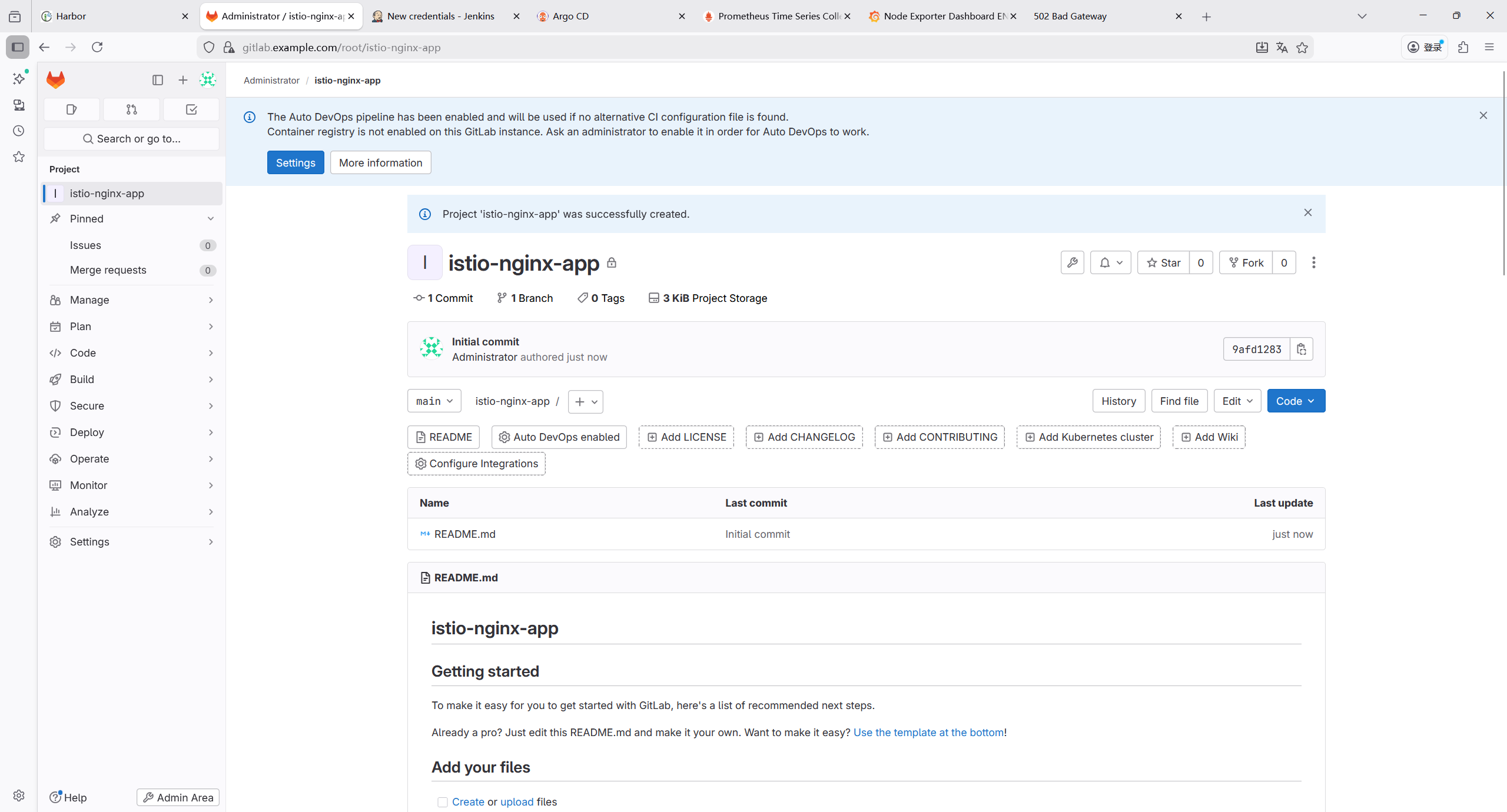

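10.1 Repository 1: application code repository (istio-nginx-app)

Holds the nginx microservice code and the Dockerfile; Jenkins builds the image from it.

10.1.1 Repository layout

bash

istio-nginx-app/
├── Jenkinsfile
├── Dockerfile          # builds the nginx image
└── html/               # custom page (distinguishes versions)
    └── index.html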
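Since Jenkins builds from exactly these files, a quick layout check before pushing can catch a missing file early. A minimal sketch; the function name `check_app_repo_layout` is hypothetical and the file list simply mirrors the tree above.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original guide): prints every file of
# the expected layout that is missing under $1 and fails if any is absent.
check_app_repo_layout() {
  local repo="$1" missing=0 f
  for f in Jenkinsfile Dockerfile html/index.html; do
    if [ ! -f "$repo/$f" ]; then
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_app_repo_layout ~/yaml/nginx-app/istio-nginx-app && git push` pushes only when all three files are present.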

10.1.2 Clone the GitLab application code repository locally

bash

root@k8s-master1:~/yaml# mkdir nginx-app/
root@k8s-master1:~/yaml# cd nginx-app
root@k8s-master1:~/yaml/nginx-app# git config --global user.name "chenjun"
root@k8s-master1:~/yaml/nginx-app# git config --global user.email "3127103271@qq.com"
root@k8s-master1:~/yaml/nginx-app# git config --global color.ui true
root@k8s-master1:~/yaml/nginx-app# git config --list
user.name=chenjun
user.email=3127103271@qq.com
color.ui=true
root@k8s-master1:~/yaml/nginx-app# git init
Initialized empty Git repository in /root/yaml/nginx-app/.git/
root@k8s-master1:~/yaml/nginx-app# git clone git@10.110.211.47:root/istio-nginx-app.git
Cloning into 'istio-nginx-app'...
The authenticity of host '10.110.211.47 (10.110.211.47)' can't be established.
ECDSA key fingerprint is SHA256:Idgr3dsHkKBErB19MSEAPy5/cxTyXTYKI68bOzkRutA.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added '10.110.211.47' (ECDSA) to the list of known hosts.
remote: Enumerating objects: 3, done.
remote: Counting objects: 100% (3/3), done.
remote: Compressing objects: 100% (2/2), done.
remote: Total 3 (delta 0), reused 0 (delta 0), pack-reused 0
Receiving objects: 100% (3/3), done.

1. Write the Dockerfile

bash

root@k8s-master1:~/yaml/nginx-app/istio-nginx-app# vim Dockerfile

# Base image pulled from the private Harbor registry
FROM harbor.example.com/public-image/nginx:1.21.6

# Copy the custom page
COPY html/index.html /usr/share/nginx/html/index.html

# Expose the port
EXPOSE 80

# Start nginx in the foreground
CMD ["nginx", "-g", "daemon off;"]

2. Write index.html (v1)

bash

root@k8s-master1:~/yaml/nginx-app/istio-nginx-app# mkdir -p html
root@k8s-master1:~/yaml/nginx-app/istio-nginx-app# vim html/index.html

<h1>NGINX Service v1 - GitOps CI/CD</h1>

3. Write the Jenkinsfile
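The central step of the pipeline that follows is rewriting the image tag in the config repository's `nginx-rollout.yaml` with `sed`. That substitution can be tried locally before it runs in Jenkins. A minimal sketch using the same pattern; the function name `set_image_tag` is hypothetical, and the dots in the registry name are escaped here as a small tightening of the pattern.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original guide): replaces only the tag
# of harbor.example.com/istio-demo/nginx in file $1 with tag $2.
set_image_tag() {
  local file="$1" tag="$2"
  # [^ ]* consumes the old tag up to the next space or the end of the line;
  # -i.bak works with both GNU and BSD sed, the backup is removed afterwards
  sed -i.bak "s|harbor\.example\.com/istio-demo/nginx:[^ ]*|harbor.example.com/istio-demo/nginx:${tag}|g" "$file" \
    && rm -f "$file.bak"
}
```

For example, `set_image_tag nginx-rollout.yaml v7-20240101120000` followed by `git diff` shows exactly the one-line change that ArgoCD would pick up.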

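groovy

/**
 * Jenkins Pipeline CI/CD for the microservice (Istio + Nginx demo)
 * Core flow: pull application code → build Docker image → push to Harbor → update ArgoCD config repository → clean up images
 */
pipeline {
    // Agent: run on any available Jenkins node
    agent any

    // Environment variables (configuration kept in one place for easier maintenance)
    environment {
        // Application code repository (Nginx stands in for the microservice code)
        GITLAB_APP_REPO = "http://10.110.211.47/root/istio-nginx-app.git"
        // ArgoCD config repository (holds the K8s/Istio deployment manifests)
        GITLAB_CONFIG_REPO = "http://10.110.211.47/root/istio-nginx-config.git"
        // GitLab host IP (used to build the clone URL)
        GITLAB_REPO_HOST = "10.110.211.47"
        // Harbor registry address
        HARBOR_REGISTRY = "harbor.example.com"
        // Project name in Harbor
        HARBOR_PROJECT = "istio-demo"
        // Image name (the Nginx service)
        IMAGE_NAME = "nginx"
        // Image tag (build number + timestamp, to keep it unique)
        IMAGE_TAG = "v${BUILD_NUMBER}-${new Date().format('yyyyMMddHHmmss')}"
        // Release version marker (canary release)
        VERSION = "canary"
        // Jenkins credential ID of the GitLab token (for password-free access to the GitLab repository)
        GITLAB_TOKEN_CRED_ID = "gitlab-secret"
        // Temporary directory name for the ArgoCD config repository clone
        MANIFEST_CLONE_DIR = "argocd-config"
    }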

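    // Pipeline stages (defined in execution order)
    stages {
        // Stage 1: pull the application code repository (Nginx application source from GitLab)
        stage('Pull App Code') {
            steps {
                echo "===== Pulling application code repository ====="
                // Clone the given branch with the GitSCM plugin
                checkout([$class: 'GitSCM',
                    // Check out the main branch
                    branches: [[name: '*/main']],
                    // Remote repository settings (URL + credentials)
                    userRemoteConfigs: [[
                        url: "${GITLAB_APP_REPO}",
                        credentialsId: "Gitlab-token" // GitLab credential ID configured in Jenkins
                    ]]
                ])
            }
        }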

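        // Stage 2: build the Docker image (an Nginx image carrying version information)
        stage('Build Docker Image') {
            steps {
                echo "===== Building image: ${HARBOR_REGISTRY}/${HARBOR_PROJECT}/${IMAGE_NAME}:${IMAGE_TAG} ====="
                // Replace the ${BUILD_TIME} placeholder in index.html with the current time.
                // The Groovy string is single-quoted so that the shell, not Groovy, expands $(date ...);
                // the original single-quoted sed expression inserted the literal text "$(date ...)".
                // -i.bak keeps the command portable between GNU (Linux) and BSD (macOS) sed.
                sh '''sed -i.bak "s/\\${BUILD_TIME}/$(date '+%Y-%m-%d %H:%M:%S')/g" html/index.html && rm -f html/index.html.bak'''
                // Replace the ${VERSION} placeholder in index.html with the canary version marker
                sh "sed -i.bak 's/\${VERSION}/${VERSION}/g' html/index.html && rm -f html/index.html.bak"
                // Build the Docker image from the Dockerfile in the current directory
                sh "docker build -t ${HARBOR_REGISTRY}/${HARBOR_PROJECT}/${IMAGE_NAME}:${IMAGE_TAG} ."
            }
        }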

        // Stage 3: push the image to Harbor (the private image registry)
        stage('Push Image to Harbor') {
            steps {
                echo "===== Pushing image to Harbor ====="
                // Push the image (the Jenkins node must already be logged in to Harbor)
                sh "docker push ${HARBOR_REGISTRY}/${HARBOR_PROJECT}/${IMAGE_NAME}:${IMAGE_TAG}"
            }
        }

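        // Stage 4: update the ArgoCD config repository (change the image tag in the manifest so that ArgoCD deploys automatically)
        stage('Update ArgoCD Config Repo') {
            steps {
                echo "===== Updating ArgoCD config repository ====="
                // Remove any previous clone so leftover files cannot interfere
                sh "rm -rf ${MANIFEST_CLONE_DIR}"
                // Read the GitLab token from the Jenkins credential store and clone without a password prompt
                withCredentials([string(credentialsId: GITLAB_TOKEN_CRED_ID, variable: 'GITLAB_TOKEN')]) {
                    sh """
                        set -e  # strict mode: abort the script if any command fails
                        # Clone the ArgoCD config repository (authenticating with the OAuth2 token)
                        git clone http://oauth2:\${GITLAB_TOKEN}@\${GITLAB_REPO_HOST}/root/istio-nginx-config.git \${MANIFEST_CLONE_DIR}
                        # Check the clone result and abort on failure
                        if [ ! -d "\${MANIFEST_CLONE_DIR}" ]; then
                            echo "Failed to clone the config repository!"
                            exit 1
                        fi
                    """
                }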

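                // Enter the manifest directory of the config repository (istio-demo is the project directory)
                dir("${MANIFEST_CLONE_DIR}/istio-demo") {
                    // Pre-check: make sure the manifest exists so the replacement cannot fail silently
                    sh """
                        if [ ! -f "nginx-rollout.yaml" ]; then
                            echo "Error: nginx-rollout.yaml not found! Files in the current directory:"
                            ls -la  # list the directory to help debugging
                            exit 1
                        fi
                        # Print the file before the replacement, for comparison
                        echo "===== nginx-rollout.yaml before replacement ====="
                        cat nginx-rollout.yaml
                    """
                    // Core step: replace the image tag in the manifest (only the tag; registry and project path are kept)
                    sh "sed -i.bak 's|harbor.example.com/istio-demo/nginx:[^ ]*|harbor.example.com/istio-demo/nginx:${IMAGE_TAG}|g' nginx-rollout.yaml && rm -f nginx-rollout.yaml.bak"
                    // Verify the result by printing the file after the replacement
                    sh """
                        echo "===== nginx-rollout.yaml after replacement ====="
                        cat nginx-rollout.yaml
                        # Show Git status for tracked files only ('^??' marks untracked files)
                        echo "===== Git status (tracked files only) ====="
                        # '|| true' keeps the step from failing when grep selects no lines
                        git status --porcelain | grep -v '^??' || true
                    """
                    // Configure the Git committer identity (--global applies to the whole Jenkins node)
                    sh "git config --global user.name 'jenkins'"
                    sh "git config --global user.email 'jenkins@example.com'"
                    // Commit only when tracked files have changed, to avoid empty commits
                    sh """
                        # Collect the changes to tracked files (untracked files excluded)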

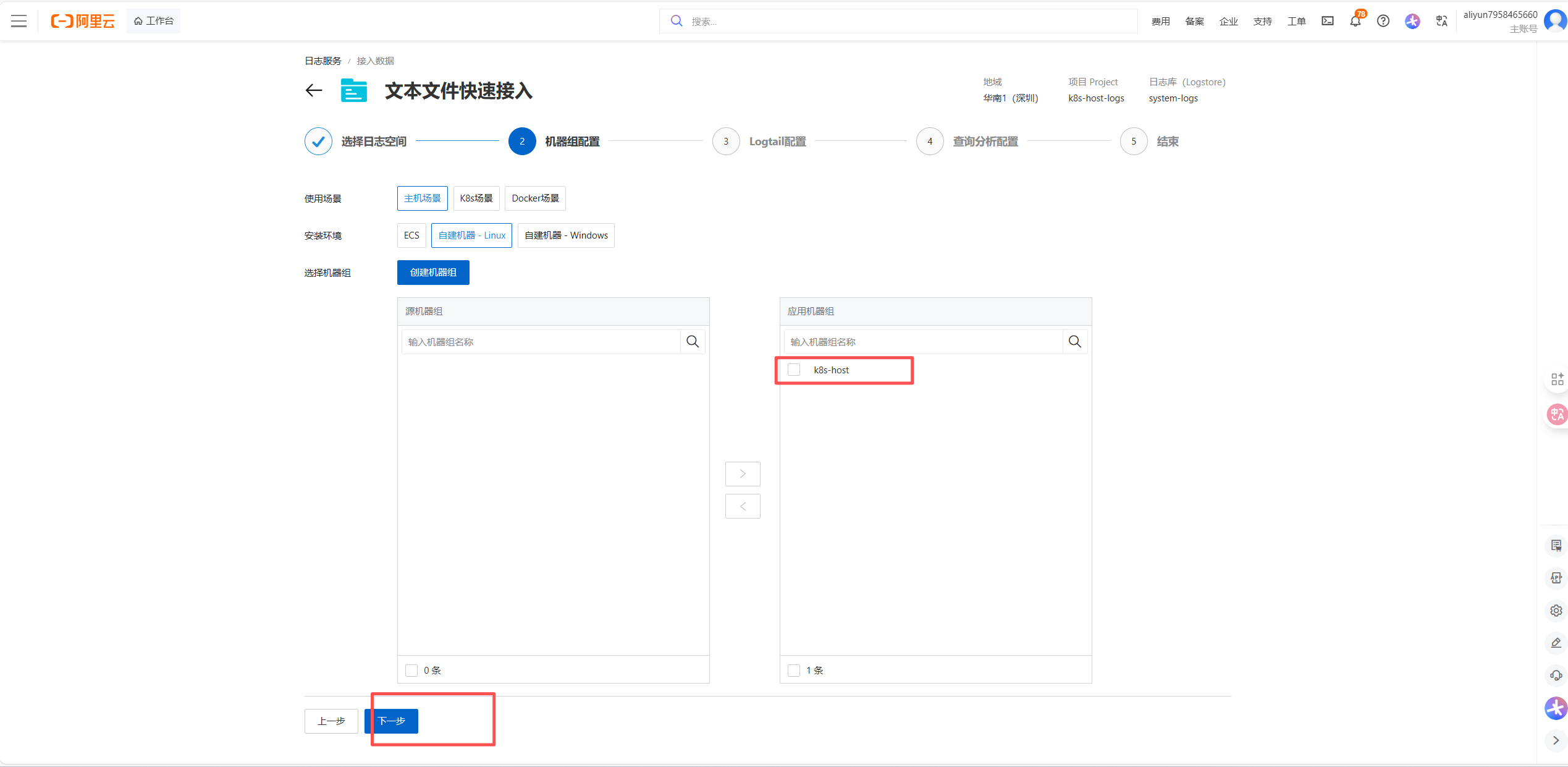

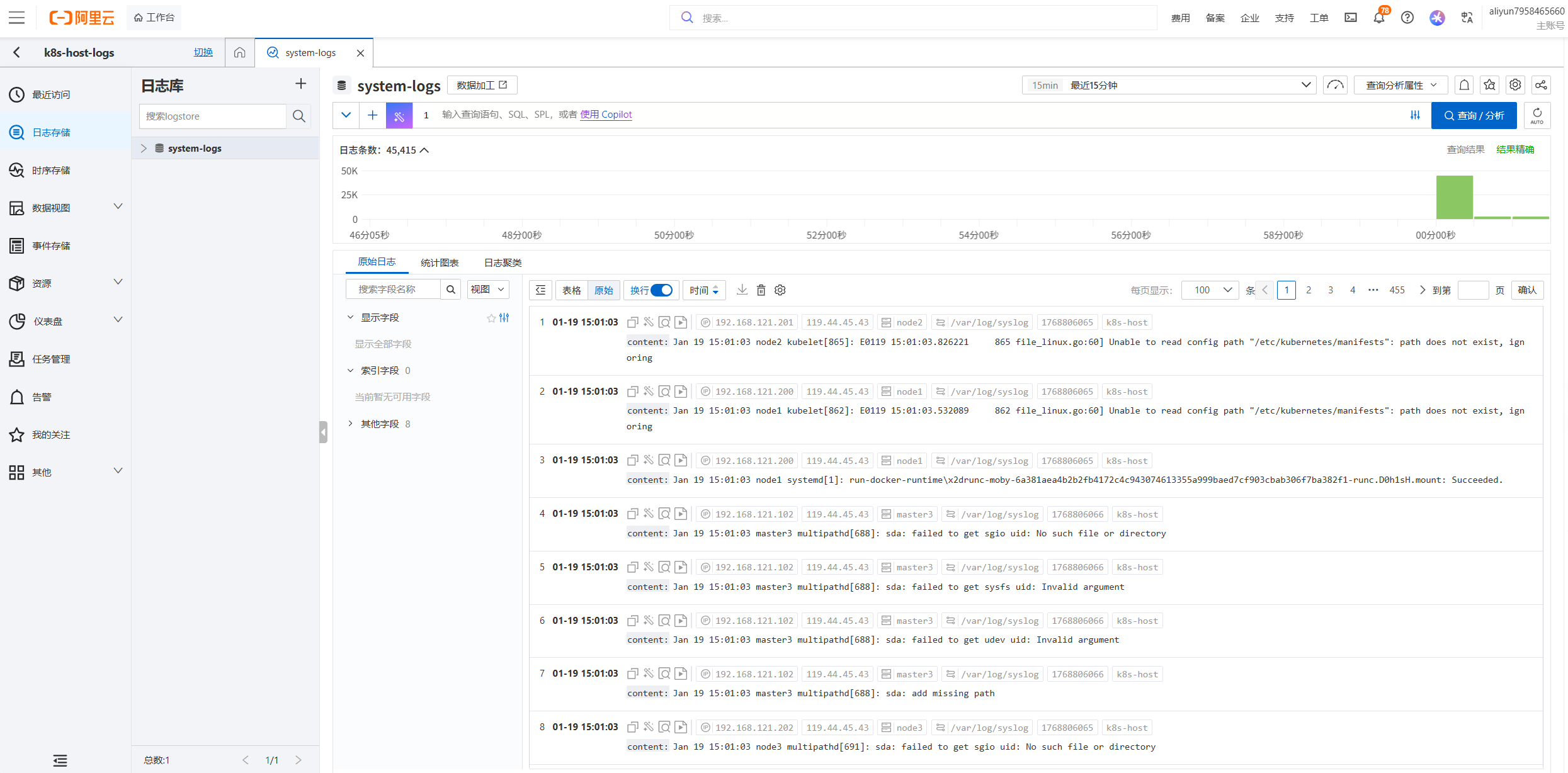

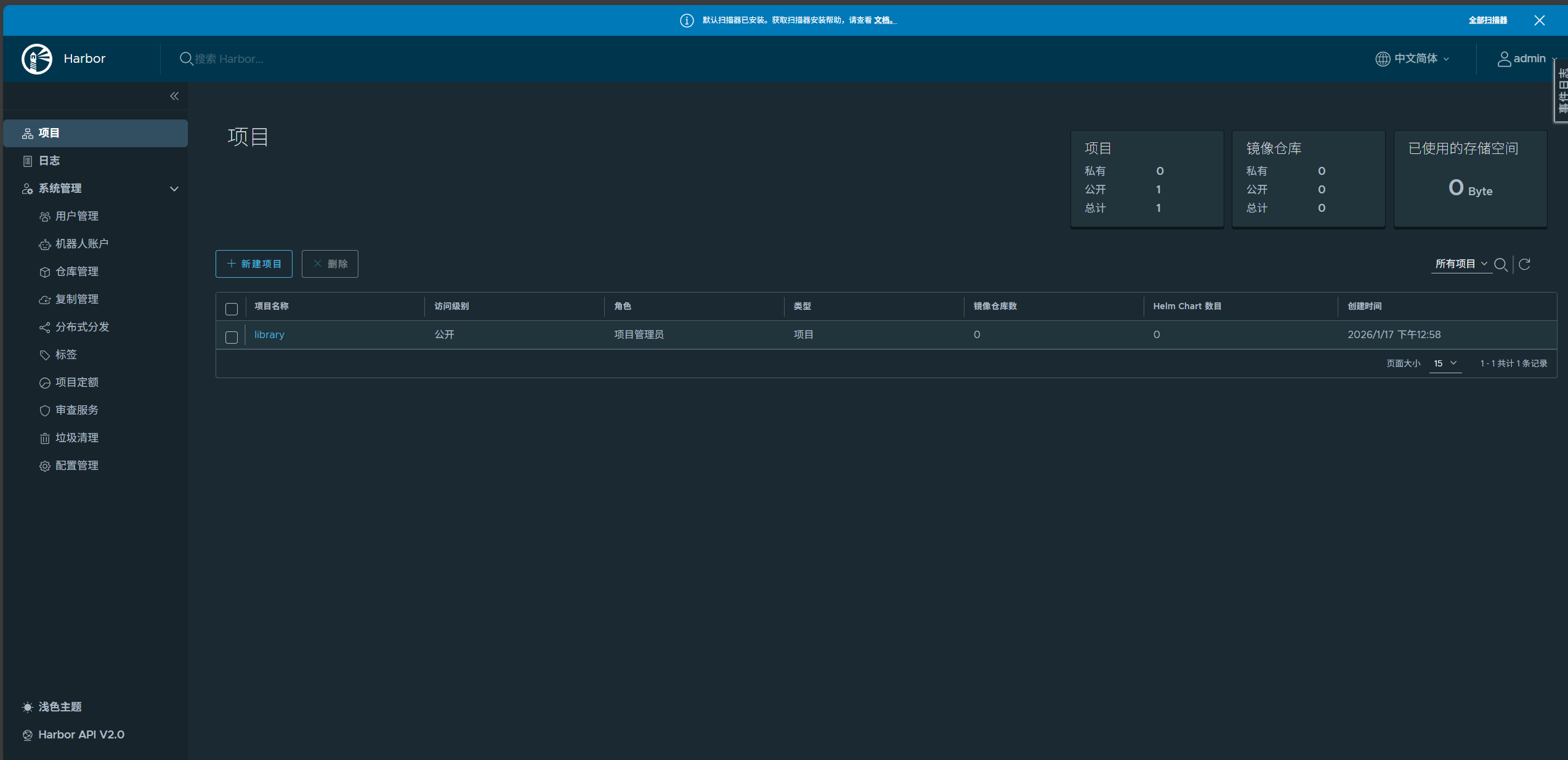

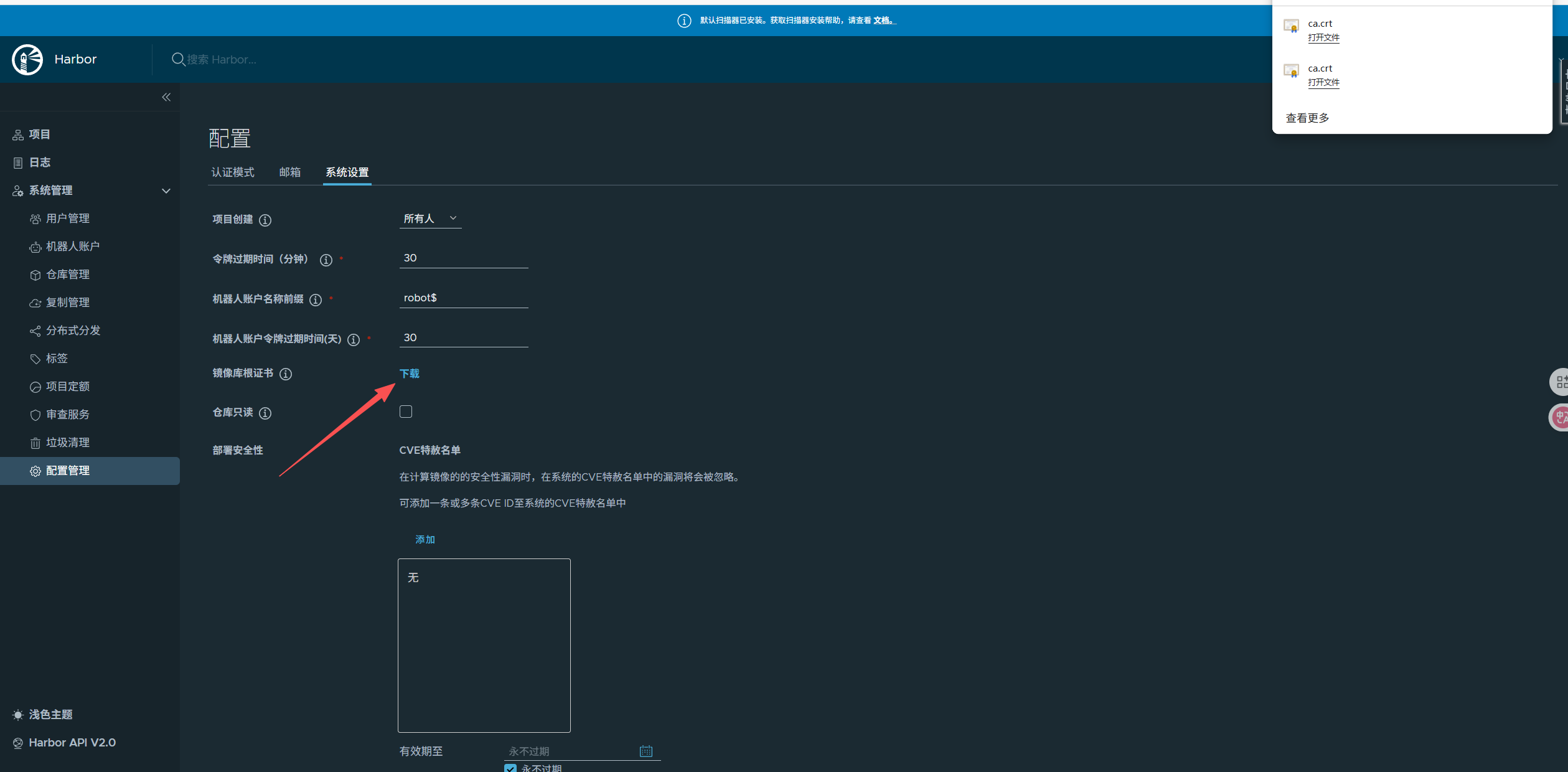

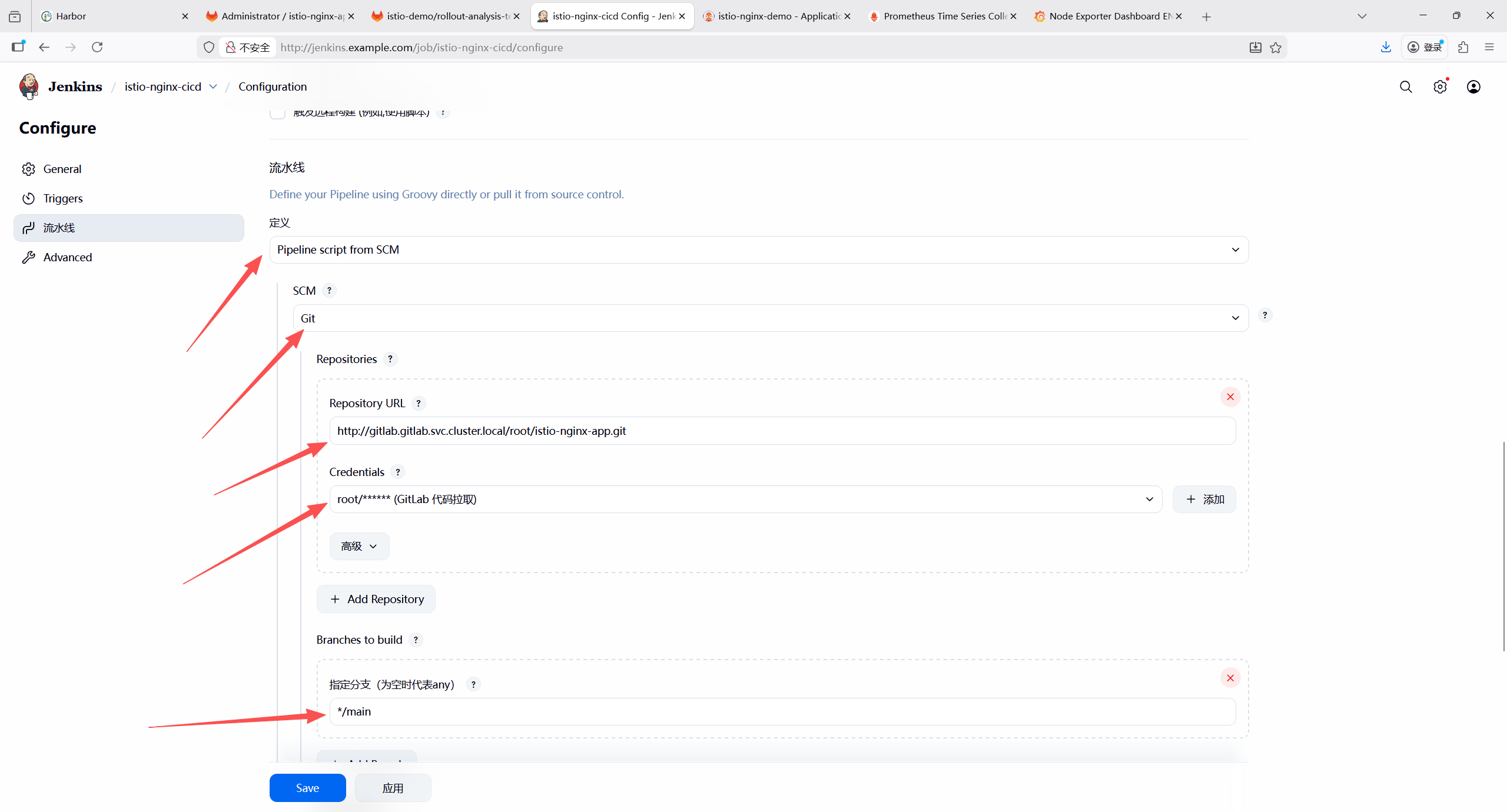

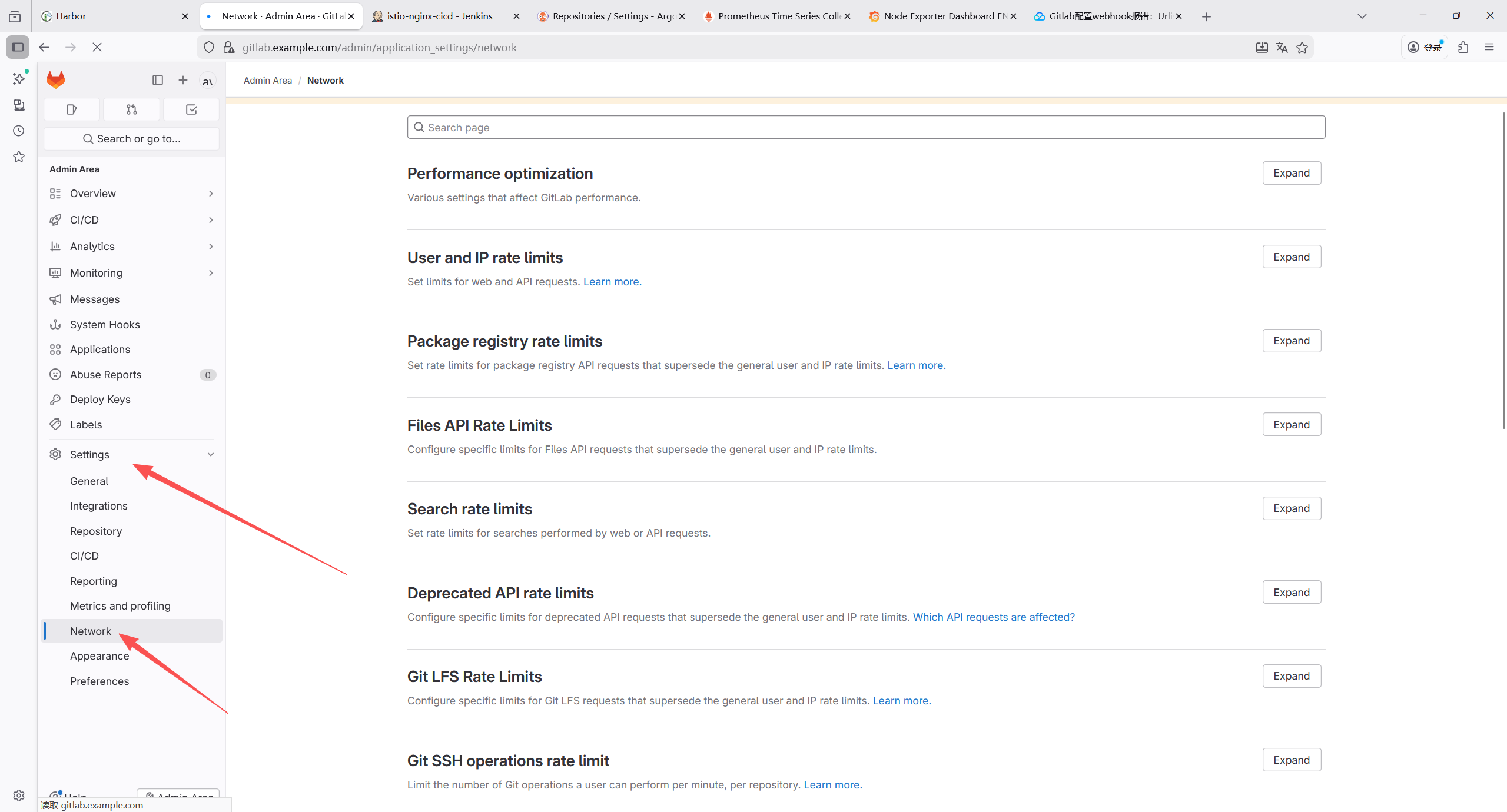

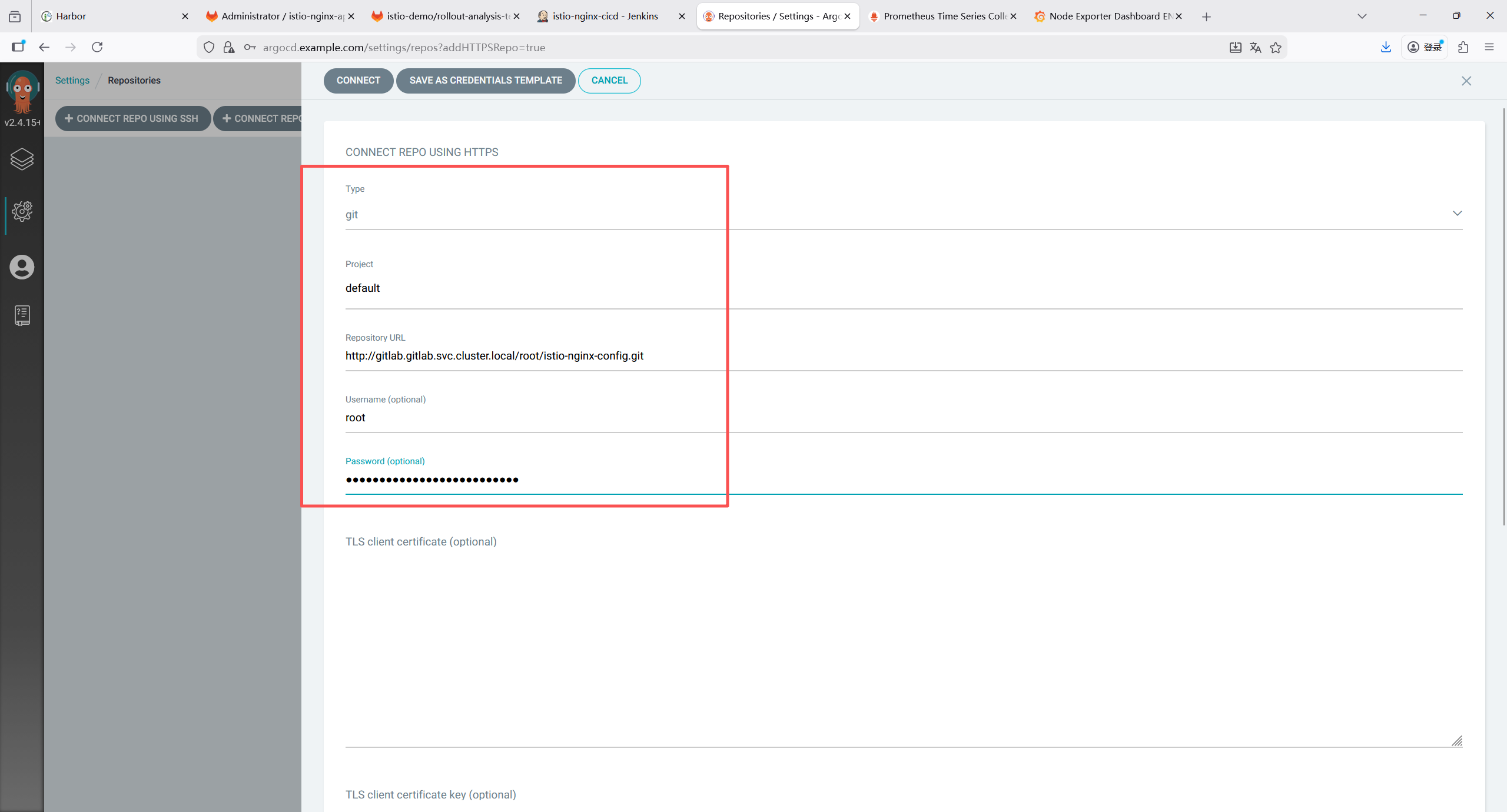

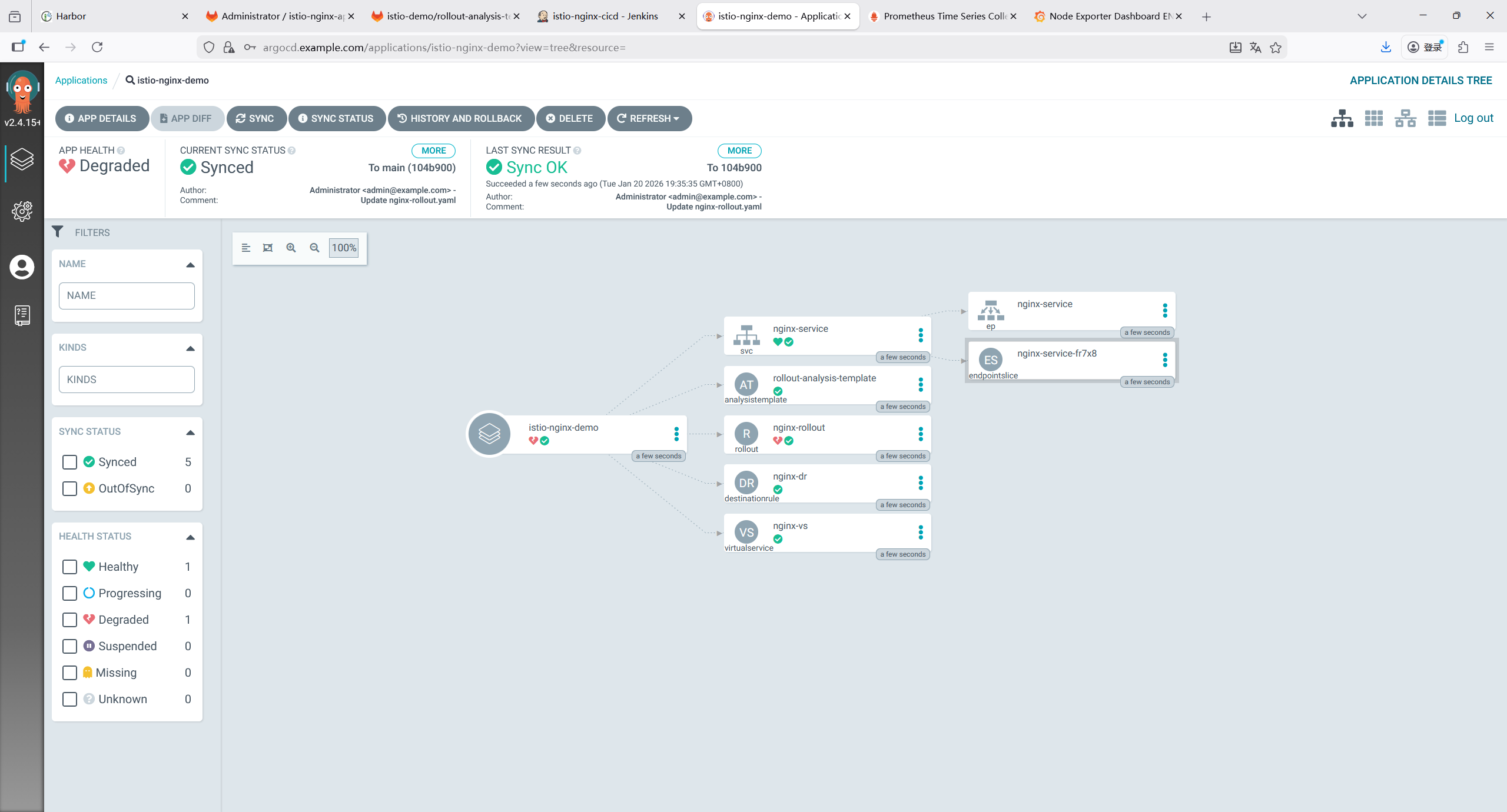

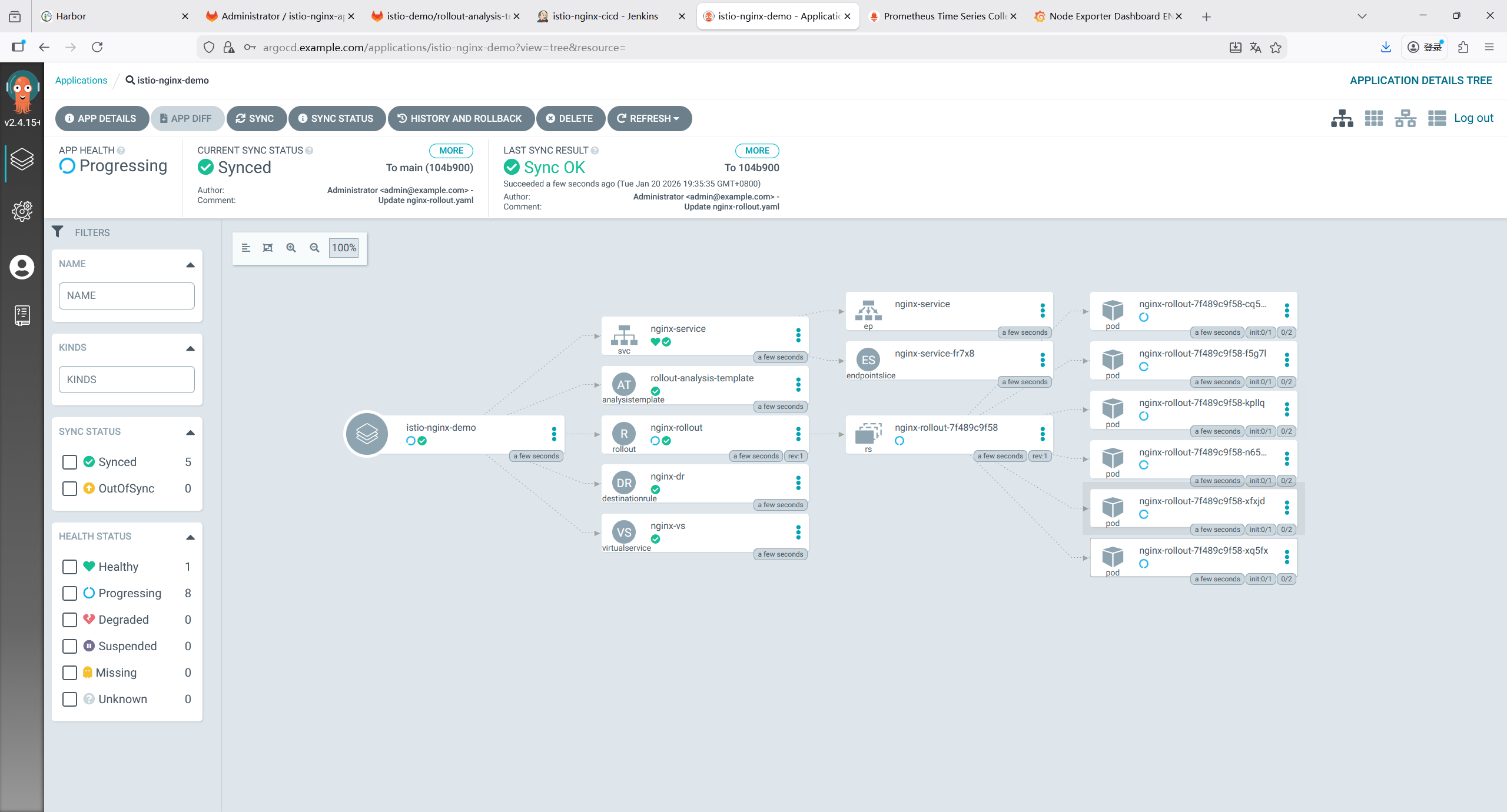

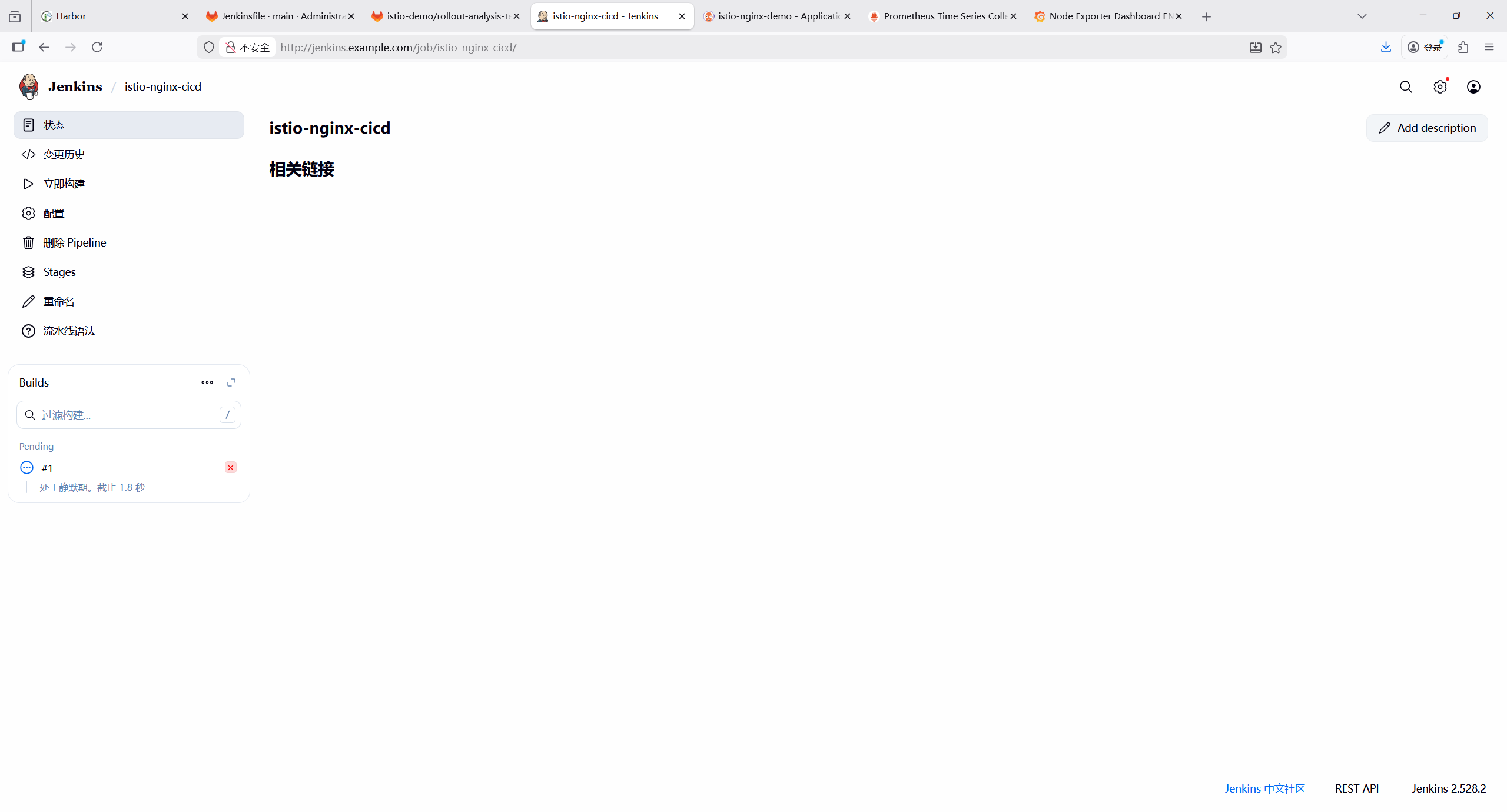

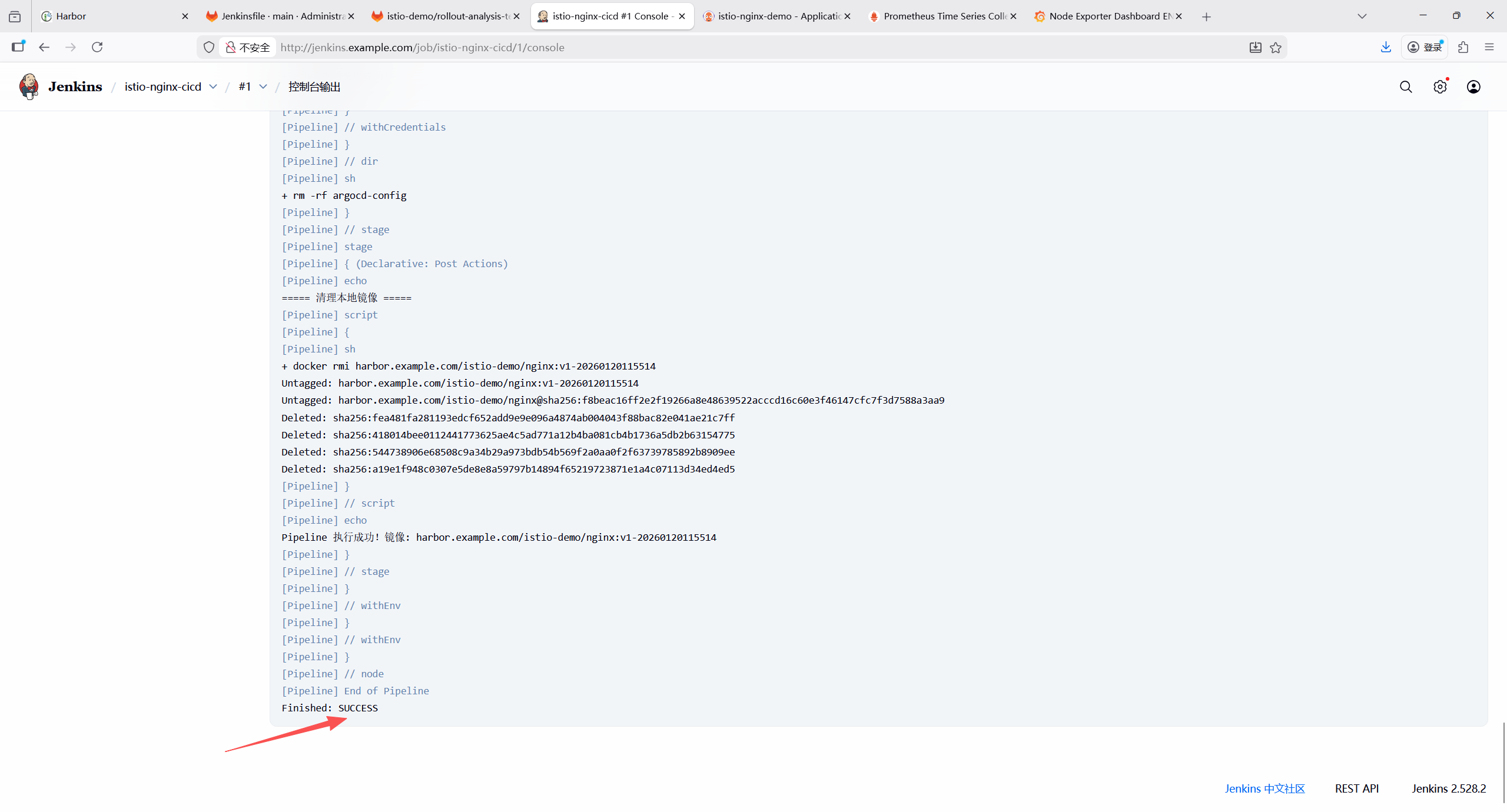

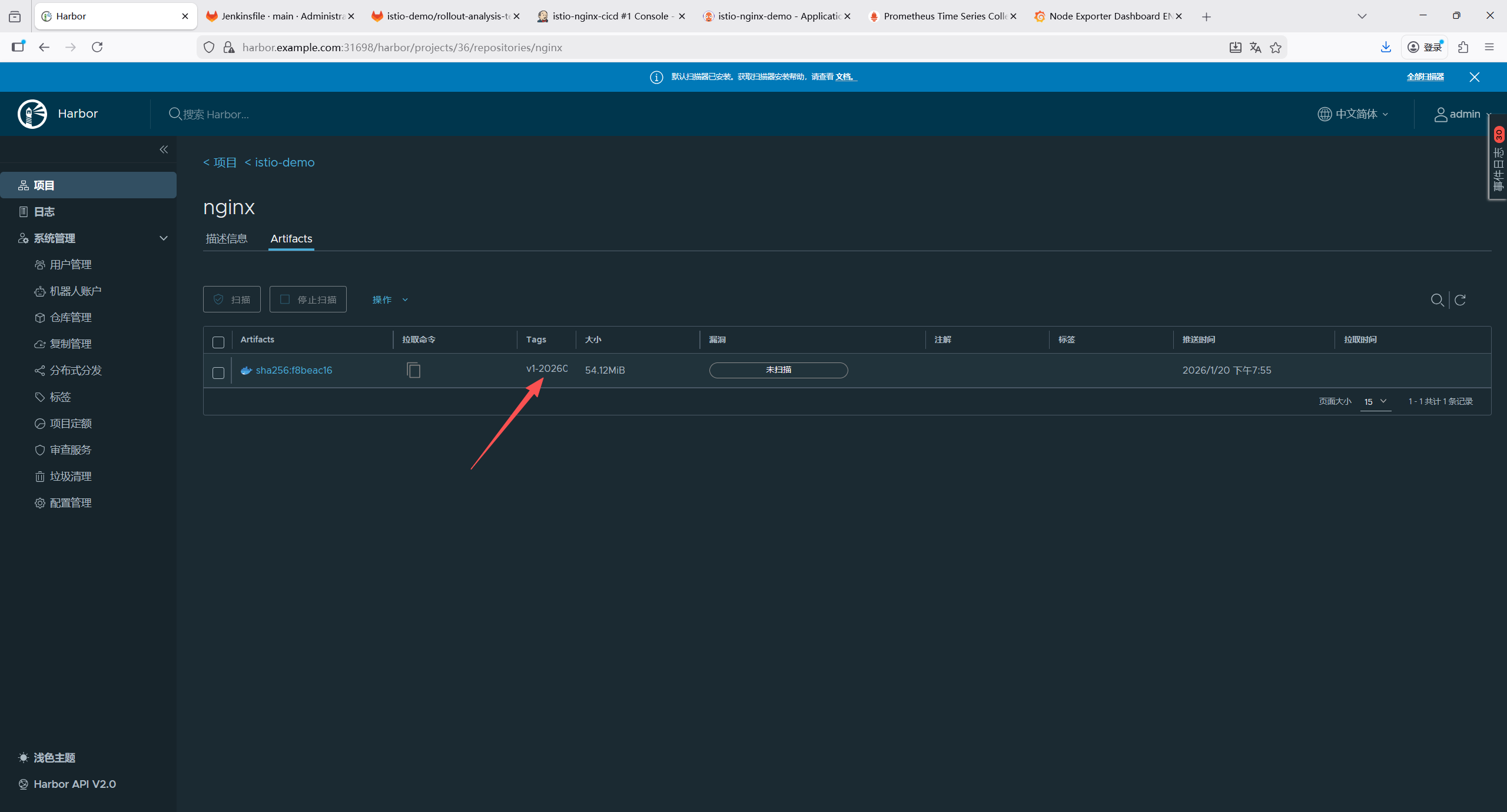

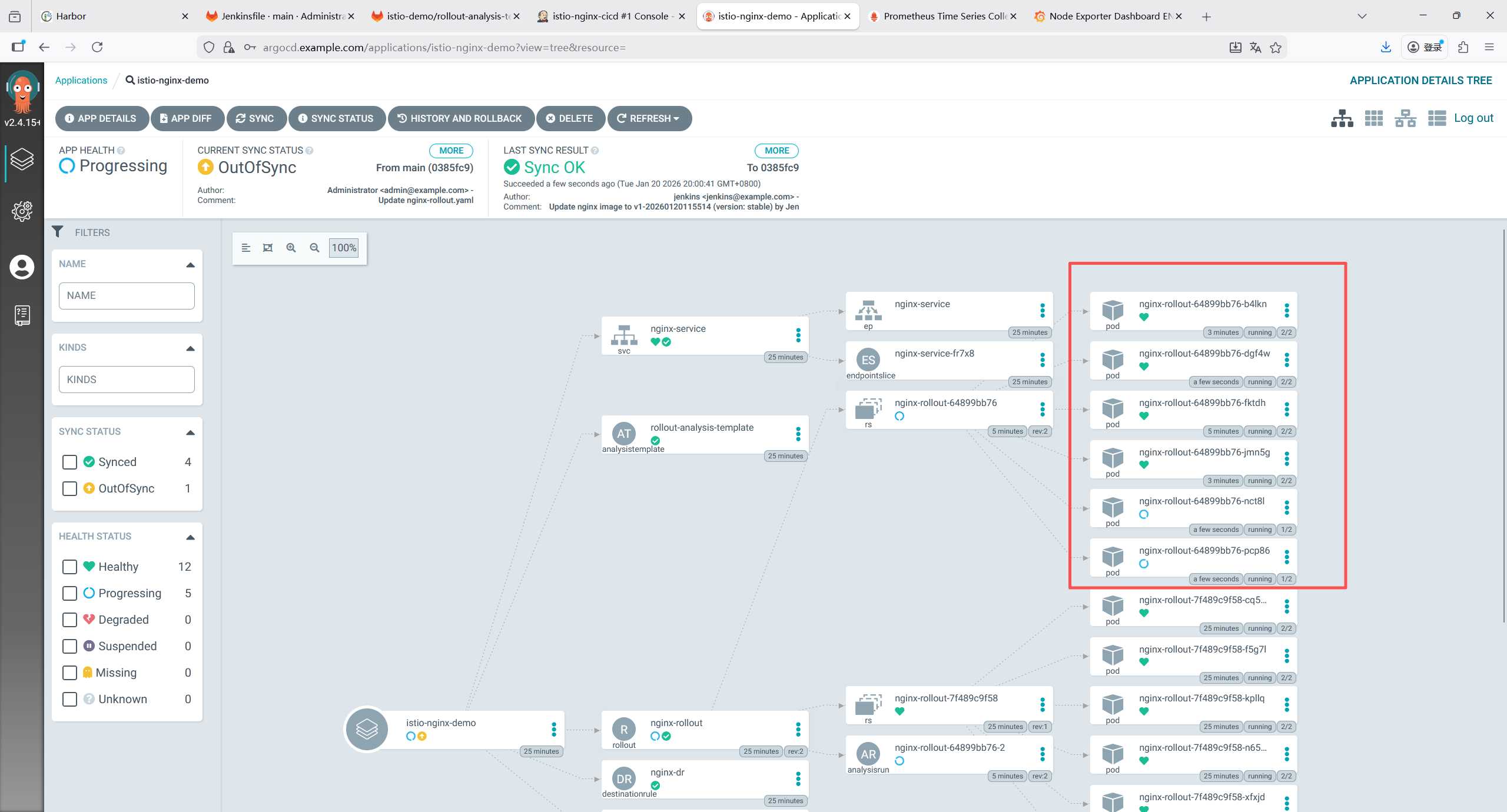

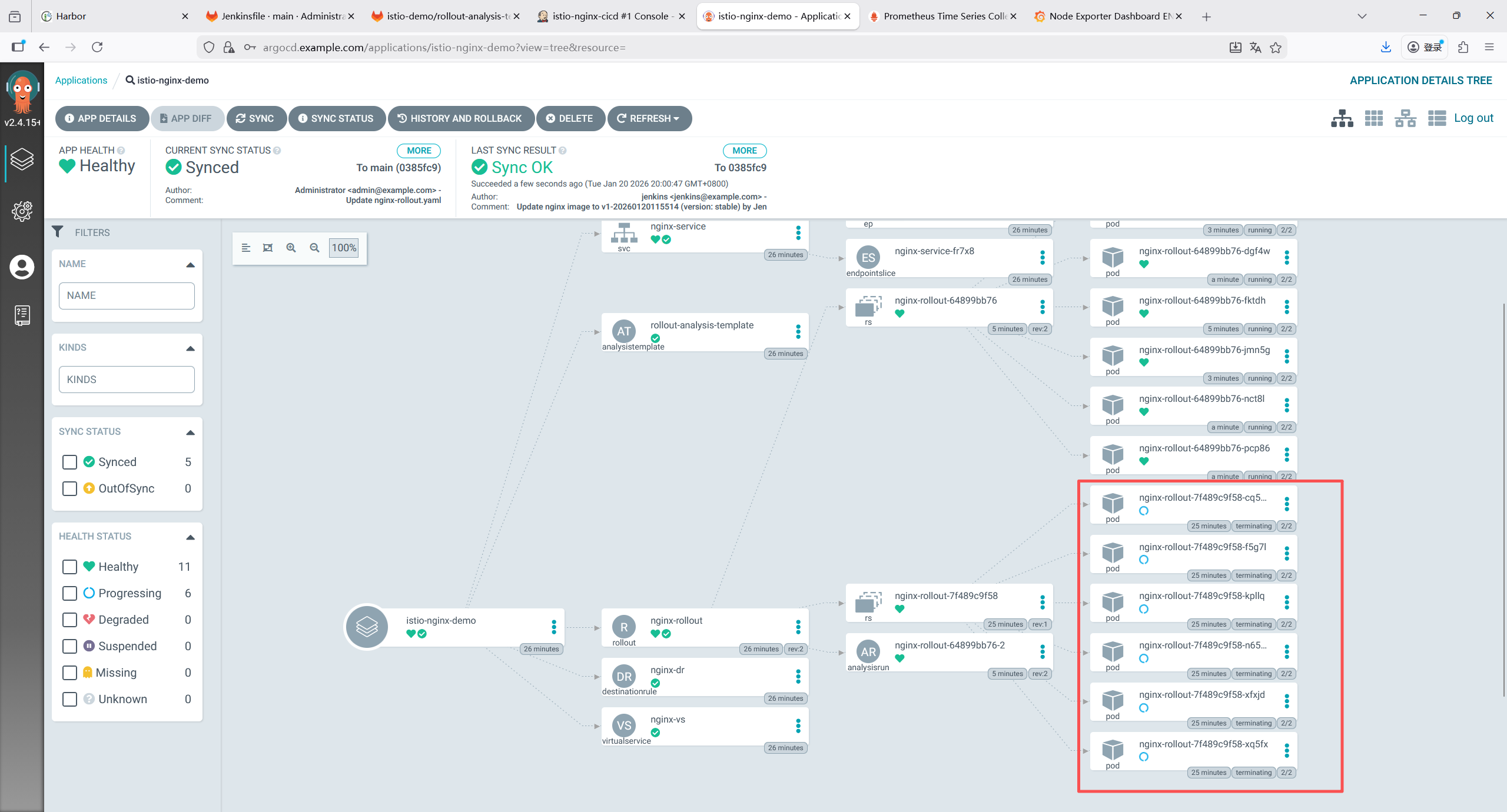

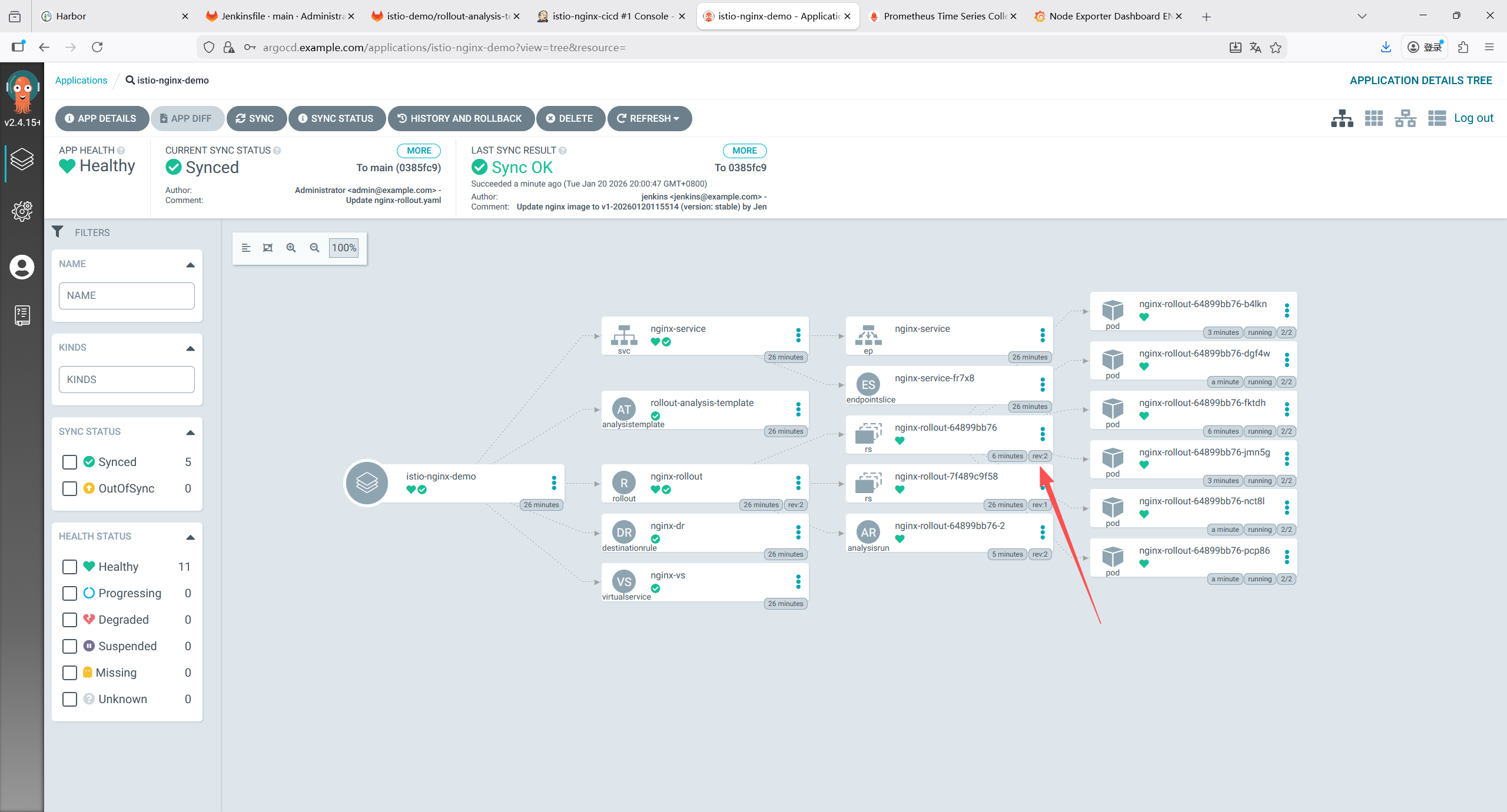

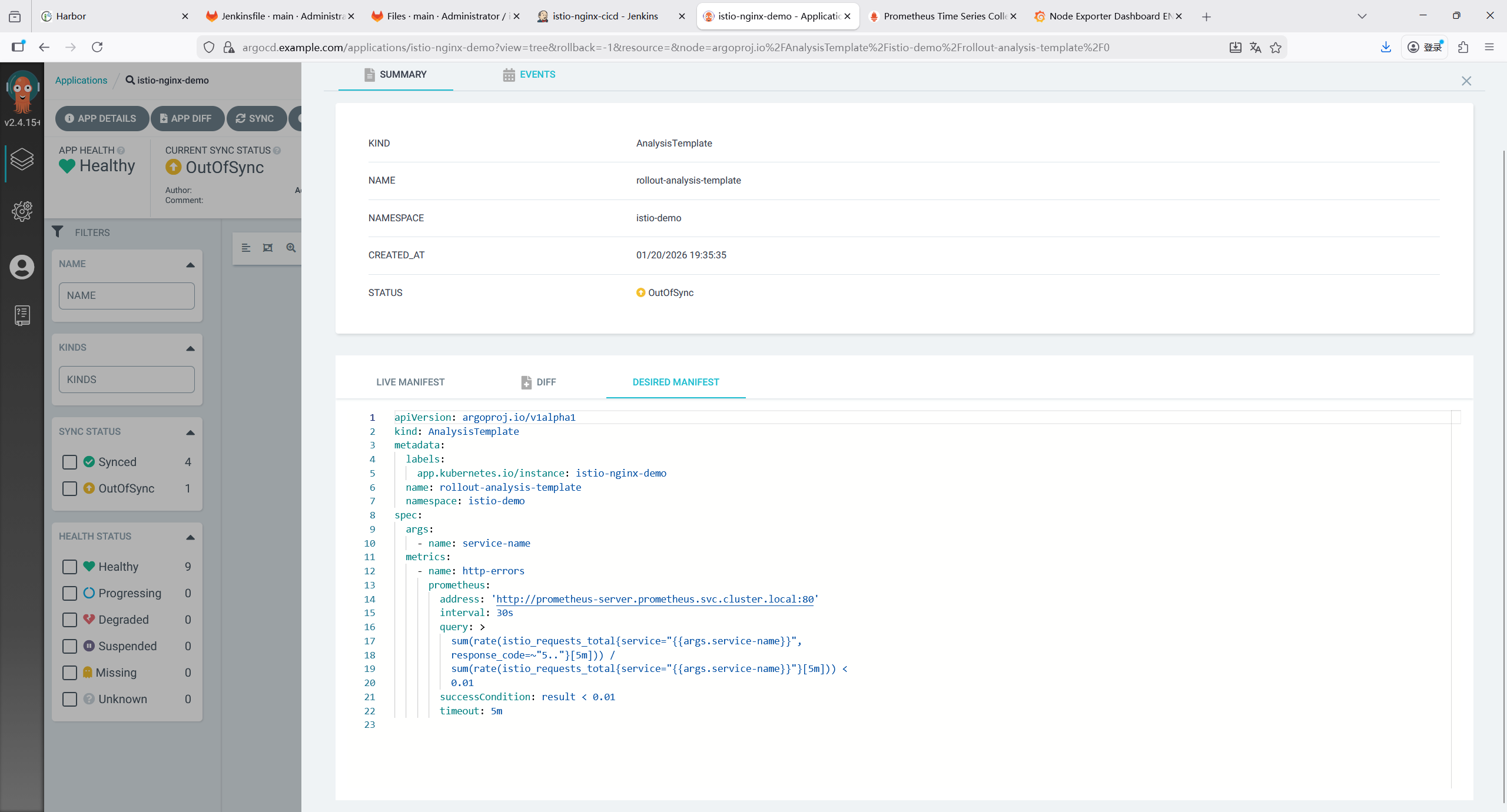

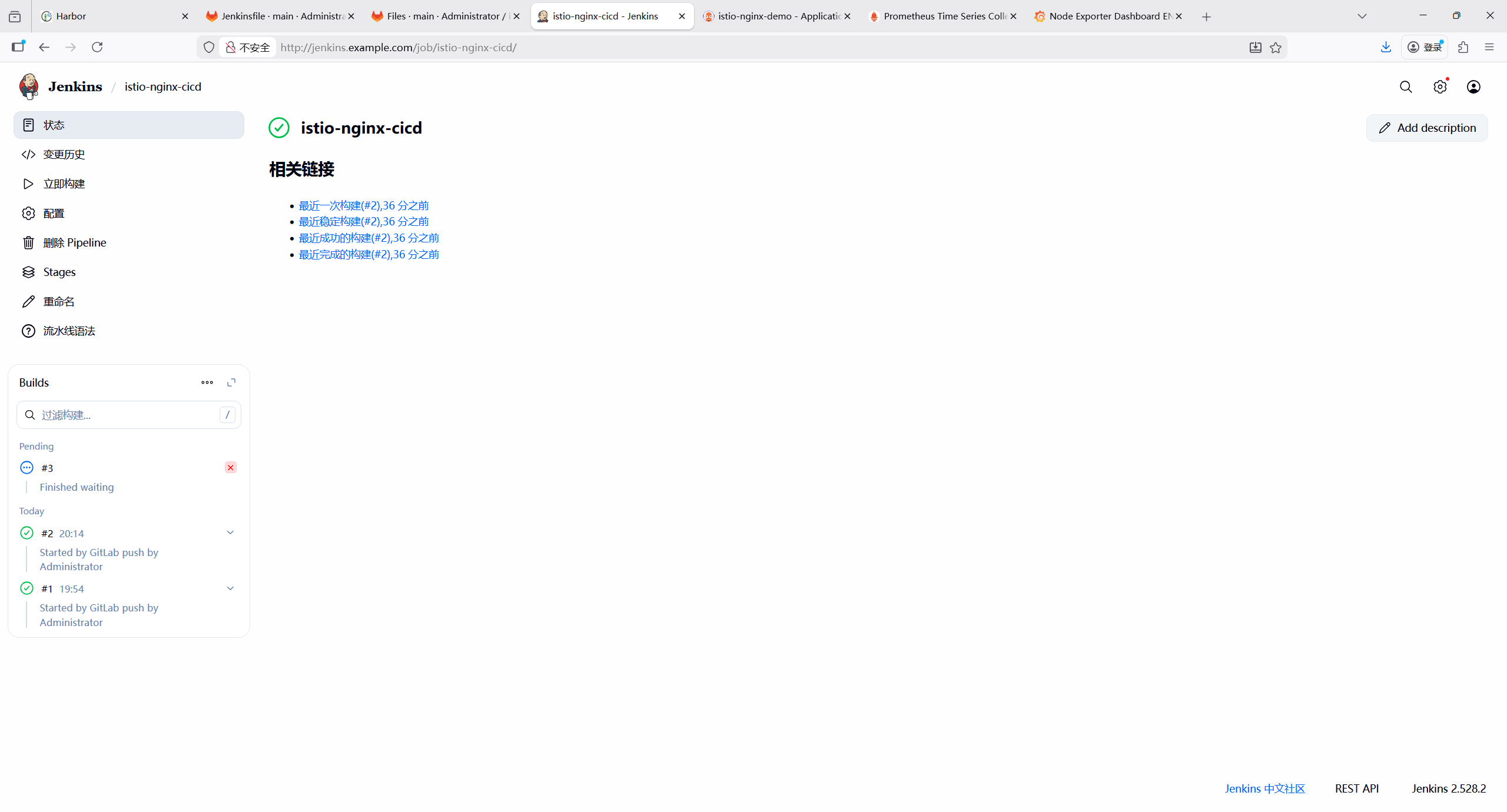

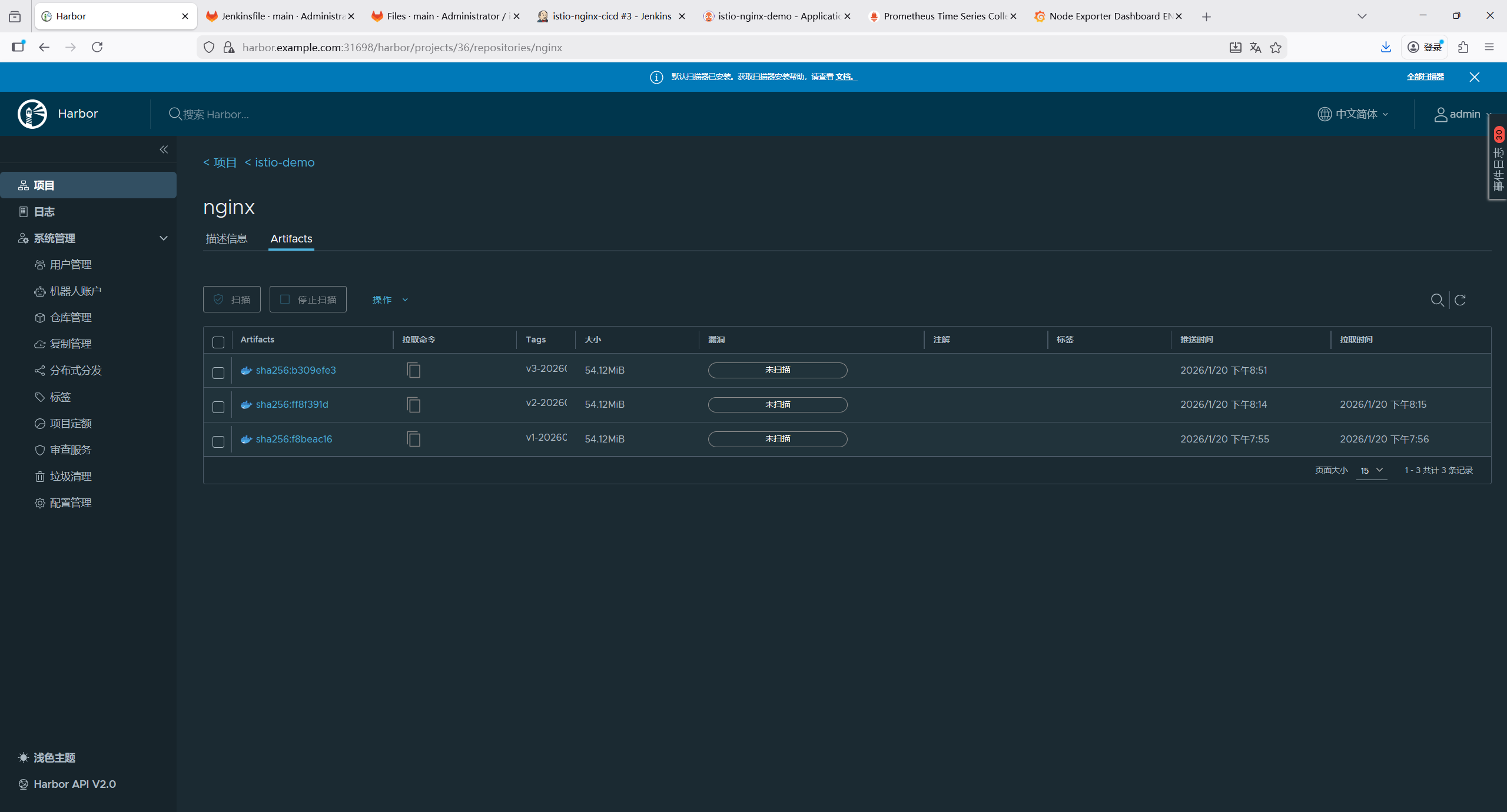

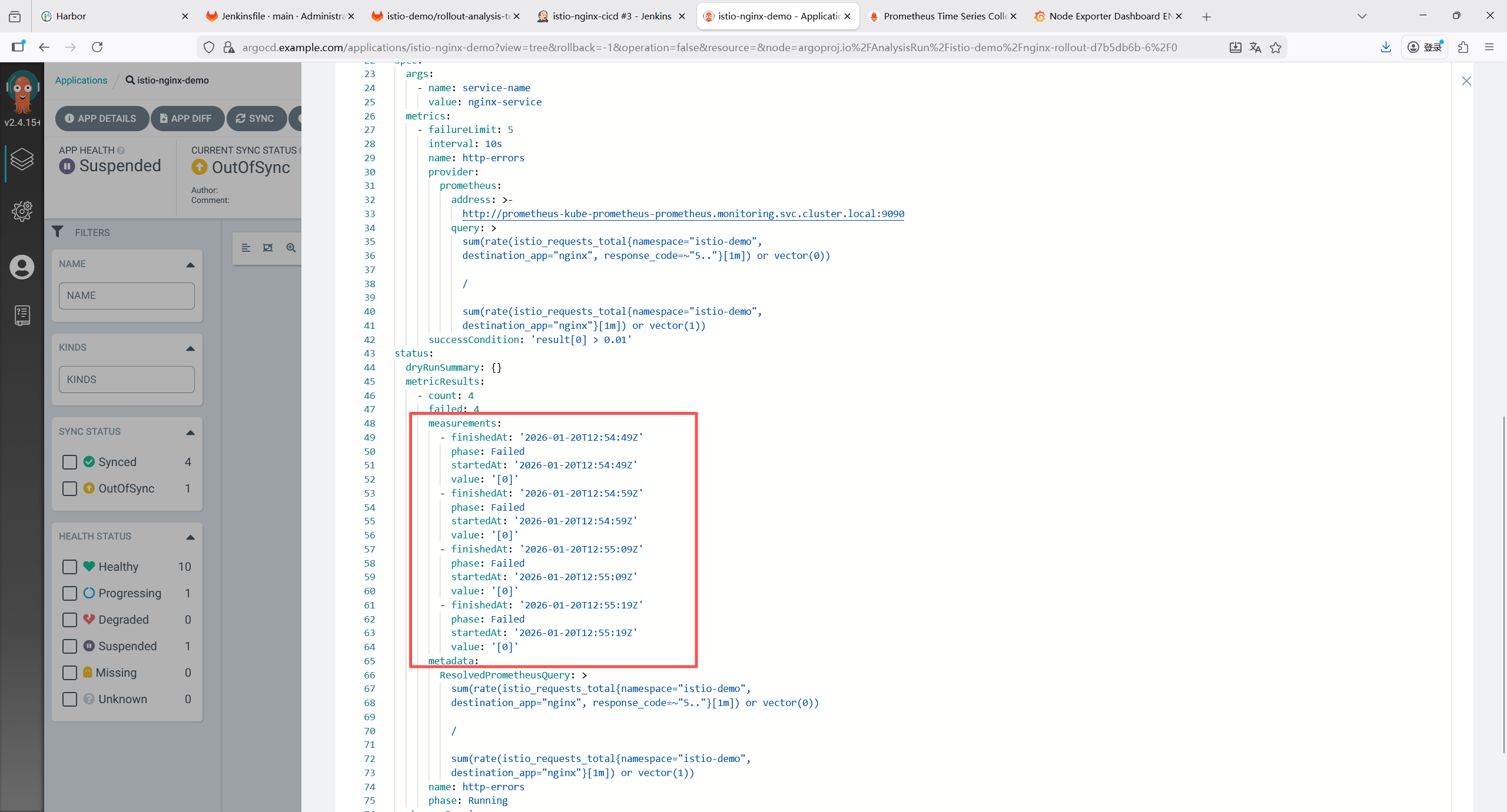

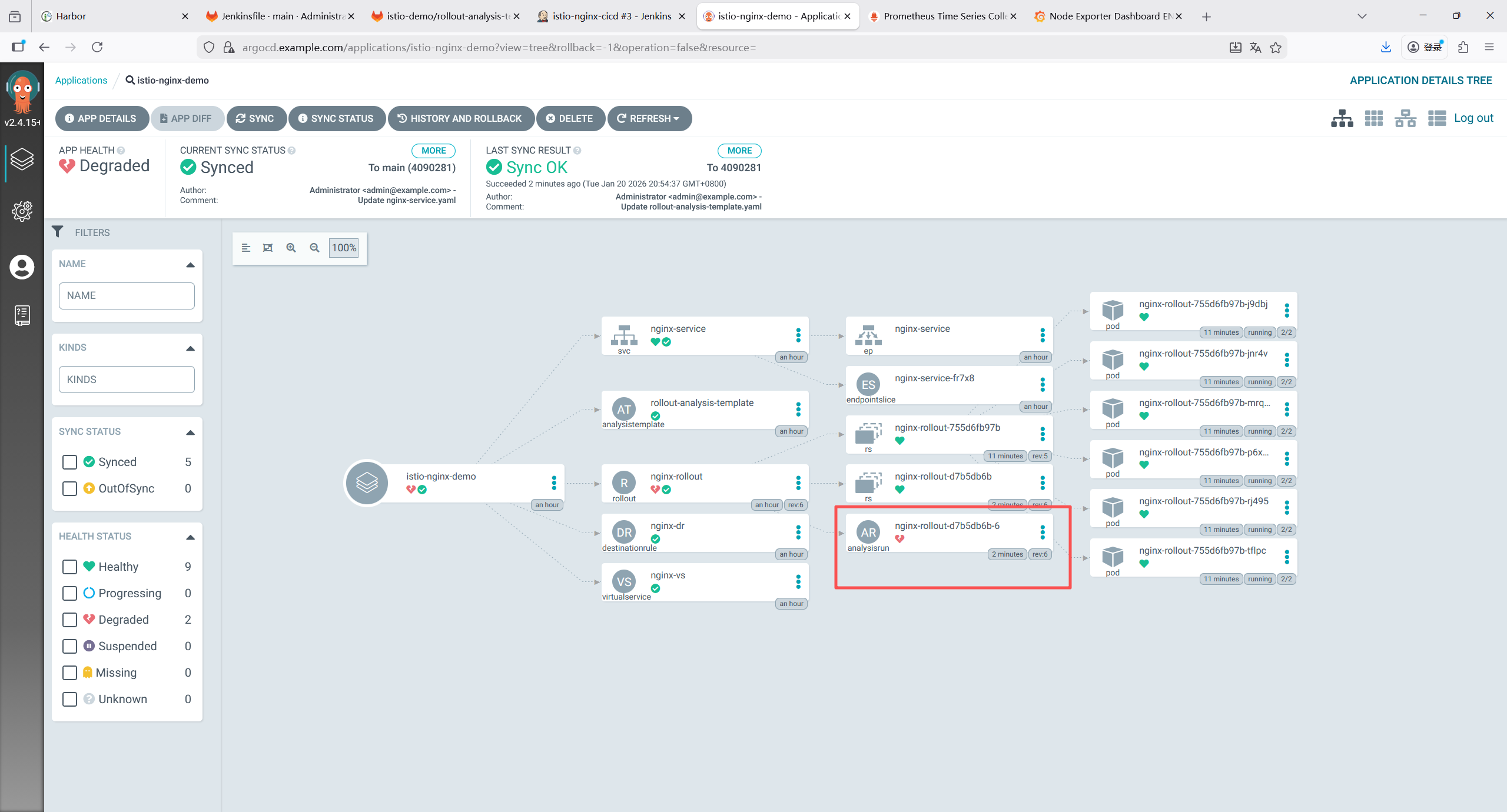

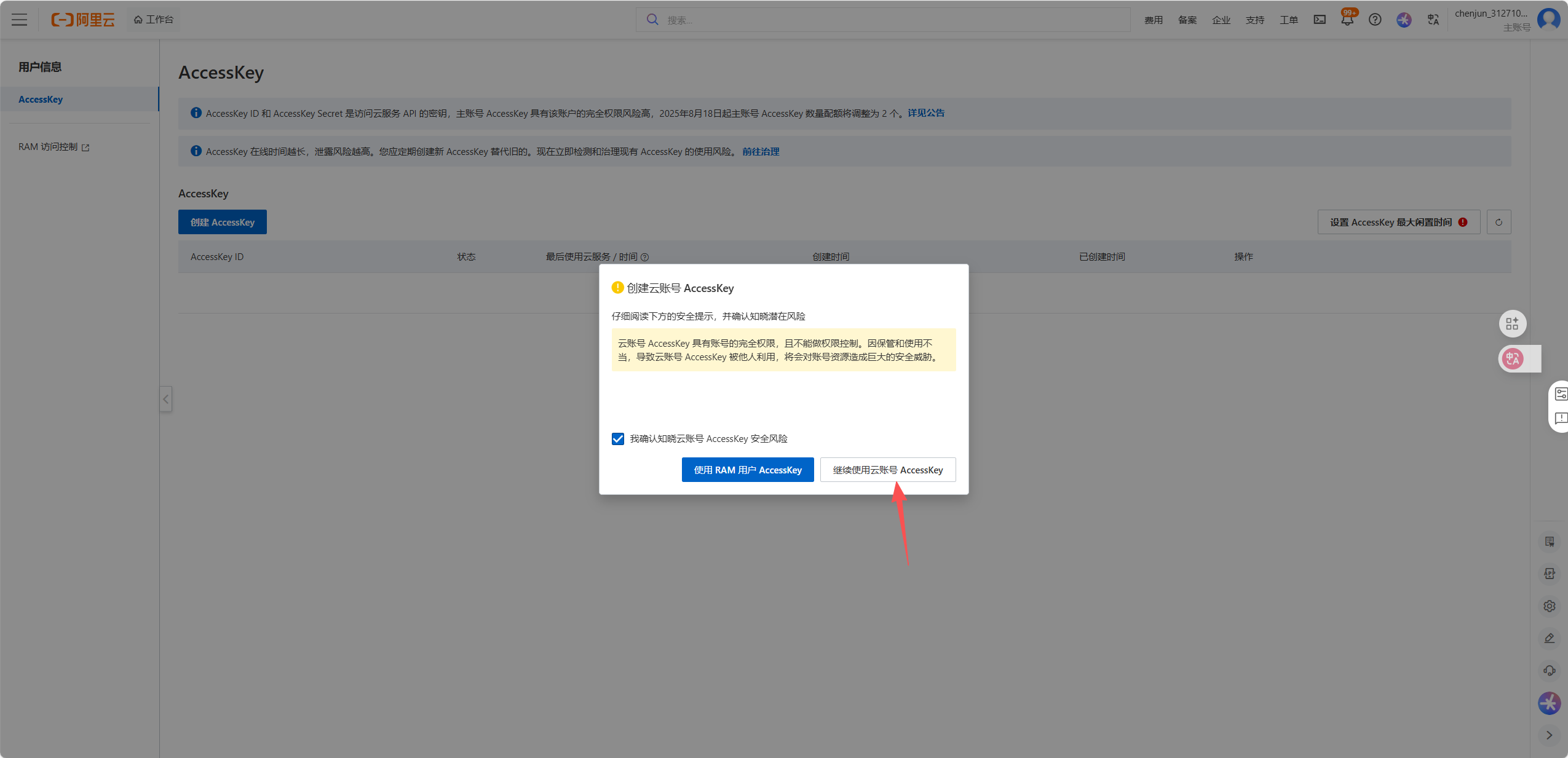

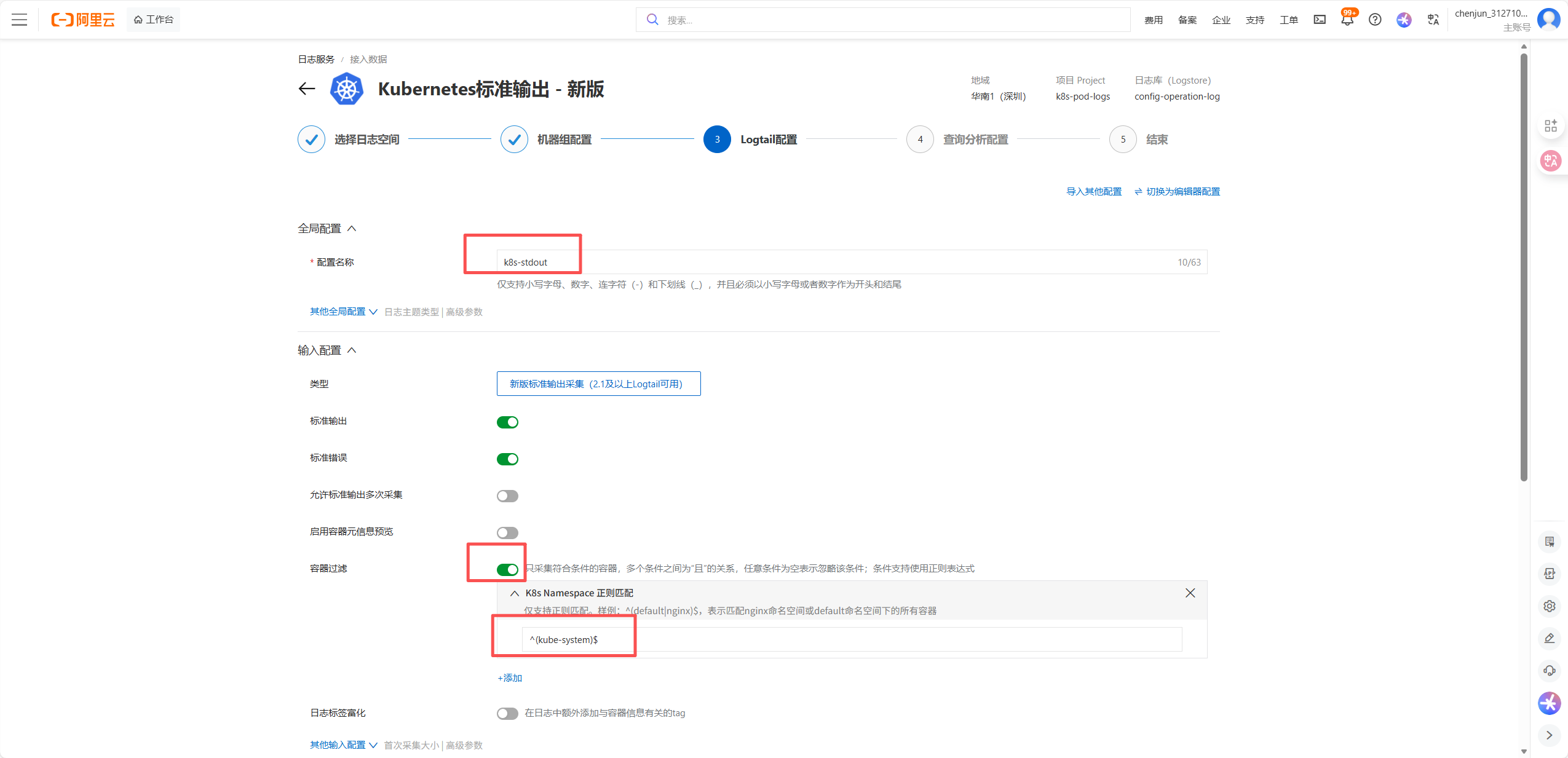

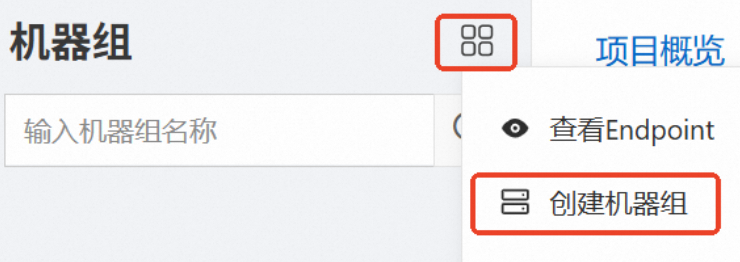

                        # '|| true': grep exits 1 when nothing changed, which would otherwise abort the step
                        CHANGES=\$(git status --porcelain | grep -v '^??' || true)