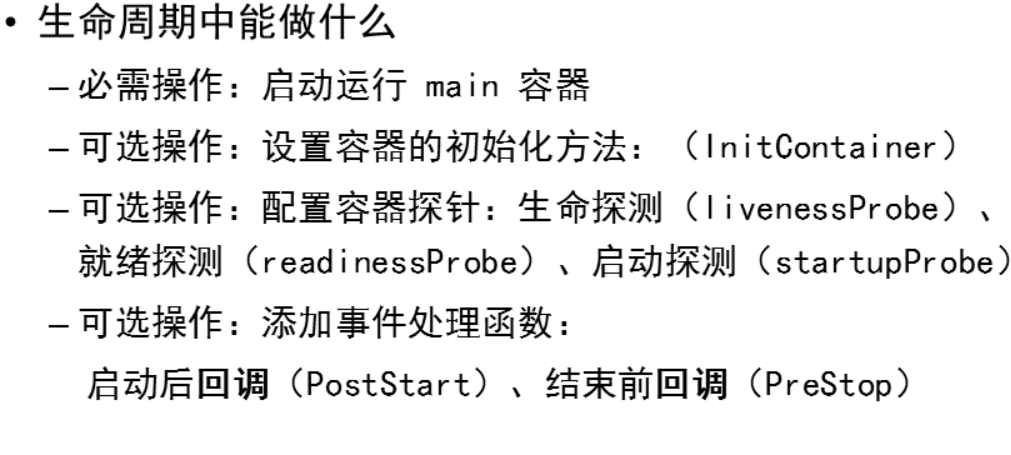

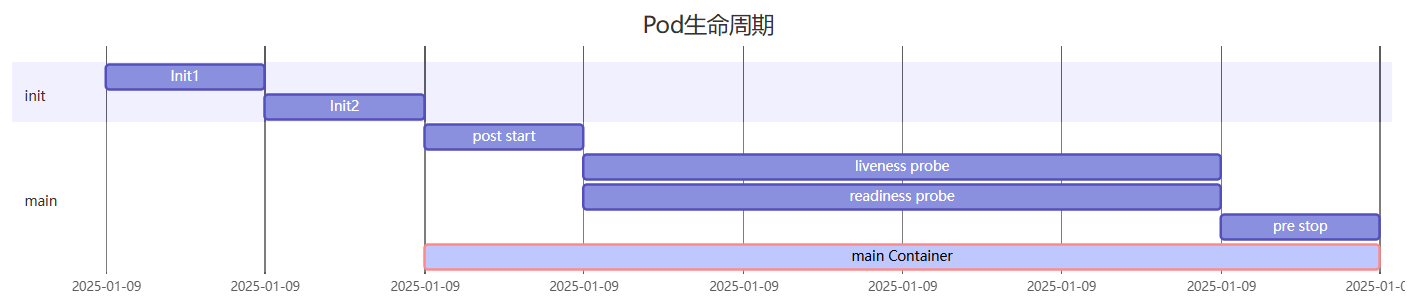

### Pod Lifecycle

#### Init Containers

```shell
[root@master ~]# vim web1.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: web1
spec:
  initContainers:          # define initialization tasks
  - name: task1            # if an init task fails, the main container does not start
    image: myos:latest     # init containers may use a different image from the main container
    command: [sh]          # the task, usually implemented as a script
    args:
    - -c
    - |
      ID=${RANDOM}
      echo "${ID}"
      sleep 3
      exit $((ID%2))       # exit status 0 = success, anything else = failure; a failed init task is re-run
  containers:
  - name: web
    image: myos:httpd
```
```shell
[root@master ~]# kubectl replace --force -f web1.yaml
pod "web1" deleted
pod/web1 replaced
# If the init task fails, it is re-run until it succeeds
[root@master ~]# kubectl get pods -w
NAME   READY   STATUS            RESTARTS     AGE
web1   0/1     Init:0/1          0            1s
web1   0/1     Init:Error        0            6s
web1   0/1     Init:0/1          1 (1s ago)   7s
web1   0/1     PodInitializing   0            12s
web1   1/1     Running           0            13s
```

* **Init failure**

```shell
[root@master ~]# vim web1.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: web1
spec:
  restartPolicy: Never     # do not restart when a task fails
  initContainers:
  - name: task1
    image: myos:latest
    command: [sh]
    args:
    - -c
    - |
      ID=${RANDOM}
      echo "${ID}"
      sleep 3
      exit $((ID%2))
  containers:
  - name: web
    image: myos:httpd
```
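Each init task above draws a random number and exits with `ID % 2`, so every attempt succeeds (even ID) or fails (odd ID) with roughly equal odds. What happens after a failure depends on `restartPolicy`: with `Never` the Pod is treated as failed, otherwise the kubelet keeps retrying the init container (with back-off) until it exits 0. A minimal Python sketch of that decision, assuming `sh` behaves like bash (where `$RANDOM` is 0 to 32767); `init_task` and `run_init` are hypothetical names, not Kubernetes APIs:

```python
import random


def init_task(rng: random.Random) -> int:
    """Mimic the init script: ID=${RANDOM}; exit $((ID%2))."""
    task_id = rng.randint(0, 32767)  # assumes bash-style $RANDOM (0..32767)
    return task_id % 2               # 0 for an even ID (success), 1 for an odd ID (failure)


def run_init(rng: random.Random, restart_policy: str, max_attempts: int = 100) -> tuple:
    """Return (outcome, attempts) for a single init container.

    Simplified model: with restartPolicy Never the first failure fails the Pod;
    with Always/OnFailure the init container is retried until it exits 0.
    max_attempts only bounds this sketch; the kubelet itself keeps retrying.
    """
    for attempt in range(1, max_attempts + 1):
        if init_task(rng) == 0:
            return "Running", attempt   # init done, the main container starts
        if restart_policy == "Never":
            return "Failed", attempt    # no retry: the Pod is treated as failed
    return "Init:Error", max_attempts   # still failing when the sketch gives up


if __name__ == "__main__":
    rng = random.Random(42)
    for policy in ("Always", "Never"):
        print(policy, run_init(rng, policy))
```

With `Never`, the outcome is always decided by the first attempt, which matches the `Init:Error` status that never recovers in the run below.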

```shell
[root@master ~]# kubectl replace --force -f web1.yaml
pod "web1" deleted
pod/web1 replaced
# If the init task fails, the main container is never run (and with Never it is not retried)
[root@master ~]# kubectl get pods -w
NAME   READY   STATUS       RESTARTS   AGE
web1   0/1     Init:0/1     0          1s
web1   0/1     Init:Error   0          5s
```

* **Multiple init tasks**

```shell
[root@master ~]# vim web1.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: web1
spec:
  restartPolicy: Always
  initContainers:
  - name: task1            # task 1
    image: myos:latest
    command: [sh]
    args:
    - -c
    - |
      ID=${RANDOM}
      echo "${ID}"
      sleep 3
      exit $((ID%2))
  - name: task2            # task 2; multiple tasks run in order
    image: myos:8.5
    command: [sh]
    args:
    - -c
    - |
      ID=${RANDOM}
      echo "${ID}"
      sleep 3
      exit $((ID%2))
  containers:
  - name: web
    image: myos:httpd
```
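With several init containers, the kubelet runs them one at a time in the listed order, and the main container starts only after the last one exits 0. A failed container is retried in place (here `restartPolicy: Always`); containers that already succeeded are not run again. The Python sketch below models that loop and returns a STATUS sequence similar to `kubectl get pods -w`. It is a simplified, hypothetical model (no timing, no back-off); `simulate_init` is an illustrative name:

```python
from typing import Callable, List


def simulate_init(tasks: List[Callable[[], int]], max_failures: int = 50) -> List[str]:
    """Run init tasks in order and return the STATUS values a watcher might see.

    Each task returns an exit code. A failing task is retried in place; tasks
    that already succeeded are not run again (simplified, hypothetical model).
    """
    statuses: List[str] = []
    done, failures, total = 0, 0, len(tasks)
    while done < total:
        statuses.append(f"Init:{done}/{total}")  # task number done+1 is running
        if tasks[done]() == 0:
            done += 1                             # success: move on to the next task
        else:
            statuses.append("Init:Error")         # failure: retry the same task
            failures += 1
            if failures >= max_failures:
                return statuses                   # give up (sketch only)
    statuses += ["PodInitializing", "Running"]    # all init tasks done, start the main container
    return statuses


if __name__ == "__main__":
    # Scripted exit codes: task1 fails once, then task2 fails once
    codes = iter([1, 0, 1, 0])

    def next_code() -> int:
        return next(codes)

    print(simulate_init([next_code, next_code]))
    # → ['Init:0/2', 'Init:Error', 'Init:0/2', 'Init:1/2', 'Init:Error', 'Init:1/2', 'PodInitializing', 'Running']
```

The scripted run follows the same shape as the real output that follows: task1 fails once, task2 fails once, and only then does the Pod reach `PodInitializing` and `Running`.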

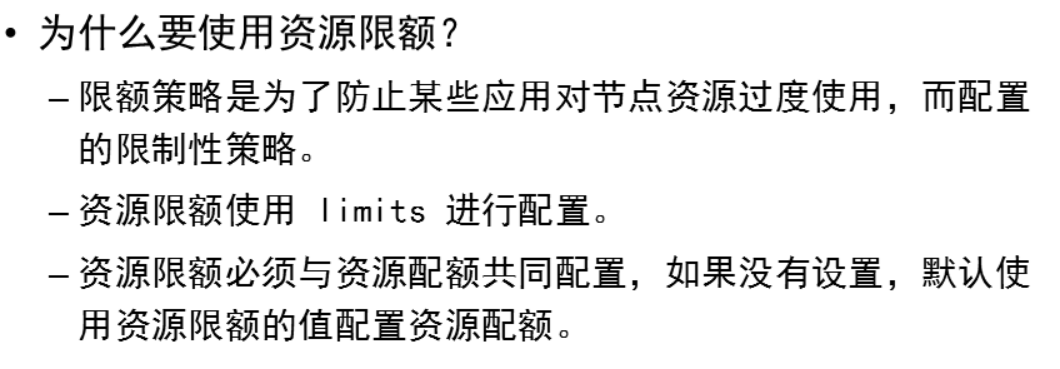

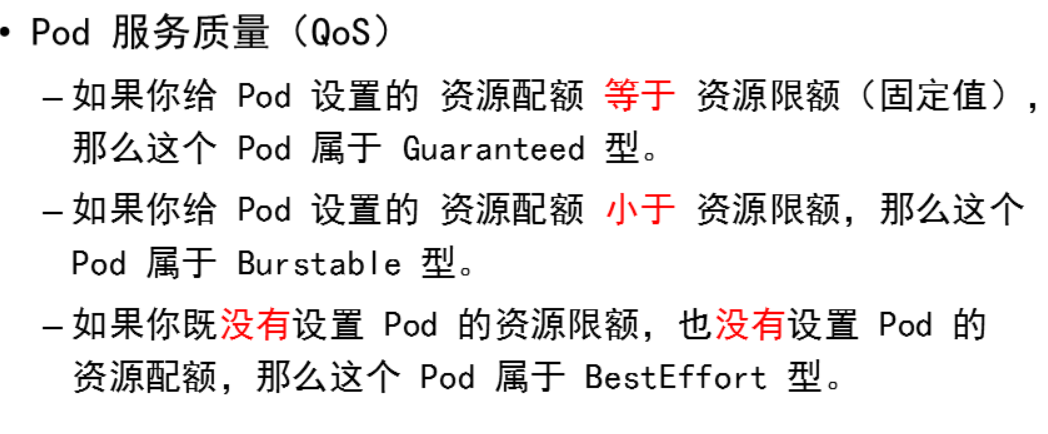

```shell
[root@master ~]# kubectl replace --force -f web1.yaml
pod "web1" deleted
pod/web1 replaced
# The tasks run in order; the main container starts only after all of them succeed
[root@master ~]# kubectl get pods -w
NAME   READY   STATUS            RESTARTS     AGE
web1   0/1     Init:0/2          0            1s
web1   0/1     Init:Error        0            5s
web1   0/1     Init:0/2          1 (1s ago)   6s
web1   0/1     Init:1/2          1 (5s ago)   10s
web1   0/1     Init:1/2          1            11s
web1   0/1     Init:Error        1            14s
web1   0/1     Init:1/2          2 (1s ago)   15s
web1   0/1     PodInitializing   0            18s
web1   1/1     Running           0            19s
```

#### Startup Probe

Protects services that start slowly: until the startup probe succeeds, the liveness and readiness probes are not run. If it fails `failureThreshold` times in a row, the kubelet kills the container, which is then handled according to the Pod's restart policy.

```shell
[root@master ~]# vim web2.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: web2
spec:
  containers:
  - name: web
    image: myos:httpd
    startupProbe:              # startup probe
      initialDelaySeconds: 30  # delay before the first check
      failureThreshold: 3      # number of failures tolerated
      periodSeconds: 10        # interval between checks
      tcpSocket:               # check over TCP
        port: 80               # port number
```
```shell
[root@master ~]# kubectl apply -f web2.yaml
pod/web2 created
[root@master ~]# kubectl get pods -w
NAME   READY   STATUS    RESTARTS   AGE
web2   0/1     Running   0          1s
web2   1/1     Running   0          41s
```

#### Liveness Probe

Checks whether a core resource is available. It runs for the Pod's whole lifetime; when it fails, the container is recreated (restarted).

```shell
[root@master ~]# vim web2.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: web2
spec:
  containers:
  - name: web
    image: myos:httpd
    startupProbe:
      initialDelaySeconds: 30
      failureThreshold: 3
      periodSeconds: 10
      tcpSocket:
        port: 80
    livenessProbe:             # liveness probe
      timeoutSeconds: 3        # probe response timeout
      httpGet:                 # check over HTTP
        path: /info.php        # requested URL path
        port: 80               # service port
```
```shell
[root@master ~]# kubectl apply -f web2.yaml
pod/web2 created
[root@master ~]# kubectl get pods -w
NAME   READY   STATUS    RESTARTS      AGE
web2   0/1     Running   0             5s
web2   1/1     Running   0             41s
web2   0/1     Running   1 (1s ago)    112s
web2   0/1     Running   1 (30s ago)   2m21s
web2   1/1     Running   1 (30s ago)   2m21s
# Test from another terminal: removing info.php makes the liveness probe fail
[root@master ~]# kubectl exec -it web2 -- rm -f info.php
```

#### Readiness Probe

Checks additional conditions. It runs for the Pod's whole lifetime; when it fails, the Pod is marked not ready and stops receiving traffic through Services, but the container is not restarted.
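The readiness check in the manifest that follows reads a version number from `/var/www/html/version.txt` and passes only when it is greater than 2; the kubelet then flips the Ready condition after `failureThreshold` consecutive failures. A Python sketch of both rules, assuming the default `successThreshold` of 1 and treating non-numeric file content as a failure; `readiness_exit_code` and `ready_timeline` are hypothetical names:

```python
from typing import List, Optional


def readiness_exit_code(content: Optional[str]) -> int:
    """Mirror the readiness script: exit 0 only if the version read is > 2.

    `content` stands in for /var/www/html/version.txt (None = file missing).
    """
    first_line = content.splitlines()[0].strip() if content else ""  # `read ver` takes the first line
    ver = first_line or "0"                                            # ${ver:-0}
    if not ver.isdigit():
        return 1  # assumption: non-numeric content counts as a failure
    return 0 if int(ver) > 2 else 1                                    # exit ${res:-1}


def ready_timeline(probe_passed: List[bool], failure_threshold: int = 3,
                   success_threshold: int = 1) -> List[bool]:
    """Ready condition after each probe, starting from not ready (simplified model)."""
    ready, passes, fails = False, 0, 0
    timeline = []
    for passed in probe_passed:
        if passed:
            passes, fails = passes + 1, 0
            if passes >= success_threshold:
                ready = True
        else:
            passes, fails = 0, fails + 1
            if fails >= failure_threshold:
                ready = False
        timeline.append(ready)
    return timeline


if __name__ == "__main__":
    # File missing, then version 3, then version 1 (one entry per probe run)
    contents = [None, "3", "3", "1", "1", "1"]
    print(ready_timeline([readiness_exit_code(c) == 0 for c in contents]))
    # → [False, True, True, True, True, False]
```

One passing probe is enough to become ready, but it takes three failures in a row to become unready again; with `periodSeconds: 5` that is on the order of 15 seconds.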
```shell
[root@master ~]# vim web2.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: web2
spec:
  containers:
  - name: web
    image: myos:httpd
    startupProbe:
      initialDelaySeconds: 30
      failureThreshold: 3
      periodSeconds: 10
      tcpSocket:
        port: 80
    livenessProbe:
      timeoutSeconds: 3
      httpGet:
        path: /info.php
        port: 80
    readinessProbe:            # readiness probe
      failureThreshold: 3      # consecutive failures before the Pod is marked not ready
      periodSeconds: 5         # check interval
      exec:                    # run a command to check
        command:               # the check command
        - sh
        - -c
        - |
          read ver </var/www/html/version.txt
          if (( ${ver:-0} > 2 ));then
            res=0
          fi
          exit ${res:-1}       # success if the version is greater than 2, failure otherwise
```
```shell
[root@master ~]# kubectl replace --force -f web2.yaml
pod/web2 created
[root@master ~]# kubectl get pods -w
NAME   READY   STATUS    RESTARTS   AGE
web2   0/1     Running   0          5s
web2   0/1     Running   0          40s
web2   1/1     Running   0          60s
web2   0/1     Running   0          90s
# Test from another terminal
[root@master ~]# kubectl exec -it web2 -- bash
[root@web2 ~]# echo "3" >/var/www/html/version.txt    # version 3 > 2: the Pod becomes ready
[root@web2 ~]# echo "1" >/var/www/html/version.txt    # version 1: the Pod becomes not ready again
```

#### Lifecycle Event Handlers

Additional actions that run right after the main container starts or just before it stops.

```shell
[root@master ~]# vim web3.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: web3
spec:
  containers:
  - name: web
    image: myos:httpd
    lifecycle:                 # lifecycle event handlers
      postStart:               # handler run after the container starts
        exec:
          command:
          - sh
          - -c
          - |
            echo "Auto-registering service" |tee -a /tmp/web.log
            sleep 10
      preStop:                 # handler run before the container stops
        exec:
          command:
          - sh
          - -c
          - |
            echo "Deregistering service" |tee -a /tmp/web.log
            sleep 10
```
```shell
[root@master ~]# kubectl apply -f web3.yaml
pod/web3 created
[root@master ~]# kubectl exec -it web3 -- bash
[root@web3 html]# cat /tmp/web.log
Auto-registering service
# After the Pod is deleted from another terminal (see below), the preStop output appears
[root@web3 html]# cat /tmp/web.log
Auto-registering service
Deregistering service
```
```shell
# Run in another terminal
[root@master ~]# kubectl delete pods web3
pod "web3" deleted
```

### Pod Resource Management
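The resource settings in this section use Kubernetes quantity notation: CPU in cores or millicores (`1500m` = 1.5 cores) and memory with binary suffixes (`800Mi` = 800 × 2^20 bytes). A minimal Python sketch of that conversion, covering only plain numbers with an optional suffix and doing no validation; `parse_quantity` is a hypothetical helper, not the official implementation:

```python
from decimal import Decimal

# Suffixes accepted in Kubernetes quantities (binary powers of two, decimal powers of ten)
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
DECIMAL_SUFFIXES = {"m": Decimal("0.001"), "k": Decimal(10**3), "M": Decimal(10**6),
                    "G": Decimal(10**9), "T": Decimal(10**12), "P": Decimal(10**15),
                    "E": Decimal(10**18)}


def parse_quantity(text: str) -> Decimal:
    """Convert '1500m', '800Mi', '3Gi' or '2' into cores or bytes.

    Simplified sketch: malformed input is not validated.
    """
    text = text.strip()
    if text[-2:] in BINARY_SUFFIXES:
        return Decimal(text[:-2]) * BINARY_SUFFIXES[text[-2:]]
    if text[-1:] in DECIMAL_SUFFIXES:
        return Decimal(text[:-1]) * DECIMAL_SUFFIXES[text[-1:]]
    return Decimal(text)  # no suffix: whole cores or bytes


if __name__ == "__main__":
    for q in ("1500m", "600m", "800Mi", "3Gi"):
        print(q, "=", parse_quantity(q))
```

Decimal arithmetic keeps millicore sums exact, so, for example, three Pods requesting `1500m` each add up to exactly 4.5 cores.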
* **`memtest.py` must be prepared in advance**

```shell
# Create the Pod
[root@master ~]# vim app.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: app
spec:
  containers:
  - name: web
    image: myos:httpd
```
```shell
[root@master ~]# kubectl apply -f app.yaml
pod/app created
# Use memtest.py to consume memory
[root@master ~]# kubectl cp memtest.py app:/usr/bin/
[root@master ~]# kubectl exec -it app -- bash
[root@app html]# memtest.py 1000
use memory success
press any key to exit :
# Use info.php to consume CPU
[root@master ~]# curl http://10.244.1.10/info.php?id=9999999
# Monitor from another terminal
[root@master ~]# watch -n 1 'kubectl top pods'
NAME   CPU(cores)   MEMORY(bytes)
app    993m         19Mi
app    3m           1554Mi
```

#### Resource Requests

```shell
[root@master ~]# vim app.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: app
spec:
  containers:
  - name: web
    image: myos:httpd
    resources:           # resource policy
      requests:          # requests (resources reserved for the container)
        cpu: 1500m       # CPU request
        memory: 800Mi    # memory request
```
```shell
[root@master ~]# kubectl replace --force -f app.yaml
pod "app" deleted
pod/app replaced
[root@master ~]# kubectl describe pods app
......
    Ready:          True
    Restart Count:  0
    Requests:
      cpu:     1500m
      memory:  800Mi
```

* **Verify the requests**

```shell
[root@master ~]# sed "s,app,app1," app.yaml |kubectl apply -f -
pod/app1 created
[root@master ~]# sed "s,app,app2," app.yaml |kubectl apply -f -
pod/app2 created
[root@master ~]# sed "s,app,app3," app.yaml |kubectl apply -f -
pod/app3 created
[root@master ~]# kubectl get pods
NAME   READY   STATUS    RESTARTS   AGE
app    1/1     Running   0          18s
app1   1/1     Running   0          16s
app2   1/1     Running   0          15s
app3   0/1     Pending   0          12s
# Clean up the lab
[root@master ~]# kubectl delete pod --all
```

`app3` stays `Pending` because the scheduler reserves each Pod's requests on a node, and no node has enough unreserved CPU or memory left for another 1500m / 800Mi request.

#### Resource Limits

```shell
[root@master ~]# vim app.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: app
spec:
  containers:
  - name: web
    image: myos:httpd
    resources:           # resource policy
      limits:            # limit policy
        cpu: 600m        # CPU limit
        memory: 800Mi    # memory limit
```
```shell
[root@master ~]# kubectl replace --force -f app.yaml
pod "app" deleted
pod/app replaced
[root@master ~]# kubectl describe pods app
......
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:     600m
      memory:  800Mi
    Requests:
      cpu:     600m
      memory:  800Mi
```

When only `limits` are set, Kubernetes defaults `requests` to the same values, as the output shows.

* **Verify the limits**

```shell
[root@master ~]# kubectl cp memtest.py app:/usr/bin/
[root@master ~]# kubectl exec -it app -- bash
[root@app html]# memtest.py 700
use memory success
press any key to exit :
[root@app html]# memtest.py 1000    # exceeds the 800Mi memory limit
Killed
[root@master ~]# curl http://10.244.1.10/info.php?id=9999999
# Check from another terminal: CPU usage is capped at the 600m limit
[root@master ~]# watch -n 1 'kubectl top pods'
NAME   CPU(cores)   MEMORY(bytes)
app    600m         19Mi
# Clean up the lab Pod
[root@master ~]# kubectl delete pods --all
pod "app" deleted
```

#### Pod Quality of Service (QoS)

##### BestEffort

No container sets any request or limit.

```shell
[root@master ~]# vim app1.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: app1
spec:
  containers:
  - name: web
    image: myos:httpd
```
```shell
[root@master ~]# kubectl apply -f app1.yaml
pod/app1 created
[root@master ~]# kubectl describe pods app1 |grep QoS
QoS Class:                   BestEffort
```

##### Burstable

At least one request or limit is set, but the Pod does not meet the Guaranteed rules.

```shell
[root@master ~]# vim app2.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: app2
spec:
  containers:
  - name: web
    image: myos:httpd
    resources:
      requests:
        cpu: 400m
        memory: 200Mi
```
```shell
[root@master ~]# kubectl apply -f app2.yaml
pod/app2 created
[root@master ~]# kubectl describe pods app2 |grep QoS
QoS Class:                   Burstable
```

##### Guaranteed

Every container sets CPU and memory limits, and its requests equal those limits.

```shell
[root@master ~]# vim app3.yaml
```
```yaml
---
kind: Pod
apiVersion: v1
metadata:
  name: app3
spec:
  containers:
  - name: web
    image: myos:httpd
    resources:
      requests:
        cpu: 200m
        memory: 200Mi
      limits:
        cpu: 200m
        memory: 200Mi
```
```shell
[root@master ~]# kubectl apply -f app3.yaml
pod/app3 created
[root@master ~]# kubectl describe pods app3 |grep QoS
QoS Class:                   Guaranteed
```

### Resource Quota Management

#### Global Resource Quota

```shell
[root@master ~]# vim quota.yaml
```
```yaml
---
kind: ResourceQuota       # namespace-wide resource quota object
apiVersion: v1
metadata:
  name: myquota1
  namespace: work         # namespace the rules apply to
spec:                     # ResourceQuota.spec definition
  hard:                   # hard (enforced) limits
    pods: 3               # maximum total number of Pods
  scopes:                 # which Pod QoS classes the quota covers
  - BestEffort            # Pod QoS class
```
```shell
[root@master ~]# kubectl create namespace work
namespace/work created
[root@master ~]# kubectl apply -f quota.yaml
resourcequota/myquota1 created
# View the quota
[root@master ~]# kubectl describe namespaces work
......
Resource Quotas
  Name:    myquota1
  Scopes:  BestEffort
  * Matches all pods that do not have resource requirements set. These pods have a best effort quality of service.
  Resource  Used  Hard
  --------  ---   ---
  pods      0     3
```

* **Verify the quota**

```shell
[root@master ~]# sed 's,app1,app11,' app1.yaml |kubectl -n work apply -f -
[root@master ~]# sed 's,app1,app12,' app1.yaml |kubectl -n work apply -f -
[root@master ~]# sed 's,app1,app13,' app1.yaml |kubectl -n work apply -f -
[root@master ~]# sed 's,app1,app14,' app1.yaml |kubectl -n work apply -f -
Error from server (Forbidden): error when creating "STDIN": pods "app14" is forbidden: exceeded quota: myquota1, requested: pods=1, used: pods=3, limited: pods=3
# Guaranteed Pods are outside the BestEffort scope, so they are still admitted
[root@master ~]# sed 's,app3,app31,' app3.yaml |kubectl -n work apply -f -
[root@master ~]# sed 's,app3,app32,' app3.yaml |kubectl -n work apply -f -
```

#### QoS-Scoped Quota

```shell
[root@master ~]# vim quota.yaml
```
```yaml
---
kind: ResourceQuota
apiVersion: v1
metadata:
  name: myquota1
  namespace: work
spec:
  hard:
    pods: 3
  scopes:
  - BestEffort
---
kind: ResourceQuota
apiVersion: v1
metadata:
  name: myquota2
  namespace: work
spec:
  hard:
    pods: 10              # maximum total number of Pods
    cpu: 2300m            # total CPU requests
    memory: 3Gi           # total memory requests
  scopes:
  - NotBestEffort         # Pod QoS class
```
```shell
# Update the quota policy
[root@master ~]# kubectl apply -f quota.yaml
resourcequota/myquota1 configured
resourcequota/myquota2 created
# View the quotas
[root@master ~]# kubectl describe namespace work
......
Resource Quotas
  Name:    myquota1
  Scopes:  BestEffort
  * Matches all pods that do not have resource requirements set. These pods have a best effort quality of service.
  Resource  Used  Hard
  --------  ---   ---
  pods      3     3

  Name:    myquota2
  Scopes:  NotBestEffort
  * Matches all pods that have at least one resource requirement set. These pods have a burstable or guaranteed quality of service.
  Resource  Used   Hard
  --------  ---    ---
  cpu       400m   2300m
  memory    400Mi  3Gi
  pods      2      10
```

* **Verify the quota**

```shell
[root@master ~]# sed 's,app2,app21,' app2.yaml |kubectl -n work apply -f -
[root@master ~]# sed 's,app2,app22,' app2.yaml |kubectl -n work apply -f -
[root@master ~]# sed 's,app2,app23,' app2.yaml |kubectl -n work apply -f -
[root@master ~]# sed 's,app2,app24,' app2.yaml |kubectl -n work apply -f -
[root@master ~]# sed 's,app2,app25,' app2.yaml |kubectl -n work apply -f -
Error from server (Forbidden): error when creating "STDIN": pods "app25" is forbidden: exceeded quota: myquota2, requested: cpu=400m, used: cpu=2, limited: cpu=2300m
```

* **Clean up the lab**

```shell
[root@master ~]# kubectl -n work delete pods --all
[root@master ~]# kubectl delete namespace work
namespace "work" deleted
```
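The admission decisions in this quota lab can be replayed with a small Python model: each Pod gets a QoS class, each scoped quota only counts Pods in its scope, and `cpu`/`memory` quota entries are charged with the Pod's requests. It is a sketch under simplifying assumptions (single-container Pods, only the `pods`, `cpu` and `memory` keys, both quotas present from the start although the lab added `myquota2` later); `Pod`, `Quota`, `qos_class`, `admit` and `run_lab` are hypothetical names, not part of any Kubernetes client library:

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import Dict, List, Optional

Mi = Decimal(2**20)  # bytes in one mebibyte


@dataclass
class Pod:
    """Single-container Pod; only requests/limits matter for this sketch."""
    name: str
    requests: Dict[str, Decimal] = field(default_factory=dict)
    limits: Dict[str, Decimal] = field(default_factory=dict)


def qos_class(pod: Pod) -> str:
    """Simplified QoS rules for a single container."""
    if not pod.requests and not pod.limits:
        return "BestEffort"
    # Guaranteed: cpu and memory limits set, and requests (defaulting to limits) equal them
    if all(r in pod.limits and pod.requests.get(r, pod.limits[r]) == pod.limits[r]
           for r in ("cpu", "memory")):
        return "Guaranteed"
    return "Burstable"


@dataclass
class Quota:
    name: str
    scope: str                       # "BestEffort" or "NotBestEffort"
    hard: Dict[str, Decimal]
    used: Dict[str, Decimal] = field(default_factory=dict)

    def matches(self, pod: Pod) -> bool:
        best_effort = qos_class(pod) == "BestEffort"
        return best_effort if self.scope == "BestEffort" else not best_effort


def admit(pod: Pod, quotas: List[Quota]) -> Optional[str]:
    """Return None if the Pod is admitted, else the name of the quota it exceeds."""
    charges = []
    for q in quotas:
        if not q.matches(pod):
            continue  # out-of-scope quotas ignore this Pod entirely
        delta = {"pods": Decimal(1), **pod.requests}  # quota cpu/memory count requests
        for res, limit in q.hard.items():
            if q.used.get(res, Decimal(0)) + delta.get(res, Decimal(0)) > limit:
                return q.name
        charges.append((q, delta))
    for q, delta in charges:  # charge usage only after every matching quota accepted
        for res in q.hard:
            q.used[res] = q.used.get(res, Decimal(0)) + delta.get(res, Decimal(0))
    return None


def run_lab():
    """Replay this section's quota experiments in order (hypothetical model)."""
    quotas = [
        Quota("myquota1", "BestEffort", {"pods": Decimal(3)}),
        Quota("myquota2", "NotBestEffort",
              {"pods": Decimal(10), "cpu": Decimal("2.3"), "memory": 3 * 1024 * Mi}),
    ]
    burstable = {"cpu": Decimal("0.4"), "memory": 200 * Mi}   # app2.yaml requests
    guaranteed = {"cpu": Decimal("0.2"), "memory": 200 * Mi}  # app3.yaml requests == limits
    pods = ([Pod(f"app1{i}") for i in range(1, 5)]                        # app11..app14
            + [Pod(f"app3{i}", dict(guaranteed), dict(guaranteed)) for i in (1, 2)]
            + [Pod(f"app2{i}", dict(burstable)) for i in range(1, 6)])    # app21..app25
    return {pod.name: admit(pod, quotas) for pod in pods}, quotas


if __name__ == "__main__":
    results, _ = run_lab()
    for name, rejected_by in results.items():
        print(name, "admitted" if rejected_by is None else f"rejected by {rejected_by}")
```

The model rejects exactly `app14` (fourth BestEffort Pod against `pods: 3`) and `app25` (2000m already requested plus 400m exceeds 2300m), matching the two `Forbidden` errors above.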