文章目录

- kubernetes容器云平台

-

- 入门须知

- [第 1 章 Kubernetes概述](#第 1 章 Kubernetes概述)

- [第 2 章 Kubernetes集群部署](#第 2 章 Kubernetes集群部署)

-

- docker版

- containerd版

-

- 前置环境部署

- 安装containerd

-

- 加载模块

- 立即生效

- 安装依赖的软件包

- [添加 Docker 软件源](#添加 Docker 软件源)

- 查看containerd版本

- 安装指定版本containerd

- 生成containerd的配置文件

- 修改containerd的驱动程序

- 修改镜像仓库地址

- 启动containerd

- 镜像加速配置

- 启动containerd

- 测试

- 安装kubeadm

- 主节点部署Kubernetes

- node工作节点加入集群

- 部署网络CNI组建

- [第 3 章 kubernetes基础](#第 3 章 kubernetes基础)

-

- 第1部:客户端命令kubectl

- 集群Node管理

- [worker node节点管理集群](#worker node节点管理集群)

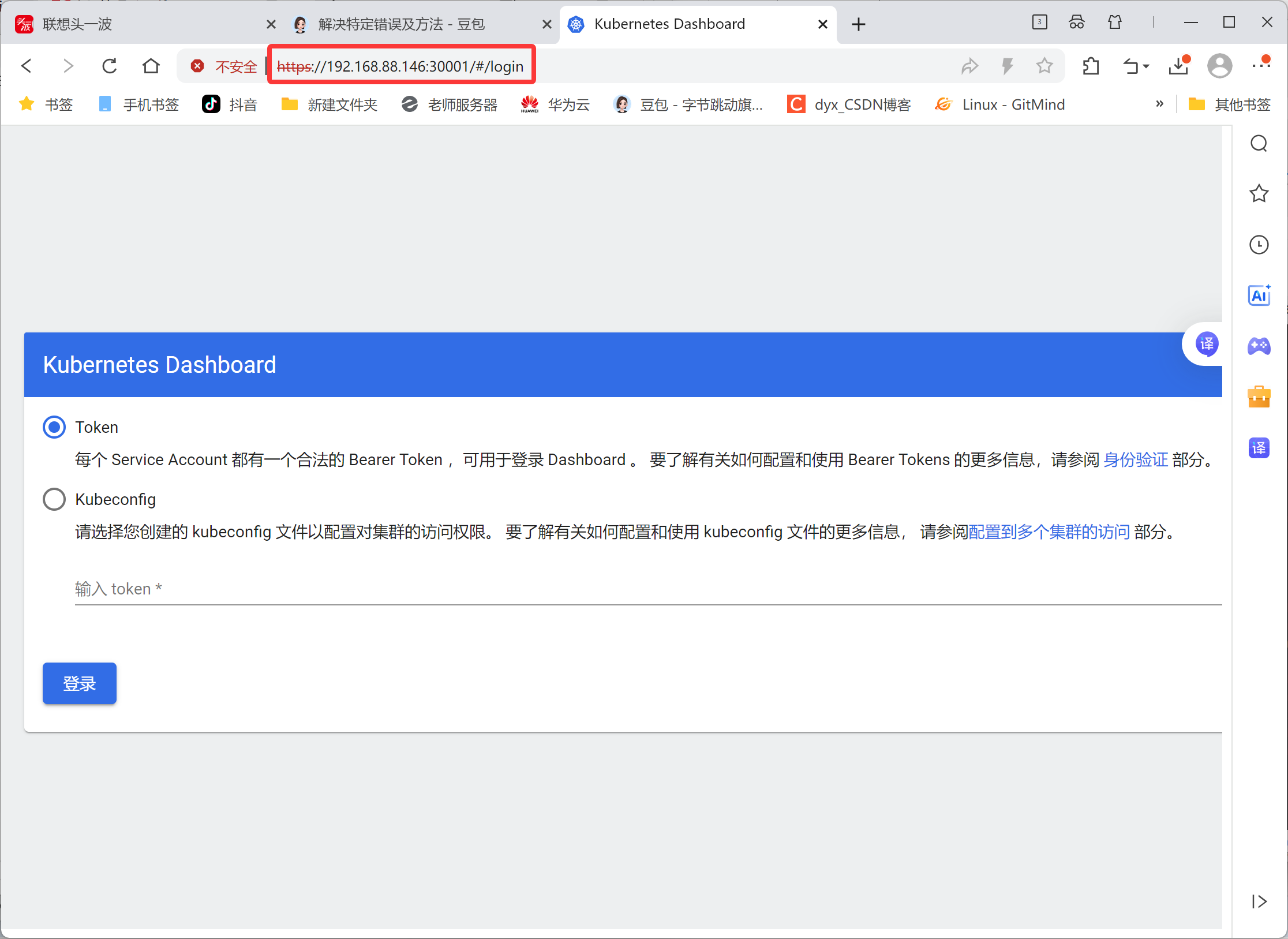

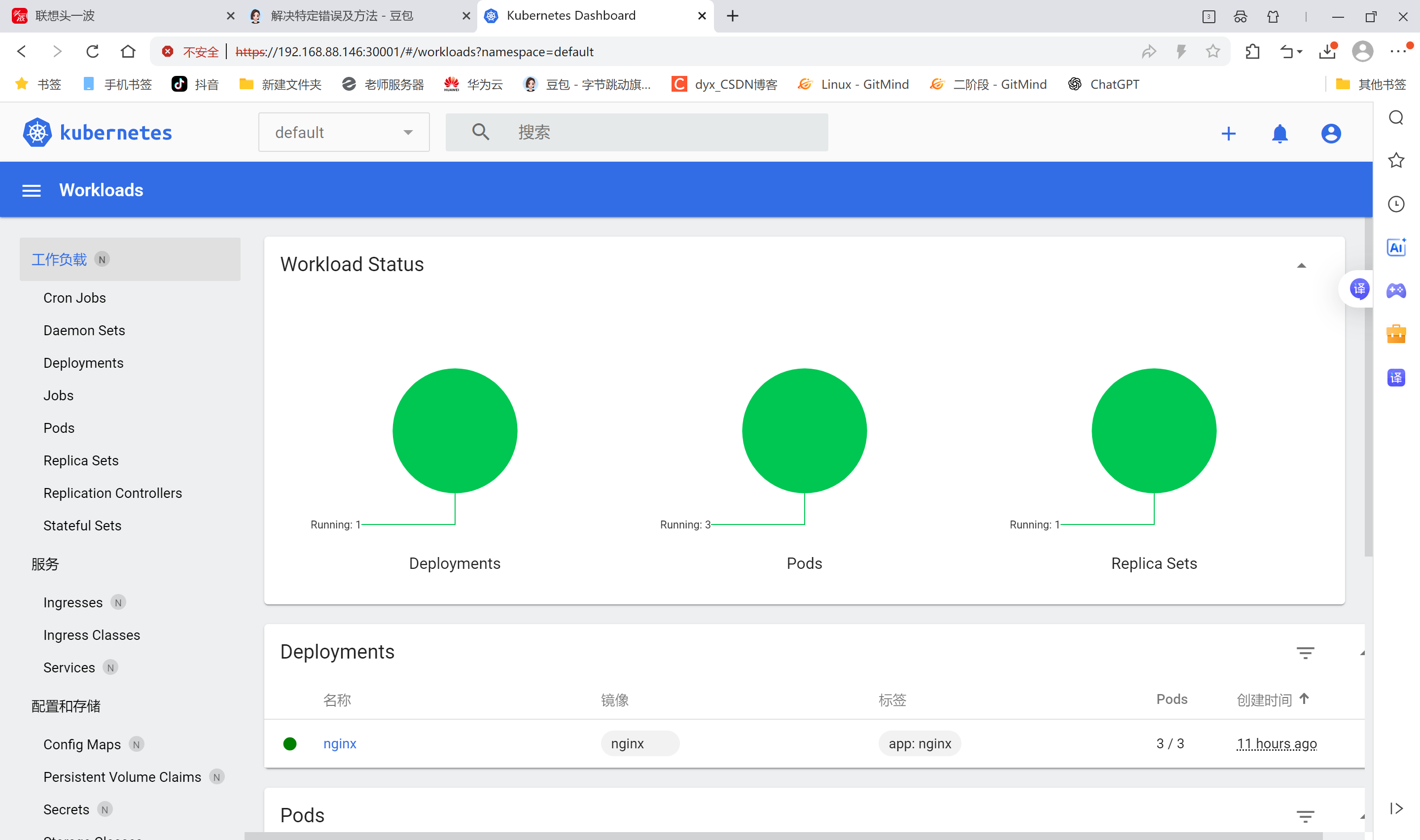

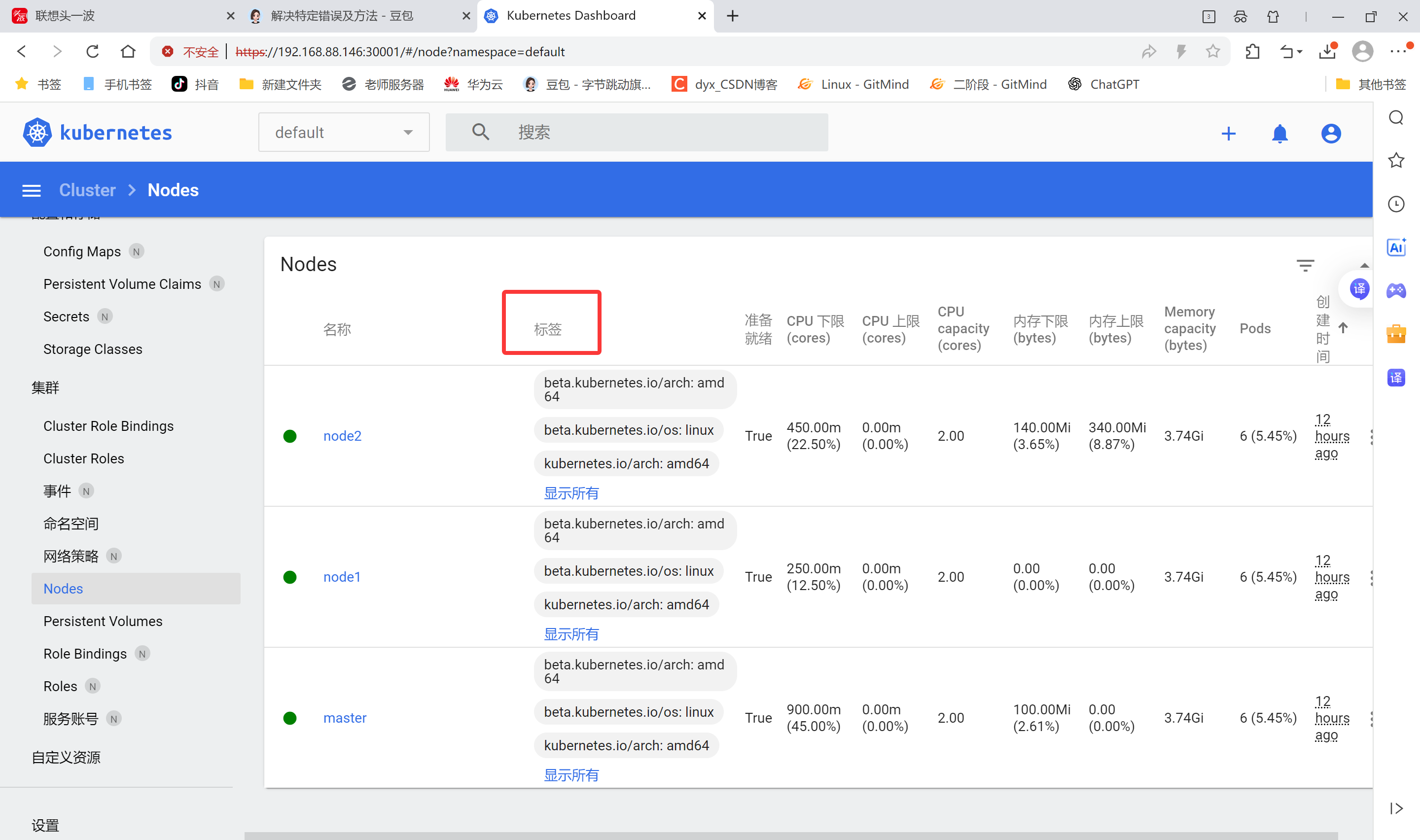

- dashboard界面

- 节点标签(label)

- YAML声明式文件

- YAML资源对象描述方法

- 命名空间(Namespace)

- [第 4 章 kubernetes核心概念](#第 4 章 kubernetes核心概念)

-

- 组件

-

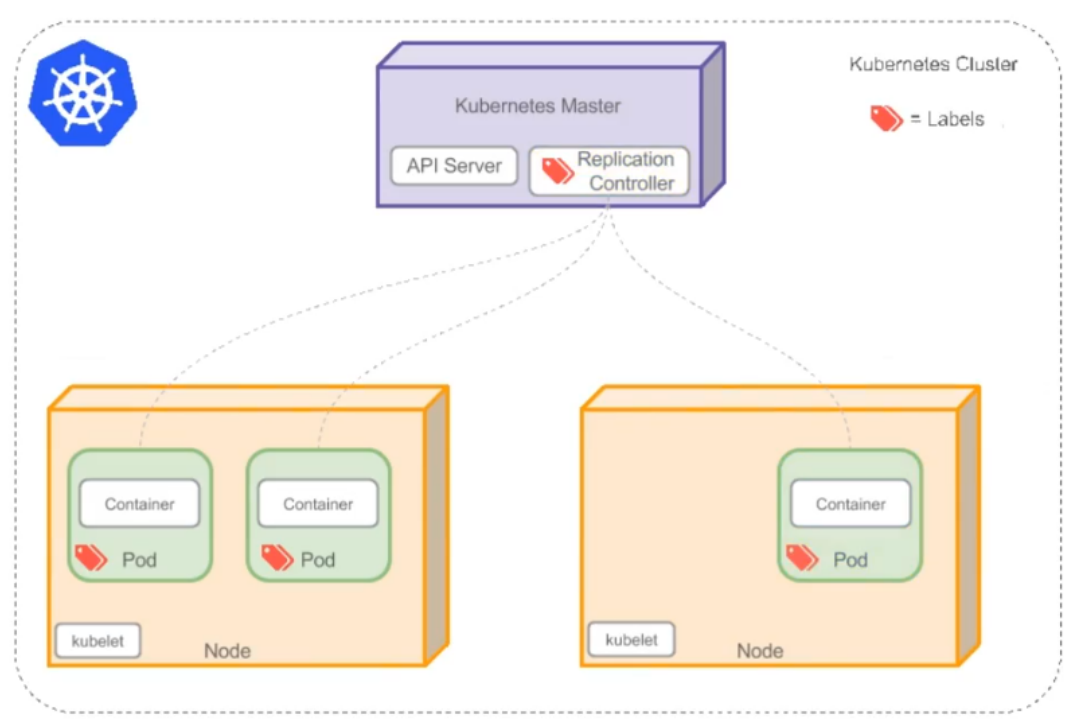

- 1.Pod

- 2.Controller

- 3.Label

- [4.Label Selector](#4.Label Selector)

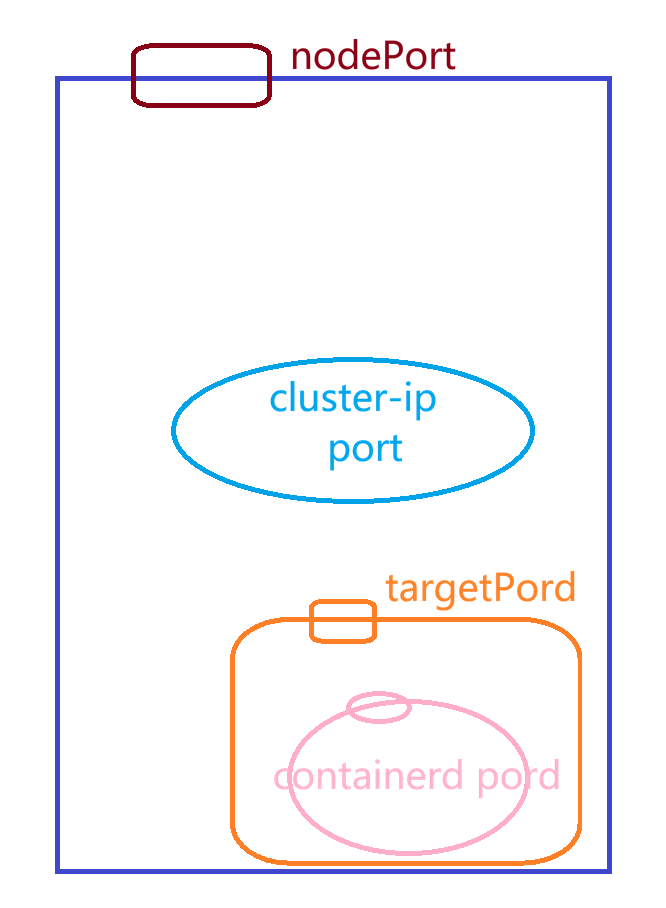

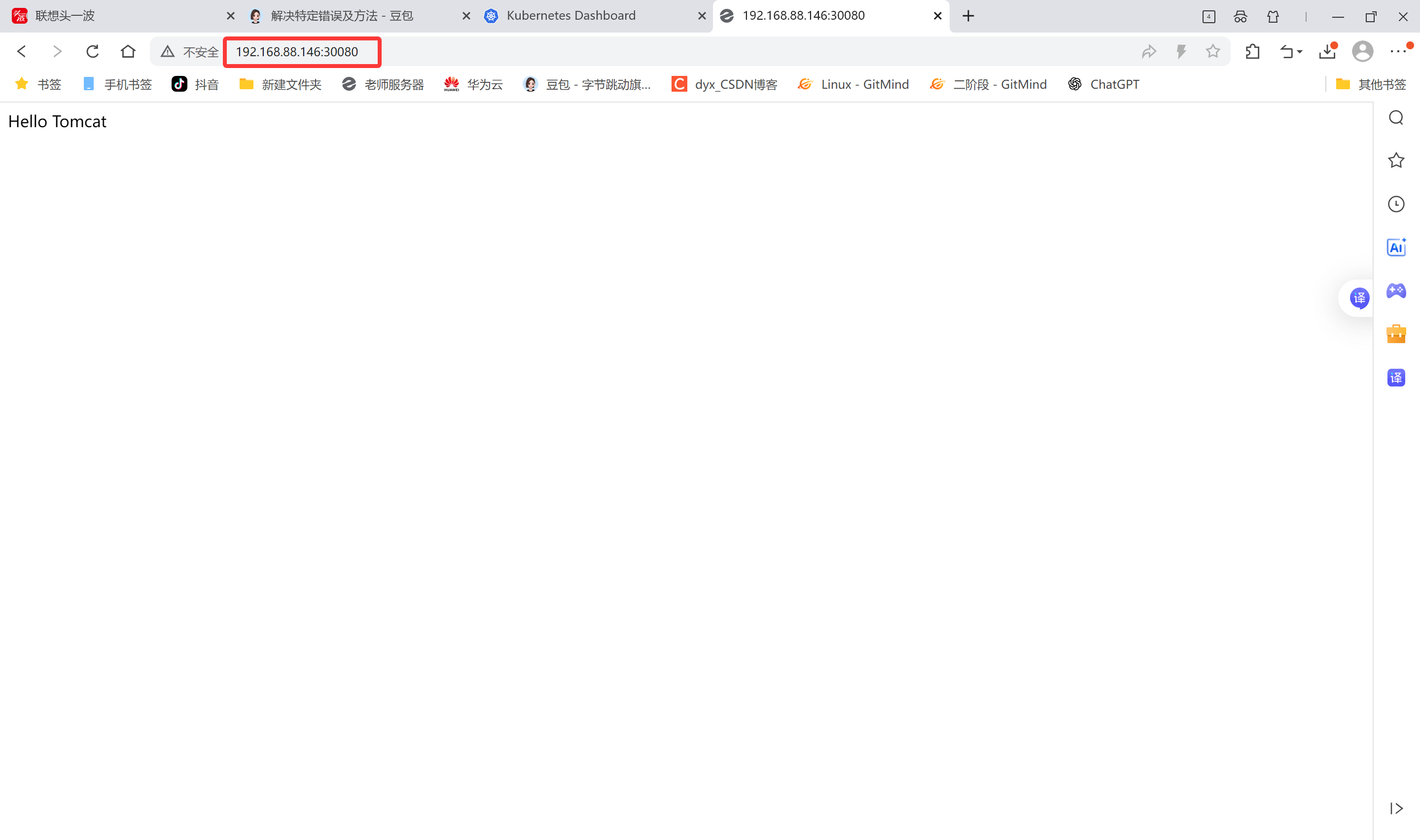

- 5.Service

- 6.Endpoints

- 7.DNS

- kubernetes核心概念之间的关系

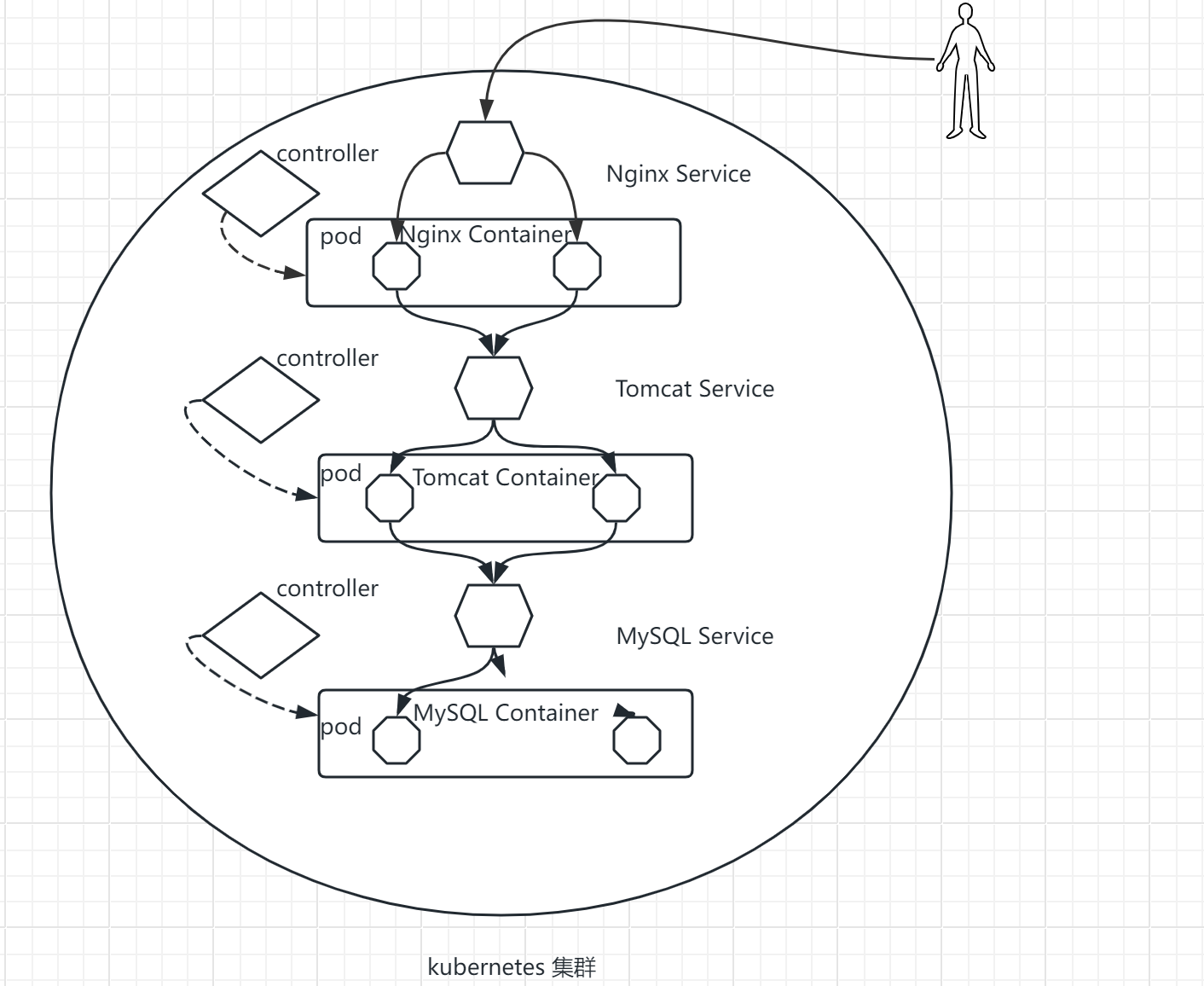

- 基于kubernetes集群容器化应用的微服务

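Kubernetes container cloud platform

Prerequisites

- Familiarity with basic Linux commands
- Familiarity with basic Docker administration
- An understanding of how SSL certificates work
- An understanding of how load balancing works (L4/L7)
- An understanding of cluster and distributed-system concepts
- An understanding of DNS resolution
- An understanding of network protocols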

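Chapter 1: Kubernetes overview

What is Kubernetes

- Kubernetes, abbreviated K8S, is a container cluster management system that Google open-sourced in 2014.
- K8S deploys, scales, and manages containerized applications.
- K8S provides container orchestration, resource scheduling, elastic scaling, deployment management, service discovery, and related features.
- The goal of Kubernetes is to make deploying containerized applications simple and efficient.

Kubernetes features

- Self-healing: restarts failed containers, replaces and redeploys containers when a node fails, and keeps the expected number of replicas; kills containers that fail health checks and sends them no client requests until they are ready, so that online services are not interrupted.
- Elastic scaling: scales application instances out and in quickly by command, through the UI, or automatically based on CPU usage; this keeps the application available during traffic peaks and reclaims resources during quiet periods so the service runs at minimal cost.
- Automated rollout and rollback: K8S updates applications with a rolling-update strategy, replacing one Pod at a time instead of deleting all Pods at once; if a problem occurs during the update, the change is rolled back so the upgrade does not affect the business.
- Service discovery and load balancing: K8S gives a group of containers a single access point (an internal IP address and a DNS name) and load-balances across all associated containers, so users do not have to care about container IPs.
- Secret and configuration management: manages sensitive data and application configuration without exposing secrets in images, which improves the security of sensitive data; commonly used configuration can also be stored in K8S for applications to consume.
- Storage orchestration: mounts external storage systems, whether local storage, public cloud storage (such as AWS), or network storage (such as NFS, GlusterFS, Ceph), and treats them as part of the cluster's resources, which greatly improves storage flexibility.
- Batch processing: provides one-off tasks and scheduled tasks for batch data processing and analysis.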

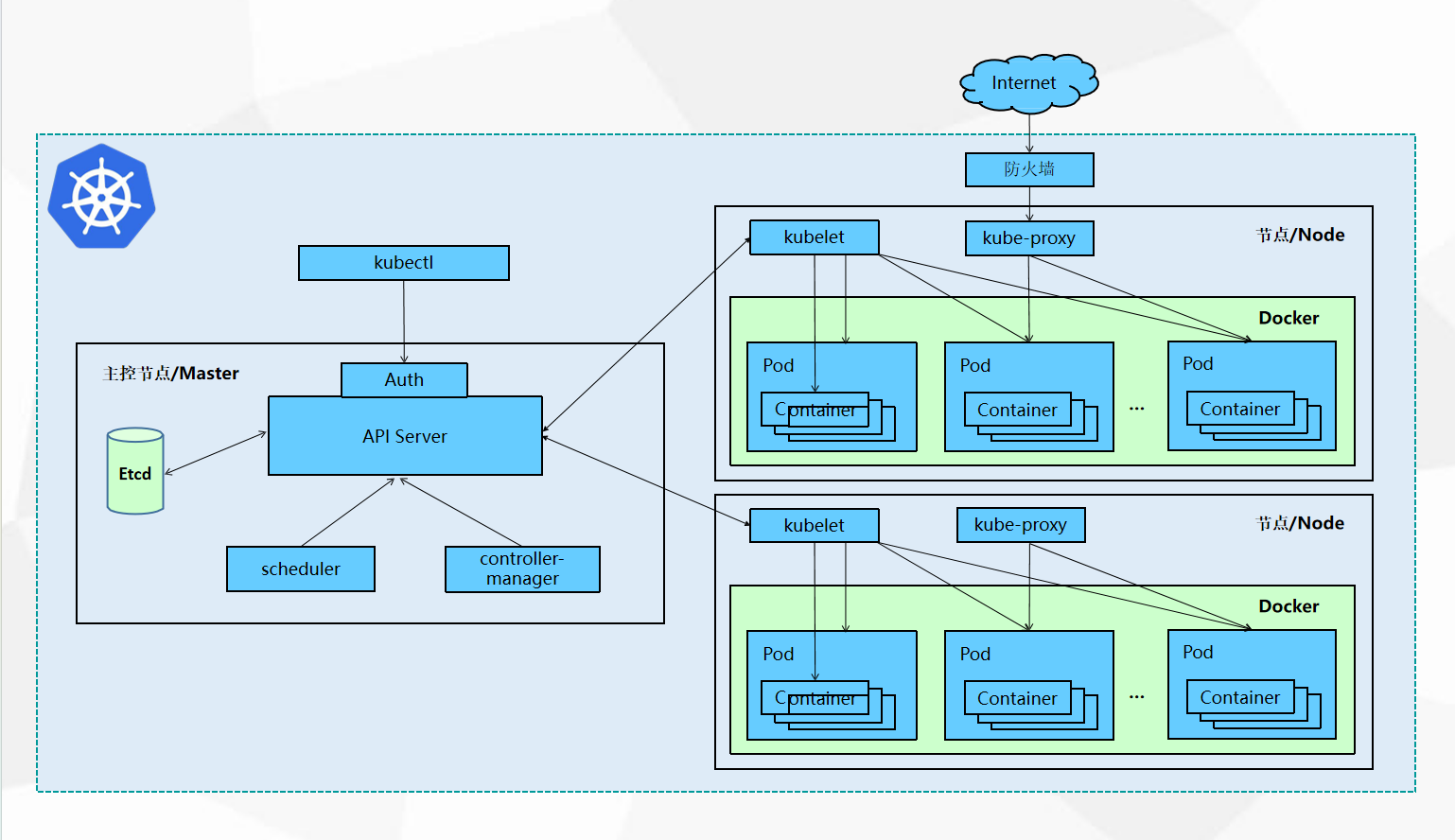

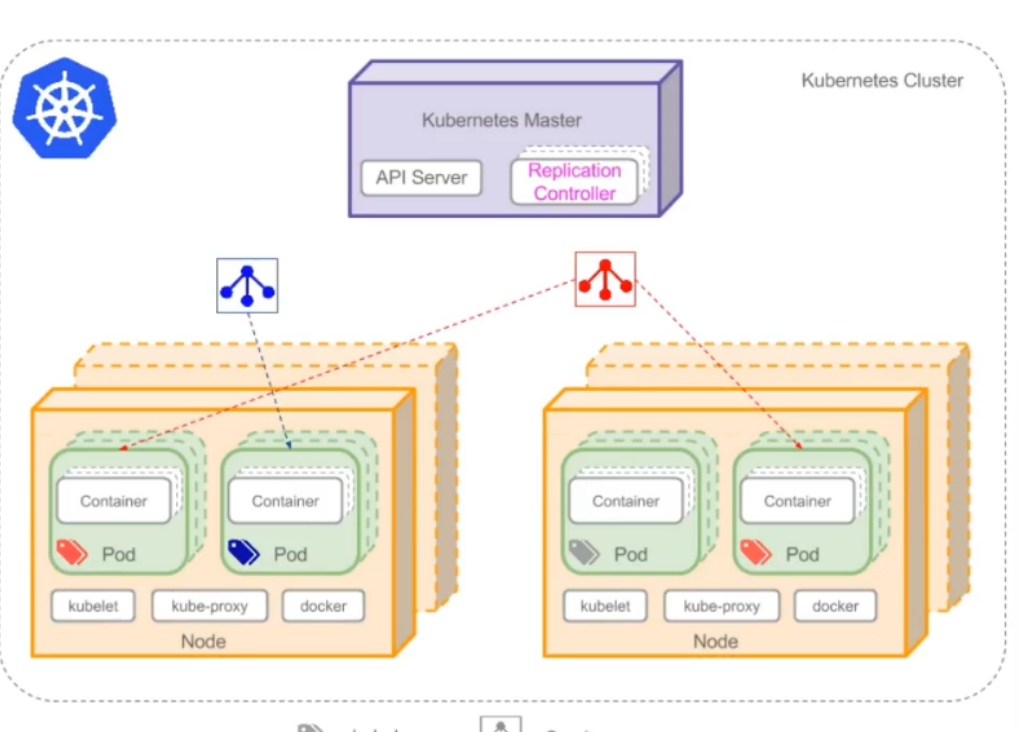

Kubernetes集群架构与组件

API Server在node节点上kubelet控制静态的pod的生命周期

kubelet发送证书申请给APIServer,API Server颁发

auth中的证书放在admin.conf,admin-conf指向master地址,方便和通信

用https的协议管理k8s集群

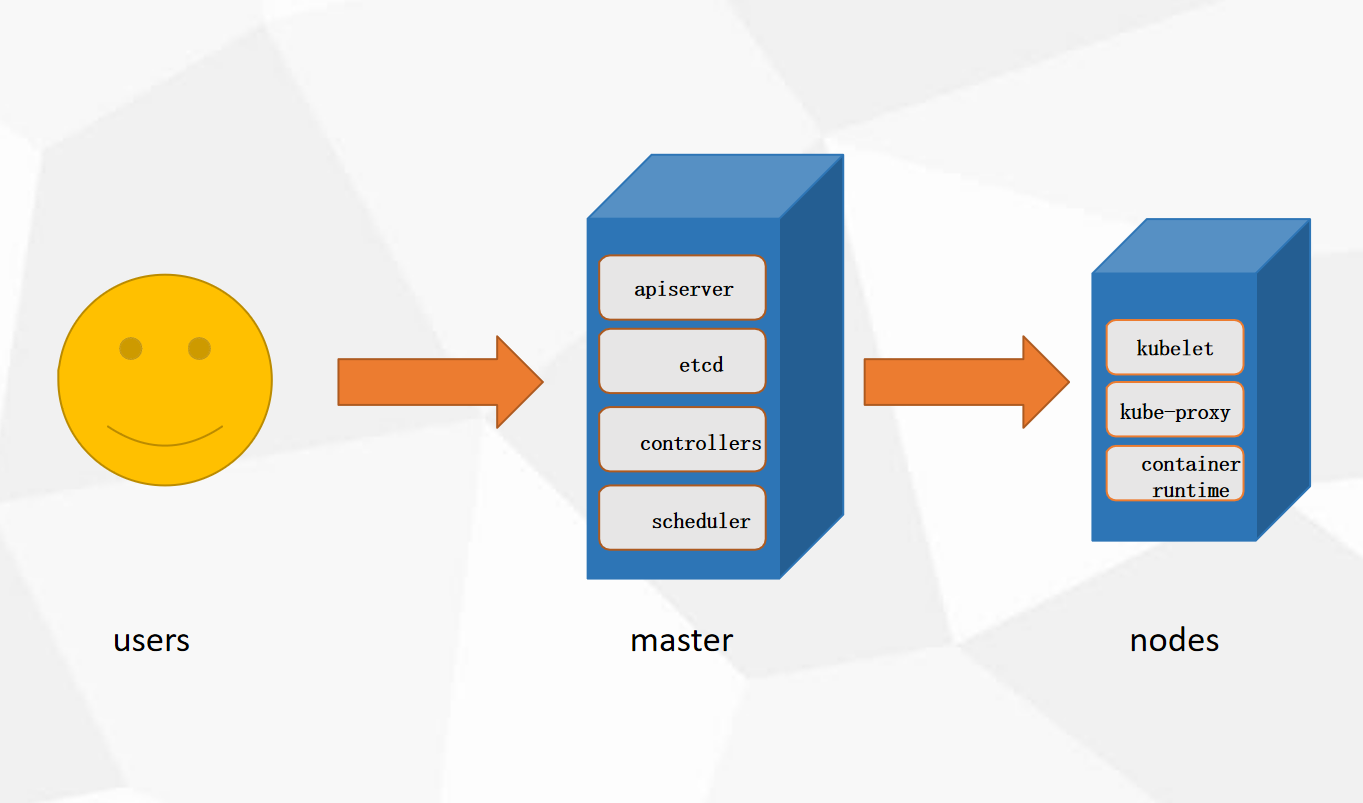

用户通过kubectl命令申请资源,向主节点master传输,通过Auth安全认证(证书存放在admin.conf中)进入API Server,将数据存储到Etcd中,scheduler调度器到node节点上选择合适的节点(较为空闲的),将节点传送给API Server,controller-manager控制器,监控集群,保证集群达到期望状态,API Server通过kubelet管理node节点,给合适节点发送指令,kubelet在node节点控制pod的生命周期,kubelet发送证书申请给APIServer,API Server颁发后,创建pod存储空间,容器运行在pod空间里面。外网访问网页,需要经过防火墙,通过node节点上的kube-proxy对接,维护负载均衡。

用户通过kubectl命令行工具申请资源,auth负责权限校验,防止调用资源出现权限错误,apiserver 接受请求,向etcd发送资源的状态(集群配置),scheduler根据节点条件为资源选择合适的node,同时向etcd发送结果,controller-manager运行各种控制器,保证pod不会down掉,kubelet(白手套)负责管理pod的生命周期,收到api-server颁发的证书后调用docker创建pod,kube-proxy负责外部的访问,维护service的负载均衡


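Master components

- kube-apiserver: the Kubernetes API and the single entry point to the cluster. It coordinates all components and exposes a RESTful API; every create, read, update, delete, and watch operation on resource objects goes through the API server before being persisted to etcd.
- kube-controller-manager: handles the routine background tasks of the cluster. Each resource type has a corresponding controller, and the controller manager is responsible for running these controllers.
- kube-scheduler: selects a Node for each newly created Pod according to the scheduling algorithm. Pods may be placed on the same node or on different nodes.
- etcd: a distributed key-value store that holds the cluster state, such as Pod and Service objects.

Node components

- kubelet: the master's agent on each Node. It manages the lifecycle of the containers running on that machine: creating containers, mounting Pod volumes, downloading secrets, and reporting container and node status. The kubelet turns each Pod into a group of containers.
- kube-proxy: implements the Pod network proxy on each Node, maintaining network rules and layer-4 load balancing.
- docker or rkt (Rocket): the container engine that runs the containers.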

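Kubernetes versions and container runtimes:

1.14 - 1.19: Docker is the default supported container runtime

1.20 - 1.23: both Docker and containerd are supported

1.24 and later: containerd is supported by default (the built-in Docker support, dockershim, is removed, so Docker Engine needs cri-dockerd as described in Chapter 2)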
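The version list above can be turned into a small lookup. This is only a sketch: `runtime_for_version` is a hypothetical helper name, and the ranges are copied from the list above rather than from any Kubernetes tool.

```bash
# Sketch: map a Kubernetes version to the runtime note from the list above.
# runtime_for_version is a hypothetical helper, not part of Kubernetes.
runtime_for_version() {
    minor=${1#*.}        # "1.28.0" or "v1.28.0" -> "28.0"
    minor=${minor%%.*}   # "28.0" -> "28"
    if [ "$minor" -ge 24 ]; then
        echo "containerd"
    elif [ "$minor" -ge 20 ]; then
        echo "docker,containerd"
    elif [ "$minor" -ge 14 ]; then
        echo "docker"
    else
        echo "unknown"
    fi
}

runtime_for_version 1.28.0   # prints: containerd
```

The cluster built in Chapter 2 runs v1.28.0 with Docker, which is why the extra cri-dockerd step is required there.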

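Kubernetes core concepts

- Pod
  - The smallest deployable unit
  - A group of containers
  - Containers in one Pod share a network namespace
  - Pods are ephemeral
- Controllers
  - ReplicaSet: keeps the expected number of Pod replicas
  - Deployment: deploys stateless applications
  - StatefulSet: deploys stateful applications
  - DaemonSet: ensures every Node runs a copy of the same Pod
  - Job: one-off task
  - CronJob: scheduled task
  - Higher-level objects that deploy and manage Pods
- Service
  - Keeps Pods reachable as they are replaced
  - Defines an access policy for a group of Pods

Label: a tag attached to a resource, used to associate, query, and filter objects

Namespace: isolates objects logically

Annotation: a note attached to an object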
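To make the relationship between these concepts concrete, the sketch below writes a manifest in which a Deployment creates Pods carrying a label and a Service selects those Pods by the same label. All names (`demo-web`, the `app=demo-web` label) and the `nginx:1.25` image are assumptions chosen for illustration, not part of the cluster built in this guide.

```bash
# Sketch: a Deployment and a Service tied together by the label app=demo-web.
# demo-web and nginx:1.25 are illustrative assumptions.
manifest="$(mktemp)"
cat >"$manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-web
  labels:
    app: demo-web
spec:
  replicas: 2                # the ReplicaSet behind the Deployment keeps 2 Pods
  selector:
    matchLabels:
      app: demo-web
  template:
    metadata:
      labels:
        app: demo-web        # label attached to every Pod
    spec:
      containers:
      - name: web
        image: nginx:1.25
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: demo-web
spec:
  selector:
    app: demo-web            # label selector: routes to the Pods above
  ports:
  - port: 80
    targetPort: 80
EOF
echo "manifest written to $manifest"
# On a working cluster it could be applied with: kubectl apply -f "$manifest"
```

Because the Service finds Pods by label rather than by IP, Pods can be replaced without clients losing the access point.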

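Chapter 2: Kubernetes cluster deployment

Docker edition

Prerequisite environment setup

1. Host configuration

| CPU | Memory | Disk | IP | Hostname | Software |
|---|---|---|---|---|---|
| 2 cores | 4G | 100G | 192.168.88.146 | master | docker |
| 2 cores | 4G | 100G | 192.168.88.147 | node1 | docker |
| 2 cores | 4G | 100G | 192.168.88.148 | node2 | docker |

Set the hostnames

Set the matching hostname on each of the three hosts (run each command on its own host)

bash

hostnamectl set-hostname master

hostnamectl set-hostname node1

hostnamectl set-hostname node2

Configure hostname resolution

Configure this on every node, then verify with ping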
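As an alternative to editing the file by hand on each host, the entries could be appended by a small script that skips entries already present. This is a sketch: `add_host_entry` and the `HOSTS_FILE` variable are hypothetical names, and by default the script writes to a scratch file rather than `/etc/hosts`.

```bash
# Sketch: append the cluster entries to a hosts file only if they are missing.
# add_host_entry and HOSTS_FILE are hypothetical; set HOSTS_FILE=/etc/hosts
# (as root) to apply it on a real node.
add_host_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    # -x matches the whole line, -F treats the pattern as a fixed string.
    grep -qxF "$ip $name" "$hosts_file" 2>/dev/null || echo "$ip $name" >>"$hosts_file"
}

hosts_file=${HOSTS_FILE:-$(mktemp)}   # scratch file for a dry run
add_host_entry "$hosts_file" 192.168.88.146 master
add_host_entry "$hosts_file" 192.168.88.147 node1
add_host_entry "$hosts_file" 192.168.88.148 node2
cat "$hosts_file"
```

Running it a second time leaves the file unchanged, so it is safe to repeat on all three nodes.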

bash

[root@master ~ 14:51:26]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.88.146 master

192.168.88.147 node1

192.168.88.148 node2

[root@node1 ~ 14:51:30]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.88.146 master

192.168.88.147 node1

192.168.88.148 node2

[root@node2 ~ 14:51:34]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.88.146 master

192.168.88.147 node1

192.168.88.148 node2

Test

bash

[root@node1 ~ 14:52:58]# ping node2

PING node2 (192.168.88.148) 56(84) bytes of data.

64 bytes from node2 (192.168.88.148): icmp_seq=1 ttl=64 time=0.388 ms

64 bytes from node2 (192.168.88.148): icmp_seq=2 ttl=64 time=0.215 ms

^C

--- node2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.215/0.301/0.388/0.088 ms

[root@node1 ~ 14:53:07]# ping -c 2 master

PING master (192.168.88.146) 56(84) bytes of data.

64 bytes from master (192.168.88.146): icmp_seq=1 ttl=64 time=0.387 ms

64 bytes from master (192.168.88.146): icmp_seq=2 ttl=64 time=0.319 ms

--- master ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.319/0.353/0.387/0.034 ms

Install base packages

bash

[root@master ~ 14:53:00]# yum -y install vim lrzsz unzip wget net-tools tree bash-completion conntrack ntpdate ntp ipvsadm ipset iptables curl sysstat libseccomp git psmisc telnet unzip gcc gcc-c++ make

[root@node1 ~ 14:53:15]# yum -y install vim lrzsz unzip wget net-tools tree bash-completion conntrack ntpdate ntp ipvsadm ipset iptables curl sysstat libseccomp git psmisc telnet unzip gcc gcc-c++ make

[root@node2 ~ 14:52:30]# yum -y install vim lrzsz unzip wget net-tools tree bash-completion conntrack ntpdate ntp ipvsadm ipset iptables curl sysstat libseccomp git psmisc telnet unzip gcc gcc-c++ make

| Category | Packages | Purpose |
|---|---|---|
| File handling | vim, lrzsz, unzip | Editing / upload and download / ZIP extraction |
| Network management | net-tools, ipvsadm | Network configuration / IPVS load balancing |
| System monitoring | sysstat, psmisc | Performance monitoring / process management |
| Build tools | gcc, make | Compiling code / build automation |
| Security | iptables, libseccomp | Firewall / container system-call filtering |

Disable the firewall and SELinux

On all three nodes

bash

[root@master ~ 15:05:03]# systemctl disable firewalld --now

[root@master ~ 15:05:10]# sed -i 's/enforcing/disabled/g' /etc/selinux/config

[root@master ~ 15:05:16]# setenforce 0

setenforce: SELinux is disabled

[root@node1 ~ 15:05:03]# systemctl disable firewalld --now

[root@node1 ~ 15:05:10]# sed -i 's/enforcing/disabled/g' /etc/selinux/config

[root@node1 ~ 15:05:16]# setenforce 0

setenforce: SELinux is disabled

[root@node2 ~ 15:04:54]# systemctl disable firewalld --now

[root@node2 ~ 15:05:10]# sed -i 's/enforcing/disabled/g' /etc/selinux/config

[root@node2 ~ 15:05:16]# setenforce 0

setenforce: SELinux is disabled

Disable swap

An enabled swap partition has a very negative effect on performance, and by default the kubelet refuses to start while swap is on, so swap must be disabled on every node
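Before touching `/etc/fstab`, the effect of the edit can be previewed on a scratch copy. The sketch below uses invented sample entries and a slightly stricter pattern than the one used in this step, so that lines already commented out are not commented a second time.

```bash
# Sketch: preview the fstab edit on a scratch copy instead of /etc/fstab.
# The two sample entries are invented for illustration.
fstab_copy="$(mktemp)"
cat >"$fstab_copy" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

# Comment out only the lines that mention swap and are not already commented.
sed -i '/^[^#].*swap/s/^/#/' "$fstab_copy"
cat "$fstab_copy"
```

`swapoff -a` turns swap off for the running system, while the fstab edit keeps it off after the next reboot.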

bash

[root@master ~ 15:05:21]# swapoff -a && sed -i '/swap/s/^/#/' /etc/fstab

[root@node1 ~ 15:05:21]# swapoff -a && sed -i '/swap/s/^/#/' /etc/fstab

[root@node2 ~ 15:05:21]# swapoff -a && sed -i '/swap/s/^/#/' /etc/fstab

Adjust kernel parameters (note: upgrade the kernel first)

bash

[root@master ~ 15:05:28]# yum update -y kernel && reboot

[root@node1 ~ 15:05:28]# yum update -y kernel && reboot

[root@node2 ~ 15:05:28]# yum update -y kernel && reboot

bash

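[root@node1 ~ 15:09:08]# cat >/etc/sysctl.d/kubernetes.conf<<EOF

# Pass bridged traffic through iptables and ip6tables so that packets crossing a Linux bridge can be filtered

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

# Enable routing so that IPv4 packets are forwarded

net.ipv4.ip_forward=1

# Avoid using swap as far as possible to improve k8s performance

vm.swappiness=0

# Do not check whether enough physical memory is available

vm.overcommit_memory=1

EOF

Apply immediately

bash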

sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

kernel.kptr_restrict = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/kubernetes.conf ...

net.ipv4.ip_forward = 1

vm.swappiness = 0

vm.overcommit_memory = 1

* Applying /etc/sysctl.conf ...

Time synchronization

bash

[root@node1 ~ 15:10:55]# yum -y install chrony

[root@node1 ~ 15:11:03]# systemctl restart chronyd

# List the time sources (NTP servers) that the chrony daemon is configured to use, with their synchronization status

[root@node1 ~ 15:11:07]# chronyc sources -v

210 Number of sources = 0

.-- Source mode '^' = server, '=' = peer, '#' = local clock.

/ .- Source state '*' = current synced, '+' = combined , '-' = not combined,

| / '?' = unreachable, 'x' = time may be in error, '~' = time too variable.

|| .- xxxx [ yyyy ] +/- zzzz

|| Reachability register (octal) -. | xxxx = adjusted offset,

|| Log2(Polling interval) --. | | yyyy = measured offset,

|| \ | | zzzz = estimated error.

|| | | \

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

# Set the system clock from the hardware clock

[root@node1 ~ 15:11:18]# hwclock -s

IPVS support

bash

[root@node1 ~ 15:11:27]# cat >>/etc/modules-load.d/ipvs.conf<<EOF

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

overlay

br_netfilter

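EOF

IPVS kernel module reference

IPVS core modules

- ip_vs: base module for IPVS load balancing
- ip_vs_rr: round-robin (RR) scheduling algorithm
- ip_vs_wrr: weighted round-robin (WRR) scheduling algorithm
- ip_vs_sh: source hashing (SH) scheduling algorithm

Connection tracking and filtering

- nf_conntrack_ipv4: IPv4 connection tracking (required by NAT and firewalling; merged into nf_conntrack in kernels 4.19 and later)
- ip_tables: base framework for iptables
- ipt_REJECT: implements packet rejection (the REJECT target)

IP set management

- ip_set: manages sets of IP addresses
- xt_set & ipt_set: iptables match extensions for IP sets

Tunnels, overlay, and bridging

- ipip: IP-over-IP tunneling protocol
- overlay: OverlayFS, the union filesystem behind container storage drivers such as overlay2
- br_netfilter: filtering of bridged network traffic (works together with the net.bridge.bridge-nf-call-iptables=1 parameter)

Reverse-path filtering

- ipt_rpfilter: reverse-path validation (protects against IP spoofing)

Typical use cases

- Kubernetes node initialization: kube-proxy in IPVS mode depends on these modules
- Load-balancing servers: enable the IPVS scheduling algorithms
- Container setup: the overlay and bridge modules are required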
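Once the modules have been loaded in the next step, a check like the following could report any that are still missing. It is a sketch: `missing_modules` is a hypothetical helper that reads a listing in `/proc/modules` format, and modules compiled directly into the kernel do not appear in that file, so they would be reported as missing even though they are available.

```bash
# Sketch: print the expected modules that are absent from a module listing.
# missing_modules is a hypothetical helper; $1 is a file in /proc/modules format.
missing_modules() {
    listing=$1
    shift
    for mod in "$@"; do
        # The first field of each /proc/modules line is the module name.
        if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$listing"; then
            echo "$mod"
        fi
    done
}

# Typical use on a node:
#   missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh br_netfilter overlay
```

An empty result means every listed module is loaded.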

Restart the module-loading service

bash

[root@node1 ~ 15:11:40]# systemctl restart systemd-modules-load

Check the kernel modules

bash

[root@node1 ~ 15:11:50]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

nf_conntrack_ipv4 19149 0

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 143411 2 ip_vs,nf_conntrack_ipv4

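libcrc32c 12644 3 xfs,ip_vs,nf_conntrack

2. docker-ce environment

Install prerequisites

bash

[root@node1 ~ 15:11:56]# yum install -y yum-utils device-mapper-persistent-data lvm2

Notes:

yum-utils provides yum-config-manager

The device mapper storage driver requires device-mapper-persistent-data and lvm2

Device Mapper is the generic device-mapping mechanism that supports logical volume management in the Linux 2.6 kernel. It provides a highly modular kernel architecture for implementing the block device drivers used in storage resource management.

Use the Alibaba Cloud mirror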

bash

[root@node1 ~ 15:16:54]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

已加载插件:fastestmirror

adding repo from: https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

grabbing file https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo to /etc/yum.repos.d/docker-ce.repo

repo saved to /etc/yum.repos.d/docker-ce.repo

Install docker-ce

bash

[root@node1 ~ 15:17:55]# yum install -y docker-ce

Firewall settings (already done)

bash

[root@node1 ~ 15:15:21]# setenforce 0

setenforce: SELinux is disabled

[root@node1 ~ 15:15:28]# vim /etc/selinux/config

[root@node1 ~ 15:15:44]# iptables -F

Start the Docker service

bash

[root@node1 ~ 15:20:02]# systemctl enable --now docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

Registry mirrors

bash

[root@node1 ~ 15:20:13]# tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": [

"https://09def58152000fc00ff0c00057bad7e0.mirror.swr.myhuaweicloud.com",

"https://do.nark.eu.org",

"https://dc.j8.work",

"https://docker.m.daocloud.io",

"https://dockerproxy.com",

"https://docker.mirrors.ustc.edu.cn",

"https://docker.nju.edu.cn",

"https://registry.docker-cn.com",

"https://hub-mirror.c.163.com",

"https://hub.uuuadc.top",

"https://docker.anyhub.us.kg",

"https://dockerhub.jobcher.com",

"https://dockerhub.icu",

"https://docker.ckyl.me",

"https://docker.aws19527.cn",

"https://mirror.baidubce.com",

"https://docker.1panel.live"

]

}

EOF

Switch the cgroup driver

bash

[root@node1 ~ 15:27:17]# vim /etc/docker/daemon.json

{

"registry-mirrors": [

"https://05vz3np5.mirror.aliyuncs.com",

"https://do.nark.eu.org",

"https://dc.j8.work",

"https://docker.m.daocloud.io",

"https://dockerproxy.com",

"https://docker.mirrors.ustc.edu.cn",

"https://docker.nju.edu.cn",

"https://registry.docker-cn.com",

"https://hub-mirror.c.163.com",

"https://hub.uuuadc.top",

"https://docker.anyhub.us.kg",

"https://dockerhub.jobcher.com",

"https://dockerhub.icu",

"https://docker.ckyl.me",

"https://docker.aws19527.cn",

"https://mirror.baidubce.com",

"https://docker.1panel.live"

],

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@node1 ~ 15:27:51]# systemctl daemon-reload

[root@node1 ~ 15:27:54]# systemctl restart docker

Install cri-dockerd

Purpose: cri-dockerd gives Docker Engine an interface that conforms to the Kubernetes CRI (Container Runtime Interface), so that Docker can keep serving as the Kubernetes container runtime. This matters from Kubernetes 1.24 onward, after the built-in Docker support (dockershim) was removed.

The package can be downloaded directly with wget on Linux

bash

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.4/cri-dockerd-0.3.4-3.el7.x86_64.rpm

Install it once the download finishes

bash

[root@master ~ 18:51:21]# rpm -ivh cri-dockerd-0.3.4-3.el7.x86_64.rpm

准备中... ################################ [100%]

正在升级/安装...

1:cri-dockerd-3:0.3.4-3.el7 ################################ [100%]

Edit the service unit file

bash

[root@node2 ~ 18:52:13]# vim /usr/lib/systemd/system/cri-docker.service

# Edit line 10 and insert --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 in the middle

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 --container-runtime-endpoint fd://

Start the cri-dockerd service

bash

[root@node1 ~ 15:43:58]# systemctl daemon-reload

[root@node1 ~ 15:44:10]# systemctl start cri-docker.service

[root@node1 ~ 15:44:17]# systemctl enable cri-docker.service

Created symlink from /etc/systemd/system/multi-user.target.wants/cri-docker.service to /usr/lib/systemd/system/cri-docker.service.

Check that the socket file was created

bash

[root@node1 ~ 15:44:26]# ls /run/cri-*

/run/cri-dockerd.sock

Kubernetes cluster deployment

Yum repository

Alibaba Cloud mirror (for use inside China)

bash

[root@node1 ~ 15:44:34]# cat <<EOF>/etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install the packages

List the installable versions

bash

[root@node1 ~ 15:45:02]# yum list kubeadm.x86_64 --showduplicates | sort -r

已加载插件:fastestmirror

可安装的软件包

* updates: mirrors.aliyun.com

Loading mirror speeds from cached hostfile

kubeadm.x86_64 1.99-0 kubernetes

kubeadm.x86_64 1.98-0 kubernetes

kubeadm.x86_64 1.97-0 kubernetes

kubeadm.x86_64 1.96-0 kubernetes

kubeadm.x86_64 1.95-0 kubernetes

... ...

kubernetes

kubeadm.x86_64 1.1.4-0 kubernetes

kubeadm.x86_64 1.1.3-0 kubernetes

kubeadm.x86_64 1.1.2-0 kubernetes

kubeadm.x86_64 1.1.13-0 kubernetes

kubeadm.x86_64 1.1.12-0 kubernetes

kubeadm.x86_64 1.1.11-0 kubernetes

kubeadm.x86_64 1.1.1-0 kubernetes

kubeadm.x86_64 1.1.10-0 kubernetes

kubeadm.x86_64 1.1.0-0 kubernetes

* extras: mirrors.aliyun.com

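* base: mirrors.aliyun.com

Install version 1.28.0-0

bash

[root@node1 ~ 15:45:15]# yum install -y kubeadm-1.28.0-0 kubelet-1.28.0-0 kubectl-1.28.0-0

kubelet configuration

Force the kubelet to use systemd as its cgroup driver so that it matches Docker or whichever container runtime is in use

Set the kube-proxy mode to ipvs instead of the default iptables, which improves network performance in large clusters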

bash

[root@node1 ~ 15:49:03]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

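# The kubelet config file does not exist until the cluster is initialized, so for now only enable the service at boot

[root@node1 ~ 15:49:20]# systemctl daemon-reload

[root@node1 ~ 15:49:25]# systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Cluster initialization

⚠ Note: run these steps on the master node only

List the required images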

bash

[root@master ~ 15:49:31]# kubeadm config images list --kubernetes-version=v1.28.0 --image-repository=registry.aliyuncs.com/google_containers

registry.aliyuncs.com/google_containers/

registry.aliyuncs.com/google_containers/

registry.aliyuncs.com/google_containers/

registry.aliyuncs.com/google_containers/

registry.aliyuncs.com/google_containers/

registry.aliyuncs.com/google_containers/

registry.aliyuncs.com/google_containers/

Pull the images

bash

[root@master ~ 15:49:52]# kubeadm config images pull --cri-socket=unix:///var/run/cri-dockerd.sock --kubernetes-version=v1.28.0 --image-repository=registry.aliyuncs.com/google_containers

[config/images] Pulled registry.aliyuncs

[config/images] Pulled registry.aliyuncs:v1.28.0

[config/images] Pulled registry.aliyuncs

[config/images] Pulled registry.aliyuncs

[config/images] Pulled registry.aliyuncs

[config/images] Pulled registry.aliyuncs

[config/images] Pulled registry.aliyuncs

List the downloaded images

bash

[root@master ~ 15:51:20]# docker images

REPOSITORY CREATED SIZE

registry.aliyuncs.com/google_containers/54 2 years ago 126MB

registry.aliyuncs.com/google_containers/ba 2 years ago 122MB

registry.aliyuncs.com/google_containers/2a 2 years ago 60.1MB

registry.aliyuncs.com/google_containers/aa 2 years ago 73.1MB

registry.aliyuncs.com/google_containers/02 2 years ago 294MB

registry.aliyuncs.com/google_containers/f8 2 years ago 53.6MB

registry.aliyuncs.com/google_containers/97 3 years ago 744kB

Method 1: create a cluster initialization config file (recommended)

bash

[root@master ~ 15:51:55]# kubeadm config print init-defaults > kubeadm-init.yaml

[root@master ~ 15:52:20]# vim kubeadm-init.yaml

Edit it as follows

yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.88.140 # line 12: set to the master node IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock # line 15: set the container runtime socket
  imagePullPolicy: IfNotPresent
  name: master # line 17: set to the master node hostname
  taints: # line 18: note: remove the null value
  - effect: NoSchedule # line 19: add a taint so that ordinary workloads are not scheduled on this node
    key: node-role.kubernetes.io/control-plane # line 20: add
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # line 32: set the image repository
kind: ClusterConfiguration
kubernetesVersion: 1.28.0 # line 34: set the Kubernetes version
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16 # line 38: add the Pod subnet
scheduler: {}
# Append the following at the end
--- # switch the kube-proxy mode (the default is iptables)
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
--- # switch the kubelet cgroup driver to systemd
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

Run the initialization

bash

[root@master ~ 15:54:27]# kubeadm init --config=kubeadm-init.yaml --upload-certs | tee kubeadm-init.log

[init] Using Kubernetes version: v1.28.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.96.0.1 192.168.88.140]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [192.168.88.140 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [192.168.88.140 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 6.003750 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

aae2ec7c80dfc775d213e620b63e7bc3d89dad4db4933532bce4422844af320e

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

`Your Kubernetes control-plane has initialized successfully!`

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

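kubeadm join 192.168.88.140:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:8664d099f8e4d6a4e3c0f33bbd8dc3ed0de687d1e85ed6ec5234dd8281ef745a

Handling a failed initialization:

bash

# Reset procedure:

# 1. Delete everything under the kubernetes directory

rm -rf /etc/kubernetes/*

# 2. Kill the processes that hold the ports

pkill -9 kubelet

pkill -9 kube-controll

pkill -9 kube-schedule

# 3. Reset the node, pointing kubeadm at the cri-dockerd socket

kubeadm reset -f --cri-socket=unix:///var/run/cri-dockerd.sock

Configure the kubectl tool

Follow the instructions printed after a successful initialization

kubectl is a command-line tool for operating Kubernetes clusters.

kubectl looks for a configuration file named config in the $HOME/.kube directory. A different kubeconfig file can be specified by setting the KUBECONFIG environment variable or the --kubeconfig flag.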
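The lookup order described above can be sketched as a small function. `resolve_kubeconfig` is a hypothetical helper, not part of kubectl, and it simplifies one detail: the real `KUBECONFIG` variable may hold several colon-separated paths that kubectl merges, whereas this sketch returns the value unchanged.

```bash
# Sketch: resolve the kubeconfig path in the order described above.
# resolve_kubeconfig is a hypothetical helper; $1 stands in for a --kubeconfig value.
resolve_kubeconfig() {
    if [ -n "${1:-}" ]; then
        echo "$1"                      # an explicit --kubeconfig value wins
    elif [ -n "${KUBECONFIG:-}" ]; then
        echo "$KUBECONFIG"             # then the environment variable
    else
        echo "$HOME/.kube/config"      # finally the default location
    fi
}
```

The two configuration methods that follow correspond to the last two branches: copying admin.conf to `$HOME/.kube/config`, or exporting `KUBECONFIG`.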

bash

[root@master ~ 15:54:48]# mkdir -p $HOME/.kube

[root@master ~ 15:55:14]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~ 15:55:14]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Make it permanent (recommended)

In a Kubernetes cluster, admin.conf is the client configuration file that kubectl uses to access the cluster. It contains the credentials, cluster information, and context information needed to connect.

bash

[root@master ~ 15:55:14]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master ~ 15:55:31]# source ~/.bash_profile

Check the health of the core control-plane components

bash

[root@master ~ 15:55:35]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

etcd-0 Healthy ok

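scheduler Healthy ok

Join worker nodes to the cluster

Join node1 to the cluster

⚠ Run this on the worker nodes only

⚠ Note: add --cri-socket unix:///var/run/cri-dockerd.sock, otherwise the command fails with an error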

bash

[root@node1 ~ 15:56:09]# kubeadm join 192.168.88.140:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:8664d099f8e4d6a4e3c0f33bbd8dc3ed0de687d1e85ed6ec5234dd8281ef745a --cri-socket unix:///var/run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Join node2 to the cluster

⚠ Run this on the worker nodes only

⚠ Note: add --cri-socket unix:///var/run/cri-dockerd.sock, otherwise the command fails with an error

bash

[root@node2 ~ 15:49:31]# kubeadm join 192.168.88.140:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:8664d099f8e4d6a4e3c0f33bbd8dc3ed0de687d1e85ed6ec5234dd8281ef745a --cri-socket unix:///var/run/cri-dockerd.sock

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Deploy the CNI network component

Check the cluster status

bash

[root@master ~ 15:55:41]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 3m1s v1.28.0

node1 NotReady <none> 28s v1.28.0

node2 NotReady <none> 14s v1.28.0

At this point the coredns Pods still have no IP address, mainly because the network component is missing

bash

[root@master ~ 15:57:46]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-66f779496c-nv2rc 0/1 Pending 0 2m51s <none> <none> <none> <none>

coredns-66f779496c-z92n5 0/1 Pending 0 2m51s <none> <none> <none> <none>

etcd-master 1/1 Running 0 3m4s 192.168.88.140 master <none> <none>

kube-apiserver-master 1/1 Running 0 3m4s 192.168.88.140 master <none> <none>

kube-controller-manager-master 1/1 Running 0 3m4s 192.168.88.140 master <none> <none>

kube-proxy-756g5 1/1 Running 0 34s 192.168.88.141 node1 <none> <none>

kube-proxy-t2jm8 0/1 ContainerCreating 0 20s 192.168.88.142 node2 <none> <none>

kube-proxy-wdrqh 1/1 Running 0 2m51s 192.168.88.140 master <none> <none>

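kube-scheduler-master 1/1 Running 0 3m4s 192.168.88.140 master <none> <none>

Kubernetes cluster networking is fairly complex and is not implemented inside the cluster itself, so a third-party CNI (Container Network Interface) plugin is used to make the cluster easier to work with. CNI is the container network interface; the plugin provides cross-host network communication between containers. The Pod IP address range is also called the Pod CIDR.

Kubernetes supports many network plugins, such as flannel, calico, and canal. Any one of them will do, and this guide uses Calico. Calico is a pure layer-3 networking solution that provides connectivity between containers on different nodes. It treats every node as a router and every Pod as an endpoint behind that virtual router. The nodes learn routes from one another through BGP (Border Gateway Protocol) and install routing rules locally, which connects the Pods on different nodes so that they can communicate. It is one of the mainstream Kubernetes networking solutions today.

Official documentation: https://docs.tigera.io/calico

GitHub repository: https://github.com/projectcalico/calico

The calico.yaml manifest differs between Calico releases and must match the Kubernetes version in use

Reference: https://archive-os-3-25.netlify.app/calico/3.25/getting-started/kubernetes/requirements

Calico 3.25 is listed as matching Kubernetes v1.23 through v1.28

Download the Calico manifest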

bash

[root@master ~]# wget --no-check-certificate https://docs.tigera.io/archive/v3.25/manifests/calico.yaml

Edit the Calico manifest

bash

[root@master ~ 15:57:52]# ls

anaconda-ks.cfg cri-dockerd-0.3.4-3.el7.x86_64.rpm kubeadm-init.yaml

calico.yaml kubeadm-init.log

[root@master ~ 15:58:28]# vim calico.yaml

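# Find line 4601, uncomment the two lines below, and set the value to the Pod subnet

- name: CALICO_IPV4POOL_CIDR

  value: "10.244.0.0/16"

Deploy Calico

Note: downloading the components can take a long time, depending mainly on the network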
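Before applying the manifest, it may be worth confirming that the Calico pool CIDR matches the podSubnet set in kubeadm-init.yaml, since both were edited by hand. The following is a sketch: `cidr_matches` is a hypothetical helper, and it assumes the two files keep the simple `key: value` layout shown in this guide.

```bash
# Sketch: compare podSubnet in the kubeadm config with CALICO_IPV4POOL_CIDR
# in calico.yaml. cidr_matches is a hypothetical helper; both paths are arguments.
cidr_matches() {
    kubeadm_cfg=$1
    calico_manifest=$2
    # "podSubnet: 10.244.0.0/16" -> take the second field
    pod_subnet=$(awk '$1 == "podSubnet:" { print $2; exit }' "$kubeadm_cfg")
    # The value line follows the uncommented "- name: CALICO_IPV4POOL_CIDR" line;
    # a commented line starts with "#" and is therefore skipped.
    calico_cidr=$(awk '
        $1 == "-" && $2 == "name:" && $3 == "CALICO_IPV4POOL_CIDR" { want = 1; next }
        want && $1 == "value:" { gsub(/"/, "", $2); print $2; exit }
    ' "$calico_manifest")
    [ -n "$pod_subnet" ] && [ "$pod_subnet" = "$calico_cidr" ]
}

# Typical use in the directory holding both files:
#   cidr_matches kubeadm-init.yaml calico.yaml && echo "CIDR matches" || echo "CIDR mismatch"
```

The function also fails when the CALICO_IPV4POOL_CIDR lines are still commented out, which is the state of the downloaded manifest.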

bash

[root@master ~ 15:59:11]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

Verify and check the cluster

Check the status of all components (this took about 35 minutes in this environment)

bash

[root@master ~ 15:59:19]# watch kubectl get pods -A

[root@master ~ 16:13:54]# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS R

kube-system calico-kube-controllers-658d97c59c-v8tbw 0/1 ContainerCreating 0

kube-system calico-node-69hpj 1/1 Running 0

kube-system calico-node-fw75n 1/1 Running 0

kube-system calico-node-n5bpm 1/1 Running 0

kube-system coredns-66f779496c-nv2rc 0/1 ImagePullBackOff 0

kube-system coredns-66f779496c-z92n5 1/1 Running 0

kube-system etcd-master 1/1 Running 0

kube-system kube-apiserver-master 1/1 Running 0

kube-system kube-controller-manager-master 1/1 Running 0

kube-system kube-proxy-756g5 1/1 Running 0

kube-system kube-proxy-t2jm8 1/1 Running 0

kube-system kube-proxy-wdrqh 1/1 Running 0

kube-system kube-scheduler-master 1/1 Running 0

[root@master ~ 16:14:09]# watch kubectl get pods -A

NAMESPACE NAME READY STATUS R

kube-system calico-kube-controllers-658d97c59c-v8tbw 0/1 Running 0

kube-system calico-node-69hpj 1/1 Running 0

kube-system calico-node-fw75n 1/1 Running 0

kube-system calico-node-n5bpm 1/1 Running 0

kube-system coredns-66f779496c-nv2rc 0/1 Running 0

kube-system coredns-66f779496c-z92n5 1/1 Running 0

kube-system etcd-master 1/1 Running 0

kube-system kube-apiserver-master 1/1 Running 0

kube-system kube-controller-manager-master 1/1 Running 0

kube-system kube-proxy-756g5 1/1 Running 0

kube-system kube-proxy-t2jm8 1/1 Running 0

kube-system kube-proxy-wdrqh 1/1 Running 0

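kube-system kube-scheduler-master 1/1 Running 0

If Pods stay in a waiting state for a long time, upgrading the kernel on all nodes may resolve it

Cause: the kernel version is too old, so the BPF filesystem is missing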
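Before upgrading, it may help to confirm that the BPF filesystem really is absent. This is a minimal sketch: the helper name `bpf_fs_mounted` is hypothetical, and it only checks whether any mount of type `bpf` appears in the mount table.

```shell
# Hypothetical helper: succeed if a filesystem of type "bpf" is mounted.
# Reads /proc/mounts by default; another file can be passed as the first argument.
bpf_fs_mounted() {
    local mounts="${1:-/proc/mounts}"
    # Field 3 of each mount entry is the filesystem type.
    awk '$3 == "bpf" { found = 1 } END { exit !found }' "$mounts"
}

# Example (run on each node):
#   uname -r
#   bpf_fs_mounted && echo "bpf filesystem mounted" || echo "bpf filesystem missing"
```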

bash

[root@master ~]# yum update -y kernel && reboot

Verify DNS resolution

bash

[root@master ~]# dig -t a www.baidu.com @10.96.0.10

Check the status of the cluster nodes

bash

[root@master ~ 16:22:09]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 27m v1.28.0

node1 Ready <none> 25m v1.28.0

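node2 Ready <none> 24m v1.28.0

The /etc/kubernetes/manifests/ directory holds the Pod manifests for the cluster's control-plane components.

It usually contains the following important files:

bash

etcd.yaml: manifest used to deploy and manage etcd

kube-apiserver.yaml: manifest used to deploy and manage the Kubernetes API server

kube-controller-manager.yaml: manifest used to deploy and manage the Kubernetes controller manager

kube-scheduler.yaml: manifest used to deploy and manage the Kubernetes scheduler

The kubelet watches the manifests under /etc/kubernetes/manifests/ and creates and manages the corresponding static Pods from them.

The CA certificates are stored under /etc/kubernetes/pki/ by default.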
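To see which static Pods the kubelet will manage on a node, the manifest directory can simply be listed. A minimal sketch follows; the helper name `list_static_pods` is hypothetical, and it assumes every manifest uses the `.yaml` extension.

```shell
# Hypothetical helper: print the component name of every manifest in a directory.
# Defaults to the kubeadm static Pod directory described above.
list_static_pods() {
    local dir="${1:-/etc/kubernetes/manifests}"
    local f
    for f in "$dir"/*.yaml; do
        [ -e "$f" ] || continue   # skip the unexpanded pattern when the directory is empty
        basename "$f" .yaml
    done
}

# Example (run on the master node):
#   list_static_pods
#   ls /etc/kubernetes/pki/
```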

Check the cluster DNS Service address

bash

[root@master ~]# kubectl get service -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 143m

Optimization

kubectl command completion

bash

[root@master ~]# yum install bash-completion -y

[root@master ~]# source /usr/share/bash-completion/bash_completion

Enable command completion permanently for the bash environment

bash

[root@master ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

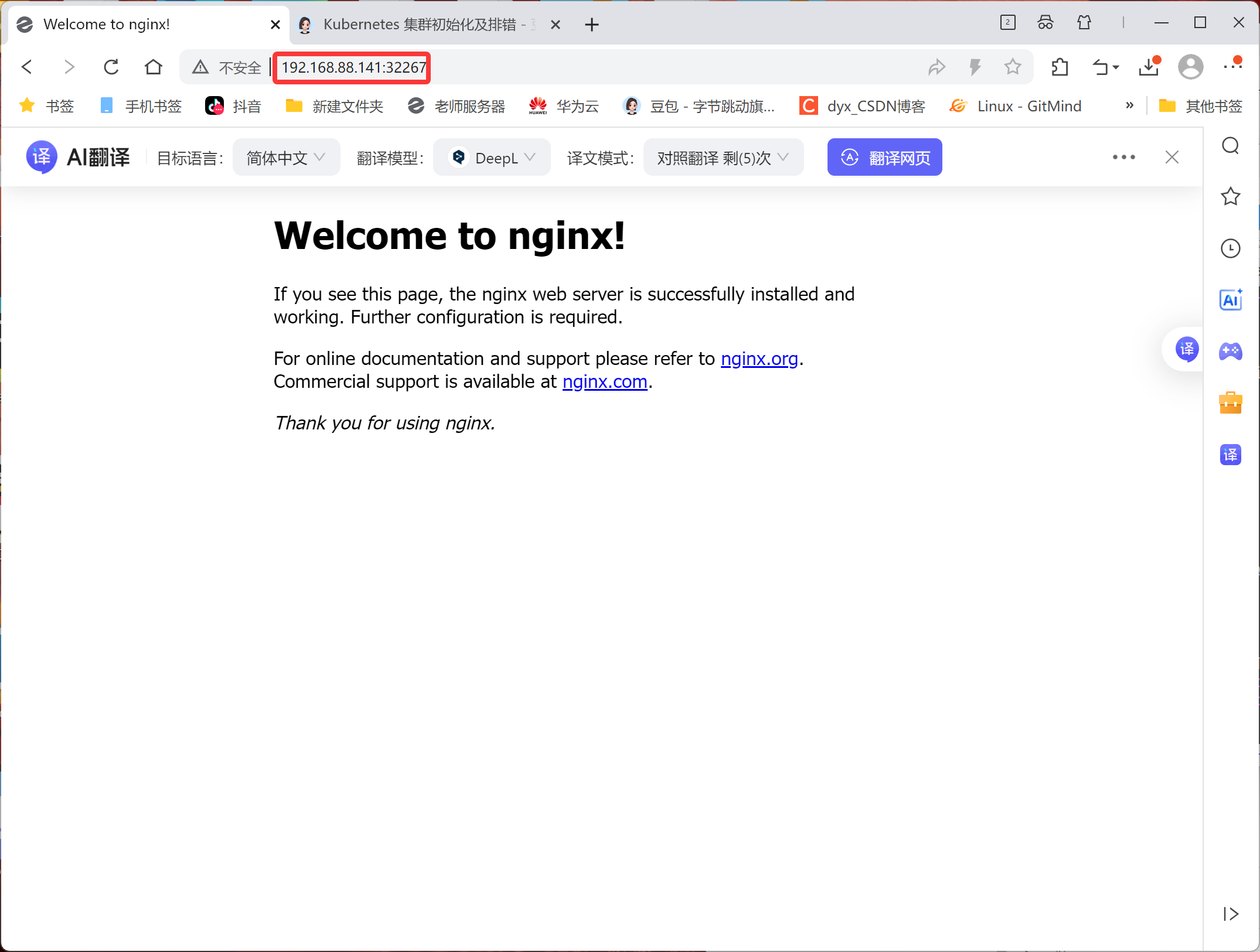

[root@master ~]# source ~/.bashrc测试操作

创建应用服务nginx

bash

[root@master ~ 11:28:12]# kubectl create deployment nginx --image=nginx --replicas=3

deployment.apps/nginx created

[root@master ~ 11:37:14]# kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

service/nginx exposed

View the Pod and Service information

By default the master node carries a taint, so no Pods are scheduled onto it.

bash

[root@master ~ 11:37:52]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-7854ff8877-9zknp 1/1 Running 0 9m40s

pod/nginx-7854ff8877-g6kkl 1/1 Running 0 9m39s

pod/nginx-7854ff8877-ww2qf 1/1 Running 0 9m40s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19h

service/nginx NodePort 10.102.62.233 <none> 80:32267/TCP 46s

[root@node2 ~ 11:31:33]# docker images |grep nginx

nginx latest 4af177a024eb 26 hours ago 161MB

[root@node1 ~ 11:29:05]# docker images |grep nginx

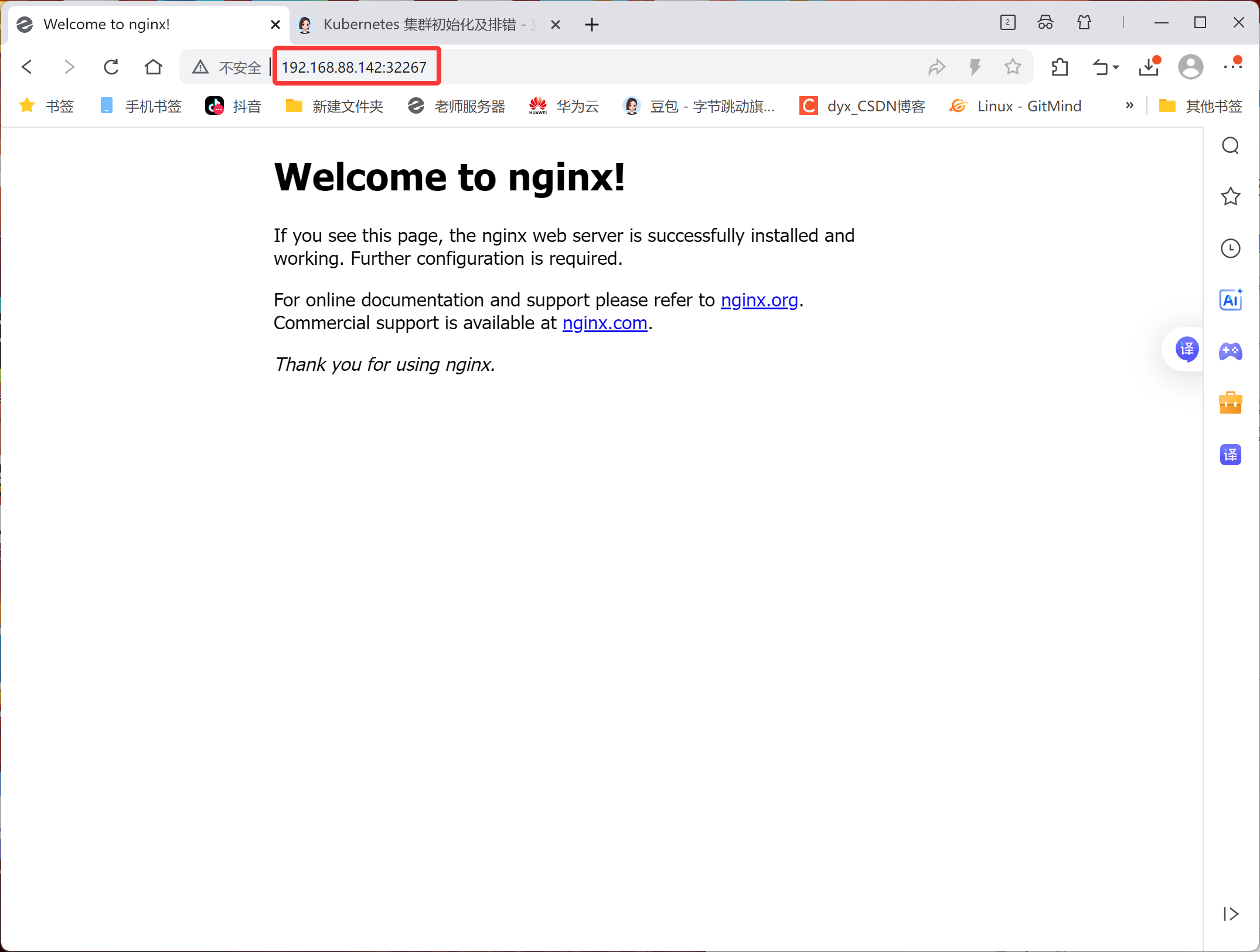

nginx latest 4af177a024eb 26 hours ago 161MB测试访问

注意:关闭node节点防火墙

bash

[root@node1 ~]# systemctl stop firewalld.service

[root@node2 ~]# systemctl stop firewalld.service

Enter nodeIP:port in a browser and the nginx test page is displayed.

The addresses of both node1 and node2 work.
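The same check can be made from the command line instead of a browser. This is a minimal sketch: the helper name `node_port` is hypothetical, and `NODE_IP` stands for the address of one of your worker nodes.

```shell
# Hypothetical helper: extract the node port from a PORT(S) value such as "80:32267/TCP".
node_port() {
    local ports="$1"
    ports="${ports#*:}"        # drop everything up to and including the first ":"
    echo "${ports%%/*}"        # drop the "/TCP" suffix
}

# Example (run where kubectl is configured; NODE_IP is a placeholder you must set):
#   port=$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')
#   curl -I "http://$NODE_IP:$port"
```

With the Service shown earlier, `node_port "80:32267/TCP"` prints `32267`, the port to append to the node address.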

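containerd version

Prerequisite environment setup

1. Host configuration

| CPU | Memory | Disk | IP | Hostname | Software |
|---|---|---|---|---|---|
| 2 cores | 4G | 100G | 192.168.88.144 | master | containerd |
| 2 cores | 4G | 100G | 192.168.88.143 | node1 | containerd |
| 2 cores | 4G | 100G | 192.168.88.145 | node2 | containerd |

Configure hostnames (run the matching command on each host)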

bash

hostnamectl set-hostname master

hostnamectl set-hostname node1

hostnamectl set-hostname node2

Configure hostname resolution

Configure this on all nodes, then confirm that the hosts can ping each other by name.

bash

[root@master ~ 09:26:40]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.88.144 master

192.168.88.143 node1

192.168.88.145 node2

Install base packages

bash

[root@master ~ 09:18:40]# yum -y install vim lrzsz unzip wget net-tools tree bash-completion conntrack ntpdate ntp ipvsadm ipset iptables curl sysstat libseccomp git psmisc telnet gcc gcc-c++ make

Disable the firewall and SELinux

bash

[root@master ~ 09:25:12]# systemctl disable firewalld --now

[root@master ~ 09:25:24]# vim /etc/selinux/config

[root@master ~ 09:25:48]# setenforce 0

setenforce: SELinux is disabled

Disable the swap partition

An enabled swap partition has a very negative effect on system performance, so Kubernetes requires swap to be disabled on every node.
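The sed command used in this step prefixes every line containing "swap" with `#`, so running it again adds a second `#` to lines that are already commented. A minimal sketch of a re-runnable variant follows; the helper name `comment_out_swap` is hypothetical, and it assumes GNU sed and whitespace-separated fstab fields.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that already start with "#" are left untouched, so it is safe to re-run.
comment_out_swap() {
    local fstab="${1:-/etc/fstab}"
    sed -ri '/^[^#].*[[:space:]]swap[[:space:]]/s/^/#/' "$fstab"
}

# Example (run as root on every node):
#   swapoff -a
#   comment_out_swap /etc/fstab
#   cat /proc/swaps    # only the header line should remain
```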

bash

[root@master ~ 09:26:28]# vim /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0

[root@master ~ 10:33:28]# swapoff -a && sed -i '/swap/s/^/#/' /etc/fstab

Tune kernel parameters

bash

[root@master ~ 09:11:25]# yum update -y kernel && reboot

[root@master ~ 09:28:40]# cat >/etc/sysctl.d/kubernetes.conf<<EOF

# Let iptables and ip6tables filter packets that cross a Linux bridge

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

# Enable routing so that IPv4 packets are forwarded

net.ipv4.ip_forward=1

# Avoid using swap as much as possible to improve Kubernetes performance

vm.swappiness=0

# Do not check whether enough physical memory is available (always allow overcommit)

vm.overcommit_memory=1

EOF

# Apply immediately

[root@master ~ 09:29:53]# sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

kernel.kptr_restrict = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/kubernetes.conf ...

net.ipv4.ip_forward = 1

vm.swappiness = 0

vm.overcommit_memory = 1

* Applying /etc/sysctl.conf ...

Time synchronization

bash

[root@master ~ 09:33:44]# yum install -y chrony

[root@master ~ 09:34:11]# systemctl restart chronyd

[root@master ~ 09:34:18]# chronyc sources -v

210 Number of sources = 4

.-- Source mode '^' = server, '=' = peer, '#' = local clock.

/ .- Source state '*' = current synced, '+' = combined , '-' = not combined,

| / '?' = unreachable, 'x' = time may be in error, '~' = time too variable.

|| .- xxxx [ yyyy ] +/- zzzz

|| Reachability register (octal) -. | xxxx = adjusted offset,

|| Log2(Polling interval) --. | | yyyy = measured offset,

|| \ | | zzzz = estimated error.

|| | | \

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^+ ntp7.flashdance.cx 2 6 17 12 -6944us[-9464us] +/- 136ms

^* time.cloudflare.com 3 6 17 11 -5054us[-7573us] +/- 153ms

^? ntp6.flashdance.cx 0 6 0 - +0ns[ +0ns] +/- 0ns

^- makaki.miuku.net 3 6 33 7 +81ms[ +81ms] +/- 217ms

[root@master ~ 09:34:39]# hwclock -s

IPVS support

bash

[root@master ~ 09:35:00]# cat >>/etc/modules-load.d/ipvs.conf<<EOF

> ip_vs

> ip_vs_rr

> ip_vs_wrr

> ip_vs_sh

> nf_conntrack_ipv4

> ip_tables

> ip_set

> xt_set

> ipt_set

> ipt_rpfilter

> ipt_REJECT

> ipip

> overlay

> br_netfilter

> EOF

Restart the module-loading service

bash

[root@master ~ 09:35:25]# systemctl restart systemd-modules-load.service

Check the kernel modules

bash

[root@master ~ 09:37:15]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

nf_conntrack_ipv4 19149 0

nf_defrag_ipv4 12729 1 nf_conntrack_ipv4

ip_vs_sh 12688 0

ip_vs_wrr 12697 0

ip_vs_rr 12600 0

ip_vs 145458 6 ip_vs_rr,ip_vs_sh,ip_vs_wrr

nf_conntrack 143411 2 ip_vs,nf_conntrack_ipv4

libcrc32c 12644 3 xfs,ip_vs,nf_conntrack

Install containerd

Specify the kernel modules that are loaded at boot for containerd

bash

[root@master ~ 09:37:29]# cat >>/etc/modules-load.d/containerd.conf <<EOF

> overlay

> br_netfilter

> EOF

Load the modules

bash

[root@master ~ 09:38:49]# modprobe overlay

[root@master ~ 09:39:04]# modprobe br_netfilter

Apply immediately

bash

[root@master ~ 09:39:06]# sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

kernel.kptr_restrict = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/kubernetes.conf ...

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

vm.overcommit_memory = 1

* Applying /etc/sysctl.conf ...

Install the required packages

bash

[root@master ~ 09:39:44]# yum install -y yum-utils device-mapper-persistent-data lvm2

Add the Docker repository

bash

[root@master ~ 09:40:00]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

已加载插件:fastestmirror

adding repo from: http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

grabbing file http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo to /etc/yum.repos.d/docker-ce.repo

repo saved to /etc/yum.repos.d/docker-ce.repo

[root@master ~ 09:41:07]# yum clean all

已加载插件:fastestmirror

正在清理软件源: base docker-ce-stable

: epel extras updates

Cleaning up list of fastest mirrors

[root@master ~ 09:41:17]# yum list

List the available containerd versions

bash

[root@master ~ 09:44:33]# yum list containerd.io --showduplicates | sort -r

Install a specific containerd version

bash

[root@master ~ 09:44:45]# yum -y install containerd.io-1.6.16

Generate the containerd configuration file

bash

[root@master ~ 09:48:27]# mkdir -p /etc/containerd

[root@master ~ 09:48:36]# containerd config default >/etc/containerd/config.toml

Change the containerd cgroup driver

bash

[root@master ~ 09:48:36]# sed -i '/SystemdCgroup/s/false/true/g' /etc/containerd/config.toml

Change the sandbox image registry address
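This step edits the file by hand in vim on each node. As a non-interactive alternative, here is a minimal sketch using GNU sed. The helper name `set_sandbox_image` is hypothetical, and it assumes the config contains a single `sandbox_image = "..."` line, as the file generated by `containerd config default` does.

```shell
# Hypothetical helper: replace the value of sandbox_image, keeping its indentation.
set_sandbox_image() {
    local file="$1" image="$2"
    sed -i "s|^\([[:space:]]*sandbox_image = \).*|\1\"$image\"|" "$file"
}

# Example (run as root on every node):
#   set_sandbox_image /etc/containerd/config.toml registry.aliyuncs.com/google_containers/pause:3.9
#   grep sandbox_image /etc/containerd/config.toml
```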

bash

[root@node2 ~ 09:48:51]# vim /etc/containerd/config.toml

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"

Start containerd

bash

[root@master ~ 09:49:51]# systemctl enable containerd

Created symlink from /etc/systemd/system/multi-user.target.wants/containerd.service to /usr/lib/systemd/system/containerd.service.

[root@master ~ 09:50:07]# systemctl start containerd

[root@master ~ 09:50:08]# systemctl status containerd

[root@master ~ 09:50:23]# ctr version

Client:

Version: 1.6.16

Revision: 31aa4358a36870b21a992d3ad2bef29e1d693bec

Go version: go1.18.10

Server:

Version: 1.6.16

Revision: 31aa4358a36870b21a992d3ad2bef29e1d693bec

UUID: fe759b6d-f259-4ca2-91a6-299a7916b8fd

Configure registry mirrors
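The hosts.toml file in this step repeats the same stanza for each mirror, so it can be generated rather than typed. This is a minimal sketch: the helper name `write_hosts_toml` is hypothetical, the capabilities list simply copies the one used in this step, and the mirror URLs in the example are placeholders.

```shell
# Hypothetical helper: print a hosts.toml for one upstream server and any number of mirrors.
write_hosts_toml() {
    local server="$1"
    shift
    printf 'server = "%s"\n' "$server"
    local mirror
    for mirror in "$@"; do
        printf '\n[host."%s"]\n  capabilities = ["pull", "resolve", "push"]\n' "$mirror"
    done
}

# Example (run as root; replace the placeholder mirror URLs with real ones):
#   mkdir -p /etc/containerd/certs.d/docker.io
#   write_hosts_toml "https://docker.io" "https://mirror-a.example" "https://mirror-b.example" \
#       > /etc/containerd/certs.d/docker.io/hosts.toml
```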

bash

[root@master ~ 09:50:51]# vim /etc/containerd/config.toml

# Line 145

config_path = "/etc/containerd/certs.d"

[root@master ~ 09:51:46]# mkdir /etc/containerd/certs.d

[root@master ~ 09:52:40]# mkdir /etc/containerd/certs.d/docker.io

[root@master ~ 09:53:16]# cat /etc/containerd/certs.d/docker.io/hosts.toml

server = "https://docker.io"

[host."https://19adffc09b4f4fcbad0603a171dd0419.mirror.swr.myhuaweicloud.com"]

capabilities = ["pull","resolve","push"]

[host."https://hub-mirror.c.163.com"]

capabilities = ["pull","resolve","push"]

[host."https://do.nark.eu.org"]

capabilities = ["pull","resolve","push"]

[host."https://dc.j8.work"]

capabilities = ["pull","resolve","push"]

[host."https://docker.m.daocloud.io"]

capabilities = ["pull","resolve","push"]

[host."https://dockerproxy.com"]

capabilities = ["pull","resolve","push"]

[host."https://docker.mirrors.ustc.edu.cn"]

capabilities = ["pull","resolve","push"]

[host."https://docker.nju.edu.cn"]

capabilities = ["pull","resolve","push"]

[host."https://registry.docker-cn.com"]

capabilities = ["pull","resolve","push"]

[host."https://hub.uuuadc.top"]

capabilities = ["pull","resolve","push"]

[host."https://docker.anyhub.us.kg"]

capabilities = ["pull","resolve","push"]

[host."https://dockerhub.jobcher.com"]

capabilities = ["pull","resolve","push"]

[host."https://dockerhub.icu"]

capabilities = ["pull","resolve","push"]

[host."https://docker.ckyl.me"]

capabilities = ["pull","resolve","push"]

[host."https://docker.awsl9527.cn"]

capabilities = ["pull","resolve","push"]

[host."https://mirror.baidubce.com"]

capabilities = ["pull","resolve","push"]

[host."https://docker.1panel.live"]

capabilities = ["pull","resolve","push"]

Restart containerd so that the new configuration takes effect

bash

[root@master ~ 09:53:29]# systemctl enable containerd

[root@master ~ 09:54:11]# systemctl restart containerd

[root@master ~ 09:54:11]# systemctl status containerd

Test

bash

[root@master ~ 09:57:57]# ctr images pull docker.io/library/httpd:latest --hosts-dir=/etc/containerd/certs.d

[root@master ~ 09:57:57]# ctr i ls

REF TYPE DIGEST SIZE PLATFORMS LABELS

docker.io/library/httpd:latest application/vnd.oci.image.index.v1+json sha256:360c5ad356d5f5e649186914b4c12b54e13bfd6aa3baed2cd972fe5a6f8c45d6 43.1 MiB linux/386,linux/amd64,linux/arm/v5,linux/arm/v7,linux/arm64/v8,linux/ppc64le,linux/riscv64,linux/s390x,unknown/unknown -

Install kubeadm

Add the Kubernetes repository

bash

[root@master ~ 10:00:04]# cat <<EOF>/etc/yum.repos.d/kubernetes.repo

> [kubernetes]

> name=Kubernetes

> baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

> http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

Build the yum cache

bash

[root@master ~ 10:00:32]# yum makecache fast

List the available Kubernetes versions

bash

[root@master ~ 10:01:12]# yum list kubectl --showduplicates | sort -r

Install a specific Kubernetes version

bash

[root@master ~ 10:01:49]# yum -y install kubectl-1.28.0 kubelet-1.28.0 kubeadm-1.28.0

Set the kubelet cgroup driver to match the containerd cgroup driver
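Before pointing the kubelet at the systemd driver, it is worth confirming that the earlier containerd change actually took effect. A minimal sketch follows; the helper name `containerd_uses_systemd_cgroup` is hypothetical.

```shell
# Hypothetical helper: succeed if the containerd config sets SystemdCgroup = true.
containerd_uses_systemd_cgroup() {
    local file="${1:-/etc/containerd/config.toml}"
    grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$file"
}

# Example (run on every node):
#   containerd_uses_systemd_cgroup && echo "containerd: systemd" || echo "containerd: not systemd"
```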

bash

[root@master ~ 10:06:05]# cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

EOF

Enable the kubelet at boot

bash

[root@master ~ 10:12:43]# systemctl daemon-reload

[root@master ~ 10:13:03]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Configure the crictl tool

bash

[root@master ~ 10:13:03]# cat <<EOF | tee /etc/crictl.yaml

> runtime-endpoint: unix:///run/containerd/containerd.sock

> image-endpoint: unix:///run/containerd/containerd.sock

> timeout: 10

> debug: false

> EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

Deploy Kubernetes on the master node

bash

# List the images required by the cluster

[root@master ~ 10:13:28]# kubeadm config images list --kubernetes-version=v1.28.0 \

> --image-repository=registry.aliyuncs.com/google_containers

registry.aliyuncs.com/google_containers/kube-apiserver:v1.28.0

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.28.0

registry.aliyuncs.com/google_containers/kube-scheduler:v1.28.0

registry.aliyuncs.com/google_containers/kube-proxy:v1.28.0

registry.aliyuncs.com/google_containers/pause:3.9

registry.aliyuncs.com/google_containers/etcd:3.5.9-0

registry.aliyuncs.com/google_containers/coredns:v1.10.1

# Pull the images required by the cluster

[root@master ~ 10:16:48]# kubeadm config images pull --kubernetes-version=v1.28.0 --image-repository=registry.aliyuncs.com/google_containers

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.28.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.28.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.28.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.28.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.9-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.10.1

# List all images available to the cluster

[root@master ~ 10:24:47]# crictl images ls

IMAGE TAG IMAGE ID SIZE

registry.aliyuncs.com/google_containers/coredns v1.10.1 ead0a4a53df89 16.2MB

registry.aliyuncs.com/google_containers/etcd 3.5.9-0 73deb9a3f7025 103MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.28.0 bb5e0dde9054c 34.6MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.28.0 4be79c38a4bab 33.4MB

registry.aliyuncs.com/google_containers/kube-proxy v1.28.0 ea1030da44aa1 24.6MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.28.0 f6f496300a2ae 18.8MB

registry.aliyuncs.com/google_containers/pause 3.9 e6f1816883972 322kB

Enable IPVS automatically when initializing the cluster

bash

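# Create the cluster initialization config file

[root@master ~ 10:25:06]# kubeadm config print init-defaults > kubeadm-init.yaml

# Edit the cluster initialization config file (the leading numbers are line numbers in kubeadm-init.yaml)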

12 advertiseAddress: 192.168.88.144

15 criSocket: unix:///var/run/containerd/containerd.sock

17 name: master

18 taints:

19 - effect: NoSchedule

20 key: node-role.kubernetes.io/control-plane

32 imageRepository: registry.aliyuncs.com/google_containers

34 kubernetesVersion: 1.28.0

38 podSubnet: 10.244.0.0/16

40 --- # Change the kube-proxy mode; the default is iptables

41 apiVersion: kubeproxy.config.k8s.io/v1alpha1

42 kind: KubeProxyConfiguration

43 mode: ipvs

44 --- # Change the kubelet cgroup driver to systemd

45 apiVersion: kubelet.config.k8s.io/v1beta1

46 kind: KubeletConfiguration

47 cgroupDriver: systemd

Initialize the cluster

bash

[root@master ~ 10:45:43]# kubeadm init --config=kubeadm-init.yaml --upload-certs | tee kubeadm-init.log

[init] Using Kubernetes version: v1.28.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.96.0.1 192.168.88.144]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [192.168.88.144 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [192.168.88.144 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 6.002599 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

90f32becb379ade5e7430df401bb9b684a2943c0708c176647b4651787495cc2

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.88.144:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:050c87ea6ed190c69256edaa64c855f181e9310ac25f1a47802cb0ab1782888a

Configure the kubectl tool

bash

[root@master ~ 10:45:56]# mkdir -p $HOME/.kube

[root@master ~ 10:46:36]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~ 10:46:36]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Make it permanent (recommended)

[root@master ~ 10:46:36]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master ~ 10:47:17]# source ~/.bash_profile

Check the component status

bash

[root@master ~ 10:47:27]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy ok

Join the worker nodes to the cluster

node1

bash

[root@node1 ~ 10:49:49]# kubeadm join 192.168.88.144:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:050c87ea6ed190c69256edaa64c855f181e9310ac25f1a47802cb0ab1782888a

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

node2

bash

[root@node2 ~ 10:49:57]# kubeadm join 192.168.88.144:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:050c87ea6ed190c69256edaa64c855f181e9310ac25f1a47802cb0ab1782888a

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

master

bash

[root@master ~ 10:47:51]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 4m44s v1.28.0

node1 NotReady <none> 107s v1.28.0

node2 NotReady <none> 93s v1.28.0

Deploy the CNI network component

bash

# Download the Calico manifest

[root@master ~ 10:50:36]# wget --no-check-certificate https://docs.tigera.io/archive/v3.25/manifests/calico.yaml

--2026-01-14 10:50:56-- https://docs.tigera.io/archive/v3.25/manifests/calico.yaml

正在解析主机 docs.tigera.io (docs.tigera.io)... 13.215.239.219, 52.74.6.109, 2406:da18:b3d:e201::259, ...

正在连接 docs.tigera.io (docs.tigera.io)|13.215.239.219|:443... 已连接。

已发出 HTTP 请求,正在等待回应... 200 OK

长度:238089 (233K) [text/yaml]

正在保存至: "calico.yaml"

100%[============================================>] 238,089 136KB/s 用时 1.7s

2026-01-14 10:51:00 (136 KB/s) - 已保存 "calico.yaml" [238089/238089])

# Modify the Calico manifest

[root@master ~ 10:51:00]# vim calico.yaml

# Find line 4601, uncomment it, and set the value to the Pod subnet

4601 - name: CALICO_IPV4POOL_CIDR

4602 value: "10.244.0.0/16"

Deploy Calico

bash

[root@master ~ 10:52:00]# kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

Check the status of all Pods
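Rather than re-running the command by hand until everything is up, the output can be counted in a loop. This is a minimal sketch: the helper name `count_not_running` is hypothetical, and it looks only at the STATUS column, so a Pod shown as Running but not yet ready (for example 0/1) still counts as running.

```shell
# Hypothetical helper: read `kubectl get pods -A` output on stdin and
# print how many Pods have a STATUS other than "Running".
count_not_running() {
    # With -A the columns are NAMESPACE NAME READY STATUS ..., so STATUS is field 4.
    awk 'NR > 1 && $4 != "Running" { n++ } END { print n + 0 }'
}

# Example (run on the master node):
#   until [ "$(kubectl get pods -A | count_not_running)" -eq 0 ]; do
#       sleep 10
#   done
```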

bash

[root@master ~ 10:52:25]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-658d97c59c-b6824 0/1 Pending 0 39s

kube-system calico-node-pb9pk 0/1 Init:ImagePullBackOff 0 39s

kube-system calico-node-tq7g5 0/1 Init:ImagePullBackOff 0 39s

kube-system calico-node-vkzj6 0/1 Init:ImagePullBackOff 0 39s

kube-system coredns-66f779496c-drbws 0/1 Pending 0 6m53s

kube-system coredns-66f779496c-jq2zk 0/1 Pending 0 6m53s

kube-system etcd-master 1/1 Running 0 7m8s

kube-system kube-apiserver-master 1/1 Running 0 7m8s

kube-system kube-controller-manager-master 1/1 Running 0 7m8s

kube-system kube-proxy-5x56q 1/1 Running 1 4m15s

kube-system kube-proxy-dvlww 1/1 Running 1 4m1s

kube-system kube-proxy-zcjm9 1/1 Running 0 6m54s

kube-system kube-scheduler-master 1/1 Running 0 7m10s

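# Wait until every Pod is Running

Chapter 3 Kubernetes Basics

Part 1: The kubectl client command

1: Command help

Cluster management tasks can be carried out with the kubectl command.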

bash

[root@master ~ 13:44:16]# kubectl -h

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/

Basic Commands (Beginner):

create Create a resource from a file or from stdin

expose Take a replication controller, service, deployment or pod and

expose it as a new Kubernetes service

run Run a particular image on the cluster

set Set specific features on objects

Basic Commands (Intermediate):

explain Get documentation for a resource

get Display one or many resources

edit Edit a resource on the server

delete Delete resources by file names, stdin, resources and names, or

by resources and label selector

Deploy Commands:

rollout Manage the rollout of a resource

scale Set a new size for a deployment, replica set, or replication

controller

autoscale Auto-scale a deployment, replica set, stateful set, or

replication controller

Cluster Management Commands:

certificate Modify certificate resources

cluster-info Display cluster information

top Display resource (CPU/memory) usage

cordon Mark node as unschedulable

uncordon Mark node as schedulable

drain Drain node in preparation for maintenance

taint Update the taints on one or more nodes

Troubleshooting and Debugging Commands:

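describe Show details of a specific resource or group of resources

logs Print the logs for a container in a pod

attach Attach to a running container

exec Execute a command in a container

port-forward Forward one or more local ports to a pod

proxy Run a proxy to the Kubernetes API server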

cp Copy files and directories to and from containers

auth Inspect authorization

debug Create debugging sessions for troubleshooting workloads and

nodes

events List events

Advanced Commands:

diff Diff the live version against a would-be applied version

apply Apply a configuration to a resource by file name or stdin

patch Update fields of a resource

replace Replace a resource by file name or stdin

wait Experimental: Wait for a specific condition on one or many

resources

kustomize Build a kustomization target from a directory or URL

Settings Commands:

label Update the labels on a resource

annotate Update the annotations on a resource

completion Output shell completion code for the specified shell (bash,

zsh, fish, or powershell)

Other Commands:

api-resources Print the supported API resources on the server

api-versions Print the supported API versions on the server, in the form of

"group/version"

config Modify kubeconfig files

plugin Provides utilities for interacting with plugins

version Print the client and server version information

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all

commands).
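The help text above is organized into named groups (Basic, Deploy, Cluster Management, and so on). As a minimal sketch of navigating it, the hypothetical helper `help_section` below prints only the command names that belong to one group. It assumes that command lines in the real `kubectl -h` output are indented by two spaces and that wrapped description lines are indented further; the sample text is copied from the output above with that indentation restored by hand.

```shell
#!/bin/sh
# help_section GROUP_HEADER
# Reads "kubectl -h" style text on stdin and prints the command names
# listed under the given group header (hypothetical helper, not part of kubectl).
help_section() {
    awk -v section="$1" '
        $0 == section      { inside = 1; next }  # requested group starts here
        inside && /^[^ ]/  { inside = 0 }        # next unindented header ends it
        inside && /^  [^ ]/ { print $1 }         # two-space indent = command line
    '
}

# Sample input; the indentation is an assumption about the real layout.
sample='Basic Commands (Beginner):
  create          Create a resource from a file or from stdin
  expose          Take a replication controller, service, deployment or pod and
                  expose it as a new Kubernetes service

Deploy Commands:
  rollout         Manage the rollout of a resource
  scale           Set a new size for a deployment, replica set, or replication
                  controller
  autoscale       Auto-scale a deployment, replica set, stateful set, or
                  replication controller

Cluster Management Commands:
  certificate     Modify certificate resources'

printf '%s\n' "$sample" | help_section "Deploy Commands:"
```

On a machine with cluster access the same helper could be fed live output, for example `kubectl -h | help_section "Deploy Commands:"`, after which `kubectl rollout --help` shows the details of one of the listed commands.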

bash

[root@master ~ 13:38:58]# kubectl api-resources

NAME SHORTNAMES APIVERSION NAMESPACED KIND

bindings v1 true Binding

componentstatuses cs v1 false ComponentStatus

configmaps cm v1 true ConfigMap

endpoints ep v1 true Endpoints

events ev v1 true Event

limitranges limits v1 true LimitRange

namespaces ns v1 false Namespace

nodes no v1 false Node

persistentvolumeclaims pvc v1 true PersistentVolumeClaim

persistentvolumes pv v1 false PersistentVolume

pods po v1 true Pod

podtemplates v1 true PodTemplate

replicationcontrollers rc v1 true ReplicationController

resourcequotas quota v1 true ResourceQuota

secrets v1 true Secret

serviceaccounts sa v1 true ServiceAccount

services svc v1 true Service

mutatingwebhookconfigurations admissionregistration.k8s.io/v1 false MutatingWebhookConfiguration

validatingwebhookconfigurations admissionregistration.k8s.io/v1 false ValidatingWebhookConfiguration

customresourcedefinitions crd,crds apiextensions.k8s.io/v1 false CustomResourceDefinition

apiservices apiregistration.k8s.io/v1 false APIService

controllerrevisions apps/v1 true ControllerRevision

daemonsets ds apps/v1 true DaemonSet

deployments deploy apps/v1 true Deployment

replicasets rs apps/v1 true ReplicaSet

statefulsets sts apps/v1 true StatefulSet

selfsubjectreviews authentication.k8s.io/v1 false SelfSubjectReview

tokenreviews authentication.k8s.io/v1 false TokenReview

localsubjectaccessreviews authorization.k8s.io/v1 true LocalSubjectAccessReview

selfsubjectaccessreviews authorization.k8s.io/v1 false SelfSubjectAccessReview

selfsubjectrulesreviews authorization.k8s.io/v1 false SelfSubjectRulesReview

subjectaccessreviews authorization.k8s.io/v1 false SubjectAccessReview

horizontalpodautoscalers hpa autoscaling/v2 true HorizontalPodAutoscaler

cronjobs cj batch/v1 true CronJob

jobs batch/v1 true Job

certificatesigningrequests csr certificates.k8s.io/v1 false CertificateSigningRequest

leases coordination.k8s.io/v1 true Lease

bgpconfigurations crd.projectcalico.org/v1 false BGPConfiguration

bgppeers crd.projectcalico.org/v1 false BGPPeer

blockaffinities crd.projectcalico.org/v1 false BlockAffinity

caliconodestatuses crd.projectcalico.org/v1 false CalicoNodeStatus

clusterinformations crd.projectcalico.org/v1 false ClusterInformation

felixconfigurations crd.projectcalico.org/v1 false FelixConfiguration

globalnetworkpolicies crd.projectcalico.org/v1 false GlobalNetworkPolicy

globalnetworksets crd.projectcalico.org/v1 false GlobalNetworkSet

hostendpoints crd.projectcalico.org/v1 false HostEndpoint

ipamblocks crd.projectcalico.org/v1 false IPAMBlock

ipamconfigs crd.projectcalico.org/v1 false IPAMConfig

ipamhandles crd.projectcalico.org/v1 false IPAMHandle

ippools crd.projectcalico.org/v1 false IPPool

ipreservations crd.projectcalico.org/v1 false IPReservation

kubecontrollersconfigurations crd.projectcalico.org/v1 false KubeControllersConfiguration

networkpolicies crd.projectcalico.org/v1 true NetworkPolicy

networksets crd.projectcalico.org/v1 true NetworkSet

endpointslices discovery.k8s.io/v1 true EndpointSlice

events ev events.k8s.io/v1 true Event

flowschemas flowcontrol.apiserver.k8s.io/v1beta3 false FlowSchema

prioritylevelconfigurations flowcontrol.apiserver.k8s.io/v1beta3 false PriorityLevelConfiguration

ingressclasses networking.k8s.io/v1 false IngressClass

ingresses ing networking.k8s.io/v1 true Ingress

networkpolicies netpol networking.k8s.io/v1 true NetworkPolicy

runtimeclasses node.k8s.io/v1 false RuntimeClass

poddisruptionbudgets pdb policy/v1 true PodDisruptionBudget

clusterrolebindings rbac.authorization.k8s.io/v1 false ClusterRoleBinding

clusterroles rbac.authorization.k8s.io/v1 false ClusterRole

rolebindings rbac.authorization.k8s.io/v1 true RoleBinding

roles rbac.authorization.k8s.io/v1 true Role

priorityclasses pc scheduling.k8s.io/v1 false PriorityClass

csidrivers storage.k8s.io/v1 false CSIDriver

csinodes storage.k8s.io/v1 false CSINode

csistoragecapacities storage.k8s.io/v1 true CSIStorageCapacity

storageclasses sc storage.k8s.io/v1 false StorageClass

volumeattachments storage.k8s.io/v1 false VolumeAttachment

Command syntax:

bash

kubectl <command> <resource type> <resource name> [flags...]

2: Command details

Basic commands

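| Command | Description |
|---|---|
| create | Create a resource from a file or from standard input |
| expose | Expose a resource as a new Service |
| run | Run a particular image in the cluster |
| set | Set specific features on objects |
| get | Display one or more resources |
| explain | Show documentation for a resource and its fields |
| edit | Edit a resource using the default editor |
| delete | Delete resources by file name, standard input, resource name, or label selector |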
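The basic commands in the table can be strung together following the general pattern of command, resource type, resource name, and flags. The sketch below is only an illustration: the deployment name `web`, the pod name `probe`, and the images `nginx`, `nginx:1.25`, and `busybox` are assumptions that do not come from this cluster. The hypothetical `run` wrapper prints each command and executes it only when `EXECUTE=1` is set, so the script can be read through on a machine without cluster access.

```shell
#!/bin/sh
# Print every command; execute it only when EXECUTE=1 is set in the environment.
run() {
    echo "+ $*"
    if [ "${EXECUTE:-0}" = "1" ]; then
        "$@"
    fi
}

# create: make a Deployment named "web" (name and image are assumptions)
run kubectl create deployment web --image=nginx
# get: display the Deployment that was just created
run kubectl get deployment web -o wide
# explain: show the documentation of a single field
run kubectl explain deployment.spec.replicas
# set: change the image of the container named "nginx" inside the Deployment
run kubectl set image deployment/web nginx=nginx:1.25
# expose: publish the Deployment as a new Service on port 80
run kubectl expose deployment web --port=80
# run: start a standalone pod from an image
run kubectl run probe --image=busybox --restart=Never -- sleep 3600
# delete: clean up everything created above
run kubectl delete pod probe
run kubectl delete service web
run kubectl delete deployment web
```

`kubectl edit` is left out because it opens an interactive editor. To execute the commands against a real cluster, the script could be started with `EXECUTE=1` in the environment.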

bash

[root@master ~ 13:42:07]# kubectl api-versions

admissionregistration.k8s.io/v1

apiextensions.k8s.io/v1

apiregistration.k8s.io/v1

apps/v1

authentication.k8s.io/v1

authorization.k8s.io/v1

autoscaling/v1

autoscaling/v2

batch/v1

certificates.k8s.io/v1

coordination.k8s.io/v1

crd.projectcalico.org/v1

discovery.k8s.io/v1

events.k8s.io/v1

flowcontrol.apiserver.k8s.io/v1beta2

flowcontrol.apiserver.k8s.io/v1beta3

networking.k8s.io/v1

node.k8s.io/v1

policy/v1

rbac.authorization.k8s.io/v1

scheduling.k8s.io/v1

storage.k8s.io/v1

v1

[root@master ~ 13:43:15]# kubectl api-resources |grep pods

pods po v1 true Pod

[root@master ~ 13:43:44]# kubectl api-resources |grep deployment

deployments deploy apps/v1 true Deployment

[root@master ~ 14:16:46]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 3/3 3 3 169m

[root@master ~ 14:18:54]# kubectl explain deploy.spec

GROUP: apps

KIND: Deployment

VERSION: v1

FIELD: spec <DeploymentSpec>

DESCRIPTION:

Specification of the desired behavior of the Deployment.

DeploymentSpec is the specification of the desired behavior of the

Deployment.

FIELDS:

minReadySeconds <integer>

Minimum number of seconds for which a newly created pod should be ready

without any of its container crashing, for it to be considered available.

Defaults to 0 (pod will be considered available as soon as it is ready)

paused <boolean>

Indicates that the deployment is paused.

progressDeadlineSeconds <integer>

The maximum time in seconds for a deployment to make progress before it is

considered to be failed. The deployment controller will continue to process

failed deployments and a condition with a ProgressDeadlineExceeded reason

will be surfaced in the deployment status. Note that progress will not be

estimated during the time a deployment is paused. Defaults to 600s.

replicas <integer>

Number of desired pods. This is a pointer to distinguish between explicit

zero and not specified. Defaults to 1.

revisionHistoryLimit <integer>

The number of old ReplicaSets to retain to allow rollback. This is a pointer

to distinguish between explicit zero and not specified. Defaults to 10.

selector <LabelSelector> -required-

Label selector for pods. Existing ReplicaSets whose pods are selected by

this will be the ones affected by this deployment. It must match the pod

template's labels.

strategy <DeploymentStrategy>

The deployment strategy to use to replace existing pods with new ones.

template <PodTemplateSpec> -required-

Template describes the pods that will be created. The only allowed

template.spec.restartPolicy value is "Always".

[root@master ~ 14:17:47]# kubectl edit deploy nginx

[root@master ~ 14:22:06]# kubectl describe deploy nginx

Name: nginx

Namespace: default

CreationTimestamp: Wed, 14 Jan 2026 11:28:24 +0800

Labels: app=nginx

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=nginx