I. Introduction

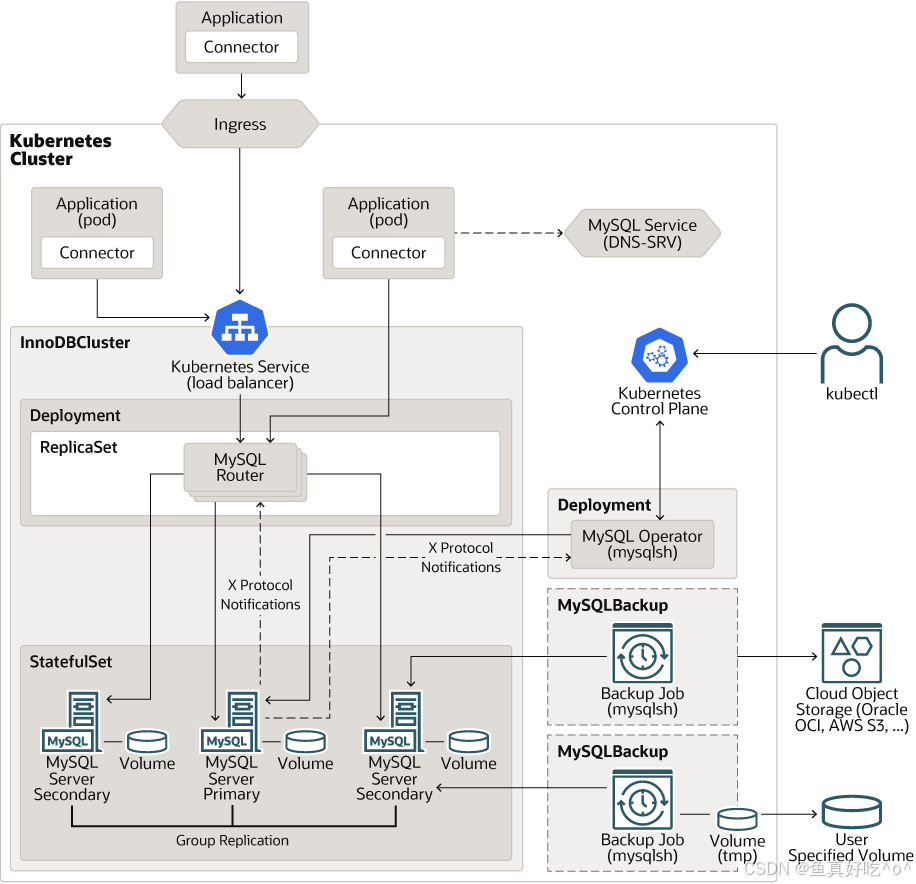

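MySQL Operator for Kubernetes is an operations tool in the Kubernetes ecosystem built specifically for MySQL. It is based on CRDs (Custom Resource Definitions) and aims to greatly simplify deploying, operating, and scaling MySQL InnoDB Cluster. Its logic and components break down into three layers: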

1. The Foundation

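Everything starts with Kubernetes' CRD extension mechanism: InnoDBCluster is a custom resource that gives a MySQL cluster a standardized configuration entry point. You write a YAML manifest for an InnoDBCluster resource (number of instances, configuration parameters, and so on) and submit it to the cluster, where it becomes the Operator's "instruction". All later creation and maintenance of the cluster is triggered by the Operator based on the state of this resource, which is what makes automated cluster management possible.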

2. Core Components

(1) MySQL Operator: the cluster's "automated caretaker"

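As the core executor, the Operator runs in the mysql-operator namespace (by default) as a Kubernetes Deployment, which restarts it if it fails. It continuously watches InnoDBCluster custom resources: when a resource is created, updated, or becomes unhealthy, it automatically performs the matching operations. These include creating the StatefulSet that manages the MySQL servers (binding storage volumes and configuring the pods), deploying MySQL Router, generating a ConfigMap (MySQL configuration) and Secrets (credentials), and configuring MySQL Group Replication for data synchronization, with no manual configuration required.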

(2) InnoDB Cluster: the core database entity

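InnoDBCluster is not only the name of the CRD but also the actual running MySQL cluster: a group of MySQL servers (one Primary and several Secondaries) kept in sync by the Group Replication that the Operator configures, which gives the cluster built-in high availability. It stores the data and serves application requests; the Operator maintains member state and replication so the cluster keeps running without a single point of failure.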

(3) MySQL Router: the smart gateway between applications and the cluster

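MySQL Router is a stateless routing component that the Operator deploys automatically (managed by a Kubernetes Deployment, so it can be scaled out and in). It sits between applications and the InnoDB Cluster. On one hand it gives applications a stable entry point (exposed through a Kubernetes Service) that hides changes in the cluster topology; on the other it routes traffic by port: connections to the read-write port go to the Primary, and connections to the read-only port are spread across the Secondaries. This keeps application connection settings simple while providing read/write splitting and load balancing.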
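Since routing is decided by which Router port a connection uses, it helps to know what each port is for. The sketch below is a hypothetical lookup (`router_port_role` is not part of any tool) for the ports that the Operator's `my-cluster` Service lists in section 2.3. 6446/6447 (classic protocol) and 6448/6449 (X protocol) are MySQL Router's long-standing defaults; describing 6450 as the read/write-splitting port and 8443 as the REST API port is an assumption based on newer Router bootstrap defaults, so check your own Router configuration.

```bash
# router_port_role: hypothetical helper that labels MySQL Router ports.
# 6446-6449 are long-standing Router defaults; the 6450 and 8443 labels
# are assumptions based on newer Router bootstrap defaults.
router_port_role() {
  case "$1" in
    6446) echo "classic protocol, read-write -> PRIMARY" ;;
    6447) echo "classic protocol, read-only -> SECONDARY" ;;
    6448) echo "X protocol, read-write -> PRIMARY" ;;
    6449) echo "X protocol, read-only -> SECONDARY" ;;
    6450) echo "classic protocol, read/write splitting (assumed)" ;;
    8443) echo "Router REST API over HTTPS (assumed)" ;;
    *) echo "unknown port: $1" >&2; return 1 ;;
  esac
}
```

For example, `router_port_role 6447` prints `classic protocol, read-only -> SECONDARY`, which is the port a backup or reporting job should use.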

3. Core Value

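With standardized configuration through the CRD and automated management by the Operator, developers can stand up a highly available MySQL cluster simply by defining an InnoDBCluster resource, without digging into low-level MySQL operations. MySQL Router's routing and InnoDB Cluster's replication keep application access stable (applications do not need to care about failovers) and allow scaling with the workload, which suits containerized, cloud-native Kubernetes environments well.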

MySQL Operator for Kubernetes architecture diagram

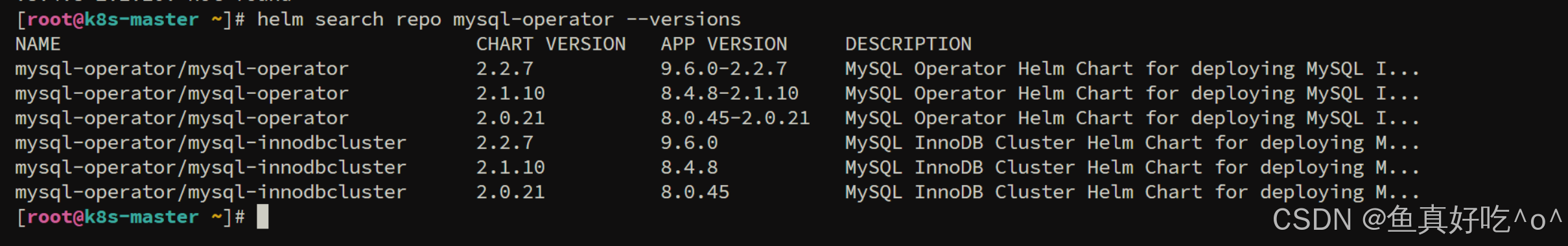

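This article deploys everything from plain resource manifests. I could not find an official Helm chart definition or the matching standard images, and I did not want to install the chart and then edit the image tags under values, so I simply used the manifests.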

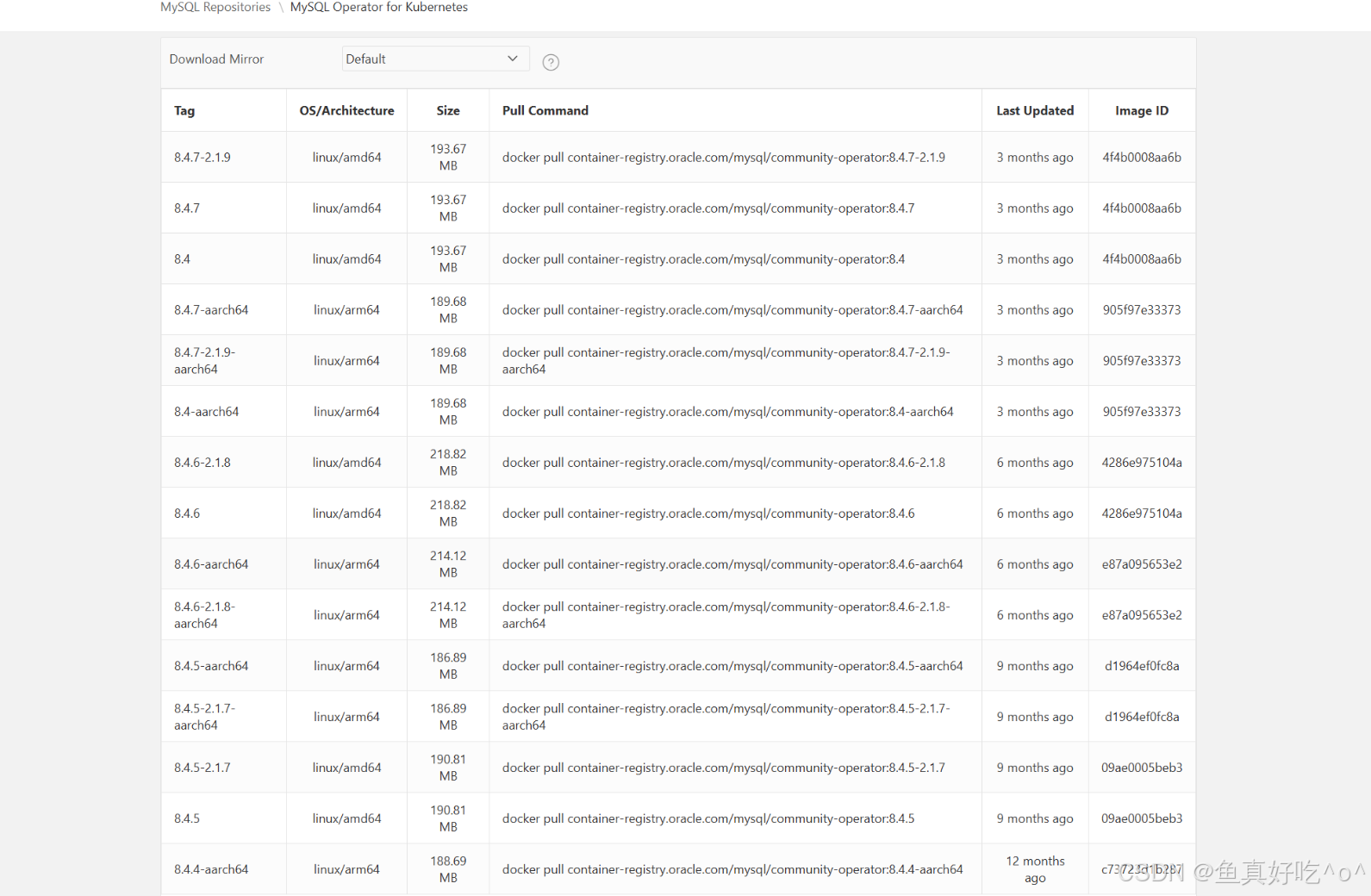

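For example, to deploy chart version 8.4.8-2.1.10 I wanted to pull the images in advance (the first time, I simply ran helm install and found that the images it pulled were tagged 8.4.8).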

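I logged in to Oracle Container Registry but could not find an 8.4.8-2.1.10 image, and I could not find the official Helm chart definition either. I did not want to download the 8.4.8 chart and change its images to 8.4.7, because I feared CRD incompatibilities, so I deployed the whole automated stack with 8.4.7 instead.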

Could the images live in a different registry? I have not spent long with this architecture; if you know, please explain.
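The tags used in this article follow a `<MySQL version>-<operator version>` pattern: the operator image is `community-operator:8.4.7-2.1.9`, while Router is tagged with the MySQL version alone (`community-router:8.4.7`). Below is a minimal sketch, assuming that pattern holds, which derives the matching image references from a single operator tag so every image is pulled at a consistent version. The function names are hypothetical.

```bash
# Hypothetical helpers, assuming operator tags look like <mysql-version>-<operator-version>.
REGISTRY="container-registry.oracle.com/mysql"

mysql_version_of() {       # 8.4.7-2.1.9 -> 8.4.7
  case "$1" in *-*) ;; *) return 1 ;; esac
  printf '%s\n' "${1%%-*}"
}

operator_version_of() {    # 8.4.7-2.1.9 -> 2.1.9
  case "$1" in *-*) ;; *) return 1 ;; esac
  printf '%s\n' "${1#*-}"
}

router_image_for() {       # Router images are tagged with the MySQL version only
  local v
  v="$(mysql_version_of "$1")" || return 1
  printf '%s/community-router:%s\n' "$REGISTRY" "$v"
}

# Usage sketch (after docker login):
# TAG=8.4.7-2.1.9
# docker pull "$REGISTRY/community-operator:$TAG"
# docker pull "$(router_image_for "$TAG")"
```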

II. Implementing the Architecture

All resource sizes in this article come from my lab environment; adjust them yourself for production.

Manifests used to install 8.4.7: mysql-operator/deploy at 8.4.7-2.1.9 · mysql/mysql-operator

2.1 Install the Operator and the CRDs

bash

# Use the raw.githubusercontent.com URLs: a github.com/.../blob/... URL returns an HTML page, not YAML
[root@k8s-master ~/mysql-operator]# kubectl apply -f https://raw.githubusercontent.com/mysql/mysql-operator/8.4.7-2.1.9/deploy/deploy-crds.yaml

[root@k8s-master ~/mysql-operator]# kubectl apply -f https://raw.githubusercontent.com/mysql/mysql-operator/8.4.7-2.1.9/deploy/deploy-operator.yaml

[root@k8s-master ~/mysql-operator]# kubectl -n mysql-operator get all -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/mysql-operator-6c49944b64-fmbwq 1/1 Running 0 18s 10.200.36.75 k8s-node1 <none> <none>

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/mysql-operator 1/1 1 1 18s mysql-operator container-registry.oracle.com/mysql/community-operator:8.4.7-2.1.9 name=mysql-operator

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/mysql-operator-6c49944b64 1 1 1 18s mysql-operator container-registry.oracle.com/mysql/community-operator:8.4.7-2.1.9 name=mysql-operator,pod-template-hash=6c49944b64

Bonus: how to pull the images

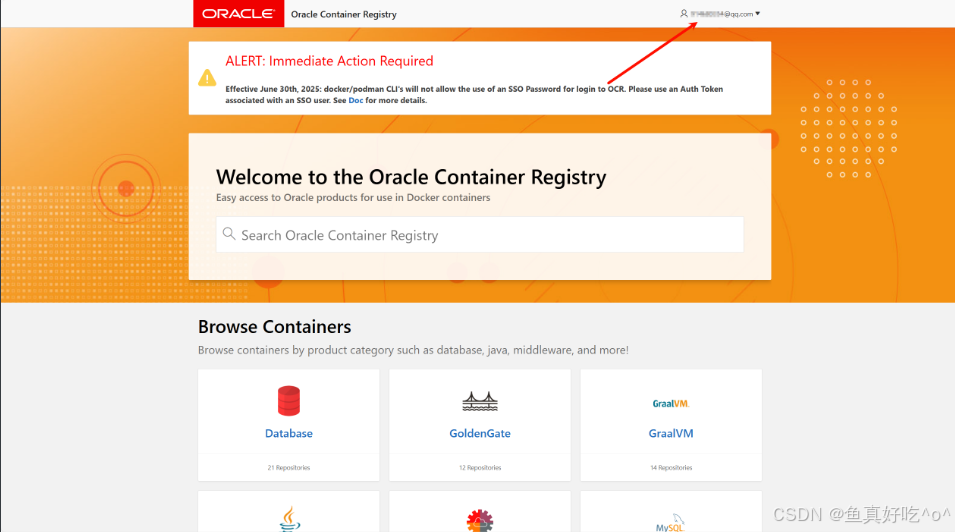

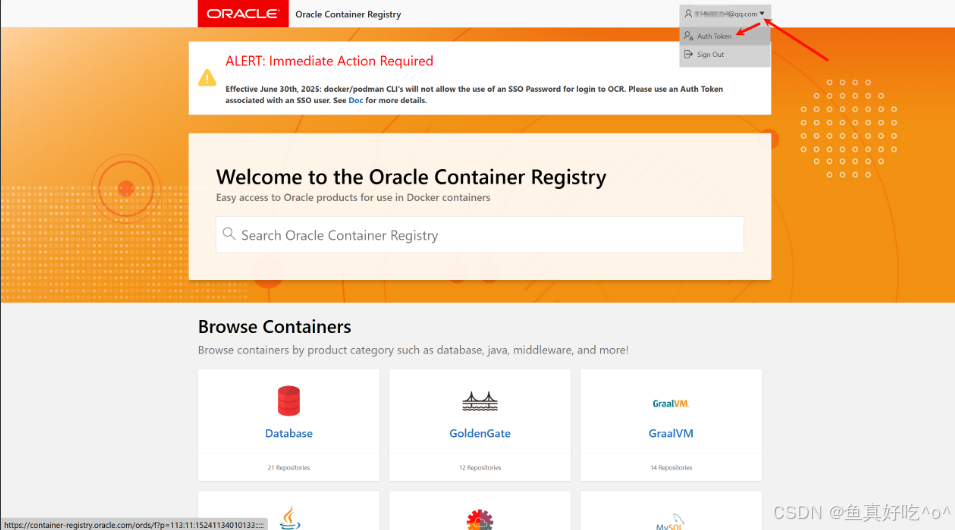

Log in to Oracle Container Registry.

After logging in, click Auth Token in the upper-right corner to create a new token,

then copy the generated token and keep it somewhere safe.

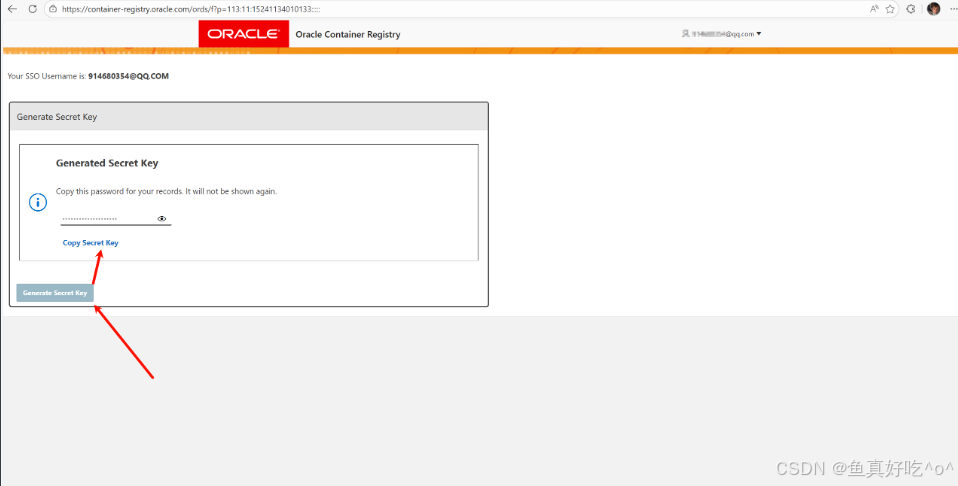

Be sure to copy your secret:

bash

<your-auth-token>

Log in to the registry with docker

bash

[root@k8s-master ~]# docker login container-registry.oracle.com

Username: 914XXXXX@qq.com

Password: # enter the generated token (secret) as the password

WARNING! Your credentials are stored unencrypted in '/root/.docker/config.json'.

Configure a credential helper to remove this warning. See

https://docs.docker.com/go/credential-store/

Login Succeeded

# Pull the images. Ideally every node in the K8s cluster should have them locally.

[root@k8s-master ~]# docker pull container-registry.oracle.com/mysql/community-operator:8.4.7-2.1.9

8.4.7-2.1.9: Pulling from mysql/community-operator

034ed5741540: Pull complete

8850e9060076: Pull complete

72a19e97697b: Pull complete

cb8069ff2565: Pull complete

20b9802feff4: Pull complete

3290b0b94ed1: Pull complete

cea3ba72d0c2: Pull complete

Digest: sha256:f04a98bba815c33f649a802a1669f85ebe5ead4dcb54264babd8749b70b056f2

Status: Downloaded newer image for container-registry.oracle.com/mysql/community-operator:8.4.7-2.1.9

container-registry.oracle.com/mysql/community-operator:8.4.7-2.1.9

[root@k8s-master ~]# docker pull container-registry.oracle.com/mysql/community-router:8.4.7

8.4.7: Pulling from mysql/community-router

63ce17d7402e: Pull complete

c1796c1f2872: Pull complete

b54c084a1ded: Pull complete

Digest: sha256:b1d63a7ee895db71d9c763085192a378958d2cc5bf3ec6a2a1feb9f21652d9ba

Status: Downloaded newer image for container-registry.oracle.com/mysql/community-router:8.4.7

container-registry.oracle.com/mysql/community-router:8.4.7

2.2 Deploy InnoDBCluster + Router

To create an InnoDB Cluster with kubectl, first create a Secret holding the credentials of the new MySQL root user. Here is an example Secret named "my-root-secret":

bash

[root@k8s-master ~/mysql-operator]# kubectl create ns mysql-cluster

namespace/mysql-cluster created

[root@k8s-master ~/mysql-operator]# kubectl create secret generic my-root-secret \

--from-literal=rootUser=root \

--from-literal=rootHost=% \

--from-literal=rootPassword='String@1307!' \

--namespace mysql-cluster

secret/my-root-secret created

Next, configure a new MySQL InnoDB Cluster that uses this user. The InnoDBCluster definition below creates three MySQL server instances and two MySQL Router instances:

bash

[root@k8s-master ~/mysql-operator/resource-yaml]# cat mysql-innodb-cluster.yaml
apiVersion: mysql.oracle.com/v2
kind: InnoDBCluster
metadata:
  name: my-cluster
  namespace: mysql-cluster
spec:
  version: "8.4.7"
  instances: 3
  secretName: my-root-secret
  edition: community
  tlsUseSelfSigned: true
  # --- 1. Backup profile (the key fix) ---
  backupProfiles:
  - name: nfs-profile
    dumpInstance:
      storage:
        # the full field name is required
        persistentVolumeClaim:
          claimName: mysql-backup-pvc
  # --- 2. MySQL parameter tuning (for a 4 GB RAM environment) ---
  mycnf: |
    [mysqld]
    # cap the buffer pool at 512M to leave headroom for the system
    innodb_buffer_pool_size=512M
    # enable performance_schema, which InnoDB Cluster monitoring relies on
    performance_schema=ON
    max_connections=150
    # deprecated since 8.0.30 in favor of innodb_redo_log_capacity
    innodb_log_file_size=128M
    # keep binary logs for 7 days
    binlog_expire_logs_seconds=604800
    # reduce pressure on the filesystem cache
    innodb_flush_method=O_DIRECT
  # --- 3. Resource quotas (QoS) ---
  # set requests/limits on the mysql container (same pattern as the router podSpec below)
  podSpec:
    containers:
    - name: mysql
      resources:
        limits:
          cpu: "500m"
          memory: "1.5Gi"
        requests:
          cpu: "100m"
          memory: "512Mi"
  # --- 4. Data storage ---
  datadirVolumeClaimTemplate:
    storageClassName: "nfs-csi"
    accessModes:
    - ReadWriteOnce
    resources:
      requests:
        storage: 10Gi
  # --- 5. Router for read/write splitting ---
  router:
    instances: 2
    podSpec:
      # schedule only onto nodes that carry this label
      nodeSelector:
        mysql-router: "true"
      containers:
      - name: router
        image: container-registry.oracle.com/mysql/community-router:8.4.7
        imagePullPolicy: IfNotPresent
        resources:
          requests:
            cpu: "100m"
            memory: "128Mi"
          limits:
            cpu: "200m"
            memory: "256Mi"

Next, create the PVC that the backups will use:

bash

[root@k8s-master ~/mysql-operator/resource-yaml]# cat mysql-backup-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-backup-pvc
  namespace: mysql-cluster
spec:
  # make sure this is the correct NFS StorageClass name in your cluster
  storageClassName: "nfs-csi"
  accessModes:
  - ReadWriteMany # recommended: NFS usually supports ReadWriteMany, which makes browsing backups easy
  resources:
    requests:
      storage: 10Gi

Then create the resource:

bash

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl apply -f mysql-backup-pvc.yaml

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl get -f mysql-backup-pvc.yaml

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

mysql-backup-pvc Bound pvc-2c7017a9-75ef-4523-94c9-dfcf6a30b819 10Gi RWX nfs-csi <unset> 3m37s

Note: before creating the InnoDBCluster, label the two nodes that will run Router so its pods can be scheduled onto them.

bash

[root@k8s-master ~]# kubectl label nodes k8s-node1 mysql-router=true

node/k8s-node1 labeled

[root@k8s-master ~]# kubectl label nodes k8s-node2 mysql-router=true

node/k8s-node2 labeled

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl apply -f mysql-innodb-cluster.yaml

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl -n mysql-cluster get po,pv,pvc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/my-cluster-0 2/2 Running 0 4m37s 10.200.36.82 k8s-node1 <none> 2/2

pod/my-cluster-1 2/2 Running 0 4m37s 10.200.36.81 k8s-node1 <none> 2/2

pod/my-cluster-2 2/2 Running 0 4m37s 10.200.169.167 k8s-node2 <none> 2/2

pod/my-cluster-router-67fd998b7-6m865 1/1 Running 0 2m39s 10.200.36.83 k8s-node1 <none> <none>

pod/my-cluster-router-67fd998b7-xrrx7 1/1 Running 0 2m39s 10.200.169.168 k8s-node2 <none> <none>

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE VOLUMEMODE

persistentvolume/pvc-2c7017a9-75ef-4523-94c9-dfcf6a30b819 10Gi RWX Delete Bound mysql-cluster/mysql-backup-pvc nfs-csi <unset> 4m50s Filesystem

persistentvolume/pvc-4df4cd5a-35c8-40de-a500-922ebfd8dc2d 10Gi RWO Delete Bound mysql-cluster/datadir-my-cluster-2 nfs-csi <unset> 4m36s Filesystem

persistentvolume/pvc-c0d0a765-00bd-4d4c-be94-2f2f79d7acc4 10Gi RWO Delete Bound mysql-cluster/datadir-my-cluster-1 nfs-csi <unset> 4m36s Filesystem

persistentvolume/pvc-e5d7e917-d24e-4835-9798-03de85a6c054 10Gi RWO Delete Bound mysql-cluster/datadir-my-cluster-0 nfs-csi <unset> 4m37s Filesystem

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE VOLUMEMODE

persistentvolumeclaim/datadir-my-cluster-0 Bound pvc-e5d7e917-d24e-4835-9798-03de85a6c054 10Gi RWO nfs-csi <unset> 4m37s Filesystem

persistentvolumeclaim/datadir-my-cluster-1 Bound pvc-c0d0a765-00bd-4d4c-be94-2f2f79d7acc4 10Gi RWO nfs-csi <unset> 4m37s Filesystem

persistentvolumeclaim/datadir-my-cluster-2 Bound pvc-4df4cd5a-35c8-40de-a500-922ebfd8dc2d 10Gi RWO nfs-csi <unset> 4m37s Filesystem

persistentvolumeclaim/mysql-backup-pvc Bound pvc-2c7017a9-75ef-4523-94c9-dfcf6a30b819 10Gi RWX nfs-csi <unset> 4m50s Filesystem

# Make sure all three instances are ONLINE

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl -n mysql-cluster get innodbclusters.mysql.oracle.com

NAME STATUS ONLINE INSTANCES ROUTERS AGE

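my-cluster ONLINE 3 3 2 4m58s

Once the resources are created, you can check whether the data directory has been initialized (the path below is on the NFS server):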

bash

# Inspect the data directory

[root@k8s-master ~/mysql-operator/resource-yaml]# cd /data/nfs-server/pvc-4df4cd5a-35c8-40de-a500-922ebfd8dc2d/

[root@k8s-master /data/nfs-server/pvc-4df4cd5a-35c8-40de-a500-922ebfd8dc2d]# ll

total 135792

-rw-r----- 1 27 sudo 4194304 Jan 27 10:29 '#ib_16384_0.dblwr'

-rw-r----- 1 27 sudo 12582912 Jan 27 09:46 '#ib_16384_1.dblwr'

drwxr-s--- 2 27 sudo 4096 Jan 27 09:47 '#innodb_redo'/

drwxr-s--- 2 27 sudo 4096 Jan 27 09:47 '#innodb_temp'/

-rw-r----- 1 27 sudo 0 Jan 27 09:48 GCS_DEBUG_TRACE

-rw-r----- 1 27 sudo 56 Jan 27 09:46 auto.cnf

-rw------- 1 27 sudo 1705 Jan 27 09:46 ca-key.pem

-rw-r--r-- 1 27 sudo 1108 Jan 27 09:46 ca.pem

-rw-r--r-- 1 27 sudo 1108 Jan 27 09:46 client-cert.pem

-rw------- 1 27 sudo 1705 Jan 27 09:46 client-key.pem

-rw-r----- 1 27 sudo 5721 Jan 27 09:47 ib_buffer_pool

-rw-r----- 1 27 sudo 12582912 Jan 27 10:29 ibdata1

-rw-r----- 1 27 sudo 12582912 Jan 27 09:47 ibtmp1

-rw-r----- 1 27 sudo 236 Jan 27 09:48 my-cluster-2-relay-bin-group_replication_applier.000001

-rw-r----- 1 27 sudo 15400214 Jan 27 10:29 my-cluster-2-relay-bin-group_replication_applier.000002

-rw-r----- 1 27 sudo 116 Jan 27 09:48 my-cluster-2-relay-bin-group_replication_applier.index

-rw-r----- 1 27 sudo 158 Jan 27 09:48 my-cluster-2-relay-bin-group_replication_recovery.000001

-rw-r----- 1 27 sudo 59 Jan 27 09:48 my-cluster-2-relay-bin-group_replication_recovery.index

-rw-r----- 1 27 sudo 2 Jan 27 09:47 my-cluster-2.pid

-rw-r----- 1 27 sudo 181 Jan 27 09:46 my-cluster.000001

-rw-r----- 1 27 sudo 181 Jan 27 09:47 my-cluster.000002

-rw-r----- 1 27 sudo 15528012 Jan 27 10:29 my-cluster.000003

-rw-r----- 1 27 sudo 60 Jan 27 09:47 my-cluster.index

drwxr-s--- 2 27 sudo 4096 Jan 27 09:46 mysql/

-rw-r----- 1 27 sudo 32505856 Jan 27 10:29 mysql.ibd

drwxr-s--- 2 27 sudo 4096 Jan 27 09:48 mysql_innodb_cluster_metadata/

-rw-r----- 1 27 sudo 124 Jan 27 09:46 mysql_upgrade_history

-rw-r----- 1 27 sudo 1771 Jan 27 09:48 mysqld-auto.cnf

drwxr-s--- 2 27 sudo 4096 Jan 27 09:48 performance_schema/

-rw------- 1 27 sudo 1705 Jan 27 09:46 private_key.pem

-rw-r--r-- 1 27 sudo 452 Jan 27 09:46 public_key.pem

-rw-r--r-- 1 27 sudo 1108 Jan 27 09:46 server-cert.pem

-rw------- 1 27 sudo 1705 Jan 27 09:46 server-key.pem

drwxr-s--- 2 27 sudo 4096 Jan 27 09:46 sys/

-rw-r----- 1 27 sudo 16777216 Jan 27 10:29 undo_001

-rw-r----- 1 27 sudo 16777216 Jan 27 10:29 undo_002

2.3 Expose the Router

bash

# Create a NodePort Service for the Router so external GUI tools can connect to it directly
[root@k8s-master ~/mysql-operator/resource-yaml]# cat mysql-route-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-cluster-router-lb
  namespace: mysql-cluster
  labels:
    app.kubernetes.io/name: my-cluster-router-lb
spec:
  type: NodePort
  selector:
    app.kubernetes.io/component: router
    app.kubernetes.io/name: mysql-router
  ports:
  - name: mysql-rw
    port: 30306
    targetPort: 6446
    nodePort: 30306 # read-write port exposed externally
  - name: mysql-ro
    port: 30307
    targetPort: 6447
    nodePort: 30307 # read-only port exposed externally
  sessionAffinity: None

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl apply -f mysql-route-svc.yaml

service/my-cluster-router-lb created

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl -n mysql-cluster get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

my-cluster ClusterIP 10.110.26.7 <none> 3306/TCP,33060/TCP,6446/TCP,6448/TCP,6447/TCP,6449/TCP,6450/TCP,8443/TCP 13m

my-cluster-instances ClusterIP None <none> 3306/TCP,33060/TCP,33061/TCP 13m

my-cluster-router-lb NodePort 10.102.39.114 <none> 30306:30306/TCP,30307:30307/TCP 7s

Test the connection:

bash

[root@k8s-master ~/mysql-operator/resource-yaml]# telnet 10.0.0.6 30306

Trying 10.0.0.6...

Connected to 10.0.0.6.

Escape character is '^]'.

P

8.4.7-router ... caching_sha2_password^]

telnet> quit

Connection closed.

[root@k8s-master ~/mysql-operator/resource-yaml]# telnet 10.0.0.6 30307

Trying 10.0.0.6...

Connected to 10.0.0.6.

Escape character is '^]'.

P

8.4.7-router ... caching_sha2_password^]

telnet> quit

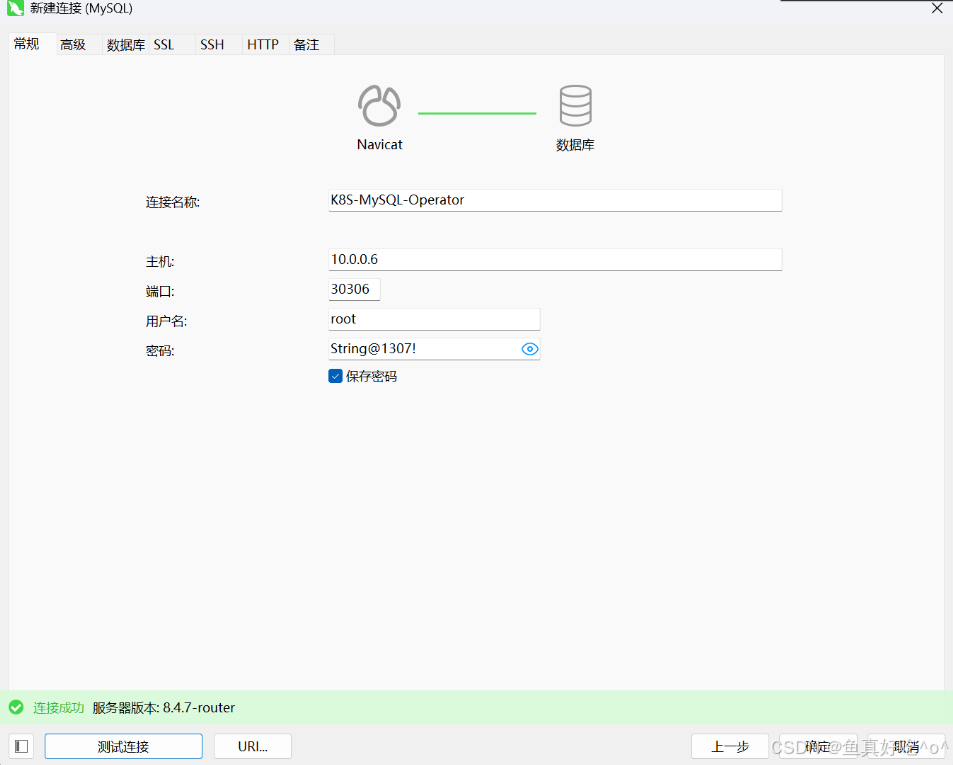

Connection closed.也可以直接使用Navicat进行连接
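The odd characters in the telnet output are the server's initial handshake packet. In the MySQL protocol a 4-byte header (3-byte payload length plus a sequence number) precedes the payload, which begins with the protocol version byte 0x0a (a newline, which is why the version string starts on a new line) followed by the NUL-terminated server version; the leading `P` is most likely just the low byte of the payload length. A minimal sketch, assuming GNU coreutils, that extracts the version string from a captured greeting; `mysql_banner_version` is a hypothetical name.

```bash
# mysql_banner_version: read a raw MySQL server greeting on stdin and print the
# server version string. Assumed layout: 4-byte packet header, 1-byte protocol
# version (0x0a), then the NUL-terminated server version.
mysql_banner_version() {
  # skip the 5 leading bytes, then keep everything up to the first NUL byte
  tail -c +6 | tr '\0' '\n' | head -n 1
}

# Usage sketch: capture about 2 seconds of the greeting from the RW NodePort.
# timeout 2 bash -c 'cat < /dev/tcp/10.0.0.6/30306' > /tmp/greeting.bin
# mysql_banner_version < /tmp/greeting.bin    # should print the Router version string
```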

III. Backup

3.1 Verify backups (dumpInstance)

bash

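[root@k8s-master ~/mysql-operator/resource-yaml]# cat test-backup.yaml
apiVersion: mysql.oracle.com/v2
kind: MySQLBackup
metadata:
  name: test-backup-01
  namespace: mysql-cluster
spec:
  clusterName: my-cluster
  # references the profile defined under backupProfiles in mysql-innodb-cluster.yaml
  backupProfileName: nfs-profile

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl apply -f test-backup.yaml

# Check the backup status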

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl -n mysql-cluster get mysqlbackups.mysql.oracle.com -w

NAME CLUSTER STATUS OUTPUT AGE

test-backup-01 my-cluster 6s

test-backup-01 my-cluster Running test-backup-01-20260127-022008 14s

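test-backup-01 my-cluster Completed test-backup-01-20260127-022008 16s

Find the backup directory. To avoid losing backup data, MySQL Operator creates a unique directory for every backup, named {backup name}-{YYYYMMDD}-{HHMMSS} (here test-backup-01-20260127-022008). The timestamp appears to be in UTC: 02:20:08 in the name versus 10:20 local time in the file listing below.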
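Because the directory names share a fixed-width {name}-{YYYYMMDD}-{HHMMSS} suffix, sorting them by name also sorts them by time. A minimal sketch, assuming the backup PVC is visible as a local directory (as it is on the NFS server here); `latest_backup_dir` is a hypothetical helper, handy when you need the dump folder name for a restore.

```bash
# latest_backup_dir ROOT NAME: print the newest backup folder for backup NAME
# under ROOT, relying on the {name}-{YYYYMMDD}-{HHMMSS} naming scheme.
latest_backup_dir() {
  local root="$1" name="$2" dir latest=""
  # Glob results are sorted by name; with a fixed-width timestamp that is chronological.
  for dir in "$root/$name"-[0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]-[0-9][0-9][0-9][0-9][0-9][0-9]; do
    [ -d "$dir" ] || continue   # an unmatched glob stays literal and is skipped here
    latest="$(basename "$dir")"
  done
  [ -n "$latest" ] || return 1
  printf '%s\n' "$latest"
}

# Usage sketch:
# latest_backup_dir /data/nfs-server/pvc-2c7017a9-75ef-4523-94c9-dfcf6a30b819 test-backup-01
```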

bash

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl get pv -n mysql-cluster

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE

pvc-2c7017a9-75ef-4523-94c9-dfcf6a30b819 10Gi RWX Delete Bound mysql-cluster/mysql-backup-pvc nfs-csi <unset> 35m

pvc-4df4cd5a-35c8-40de-a500-922ebfd8dc2d 10Gi RWO Delete Bound mysql-cluster/datadir-my-cluster-2 nfs-csi <unset> 34m

pvc-c0d0a765-00bd-4d4c-be94-2f2f79d7acc4 10Gi RWO Delete Bound mysql-cluster/datadir-my-cluster-1 nfs-csi <unset> 34m

pvc-e5d7e917-d24e-4835-9798-03de85a6c054 10Gi RWO Delete Bound mysql-cluster/datadir-my-cluster-0 nfs-csi <unset> 34m

[root@k8s-master ~/mysql-operator/resource-yaml]# cd /data/nfs-server/pvc-2c7017a9-75ef-4523-94c9-dfcf6a30b819/test-backup-01-20260127-022008/

[root@k8s-master /data/nfs-server/pvc-2c7017a9-75ef-4523-94c9-dfcf6a30b819/test-backup-01-20260127-022008]# ll

total 308

-rw-r----- 1 27 sudo 1504 Jan 27 10:20 @.done.json

-rw-r----- 1 27 sudo 1352 Jan 27 10:20 @.json

-rw-r----- 1 27 sudo 293 Jan 27 10:20 @.post.sql

-rw-r----- 1 27 sudo 293 Jan 27 10:20 @.sql

-rw-r----- 1 27 sudo 10021 Jan 27 10:20 @.users.sql

-rw-r----- 1 27 sudo 2937 Jan 27 10:20 mysql_innodb_cluster_metadata.json

-rw-r----- 1 27 sudo 25611 Jan 27 10:20 mysql_innodb_cluster_metadata.sql

-rw-r----- 1 27 sudo 819 Jan 27 10:20 mysql_innodb_cluster_metadata@async_cluster_members.json

-rw-r----- 1 27 sudo 1271 Jan 27 10:20 mysql_innodb_cluster_metadata@async_cluster_members.sql

-rw-r----- 1 27 sudo 9 Jan 27 10:20 mysql_innodb_cluster_metadata@async_cluster_members@@0.tsv.zst

-rw-r----- 1 27 sudo 8 Jan 27 10:20 mysql_innodb_cluster_metadata@async_cluster_members@@0.tsv.zst.idx

-rw-r----- 1 27 sudo 830 Jan 27 10:20 mysql_innodb_cluster_metadata@async_cluster_views.json

-rw-r----- 1 27 sudo 1235 Jan 27 10:20 mysql_innodb_cluster_metadata@async_cluster_views.sql

-rw-r----- 1 27 sudo 9 Jan 27 10:20 mysql_innodb_cluster_metadata@async_cluster_views@@0.tsv.zst

-rw-r----- 1 27 sudo 8 Jan 27 10:20

......

The backup works, so now switch the configuration to scheduled backups; apply the file again for the change to take effect.

bash

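# Change the backup configuration in mysql-innodb-cluster.yaml
...
  backupProfiles:
  - name: nfs-profile
    # backup type (dumpInstance = logical backup)
    dumpInstance:
      storage:
        # bind the PVC created earlier (the full name persistentVolumeClaim is required)
        persistentVolumeClaim:
          claimName: mysql-backup-pvc
  # New: scheduled backups are declared under backupSchedules and reference the profile by name
  backupSchedules:
  - name: nfs-daily              # schedule name (free to choose)
    schedule: "0 2 * * *"        # cron expression: every day at 02:00, which may be UTC (see the backup folder names)
    backupProfileName: nfs-profile
    enabled: true
...

Note: as far as I can tell, the Operator does not read a schedule or retention key inside backupProfiles; schedules belong under spec.backupSchedules as shown, and old backup folders (for example anything older than 7 days) have to be cleaned up separately, such as with a cron job on the NFS server.

3.2 Restore design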

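The officially recommended way to restore: create a brand-new InnoDBCluster and tell it at creation time to "initialize yourself from this backup". The key field is spec.initDB.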

bash

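apiVersion: mysql.oracle.com/v2
kind: InnoDBCluster
metadata:
  name: my-cluster-restored # [Note] must be a new name that does not clash with the old cluster
  namespace: mysql-cluster
spec:
  version: "8.4.7"
  instances: 3
  router:
    instances: 1
  secretName: my-root-secret
  # --- core restore settings ---
  initDB:
    dump:
      name: nfs-backup-final-20260126-xxx # the name of the specific folder under your NFS directory
      storage:
        persistentVolumeClaim:
          claimName: mysql-backup-pvc # the PVC that holds the backups

Depending on the Operator version, the folder inside the PVC may have to be given through a different field (such as path) rather than name; kubectl explain innodbcluster.spec.initDB.dump shows which fields your installed CRD accepts.

Why is this approach better?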

Safety: the old cluster (the "crime scene") is preserved; if the restore fails, you can still try to rescue data from it.

Atomicity: the new cluster either comes up fully restored or fails to start; you never end up with a half-restored dataset.
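To avoid hand-editing the restore manifest every time, it can be generated from the backup folder name. This is a minimal sketch that mirrors the fields of the manifest above (namespace mysql-cluster, secret my-root-secret, PVC mysql-backup-pvc); `render_restore_manifest` is hypothetical, and if your Operator version expects the folder under a different field (check with `kubectl explain`), adjust the template. Following the note in the manifest, it refuses to reuse the old cluster name my-cluster.

```bash
# render_restore_manifest NEW_CLUSTER DUMP_DIR [PVC]
# Hypothetical helper: print an InnoDBCluster manifest that initializes a new
# cluster from a dump folder stored on the backup PVC.
render_restore_manifest() {
  local cluster="$1" dump_dir="$2" pvc="${3:-mysql-backup-pvc}"
  if [ -z "$cluster" ] || [ -z "$dump_dir" ]; then
    echo "usage: render_restore_manifest NEW_CLUSTER DUMP_DIR [PVC]" >&2
    return 1
  fi
  # The restored cluster must not reuse the name of the existing one.
  if [ "$cluster" = "my-cluster" ]; then
    echo "error: choose a name other than my-cluster" >&2
    return 1
  fi
  cat <<EOF
apiVersion: mysql.oracle.com/v2
kind: InnoDBCluster
metadata:
  name: ${cluster}
  namespace: mysql-cluster
spec:
  version: "8.4.7"
  instances: 3
  router:
    instances: 1
  secretName: my-root-secret
  initDB:
    dump:
      name: ${dump_dir}
      storage:
        persistentVolumeClaim:
          claimName: ${pvc}
EOF
}

# Usage sketch:
# render_restore_manifest my-cluster-restored test-backup-01-20260127-022008 | kubectl apply -f -
```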

3.3 Restore with MySQL Shell (loadDump)

MySQL :: MySQL Shell 8.4 :: 3.1 MySQL Shell Commands

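If you do not want to rename the cluster (for example because the connection address is hard-coded in application code), or if the initDB approach fails (the Operator's handling of PVC mount paths can be buggy at times), the most dependable method, and the one least sensitive to version differences, is a manual import with MySQL Shell.

Clean up old data (use with caution):

If you are sure the old data is no longer needed, drop the databases directly or recreate the PVC, then restore from the backup on the NFS server.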

bash

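# loadDump requires local_infile=ON on the target server (e.g. SET GLOBAL local_infile = ON;),
# and the dump directory must be readable from the machine running mysqlsh.
mysqlsh -u root -p'String@1307!' -h 127.0.0.1 -P 30306 -- util load-dump "/data/backup/nfs-backup-final-xxx" \
  --threads=4 \
  --resetProgress

3.4 Restore from OCI Object Storage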

In MySQL Operator (8.4+), restoring from OCI Object Storage and restoring from a local PVC differ not only in how you operate them but also in how they are implemented.

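| Aspect | OCI Object Storage | PersistentVolumeClaim (your NFS/PV) |
|---|---|---|
| Technical path | True "one-click restore" | "Initialization" or "manual import" |
| Operator behavior | The Operator downloads, decompresses, and streams the import automatically | The Operator generally only supports mounting it at first startup |
| Flexibility | Can be "pulled" from the cloud into any new cluster | Depends on mounting a physical volume; paths and permissions are rigid |
| Config field | The official docs mainly feature initDB.dump.storage.ociObjectStorage | The official docs say little about initDB.dump.storage.persistentVolumeClaim |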

Oracle's logic is this: if you use their cloud (OCI), it can automate things for you very thoroughly.

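- For OCI: the backup can be pulled cleanly, either while the InnoDBCluster is running through some trigger mechanism, or through initDB.
- For PVC: the Operator treats it more as a **"snapshot"** or an **"export directory"**. As you have seen, version 8.4 offers no explicit restore resource such as MySQLRestore.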

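The ociObjectStorage parameter is a first-party feature designed specifically for Oracle Cloud Infrastructure (OCI); other clouds do not get this treatment for now, but you can still store your backups in other object storage.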

3.5 Backups with traditional mysqldump and XtraBackup (XBK)

When backing up, always connect to port 30307 (the RO port): Router's read/write splitting then offloads the backup load onto a **Secondary**.

Option A: mysqldump (logical backup, the most reliable), suitable for datasets under 50 GB

bash

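# --source-data replaces --master-data, which has been deprecated since MySQL 8.0.26
mysqldump -u root -p'String@1307!' -h 127.0.0.1 -P 30307 \
  --all-databases \
  --single-transaction \
  --triggers --routines --events \
  --set-gtid-purged=ON \
  --source-data=2 \
  > /data/backup/full_$(date +%F).sql

Option B: XtraBackup (physical backup, the fastest). If your data exceeds 100 GB, restoring from mysqldump is too slow, so XBK is recommended. In K8s, however, XBK cannot back up over the network; it needs direct access to the data files.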

Recommended approach (sidecar pattern or CronJob):

- Create a temporary Pod that mounts the PVC of one Secondary (for example my-cluster-2), or
- use kubectl exec to enter a Secondary Pod and run:

bash

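# Run on a Secondary to avoid blocking I/O on the Primary.
# -c mysql selects the MySQL container (kubectl defaults to the sidecar container).
# Assumes an image that ships Percona XtraBackup; as far as I know the official
# community-server image does not include it. Prefer a target directory on a
# separate volume rather than inside the datadir.
kubectl exec -it my-cluster-2 -n mysql-cluster -c mysql -- xtrabackup --backup \
  --user=root --password='String@1307!' \
  --target-dir=/var/lib/mysql/backup/

A robust strategy that accounts for primary/secondary switchovers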

In K8s, Pods can move between nodes. To keep backup scripts working, automate them like this:

Logic:

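- Detect the Secondaries: check member state with the SQL query select * from performance_schema.replication_group_members.
- Target: connect through Router's port 30307. Even if a switchover happens, Router directs your backup connection to a current Secondary, so the script never needs an IP change.
- Regular cleanup: keep only the most recent 7 days of backups on NFS.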
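The first and last steps can be sketched as two small shell functions (both names are hypothetical). `pick_secondary` expects the tab-separated output of `mysql -N -B` for the replication_group_members query and prints the first ONLINE SECONDARY; it is useful when you need a concrete Pod, for example for `kubectl exec` with XtraBackup, since connections through port 30307 are already routed to a Secondary by Router. `prune_backups` assumes GNU find and deletes top-level backup folders older than N days.

```bash
# pick_secondary: read "MEMBER_HOST<TAB>MEMBER_ROLE<TAB>MEMBER_STATE" rows on stdin,
# print the first host that is an ONLINE SECONDARY, and fail if there is none.
pick_secondary() {
  awk -F'\t' '
    $2 == "SECONDARY" && $3 == "ONLINE" { print $1; found = 1; exit }
    END { exit !found }
  '
}

# prune_backups DIR [DAYS]: delete backup folders directly under DIR whose
# modification time is more than DAYS (default 7) whole days ago.
prune_backups() {
  local root="$1" days="${2:-7}"
  [ -d "$root" ] || return 1
  find "$root" -mindepth 1 -maxdepth 1 -type d -mtime +"$days" -exec rm -rf -- {} +
}

# Usage sketch (host, port, and paths as used in this article):
# mysql -N -B -u root -p'String@1307!' -h 127.0.0.1 -P 30307 -e \
#   "SELECT MEMBER_HOST, MEMBER_ROLE, MEMBER_STATE FROM performance_schema.replication_group_members" \
#   | pick_secondary
# prune_backups /data/backup 7
```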

Suggested backup schedule (crontab)

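| Frequency | Method | Location | Purpose |
|---|---|---|---|
| Hourly | Incremental / binlog backup | NFS | Shrink the data-loss window |
| Daily at 2 a.m. | Full mysqldump | NFS | Long-term archive; recovery from an accidentally dropped database |
| Weekly/monthly | Full XtraBackup | Offline storage | Recovery from catastrophic hardware failure |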

In one sentence: when using traditional tools in K8s, the key is "back up through Router's read-only port, restore through the read-write port".

IV. Verifying the Cluster State

4.1 Check the cluster status

bash

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl -n mysql-cluster exec -it my-cluster-0 -- mysqlsh

Defaulted container "sidecar" out of: sidecar, mysql, fixdatadir (init), initconf (init), initmysql (init)

MySQL Shell 8.4.7

Copyright (c) 2016, 2025, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates.

Other names may be trademarks of their respective owners.

Type '\help' or '\?' for help; '\quit' to exit.

# Connect to the local instance

MySQL SQL > \connect root@localhost

Creating a session to 'root@localhost'

Please provide the password for 'root@localhost': ************

Fetching global names for auto-completion... Press ^C to stop.

Your MySQL connection id is 17774 (X protocol)

Server version: 8.4.7 MySQL Community Server - GPL

No default schema selected; type \use <schema> to set one.

# Run a SQL statement

MySQL localhost:33060+ ssl SQL > show databases;

+-------------------------------+

| Database |

+-------------------------------+

| information_schema |

| mysql |

| mysql_innodb_cluster_metadata |

| performance_schema |

| sys |

+-------------------------------+

5 rows in set (0.0017 sec)

# Switch the language mode

MySQL localhost:33060+ ssl SQL > \js

Switching to JavaScript mode...

# Check the cluster status

MySQL localhost:33060+ ssl JS > dba.getCluster().status()

{

"clusterName": "my_cluster",

"defaultReplicaSet": {

"name": "default",

"primary": "my-cluster-0.my-cluster-instances.mysql-cluster.svc.cluster.local:3306",

"ssl": "REQUIRED",

"status": "OK",

"statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

"topology": {

"my-cluster-0.my-cluster-instances.mysql-cluster.svc.cluster.local:3306": {

"address": "my-cluster-0.my-cluster-instances.mysql-cluster.svc.cluster.local:3306",

"memberRole": "PRIMARY",

"mode": "R/W",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.4.7"

},

"my-cluster-1.my-cluster-instances.mysql-cluster.svc.cluster.local:3306": {

"address": "my-cluster-1.my-cluster-instances.mysql-cluster.svc.cluster.local:3306",

"memberRole": "SECONDARY",

"mode": "R/O",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.4.7"

},

"my-cluster-2.my-cluster-instances.mysql-cluster.svc.cluster.local:3306": {

"address": "my-cluster-2.my-cluster-instances.mysql-cluster.svc.cluster.local:3306",

"memberRole": "SECONDARY",

"mode": "R/O",

"readReplicas": {},

"replicationLag": "applier_queue_applied",

"role": "HA",

"status": "ONLINE",

"version": "8.4.7"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "my-cluster-0.my-cluster-instances.mysql-cluster.svc.cluster.local:3306"

}

MySQL localhost:33060+ ssl JS >

4.2 Test replication between the primary and the secondaries

bash

# Switch from JS to SQL mode

MySQL localhost:33060+ ssl JS > \sql

Switching to SQL mode... Commands end with ;

-- 1. Create the database

CREATE DATABASE sync_test;

-- 2. Switch to the database

USE sync_test;

-- 3. Create the table

CREATE TABLE users (

id INT AUTO_INCREMENT PRIMARY KEY,

name VARCHAR(50),

created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP

) ENGINE=InnoDB;

-- 4. Insert a row

INSERT INTO users (name) VALUES ('Gemini_Test_Data');

-- 5. Query the data

MySQL localhost:33060+ ssl sync_test SQL > SELECT * FROM users;

+----+------------------+---------------------+

| id | name | created_at |

+----+------------------+---------------------+

| 1 | Gemini_Test_Data | 2026-01-27 02:47:13 |

+----+------------------+---------------------+

1 row in set (0.0015 sec)

bash

# Open another window, connect to a secondary, and query the data

MySQL localhost:33060+ ssl SQL > SELECT * FROM sync_test.users;

+----+------------------+---------------------+

| id | name | created_at |

+----+------------------+---------------------+

| 1 | Gemini_Test_Data | 2026-01-27 02:47:13 |

+----+------------------+---------------------+

1 row in set (0.0008 sec)

MySQL localhost:33060+ ssl

Insert another row on the primary, then query it from a secondary:

bash

# Primary node

MySQL localhost:33060+ ssl sync_test SQL > INSERT INTO sync_test.users (name) VALUES ('Illegal_Write');

Query OK, 1 row affected (0.0084 sec)

MySQL localhost:33060+ ssl sync_test SQL > \quit

Bye!

# Replica (secondary) node

MySQL localhost:33060+ ssl SQL > SELECT * FROM sync_test.users;

+----+------------------+---------------------+

| id | name | created_at |

+----+------------------+---------------------+

| 1 | Gemini_Test_Data | 2026-01-27 02:47:13 |

| 2 | Illegal_Write | 2026-01-27 02:49:20 |

+----+------------------+---------------------+

2 rows in set (0.0012 sec)

MySQL localhost:33060+ ssl SQL > exit

-> ^C

MySQL localhost:33060+ ssl SQL > \quit

Bye!

4.3 Test read/write splitting

Step 1: Find the ports

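The services are exposed through NodePort, and unless nodePort is pinned explicitly (as it is in mysql-route-svc.yaml) the port can change each time the Service is recreated, so first confirm the current port mapping: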

bash

kubectl get svc -n mysql-cluster

Look at the my-cluster-router-lb row and find the mappings:

- RW port (read-write): the port that maps to container port 6446 (here 30306).
- RO port (read-only): the port that maps to container port 6447 (here 30307).
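In `kubectl get svc` output the PORT(S) column lists entries as port:nodePort/protocol; the container (target) port is not shown, so you map it via the Service definition in mysql-route-svc.yaml (service port 30306 targets 6446, 30307 targets 6447). A small hypothetical helper that pulls the nodePort for a given service port out of that column:

```bash
# node_port_for PORTS SERVICE_PORT: PORTS is the PORT(S) column of `kubectl get svc`,
# e.g. "30306:30306/TCP,30307:30307/TCP". Prints the nodePort for SERVICE_PORT.
node_port_for() {
  local ports="$1" svc_port="$2" entry
  local IFS=','
  for entry in $ports; do
    # entry format: <port>:<nodePort>/<protocol> (ClusterIP entries have no ':')
    if [ "${entry%%:*}" = "$svc_port" ]; then
      entry="${entry#*:}"
      printf '%s\n' "${entry%%/*}"
      return 0
    fi
  done
  return 1
}

# Usage sketch:
# ports=$(kubectl -n mysql-cluster get svc my-cluster-router-lb --no-headers | awk '{print $5}')
# node_port_for "$ports" 30306    # RW NodePort (targetPort 6446)
```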

Step 2: Verify the "read-write port" (RW port, 6446)

This port is for writes and must always point to the Primary. Query it 3 times in a row; it should return the same hostname every time.

bash

[root@k8s-master ~ ]# apt install -y mysql-client

# Replace the port after -P with the NodePort you found for 6446

[root@k8s-master ~/mysql-operator/resource-yaml]# for i in {1..3}; do

mysql -u root -p'String@1307!' -h 127.0.0.1 -P 30306 -e "select @@hostname;"

done

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------------+

| @@hostname |

+--------------+

| my-cluster-0 |

+--------------+

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------------+

| @@hostname |

+--------------+

| my-cluster-0 |

+--------------+

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------------+

| @@hostname |

+--------------+

| my-cluster-0 |

+--------------+

✅ Expected result: the hostname is identical every time (for example always my-cluster-0), which shows that write traffic stays on the primary.

Step 3: Verify the "read-only port" (RO port, 6447), the key step

This port is for reads and should load-balance across all Secondaries. Query it 5 times; the hostnames should rotate.

bash

[root@k8s-master ~/mysql-operator/resource-yaml]# # Replace the port after -P with the NodePort you found for 6447

for i in {1..5}; do

mysql -u root -p'String@1307!' -h 127.0.0.1 -P 30307 -e "select @@hostname;"

done

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------------+

| @@hostname |

+--------------+

| my-cluster-2 |

+--------------+

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------------+

| @@hostname |

+--------------+

| my-cluster-1 |

+--------------+

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------------+

| @@hostname |

+--------------+

| my-cluster-2 |

+--------------+

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------------+

| @@hostname |

+--------------+

| my-cluster-1 |

+--------------+

mysql: [Warning] Using a password on the command line interface can be insecure.

+--------------+

| @@hostname |

+--------------+

| my-cluster-2 |

+--------------+

✅ Expected result: the hostname alternates between Pods (for example my-cluster-1 -> my-cluster-2 -> my-cluster-1...).
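The two checks above can be made scriptable by collecting the hostnames and comparing them. A minimal sketch; the helper names are hypothetical, and the collection commands in the usage comments assume the same client options as above, with -N -B added to drop the table borders.

```bash
# all_same: succeed only if every argument equals the first (expected on the RW port).
all_same() {
  local first="$1" h
  for h in "$@"; do
    [ "$h" = "$first" ] || return 1
  done
  return 0
}

# distinct_count: print how many different values were passed (the RO port should give > 1).
distinct_count() {
  [ "$#" -gt 0 ] || { echo 0; return 0; }
  printf '%s\n' "$@" | sort -u | wc -l | tr -d ' '
}

# Usage sketch:
# mapfile -t rw < <(for i in {1..3}; do mysql -N -B -u root -p'String@1307!' -h 127.0.0.1 -P 30306 -e 'select @@hostname' 2>/dev/null; done)
# all_same "${rw[@]}" && echo "RW port is pinned to ${rw[0]}"
# mapfile -t ro < <(for i in {1..5}; do mysql -N -B -u root -p'String@1307!' -h 127.0.0.1 -P 30307 -e 'select @@hostname' 2>/dev/null; done)
# [ "$(distinct_count "${ro[@]}")" -gt 1 ] && echo "RO port rotates across secondaries"
```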

🎁 Bonus: verify the read-only property (guard against accidental writes)

To prove that the RO port really is read-only, try writing through it and see whether it returns an error.

bash

[root@k8s-master ~/mysql-operator/resource-yaml]# mysql -u root -p'String@1307!' -h 127.0.0.1 -P 30307 -e "create table test_db.should_fail(id int);"

mysql: [Warning] Using a password on the command line interface can be insecure.

ERROR 1290 (HY000) at line 1: The MySQL server is running with the --super-read-only option so it cannot execute this statement

The write is rejected, as expected.

4.4 Test high availability

As you can see, my-cluster-0 is currently the PRIMARY.

bash

MySQL localhost:33060+ ssl SQL > SELECT MEMBER_HOST, MEMBER_ROLE, MEMBER_STATE FROM performance_schema.replication_group_members;

+-------------------------------------------------------------------+-------------+--------------+

| MEMBER_HOST | MEMBER_ROLE | MEMBER_STATE |

+-------------------------------------------------------------------+-------------+--------------+

| my-cluster-1.my-cluster-instances.mysql-cluster.svc.cluster.local | SECONDARY | ONLINE |

| my-cluster-0.my-cluster-instances.mysql-cluster.svc.cluster.local | PRIMARY | ONLINE |

| my-cluster-2.my-cluster-instances.mysql-cluster.svc.cluster.local | SECONDARY | ONLINE |

+-------------------------------------------------------------------+-------------+--------------+

3 rows in set (0.0007 sec)

Open several windows to watch the Pods and the InnoDBCluster status in real time:

bash

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl -n mysql-cluster get po -w

NAME READY STATUS RESTARTS AGE

my-cluster-0 2/2 Running 0 67m

my-cluster-1 2/2 Running 0 67m

my-cluster-2 2/2 Running 0 67m

my-cluster-router-67fd998b7-6m865 1/1 Running 0 65m

my-cluster-router-67fd998b7-xrrx7 1/1 Running 0 65m

test-backup-01-20260127-022008-jjzzk 0/1 Completed 0 34m

[root@k8s-master ~]# kubectl -n mysql-cluster get innodbclusters.mysql.oracle.com -w

NAME STATUS ONLINE INSTANCES ROUTERS AGE

my-cluster ONLINE 3 3 2 68m

Then, in another window, delete the primary Pod directly (or simply power off its node):

bash

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl get po -n mysql-cluster

NAME READY STATUS RESTARTS AGE

my-cluster-0 2/2 Running 0 68m

my-cluster-1 2/2 Running 0 68m

my-cluster-2 2/2 Running 0 68m

my-cluster-router-67fd998b7-6m865 1/1 Running 0 66m

my-cluster-router-67fd998b7-xrrx7 1/1 Running 0 66m

test-backup-01-20260127-022008-jjzzk 0/1 Completed 0 35m

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl -n mysql-cluster delete po my-cluster-0

pod "my-cluster-0" deleted

Keep watching the Pods and the InnoDBCluster status; the cluster recovers on its own within a short time:
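Instead of eyeballing the watch output, a script can poll until the cluster is back to full strength. A minimal sketch, assuming the column order shown in the output below (NAME STATUS ONLINE INSTANCES ROUTERS AGE); `cluster_fully_online` is a hypothetical name.

```bash
# cluster_fully_online: read one line of
#   kubectl -n mysql-cluster get innodbclusters.mysql.oracle.com my-cluster --no-headers
# and succeed only when STATUS is ONLINE and every instance is online.
cluster_fully_online() {
  local _name status online instances _rest
  read -r _name status online instances _rest || [ -n "$_name" ] || return 1
  [ "$status" = "ONLINE" ] && [ "$online" = "$instances" ]
}

# Usage sketch: poll every 5 seconds until the cluster has fully recovered.
# until kubectl -n mysql-cluster get innodbclusters.mysql.oracle.com my-cluster --no-headers \
#       | cluster_fully_online; do
#   sleep 5
# done
```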

bash

# The Pod has recovered automatically

[root@k8s-master ~/mysql-operator/resource-yaml]# kubectl -n mysql-cluster get po -w

NAME READY STATUS RESTARTS AGE

my-cluster-0 2/2 Running 0 67m

my-cluster-1 2/2 Running 0 67m

my-cluster-2 2/2 Running 0 67m

my-cluster-router-67fd998b7-6m865 1/1 Running 0 65m

my-cluster-router-67fd998b7-xrrx7 1/1 Running 0 65m

test-backup-01-20260127-022008-jjzzk 0/1 Completed 0 34m

my-cluster-0 2/2 Terminating 0 69m

my-cluster-0 2/2 Terminating 0 69m

my-cluster-0 2/2 Terminating 0 69m

my-cluster-0 2/2 Terminating 0 69m

my-cluster-1 2/2 Running 0 69m

my-cluster-1 2/2 Running 0 69m

my-cluster-2 2/2 Running 0 69m

my-cluster-2 2/2 Running 0 69m

my-cluster-0 2/2 Terminating 0 69m

my-cluster-1 2/2 Running 0 69m

my-cluster-2 2/2 Running 0 69m

my-cluster-0 2/2 Terminating 0 70m

my-cluster-0 0/2 Completed 0 70m

my-cluster-0 0/2 Completed 0 70m

my-cluster-0 0/2 Completed 0 70m

my-cluster-0 0/2 Pending 0 0s

my-cluster-0 0/2 Pending 0 0s

my-cluster-0 0/2 Init:0/3 0 0s

my-cluster-0 0/2 Init:0/3 0 0s

my-cluster-0 0/2 Init:0/3 0 0s

my-cluster-0 0/2 Init:0/3 0 1s

my-cluster-0 0/2 Init:1/3 0 5s

my-cluster-0 0/2 Init:1/3 0 8s

my-cluster-0 0/2 Init:2/3 0 10s

my-cluster-0 0/2 PodInitializing 0 12s

my-cluster-0 1/2 Running 0 19s

my-cluster-0 1/2 Running 0 24s

my-cluster-0 1/2 Running 0 25s

my-cluster-0 2/2 Running 0 30s

bash

# The InnoDB Cluster has recovered as well

[root@k8s-master ~]# kubectl -n mysql-cluster get innodbclusters.mysql.oracle.com -w

NAME STATUS ONLINE INSTANCES ROUTERS AGE

my-cluster ONLINE 3 3 2 68m

my-cluster ONLINE 3 3 2 69m

my-cluster ONLINE_PARTIAL 2 3 2 69m

my-cluster ONLINE_PARTIAL 2 3 2 70m

my-cluster ONLINE 3 3 2 71m

Check the group membership again: my-cluster-1 has become the new primary.

bash

MySQL localhost:33060+ ssl SQL > SELECT MEMBER_HOST, MEMBER_ROLE, MEMBER_STATE FROM performance_schema.replication_group_members;

+-------------------------------------------------------------------+-------------+--------------+

| MEMBER_HOST | MEMBER_ROLE | MEMBER_STATE |

+-------------------------------------------------------------------+-------------+--------------+

| my-cluster-1.my-cluster-instances.mysql-cluster.svc.cluster.local | PRIMARY | ONLINE |

| my-cluster-0.my-cluster-instances.mysql-cluster.svc.cluster.local | SECONDARY | ONLINE |

| my-cluster-2.my-cluster-instances.mysql-cluster.svc.cluster.local | SECONDARY | ONLINE |

+-------------------------------------------------------------------+-------------+--------------+

3 rows in set (0.0009 sec)

4.5 Recheck the cluster state

Check read/write splitting again; if it still behaves as before, everything is fine.

```bash
# If read/write splitting still works after the failover, routing is healthy
[root@k8s-master ~/mysql-operator/resource-yaml]# for i in {1..5}; do
mysql -u root -p'String@1307!' -h 127.0.0.1 -P 30307 -e "select @@hostname;"
done
mysql: [Warning] Using a password on the command line interface can be insecure.
+--------------+
| @@hostname   |
+--------------+
| my-cluster-2 |
+--------------+
mysql: [Warning] Using a password on the command line interface can be insecure.
+--------------+
| @@hostname   |
+--------------+
| my-cluster-0 |
+--------------+
mysql: [Warning] Using a password on the command line interface can be insecure.
+--------------+
| @@hostname   |
+--------------+
| my-cluster-2 |
+--------------+
mysql: [Warning] Using a password on the command line interface can be insecure.
+--------------+
| @@hostname   |
+--------------+
| my-cluster-2 |
+--------------+
mysql: [Warning] Using a password on the command line interface can be insecure.
+--------------+
| @@hostname   |
+--------------+
| my-cluster-0 |
+--------------+
[root@k8s-master ~/mysql-operator/resource-yaml]# for i in {1..3}; do
mysql -u root -p'String@1307!' -h 127.0.0.1 -P 30306 -e "select @@hostname;"
done
mysql: [Warning] Using a password on the command line interface can be insecure.
+--------------+
| @@hostname   |
+--------------+
| my-cluster-1 |
+--------------+
mysql: [Warning] Using a password on the command line interface can be insecure.
+--------------+
| @@hostname   |
+--------------+
| my-cluster-1 |
+--------------+
mysql: [Warning] Using a password on the command line interface can be insecure.
+--------------+
| @@hostname   |
+--------------+
| my-cluster-1 |
+--------------+
[root@k8s-master ~/mysql-operator/resource-yaml]#
```
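Reading the `@@hostname` tables by eye gets tedious once you send more than a handful of queries. Below is a minimal sketch that sends N queries to one Router port and tallies which pod answered each one. Ports 30306 (read/write) and 30307 (read-only) are this lab's NodePorts. The sketch assumes the credentials are stored in the `[client]` section of `~/.my.cnf`, which the `mysql` client reads by default, so the password no longer appears on the command line and the warning above goes away. `count_backends` is a hypothetical name.

```shell
# Hypothetical helper: count which backend answers "select @@hostname"
# through a given MySQL Router port. Credentials are expected in ~/.my.cnf.
count_backends() {
  local port="$1" rounds="${2:-5}" host="${3:-127.0.0.1}" user="${4:-root}"
  local i=0
  while [ "$i" -lt "$rounds" ]; do
    # -N: skip the column header; -B: batch output, so only the hostname is printed
    mysql -u "$user" -h "$host" -P "$port" -N -B -e "select @@hostname;"
    i=$((i + 1))
  done | sort | uniq -c | awk '{ print $2, $1 }'
}
```

For example, `count_backends 30306 5` should list only the current primary (here `my-cluster-1 5`), while `count_backends 30307 10` should list only secondaries, each with the number of queries it served.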

```text
MySQL localhost:33060+ ssl JS > dba.getCluster().status()
{
    "clusterName": "my_cluster",
    "defaultReplicaSet": {
        "name": "default",
        "primary": "my-cluster-1.my-cluster-instances.mysql-cluster.svc.cluster.local:3306",
        "ssl": "REQUIRED",
        "status": "OK",
        "statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",
        "topology": {
            "my-cluster-0.my-cluster-instances.mysql-cluster.svc.cluster.local:3306": {
                "address": "my-cluster-0.my-cluster-instances.mysql-cluster.svc.cluster.local:3306",
                "memberRole": "SECONDARY",
                "mode": "R/O",
                "readReplicas": {},
                "replicationLag": "applier_queue_applied",
                "role": "HA",
                "status": "ONLINE",
                "version": "8.4.7"
            },
            "my-cluster-1.my-cluster-instances.mysql-cluster.svc.cluster.local:3306": {
                "address": "my-cluster-1.my-cluster-instances.mysql-cluster.svc.cluster.local:3306",
                "memberRole": "PRIMARY",
                "mode": "R/W",
                "readReplicas": {},
                "replicationLag": "applier_queue_applied",
                "role": "HA",
                "status": "ONLINE",
                "version": "8.4.7"
            },
            "my-cluster-2.my-cluster-instances.mysql-cluster.svc.cluster.local:3306": {
                "address": "my-cluster-2.my-cluster-instances.mysql-cluster.svc.cluster.local:3306",
                "memberRole": "SECONDARY",
                "mode": "R/O",
                "readReplicas": {},
                "replicationLag": "applier_queue_applied",
                "role": "HA",
                "status": "ONLINE",
                "version": "8.4.7"
            }
        },
        "topologyMode": "Single-Primary"
    },
    "groupInformationSourceMember": "my-cluster-1.my-cluster-instances.mysql-cluster.svc.cluster.local:3306"
}
MySQL localhost:33060+ ssl JS > \quit
Bye!
```

5. Basic MySQL Shell Commands for Day-to-Day Operations

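MySQL Shell is the Operator's underlying execution engine, while what you interact with are the higher-level interfaces the Operator wraps around it: Kubernetes custom resources (CRs), Helm, and so on.

Almost all of the MySQL Operator's core operations are carried out by calling MySQL Shell under the hood, but as a user you rarely need to write MySQL Shell commands yourself in day-to-day operations; the exceptions are special cases such as manual debugging or recovery.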
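When you do need MySQL Shell for debugging, you can run it non-interactively inside one of the MySQL pods. The sketch below asks the AdminAPI for the current primary and turns its address into a pod name, which is handy, for example, before deliberately deleting the primary pod in a failover drill like the one above. Several details are assumptions, not taken from this article: the MySQL container is named `mysql`, root can log in as `root@localhost:3306` inside the pod, and the password is available in a `MYSQL_ROOT_PASSWORD` shell variable. `primary_pod` is a hypothetical name. `--passwords-from-stdin` keeps the password off the command line, and only the last `host:port` token is kept in case a password prompt is echoed into the output.

```shell
# Hypothetical helper: print the pod name of the current primary, e.g. "my-cluster-1".
# Assumptions: container "mysql", root@localhost login, password in MYSQL_ROOT_PASSWORD.
primary_pod() {
  local ns="${1:-mysql-cluster}" pod="${2:-my-cluster-0}" addr host
  if [ -z "${MYSQL_ROOT_PASSWORD:-}" ]; then
    echo "MYSQL_ROOT_PASSWORD is not set" >&2
    return 1
  fi
  # Feed the password on stdin; keep only the last host:port token of the output.
  addr="$(printf '%s\n' "$MYSQL_ROOT_PASSWORD" |
    kubectl -n "$ns" exec -i "$pod" -c mysql -- \
      mysqlsh --uri root@localhost:3306 --js --quiet-start=2 \
      --passwords-from-stdin \
      -e 'print(dba.getCluster().status().defaultReplicaSet.primary)' |
    grep -oE '[A-Za-z0-9.-]+:[0-9]+' | tail -n 1)"
  if [ -z "$addr" ]; then
    echo "could not read the primary address via $pod" >&2
    return 1
  fi
  host="${addr%:*}"   # strip the ":3306" port suffix
  echo "${host%%.*}"  # the first DNS label is the pod name
}
```

With the cluster in the state shown above, `primary_pod` would print `my-cluster-1`.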

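5.1 Connection and session management

| Command (alias) | Purpose | Example |
|---|---|---|
| `\connect` (`\c`) | Connect to a MySQL instance (several protocols supported) | `\c root@127.0.0.1:3306` (classic protocol); `\c --mysqlx root@localhost:33060` (X Protocol); `\c --ssh root@192.168.1.100:22 root@localhost:3306` (SSH tunnel) |
| `\reconnect` | Reconnect to the current instance, e.g. after the connection drops | `\reconnect` |
| `\disconnect` | Close the global session without leaving MySQL Shell | `\disconnect` |
| `\status` (`\s`) | Show the current connection status (server information, character sets, uptime, etc.) | `\s` |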

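5.2 Mode switching and script execution (multiple languages)

| Command | Purpose | Example |
|---|---|---|
| `\sql` | Switch to SQL mode to run standard SQL | After `\sql`, type SQL directly: `SELECT * FROM test.t1;` |
| `\js` | Switch to JavaScript mode (AdminAPI / X DevAPI) | After `\js`, run `dba.getCluster().status()` to see the cluster status |
| `\py` | Switch to Python mode (Python syntax, snake_case method names) | After `\py`, run `dba.get_cluster()` |
| `\source` (`\.`) | Run a script file, interpreted according to the current mode | `\source /tmp/init.sql` (SQL script, in SQL mode); `\source /tmp/backup.js` (JavaScript script, in JS mode) |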

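5.3 Backup, restore, and cluster administration

| Command / utility | Purpose | Example (based on the earlier scenarios) |
|---|---|---|
| `util.dumpInstance()` | Logical backup of the whole instance (what the Operator's backups use under the hood) | JS mode: `util.dumpInstance('/data/backup', {threads: 4})` |
| `util.loadDump()` | Restore a `dumpInstance` backup, including dumps produced by the Operator | From the command line: `mysqlsh -u root -p'pass' -- util load-dump /data/backup --threads=4` |
| `dba.createCluster()` | Create an InnoDB Cluster (what the Operator runs when it initializes a cluster) | JS mode: `dba.createCluster('mycluster')` |
| `dba.getCluster().status()` | Show the cluster status, e.g. for troubleshooting | JS mode: `var cluster = dba.getCluster(); cluster.status();` |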
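The `util.*` methods in the table can also be driven from a plain shell through MySQL Shell's API command-line integration (`mysqlsh <uri> -- util <method> ...`), which is convenient for cron jobs. Below is a minimal sketch of a timestamped logical backup. It assumes `mysqlsh` is on the PATH and can authenticate (interactive prompt or a stored credential); `backup_instance` and the `/data/backup` default are hypothetical. Check the exact CLI syntax against the MySQL Shell manual for your version. To be safe, point the URI at a single instance (ideally a secondary), not at the Router's read-only port, because each dump thread opens its own connection.

```shell
# Hypothetical helper: timestamped logical backup via MySQL Shell's
# command-line integration; the "-- util dump-instance" call maps to util.dumpInstance().
backup_instance() {
  local uri="${1:-}" base="${2:-/data/backup}" threads="${3:-4}" target
  if [ -z "$uri" ]; then
    echo "usage: backup_instance <user@host:port> [base_dir] [threads]" >&2
    return 2
  fi
  # A fresh directory per run, since dumpInstance expects an empty target.
  target="$base/$(date +%Y%m%d-%H%M%S)"
  mysqlsh "$uri" -- util dump-instance "$target" --threads="$threads" || return 1
  echo "$target"
}
```

The printed directory can later be passed to `util.loadDump()` (or `-- util load-dump`) as shown in the table.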

6. Summary

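As cloud-native technology spreads, containerized and automated database management has become a core requirement in many organizations' digital transformation, and MySQL Operator for Kubernetes was built to meet exactly this need. Built on CRDs (custom resources), it ties together deployment, operations, verification, and data protection, combining MySQL's high-availability features with the strengths of Kubernetes to provide a standardized, automated, and extensible way to manage MySQL clusters.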

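The overall design is coherent. The InnoDBCluster custom resource is the standardized configuration entry point, so users describe the cluster they want in a simple YAML manifest without dealing with low-level details. The MySQL Operator acts as the "automation manager": it continuously watches the state of the custom resource and automatically creates the StatefulSet, configures Group Replication, and deploys MySQL Router, with no manual intervention. The InnoDB Cluster is the actual database cluster; it keeps data in sync across members through Group Replication and so avoids a single point of failure. MySQL Router acts as the "smart gateway", giving applications a stable entry point and read/write splitting while hiding topology changes from the application layer. These four components support one another and form an efficient, reliable whole.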

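In practice, the approach is easy to adopt. In the implementation part, installing the Operator and CRDs, deploying the InnoDB Cluster and Router, and exposing the routing endpoints each take only a few steps, and tips such as pre-pulling images lower the barrier for newcomers to cloud-native tooling. For data protection, the article covered several backup and restore options: the native dumpInstance/loadDump utilities, backups to OCI Object Storage, and traditional mysqldump and XtraBackup (XBK), which fit cloud-native setups while staying compatible with established operational habits and help guard against data loss. In the verification part, checking the cluster status, testing replication, verifying read/write splitting, and running a failover drill let you confirm whether the cluster meets production requirements. For day-to-day operations, MySQL Shell provides a single command set covering connection management, mode switching, cluster administration, and backup/restore, with scripting in SQL, JavaScript, and Python, which reduces both the learning curve and operational complexity.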

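More importantly, the approach is not just a stack of technologies; it addresses real pain points for developers and operators. Developers can stand up a highly available test environment quickly without studying MySQL replication internals or Kubernetes scheduling in depth, and operators can manage and scale clusters with far less manual configuration and troubleshooting, which improves efficiency and reduces the risk of human error. Thanks to its extensibility, the same approach works for lightweight deployments at smaller companies as well as for managing many clusters at larger organizations.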

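In short, MySQL Operator for Kubernetes is more than a database management tool: it represents a best practice for running highly available MySQL clusters in the cloud-native era. It uses automation to simplify complex operations, standardization to keep environments consistent, and flexible configuration to fit different workloads, so that MySQL in a containerized environment is usable and stable as soon as it is deployed. That makes it a dependable choice for organizations moving to cloud native.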