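Business pain points: a regional logistics company (10 cities, 500 delivery vehicles, about 20,000 orders per day) faces three main problems:

- Inefficient static routing: routes are fixed and planned by hand. During the morning and evening rush hours the detour rate on congested road segments is 25%, and the average delivery time is 120 minutes (industry benchmark: 90 minutes).

- Slow dynamic response: when traffic controls appear suddenly or orders are added, drivers adjust routes from experience, and the on-time rate is only 75% (target: 90%).

- High costs: fuel wasted on detours plus late-delivery penalties exceed 50 million yuan per year, and customer satisfaction is falling (complaint rate: 12%).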

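Algorithm team: data cleaning (Spark), feature engineering (state/action/reward features), Q-Learning model training (distributed with Ray), simulation environment construction (Gym), policy storage (Feast).

Business team: API gateway, path optimization service (calls the Q-Learning policy), delivery dispatch system integration (driver app/console), monitoring and alerting.

Logistics operations team: drivers receive real-time routes through the driver app, and dispatchers intervene on abnormal orders from the dispatcher terminal.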

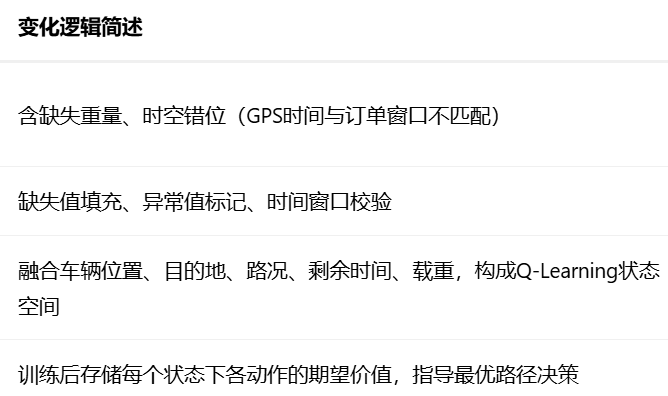

数据准备与特征变化

(1)原数据结构(多源异构数据,来自物流系统)

① 订单系统(delivery_orders,Hive表)

② 车辆GPS(vehicle_gps,Kafka Topic)

json

// 实时车辆状态(每30秒上报)

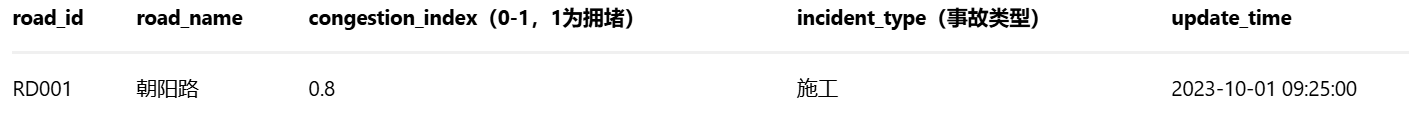

{ "vehicle_id": "VH001", "lat": 39.9042, "lon": 116.4074, "speed_kmh": 45, "load_kg": 120, "timestamp": "2023-10-01T09:30:00Z" }③ 交通数据(traffic_status,API接口)

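(2) Data cleaning (detailed code, owned by the algorithm team)

Goal: handle missing values, spatio-temporal misalignment, and noise, and output standardized order, vehicle, and traffic data.

Code file: data_processing/src/main/python/data_cleaning.py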

```python
import logging

from pyspark.sql import SparkSession
from pyspark.sql.functions import avg, col, count, greatest, least, lit, when

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


def clean_order_data(spark, input_path: str, output_path: str):
    """Clean order data: missing values, abnormal weights, time-window validation."""
    df = spark.read.parquet(input_path)
    # Collect per-column null counts (show() returns None, so it cannot be logged)
    null_counts = df.select(
        [count(when(col(c).isNull(), c)).alias(c) for c in df.columns]
    ).first().asDict()
    logger.info(f"Raw order rows: {df.count()}, null counts: {null_counts}")

    # 1. Missing values
    # Fill missing weight_kg with the mean of similar orders (same region, same priority).
    # Example group: origin latitude between 39.9 and 40.0, priority equal to 3.
    same_group = col("origin_lat").between(39.9, 40.0) & (col("priority") == 3)
    group_mean_weight = df.filter(same_group).agg(avg("weight_kg")).first()[0]
    if group_mean_weight is not None:
        df = df.withColumn(
            "weight_kg",
            when(col("weight_kg").isNull() & same_group, lit(group_mean_weight))
            .otherwise(col("weight_kg")),
        )
    # Extreme case (no similar orders in the region): fall back to the global mean
    global_mean_weight = df.agg(avg("weight_kg")).first()[0]
    if global_mean_weight is not None:
        df = df.fillna({"weight_kg": global_mean_weight})

    # 2. Outliers
    # weight_kg > 500 kg (oversized cargo, handled separately) or < 0.1 kg (invalid) -> flag
    df = df.withColumn(
        "is_abnormal_weight",
        when((col("weight_kg") > 500) | (col("weight_kg") < 0.1), 1).otherwise(0),
    )
    # Time-window validation: if start > end, swap the two values.
    # The minimum is kept in a temporary column so both sides read the original values.
    df = df.withColumn("_tw_min", least(col("time_window_start"), col("time_window_end")))
    df = df.withColumn("time_window_end", greatest(col("time_window_start"), col("time_window_end")))
    df = df.withColumn("time_window_start", col("_tw_min")).drop("_tw_min")

    # 3. Deduplicate and save
    df_clean = df.dropDuplicates(["order_id"]).select(
        "order_id", "origin_lat", "origin_lon", "dest_lat", "dest_lon",
        "weight_kg", "time_window_start", "time_window_end", "priority",
    )
    df_clean.write.mode("overwrite").parquet(output_path)
    logger.info(f"Cleaned order rows: {df_clean.count()}, saved to {output_path}")
    return df_clean


if __name__ == "__main__":
    spark = SparkSession.builder.appName("LogisticsDataCleaning").getOrCreate()
    # Input/output paths (data lake storage)
    order_input = "s3://logistics-data-lake/raw/delivery_orders.parquet"
    order_output = "s3://logistics-data-lake/cleaned/orders_cleaned.parquet"
    clean_order_data(spark, order_input, order_output)
    spark.stop()
```

(3) Feature engineering and feature data generation (with explicit feature_path/label_path)

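The cleaned data is converted into a state feature matrix that Q-Learning can consume (feature_path) and a Q-table policy model (label_path). The core of this step is the design of the state, action, and reward features.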
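The reward side of this design is not shown in the source, so the following is only a minimal sketch of how a reward combining timeliness, cost, and customer experience might look. The `StepOutcome` type, the `compute_reward` function, and every weight below are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class StepOutcome:
    """Hypothetical summary of one simulated step (all fields are assumptions)."""
    distance_km: float         # distance driven during this step
    minutes_elapsed: float     # time spent during this step
    arrived: bool              # True if the vehicle reached its destination
    minutes_late: float = 0.0  # lateness relative to time_window_end (0 if on time)


def compute_reward(outcome: StepOutcome,
                   fuel_cost_per_km: float = 0.1,
                   time_cost_per_min: float = 0.05,
                   late_penalty_per_min: float = 1.0,
                   arrival_bonus: float = 100.0) -> float:
    """Combine cost, timeliness, and experience terms into one scalar reward."""
    reward = -fuel_cost_per_km * outcome.distance_km        # cost term
    reward -= time_cost_per_min * outcome.minutes_elapsed   # timeliness term
    if outcome.arrived:
        reward += arrival_bonus
        # Experience term: late arrivals lose part of the bonus
        reward -= late_penalty_per_min * max(0.0, outcome.minutes_late)
    return reward
```

With these illustrative weights every step costs a little, so shorter and faster routes score higher, and a late arrival still earns a reduced bonus. The relative weights would need tuning against the business targets.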

① State feature generation (state_feature_generator.py)

```python
import logging

import pandas as pd

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


def calculate_distance(lat1, lon1, lat2, lon2):
    """Euclidean distance between two points in km (simplified; use the Haversine formula in practice)."""
    return ((lat1 - lat2) ** 2 + (lon1 - lon2) ** 2) ** 0.5 * 111  # 1 degree ≈ 111 km


def generate_state_features(cleaned_orders: pd.DataFrame, vehicle_gps: pd.DataFrame,
                            traffic_data: pd.DataFrame) -> pd.DataFrame:
    """Generate Q-Learning state features (vehicle position + order + traffic)."""
    # 1. Join vehicles to orders (assumes one order per vehicle, a simplified scenario)
    merged = pd.merge(vehicle_gps, cleaned_orders,
                      left_on="current_order_id", right_on="order_id", how="left")

    # 2. Compute the state features
    state_features = []
    for _, row in merged.iterrows():
        # Basic state: position, destination, load
        state = {
            "vehicle_id": row["vehicle_id"],
            "order_id": row["order_id"],
            "current_lat": row["lat"], "current_lon": row["lon"],
            "dest_lat": row["dest_lat"], "dest_lon": row["dest_lon"],
            "load_kg": row["load_kg"],
            # Remaining time in minutes until the delivery window closes
            "remaining_time": (row["time_window_end"] - pd.Timestamp.now()).total_seconds() / 60,
        }
        # Traffic feature: congestion index of the current road
        # (assumes the GPS records carry a current_road_id column)
        current_road = traffic_data[traffic_data["road_id"] == row["current_road_id"]]
        state["congestion_index"] = (
            current_road["congestion_index"].iloc[0] if not current_road.empty else 0.5
        )
        # Distance feature: current position to destination (km).
        # The GPS columns are named lat/lon, so read those rather than current_lat/current_lon.
        state["distance_to_dest"] = calculate_distance(
            row["lat"], row["lon"], row["dest_lat"], row["dest_lon"]
        )
        state_features.append(state)

    state_df = pd.DataFrame(state_features)
    logger.info(f"Generated {len(state_df)} state feature rows, sample:\n{state_df.head(2)}")
    return state_df
```

② Feature data generation (generate_feature_data.py, with explicit feature_path/label_path)

```python
import logging

import pandas as pd

from state_feature_generator import generate_state_features

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


def generate_feature_data(cleaned_orders_path: str, vehicle_gps_path: str,
                          traffic_data_path: str) -> tuple:
    """Generate the state feature matrix (feature_path) and name the Q-table policy file (label_path)."""
    # 1. Load the cleaned data
    orders = pd.read_parquet(cleaned_orders_path)
    gps = pd.read_parquet(vehicle_gps_path)
    traffic = pd.read_parquet(traffic_data_path)

    # 2. Generate the state feature matrix (the file feature_path points to)
    state_df = generate_state_features(orders, gps, traffic)
    feature_path = "s3://logistics-data-lake/processed/qlearning_state_features.parquet"
    state_df.to_parquet(feature_path, index=False)

    # 3. Define label_path (the Q-table policy file, written after training)
    label_path = "s3://logistics-data-lake/processed/q_table_policy.joblib"

    # Literal braces are doubled so the f-string does not try to evaluate them
    logger.info(f"""
    [Algorithm team: feature data storage notes]
    - feature_path: {feature_path}
      Content: Q-Learning state feature matrix (Parquet format) with columns:
        vehicle_id, order_id, current_lat/lon (current position), dest_lat/lon (destination),
        load_kg (load), remaining_time (remaining time window), congestion_index, distance_to_dest
      Sample data (first 2 rows):\n{state_df.head(2)}
    - label_path: {label_path}
      Content: Q-table policy model (Joblib format), a dict shaped like {{state_tuple: {{action: q_value}}}}
        state_tuple: (current_lat, current_lon, dest_lat, dest_lon, congestion_index, ...)
        action: 0-5 (6 actions: 0=straight, 1=left turn, 2=right turn, 3=accelerate, 4=decelerate, 5=wait)
        q_value: expected value of the state-action pair
      Written by train_qlearning.py once Q-Learning training has finished
    """)
    return feature_path, label_path, state_df
```

Comparison table of data and feature changes (raw data → cleaned data → state features → Q-table policy):

Code structure

Algorithm team repository (algorithm-path-optimization)

```text
algorithm-path-optimization/
├── data_processing/                  # Data cleaning (Spark/Scala)
│   ├── src/main/scala/com/logistics/DataCleaning.scala  # Cleaning code (orders/vehicles/traffic)
│   └── build.sbt                     # Dependencies: spark-core, spark-sql
├── feature_engineering/              # Feature engineering (state/action/reward)
│   ├── state_feature_generator.py    # State feature generation (distance/traffic calculation)
│   ├── reward_calculator.py          # Reward function (timeliness + cost + experience)
│   ├── generate_feature_data.py      # Feature data generation (documents feature_path/label_path)
│   └── requirements.txt              # Dependencies: pandas, geopandas, shapely
├── model_training/                   # Q-Learning model training (core)
│   ├── q_learning.py                 # Q-Learning algorithm (with explanatory comments)
│   ├── train_qlearning.py            # Training entry point (Ray + simulation environment)
│   ├── simulate_environment.py       # Custom Gym logistics simulation (road network/orders/traffic)
│   └── qlearning_params.yaml         # Tuning record (ε=0.1, α=0.5, γ=0.9)
├── feature_store/                    # Feast feature store (dynamic feature serving)
│   ├── feature_repo/                 # Feast feature repository
│   │   ├── features.py               # Entity (vehicle_id) and feature views (real-time position/traffic)
│   │   └── feature_store.yaml        # Feast config (online Redis / offline Parquet)
│   └── deploy_feast.sh               # Script that deploys Feast to K8s
└── mlflow_tracking/                  # MLflow experiment tracking
    └── run_qlearning_experiment.py   # Records ε/α/γ, reward curve, convergence status
```
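The simulation environment in simulate_environment.py is referenced but not shown. As a rough illustration of the interface the training loop relies on (`reset()` returning a state, `step(action)` returning a `(next_state, reward, done, info)` tuple), here is a minimal toy sketch. `ToyGridEnv`, its grid layout, its four-move action set, and its reward values are all hypothetical stand-ins, not the article's `LogisticsEnv`.

```python
class ToyGridEnv:
    """Hypothetical stand-in for LogisticsEnv: a vehicle moving on a small grid."""

    # Four illustrative moves (the article's action set has six entries)
    MOVES = {0: (0, 1), 1: (0, -1), 2: (1, 0), 3: (-1, 0)}

    def __init__(self, size=5, max_steps=50):
        self.size = size
        self.max_steps = max_steps
        self.goal = (size - 1, size - 1)  # destination cell
        self.pos = (0, 0)
        self.steps = 0

    def reset(self):
        """Start a new episode and return the initial state."""
        self.pos = (0, 0)
        self.steps = 0
        return self.pos  # a tuple, so it can be used directly as a Q-table key

    def step(self, action):
        """Apply one move and return (next_state, reward, done, info)."""
        dx, dy = self.MOVES[action]
        # Clamp the position so the vehicle stays inside the grid
        x = min(max(self.pos[0] + dx, 0), self.size - 1)
        y = min(max(self.pos[1] + dy, 0), self.size - 1)
        self.pos = (x, y)
        self.steps += 1
        arrived = self.pos == self.goal
        reward = 100.0 if arrived else -1.0  # illustrative values only
        done = arrived or self.steps >= self.max_steps
        return self.pos, reward, done, {}
```

A real environment would replace the grid with the road network and derive rewards from travel time and cost, but any class exposing these two methods with hashable states can be plugged into a tabular training loop.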

```python
# q_learning.py
import logging
from collections import defaultdict

import numpy as np

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


class QLearningAgent:
    def __init__(self, state_dim, action_dim, epsilon=0.1, alpha=0.5, gamma=0.9):
        """
        Initialise the Q-Learning agent.

        :param state_dim: dimension of the state space (number of features)
        :param action_dim: dimension of the action space (6 actions)
        :param epsilon: exploration rate of the ε-greedy policy (default: 10% exploration)
        :param alpha: learning rate (step size of the Q-value update, default 0.5)
        :param gamma: discount factor (weight of future rewards, default 0.9)
        """
        self.state_dim = state_dim
        self.action_dim = action_dim
        self.epsilon = epsilon  # exploration rate (ε-greedy policy)
        self.alpha = alpha      # learning rate (α)
        self.gamma = gamma      # discount factor (γ)
        # Q-table: nested dict {state: {action: q_value}}, Q-values start at 0
        self.q_table = defaultdict(lambda: defaultdict(float))

    def choose_action(self, state):
        """ε-greedy: explore (random action) with probability ε, otherwise exploit (max-Q action)."""
        if np.random.uniform(0, 1) < self.epsilon:
            # Explore: pick a random action (0-5)
            return np.random.randint(0, self.action_dim)
        # Exploit: pick the action with the largest Q-value in this state.
        # .get() avoids creating an empty entry for a state that was never updated.
        state_actions = self.q_table.get(state)
        if not state_actions:  # unseen state: pick at random
            return np.random.randint(0, self.action_dim)
        return max(state_actions, key=state_actions.get)

    def update_q_value(self, state, action, reward, next_state):
        """Core update: Q(s,a) ← Q(s,a) + α[r + γ·max Q(s',a') - Q(s,a)]"""
        current_q = self.q_table[state][action]
        # Largest Q-value of the next state (0 if next_state has not been seen)
        next_actions = self.q_table.get(next_state)
        max_next_q = max(next_actions.values()) if next_actions else 0.0
        # Update the Q-value
        new_q = current_q + self.alpha * (reward + self.gamma * max_next_q - current_q)
        self.q_table[state][action] = new_q

    def train(self, env, episodes=1000):
        """Train the agent by interacting with the environment for `episodes` episodes."""
        for episode in range(episodes):
            state = env.reset()  # reset the environment and get the initial (hashable) state
            total_reward = 0
            done = False
            while not done:
                # 1. Choose an action (ε-greedy)
                action = self.choose_action(state)
                # 2. Execute it and observe the feedback (next state, reward, done flag)
                next_state, reward, done, info = env.step(action)
                # 3. Update the Q-value
                self.update_q_value(state, action, reward, next_state)
                # 4. Move to the next state
                state = next_state
                total_reward += reward
            # Log progress every 100 episodes
            if episode % 100 == 0:
                logger.info(f"Episode {episode}, Total Reward: {total_reward:.2f}, Epsilon: {self.epsilon}")
            # Decay the exploration rate as training proceeds
            self.epsilon = max(0.01, self.epsilon * 0.995)
        logger.info("Q-Learning training finished")
        # Return plain dicts: a defaultdict built from a lambda cannot be pickled by joblib
        return {state: dict(actions) for state, actions in self.q_table.items()}


# Example: state encoding for the logistics scenario (state features as a tuple, used as Q-table key)
def encode_state(state_features: dict) -> tuple:
    """Encode a state feature dict as a Q-table key (tuple)."""
    return (
        round(state_features["current_lat"], 4), round(state_features["current_lon"], 4),
        round(state_features["dest_lat"], 4), round(state_features["dest_lon"], 4),
        round(state_features["congestion_index"], 2), round(state_features["remaining_time"], 1),
        round(state_features["load_kg"], 1),
    )
```
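One thing worth noting about `encode_state`: rounding coordinates to 4 decimal places (roughly 11 m) and remaining time to 0.1 minutes produces keys so fine-grained that the same state may rarely be visited twice, which limits what a tabular Q-table can learn. A coarser bucketing is one common alternative. The sketch below is an assumption, not part of the original code: `encode_state_coarse` and all bucket widths are hypothetical, and it assumes `congestion_index` lies in [0, 1].

```python
import math


def encode_state_coarse(state_features: dict,
                        cell_deg: float = 0.01,
                        time_bucket_min: float = 10.0,
                        load_bucket_kg: float = 50.0) -> tuple:
    """Bucket continuous features into coarse integer cells (hypothetical widths)."""

    def cell(value: float, width: float) -> int:
        # Index of the bucket that contains `value`
        return math.floor(value / width)

    # Five congestion levels; assumes congestion_index is in [0, 1]
    congestion_level = min(max(int(state_features["congestion_index"] * 5), 0), 4)
    return (
        cell(state_features["current_lat"], cell_deg),
        cell(state_features["current_lon"], cell_deg),
        cell(state_features["dest_lat"], cell_deg),
        cell(state_features["dest_lon"], cell_deg),
        congestion_level,
        cell(state_features["remaining_time"], time_bucket_min),
        cell(state_features["load_kg"], load_bucket_kg),
    )
```

With a 0.01° cell (about 1.1 km of latitude), two GPS fixes a few dozen metres apart map to the same key, so their experience is shared. The trade-off is lost resolution, and suitable widths depend on the road network density.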

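```python
# train_qlearning.py
import logging
import os
import tempfile

import fsspec
import joblib
import mlflow
import ray

from q_learning import QLearningAgent
from simulate_environment import LogisticsEnv
from feature_engineering.generate_feature_data import generate_feature_data

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


def train_and_save_policy():
    # 1. Initialise Ray (note: the training loop below still runs in a single process)
    ray.init(num_cpus=4)

    # 2. Generate the feature data (returns feature_path and label_path)
    feature_path, label_path, state_df = generate_feature_data(
        cleaned_orders_path="s3://logistics-data-lake/cleaned/orders_cleaned.parquet",
        vehicle_gps_path="s3://logistics-data-lake/cleaned/gps_cleaned.parquet",
        traffic_data_path="s3://logistics-data-lake/cleaned/traffic_cleaned.parquet",
    )

    # 3. Load the state features and initialise the simulation environment
    env = LogisticsEnv(state_df)  # custom logistics simulation (road network + orders + traffic)
    state_dim = len(env.state_features[0])  # number of state features
    action_dim = 6  # 6 actions (0-5)

    # 4. Train the Q-Learning agent
    initial_epsilon = 0.1  # kept separately because agent.epsilon decays during training
    agent = QLearningAgent(
        state_dim=state_dim, action_dim=action_dim,
        epsilon=initial_epsilon, alpha=0.5, gamma=0.9,
    )
    q_table = agent.train(env, episodes=2000)  # train for 2000 episodes

    # 5. Save the Q-table policy. joblib cannot write to an s3:// path directly, so dump
    #    to a local file first and then to label_path through fsspec (assumes s3fs is installed).
    local_path = os.path.join(tempfile.mkdtemp(), "q_table_policy.joblib")
    joblib.dump(q_table, local_path)
    with fsspec.open(label_path, "wb") as remote_file:
        joblib.dump(q_table, remote_file)
    logger.info(f"Q-table policy saved to {label_path} with {len(q_table)} states")

    # 6. Record the MLflow experiment
    with mlflow.start_run(run_name="q_learning_path_optimization"):
        mlflow.log_param("epsilon", initial_epsilon)
        mlflow.log_param("alpha", agent.alpha)
        mlflow.log_param("gamma", agent.gamma)
        mlflow.log_metric("final_epsilon", agent.epsilon)
        mlflow.log_metric("q_table_size", len(q_table))
        mlflow.log_artifact(local_path)  # upload the Q-table file (must be a local path)

    ray.shutdown()


if __name__ == "__main__":
    train_and_save_policy()
```

Business team repository (business-path-optimization)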

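```text
business-path-optimization/
├── api_gateway/                 # API gateway (Kong configuration)
├── path_optimization_service/   # Path optimization service (Go)
│   ├── main.go                  # FastAPI-style Go service (calls the Q-table policy + Feast features)
│   └── Dockerfile               # Container configuration
├── dispatch_system/             # Delivery dispatch system (Java + React)
│   ├── backend/                 # Spring Boot backend (order management/route rendering)
│   ├── frontend/                # React frontend (driver app/console)
│   └── sql/                     # PostgreSQL schema (route history/policy versions)
├── monitoring/                  # Monitoring and alerting (Prometheus + Grafana)
└── deployment/                  # K8s deployment config (Service/Ingress)
```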

```go
package main

import (
	"encoding/json"
	"log"
	"net/http"

	"path/to/feast"  // pseudocode: Feast client
	"path/to/joblib" // pseudocode: loads the Q-table from a Joblib file
)

// Request/response structs
type PathRequest struct {
	VehicleID  string  `json:"vehicle_id"`
	OrderID    string  `json:"order_id"`
	CurrentLat float64 `json:"current_lat"`
	CurrentLon float64 `json:"current_lon"`
}

type PathResponse struct {
	VehicleID     string `json:"vehicle_id"`
	OptimalAction int    `json:"optimal_action"` // action 0-5
	NextWaypoint  struct {
		Lat float64 `json:"lat"`
		Lon float64 `json:"lon"`
	} `json:"next_waypoint"`
	ETA string `json:"eta"` // estimated time of arrival
}

// Globals: the loaded Q-table policy and the Feast client
var qTable map[string]map[int]float64 // pseudocode: Q-table structure
var feastClient *feast.Client

func init() {
	// Load the Q-table policy (the label_path file)
	qTable = joblib.Load("s3://logistics-data-lake/processed/q_table_policy.joblib")
	// Initialise the Feast client (calls the algorithm team's feature service)
	feastClient = feast.NewClient("feast-feature-service:6566") // K8s internal service name
}

// Handler for the path optimization API.
// encodeState, calculateNextWaypoint and calculateETA are helpers not shown here.
func optimizePathHandler(w http.ResponseWriter, r *http.Request) {
	var req PathRequest
	if err := json.NewDecoder(r.Body).Decode(&req); err != nil {
		http.Error(w, "Invalid request", http.StatusBadRequest)
		return
	}

	// 1. Fetch real-time features from Feast (destination/traffic/remaining time)
	features, err := feastClient.GetOnlineFeatures(
		[]string{"dest_lat", "dest_lon", "congestion_index", "remaining_time"},
		map[string]interface{}{"vehicle_id": req.VehicleID},
	)
	if err != nil {
		http.Error(w, "Failed to get features", http.StatusInternalServerError)
		return
	}

	// 2. Build the state and encode it as a Q-table key
	state := encodeState(req.CurrentLat, req.CurrentLon, features.DestLat, features.DestLon,
		features.CongestionIndex, features.RemainingTime, 0) // load simplified to 0

	// 3. Look up the Q-table and pick the best action (largest Q-value)
	actionQValues := qTable[state]
	if actionQValues == nil {
		// Unseen state: fall back to all-zero Q-values. The tie is then broken by
		// map iteration order, which Go does not specify.
		actionQValues = make(map[int]float64)
		for i := 0; i < 6; i++ {
			actionQValues[i] = 0.0
		}
	}
	optimalAction := 0
	maxQ := -1e9
	for action, q := range actionQValues {
		if q > maxQ {
			maxQ = q
			optimalAction = action
		}
	}

	// 4. Build the response (action → next waypoint)
	resp := PathResponse{
		VehicleID:     req.VehicleID,
		OptimalAction: optimalAction,
		NextWaypoint:  calculateNextWaypoint(req.CurrentLat, req.CurrentLon, optimalAction),
		ETA:           calculateETA(req.CurrentLat, req.CurrentLon, features.DestLat, features.DestLon),
	}
	w.Header().Set("Content-Type", "application/json")
	json.NewEncoder(w).Encode(resp)
}

func main() {
	http.HandleFunc("/api/optimize-path", optimizePathHandler)
	log.Println("Path optimization service started on :8080")
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```
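The Go service above loads the Q-table through a pseudocode `joblib` package. Joblib files are Python pickles, so a non-Python service would likely need a language-neutral export instead. The following is a minimal sketch of one option, exporting the Q-table to JSON on the Python side. `q_table_to_json`, `best_action`, and the comma-joined key format are hypothetical, and the service's `encodeState` would have to produce keys in exactly the same format.

```python
import json


def q_table_to_json(q_table: dict) -> str:
    """Serialise {state_tuple: {action: q_value}} as JSON with string keys."""
    payload = {
        # JSON object keys must be strings, so join the state tuple with commas
        ",".join(str(v) for v in state): {str(a): float(q) for a, q in actions.items()}
        for state, actions in q_table.items()
    }
    return json.dumps(payload, sort_keys=True)


def best_action(payload: dict, state_key: str, default: int = 0) -> int:
    """Return the action with the largest Q-value, or `default` for an unseen state."""
    actions = payload.get(state_key)
    if not actions:
        return default
    return int(max(actions, key=actions.get))
```

A short usage example with a toy one-state table:

```python
q_table = {(39.9042, 116.4074, 0.5): {0: 1.5, 3: 2.0}}
exported = json.loads(q_table_to_json(q_table))
print(best_action(exported, "39.9042,116.4074,0.5"))  # prints 3
```

Go's `encoding/json` can decode such an object into `map[string]map[int]float64`, because it accepts integer map keys written as JSON strings.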