Installing LLaMA Factory on MetaX GPUs on the d.run Compute Cloud

- Installing LLaMA Factory on MetaX GPUs
  - Fixing the MCX500 container initialization error
  - Downloading the MetaX build of PyTorch
- Installing LLaMA Factory
  - Downloading LLaMA Factory and setting up the environment
  - Verifying the installation
  - References

Installing LLaMA Factory on MetaX GPUs

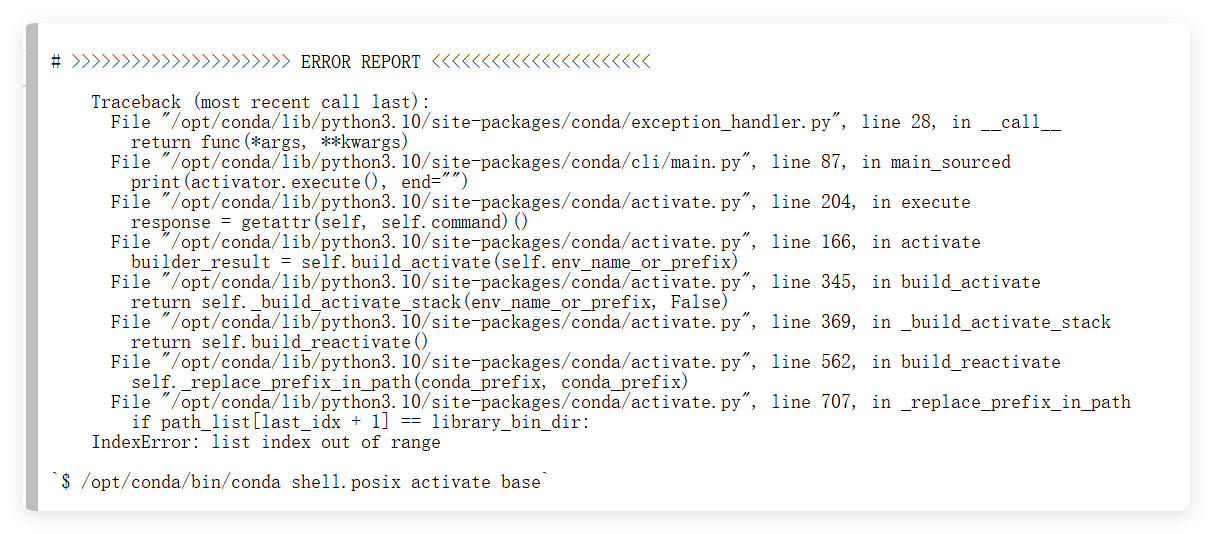

Fixing the MCX500 container initialization error

Root cause:

bash

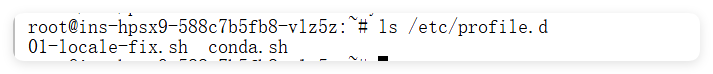

ls /etc/profile.d

应该是01-locale-fix.sh 、 conda.sh这两个文件导致的,首先用cat conda.sh

bash

# >>> conda initialize >>>
# !! Contents within this block are managed by 'conda init' !!
__conda_setup="$('/opt/conda/bin/conda' 'shell.posix' 'hook' 2> /dev/null)"
if [ $? -eq 0 ]; then
    eval "$__conda_setup"
else
    if [ -f "/opt/conda/etc/profile.d/conda.sh" ]; then
        . "/opt/conda/etc/profile.d/conda.sh"
    else
        export PATH="/opt/conda/bin:$PATH"
    fi
fi
unset __conda_setup
# <<< conda initialize <<<

The conda crash is not caused by a mistake in conda.sh itself. Rather, three things combine to trigger a known defect in conda's reactivate branch: conda.sh, the compute platform's PATH injection, and repeated activation of base.
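To see whether a shell is in the state described above, it can help to print the variables conda uses to track the active environment and to count how often the conda directory appears in PATH. The following is a minimal diagnostic sketch, not part of the original fix; the helper names `count_path_entries` and `show_conda_state` are made up for illustration, and `/opt/conda/bin` is taken from the conda.sh listing.

```shell
# Count how many times a directory appears in a colon-separated path string.
count_path_entries() {
    printf '%s\n' "$1" | tr ':' '\n' | grep -cxF -- "$2"
}

# Print the environment variables conda uses to track the active environment.
show_conda_state() {
    for var in CONDA_SHLVL CONDA_DEFAULT_ENV CONDA_PREFIX; do
        printf '%s=%s\n' "$var" "$(printenv "$var" || echo '<unset>')"
    done
}

show_conda_state
echo "/opt/conda/bin appears $(count_path_entries "$PATH" /opt/conda/bin) time(s) in PATH"
```

A count above 1, together with CONDA_DEFAULT_ENV already set to base in a fresh login shell, would be consistent with the PATH injection and repeated activation described above.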

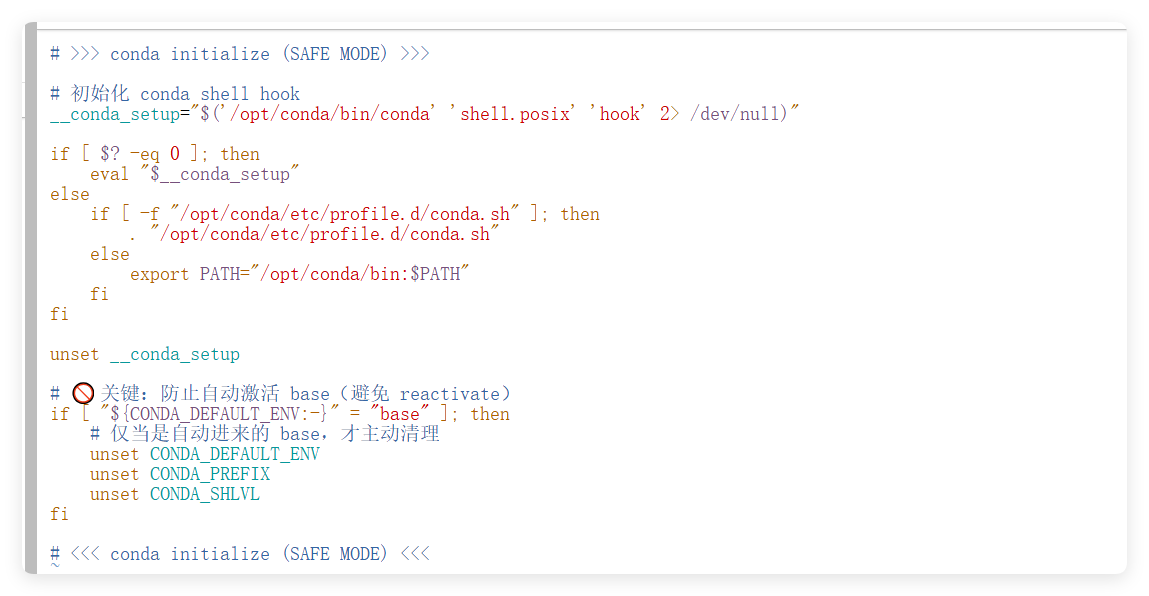

Fix:

Open the file with vim conda.sh and overwrite its original contents with the following:

bash

# >>> conda initialize (SAFE MODE) >>>
# Initialize the conda shell hook
__conda_setup="$('/opt/conda/bin/conda' 'shell.posix' 'hook' 2> /dev/null)"
if [ $? -eq 0 ]; then
    eval "$__conda_setup"
else
    if [ -f "/opt/conda/etc/profile.d/conda.sh" ]; then
        . "/opt/conda/etc/profile.d/conda.sh"
    else
        export PATH="/opt/conda/bin:$PATH"
    fi
fi
unset __conda_setup
# Key step: undo the automatic activation of base (avoids the reactivate branch)
if [ "${CONDA_DEFAULT_ENV:-}" = "base" ]; then
    # Clear the state only when the active environment is base
    unset CONDA_DEFAULT_ENV
    unset CONDA_PREFIX
    unset CONDA_SHLVL
fi
# <<< conda initialize (SAFE MODE) <<<

Then restart the container.
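Because this file is read when a login shell starts, a typo in it could break the next login. Before restarting, it may be worth confirming that the edited file still parses. The sketch below uses the shell's `-n` option, which reads a script without executing it; `check_script_syntax` is a hypothetical helper name, and the path assumes the file lives in /etc/profile.d as listed earlier.

```shell
# Return 0 if the given script parses; -n reads commands without running them.
check_script_syntax() {
    sh -n "$1"
}

profile_script=/etc/profile.d/conda.sh   # location assumed from the ls output above
if [ -f "$profile_script" ]; then
    if check_script_syntax "$profile_script"; then
        echo "$profile_script parses cleanly"
    else
        echo "$profile_script has a syntax error; fix it before restarting"
    fi
fi
```

This only checks syntax, not behavior, so the activation test after the restart is still the real confirmation.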

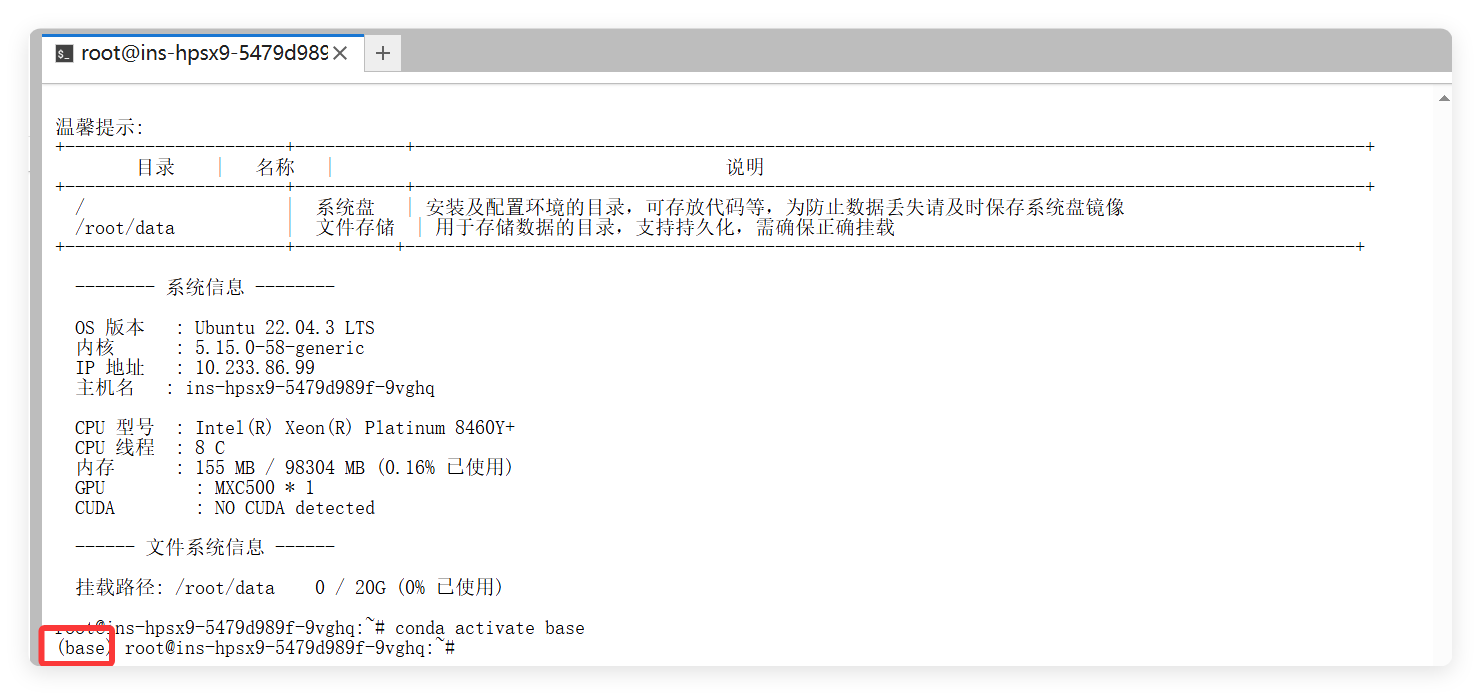

重启后就可以激活环境,如下图所示:

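Downloading the MetaX build of PyTorch

Version details are available on the platform: see the software download links in the MetaX developer portal.

Download the package with: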

bash

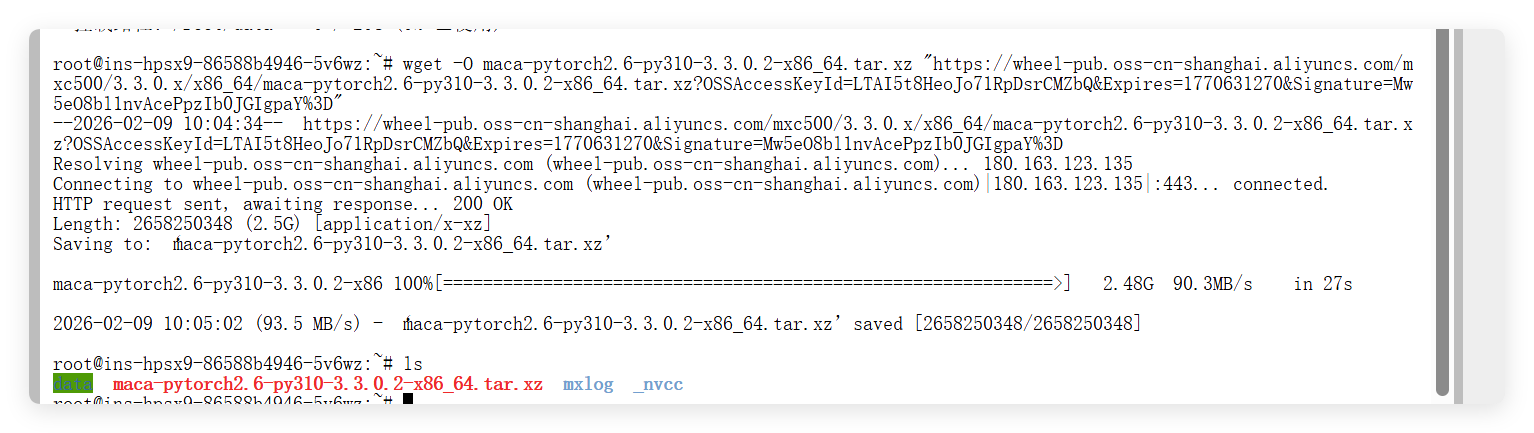

wget -O maca-pytorch2.6-py310-3.3.0.2-x86_64.tar.xz "https://wheel-pub.oss-cn-shanghai.aliyuncs.com/mxc500/3.3.0.x/x86_64/maca-pytorch2.6-py310-3.3.0.2-x86_64.tar.xz?OSSAccessKeyId=LTAI5t8HeoJo71RpDsrCMZbQ&Expires=1770631270&Signature=Mw5eO8bl1nvAcePpzIb0JGIgpaY%3D"下载完成,如下图所示:
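The download link carries `Expires` and `Signature` query parameters, which suggests a time-limited signed URL. The sketch below extracts the `Expires` value and compares it with the current time, assuming the value is a Unix timestamp in seconds. The helper names and the example.com URL are placeholders; substitute the link copied from the download page.

```shell
# Extract the numeric Expires query parameter from a URL (prints nothing if absent).
url_expiry() {
    printf '%s\n' "$1" | sed -n 's/.*[?&]Expires=\([0-9][0-9]*\).*/\1/p'
}

# Return 0 if the URL has an Expires value that is still in the future.
# Assumption: Expires is a Unix timestamp in seconds.
url_is_fresh() {
    expiry=$(url_expiry "$1")
    [ -n "$expiry" ] && [ "$expiry" -gt "$(date +%s)" ]
}

# Placeholder URL for illustration only.
url='https://example.com/pkg.tar.xz?Expires=1770631270&Signature=placeholder'
if url_is_fresh "$url"; then
    echo "link is valid until epoch $(url_expiry "$url")"
else
    echo "link has expired or has no Expires parameter; copy a fresh one"
fi
```

If wget is refused with an authorization error, an expired link is one plausible cause, and fetching a new link from the portal is the simplest remedy.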

解压文件:

bash

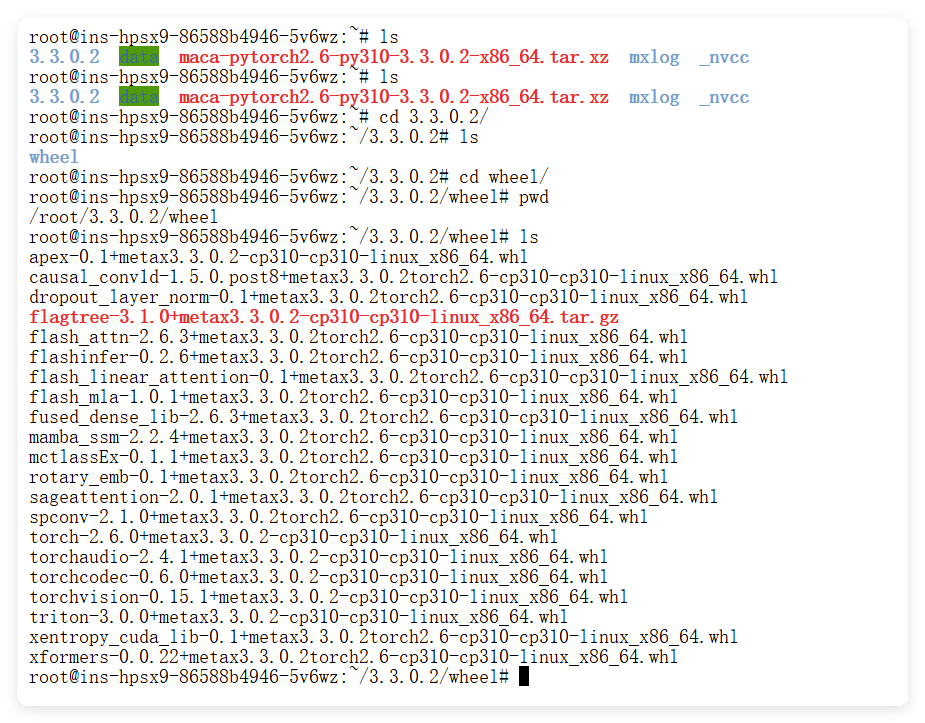

tar -xJf maca-pytorch2.6-py310-3.3.0.2-x86_64.tar.xz此时会解压到这个文件目录/root/3.3.0.2/wheel下,具体如下图所示:

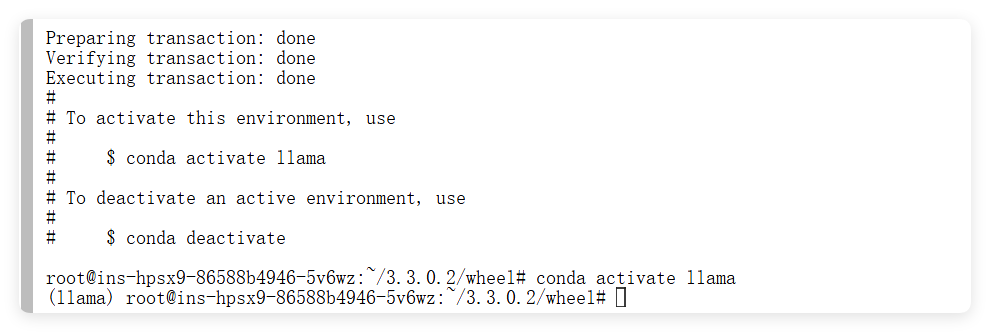

Now create and activate the environment:

bash

conda create -n llama python=3.10

conda activate llama
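The wheels in the extracted directory carry a cp310 tag in their filenames, so the environment's interpreter needs to be Python 3.10 for pip to accept them. As a quick sanity check, the sketch below reads the Python tag from a wheel filename and compares it with the active interpreter. `wheel_python_tag` is a hypothetical helper, and the parsing assumes the usual name-version-pytag-abitag-platform wheel naming.

```shell
# Extract the Python tag (e.g. cp310) from a wheel filename.
# Assumes the standard layout: name-version-pytag-abitag-platform.whl
wheel_python_tag() {
    basename "$1" .whl | awk -F- '{ print $(NF-2) }'
}

wheel=torch-2.6.0+metax3.3.0.2-cp310-cp310-linux_x86_64.whl   # filename from this guide
want=$(wheel_python_tag "$wheel")

if command -v python >/dev/null 2>&1; then
    # Build the same style of tag from the running interpreter's version.
    have=$(python -c 'import sys; print("cp%d%d" % sys.version_info[:2])')
    if [ "$have" = "$want" ]; then
        echo "interpreter tag $have matches the wheel"
    else
        echo "interpreter tag $have does not match wheel tag $want"
    fi
fi
```

Run it inside the activated llama environment; a mismatch usually means the wrong environment is active.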

Install NumPy 1.26.0:

bash

pip install numpy==1.26.0

Install PyTorch:

bash

cd /root/3.3.0.2/wheel

pip install torch-2.6.0+metax3.3.0.2-cp310-cp310-linux_x86_64.whl

Set the environment variables:

bash

export MACA_PATH=/opt/maca

export LD_LIBRARY_PATH=${MACA_PATH}/lib:${MACA_PATH}/mxgpu_llvm/lib:${MACA_PATH}/ompi/lib:${LD_LIBRARY_PATH}

export MACA_CLANG_PATH=${MACA_PATH}/mxgpu_llvm/bin

Run this command in the terminal:

bash

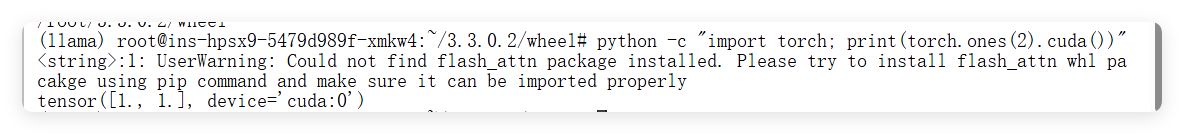

python -c "import torch; print(torch.ones(2).cuda())"如下图所示,显示安装成功。
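If the import or the `.cuda()` call fails instead, one thing worth ruling out is that a directory named in LD_LIBRARY_PATH does not exist in the container. The sketch below lists any missing directories. `missing_dirs` is a hypothetical helper, and the three paths are the ones exported above, with /opt/maca as the fallback for MACA_PATH.

```shell
# Print each directory in a colon-separated list that does not exist.
missing_dirs() {
    printf '%s\n' "$1" | tr ':' '\n' | while IFS= read -r dir; do
        [ -z "$dir" ] && continue          # skip empty entries
        [ -d "$dir" ] || printf '%s\n' "$dir"
    done
}

MACA_PATH=${MACA_PATH:-/opt/maca}
missing=$(missing_dirs "${MACA_PATH}/lib:${MACA_PATH}/mxgpu_llvm/lib:${MACA_PATH}/ompi/lib")
if [ -n "$missing" ]; then
    echo "missing library directories:"
    echo "$missing"
else
    echo "all MACA library directories exist"
fi
```

Note that the export lines only affect the current shell, so they need to be repeated (or added to a startup file) in each new session.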

Continue by installing the related packages:

bash

pip install torchvision-0.15.1+metax3.3.0.2-cp310-cp310-linux_x86_64.whl

pip install torchaudio-2.4.1+metax3.3.0.2-cp310-cp310-linux_x86_64.whl

pip install torchcodec-0.6.0+metax3.3.0.2-cp310-cp310-linux_x86_64.whl

pip install triton-3.0.0+metax3.3.0.2-cp310-cp310-linux_x86_64.whl

pip install rotary_emb-0.1+metax3.3.0.2torch2.6-cp310-cp310-linux_x86_64.whl

pip install xentropy_cuda_lib-0.1+metax3.3.0.2torch2.6-cp310-cp310-linux_x86_64.whl

pip install flash_attn-2.6.3+metax3.3.0.2torch2.6-cp310-cp310-linux_x86_64.whl

Installing LLaMA Factory

Downloading LLaMA Factory and setting up the environment

bash

apt update

apt install -y git

git clone https://gh.llkk.cc/https://github.com/hiyouga/LLaMA-Factory.git

cd LLaMA-Factory

git checkout v0.9.3

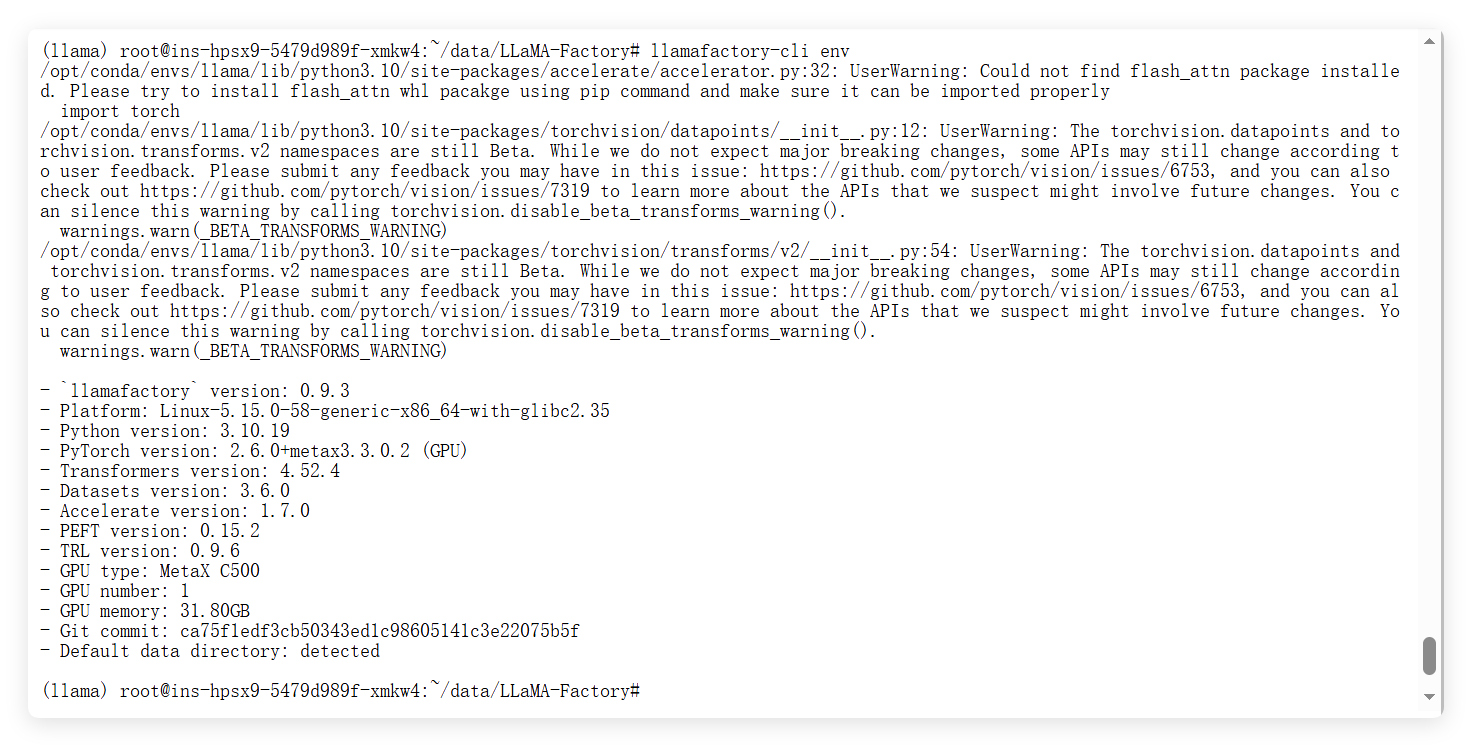

pip install -e .检验是否安装成功

bash

llamafactory-cli env

A successful installation produces the following output: