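Contents

I. Install Spark

II. Upload the JARs Spark needs to HDFS (so that YARN can use them)

III. Test the installation

IV. Speed up slow inserts (tune resource scheduling; not needed in production)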

Note: unless stated otherwise, run every command as the atguigu user.

I. Install Spark

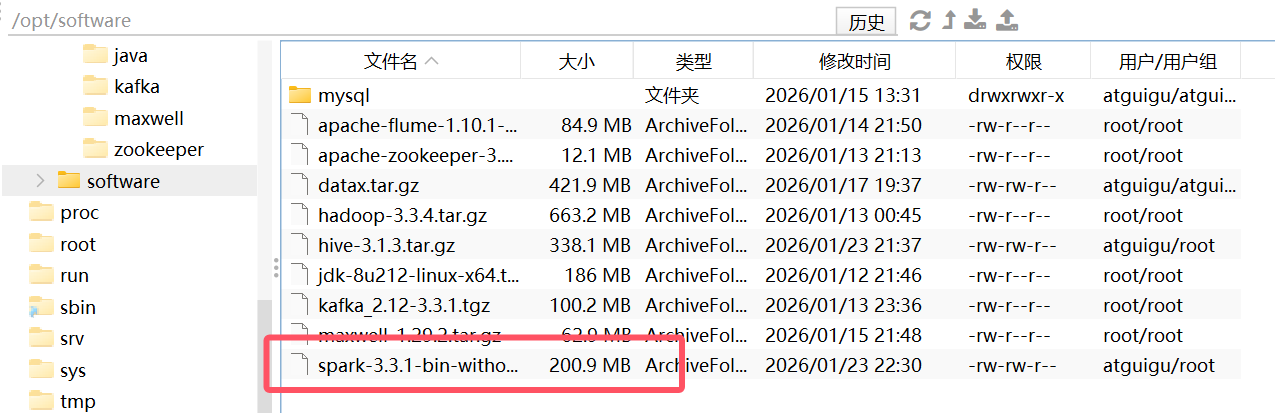

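1. Upload the Spark package to /opt/software (run on hadoop102)

Download: spark-3.3.1-bin-without-hadoop.tgz is in the 8.spark directory of the resources attached to the CSDN blog post "01 - Study Notes: Atguigu (尚硅谷) Data Warehouse Setup - Basic Linux Environment Setup (simulating a Hadoop cluster with 3 hosts)".

2. Extract the package (run on hadoop102)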

cd /opt/software

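tar -zxvf spark-3.3.1-bin-without-hadoop.tgz -C /opt/module/

3. Rename the directory (run on hadoop102)

mv /opt/module/spark-3.3.1-bin-without-hadoop /opt/module/spark

4. Rename and edit the spark-env.sh configuration file (run on hadoop102)

mv /opt/module/spark/conf/spark-env.sh.template /opt/module/spark/conf/spark-env.sh

vim /opt/module/spark/conf/spark-env.sh

Append the following line at the end of the file. Because this is the "without-hadoop" build, Spark takes its Hadoop classes from the classpath reported by the installed Hadoop:

export SPARK_DIST_CLASSPATH=$(hadoop classpath)

5. Configure the environment variables (run on hadoop102)

sudo vim /etc/profile.d/my_env.sh

Add the following: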

# SPARK_HOME

export SPARK_HOME=/opt/module/spark

export PATH=$PATH:$SPARK_HOME/bin

6. Reload the environment (run on hadoop102)

source /etc/profile.d/my_env.sh

II. Upload the JARs Spark needs to HDFS (so that YARN can use them)
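Before uploading anything, it may be worth confirming that the variables set in section I are visible in the current shell. The following is a minimal sketch, not part of the original procedure; the helper names path_contains and check_spark_env are made up for illustration, and the fallback path assumes the install location used in this guide.

```shell
# Return 0 if directory $1 is an entry of the colon-separated list $2.
path_contains() {
  local dir="$1" list="$2"
  case ":${list}:" in
    *":${dir}:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Report whether the Spark bin directory is on PATH.
# Assumption: SPARK_HOME falls back to /opt/module/spark, as used in this guide.
check_spark_env() {
  local home="${SPARK_HOME:-/opt/module/spark}"
  if path_contains "${home}/bin" "${PATH}"; then
    echo "OK: ${home}/bin is on PATH"
  else
    echo "MISSING: ${home}/bin is not on PATH"
    return 1
  fi
}
```

After sourcing /etc/profile.d/my_env.sh, calling `check_spark_env` in the same shell should report OK; if it reports MISSING, the profile script was probably not reloaded in this session.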

1. Start HDFS (skip this if it is already running) (run on hadoop102)

hdp.sh start

2. Create the following HDFS path for storing history logs (run on hadoop102)

hadoop fs -mkdir /spark-history

3. Upload the Spark JARs to HDFS (this takes a while) (run on hadoop102)

hadoop fs -mkdir /spark-jars

hadoop fs -put /opt/module/spark/jars/* /spark-jars

4. Edit hive-site.xml (run on hadoop102)

vim /opt/module/hive/conf/hive-site.xml

Add the following inside the <configuration> element:

<!-- Location of the Spark dependencies (note: port 8020 must match the NameNode port) -->

<property>

<name>spark.yarn.jars</name>

<value>hdfs://hadoop102:8020/spark-jars/*</value>

</property>

<!-- Hive execution engine -->

<property>

<name>hive.execution.engine</name>

<value>spark</value>

</property>

The complete file after the additions:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- MySQL URL where Hive stores its metadata -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop102:3306/metastore?useSSL=false&amp;useUnicode=true&amp;characterEncoding=UTF-8&amp;allowPublicKeyRetrieval=true</value>

</property>

<!-- Fully qualified class name of the JDBC driver Hive uses to connect to MySQL -->

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

</property>

<!-- User name Hive uses to connect to MySQL -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<!-- Password Hive uses to connect to MySQL -->

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>000000</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>hadoop102</value>

</property>

<property>

<name>hive.metastore.event.db.notification.api.auth</name>

<value>false</value>

</property>

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

<!-- Location of the Spark dependencies (note: port 8020 must match the NameNode port) -->

<property>

<name>spark.yarn.jars</name>

<value>hdfs://hadoop102:8020/spark-jars/*</value>

</property>

<!-- Hive execution engine -->

<property>

<name>hive.execution.engine</name>

<value>spark</value>

</property>

</configuration>

III. Test the installation
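Before starting Hive, a quick check that both properties from section II are present in hive-site.xml can rule out a simple editing slip. This is a rough sketch under stated assumptions: the helper names has_property and check_hive_on_spark are hypothetical, the default path is the one used in this guide, and the check is a plain text match on the name tag, not a real XML parse, so it will not catch malformed XML.

```shell
# Return 0 if FILE contains a <name>NAME</name> tag (literal text match only).
has_property() {
  local file="$1" name="$2"
  grep -qF "<name>${name}</name>" "${file}"
}

# Check the two properties this guide adds for Hive on Spark.
# Assumption: the default path is the hive-site.xml location used in this guide.
check_hive_on_spark() {
  local file="${1:-/opt/module/hive/conf/hive-site.xml}" missing=0 name
  for name in spark.yarn.jars hive.execution.engine; do
    if has_property "${file}" "${name}"; then
      echo "found: ${name}"
    else
      echo "missing: ${name}"
      missing=1
    fi
  done
  return "${missing}"
}
```

Running `check_hive_on_spark` with no argument inspects the file edited in section II; passing a path checks a different copy, for example on another host.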

1. Start Hive (run on hadoop102)

cd /opt/module/hive

hive

2. Create a test table (run in the Hive client on hadoop102)

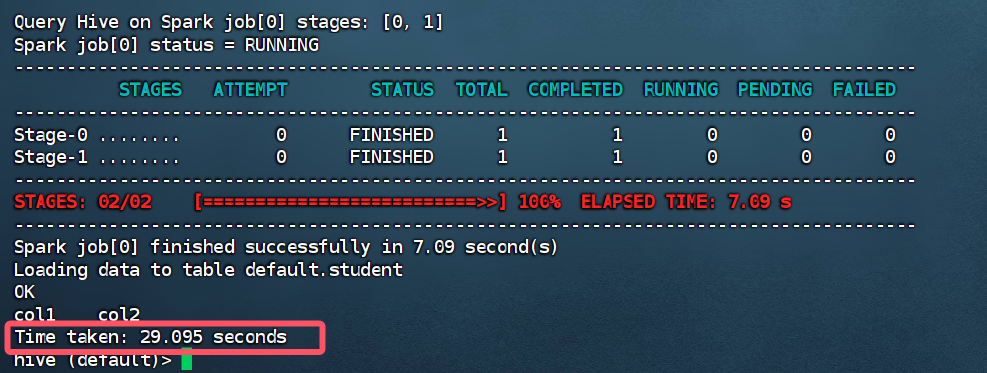

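create table student(id int, name string);

3. Insert data (this is slow for now; section IV below speeds it up) (run in the Hive client on hadoop102)

insert into table student values(1,'abc');

Wait until the insert reports success before continuing.

4. Exit Hive (run in the Hive client on hadoop102)

quit;

IV. Speed up slow inserts (tune resource scheduling; not needed in production)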
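The capacity-scheduler.xml listing in this section sets yarn.scheduler.capacity.maximum-am-resource-percent to 0.8, and its description says this value controls how many applications can run concurrently. The arithmetic behind that can be sketched as follows. This is a simplified illustration, not YARN's exact accounting (YARN also rounds container sizes and always admits at least one application master); the function names and the sample cluster and container sizes are assumptions chosen only for the example.

```shell
# Memory (MB) that all application masters together may use:
# cluster memory * maximum-am-resource-percent, truncated to whole MB.
am_memory_limit_mb() {
  awk -v total="$1" -v pct="$2" 'BEGIN { printf "%d\n", total * pct }'
}

# Rough number of application masters that fit under that limit.
# $1 = cluster memory (MB), $2 = percent as a fraction, $3 = memory per AM (MB)
max_concurrent_ams() {
  local limit
  limit=$(am_memory_limit_mb "$1" "$2")
  echo $(( limit / $3 ))
}
```

For a hypothetical cluster with 12288 MB of YARN memory and 1024 MB application masters, `max_concurrent_ams 12288 0.1 1024` prints 1, while `max_concurrent_ams 12288 0.8 1024` prints 9. On a small learning cluster, a low percentage can therefore leave room for only one application at a time.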

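1. Change the parameter value in /opt/module/hadoop/etc/hadoop/capacity-scheduler.xml (run on hadoop102)

cd /opt/module/hadoop/etc/hadoop

vim capacity-scheduler.xml

The complete file after the change is shown below. Note that it sets yarn.scheduler.capacity.maximum-am-resource-percent to 0.8, whereas the file shipped with Hadoop uses 0.1.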

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<property>

<name>yarn.scheduler.capacity.maximum-applications</name>

<value>10000</value>

<description>

Maximum number of applications that can be pending and running.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.maximum-am-resource-percent</name>

<value>0.8</value>

<description>

Maximum percent of resources in the cluster which can be used to run

application masters i.e. controls number of concurrent running

applications.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.resource-calculator</name>

<value>org.apache.hadoop.yarn.util.resource.DefaultResourceCalculator</value>

<description>

The ResourceCalculator implementation to be used to compare

Resources in the scheduler.

The default i.e. DefaultResourceCalculator only uses Memory while

DominantResourceCalculator uses dominant-resource to compare

multi-dimensional resources such as Memory, CPU etc.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.queues</name>

<value>default</value>

<description>

The queues at the this level (root is the root queue).

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.capacity</name>

<value>100</value>

<description>Default queue target capacity.</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.user-limit-factor</name>

<value>1</value>

<description>

Default queue user limit a percentage from 0.0 to 1.0.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.maximum-capacity</name>

<value>100</value>

<description>

The maximum capacity of the default queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.state</name>

<value>RUNNING</value>

<description>

The state of the default queue. State can be one of RUNNING or STOPPED.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.acl_submit_applications</name>

<value>*</value>

<description>

The ACL of who can submit jobs to the default queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.acl_administer_queue</name>

<value>*</value>

<description>

The ACL of who can administer jobs on the default queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.acl_application_max_priority</name>

<value>*</value>

<description>

The ACL of who can submit applications with configured priority.

For e.g, [user={name} group={name} max_priority={priority} default_priority={priority}]

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.maximum-application-lifetime

</name>

<value>-1</value>

<description>

Maximum lifetime of an application which is submitted to a queue

in seconds. Any value less than or equal to zero will be considered as

disabled.

This will be a hard time limit for all applications in this

queue. If positive value is configured then any application submitted

to this queue will be killed after exceeds the configured lifetime.

User can also specify lifetime per application basis in

application submission context. But user lifetime will be

overridden if it exceeds queue maximum lifetime. It is point-in-time

configuration.

Note : Configuring too low value will result in killing application

sooner. This feature is applicable only for leaf queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.root.default.default-application-lifetime

</name>

<value>-1</value>

<description>

Default lifetime of an application which is submitted to a queue

in seconds. Any value less than or equal to zero will be considered as

disabled.

If the user has not submitted application with lifetime value then this

value will be taken. It is point-in-time configuration.

Note : Default lifetime can't exceed maximum lifetime. This feature is

applicable only for leaf queue.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.node-locality-delay</name>

<value>40</value>

<description>

Number of missed scheduling opportunities after which the CapacityScheduler

attempts to schedule rack-local containers.

When setting this parameter, the size of the cluster should be taken into account.

We use 40 as the default value, which is approximately the number of nodes in one rack.

Note, if this value is -1, the locality constraint in the container request

will be ignored, which disables the delay scheduling.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.rack-locality-additional-delay</name>

<value>-1</value>

<description>

Number of additional missed scheduling opportunities over the node-locality-delay

ones, after which the CapacityScheduler attempts to schedule off-switch containers,

instead of rack-local ones.

Example: with node-locality-delay=40 and rack-locality-delay=20, the scheduler will

attempt rack-local assignments after 40 missed opportunities, and off-switch assignments

after 40+20=60 missed opportunities.

When setting this parameter, the size of the cluster should be taken into account.

We use -1 as the default value, which disables this feature. In this case, the number

of missed opportunities for assigning off-switch containers is calculated based on

the number of containers and unique locations specified in the resource request,

as well as the size of the cluster.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.queue-mappings</name>

<value></value>

<description>

A list of mappings that will be used to assign jobs to queues

The syntax for this list is [u|g]:[name]:[queue_name][,next mapping]*

Typically this list will be used to map users to queues,

for example, u:%user:%user maps all users to queues with the same name

as the user.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.queue-mappings-override.enable</name>

<value>false</value>

<description>

If a queue mapping is present, will it override the value specified

by the user? This can be used by administrators to place jobs in queues

that are different than the one specified by the user.

The default is false.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.per-node-heartbeat.maximum-offswitch-assignments</name>

<value>1</value>

<description>

Controls the number of OFF_SWITCH assignments allowed

during a node's heartbeat. Increasing this value can improve

scheduling rate for OFF_SWITCH containers. Lower values reduce

"clumping" of applications on particular nodes. The default is 1.

Legal values are 1-MAX_INT. This config is refreshable.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.application.fail-fast</name>

<value>false</value>

<description>

Whether RM should fail during recovery if previous applications'

queue is no longer valid.

</description>

</property>

<property>

<name>yarn.scheduler.capacity.workflow-priority-mappings</name>

<value></value>

<description>

A list of mappings that will be used to override application priority.

The syntax for this list is

[workflowId]:[full_queue_name]:[priority][,next mapping]*

where an application submitted (or mapped to) queue "full_queue_name"

and workflowId "workflowId" (as specified in application submission

context) will be given priority "priority".

</description>

</property>

<property>

<name>yarn.scheduler.capacity.workflow-priority-mappings-override.enable</name>

<value>false</value>

<description>

If a priority mapping is present, will it override the value specified

by the user? This can be used by administrators to give applications a

priority that is different than the one specified by the user.

The default is false.

</description>

</property>

</configuration>

2. Distribute the configuration file (run on hadoop102)

xsync capacity-scheduler.xml

3. Restart YARN (important: run this on hadoop103)

cd /opt/module/hadoop

stop-yarn.sh

start-yarn.sh

4. Verify (run on hadoop102)

cd /opt/module/hive

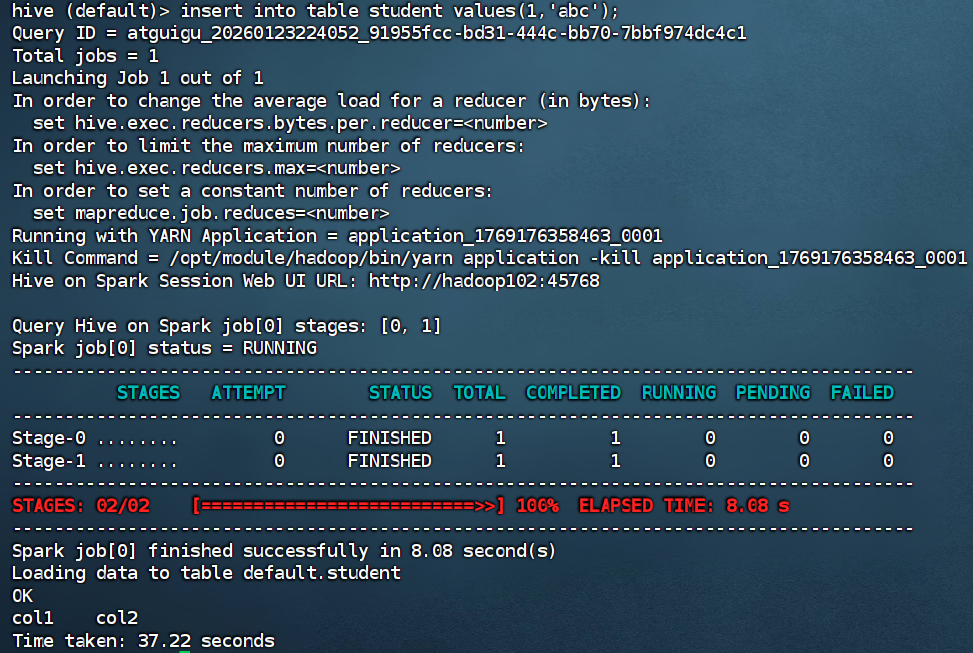

hive

Insert data (run in the Hive client on hadoop102)

insert into table student values(1,'abc');

As the screenshot shows, the insert now takes somewhat less time.
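To put a number on the difference instead of reading it off a screenshot, the wall-clock time of a command can be measured from the shell. The helper below is a minimal sketch; the name time_command is made up for illustration, and it only has one-second resolution.

```shell
# Run the given command, discard its output, and print the elapsed whole seconds.
# The command's exit status is ignored so that a failure still yields a timing.
time_command() {
  local start end
  start=$(date +%s)
  "$@" >/dev/null 2>&1 || true
  end=$(date +%s)
  echo $(( end - start ))
}
```

One possible use is `time_command hive -e "insert into table student values(1,'abc');"`, run once before and once after the scheduler change. Because `hive -e` starts a fresh session each time, the figure includes Hive and Spark startup, so it is only comparable with another measurement taken the same way.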