💖💖作者:计算机编程小央姐

💙💙个人简介:曾长期从事计算机专业培训教学,本人也热爱上课教学,语言擅长Java、微信小程序、Python、Golang、安卓Android等,开发项目包括大数据、深度学习、网站、小程序、安卓、算法。平常会做一些项目定制化开发、代码讲解、答辩教学、文档编写、也懂一些降重方面的技巧。平常喜欢分享一些自己开发中遇到的问题的解决办法,也喜欢交流技术,大家有技术代码这一块的问题可以问我!

💛💛想说的话:感谢大家的关注与支持! 💜💜

💕💕文末获取源码

目录

基于Hadoop+Spark的全球经济指标分析与可视化系统实践-系统功能介绍

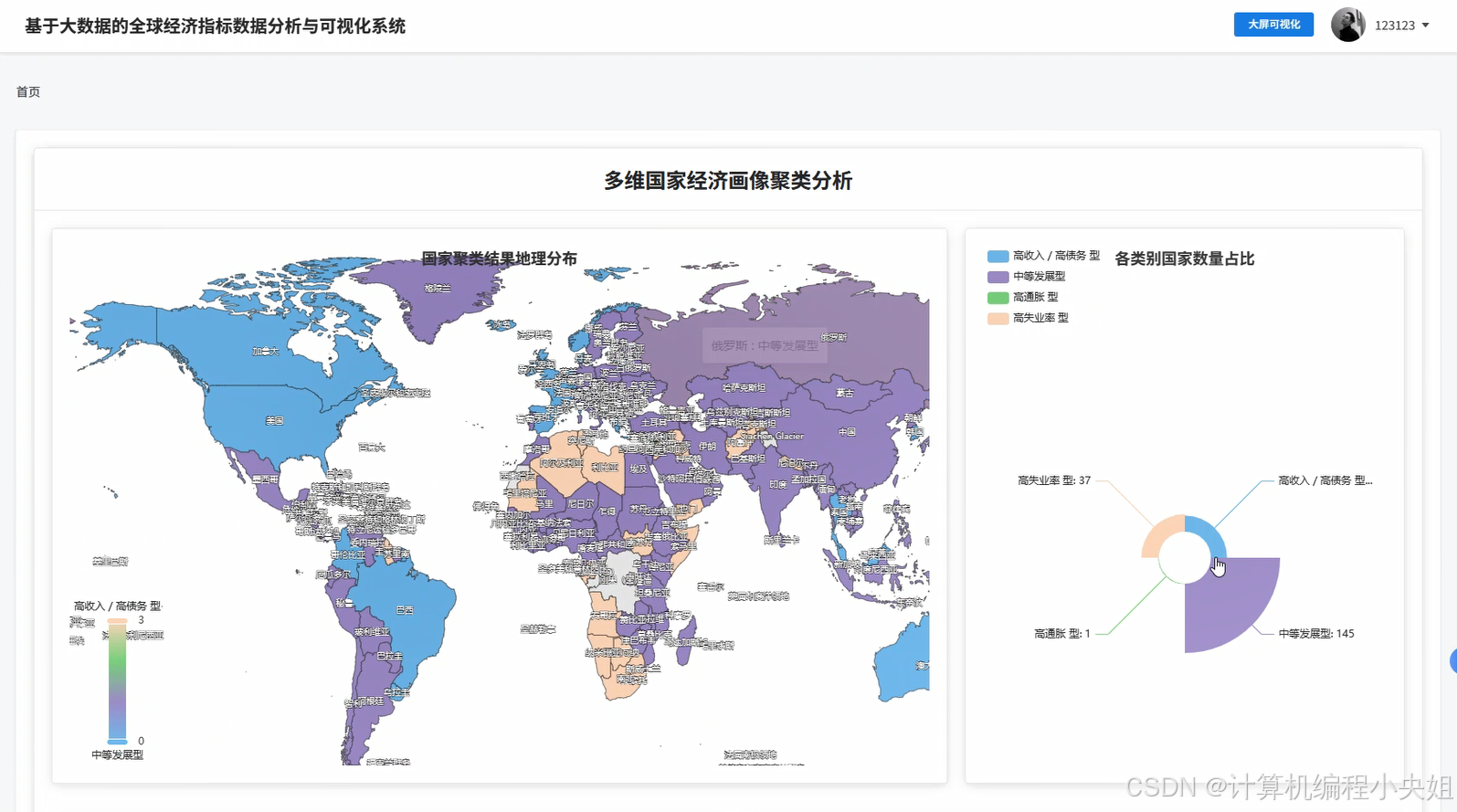

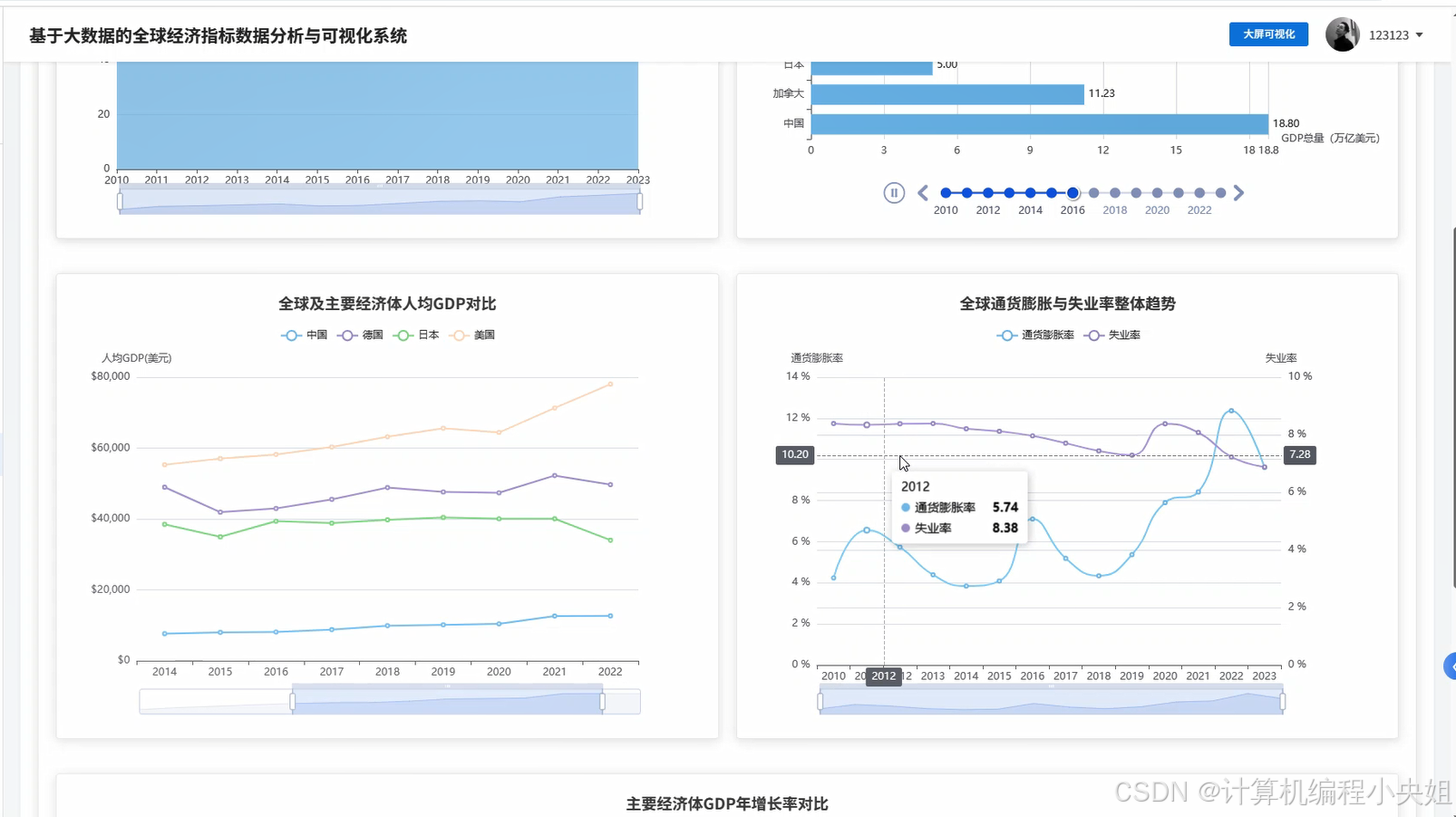

基于Hadoop+Spark的全球经济指标分析与可视化系统是一套专门针对大规模经济数据处理与分析的企业级解决方案,该系统充分利用Hadoop分布式文件系统的海量数据存储能力和Spark内存计算框架的高性能处理优势,实现对全球经济指标数据的深度挖掘和智能分析。系统采用Python作为主要开发语言,结合Django Web框架构建稳定的后端服务架构,通过Spark SQL和Pandas、NumPy等数据科学库实现复杂的经济数据计算和统计分析功能。前端采用Vue.js框架配合ElementUI组件库和Echarts可视化引擎,为用户提供直观友好的数据展示界面。系统核心功能包括全球GDP总量趋势分析、主要经济体对比分析、通胀失业率关联分析、政府财政健康度评估以及基于机器学习的国家经济画像聚类等多个维度的深度分析模块,通过HDFS存储世界银行等权威机构的海量经济数据,运用Spark的分布式计算能力实现TB级数据的快速处理和实时分析,最终通过MySQL数据库管理分析结果,为政策制定者、学术研究人员和金融分析师提供科学可靠的数据支撑和决策参考。

基于Hadoop+Spark的全球经济指标分析与可视化系统实践-系统技术介绍

大数据框架:Hadoop+Spark(本次没用Hive,支持定制)

开发语言:Python+Java(两个版本都支持)

后端框架:Django+Spring Boot(Spring+SpringMVC+Mybatis)(两个版本都支持)

前端:Vue+ElementUI+Echarts+HTML+CSS+JavaScript+jQuery

详细技术点:Hadoop、HDFS、Spark、Spark SQL、Pandas、NumPy

数据库:MySQL

基于Hadoop+Spark的全球经济指标分析与可视化系统实践-系统背景意义

随着全球化进程的不断深化,各国经济联系日益紧密,经济数据的复杂性和关联性也呈指数级增长,传统的数据分析方法已经难以应对海量、多维、实时的经济指标数据处理需求。世界银行、国际货币基金组织等权威机构每年产生的全球经济数据量达到PB级别,涵盖200多个国家和地区的GDP、通胀率、失业率、政府债务等数百项指标,这些数据具有时间跨度长、指标维度多、数据格式复杂等特点。同时,金融市场的快速变化要求对经济数据进行实时或准实时的分析处理,以便及时发现经济趋势变化和潜在风险。在这种背景下,传统基于关系型数据库和单机计算的分析系统已经无法满足大规模经济数据的存储、计算和分析需求,迫切需要引入大数据技术来解决数据处理瓶颈问题,提升分析效率和准确性。

本课题的研究具有重要的理论价值和实践意义,虽然作为毕业设计项目,规模和复杂度相对有限,但仍能在多个方面产生积极作用。从技术角度来看,通过将Hadoop和Spark等大数据技术应用于经济数据分析领域,可以验证大数据框架在处理结构化经济指标数据方面的有效性和可行性,为后续更大规模的经济数据分析系统建设提供技术参考。从应用角度来说,系统能够帮助用户更直观地理解全球经济发展趋势和各国经济状况差异,通过可视化展示使复杂的经济数据变得易于理解和分析。对于学术研究而言,系统提供的多维度经济指标分析功能可以为经济学相关研究提供数据支撑,虽然分析深度可能不如专业研究机构,但仍具有一定的参考价值。同时,本项目的实施过程也是对大数据技术栈的完整实践,有助于加深对分布式计算、数据处理流水线设计等关键技术的理解和掌握,为今后在相关技术领域的深入发展奠定基础。

基于Hadoop+Spark的全球经济指标分析与可视化系统实践-系统演示视频

企业级大数据技术栈:基于Hadoop+Spark的全球经济指标分析与可视化系统实践

基于Hadoop+Spark的全球经济指标分析与可视化系统实践-系统演示图片

基于Hadoop+Spark的全球经济指标分析与可视化系统实践-系统部分代码

python

from pyspark.sql import SparkSession

from pyspark.sql.functions import *

from pyspark.ml.clustering import KMeans

from pyspark.ml.feature import VectorAssembler

import pandas as pd

import numpy as np

from django.http import JsonResponse

spark = SparkSession.builder.appName("GlobalEconomicAnalysis").config("spark.sql.adaptive.enabled","true").getOrCreate()

def global_gdp_trend_analysis(request):

hdfs_path = "hdfs://localhost:9000/economic_data/world_bank_data.csv"

df = spark.read.option("header", "true").option("inferSchema", "true").csv(hdfs_path)

gdp_data = df.select("year", "GDP (Current USD)").filter(col("GDP (Current USD)").isNotNull())

yearly_gdp = gdp_data.groupBy("year").agg(sum("GDP (Current USD)").alias("total_gdp"))

yearly_gdp_sorted = yearly_gdp.orderBy("year")

gdp_growth = yearly_gdp_sorted.withColumn("prev_gdp", lag("total_gdp").over(Window.orderBy("year")))

gdp_growth = gdp_growth.withColumn("growth_rate", ((col("total_gdp") - col("prev_gdp")) / col("prev_gdp") * 100))

gdp_trend_stats = gdp_growth.agg(

avg("growth_rate").alias("avg_growth"),

stddev("growth_rate").alias("growth_volatility"),

max("total_gdp").alias("peak_gdp"),

min("total_gdp").alias("lowest_gdp")

).collect()[0]

crisis_years = gdp_growth.filter(col("growth_rate") < -2.0).select("year", "growth_rate")

recovery_years = gdp_growth.filter(col("growth_rate") > 4.0).select("year", "growth_rate")

trend_analysis = {

"yearly_data": [{"year": row.year, "gdp": float(row.total_gdp), "growth": float(row.growth_rate) if row.growth_rate else 0}

for row in gdp_growth.collect()],

"statistics": {

"average_growth": float(gdp_trend_stats.avg_growth) if gdp_trend_stats.avg_growth else 0,

"volatility": float(gdp_trend_stats.growth_volatility) if gdp_trend_stats.growth_volatility else 0,

"peak_gdp": float(gdp_trend_stats.peak_gdp),

"lowest_gdp": float(gdp_trend_stats.lowest_gdp)

},

"crisis_periods": [{"year": row.year, "decline": float(row.growth_rate)} for row in crisis_years.collect()],

"recovery_periods": [{"year": row.year, "growth": float(row.growth_rate)} for row in recovery_years.collect()]

}

return JsonResponse(trend_analysis)

def economic_clustering_analysis(request):

hdfs_path = "hdfs://localhost:9000/economic_data/world_bank_data.csv"

df = spark.read.option("header", "true").option("inferSchema", "true").csv(hdfs_path)

latest_year = df.agg(max("year")).collect()[0][0]

clustering_data = df.filter(col("year") == latest_year).select(

"country_name",

"GDP per Capita (Current USD)",

"Inflation (CPI %)",

"Unemployment Rate (%)",

"Public Debt (% of GDP)",

"GDP Growth (% Annual)"

).filter(

col("GDP per Capita (Current USD)").isNotNull() &

col("Inflation (CPI %)").isNotNull() &

col("Unemployment Rate (%)").isNotNull() &

col("Public Debt (% of GDP)").isNotNull() &

col("GDP Growth (% Annual)").isNotNull()

)

feature_cols = ["GDP per Capita (Current USD)", "Inflation (CPI %)", "Unemployment Rate (%)", "Public Debt (% of GDP)", "GDP Growth (% Annual)"]

assembler = VectorAssembler(inputCols=feature_cols, outputCol="features")

feature_df = assembler.transform(clustering_data)

kmeans = KMeans(featuresCol="features", predictionCol="cluster", k=4, seed=42)

model = kmeans.fit(feature_df)

clustered_df = model.transform(feature_df)

cluster_stats = clustered_df.groupBy("cluster").agg(

count("country_name").alias("country_count"),

avg("GDP per Capita (Current USD)").alias("avg_gdp_per_capita"),

avg("Inflation (CPI %)").alias("avg_inflation"),

avg("Unemployment Rate (%)").alias("avg_unemployment"),

avg("Public Debt (% of GDP)").alias("avg_debt"),

avg("GDP Growth (% Annual)").alias("avg_growth")

)

clustering_results = {

"country_clusters": [

{

"country": row.country_name,

"cluster": int(row.cluster),

"gdp_per_capita": float(row["GDP per Capita (Current USD)"]),

"inflation": float(row["Inflation (CPI %)"]),

"unemployment": float(row["Unemployment Rate (%)"]),

"debt_ratio": float(row["Public Debt (% of GDP)"]),

"growth_rate": float(row["GDP Growth (% Annual)"])

}

for row in clustered_df.collect()

],

"cluster_profiles": [

{

"cluster_id": int(row.cluster),

"country_count": int(row.country_count),

"avg_gdp_per_capita": float(row.avg_gdp_per_capita),

"avg_inflation": float(row.avg_inflation),

"avg_unemployment": float(row.avg_unemployment),

"avg_debt": float(row.avg_debt),

"avg_growth": float(row.avg_growth)

}

for row in cluster_stats.collect()

]

}

return JsonResponse(clustering_results)

def real_time_visualization_processing(request):

hdfs_path = "hdfs://localhost:9000/economic_data/world_bank_data.csv"

df = spark.read.option("header", "true").option("inferSchema", "true").csv(hdfs_path)

target_countries = ["United States", "China", "Japan", "Germany", "United Kingdom", "France", "India", "Italy", "Brazil", "Canada"]

major_economies = df.filter(col("country_name").isin(target_countries))

gdp_comparison = major_economies.groupBy("country_name").agg(

avg("GDP (Current USD)").alias("avg_gdp"),

avg("GDP per Capita (Current USD)").alias("avg_gdp_per_capita"),

avg("GDP Growth (% Annual)").alias("avg_growth"),

max("GDP (Current USD)").alias("max_gdp"),

min("GDP (Current USD)").alias("min_gdp")

).orderBy(desc("avg_gdp"))

inflation_unemployment_corr = major_economies.select("country_name", "year", "Inflation (CPI %)", "Unemployment Rate (%)")

correlation_data = inflation_unemployment_corr.filter(

col("Inflation (CPI %)").isNotNull() & col("Unemployment Rate (%)").isNotNull()

)

debt_risk_analysis = major_economies.select("country_name", "year", "Public Debt (% of GDP)", "GDP Growth (% Annual)")

high_debt_countries = debt_risk_analysis.filter(col("Public Debt (% of GDP)") > 80.0)

debt_growth_impact = high_debt_countries.groupBy("country_name").agg(

avg("Public Debt (% of GDP)").alias("avg_debt_ratio"),

avg("GDP Growth (% Annual)").alias("avg_growth_with_high_debt"),

count("year").alias("high_debt_years")

)

time_series_data = major_economies.select("country_name", "year", "GDP (Current USD)", "GDP Growth (% Annual)", "Inflation (CPI %)").orderBy("country_name", "year")

visualization_data = {

"gdp_rankings": [

{

"country": row.country_name,

"avg_gdp": float(row.avg_gdp) if row.avg_gdp else 0,

"avg_gdp_per_capita": float(row.avg_gdp_per_capita) if row.avg_gdp_per_capita else 0,

"avg_growth": float(row.avg_growth) if row.avg_growth else 0,

"gdp_range": {"max": float(row.max_gdp) if row.max_gdp else 0, "min": float(row.min_gdp) if row.min_gdp else 0}

}

for row in gdp_comparison.collect()

],

"correlation_analysis": [

{

"country": row.country_name,

"year": int(row.year),

"inflation": float(row["Inflation (CPI %)"]) if row["Inflation (CPI %)"] else 0,

"unemployment": float(row["Unemployment Rate (%)"]) if row["Unemployment Rate (%)"] else 0

}

for row in correlation_data.collect()

],

"debt_risk_assessment": [

{

"country": row.country_name,

"avg_debt_ratio": float(row.avg_debt_ratio),

"growth_impact": float(row.avg_growth_with_high_debt) if row.avg_growth_with_high_debt else 0,

"risk_years": int(row.high_debt_years)

}

for row in debt_growth_impact.collect()

],

"time_series": [

{

"country": row.country_name,

"year": int(row.year),

"gdp": float(row["GDP (Current USD)"]) if row["GDP (Current USD)"] else 0,

"growth": float(row["GDP Growth (% Annual)"]) if row["GDP Growth (% Annual)"] else 0,

"inflation": float(row["Inflation (CPI %)"]) if row["Inflation (CPI %)"] else 0

}

for row in time_series_data.collect()

]

}

return JsonResponse(visualization_data)基于Hadoop+Spark的全球经济指标分析与可视化系统实践-结语

💟💟如果大家有任何疑虑,欢迎在下方位置详细交流。