目录

[1 数据增强(Data Augmentation)](#1 数据增强(Data Augmentation))

[1.1 概念](#1.1 概念)

[1.2 增强方法](#1.2 增强方法)

[1.2.1 翻转](#1.2.1 翻转)

[1.2.2 切割](#1.2.2 切割)

[1.2.3 颜色](#1.2.3 颜色)

[1.2.4 其他](#1.2.4 其他)

[1.2.5 代码](#1.2.5 代码)

[2 微调](#2 微调)

[2.1 定义](#2.1 定义)

[2.2 常用方式](#2.2 常用方式)

[2.3 代码-热狗识别](#2.3 代码-热狗识别)

[3 锚框](#3 锚框)

[3.1 定义](#3.1 定义)

[3.2 锚框算法](#3.2 锚框算法)

[3.2.1 交并比(IoU)](#3.2.1 交并比(IoU))

[3.2.2 非极大值抑制(NMS)](#3.2.2 非极大值抑制(NMS))

[3.3 代码](#3.3 代码)

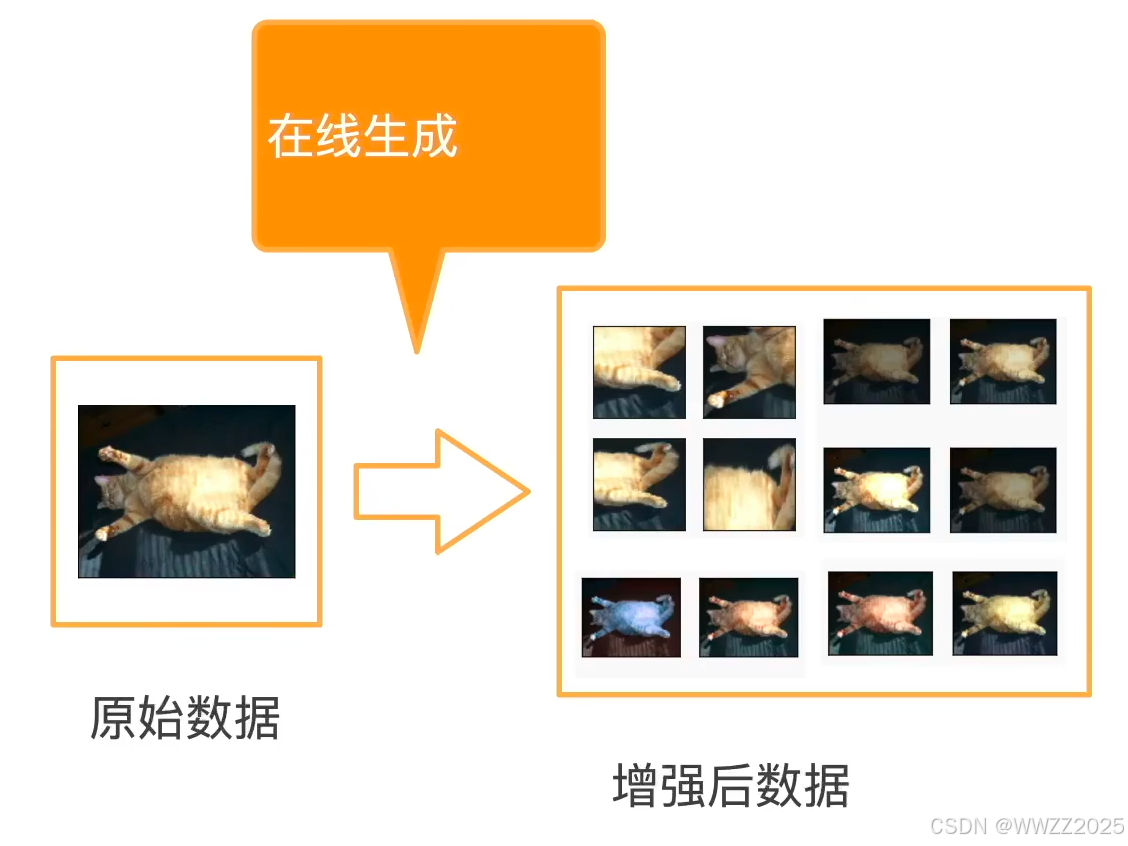

1 数据增强(Data Augmentation)

1.1 概念

增加已有数据集,使其有更多多样性(如语言里面加不同背景噪音、改变图片颜色和形状)。

1.2 增强方法

1.2.1 翻转

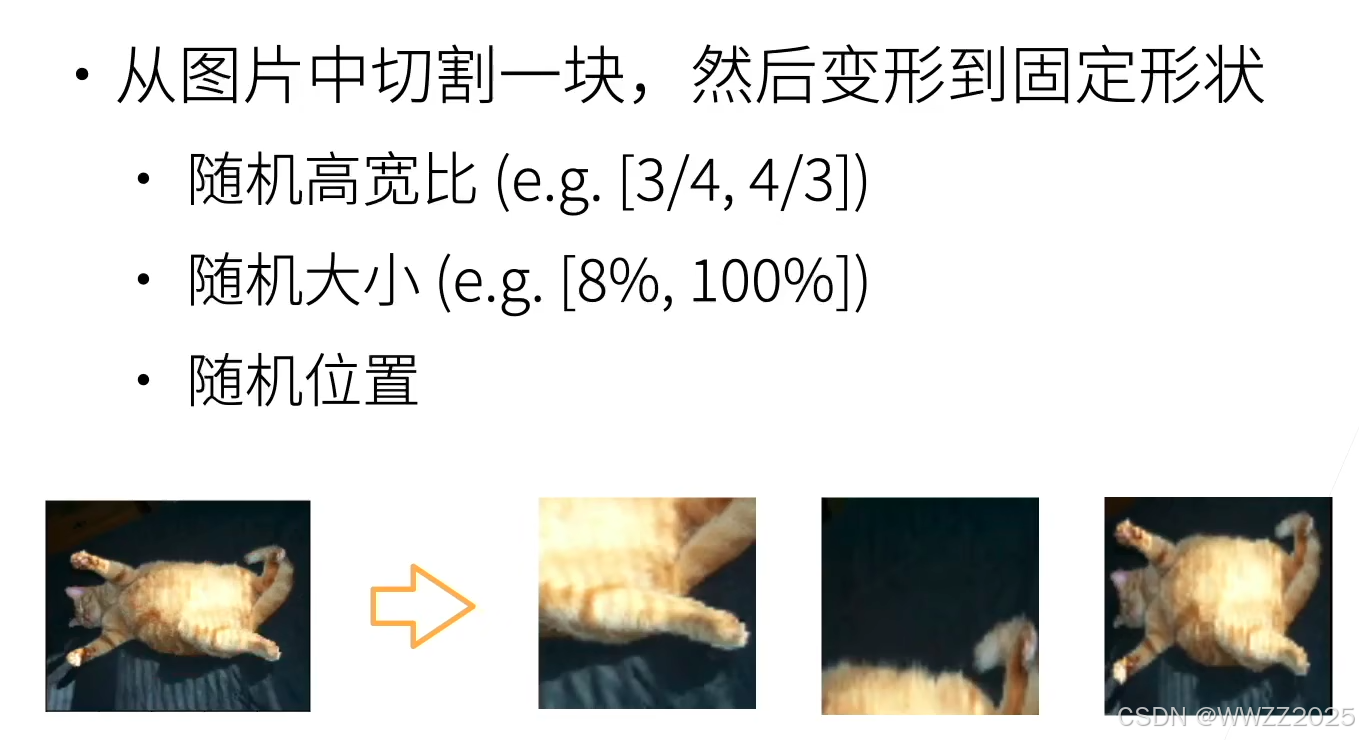

1.2.2 切割

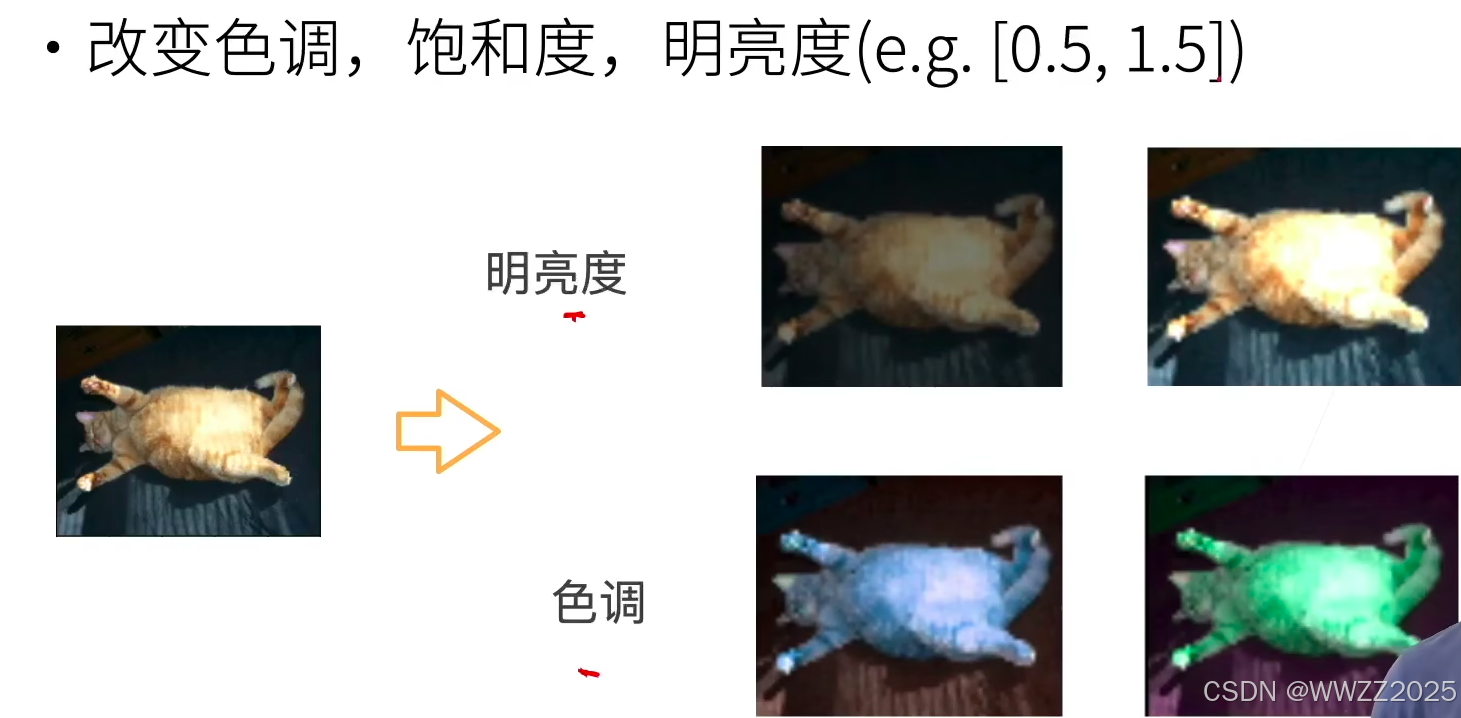

1.2.3 颜色

1.2.4 其他

1.2.5 代码

PS.CIFAR10数据集网页无法打开,可以更换其他的去做复现。

(1)库

%matplotlib inline import torch import torchvision from torch import nn from d2l import torch as d2l(2)常用图像增强方法

d2l.set_figsize() img = d2l.Image.open('../img/cat1.jpg') d2l.plt.imshow(img); def apply(img, aug, num_rows=2, num_cols=4, scale=1.5): Y = [aug(img) for _ in range(num_rows * num_cols)] d2l.show_images(Y, num_rows, num_cols, scale=scale)(3)翻转和旋转

apply(img, torchvision.transforms.RandomHorizontalFlip()) apply(img, torchvision.transforms.RandomVerticalFlip()) shape_aug = torchvision.transforms.RandomResizedCrop( (200, 200), scale=(0.1, 1), ratio=(0.5, 2)) apply(img, shape_aug)(4)改变颜色

apply(img, torchvision.transforms.ColorJitter( brightness=0.5, contrast=0, saturation=0, hue=0)) apply(img, torchvision.transforms.ColorJitter( brightness=0, contrast=0, saturation=0, hue=0.5)) color_aug = torchvision.transforms.ColorJitter( brightness=0.5, contrast=0.5, saturation=0.5, hue=0.5) apply(img, color_aug)(5)结合多种图片增强方法

augs = torchvision.transforms.Compose([ torchvision.transforms.RandomHorizontalFlip(), color_aug, shape_aug]) apply(img, augs)(6)训练

all_images = torchvision.datasets.CIFAR10(train=True, root="../data", download=True) d2l.show_images([all_images[i][0] for i in range(32)], 4, 8, scale=0.8); train_augs = torchvision.transforms.Compose([ torchvision.transforms.RandomHorizontalFlip(), torchvision.transforms.ToTensor()]) test_augs = torchvision.transforms.Compose([ torchvision.transforms.ToTensor()]) def load_cifar10(is_train, augs, batch_size): dataset = torchvision.datasets.CIFAR10(root="../data", train=is_train, transform=augs, download=True) dataloader = torch.utils.data.DataLoader(dataset, batch_size=batch_size, shuffle=is_train, num_workers=d2l.get_dataloader_workers()) return dataloader

2 微调

2.1 定义

在一个已经训练好的模型(预训练模型)基础上,再用你的目标任务的数据继续训练,使模型适应该任务。

PS.

(1)已经训练好的模型使用数据集不需与目标任务数据集一致,但相近的话微调效果会更好;

(2)直接在目标任务上面训练容量太小,模型学不会复杂特征;

(3)有很多开源预训练模型,详情可在github上面搜索。

2.2 常用方式

(1)冻结前面层,只训练后面层(最常见)

前面层学的是通用特征,不需要动;

只训练高层,让模型适应你的任务。

(2)训练所有层(完全微调)

适用于任务与预训练任务非常不一样;有足够数据。

(3) 加几层新的结构,只训练新增部分

常见于迁移学习中的 "feature extractor + classifier" 方式。

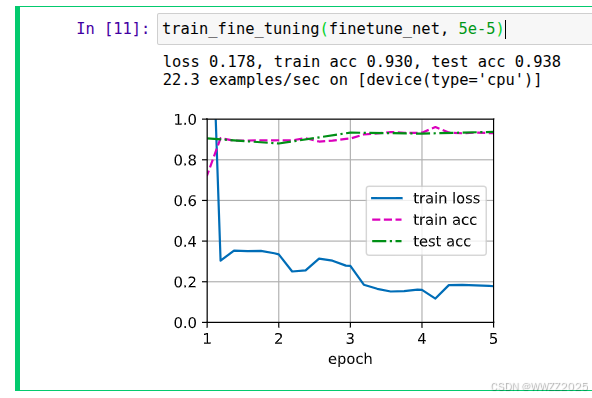

2.3 代码-热狗识别

(1)库

python%matplotlib inline import os import torch import torchvision from torch import nn from d2l import torch as d2l(2)获取数据集

python#@save d2l.DATA_HUB['hotdog'] = (d2l.DATA_URL + 'hotdog.zip', 'fba480ffa8aa7e0febbb511d181409f899b9baa5') data_dir = d2l.download_extract('hotdog') train_imgs = torchvision.datasets.ImageFolder(os.path.join(data_dir, 'train')) test_imgs = torchvision.datasets.ImageFolder(os.path.join(data_dir, 'test')) hotdogs = [train_imgs[i][0] for i in range(8)] not_hotdogs = [train_imgs[-i - 1][0] for i in range(8)] d2l.show_images(hotdogs + not_hotdogs, 2, 8, scale=1.4);(3)数据增强

python# 使用RGB通道的均值和标准差,以标准化每个通道 normalize = torchvision.transforms.Normalize( [0.485, 0.456, 0.406], [0.229, 0.224, 0.225]) train_augs = torchvision.transforms.Compose([ torchvision.transforms.RandomResizedCrop(224), torchvision.transforms.RandomHorizontalFlip(), torchvision.transforms.ToTensor(), normalize]) test_augs = torchvision.transforms.Compose([ torchvision.transforms.Resize([256, 256]), torchvision.transforms.CenterCrop(224), torchvision.transforms.ToTensor(), normalize])(4)定义和初始化模型

pythonpretrained_net = torchvision.models.resnet18(pretrained=True) pretrained_net.fc finetune_net = torchvision.models.resnet18(pretrained=True) finetune_net.fc = nn.Linear(finetune_net.fc.in_features, 2) nn.init.xavier_uniform_(finetune_net.fc.weight);(5)微调模型

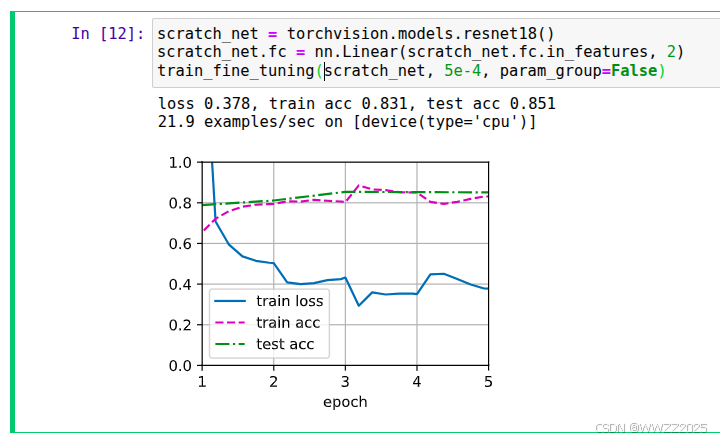

python# 如果param_group=True,输出层中的模型参数将使用十倍的学习率 def train_fine_tuning(net, learning_rate, batch_size=128, num_epochs=5, param_group=True): train_iter = torch.utils.data.DataLoader(torchvision.datasets.ImageFolder( os.path.join(data_dir, 'train'), transform=train_augs), batch_size=batch_size, shuffle=True) test_iter = torch.utils.data.DataLoader(torchvision.datasets.ImageFolder( os.path.join(data_dir, 'test'), transform=test_augs), batch_size=batch_size) devices = d2l.try_all_gpus() loss = nn.CrossEntropyLoss(reduction="none") if param_group: params_1x = [param for name, param in net.named_parameters() if name not in ["fc.weight", "fc.bias"]] trainer = torch.optim.SGD([{'params': params_1x}, {'params': net.fc.parameters(), 'lr': learning_rate * 10}], lr=learning_rate, weight_decay=0.001) else: trainer = torch.optim.SGD(net.parameters(), lr=learning_rate, weight_decay=0.001) d2l.train_ch13(net, train_iter, test_iter, loss, trainer, num_epochs, devices)

pythontrain_fine_tuning(finetune_net, 5e-5)

pythonscratch_net = torchvision.models.resnet18() scratch_net.fc = nn.Linear(scratch_net.fc.in_features, 2) train_fine_tuning(scratch_net, 5e-4, param_group=False)

3 锚框

3.1 定义

以每个像素为中心,生成多个缩放比和宽高比(aspect ratio)不同的边界框。

3.2 锚框算法

3.2.1 交并比(IoU)

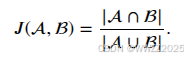

如果已知目标真实的边界框,则可以用交并比(IoU)计算两个框之间的相似度,公式为:

原理是:

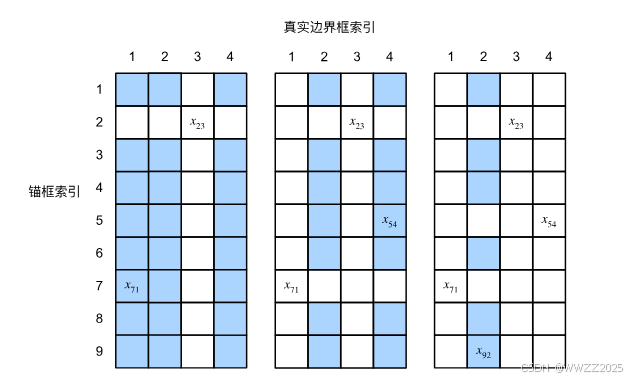

假设有4个标定好的框(横轴),算法生成了9个锚框索引(竖轴),计算IoU,取最大值如

,此时表示锚框2做为真实框3的边缘框,然后第2行第3列不再做为筛选范围,其他区域筛选第二大值,以此类推。

3.2.2 非极大值抑制(NMS)

作用是从一堆重叠的候选框中选出最好的框,去掉重复的框。工作流程为:选择置信度最高的框作为最终框;计算这个框与其他框的IoU;删除所有与置信度最高的框重叠太大的框;从剩下的框中,再选一个最高置信度的重复上述动作;只保留每个物体最合适的框。

3.3 代码

(1)库

python%matplotlib inline import torch from d2l import torch as d2l torch.set_printoptions(2) # 精简输出精度(2)生成多个锚框

python#@save def multibox_prior(data, sizes, ratios): """生成以每个像素为中心具有不同形状的锚框""" in_height, in_width = data.shape[-2:] device, num_sizes, num_ratios = data.device, len(sizes), len(ratios) boxes_per_pixel = (num_sizes + num_ratios - 1) size_tensor = torch.tensor(sizes, device=device) ratio_tensor = torch.tensor(ratios, device=device) # 为了将锚点移动到像素的中心,需要设置偏移量。 # 因为一个像素的高为1且宽为1,我们选择偏移我们的中心0.5 offset_h, offset_w = 0.5, 0.5 steps_h = 1.0 / in_height # 在y轴上缩放步长 steps_w = 1.0 / in_width # 在x轴上缩放步长 # 生成锚框的所有中心点 center_h = (torch.arange(in_height, device=device) + offset_h) * steps_h center_w = (torch.arange(in_width, device=device) + offset_w) * steps_w shift_y, shift_x = torch.meshgrid(center_h, center_w, indexing='ij') shift_y, shift_x = shift_y.reshape(-1), shift_x.reshape(-1) # 生成"boxes_per_pixel"个高和宽, # 之后用于创建锚框的四角坐标(xmin,xmax,ymin,ymax) w = torch.cat((size_tensor * torch.sqrt(ratio_tensor[0]), sizes[0] * torch.sqrt(ratio_tensor[1:])))\ * in_height / in_width # 处理矩形输入 h = torch.cat((size_tensor / torch.sqrt(ratio_tensor[0]), sizes[0] / torch.sqrt(ratio_tensor[1:]))) # 除以2来获得半高和半宽 anchor_manipulations = torch.stack((-w, -h, w, h)).T.repeat( in_height * in_width, 1) / 2 # 每个中心点都将有"boxes_per_pixel"个锚框, # 所以生成含所有锚框中心的网格,重复了"boxes_per_pixel"次 out_grid = torch.stack([shift_x, shift_y, shift_x, shift_y], dim=1).repeat_interleave(boxes_per_pixel, dim=0) output = out_grid + anchor_manipulations return output.unsqueeze(0)

pythonimg = d2l.plt.imread('../img/catdog.jpg') h, w = img.shape[:2] print(h, w) X = torch.rand(size=(1, 3, h, w)) Y = multibox_prior(X, sizes=[0.75, 0.5, 0.25], ratios=[1, 2, 0.5]) Y.shape

pythonboxes = Y.reshape(h, w, 5, 4) boxes[250, 250, 0, :] #@save def show_bboxes(axes, bboxes, labels=None, colors=None): """显示所有边界框""" def _make_list(obj, default_values=None): if obj is None: obj = default_values elif not isinstance(obj, (list, tuple)): obj = [obj] return obj labels = _make_list(labels) colors = _make_list(colors, ['b', 'g', 'r', 'm', 'c']) for i, bbox in enumerate(bboxes): color = colors[i % len(colors)] rect = d2l.bbox_to_rect(bbox.detach().numpy(), color) axes.add_patch(rect) if labels and len(labels) > i: text_color = 'k' if color == 'w' else 'w' axes.text(rect.xy[0], rect.xy[1], labels[i], va='center', ha='center', fontsize=9, color=text_color, bbox=dict(facecolor=color, lw=0)) d2l.set_figsize() bbox_scale = torch.tensor((w, h, w, h)) fig = d2l.plt.imshow(img) show_bboxes(fig.axes, boxes[250, 250, :, :] * bbox_scale, ['s=0.75, r=1', 's=0.5, r=1', 's=0.25, r=1', 's=0.75, r=2', 's=0.75, r=0.5'])(3)交并比

python#@save def box_iou(boxes1, boxes2): """计算两个锚框或边界框列表中成对的交并比""" box_area = lambda boxes: ((boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])) # boxes1,boxes2,areas1,areas2的形状: # boxes1:(boxes1的数量,4), # boxes2:(boxes2的数量,4), # areas1:(boxes1的数量,), # areas2:(boxes2的数量,) areas1 = box_area(boxes1) areas2 = box_area(boxes2) # inter_upperlefts,inter_lowerrights,inters的形状: # (boxes1的数量,boxes2的数量,2) inter_upperlefts = torch.max(boxes1[:, None, :2], boxes2[:, :2]) inter_lowerrights = torch.min(boxes1[:, None, 2:], boxes2[:, 2:]) inters = (inter_lowerrights - inter_upperlefts).clamp(min=0) # inter_areasandunion_areas的形状:(boxes1的数量,boxes2的数量) inter_areas = inters[:, :, 0] * inters[:, :, 1] union_areas = areas1[:, None] + areas2 - inter_areas return inter_areas / union_areas(4)训练数据集标注锚框

python#@save def assign_anchor_to_bbox(ground_truth, anchors, device, iou_threshold=0.5): """将最接近的真实边界框分配给锚框""" num_anchors, num_gt_boxes = anchors.shape[0], ground_truth.shape[0] # 位于第i行和第j列的元素x_ij是锚框i和真实边界框j的IoU jaccard = box_iou(anchors, ground_truth) # 对于每个锚框,分配的真实边界框的张量 anchors_bbox_map = torch.full((num_anchors,), -1, dtype=torch.long, device=device) # 根据阈值,决定是否分配真实边界框 max_ious, indices = torch.max(jaccard, dim=1) anc_i = torch.nonzero(max_ious >= iou_threshold).reshape(-1) box_j = indices[max_ious >= iou_threshold] anchors_bbox_map[anc_i] = box_j col_discard = torch.full((num_anchors,), -1) row_discard = torch.full((num_gt_boxes,), -1) for _ in range(num_gt_boxes): max_idx = torch.argmax(jaccard) box_idx = (max_idx % num_gt_boxes).long() anc_idx = (max_idx / num_gt_boxes).long() anchors_bbox_map[anc_idx] = box_idx jaccard[:, box_idx] = col_discard jaccard[anc_idx, :] = row_discard return anchors_bbox_map(5)标记类别和偏移量

python#@save def offset_boxes(anchors, assigned_bb, eps=1e-6): """对锚框偏移量的转换""" c_anc = d2l.box_corner_to_center(anchors) c_assigned_bb = d2l.box_corner_to_center(assigned_bb) offset_xy = 10 * (c_assigned_bb[:, :2] - c_anc[:, :2]) / c_anc[:, 2:] offset_wh = 5 * torch.log(eps + c_assigned_bb[:, 2:] / c_anc[:, 2:]) offset = torch.cat([offset_xy, offset_wh], axis=1) return offset #@save def multibox_target(anchors, labels): """使用真实边界框标记锚框""" batch_size, anchors = labels.shape[0], anchors.squeeze(0) batch_offset, batch_mask, batch_class_labels = [], [], [] device, num_anchors = anchors.device, anchors.shape[0] for i in range(batch_size): label = labels[i, :, :] anchors_bbox_map = assign_anchor_to_bbox( label[:, 1:], anchors, device) bbox_mask = ((anchors_bbox_map >= 0).float().unsqueeze(-1)).repeat( 1, 4) # 将类标签和分配的边界框坐标初始化为零 class_labels = torch.zeros(num_anchors, dtype=torch.long, device=device) assigned_bb = torch.zeros((num_anchors, 4), dtype=torch.float32, device=device) # 使用真实边界框来标记锚框的类别。 # 如果一个锚框没有被分配,标记其为背景(值为零) indices_true = torch.nonzero(anchors_bbox_map >= 0) bb_idx = anchors_bbox_map[indices_true] class_labels[indices_true] = label[bb_idx, 0].long() + 1 assigned_bb[indices_true] = label[bb_idx, 1:] # 偏移量转换 offset = offset_boxes(anchors, assigned_bb) * bbox_mask batch_offset.append(offset.reshape(-1)) batch_mask.append(bbox_mask.reshape(-1)) batch_class_labels.append(class_labels) bbox_offset = torch.stack(batch_offset) bbox_mask = torch.stack(batch_mask) class_labels = torch.stack(batch_class_labels) return (bbox_offset, bbox_mask, class_labels)(5)NMS

python#@save def offset_inverse(anchors, offset_preds): """根据带有预测偏移量的锚框来预测边界框""" anc = d2l.box_corner_to_center(anchors) pred_bbox_xy = (offset_preds[:, :2] * anc[:, 2:] / 10) + anc[:, :2] pred_bbox_wh = torch.exp(offset_preds[:, 2:] / 5) * anc[:, 2:] pred_bbox = torch.cat((pred_bbox_xy, pred_bbox_wh), axis=1) predicted_bbox = d2l.box_center_to_corner(pred_bbox) return predicted_bbox

python#@save def multibox_detection(cls_probs, offset_preds, anchors, nms_threshold=0.5, pos_threshold=0.009999999): """使用非极大值抑制来预测边界框""" device, batch_size = cls_probs.device, cls_probs.shape[0] anchors = anchors.squeeze(0) num_classes, num_anchors = cls_probs.shape[1], cls_probs.shape[2] out = [] for i in range(batch_size): cls_prob, offset_pred = cls_probs[i], offset_preds[i].reshape(-1, 4) conf, class_id = torch.max(cls_prob[1:], 0) predicted_bb = offset_inverse(anchors, offset_pred) keep = nms(predicted_bb, conf, nms_threshold) # 找到所有的non_keep索引,并将类设置为背景 all_idx = torch.arange(num_anchors, dtype=torch.long, device=device) combined = torch.cat((keep, all_idx)) uniques, counts = combined.unique(return_counts=True) non_keep = uniques[counts == 1] all_id_sorted = torch.cat((keep, non_keep)) class_id[non_keep] = -1 class_id = class_id[all_id_sorted] conf, predicted_bb = conf[all_id_sorted], predicted_bb[all_id_sorted] # pos_threshold是一个用于非背景预测的阈值 below_min_idx = (conf < pos_threshold) class_id[below_min_idx] = -1 conf[below_min_idx] = 1 - conf[below_min_idx] pred_info = torch.cat((class_id.unsqueeze(1), conf.unsqueeze(1), predicted_bb), dim=1) out.append(pred_info) return torch.stack(out)

pythonanchors = torch.tensor([[0.1, 0.08, 0.52, 0.92], [0.08, 0.2, 0.56, 0.95], [0.15, 0.3, 0.62, 0.91], [0.55, 0.2, 0.9, 0.88]]) offset_preds = torch.tensor([0] * anchors.numel()) cls_probs = torch.tensor([[0] * 4, # 背景的预测概率 [0.9, 0.8, 0.7, 0.1], # 狗的预测概率 [0.1, 0.2, 0.3, 0.9]]) # 猫的预测概率 fig = d2l.plt.imshow(img) show_bboxes(fig.axes, anchors * bbox_scale, ['dog=0.9', 'dog=0.8', 'dog=0.7', 'cat=0.9']) output = multibox_detection(cls_probs.unsqueeze(dim=0), offset_preds.unsqueeze(dim=0), anchors.unsqueeze(dim=0), nms_threshold=0.5) output fig = d2l.plt.imshow(img) for i in output[0].detach().numpy(): if i[0] == -1: continue label = ('dog=', 'cat=')[int(i[0])] + str(i[1]) show_bboxes(fig.axes, [torch.tensor(i[2:]) * bbox_scale], label)