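I. Introduction

DCGAN (Deep Convolutional Generative Adversarial Networks), proposed by Alec Radford et al. in 2015, is a GAN variant built on convolutional neural networks. Its core innovation is replacing the fully connected layers of the original GAN with convolutional layers and prescribing a standardized set of architecture and training guidelines. These changes substantially improved the stability and output quality of GANs for image generation, and DCGAN became a foundational model in the field.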

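II. Background

The original GAN builds both the generator (G) and the discriminator (D) from fully connected layers, which causes two key problems:

- Parameter redundancy: fully connected layers discard the spatial structure of images and have a very large number of parameters, so training is inefficient.

- Training instability: training is prone to mode collapse (the generator produces only a few kinds of samples) and vanishing gradients.

DCGAN addresses these problems by combining the spatial feature extraction of convolutional layers with a standardized network design, so that a GAN can be trained stably to generate high-quality images.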

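III. Core Architecture of DCGAN

DCGAN strictly specifies the structure of the generator and the discriminator. Its core principles are: remove fully connected layers, use convolutional / transposed convolutional layers, and add batch normalization (BN).

1. Generator (G)

The generator takes a random noise vector z (drawn from a uniform or normal distribution) and transforms it through a series of layers into a fake image of the same size as the real images.

Network structure (example: generating 64×64 images)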

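| Layer type | Key settings | Purpose |
|---|---|---|
| Fully connected layer (input only) | Maps the noise vector z (e.g. 100-d) to a high-dimensional feature map (e.g. 1024×4×4) | Turns the 1-D noise into a 3-D feature map that the following convolutional layers can operate on |
| Transposed convolution layers | Each followed by BN + ReLU | Upsample the feature maps, enlarging them step by step (4×4 → 8×8 → 16×16 → 32×32) |
| Output transposed convolution layer | Tanh activation | Produces the final image (32×32 → 64×64) with pixel values in [−1,1], matching the preprocessed real images |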
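The size progression in the table follows from the output-size formula of a transposed convolution (dilation 1, no output padding), $H_{out} = (H_{in} - 1)\cdot s - 2p + k$. The sketch below assumes the kernel/stride/padding settings of the Section V implementation (k=4; s=1, p=0 for the first layer, s=2, p=1 afterwards) and starts from the 1×1 noise "image"; the helper name is illustrative, not a PyTorch API.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output height/width of a transposed convolution (dilation=1, output_padding=0)."""
    return (size - 1) * stride - 2 * padding + kernel

# (kernel, stride, padding) per generator layer: the first layer turns 1x1 noise
# into a 4x4 map, then every stride-2 layer doubles the spatial size.
layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

size = 1
sizes = [size]
for k, s, p in layers:
    size = conv_transpose_out(size, k, s, p)
    sizes.append(size)

print(" -> ".join(f"{n}x{n}" for n in sizes))
# prints: 1x1 -> 4x4 -> 8x8 -> 16x16 -> 32x32 -> 64x64
```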

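Key design points

- Transposed convolution: upsamples the feature maps with learnable kernels instead of fixed interpolation, so the network learns how to build up spatial structure.

- Batch normalization (BN): speeds up convergence and helps prevent vanishing gradients; the DCGAN paper also reports that it keeps the generator from collapsing all samples to a single point, which improves sample diversity.

- Activations: ReLU in the hidden layers (avoids vanishing gradients) and Tanh at the output (because the real images are normalized to [−1,1] during preprocessing).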
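The [−1,1] range comes from the preprocessing used in the Section V code, `ToTensor()` followed by `Normalize((0.5,), (0.5,))`, i.e. $x = (p/255 - 0.5)/0.5$ for an 8-bit pixel $p$; generated images are mapped back with $(x+1)/2$ for display. A pure-Python sketch of the two mappings (the function names are illustrative):

```python
def to_model_range(pixel: int) -> float:
    """8-bit pixel in [0, 255] -> [-1, 1], like ToTensor() + Normalize((0.5,), (0.5,))."""
    return (pixel / 255.0 - 0.5) / 0.5

def to_display_range(x: float) -> float:
    """Tanh output in [-1, 1] -> [0, 1] for plotting, as in (fake + 1) / 2."""
    return (x + 1.0) / 2.0

for p in (0, 128, 255):
    x = to_model_range(p)
    print(p, round(x, 4), round(to_display_range(x), 4))
```

Black (0) maps to −1 and white (255) maps to 1, exactly the range of the generator's Tanh output.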

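2. Discriminator (D)

The discriminator takes either a real image or a fake image produced by the generator and outputs a probability (between 0 and 1) indicating whether the input is real or fake.

Network structure (example: 64×64 input images)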

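| Layer type | Key settings | Purpose |
|---|---|---|
| Convolution layers | Each followed by LeakyReLU | Downsample the image and extract spatial features, shrinking the size step by step (64×64 → 32×32 → 16×16 → 8×8 → 4×4) |
| Fully connected layer (output only) | Sigmoid activation | Maps the final feature map to a single probability that the image is real |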

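Key design points

- Strided convolution: downsampling uses convolutions with stride > 1 instead of pooling layers, so the network learns its own downsampling and more gradient information is preserved, which improves training stability.

- LeakyReLU activation: avoids the "dying neuron" problem of ReLU on negative inputs, so gradients flow more smoothly through the discriminator.

- Selective BN: BN is not applied to the discriminator's input layer (nor to the generator's output layer). The DCGAN paper reports that applying BN to every layer caused sample oscillation and model instability.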
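Strided convolution halves the feature map without any pooling layer. With the settings of the Section V discriminator (k=4, s=2, p=1, then a final k=4, s=1, p=0 layer that stands in for the fully connected output), the standard output-size formula $H_{out} = \lfloor (H_{in} + 2p - k)/s \rfloor + 1$ gives the progression below. The helper is an illustrative function, not a PyTorch API.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output height/width of a standard convolution (dilation=1)."""
    return (size + 2 * padding - kernel) // stride + 1

# (kernel, stride, padding) per discriminator layer: four stride-2 convs halve the size,
# then a 4x4 conv without padding collapses the 4x4 map into a single score.
layers = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]

size = 64
sizes = [size]
for k, s, p in layers:
    size = conv_out(size, k, s, p)
    sizes.append(size)

print(" -> ".join(f"{n}x{n}" for n in sizes))
# prints: 64x64 -> 32x32 -> 16x16 -> 8x8 -> 4x4 -> 1x1
```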

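3. Overall Architecture Guidelines

To keep training stable, DCGAN lays down strict architecture guidelines:

- Remove all fully connected hidden layers (only the generator's input and the discriminator's output may keep one).

- The generator upsamples with transposed convolutions; the discriminator downsamples with strided convolutions.

- Use batch normalization in both the generator and the discriminator, except in the generator's output layer and the discriminator's input layer.

- The generator uses ReLU in its hidden layers and Tanh at the output; the discriminator uses LeakyReLU in all of its hidden layers.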

IV. Training Principles of DCGAN

DCGAN training follows the minimax game of GANs: the generator and the discriminator are trained alternately.

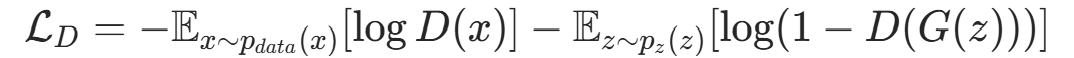

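1. Loss Functions

DCGAN uses the same loss functions as the original GAN, split into a discriminator loss and a generator loss:

- Discriminator loss: D aims to assign a high probability to real images and a low probability to fake images.

- Generator loss: G aims to make D misclassify fake images as real, i.e. to make the fake images as close to real ones as possible.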
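For reference, these two descriptions correspond to the standard objective of the original GAN formulation, written with the article's symbols (real image $x$, noise $z$):

$$
\min_G \max_D V(D,G)=\mathbb{E}_{x\sim p_{\text{data}}}\left[\log D(x)\right]+\mathbb{E}_{z\sim p_z}\left[\log\left(1-D(G(z))\right)\right]
$$

so the discriminator minimizes

$$
L_D=-\mathbb{E}_{x\sim p_{\text{data}}}\left[\log D(x)\right]-\mathbb{E}_{z\sim p_z}\left[\log\left(1-D(G(z))\right)\right]
$$

and the generator minimizes

$$
L_G=\mathbb{E}_{z\sim p_z}\left[\log\left(1-D(G(z))\right)\right]
$$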

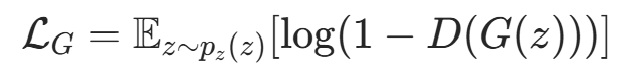

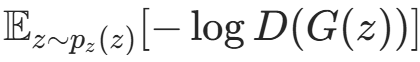

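In practice, the generator loss $\log(1 - D(G(z)))$ is usually replaced by $-\log D(G(z))$ (the non-saturating loss). Both push $D(G(z))$ toward 1, but early in training, when D confidently rejects fake images ($D(G(z)) \approx 0$), the original loss saturates and yields almost no gradient, whereas the replacement still gives the generator a strong gradient and speeds up learning.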
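To see why the replacement helps, write $D(G(z)) = \sigma(a)$, where $a$ is the discriminator's pre-sigmoid logit for a fake image. Then $\frac{d}{da}\log(1-\sigma(a)) = -\sigma(a)$, while $\frac{d}{da}\left[-\log\sigma(a)\right] = -(1-\sigma(a))$. The pure-Python sketch below (helper names are illustrative) evaluates both gradients:

```python
import math

def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))

# a is D's pre-sigmoid logit for a fake sample, so D(G(z)) = sigmoid(a).
def grad_saturating(a: float) -> float:
    """d/da of log(1 - sigmoid(a)): gradient of the original generator loss."""
    return -sigmoid(a)

def grad_non_saturating(a: float) -> float:
    """d/da of -log(sigmoid(a)): gradient of the non-saturating generator loss."""
    return -(1.0 - sigmoid(a))

for a in (-6.0, -2.0, 0.0, 2.0):
    print(f"D(G(z))={sigmoid(a):.4f}  saturating={grad_saturating(a):+.4f}  "
          f"non-saturating={grad_non_saturating(a):+.4f}")
```

When D confidently rejects a fake ($a=-6$, $D(G(z))\approx 0.0025$), the original loss's gradient is only about 0.0025 in magnitude, while the non-saturating one is about 0.9975.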

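2. Training Steps

- Step 1, initialization: randomly initialize the parameters of the generator G and the discriminator D.

- Step 2, train the discriminator:

  - Sample a batch of real images x and a batch of random noise z, and generate fake images G(z).

  - Compute the discriminator loss and backpropagate it to update only the discriminator's parameters.

- Step 3, train the generator:

  - Sample a batch of random noise z and generate fake images G(z).

  - Compute the generator loss (commonly the non-saturating form $-\log D(G(z))$) and backpropagate it to update only the generator's parameters.

- Step 4, repeat steps 2 and 3. In theory, the game reaches equilibrium when the discriminator's accuracy approaches 50%, i.e. it can no longer tell real from fake images. In practice GAN losses rarely settle cleanly, so training is usually run for a fixed number of epochs and judged by sample quality.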
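The alternating procedure can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions: tiny hypothetical fully connected G and D on 2-D toy data instead of the convolutional networks of Section V, hard 0/1 labels, and the non-saturating generator loss. Only the update order and the use of `detach()` are the point.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

NOISE = 8  # toy noise dimension (assumption, not the article's 100)

# Hypothetical toy networks standing in for the DCGAN G and D.
G = nn.Sequential(nn.Linear(NOISE, 16), nn.ReLU(), nn.Linear(16, 2))
D = nn.Sequential(nn.Linear(2, 16), nn.LeakyReLU(0.2), nn.Linear(16, 1), nn.Sigmoid())
opt_g = torch.optim.Adam(G.parameters(), lr=2e-4, betas=(0.5, 0.999))
opt_d = torch.optim.Adam(D.parameters(), lr=2e-4, betas=(0.5, 0.999))
bce = nn.BCELoss()


def train_step(real: torch.Tensor) -> tuple[float, float]:
    """One alternating update: step 2 (train D), then step 3 (train G)."""
    n = real.size(0)
    ones, zeros = torch.ones(n, 1), torch.zeros(n, 1)

    # Step 2: D learns to output 1 on real samples and 0 on fake samples.
    opt_d.zero_grad()
    fake = G(torch.randn(n, NOISE))
    loss_d = bce(D(real), ones) + bce(D(fake.detach()), zeros)  # detach(): no gradient reaches G
    loss_d.backward()
    opt_d.step()

    # Step 3: G learns to make D output 1 on its samples; BCE with target 1 is -log D(G(z)).
    opt_g.zero_grad()
    loss_g = bce(D(G(torch.randn(n, NOISE))), ones)
    loss_g.backward()  # also fills D's .grad, but only opt_g steps; opt_d.zero_grad() clears it
    opt_g.step()
    return loss_d.item(), loss_g.item()


real_batch = torch.randn(64, 2) * 0.5 + 2.0  # toy "real" data: samples from a 2-D Gaussian
for step in range(3):
    print(step, train_step(real_batch))
```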

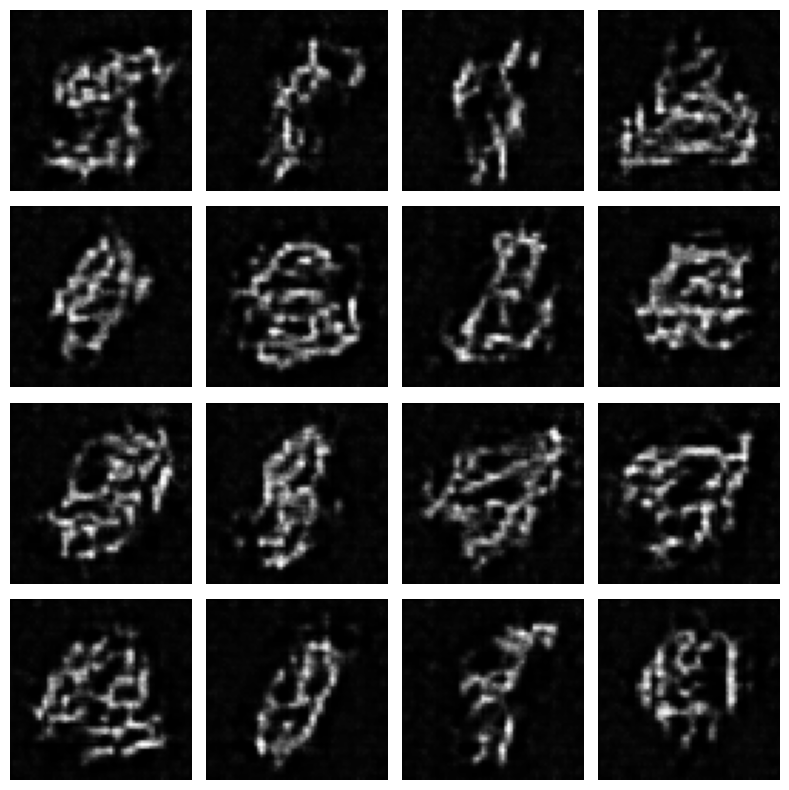

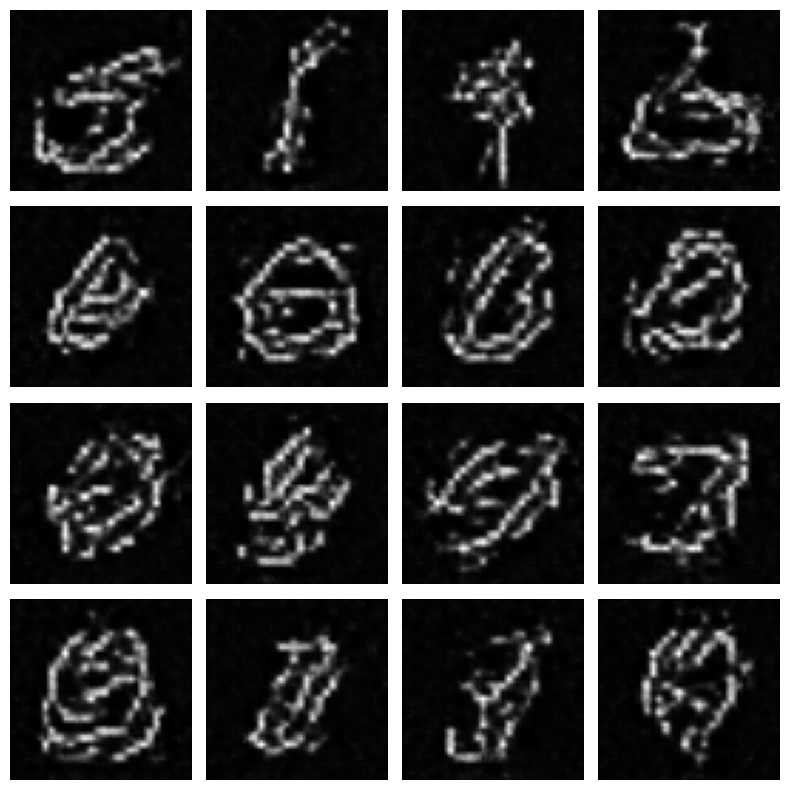

V. Complete Python Implementation of DCGAN on the MNIST Handwritten Digit Dataset (adapted to run on ModelScope)

```python
import os

import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
import torchvision.transforms as transforms
from torch.utils.data import DataLoader, Dataset

# ====================== 1. Hyperparameters ======================
DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")
NOISE_DIM = 100
IMG_CHANNELS = 1
IMG_SIZE = 64
BATCH_SIZE = 128
LEARNING_RATE = 0.0001  # lower learning rate to slow down the discriminator's convergence
BETA1 = 0.5
EPOCHS = 20
SAVE_INTERVAL = 2  # save a sample grid every 2 epochs to reduce visualization overhead
OUTPUT_DIR = "/mnt/dcgan_results"
os.makedirs(OUTPUT_DIR, exist_ok=True)

# ====================== 2. Data loading ======================
class MNISTDataset(Dataset):
    def __init__(self, data, transform=None):
        self.data = data  # uint8 array of shape (N, 28, 28)
        self.transform = transform

    def __len__(self):
        return len(self.data)

    def __getitem__(self, idx):
        # Keep the raw uint8 pixels: ToPILImage then builds an 8-bit grayscale ("L")
        # image, ToTensor rescales it to [0, 1], and Normalize maps it to [-1, 1].
        # A float32 array of 0-255 values would not be rescaled to [0, 1] this way.
        img = self.data[idx]
        if self.transform:
            img = transforms.ToPILImage()(img)
            img = self.transform(img)
        return img, 0  # dummy label; the GAN does not use class labels

print("Loading the MNIST dataset from a domestic torchvision mirror...")
mnist_dataset = torchvision.datasets.MNIST(
    root="/mnt/mnist_data",
    train=True,
    download=True,
    transform=transforms.ToTensor(),
)
train_data = mnist_dataset.data.numpy()  # keep dtype uint8, shape (60000, 28, 28)

transform = transforms.Compose([
    transforms.Resize(IMG_SIZE),           # 28x28 -> 64x64
    transforms.ToTensor(),                 # [0, 255] -> [0, 1]
    transforms.Normalize((0.5,), (0.5,)),  # [0, 1] -> [-1, 1], matching the generator's Tanh
])
dataset = MNISTDataset(train_data, transform=transform)
dataloader = DataLoader(dataset, batch_size=BATCH_SIZE, shuffle=True, num_workers=4)

# ====================== 3. Generator ======================
class Generator(nn.Module):
    def __init__(self):
        super().__init__()
        self.main = nn.Sequential(
            # z: (N, 100, 1, 1) -> (N, 1024, 4, 4); plays the role of the input "fully connected" layer
            nn.ConvTranspose2d(NOISE_DIM, 1024, 4, 1, 0, bias=False),
            nn.BatchNorm2d(1024),
            nn.ReLU(True),
            # 4x4 -> 8x8
            nn.ConvTranspose2d(1024, 512, 4, 2, 1, bias=False),
            nn.BatchNorm2d(512),
            nn.ReLU(True),
            # 8x8 -> 16x16
            nn.ConvTranspose2d(512, 256, 4, 2, 1, bias=False),
            nn.BatchNorm2d(256),
            nn.ReLU(True),
            # 16x16 -> 32x32
            nn.ConvTranspose2d(256, 128, 4, 2, 1, bias=False),
            nn.BatchNorm2d(128),
            nn.ReLU(True),
            # 32x32 -> 64x64; no BN on the output layer, Tanh maps to [-1, 1]
            nn.ConvTranspose2d(128, IMG_CHANNELS, 4, 2, 1, bias=False),
            nn.Tanh(),
        )

    def forward(self, input):
        return self.main(input)

# ====================== 4. Discriminator ======================
class Discriminator(nn.Module):
    def __init__(self):
        super().__init__()
        self.main = nn.Sequential(
            # 64x64 -> 32x32; no BN on the input layer
            nn.Conv2d(IMG_CHANNELS, 128, 4, 2, 1, bias=False),
            nn.LeakyReLU(0.2, inplace=True),
            # 32x32 -> 16x16
            nn.Conv2d(128, 256, 4, 2, 1, bias=False),
            nn.BatchNorm2d(256),
            nn.LeakyReLU(0.2, inplace=True),
            # 16x16 -> 8x8
            nn.Conv2d(256, 512, 4, 2, 1, bias=False),
            nn.BatchNorm2d(512),
            nn.LeakyReLU(0.2, inplace=True),
            # 8x8 -> 4x4
            nn.Conv2d(512, 1024, 4, 2, 1, bias=False),
            nn.BatchNorm2d(1024),
            nn.LeakyReLU(0.2, inplace=True),
            # 4x4 -> 1x1 score; plays the role of the output "fully connected" layer
            nn.Conv2d(1024, 1, 4, 1, 0, bias=False),
            nn.Sigmoid(),
        )

    def forward(self, input):
        return self.main(input).view(-1)  # (N, 1, 1, 1) -> (N,)

# ====================== 5. Visualization ======================
def visualize_generated_images(generator, epoch, fixed_noise):
    generator.eval()
    with torch.no_grad():
        fake = generator(fixed_noise).cpu()
    fake = (fake + 1) / 2.0  # [-1, 1] -> [0, 1] for display
    fig, axes = plt.subplots(4, 4, figsize=(8, 8))
    for ax, img in zip(axes.flatten(), fake):
        ax.imshow(img.squeeze(), cmap="gray")
        ax.axis("off")
    plt.tight_layout()
    plt.savefig(os.path.join(OUTPUT_DIR, f"dcgan_epoch_{epoch}.png"))
    plt.show()
    plt.close(fig)
    generator.train()

# ====================== 6. Models / loss / optimizers ======================
netG = Generator().to(DEVICE)
netD = Discriminator().to(DEVICE)

# Weight initialization: conv weights ~ N(0, 0.02) as in the DCGAN paper,
# BN scale ~ N(1, 0.02) and BN shift = 0
def weights_init(m):
    classname = m.__class__.__name__
    if classname.find("Conv") != -1:  # matches both Conv2d and ConvTranspose2d
        nn.init.normal_(m.weight.data, 0.0, 0.02)
    elif classname.find("BatchNorm") != -1:
        nn.init.normal_(m.weight.data, 1.0, 0.02)
        nn.init.constant_(m.bias.data, 0)

netG.apply(weights_init)
netD.apply(weights_init)

# Loss: plain BCELoss, combined with soft labels in the training loop
criterion = nn.BCELoss()
fixed_noise = torch.randn(16, NOISE_DIM, 1, 1, device=DEVICE)  # fixed z to track progress across epochs

# Optimizers: Adam with beta1 = 0.5; the lower learning rate slows the discriminator down
optimizerD = optim.Adam(netD.parameters(), lr=LEARNING_RATE, betas=(BETA1, 0.999))
optimizerG = optim.Adam(netG.parameters(), lr=LEARNING_RATE, betas=(BETA1, 0.999))

# ====================== 7. Training loop ======================
print("Start training DCGAN...")
for epoch in range(EPOCHS):
    for i, (real_images, _) in enumerate(dataloader):
        batch_size = real_images.size(0)

        # --------------------------
        # Step 1: train the discriminator
        # --------------------------
        netD.zero_grad()
        real_images = real_images.to(DEVICE)

        # Soft labels (real = 0.9, fake = 0.1) to keep the discriminator from overfitting
        label_real = torch.full((batch_size,), 0.9, device=DEVICE)
        output_real = netD(real_images)
        lossD_real = criterion(output_real, label_real)
        lossD_real.backward()

        noise = torch.randn(batch_size, NOISE_DIM, 1, 1, device=DEVICE)
        fake_images = netG(noise)
        label_fake = torch.full((batch_size,), 0.1, device=DEVICE)
        output_fake = netD(fake_images.detach())  # detach: no gradient flows into G here
        lossD_fake = criterion(output_fake, label_fake)
        lossD_fake.backward()

        # Gradient clipping limits the discriminator's update size so it does not learn too fast
        torch.nn.utils.clip_grad_norm_(netD.parameters(), max_norm=1.0)
        lossD = lossD_real + lossD_fake  # for logging only
        optimizerD.step()

        # --------------------------
        # Step 2: train the generator
        # --------------------------
        # Two generator updates per discriminator update
        for _ in range(2):
            netG.zero_grad()
            # Fresh noise and fake images each time, so every backward() uses a new graph
            noise_gen = torch.randn(batch_size, NOISE_DIM, 1, 1, device=DEVICE)
            fake_images_gen = netG(noise_gen)
            label_gen = torch.full((batch_size,), 0.9, device=DEVICE)  # soft target for G as well
            output_gen = netD(fake_images_gen)
            lossG = criterion(output_gen, label_gen)
            lossG.backward()  # also fills netD's .grad, which netD.zero_grad() clears next step
            optimizerG.step()

        # --------------------------
        # Logging
        # --------------------------
        if i % 50 == 0:  # print often to monitor training
            print(f"Epoch [{epoch + 1}/{EPOCHS}] | Batch [{i}/{len(dataloader)}] | "
                  f"Loss D: {lossD.item():.4f} | Loss G: {lossG.item():.4f}")

    # Save a grid of samples generated from the fixed noise
    if (epoch + 1) % SAVE_INTERVAL == 0:
        visualize_generated_images(netG, epoch + 1, fixed_noise)

# Save the models
torch.save(netG.state_dict(), os.path.join(OUTPUT_DIR, "dcgan_generator.pth"))
torch.save(netD.state_dict(), os.path.join(OUTPUT_DIR, "dcgan_discriminator.pth"))
print(f"Training complete! Models and images saved to {OUTPUT_DIR}")
```

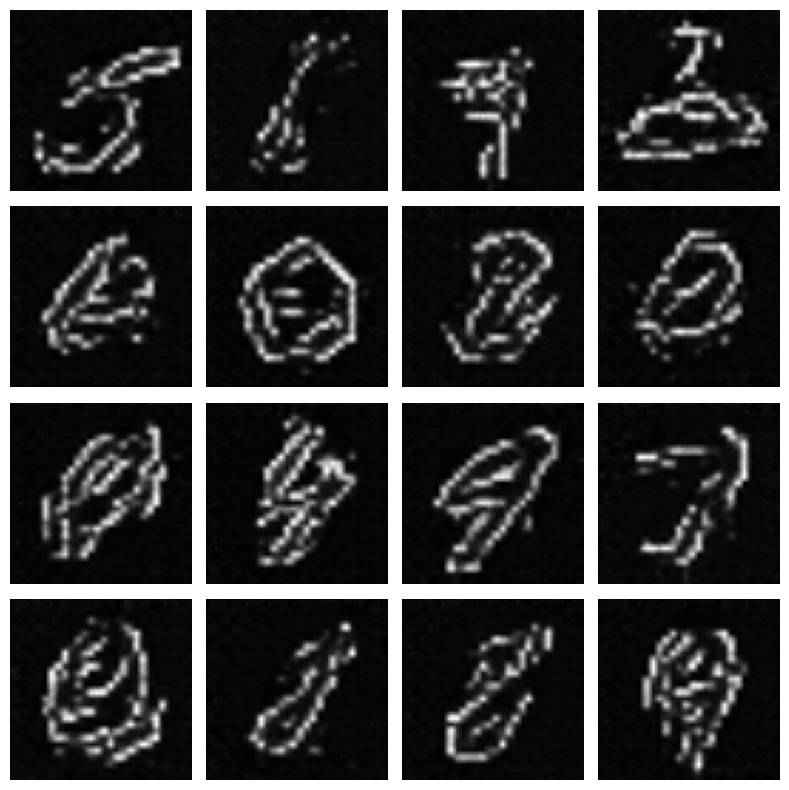

```python
# ====================== 8. Load the model and generate new images ======================
def generate_new_images(generator_path, num_images=16):
    gen = Generator().to(DEVICE)
    gen.load_state_dict(torch.load(generator_path, map_location=DEVICE))
    gen.eval()
    noise = torch.randn(num_images, NOISE_DIM, 1, 1, device=DEVICE)
    with torch.no_grad():
        fake_images = gen(noise).cpu()
    fake_images = (fake_images + 1) / 2.0  # [-1, 1] -> [0, 1] for display

    # Near-square grid that fits num_images (4x4 for the default 16)
    cols = int(np.ceil(np.sqrt(num_images)))
    rows = int(np.ceil(num_images / cols))
    fig, axes = plt.subplots(rows, cols, figsize=(2 * cols, 2 * rows), squeeze=False)
    for ax in axes.flatten():
        ax.axis("off")
    for ax, img in zip(axes.flatten(), fake_images):
        ax.imshow(img.squeeze(), cmap="gray")
    plt.tight_layout()
    plt.savefig(os.path.join(OUTPUT_DIR, "dcgan_new_images.png"))
    plt.show()
    plt.close(fig)

# Generate new images
generate_new_images(os.path.join(OUTPUT_DIR, "dcgan_generator.pth"))
```

VI. Program Output

Loading the MNIST dataset from a domestic torchvision mirror...

100%|██████████| 9.91M/9.91M [00:08<00:00, 1.17MB/s]

100%|██████████| 28.9k/28.9k [00:00<00:00, 142kB/s]

100%|██████████| 1.65M/1.65M [00:21<00:00, 76.8kB/s]

100%|██████████| 4.54k/4.54k [00:00<00:00, 11.6MB/s]

Start training DCGAN...

Epoch [1/20] | Batch [0/469] | Loss D: 2.1708 | Loss G: 0.5276

Epoch [1/20] | Batch [50/469] | Loss D: 1.5406 | Loss G: 3.9286

Epoch [1/20] | Batch [100/469] | Loss D: 5.3495 | Loss G: 1.9732

Epoch [1/20] | Batch [150/469] | Loss D: 1.1190 | Loss G: 6.1902

Epoch [1/20] | Batch [200/469] | Loss D: 1.5062 | Loss G: 4.6198

Epoch [1/20] | Batch [250/469] | Loss D: 0.9461 | Loss G: 5.4162

Epoch [1/20] | Batch [300/469] | Loss D: 0.9710 | Loss G: 4.3616

Epoch [1/20] | Batch [350/469] | Loss D: 1.1507 | Loss G: 5.0401

Epoch [1/20] | Batch [400/469] | Loss D: 0.9243 | Loss G: 4.2266

Epoch [1/20] | Batch [450/469] | Loss D: 1.2518 | Loss G: 2.8673

Epoch [2/20] | Batch [0/469] | Loss D: 0.8829 | Loss G: 0.7438

Epoch [2/20] | Batch [50/469] | Loss D: 0.8359 | Loss G: 1.0813

Epoch [2/20] | Batch [100/469] | Loss D: 0.8420 | Loss G: 1.1348

Epoch [2/20] | Batch [150/469] | Loss D: 1.0122 | Loss G: 1.3260

Epoch [2/20] | Batch [200/469] | Loss D: 1.0445 | Loss G: 1.6667

Epoch [2/20] | Batch [250/469] | Loss D: 0.9326 | Loss G: 1.3084

Epoch [2/20] | Batch [300/469] | Loss D: 1.2907 | Loss G: 2.0412

Epoch [2/20] | Batch [350/469] | Loss D: 1.0242 | Loss G: 2.0492

Epoch [2/20] | Batch [400/469] | Loss D: 0.7988 | Loss G: 1.2610

Epoch [2/20] | Batch [450/469] | Loss D: 0.9509 | Loss G: 1.9066

Epoch [3/20] | Batch [0/469] | Loss D: 0.9584 | Loss G: 1.8820

Epoch [3/20] | Batch [50/469] | Loss D: 0.9681 | Loss G: 1.9810

Epoch [3/20] | Batch [100/469] | Loss D: 0.8502 | Loss G: 1.7589

Epoch [3/20] | Batch [150/469] | Loss D: 0.6859 | Loss G: 0.5957

Epoch [3/20] | Batch [200/469] | Loss D: 0.7526 | Loss G: 1.1577

Epoch [3/20] | Batch [250/469] | Loss D: 0.7219 | Loss G: 3.6288

Epoch [3/20] | Batch [300/469] | Loss D: 0.7027 | Loss G: 1.0138

Epoch [3/20] | Batch [350/469] | Loss D: 0.7143 | Loss G: 3.4034

Epoch [3/20] | Batch [400/469] | Loss D: 0.9341 | Loss G: 3.6831

Epoch [3/20] | Batch [450/469] | Loss D: 0.7445 | Loss G: 3.3457

Epoch [4/20] | Batch [0/469] | Loss D: 0.8757 | Loss G: 2.6956

Epoch [4/20] | Batch [50/469] | Loss D: 1.0799 | Loss G: 2.4216

Epoch [4/20] | Batch [100/469] | Loss D: 0.6973 | Loss G: 0.7042

Epoch [4/20] | Batch [150/469] | Loss D: 0.9525 | Loss G: 2.4783

Epoch [4/20] | Batch [200/469] | Loss D: 1.0951 | Loss G: 3.5422

Epoch [4/20] | Batch [250/469] | Loss D: 1.6415 | Loss G: 1.2649

Epoch [4/20] | Batch [300/469] | Loss D: 0.8471 | Loss G: 2.9468

Epoch [4/20] | Batch [350/469] | Loss D: 0.9345 | Loss G: 2.7839

Epoch [4/20] | Batch [400/469] | Loss D: 1.1969 | Loss G: 3.6052

Epoch [4/20] | Batch [450/469] | Loss D: 0.9039 | Loss G: 4.4108

Epoch [5/20] | Batch [0/469] | Loss D: 1.4938 | Loss G: 1.1262

Epoch [5/20] | Batch [50/469] | Loss D: 1.9520 | Loss G: 2.6721

Epoch [5/20] | Batch [100/469] | Loss D: 0.6844 | Loss G: 5.2247

Epoch [5/20] | Batch [150/469] | Loss D: 0.7017 | Loss G: 3.1289

Epoch [5/20] | Batch [200/469] | Loss D: 0.7574 | Loss G: 1.3402

Epoch [5/20] | Batch [250/469] | Loss D: 0.9655 | Loss G: 2.4521

Epoch [5/20] | Batch [300/469] | Loss D: 1.7337 | Loss G: 1.2062

Epoch [5/20] | Batch [350/469] | Loss D: 0.8413 | Loss G: 1.6437

Epoch [5/20] | Batch [400/469] | Loss D: 1.4664 | Loss G: 3.4659

Epoch [5/20] | Batch [450/469] | Loss D: 0.6853 | Loss G: 3.3151

Epoch [6/20] | Batch [0/469] | Loss D: 1.9123 | Loss G: 4.3569

Epoch [6/20] | Batch [50/469] | Loss D: 1.0977 | Loss G: 1.5598

Epoch [6/20] | Batch [100/469] | Loss D: 0.8919 | Loss G: 2.3875

Epoch [6/20] | Batch [150/469] | Loss D: 0.7074 | Loss G: 1.4607

Epoch [6/20] | Batch [200/469] | Loss D: 0.8017 | Loss G: 0.9157

Epoch [6/20] | Batch [250/469] | Loss D: 0.7380 | Loss G: 1.2918

Epoch [6/20] | Batch [300/469] | Loss D: 0.9090 | Loss G: 2.1925

Epoch [6/20] | Batch [350/469] | Loss D: 1.0250 | Loss G: 1.0616

Epoch [6/20] | Batch [400/469] | Loss D: 0.6741 | Loss G: 4.0006

Epoch [6/20] | Batch [450/469] | Loss D: 0.7075 | Loss G: 0.5061

Epoch [7/20] | Batch [0/469] | Loss D: 0.8104 | Loss G: 1.3678

Epoch [7/20] | Batch [50/469] | Loss D: 0.7031 | Loss G: 3.4249

Epoch [7/20] | Batch [100/469] | Loss D: 0.6952 | Loss G: 3.6088

Epoch [7/20] | Batch [150/469] | Loss D: 0.7150 | Loss G: 0.7401

Epoch [7/20] | Batch [200/469] | Loss D: 0.7040 | Loss G: 4.7408

Epoch [7/20] | Batch [250/469] | Loss D: 0.9014 | Loss G: 2.1112

Epoch [7/20] | Batch [300/469] | Loss D: 1.1584 | Loss G: 4.0534

Epoch [7/20] | Batch [350/469] | Loss D: 0.6744 | Loss G: 0.5244

Epoch [7/20] | Batch [400/469] | Loss D: 1.1609 | Loss G: 3.2443

Epoch [7/20] | Batch [450/469] | Loss D: 0.9932 | Loss G: 3.4742

Epoch [8/20] | Batch [0/469] | Loss D: 1.5099 | Loss G: 1.0275

Epoch [8/20] | Batch [50/469] | Loss D: 0.6853 | Loss G: 4.3555

Epoch [8/20] | Batch [100/469] | Loss D: 0.9935 | Loss G: 2.3611

Epoch [8/20] | Batch [150/469] | Loss D: 0.9354 | Loss G: 1.5195

Epoch [8/20] | Batch [200/469] | Loss D: 0.6857 | Loss G: 1.9244

Epoch [8/20] | Batch [250/469] | Loss D: 0.6704 | Loss G: 5.2297

Epoch [8/20] | Batch [300/469] | Loss D: 0.9662 | Loss G: 1.7270

Epoch [8/20] | Batch [350/469] | Loss D: 0.9975 | Loss G: 2.3233

Epoch [8/20] | Batch [400/469] | Loss D: 0.9695 | Loss G: 1.1076

Epoch [8/20] | Batch [450/469] | Loss D: 0.9373 | Loss G: 3.3470

Epoch [9/20] | Batch [0/469] | Loss D: 0.7451 | Loss G: 1.5023

Epoch [9/20] | Batch [50/469] | Loss D: 0.9831 | Loss G: 3.1059

Epoch [9/20] | Batch [100/469] | Loss D: 0.7012 | Loss G: 0.7434

Epoch [9/20] | Batch [150/469] | Loss D: 0.8744 | Loss G: 2.7144

Epoch [9/20] | Batch [200/469] | Loss D: 0.7544 | Loss G: 3.3559

Epoch [9/20] | Batch [250/469] | Loss D: 0.6979 | Loss G: 0.7598

Epoch [9/20] | Batch [300/469] | Loss D: 0.7465 | Loss G: 1.0092

Epoch [9/20] | Batch [350/469] | Loss D: 1.0470 | Loss G: 2.8844

Epoch [9/20] | Batch [400/469] | Loss D: 0.9028 | Loss G: 2.2601

Epoch [9/20] | Batch [450/469] | Loss D: 0.8671 | Loss G: 2.4446

Epoch [10/20] | Batch [0/469] | Loss D: 0.7961 | Loss G: 3.4328

Epoch [10/20] | Batch [50/469] | Loss D: 0.6834 | Loss G: 0.7423

Epoch [10/20] | Batch [100/469] | Loss D: 0.6740 | Loss G: 0.6470

Epoch [10/20] | Batch [150/469] | Loss D: 0.7462 | Loss G: 0.8433

Epoch [10/20] | Batch [200/469] | Loss D: 0.8191 | Loss G: 2.2682

Epoch [10/20] | Batch [250/469] | Loss D: 0.7129 | Loss G: 2.0259

Epoch [10/20] | Batch [300/469] | Loss D: 0.6653 | Loss G: 0.6850

Epoch [10/20] | Batch [350/469] | Loss D: 0.7506 | Loss G: 1.9749

Epoch [10/20] | Batch [400/469] | Loss D: 0.8216 | Loss G: 1.9553

Epoch [10/20] | Batch [450/469] | Loss D: 0.6675 | Loss G: 0.5138

Epoch [11/20] | Batch [0/469] | Loss D: 1.1311 | Loss G: 2.6437

Epoch [11/20] | Batch [50/469] | Loss D: 0.6862 | Loss G: 2.5772

Epoch [11/20] | Batch [100/469] | Loss D: 1.4509 | Loss G: 4.0899

Epoch [11/20] | Batch [150/469] | Loss D: 0.7212 | Loss G: 0.9579

Epoch [11/20] | Batch [200/469] | Loss D: 0.8229 | Loss G: 2.2899

Epoch [11/20] | Batch [250/469] | Loss D: 1.1708 | Loss G: 2.3894

Epoch [11/20] | Batch [300/469] | Loss D: 0.6696 | Loss G: 1.8462

Epoch [11/20] | Batch [350/469] | Loss D: 0.6964 | Loss G: 3.1833

Epoch [11/20] | Batch [400/469] | Loss D: 0.6692 | Loss G: 3.3642

Epoch [11/20] | Batch [450/469] | Loss D: 1.0112 | Loss G: 2.0791

Epoch [12/20] | Batch [0/469] | Loss D: 0.8499 | Loss G: 2.2213

Epoch [12/20] | Batch [50/469] | Loss D: 1.2542 | Loss G: 1.2443

Epoch [12/20] | Batch [100/469] | Loss D: 1.0569 | Loss G: 1.7441

Epoch [12/20] | Batch [150/469] | Loss D: 0.6618 | Loss G: 1.3477

Epoch [12/20] | Batch [200/469] | Loss D: 0.9838 | Loss G: 3.1484

Epoch [12/20] | Batch [250/469] | Loss D: 0.6801 | Loss G: 1.6013

Epoch [12/20] | Batch [300/469] | Loss D: 0.6694 | Loss G: 0.5869

Epoch [12/20] | Batch [350/469] | Loss D: 1.0790 | Loss G: 3.1024

Epoch [12/20] | Batch [400/469] | Loss D: 1.1934 | Loss G: 2.8954

Epoch [12/20] | Batch [450/469] | Loss D: 1.0823 | Loss G: 3.6676

Epoch [13/20] | Batch [0/469] | Loss D: 0.8369 | Loss G: 2.1750

Epoch [13/20] | Batch [50/469] | Loss D: 0.8390 | Loss G: 1.8887

Epoch [13/20] | Batch [100/469] | Loss D: 0.7034 | Loss G: 0.9770

Epoch [13/20] | Batch [150/469] | Loss D: 0.7695 | Loss G: 2.5608

Epoch [13/20] | Batch [200/469] | Loss D: 0.7925 | Loss G: 2.1907

Epoch [13/20] | Batch [250/469] | Loss D: 0.6753 | Loss G: 4.3709

Epoch [13/20] | Batch [300/469] | Loss D: 0.7085 | Loss G: 1.9311

Epoch [13/20] | Batch [350/469] | Loss D: 0.6730 | Loss G: 3.0410

Epoch [13/20] | Batch [400/469] | Loss D: 0.6685 | Loss G: 1.7539

Epoch [13/20] | Batch [450/469] | Loss D: 0.6622 | Loss G: 0.7681

Epoch [14/20] | Batch [0/469] | Loss D: 0.8858 | Loss G: 2.1199

Epoch [14/20] | Batch [50/469] | Loss D: 1.0525 | Loss G: 3.1640

Epoch [14/20] | Batch [100/469] | Loss D: 0.9215 | Loss G: 1.8566

Epoch [14/20] | Batch [150/469] | Loss D: 0.6797 | Loss G: 3.2213

Epoch [14/20] | Batch [200/469] | Loss D: 0.7985 | Loss G: 2.3294

Epoch [14/20] | Batch [250/469] | Loss D: 1.0877 | Loss G: 2.5763

Epoch [14/20] | Batch [300/469] | Loss D: 1.3120 | Loss G: 3.0843

Epoch [14/20] | Batch [350/469] | Loss D: 0.8477 | Loss G: 2.2537

Epoch [14/20] | Batch [400/469] | Loss D: 0.9851 | Loss G: 2.0667

Epoch [14/20] | Batch [450/469] | Loss D: 0.6654 | Loss G: 3.2403

Epoch [15/20] | Batch [0/469] | Loss D: 0.8710 | Loss G: 3.0576

Epoch [15/20] | Batch [50/469] | Loss D: 0.7603 | Loss G: 1.5943

Epoch [15/20] | Batch [100/469] | Loss D: 0.6644 | Loss G: 1.9039

Epoch [15/20] | Batch [150/469] | Loss D: 0.6912 | Loss G: 1.2042

Epoch [15/20] | Batch [200/469] | Loss D: 0.6968 | Loss G: 2.6189

Epoch [15/20] | Batch [250/469] | Loss D: 0.7948 | Loss G: 2.0796

Epoch [15/20] | Batch [300/469] | Loss D: 0.9306 | Loss G: 1.6763

Epoch [15/20] | Batch [350/469] | Loss D: 0.9299 | Loss G: 1.6771

Epoch [15/20] | Batch [400/469] | Loss D: 0.7415 | Loss G: 1.4402

Epoch [15/20] | Batch [450/469] | Loss D: 0.6741 | Loss G: 3.6130

Epoch [16/20] | Batch [0/469] | Loss D: 0.6626 | Loss G: 0.6639

Epoch [16/20] | Batch [50/469] | Loss D: 1.0347 | Loss G: 2.2367

Epoch [16/20] | Batch [100/469] | Loss D: 0.8694 | Loss G: 2.6128

Epoch [16/20] | Batch [150/469] | Loss D: 0.6732 | Loss G: 3.4630

Epoch [16/20] | Batch [200/469] | Loss D: 0.7178 | Loss G: 1.8072

Epoch [16/20] | Batch [250/469] | Loss D: 0.6804 | Loss G: 3.0593

Epoch [16/20] | Batch [300/469] | Loss D: 1.1273 | Loss G: 3.0471

Epoch [16/20] | Batch [350/469] | Loss D: 1.0540 | Loss G: 1.3286

Epoch [16/20] | Batch [400/469] | Loss D: 0.9364 | Loss G: 1.4344

Epoch [16/20] | Batch [450/469] | Loss D: 0.7399 | Loss G: 1.7272

Epoch [17/20] | Batch [0/469] | Loss D: 0.6756 | Loss G: 2.9577

Epoch [17/20] | Batch [50/469] | Loss D: 0.7366 | Loss G: 2.0685

Epoch [17/20] | Batch [100/469] | Loss D: 0.8686 | Loss G: 1.8757

Epoch [17/20] | Batch [150/469] | Loss D: 0.7398 | Loss G: 2.2752

Epoch [17/20] | Batch [200/469] | Loss D: 0.7116 | Loss G: 2.0095

Epoch [17/20] | Batch [250/469] | Loss D: 0.6575 | Loss G: 1.4080

Epoch [17/20] | Batch [300/469] | Loss D: 0.8262 | Loss G: 2.1688

Epoch [17/20] | Batch [350/469] | Loss D: 0.8233 | Loss G: 1.9111

Epoch [17/20] | Batch [400/469] | Loss D: 0.7287 | Loss G: 1.5342

Epoch [17/20] | Batch [450/469] | Loss D: 0.6668 | Loss G: 0.8807

Epoch [18/20] | Batch [0/469] | Loss D: 0.6579 | Loss G: 2.2685

Epoch [18/20] | Batch [50/469] | Loss D: 0.7302 | Loss G: 2.2726

Epoch [18/20] | Batch [100/469] | Loss D: 0.7309 | Loss G: 3.2043

Epoch [18/20] | Batch [150/469] | Loss D: 0.6820 | Loss G: 1.4188

Epoch [18/20] | Batch [200/469] | Loss D: 0.7248 | Loss G: 3.3850

Epoch [18/20] | Batch [250/469] | Loss D: 0.6572 | Loss G: 1.9384

Epoch [18/20] | Batch [300/469] | Loss D: 0.7678 | Loss G: 1.8783

Epoch [18/20] | Batch [350/469] | Loss D: 0.7120 | Loss G: 2.3230

Epoch [18/20] | Batch [400/469] | Loss D: 0.7105 | Loss G: 2.3518

Epoch [18/20] | Batch [450/469] | Loss D: 0.6637 | Loss G: 3.3475

Epoch [19/20] | Batch [0/469] | Loss D: 1.1106 | Loss G: 3.4016

Epoch [19/20] | Batch [50/469] | Loss D: 0.7051 | Loss G: 3.4518

Epoch [19/20] | Batch [100/469] | Loss D: 0.7397 | Loss G: 2.1134

Epoch [19/20] | Batch [150/469] | Loss D: 0.7456 | Loss G: 1.4523

Epoch [19/20] | Batch [200/469] | Loss D: 0.6756 | Loss G: 1.1840

Epoch [19/20] | Batch [250/469] | Loss D: 0.7366 | Loss G: 1.7398

Epoch [19/20] | Batch [300/469] | Loss D: 0.6858 | Loss G: 2.9870

Epoch [19/20] | Batch [350/469] | Loss D: 0.6895 | Loss G: 1.8878

Epoch [19/20] | Batch [400/469] | Loss D: 0.6808 | Loss G: 1.3570

Epoch [19/20] | Batch [450/469] | Loss D: 0.8016 | Loss G: 2.1683

Epoch [20/20] | Batch [0/469] | Loss D: 0.7355 | Loss G: 2.1935

Epoch [20/20] | Batch [50/469] | Loss D: 0.7750 | Loss G: 2.5211

Epoch [20/20] | Batch [100/469] | Loss D: 0.6912 | Loss G: 1.6371

Epoch [20/20] | Batch [150/469] | Loss D: 0.7779 | Loss G: 2.0711

Epoch [20/20] | Batch [200/469] | Loss D: 0.7376 | Loss G: 2.9342

Epoch [20/20] | Batch [250/469] | Loss D: 0.6559 | Loss G: 2.1006

Epoch [20/20] | Batch [300/469] | Loss D: 0.6697 | Loss G: 0.8720

Epoch [20/20] | Batch [350/469] | Loss D: 0.9896 | Loss G: 2.9946

Epoch [20/20] | Batch [400/469] | Loss D: 0.7127 | Loss G: 2.8892

Epoch [20/20] | Batch [450/469] | Loss D: 0.7422 | Loss G: 3.0263

Training complete! Models and images saved to /mnt/dcgan_results
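With the soft labels used in the code (0.9 for real, 0.1 for fake), the discriminator loss cannot reach zero: BCE against a target t is smallest when the prediction equals t, and that minimum is the binary entropy of t. The sketch below (the `bce` helper is illustrative and mirrors the per-sample formula of `nn.BCELoss`) computes this floor for Loss D = lossD_real + lossD_fake.

```python
import math

def bce(pred: float, target: float) -> float:
    """Binary cross-entropy of one prediction against a (possibly soft) target."""
    return -(target * math.log(pred) + (1 - target) * math.log(1 - pred))

# BCE with soft target t is minimized at pred = t, where it equals the binary entropy of t.
floor_real = bce(0.9, 0.9)  # real images, target 0.9
floor_fake = bce(0.1, 0.1)  # fake images, target 0.1
print(f"minimum Loss D = {floor_real + floor_fake:.4f}")
```

The floor is about 0.650, so the many Loss D values around 0.66 in the log above mean that on those batches the discriminator's outputs were close to their targets.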

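VII. Advantages and Limitations of DCGAN

1. Key advantages

- Better training stability: the convolutional design and batch normalization greatly reduce the likelihood of mode collapse and vanishing gradients.

- High generation quality: it can generate images with clear spatial structure (e.g. handwritten digits, faces, landscapes).

- Parameter efficiency: thanks to weight sharing, a convolutional layer needs far fewer parameters than a fully connected layer on the same input, so training is faster than for a fully connected GAN.

- Strong transferability: the architecture is simple and general, and its design principles became a basis for later, more complex generative models (e.g. CycleGAN, StyleGAN).

2. Limitations

- Limited resolution: DCGAN works mainly for images of 64×64 or smaller; quality degrades at higher resolutions.

- Insufficient diversity: mild mode collapse can still occur, so the diversity of generated samples is limited.

- Hyperparameter sensitivity: the learning rate, batch size, and other hyperparameters strongly affect the results, so tuning is costly.

- No semantic control: the semantic attributes of generated images cannot be controlled directly (e.g. "a face wearing glasses", "a red cat").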

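VIII. Typical Applications of DCGAN

- Image generation: handwritten digits (MNIST dataset), faces (CelebA dataset), landscapes, anime characters, and more.

- Image inpainting: filling in missing regions of an image (e.g. restoring old photos).

- Data augmentation: generating extra training samples to improve models for classification, detection, and similar tasks.

- Style transfer: serving as a base architecture that, combined with other techniques, enables image style transfer.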

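IX. DCGAN vs. the Original GAN

| Feature | Original GAN | DCGAN |
|---|---|---|
| Network structure | Mainly fully connected layers | Mainly convolutional + transposed convolutional layers |
| Spatial feature extraction | None; image structure is flattened away | Strong; spatial information is preserved |
| Training stability | Low; prone to mode collapse | Higher; gradients flow smoothly |
| Generated image quality | Low; blurry | Higher; rich detail |
| Parameters per layer | Many | Few, thanks to weight sharing |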
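The parameter row refers to per-layer cost. As an illustration, compare the weights of a first layer that sees a 64×64 grayscale image: the fully connected layer size (1024 hidden units) is an assumption chosen for comparison, while the convolution matches the first layer of the Section V discriminator. The helper names are illustrative.

```python
def linear_params(in_features: int, out_features: int) -> int:
    """Weights of a fully connected layer (bias omitted)."""
    return in_features * out_features

def conv_params(in_channels: int, out_channels: int, kernel: int) -> int:
    """Weights of a k x k convolution (bias omitted); independent of the image size."""
    return in_channels * out_channels * kernel * kernel

fc = linear_params(64 * 64, 1024)  # every pixel connected to every hidden unit
conv = conv_params(1, 128, 4)      # 4x4 conv with 128 output channels
print(f"fully connected: {fc:,}  conv: {conv:,}")
# prints: fully connected: 4,194,304  conv: 2,048
```

The convolution's weight count does not depend on the image size, which is where the savings come from. A whole network's total still depends on its channel widths: the Section V discriminator, with channels growing to 1024, has roughly 11 million weights.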

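X. Summary

DCGAN is an improved GAN that replaces fully connected layers with convolutional layers, markedly improving image quality and training stability. Its core architecture consists of a generator that upsamples with transposed convolutions and a discriminator that downsamples with strided convolutions, together with batch normalization and specific activation functions that stabilize training. The experiment shows that DCGAN can generate clear handwritten digit images, and it mitigates (though does not eliminate) the mode collapse and vanishing gradient problems of the original GAN. Although it is limited in high-resolution generation and semantic control, its simple, general architecture laid the groundwork for later generative models and has important applications in image inpainting, data augmentation, and other areas.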