ELK + Redis Docker Enterprise Deployment Guide

📋 Overview

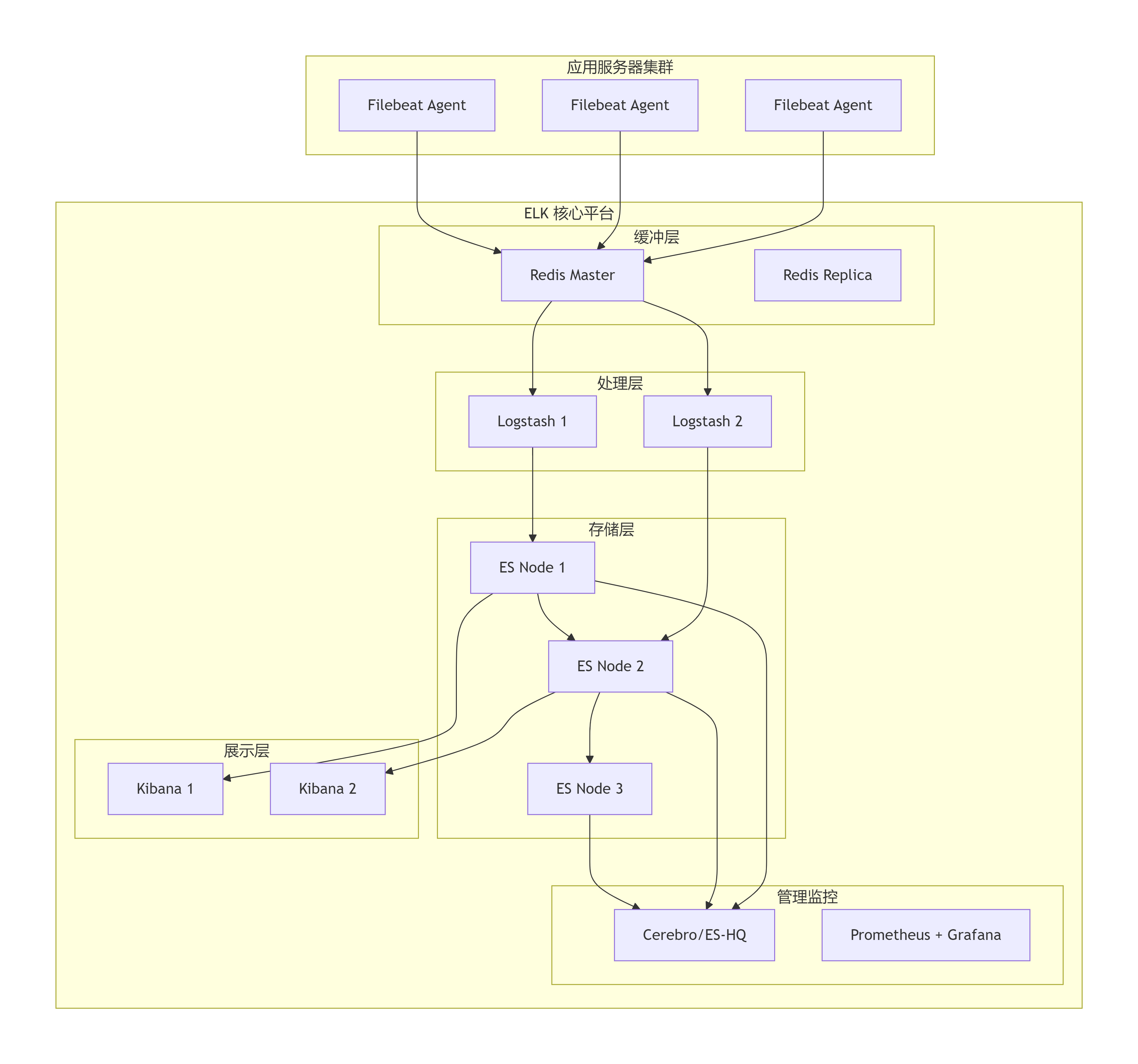

Architecture design

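🚀 1. Preparation

1.1 Environment requirements

# Minimum hardware

- Memory: 16 GB RAM (32 GB+ recommended)
- Storage: 200 GB SSD (NVMe recommended)
- CPU: 8 cores (16+ recommended)
- Network: Gigabit NIC

# Software

- Docker 20.10+
- Docker Compose 2.0+
- Operating system: Ubuntu 20.04/22.04, CentOS 8+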
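The version floors above can be checked before anything is deployed. The following is a minimal sketch, assuming GNU `sort -V` is available; the helper names `version_ge` and `check_min_version` are illustrative and do not appear in the scripts later in this guide.

```shell
#!/usr/bin/env bash
# version_ge A B: succeeds when version A is greater than or equal to version B.
# Relies on GNU sort's -V (version sort) option.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# check_min_version NAME ACTUAL REQUIRED: prints a one-line verdict and
# returns non-zero when ACTUAL is older than REQUIRED.
check_min_version() {
  if version_ge "$2" "$3"; then
    echo "OK: $1 $2 >= $3"
  else
    echo "FAIL: $1 $2 < $3"
    return 1
  fi
}

# Example wiring (assumes the docker CLI and the Compose plugin are installed):
# check_min_version "Docker" "$(docker version --format '{{.Server.Version}}')" "20.10"
# check_min_version "Docker Compose" "$(docker compose version --short)" "2.0"
```

Comparing with a version sort rather than a string comparison avoids treating 20.9 as newer than 20.10.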

# Port plan

┌───────────────┬───────┬────────────────────────┐
│ Service       │ Port  │ Purpose                │
├───────────────┼───────┼────────────────────────┤
│ Elasticsearch │ 9200  │ HTTP API               │
│ Elasticsearch │ 9300  │ Node-to-node transport │
│ Kibana        │ 5601  │ Web UI                 │
│ Logstash      │ 5044  │ Beats input            │
│ Logstash      │ 5000  │ TCP input              │
│ Redis         │ 6379  │ Buffer queue           │
│ Cerebro       │ 9000  │ ES cluster management  │
│ Prometheus    │ 9090  │ Monitoring             │
│ Grafana       │ 3000  │ Visualization          │
└───────────────┴───────┴────────────────────────┘

1.2 Directory structure

elk-docker-enterprise/
├── .env                      # Environment variables
├── docker-compose.yml        # Main Compose file
├── README.md                 # Deployment documentation
├── LICENSE
├── scripts/                  # Maintenance scripts
│   ├── init.sh
│   ├── backup.sh
│   ├── restore.sh
│   └── health-check.sh
├── configs/                  # Configuration files
│   ├── elasticsearch/
│   │   ├── elasticsearch.yml
│   │   ├── jvm.options
│   │   └── log4j2.properties
│   ├── kibana/
│   │   └── kibana.yml
│   ├── logstash/
│   │   ├── logstash.yml
│   │   ├── pipelines.yml
│   │   ├── patterns/         # Grok patterns
│   │   └── conf.d/           # Pipeline configs
│   ├── filebeat/
│   │   └── filebeat.yml
│   └── redis/
│       └── redis.conf
├── data/                     # Persistent data
│   ├── elasticsearch/
│   ├── redis/
│   ├── logstash/
│   └── prometheus/
├── logs/                     # Container logs
├── certificates/             # TLS certificates
│   ├── ca.crt
│   ├── elasticsearch.crt
│   └── elasticsearch.key
└── grafana/
    ├── dashboards/
    └── provisioning/

1.3 Create the deployment directories

# Create the project directory layout
mkdir -p elk-docker-enterprise/{configs,data,logs,scripts,certificates,grafana}
cd elk-docker-enterprise

# Create subdirectories (configs/prometheus is included because docker-compose.yml mounts it)
mkdir -p configs/{elasticsearch,kibana,logstash/{patterns,conf.d},filebeat,redis,prometheus}
mkdir -p data/{elasticsearch,redis,logstash,prometheus}
mkdir -p grafana/{dashboards,provisioning}
mkdir -p certificates
mkdir -p logs/{elasticsearch,kibana,logstash,filebeat,redis}

🐳 2. Docker Compose files

2.1 Environment file (.env)

# ELK version
ELK_VERSION=8.11.0
REDIS_VERSION=7.2

# Network
NETWORK_NAME=elk-network
SUBNET=172.20.0.0/16

# Elasticsearch
ES_CLUSTER_NAME=elk-enterprise-cluster
ES_NODE_NAME=node-1
ES_DISCOVERY_TYPE=single-node
ES_HEAP_SIZE=4g
ES_JAVA_OPTS=-Xms4g -Xmx4g

# Security (example values; replace them before deploying)
ELASTIC_PASSWORD=YourSecurePassword123!
KIBANA_PASSWORD=KibanaSecurePass456!
LOGSTASH_SYSTEM_PASSWORD=LogstashSystem789!
BEATS_SYSTEM_PASSWORD=BeatsSystemPass321!

# Redis
REDIS_PASSWORD=RedisSecurePass654!
REDIS_MAXMEMORY=2gb

# Storage paths
ELK_DATA_PATH=/data/elk
ELK_LOGS_PATH=/var/log/elk

# Resource limits
ES_CPU_LIMIT=4
ES_MEMORY_LIMIT=8g
LOGSTASH_CPU_LIMIT=2
LOGSTASH_MEMORY_LIMIT=4g
KIBANA_CPU_LIMIT=1
KIBANA_MEMORY_LIMIT=2g

# Node counts
ES_NODES_COUNT=3
LOGSTASH_NODES_COUNT=2
KIBANA_NODES_COUNT=2

2.2 Docker Compose main file (docker-compose.yml)
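The Compose file in this section interpolates its passwords and version tags from .env, and a variable that is missing or empty is substituted as a blank string rather than stopping the deployment. The following is a minimal sketch of a pre-flight check, assuming .env uses plain KEY=value lines; the function name `missing_env_keys` is hypothetical.

```shell
#!/usr/bin/env bash
# missing_env_keys FILE KEY...: prints every KEY that has no non-empty
# KEY=value line in FILE, and returns non-zero if any key is missing.
missing_env_keys() {
  local file="$1" key missing=0
  shift
  for key in "$@"; do
    if ! grep -Eq "^${key}=.+" "$file"; then
      echo "$key"
      missing=1
    fi
  done
  return "$missing"
}

# Demonstration against a throwaway file, so nothing real is touched.
demo_env=$(mktemp)
printf 'ELK_VERSION=8.11.0\nREDIS_PASSWORD=\n' > "$demo_env"
# Prints REDIS_PASSWORD (empty value) and ELASTIC_PASSWORD (absent).
missing_env_keys "$demo_env" ELK_VERSION REDIS_PASSWORD ELASTIC_PASSWORD || true
rm -f "$demo_env"
```

Run against the real file, the same call could gate `docker-compose up` on ELASTIC_PASSWORD, KIBANA_PASSWORD, LOGSTASH_SYSTEM_PASSWORD and REDIS_PASSWORD all being set.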

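version: '3.8'

networks:
  elk-network:
    driver: bridge
    ipam:
      config:
        - subnet: ${SUBNET:-172.20.0.0/16}
    attachable: true

volumes:
  elasticsearch-data:
    driver: local
    driver_opts:
      type: none
      o: bind
      device: ${ELK_DATA_PATH:-./data}/elasticsearch
  # elasticsearch-node2 and elasticsearch-node3 reference the two volumes below;
  # they are declared here as plain named volumes so the file validates.
  elasticsearch-data-node2:
    driver: local
  elasticsearch-data-node3:
    driver: local
  redis-data:
    driver: local
    driver_opts:
      type: none
      o: bind
      device: ${ELK_DATA_PATH:-./data}/redis
  prometheus-data:
    driver: local

services: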

  # ============== Redis master/replica (buffer queue) ==============
  redis-master:
    image: redis:${REDIS_VERSION:-7.2}-alpine
    container_name: redis-master
    hostname: redis-master
    restart: unless-stopped
    command: redis-server /usr/local/etc/redis/redis.conf --requirepass ${REDIS_PASSWORD}
    environment:
      - REDIS_REPLICATION_MODE=master
    volumes:
      - ./configs/redis/redis.conf:/usr/local/etc/redis/redis.conf
      - redis-data:/data
      - ./logs/redis:/var/log/redis
    ports:
      - "6379:6379"
    networks:
      elk-network:
        ipv4_address: 172.20.0.10
    healthcheck:
      test: ["CMD", "redis-cli", "-a", "${REDIS_PASSWORD}", "ping"]
      interval: 10s
      timeout: 5s
      retries: 3
    deploy:
      resources:
        limits:
          cpus: '1'
          memory: 2G
        reservations:
          cpus: '0.5'
          memory: 1G

  redis-replica:
    image: redis:${REDIS_VERSION:-7.2}-alpine
    container_name: redis-replica
    hostname: redis-replica
    restart: unless-stopped
    # --masterauth is required because the master enforces --requirepass;
    # --replicaof is the current name of the older --slaveof option.
    command: redis-server /usr/local/etc/redis/redis.conf --requirepass ${REDIS_PASSWORD} --masterauth ${REDIS_PASSWORD} --replicaof redis-master 6379
    environment:
      - REDIS_REPLICATION_MODE=slave
      - REDIS_MASTER_HOST=redis-master
      - REDIS_MASTER_PORT_NUMBER=6379
      - REDIS_MASTER_PASSWORD=${REDIS_PASSWORD}
    volumes:
      - ./configs/redis/redis.conf:/usr/local/etc/redis/redis.conf
    depends_on:
      - redis-master
    networks:
      elk-network:
        ipv4_address: 172.20.0.11
    healthcheck:
      test: ["CMD", "redis-cli", "-a", "${REDIS_PASSWORD}", "ping"]
      interval: 10s
      timeout: 5s
      retries: 3

  # ============== Elasticsearch cluster ==============
  elasticsearch-node1:
    image: docker.elastic.co/elasticsearch/elasticsearch:${ELK_VERSION}
    container_name: elasticsearch-node1
    hostname: elasticsearch-node1
    restart: unless-stopped
    environment:
      - node.name=elasticsearch-node1
      - cluster.name=${ES_CLUSTER_NAME:-elk-enterprise-cluster}
      - cluster.initial_master_nodes=elasticsearch-node1,elasticsearch-node2,elasticsearch-node3
      - discovery.seed_hosts=elasticsearch-node2,elasticsearch-node3
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=${ES_JAVA_OPTS:--Xms4g -Xmx4g}"
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=false
      # NOTE: with security enabled, Elasticsearch 8.x refuses to start a multi-node
      # cluster while transport TLS is disabled. Enable it and supply the files from
      # ./certificates before running all three nodes.
      - xpack.security.transport.ssl.enabled=false
      - xpack.license.self_generated.type=basic
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      # The image itself only consumes ELASTIC_PASSWORD. The three variables below do
      # not change the built-in users' passwords; set those through the security API.
      - LOGSTASH_SYSTEM_PASSWORD=${LOGSTASH_SYSTEM_PASSWORD}
      - KIBANA_SYSTEM_PASSWORD=${KIBANA_PASSWORD}
      - BEATS_SYSTEM_PASSWORD=${BEATS_SYSTEM_PASSWORD}
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 65536
        hard: 65536
    volumes:
      - elasticsearch-data:/usr/share/elasticsearch/data
      - ./configs/elasticsearch/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./configs/elasticsearch/jvm.options:/usr/share/elasticsearch/config/jvm.options
      - ./logs/elasticsearch:/usr/share/elasticsearch/logs
    ports:
      - "9200:9200"
      - "9300:9300"
    networks:
      elk-network:
        ipv4_address: 172.20.0.20
    healthcheck:
      test: ["CMD-SHELL", "curl -s -u elastic:${ELASTIC_PASSWORD} http://localhost:9200/_cluster/health | grep -q '\"status\":\"green\"'"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 60s
    deploy:
      resources:
        limits:
          cpus: '${ES_CPU_LIMIT:-4}'
          memory: ${ES_MEMORY_LIMIT:-8g}
        reservations:
          cpus: '${ES_CPU_LIMIT:-2}'
          memory: ${ES_MEMORY_LIMIT:-4g}

  elasticsearch-node2:
    image: docker.elastic.co/elasticsearch/elasticsearch:${ELK_VERSION}
    container_name: elasticsearch-node2
    hostname: elasticsearch-node2
    restart: unless-stopped
    environment:
      - node.name=elasticsearch-node2
      - cluster.name=${ES_CLUSTER_NAME:-elk-enterprise-cluster}
      - cluster.initial_master_nodes=elasticsearch-node1,elasticsearch-node2,elasticsearch-node3
      - discovery.seed_hosts=elasticsearch-node1,elasticsearch-node3
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=${ES_JAVA_OPTS:--Xms4g -Xmx4g}"
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=false
      - xpack.security.transport.ssl.enabled=false
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - elasticsearch-data-node2:/usr/share/elasticsearch/data
      - ./configs/elasticsearch/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./logs/elasticsearch-node2:/usr/share/elasticsearch/logs
    networks:
      elk-network:
        ipv4_address: 172.20.0.21
    depends_on:
      - elasticsearch-node1
    healthcheck:
      # Security is enabled, so the health request must authenticate
      test: ["CMD-SHELL", "curl -s -u elastic:${ELASTIC_PASSWORD} http://localhost:9200/_cluster/health | grep -q '\"status\":\"green\"'"]
      interval: 30s
      timeout: 10s
      retries: 3

  elasticsearch-node3:
    image: docker.elastic.co/elasticsearch/elasticsearch:${ELK_VERSION}
    container_name: elasticsearch-node3
    hostname: elasticsearch-node3
    restart: unless-stopped
    environment:
      - node.name=elasticsearch-node3
      - cluster.name=${ES_CLUSTER_NAME:-elk-enterprise-cluster}
      - cluster.initial_master_nodes=elasticsearch-node1,elasticsearch-node2,elasticsearch-node3
      - discovery.seed_hosts=elasticsearch-node1,elasticsearch-node2
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=${ES_JAVA_OPTS:--Xms4g -Xmx4g}"
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=false
      - xpack.security.transport.ssl.enabled=false
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - elasticsearch-data-node3:/usr/share/elasticsearch/data
      - ./configs/elasticsearch/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - ./logs/elasticsearch-node3:/usr/share/elasticsearch/logs
    networks:
      elk-network:
        ipv4_address: 172.20.0.22
    depends_on:
      - elasticsearch-node1
    healthcheck:
      # Security is enabled, so the health request must authenticate
      test: ["CMD-SHELL", "curl -s -u elastic:${ELASTIC_PASSWORD} http://localhost:9200/_cluster/health | grep -q '\"status\":\"green\"'"]
      interval: 30s
      timeout: 10s
      retries: 3

  # ============== Logstash nodes ==============
  logstash-node1:
    image: docker.elastic.co/logstash/logstash:${ELK_VERSION}
    container_name: logstash-node1
    hostname: logstash-node1
    restart: unless-stopped
    environment:
      - LS_JAVA_OPTS=-Xmx2g -Xms2g
      - XPACK_MONITORING_ENABLED=true
      # The setting is xpack.monitoring.elasticsearch.hosts (the ...URL form is the pre-7.0 name)
      - XPACK_MONITORING_ELASTICSEARCH_HOSTS=http://elasticsearch-node1:9200
      - XPACK_MONITORING_ELASTICSEARCH_USERNAME=logstash_system
      - XPACK_MONITORING_ELASTICSEARCH_PASSWORD=${LOGSTASH_SYSTEM_PASSWORD}
      - ELASTICSEARCH_HOSTS=http://elasticsearch-node1:9200
      # Referenced as ${...} in logstash.yml and in the pipeline config
      - LOGSTASH_SYSTEM_PASSWORD=${LOGSTASH_SYSTEM_PASSWORD}
      - REDIS_PASSWORD=${REDIS_PASSWORD}
    volumes:
      - ./configs/logstash/logstash.yml:/usr/share/logstash/config/logstash.yml
      - ./configs/logstash/pipelines.yml:/usr/share/logstash/config/pipelines.yml
      - ./configs/logstash/conf.d:/usr/share/logstash/pipeline
      - ./configs/logstash/patterns:/usr/share/logstash/patterns
      - ./logs/logstash:/usr/share/logstash/logs
    ports:
      - "5044:5044"     # Beats input
      - "5000:5000"     # TCP input
      - "5001:5001/udp" # UDP input
      - "9600:9600"     # Logstash monitoring API
    networks:
      elk-network:
        ipv4_address: 172.20.0.30
    depends_on:
      - elasticsearch-node1
      - redis-master
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9600"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 60s
    deploy:
      resources:
        limits:
          cpus: '${LOGSTASH_CPU_LIMIT:-2}'
          memory: ${LOGSTASH_MEMORY_LIMIT:-4g}
        reservations:
          cpus: '1'
          memory: 2g

  logstash-node2:
    image: docker.elastic.co/logstash/logstash:${ELK_VERSION}
    container_name: logstash-node2
    hostname: logstash-node2
    restart: unless-stopped
    environment:
      - LS_JAVA_OPTS=-Xmx2g -Xms2g
      - XPACK_MONITORING_ENABLED=true
      - XPACK_MONITORING_ELASTICSEARCH_HOSTS=http://elasticsearch-node2:9200
      - XPACK_MONITORING_ELASTICSEARCH_USERNAME=logstash_system
      - XPACK_MONITORING_ELASTICSEARCH_PASSWORD=${LOGSTASH_SYSTEM_PASSWORD}
      - ELASTICSEARCH_HOSTS=http://elasticsearch-node2:9200
      # Referenced as ${...} in logstash.yml and in the pipeline config
      - LOGSTASH_SYSTEM_PASSWORD=${LOGSTASH_SYSTEM_PASSWORD}
      - REDIS_PASSWORD=${REDIS_PASSWORD}
    volumes:
      - ./configs/logstash/logstash.yml:/usr/share/logstash/config/logstash.yml
      - ./configs/logstash/pipelines.yml:/usr/share/logstash/config/pipelines.yml
      - ./configs/logstash/conf.d:/usr/share/logstash/pipeline
      - ./configs/logstash/patterns:/usr/share/logstash/patterns
      - ./logs/logstash-node2:/usr/share/logstash/logs
    networks:
      elk-network:
        ipv4_address: 172.20.0.31
    depends_on:
      - elasticsearch-node2
      - redis-master
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9600"]
      interval: 30s
      timeout: 10s
      retries: 3

  # ============== Kibana nodes ==============
  kibana-node1:
    image: docker.elastic.co/kibana/kibana:${ELK_VERSION}
    container_name: kibana-node1
    hostname: kibana-node1
    restart: unless-stopped
    environment:
      - SERVER_NAME=kibana-node1
      - ELASTICSEARCH_HOSTS=http://elasticsearch-node1:9200
      - ELASTICSEARCH_USERNAME=kibana_system
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - SERVER_PUBLICBASEURL=http://localhost:5601
      - XPACK_SECURITY_ENCRYPTIONKEY=${KIBANA_ENCRYPTION_KEY:-something_at_least_32_characters}
      - XPACK_REPORTING_ENCRYPTIONKEY=${KIBANA_ENCRYPTION_KEY:-something_at_least_32_characters}
    volumes:
      - ./configs/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml
      - ./logs/kibana:/usr/share/kibana/logs
    ports:
      - "5601:5601"
    networks:
      elk-network:
        ipv4_address: 172.20.0.40
    depends_on:
      - elasticsearch-node1
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:5601/api/status"]
      interval: 30s
      timeout: 10s
      retries: 3
      start_period: 90s
    deploy:
      resources:
        limits:
          cpus: '${KIBANA_CPU_LIMIT:-1}'
          memory: ${KIBANA_MEMORY_LIMIT:-2g}
        reservations:
          cpus: '0.5'
          memory: 1g

  kibana-node2:
    image: docker.elastic.co/kibana/kibana:${ELK_VERSION}
    container_name: kibana-node2
    hostname: kibana-node2
    restart: unless-stopped
    environment:
      - SERVER_NAME=kibana-node2
      - ELASTICSEARCH_HOSTS=http://elasticsearch-node2:9200
      - ELASTICSEARCH_USERNAME=kibana_system
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - SERVER_PUBLICBASEURL=http://localhost:5602
    volumes:
      - ./configs/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml
      - ./logs/kibana-node2:/usr/share/kibana/logs
    ports:
      - "5602:5601"
    networks:
      elk-network:
        ipv4_address: 172.20.0.41
    depends_on:
      - elasticsearch-node2
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:5601/api/status"]
      interval: 30s
      timeout: 10s
      retries: 3

  # ============== Monitoring and operations tools ==============
  cerebro:
    image: lmenezes/cerebro:latest
    container_name: cerebro
    restart: unless-stopped
    ports:
      - "9000:9000"
    environment:
      - ES_HOST=http://elasticsearch-node1:9200
      - ES_USER=elastic
      - ES_PASSWORD=${ELASTIC_PASSWORD}
    networks:
      elk-network:
        ipv4_address: 172.20.0.50
    depends_on:
      - elasticsearch-node1

  prometheus:
    image: prom/prometheus:latest
    container_name: prometheus
    restart: unless-stopped
    volumes:
      - ./configs/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml
      - prometheus-data:/prometheus
    ports:
      - "9090:9090"
    networks:
      elk-network:
        ipv4_address: 172.20.0.51
    command:
      - '--config.file=/etc/prometheus/prometheus.yml'
      - '--storage.tsdb.path=/prometheus'
      - '--web.console.libraries=/etc/prometheus/console_libraries'
      - '--web.console.templates=/etc/prometheus/consoles'
      - '--storage.tsdb.retention.time=30d'
      - '--web.enable-lifecycle'

  grafana:
    image: grafana/grafana:latest
    container_name: grafana
    restart: unless-stopped
    environment:
      - GF_SECURITY_ADMIN_PASSWORD=admin
      - GF_INSTALL_PLUGINS=grafana-piechart-panel
    volumes:
      - ./grafana/provisioning:/etc/grafana/provisioning
      - ./grafana/dashboards:/var/lib/grafana/dashboards
    ports:
      - "3000:3000"
    networks:
      elk-network:
        ipv4_address: 172.20.0.52
    depends_on:
      - prometheus

⚙️ 3. Configuration files

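3.1 Elasticsearch configuration (configs/elasticsearch/elasticsearch.yml)

# Cluster
# cluster.name and node.name are set per node through the environment in
# docker-compose.yml. ${CLUSTER_NAME} and ${NODE_NAME} are not defined there,
# and an unresolved placeholder prevents Elasticsearch from starting.
node.roles: [master, data, ingest]

# Network
network.host: 0.0.0.0
http.port: 9200
transport.port: 9300
http.cors.enabled: true
http.cors.allow-origin: "*"

# Discovery
discovery.seed_hosts: ["elasticsearch-node1", "elasticsearch-node2", "elasticsearch-node3"]
cluster.initial_master_nodes: ["elasticsearch-node1", "elasticsearch-node2", "elasticsearch-node3"]

# Security (basic)
# NOTE: Elasticsearch 8.x will not start a multi-node cluster with security
# enabled and transport TLS disabled; enable transport TLS before going live.
xpack.security.enabled: true
xpack.security.transport.ssl.enabled: false
xpack.security.http.ssl.enabled: false

# Memory locking
bootstrap.memory_lock: true

# Thread pools
thread_pool.write.queue_size: 1000
thread_pool.search.queue_size: 1000

# Index auto-creation (logs-* added so the Logstash output can create its daily indices)
action.auto_create_index: .monitoring*,.watches,.triggered_watches,.watcher-history*,.ml*,logs-*

# Disk-based shard allocation
cluster.routing.allocation.disk.threshold_enabled: true
cluster.routing.allocation.disk.watermark.low: 85%
cluster.routing.allocation.disk.watermark.high: 90%
cluster.routing.allocation.disk.watermark.flood_stage: 95%

# Slow query log
# index.search.slowlog.* are index-level settings and are rejected in
# elasticsearch.yml. Apply the thresholds below per index or through an
# index template instead.
# index.search.slowlog.threshold.query.warn: 10s
# index.search.slowlog.threshold.query.info: 5s
# index.search.slowlog.threshold.query.debug: 2s
# index.search.slowlog.threshold.query.trace: 500ms
# index.search.slowlog.threshold.fetch.warn: 1s
# index.search.slowlog.threshold.fetch.info: 800ms
# index.search.slowlog.threshold.fetch.debug: 500ms
# index.search.slowlog.threshold.fetch.trace: 200ms

# Index lifecycle management
# ILM is always on in 8.x; the xpack.ilm.enabled setting no longer exists, so it is omitted.

3.2 Logstash configuration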

configs/logstash/logstash.yml

http.host: "0.0.0.0"
http.port: 9600
log.level: info
pipeline.workers: 4
pipeline.batch.size: 125
pipeline.batch.delay: 50
queue.type: persisted
queue.max_bytes: 1024mb
queue.checkpoint.writes: 1024
xpack.monitoring.enabled: true
xpack.monitoring.elasticsearch.username: "logstash_system"
xpack.monitoring.elasticsearch.password: "${LOGSTASH_SYSTEM_PASSWORD}"
xpack.monitoring.elasticsearch.hosts: ["http://elasticsearch-node1:9200"]
xpack.monitoring.elasticsearch.sniffing: false

configs/logstash/pipelines.yml

- pipeline.id: main
  path.config: "/usr/share/logstash/pipeline/*.conf"
  pipeline.workers: 4
  queue.type: persisted
  queue.max_bytes: 1gb

configs/logstash/conf.d/redis-to-es.conf

input {
  # Redis input (main queue)
  redis {
    host => "redis-master"
    port => 6379
    password => "${REDIS_PASSWORD}"
    key => "filebeat-logs"
    data_type => "list"
    threads => 2
    batch_count => 125
    db => 0
  }

  # Fallback TCP input
  tcp {
    port => 5000
    codec => json_lines
    tags => ["tcp-input"]
  }

  # Health check endpoint
  http {
    port => 8080
    response_headers => {
      "Content-Type" => "text/plain"
    }
    response_code => 200
  }
}

filter {
  # Generic JSON parsing
  if [message] =~ /^{.*}$/ {
    json {
      source => "message"
      remove_field => ["message"]
      skip_on_invalid_json => true
    }
  }

  # Add metadata
  mutate {
    add_field => {
      "[@metadata][logstash_host]" => "%{host}"
      "[@metadata][timestamp]" => "%{@timestamp}"
    }
  }

  # Parse the timestamp
  date {
    match => ["timestamp", "ISO8601", "UNIX", "UNIX_MS"]
    target => "@timestamp"
    remove_field => ["timestamp"]
  }

  # GeoIP lookup
  if [clientip] {
    geoip {
      source => "clientip"
      target => "geo"
      default_database_type => "City"
    }
  }

  # User agent parsing
  if [agent] {
    useragent {
      source => "agent"
      target => "user_agent"
    }
  }

  # Mask sensitive fields (example)
  if [password] {
    mutate {
      replace => { "password" => "[FILTERED]" }
    }
  }

  # Route by tag
  if "nginx" in [tags] {
    # Nginx-specific processing
    grok {
      patterns_dir => ["/usr/share/logstash/patterns"]
      match => { "message" => '%{NGINXACCESS}' }
    }
  }

  if "apache" in [tags] {
    # Apache-specific processing
    grok {
      match => { "message" => '%{COMBINEDAPACHELOG}' }
    }
  }
}

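output {
  # Send to Elasticsearch
  elasticsearch {
    hosts => ["elasticsearch-node1:9200", "elasticsearch-node2:9200"]
    index => "logs-%{+YYYY.MM.dd}"
    # logstash_internal is not a built-in user: create it with write access to
    # logs-* and give it this password (the built-in logstash_system user can
    # only ship monitoring data).
    user => "logstash_internal"
    password => "${LOGSTASH_SYSTEM_PASSWORD}"
    # The template file must exist at this path inside the container;
    # docker-compose.yml does not mount a templates directory.
    template => "/usr/share/logstash/templates/logs-template.json"
    template_name => "logs"
    template_overwrite => true
    action => "index"
    retry_on_conflict => 3
    timeout => 300
  }

  # Write JSON parse failures to a file (for debugging)
  if "_jsonparsefailure" in [tags] {
    file {
      path => "/usr/share/logstash/dead_letter_queue/%{+YYYY-MM-dd}/%{host}.log"
      codec => line { format => "%{message}" }
    }
  }

  # Debug output (development only)
  if [env] == "development" {
    stdout {
      codec => rubydebug
    }
  }
}

3.3 Kibana configuration (configs/kibana/kibana.yml)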

server.name: kibana
server.host: "0.0.0.0"
server.port: 5601
elasticsearch.hosts: ["http://elasticsearch-node1:9200", "http://elasticsearch-node2:9200"]
elasticsearch.username: "kibana_system"
# docker-compose.yml passes the password to the container as ELASTICSEARCH_PASSWORD;
# KIBANA_PASSWORD is not defined inside the Kibana container.
elasticsearch.password: "${ELASTICSEARCH_PASSWORD}"
monitoring.ui.container.elasticsearch.enabled: true
monitoring.ui.container.logstash.enabled: true
# xpack.security.enabled is not a valid Kibana setting in 8.x and is omitted;
# security follows the Elasticsearch cluster's configuration.
xpack.encryptedSavedObjects.encryptionKey: "xpack_encryption_key_here_for_production"
xpack.reporting.encryptionKey: "xpack_encryption_key_here_for_production"

# Localization
i18n.locale: "zh-CN"

# Server settings
server.publicBaseUrl: "http://localhost:5601"
server.maxPayloadBytes: 1048576

# Enterprise Search (docker-compose.yml defines no enterprise-search service)
enterpriseSearch.host: "http://enterprise-search:3002"

3.4 Redis configuration (configs/redis/redis.conf)

# Basics
bind 0.0.0.0
port 6379
# redis.conf does not expand ${...} placeholders. The password is supplied with
# --requirepass on the command line in docker-compose.yml, so it is not set here.
# requirepass <password>
maxmemory 2gb
# Note: allkeys-lru lets Redis evict queued log entries under memory pressure
maxmemory-policy allkeys-lru

# Persistence
appendonly yes
appendfsync everysec
dir /data

# Logging
loglevel notice
logfile /var/log/redis/redis-server.log

# Performance tuning
tcp-backlog 511
timeout 0
tcp-keepalive 300
daemonize no

# Memory optimization
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-size -2
list-compress-depth 0

# Replication
replica-serve-stale-data yes
replica-read-only yes
repl-diskless-sync no
repl-diskless-sync-delay 5

# Security
rename-command FLUSHDB ""
rename-command FLUSHALL ""
rename-command CONFIG ""
rename-command KEYS ""

3.5 Filebeat configuration (application servers)

# configs/filebeat/filebeat.yml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /var/log/*.log
      - /var/log/nginx/*.log
      - /var/log/apache2/*.log
      - /var/log/syslog
      - /var/log/messages
    fields:
      log_type: "system"
      environment: "production"
      application: "${HOSTNAME}"
    fields_under_root: true
    json.keys_under_root: true
    json.add_error_key: true
    tags: ["system", "os"]

  - type: log
    enabled: true
    paths:
      - /opt/applications/*/logs/*.log
    multiline.pattern: '^\d{4}-\d{2}-\d{2}'
    multiline.negate: true
    multiline.match: after
    fields:
      log_type: "application"
    tags: ["application"]

# Output to Redis
output.redis:
  enabled: true
  hosts: ["${REDIS_HOST}:6379"]
  password: "${REDIS_PASSWORD}"
  key: "filebeat-logs"
  db: 0
  timeout: 5
  reconnect_interval: 1
  max_retries: 3
  bulk_max_size: 50

# Processors
processors:
  - add_host_metadata:
      when.not.contains.tags: forwarded
  - add_cloud_metadata: ~
  - add_docker_metadata: ~
  - drop_fields:
      fields: ["agent.ephemeral_id", "agent.id", "agent.name", "agent.type", "agent.version", "ecs.version", "host.architecture", "host.os.family", "host.os.kernel", "host.os.name", "host.os.platform", "host.os.version", "host.os.codename", "log.offset", "input.type"]
  # Note: the Logstash filters in redis-to-es.conf read the "message" field;
  # with this rename in place they would need to read "raw_message" instead.
  - rename:
      fields:
        - from: "message"
          to: "raw_message"
      ignore_missing: true
      fail_on_error: false

# Logging
logging.level: info
logging.to_files: true
logging.files:
  path: /var/log/filebeat
  name: filebeat.log
  keepfiles: 7
  permissions: 0644

🚀 4. Deployment scripts

4.1 Initialization script (scripts/init.sh)

#!/bin/bash
# ELK Docker enterprise deployment: initialization script
set -e

echo "========================================="
echo " ELK Docker enterprise deployment init"
echo "========================================="

# Check Docker and Docker Compose
check_dependencies() {
    echo "Checking dependencies..."

    # Docker
    if ! command -v docker &> /dev/null; then
        echo "❌ Docker is not installed"
        exit 1
    fi

    # Docker Compose
    if ! command -v docker-compose &> /dev/null; then
        echo "❌ Docker Compose is not installed"
        exit 1
    fi

    # Docker service state
    if ! systemctl is-active --quiet docker; then
        echo "Starting the Docker service..."
        sudo systemctl start docker
        sudo systemctl enable docker
    fi

    echo "✅ Dependency check passed"
}

# Create the required directories and set permissions
setup_directories() {
    echo "Creating the directory structure..."

    # Data and log directories
    sudo mkdir -p ${ELK_DATA_PATH:-./data}/{elasticsearch,redis,logstash,prometheus}
    sudo mkdir -p ${ELK_LOGS_PATH:-./logs}/{elasticsearch,kibana,logstash,filebeat,redis}

    # Elasticsearch runs as UID/GID 1000 inside the container and must own its data directory
    if [ -d "${ELK_DATA_PATH:-./data}/elasticsearch" ]; then
        echo "Setting Elasticsearch directory permissions..."
        sudo chown -R 1000:1000 "${ELK_DATA_PATH:-./data}/elasticsearch"
        sudo chmod -R 755 "${ELK_DATA_PATH:-./data}/elasticsearch"
    fi

    # Configuration directories
    mkdir -p configs/{elasticsearch,kibana,logstash/{patterns,conf.d},filebeat,redis,prometheus}
    mkdir -p grafana/{dashboards,provisioning}
    mkdir -p certificates
    mkdir -p scripts

    echo "✅ Directory structure created"
}

# Generate configuration files
generate_configs() {
    echo "Generating configuration files..."

    # Copy a template only when the target is missing and the template exists.
    # The templates/ directory is not part of the layout in section 1.2, and an
    # unguarded cp would abort the script under `set -e`.
    copy_template() {
        if [ ! -f "$2" ] && [ -f "$1" ]; then
            cp "$1" "$2"
        fi
    }
    copy_template templates/elasticsearch.yml configs/elasticsearch/elasticsearch.yml
    copy_template templates/kibana.yml configs/kibana/kibana.yml
    copy_template templates/logstash.yml configs/logstash/logstash.yml
    copy_template templates/redis.conf configs/redis/redis.conf

    # Generate a sample Logstash pipeline
    if [ ! -f "configs/logstash/conf.d/redis-to-es.conf" ]; then
        cat > configs/logstash/conf.d/redis-to-es.conf << 'EOF'
input {
  redis {
    host => "redis-master"
    port => 6379
    password => "${REDIS_PASSWORD}"
    key => "filebeat-logs"
    data_type => "list"
  }
}

filter {
  # Add your filters here
}

output {
  elasticsearch {
    hosts => ["elasticsearch-node1:9200"]
    index => "logs-%{+YYYY.MM.dd}"
    user => "elastic"
    password => "${ELASTIC_PASSWORD}"
  }
}
EOF
    fi

    echo "✅ Configuration files generated"
}

# Generate the environment file
setup_env() {
    if [ ! -f ".env" ]; then
        echo "Generating .env..."

        # Generate random passwords
        ELASTIC_PASSWORD=$(openssl rand -base64 32 | tr -dc 'a-zA-Z0-9' | head -c 16)
        KIBANA_PASSWORD=$(openssl rand -base64 32 | tr -dc 'a-zA-Z0-9' | head -c 16)
        REDIS_PASSWORD=$(openssl rand -base64 32 | tr -dc 'a-zA-Z0-9' | head -c 16)
        LOGSTASH_SYSTEM_PASSWORD=$(openssl rand -base64 32 | tr -dc 'a-zA-Z0-9' | head -c 16)
        BEATS_SYSTEM_PASSWORD=$(openssl rand -base64 32 | tr -dc 'a-zA-Z0-9' | head -c 16)

        cat > .env << EOF
# ELK version
ELK_VERSION=8.11.0
REDIS_VERSION=7.2

# Network
NETWORK_NAME=elk-network
SUBNET=172.20.0.0/16

# Elasticsearch
ES_CLUSTER_NAME=elk-enterprise-cluster
ES_NODE_NAME=node-1
ES_DISCOVERY_TYPE=single-node
ES_HEAP_SIZE=4g
ES_JAVA_OPTS=-Xms4g -Xmx4g

# Security
ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
KIBANA_PASSWORD=${KIBANA_PASSWORD}
LOGSTASH_SYSTEM_PASSWORD=${LOGSTASH_SYSTEM_PASSWORD}
BEATS_SYSTEM_PASSWORD=${BEATS_SYSTEM_PASSWORD}

# Redis
REDIS_PASSWORD=${REDIS_PASSWORD}
REDIS_MAXMEMORY=2gb

# Storage paths
ELK_DATA_PATH=./data
ELK_LOGS_PATH=./logs

# Resource limits
ES_CPU_LIMIT=4
ES_MEMORY_LIMIT=8g
LOGSTASH_CPU_LIMIT=2
LOGSTASH_MEMORY_LIMIT=4g
KIBANA_CPU_LIMIT=1
KIBANA_MEMORY_LIMIT=2g
EOF

        echo "✅ .env generated"
        echo "⚠️ Save the following passwords:"
        echo " Elasticsearch: ${ELASTIC_PASSWORD}"
        echo " Kibana: ${KIBANA_PASSWORD}"
        echo " Redis: ${REDIS_PASSWORD}"
        echo " Logstash System: ${LOGSTASH_SYSTEM_PASSWORD}"
    else
        echo "✅ .env already exists"
    fi
}

# Pull Docker images
pull_images() {
    echo "Pulling Docker images..."

    # ELK and Redis images
    docker-compose pull elasticsearch-node1
    docker-compose pull kibana-node1
    docker-compose pull logstash-node1
    docker-compose pull redis-master

    # Monitoring tool images
    docker-compose pull cerebro
    docker-compose pull prometheus
    docker-compose pull grafana

    echo "✅ Images pulled"
}

# Set kernel and system parameters
setup_system_params() {
    echo "Setting system parameters..."

    # Raise the mmap count limit (required by Elasticsearch)
    if [ "$(sysctl -n vm.max_map_count)" -lt 262144 ]; then
        echo "Raising vm.max_map_count..."
        sudo sysctl -w vm.max_map_count=262144
        echo "vm.max_map_count=262144" | sudo tee -a /etc/sysctl.conf
    fi

    # Raise the file descriptor limit
    if [ "$(ulimit -n)" -lt 65536 ]; then
        echo "Raising the file descriptor limit..."
        echo "* soft nofile 65536" | sudo tee -a /etc/security/limits.conf
        echo "* hard nofile 65536" | sudo tee -a /etc/security/limits.conf
        echo "session required pam_limits.so" | sudo tee -a /etc/pam.d/common-session
    fi

    # Reduce swappiness (this lowers swap usage; it does not disable swap)
    if [ "$(sysctl -n vm.swappiness)" -gt 1 ]; then
        echo "Lowering swappiness..."
        sudo sysctl -w vm.swappiness=1
        echo "vm.swappiness=1" | sudo tee -a /etc/sysctl.conf
    fi

    sudo sysctl -p

    echo "✅ System parameters set"
}

# Main
main() {
    echo "Starting ELK Docker deployment initialization..."

    check_dependencies
    setup_directories
    setup_env
    generate_configs
    setup_system_params
    pull_images

    echo ""
    echo "========================================="
    echo " Initialization complete!"
    echo " Next steps:"
    echo " 1. Review the settings in .env"
    echo " 2. Run: docker-compose up -d"
    echo " 3. Open Kibana: http://localhost:5601"
    echo "========================================="
}

# Run main
main "$@"

4.2 Start script (scripts/start.sh)
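The start script in this section waits for Elasticsearch by polling the cluster health endpoint in a fixed loop. That pattern can be factored into a small retry helper. The following is a minimal sketch under stated assumptions: `wait_until` and `es_ready` are hypothetical names, and `es_ready` assumes the `elastic` user and port 9200 published on localhost, as in the Compose file.

```shell
#!/usr/bin/env bash
# wait_until ATTEMPTS DELAY CMD...: runs CMD until it succeeds, at most
# ATTEMPTS times, sleeping DELAY seconds between tries. Returns 0 on the
# first success and 1 if every attempt fails.
wait_until() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# es_ready: succeeds when the cluster reports green or yellow.
es_ready() {
  curl -s -u "elastic:${ELASTIC_PASSWORD}" http://localhost:9200/_cluster/health \
    | grep -Eq '"status":"(green|yellow)"'
}

# Usage (not executed here):
# wait_until 30 10 es_ready || { echo "Elasticsearch did not become ready"; exit 1; }
```

Returning a status instead of only breaking out of the loop lets the caller stop the rollout when the cluster never becomes ready.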

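#!/bin/bash
# ELK Docker start script
set -e

echo "========================================="
echo " Starting the ELK Docker cluster"
echo "========================================="

# Load environment variables
if [ -f .env ]; then
    # Read KEY=VALUE lines one at a time so that values containing spaces
    # (such as ES_JAVA_OPTS) are exported intact
    while IFS='=' read -r key value; do
        case "$key" in
            ''|\#*) continue ;;
        esac
        export "$key=$value"
    done < .env
fi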

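start_services() {
    echo "Starting services..."

    # Start services in dependency order
    echo "1. Starting Redis..."
    docker-compose up -d redis-master redis-replica

    echo "Waiting for Redis..."
    sleep 10

    echo "2. Starting the Elasticsearch cluster..."
    # All three nodes are listed in cluster.initial_master_nodes, so node1 cannot
    # elect a master on its own; start the nodes together
    docker-compose up -d elasticsearch-node1 elasticsearch-node2 elasticsearch-node3
    sleep 30

    # Check Elasticsearch health
    echo "Checking Elasticsearch cluster status..."
    ES_READY=false
    for i in {1..30}; do
        STATUS=$(curl -s -u "elastic:${ELASTIC_PASSWORD}" http://localhost:9200/_cluster/health | jq -r '.status')
        if [ "$STATUS" = "green" ] || [ "$STATUS" = "yellow" ]; then
            echo "✅ Elasticsearch cluster status: $STATUS"
            ES_READY=true
            break
        fi
        echo "Waiting for Elasticsearch ($i/30)..."
        sleep 10
    done

    # Stop here instead of starting dependents against a cluster that never came up
    if [ "$ES_READY" != true ]; then
        echo "❌ Elasticsearch did not become ready"
        exit 1
    fi

    echo "3. Starting Logstash..."
    docker-compose up -d logstash-node1 logstash-node2

    echo "4. Starting Kibana..."
    docker-compose up -d kibana-node1 kibana-node2

    echo "5. Starting monitoring tools..."
    docker-compose up -d cerebro prometheus grafana

    echo "✅ All services started"
}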

check_services() {
    echo ""
    echo "Checking service status..."

    services=("redis-master" "elasticsearch-node1" "kibana-node1" "logstash-node1" "cerebro" "prometheus" "grafana")

    for service in "${services[@]}"; do
        if docker-compose ps | grep -q "$service.*Up"; then
            echo "✅ $service is running"
        else
            echo "❌ $service is not running"
        fi
    done
}

show_access_info() {
    echo ""
    echo "========================================="
    echo " Access information"
    echo "========================================="

    IP_ADDRESS=$(hostname -I | awk '{print $1}')

    echo "🔗 Kibana:"
    echo " - Primary node: http://${IP_ADDRESS}:5601"
    echo " - Secondary node: http://${IP_ADDRESS}:5602"
    echo " - Username: elastic"
    echo " - Password: ${ELASTIC_PASSWORD}"
    echo ""
    echo "🔗 Elasticsearch API:"
    echo " - URL: http://${IP_ADDRESS}:9200"
    echo " - Username: elastic"
    echo " - Password: ${ELASTIC_PASSWORD}"
    echo ""
    echo "🔗 Monitoring tools:"
    echo " - Cerebro (ES cluster management): http://${IP_ADDRESS}:9000"
    echo " - Prometheus: http://${IP_ADDRESS}:9090"
    echo " - Grafana: http://${IP_ADDRESS}:3000 (admin/admin)"
    echo ""
    echo "🔗 Log collection:"
    echo " - Redis: ${IP_ADDRESS}:6379"
    echo " - Logstash TCP: ${IP_ADDRESS}:5000"
    echo " - Logstash Beats: ${IP_ADDRESS}:5044"
}

main() {
    echo "Current directory: $(pwd)"

    # Check for the Docker Compose file
    if [ ! -f "docker-compose.yml" ]; then
        echo "❌ docker-compose.yml not found"
        exit 1
    fi

    start_services
    check_services
    show_access_info

    echo ""
    echo "📋 Next steps:"
    echo " 1. Open Kibana and create an index pattern"
    echo " 2. Configure Filebeat to collect logs"
    echo " 3. Review the Grafana dashboards"
}

main "$@"

4.3 Health check script (scripts/health-check.sh)
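The health check script in this section grades disk, CPU and memory usage against warning and critical thresholds in three separate if/elif chains. The shared logic can be sketched as a single function. This is a minimal illustration only: `classify_usage` and `disk_usage_percent` are hypothetical names, and the thresholds are passed in rather than fixed.

```shell
#!/usr/bin/env bash
# classify_usage PERCENT WARN CRIT: prints ok, warn, or crit for an integer
# percentage, using strict "greater than" comparisons.
classify_usage() {
  if [ "$1" -gt "$3" ]; then
    echo crit
  elif [ "$1" -gt "$2" ]; then
    echo warn
  else
    echo ok
  fi
}

# disk_usage_percent PATH: integer use% of the filesystem holding PATH,
# taken from the fifth column of POSIX-format df output.
disk_usage_percent() {
  df -P "$1" | awk 'NR == 2 { gsub("%", "", $5); print $5 }'
}

classify_usage 85 80 90   # prints: warn
```

A disk check then reduces to `classify_usage "$(disk_usage_percent "${ELK_DATA_PATH:-./data}")" 80 90`, with the colored output chosen from the returned word.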

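#!/bin/bash
# ELK cluster health check script
# `set -e` is deliberately not used here: a failed probe should be reported,
# not abort the remaining checks.

echo "========================================="
echo " ELK cluster health check"
echo "========================================="

# Colors
RED='\033[0;31m'
GREEN='\033[0;32m'
YELLOW='\033[1;33m'
NC='\033[0m' # No Color

# Load environment variables
if [ -f .env ]; then
    # Read KEY=VALUE lines one at a time so that values containing spaces
    # (such as ES_JAVA_OPTS) are exported intact
    while IFS='=' read -r key value; do
        case "$key" in
            ''|\#*) continue ;;
        esac
        export "$key=$value"
    done < .env
fi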

check_docker_services() {
    echo "1. Docker service status:"
    echo "----------------------------------------"

    docker-compose ps --format "table {{.Name}}\t{{.Status}}\t{{.Ports}}" | while read -r line; do
        if [[ $line == *"Up"* ]]; then
            echo -e "${GREEN}✓${NC} $line"
        elif [[ $line == *"Exit"* ]] || [[ $line == *"unhealthy"* ]]; then
            echo -e "${RED}✗${NC} $line"
        else
            echo " $line"
        fi
    done
}

check_elasticsearch() {
    echo ""
    echo "2. Elasticsearch cluster health:"
    echo "----------------------------------------"

    if command -v curl &> /dev/null && command -v jq &> /dev/null; then
        ES_RESPONSE=$(curl -s -u "elastic:${ELASTIC_PASSWORD}" http://localhost:9200/_cluster/health)
        ES_STATUS=$(echo "$ES_RESPONSE" | jq -r '.status')
        ES_NODES=$(echo "$ES_RESPONSE" | jq -r '.number_of_nodes')
        ES_DATA_NODES=$(echo "$ES_RESPONSE" | jq -r '.number_of_data_nodes')
        ES_PENDING_TASKS=$(echo "$ES_RESPONSE" | jq -r '.number_of_pending_tasks')

        case $ES_STATUS in
            "green")
                COLOR=$GREEN
                STATUS_ICON="✓"
                ;;
            "yellow")
                COLOR=$YELLOW
                STATUS_ICON="⚠"
                ;;
            "red")
                COLOR=$RED
                STATUS_ICON="✗"
                ;;
            *)
                COLOR=$RED
                STATUS_ICON="?"
                ;;
        esac

        echo -e " Status: ${COLOR}${STATUS_ICON} ${ES_STATUS}${NC}"
        echo " Nodes: $ES_NODES (data nodes: $ES_DATA_NODES)"
        echo " Pending tasks: $ES_PENDING_TASKS"

        # Index status (first 10 lines)
        echo ""
        echo " Index status:"
        curl -s -u "elastic:${ELASTIC_PASSWORD}" "http://localhost:9200/_cat/indices?v&s=index" | head -10
    else
        echo " curl and jq are required"
    fi
}

check_kibana() {
    echo ""
    echo "3. Kibana status:"
    echo "----------------------------------------"

    if command -v curl &> /dev/null; then
        if curl -s -f http://localhost:5601/api/status > /dev/null; then
            echo -e " ${GREEN}✓ Kibana is running${NC}"

            # Overall status: 8.x reports .status.overall.level, older releases .state
            KIBANA_STATUS=$(curl -s http://localhost:5601/api/status | jq -r '.status.overall.level // .status.overall.state')
            echo " Status: $KIBANA_STATUS"
        else
            echo -e " ${RED}✗ Kibana is unreachable${NC}"
        fi
    fi
}

check_redis() {
    echo ""
    echo "4. Redis status:"
    echo "----------------------------------------"

    if command -v redis-cli &> /dev/null; then
        if redis-cli -a "${REDIS_PASSWORD}" ping 2>/dev/null | grep -q "PONG"; then
            echo -e " ${GREEN}✓ Redis is running${NC}"

            # Fetch Redis info
            REDIS_INFO=$(redis-cli -a "${REDIS_PASSWORD}" info 2>/dev/null)

            # Memory usage
            USED_MEMORY=$(echo "$REDIS_INFO" | grep "used_memory_human" | cut -d: -f2)
            MAX_MEMORY=$(echo "$REDIS_INFO" | grep "maxmemory_human" | cut -d: -f2)
            echo " Memory usage: $USED_MEMORY / $MAX_MEMORY"

            # Connected clients
            CONNECTIONS=$(echo "$REDIS_INFO" | grep "connected_clients" | cut -d: -f2)
            echo " Connections: $CONNECTIONS"

            # Queue length
            QUEUE_LENGTH=$(redis-cli -a "${REDIS_PASSWORD}" llen filebeat-logs 2>/dev/null)
            echo " Log queue length: $QUEUE_LENGTH"
        else
            echo -e " ${RED}✗ Cannot connect to Redis${NC}"
        fi
    fi
}

check_disk_space() {
    echo ""
    echo "5. Disk space:"
    echo "----------------------------------------"

    # -P keeps each filesystem on a single line; tail skips the header row
    df -hP "${ELK_DATA_PATH:-./data}" 2>/dev/null | tail -n +2 | while IFS= read -r line; do
        USAGE=$(echo "$line" | awk '{print $5}' | tr -d '%')
        if [ "$USAGE" -gt 90 ]; then
            echo -e "  ${RED}⚠ $line${NC}"
        elif [ "$USAGE" -gt 80 ]; then
            echo -e "  ${YELLOW}⚠ $line${NC}"
        else
            echo -e "  ${GREEN}✓ $line${NC}"
        fi
    done
}

check_system_resources() {
    echo ""
    echo "6. System resources:"
    echo "----------------------------------------"

    # CPU usage: 100 minus the idle percentage reported by top
    # (the second field alone would only be user-space CPU)
    CPU_USAGE=$(LC_ALL=C top -bn1 | grep "Cpu(s)" | sed 's/.*, *\([0-9.]*\)%* *id.*/\1/' | awk '{print 100 - $1}')
    echo -n "  CPU usage: "
    # awk handles the floating-point comparison, so bc is not required
    if awk -v u="$CPU_USAGE" 'BEGIN { exit !(u > 80) }'; then
        echo -e "${RED}${CPU_USAGE}% ⚠${NC}"
    elif awk -v u="$CPU_USAGE" 'BEGIN { exit !(u > 60) }'; then
        echo -e "${YELLOW}${CPU_USAGE}%${NC}"
    else
        echo -e "${GREEN}${CPU_USAGE}% ✓${NC}"
    fi

    # Memory usage
    MEM_TOTAL=$(free -m | grep Mem | awk '{print $2}')
    MEM_USED=$(free -m | grep Mem | awk '{print $3}')
    MEM_USAGE_PERCENT=$((MEM_USED * 100 / MEM_TOTAL))
    echo -n "  Memory usage: "
    if [ "$MEM_USAGE_PERCENT" -gt 80 ]; then
        echo -e "${RED}${MEM_USAGE_PERCENT}% ⚠${NC}"
    elif [ "$MEM_USAGE_PERCENT" -gt 60 ]; then
        echo -e "${YELLOW}${MEM_USAGE_PERCENT}%${NC}"
    else
        echo -e "${GREEN}${MEM_USAGE_PERCENT}% ✓${NC}"
    fi
}

check_logstash_pipelines() {
    echo ""
    echo "7. Logstash pipeline status:"
    echo "----------------------------------------"

    if command -v jq &> /dev/null; then
        for node in node1 node2; do
            # Query each node's monitoring API from inside its own container;
            # calling localhost:9600 on the host would report the same node twice.
            # Assumes curl is available in the Logstash image.
            STATS=$(docker exec "logstash-$node" curl -s "http://localhost:9600/_node/stats/pipelines" 2>/dev/null)

            if echo "$STATS" | jq -e '.pipelines.main' > /dev/null 2>&1; then
                echo -e "  ${GREEN}✓ Logstash $node pipeline is running${NC}"

                # Event counters, read from the same response
                EVENTS_IN=$(echo "$STATS" | jq -r '.pipelines.main.events.in')
                EVENTS_OUT=$(echo "$STATS" | jq -r '.pipelines.main.events.out')
                echo "  Events: in $EVENTS_IN / out $EVENTS_OUT"
            else
                echo -e "  ${YELLOW}⚠ Logstash $node pipeline status unknown${NC}"
            fi
        done
    fi
}

generate_report() {
    echo ""
    echo "========================================="
    echo " Health check report"
    echo "========================================="

    TIMESTAMP=$(date "+%Y-%m-%d %H:%M:%S")
    echo "Checked at: $TIMESTAMP"

    check_docker_services
    check_elasticsearch
    check_kibana
    check_redis
    check_logstash_pipelines
    check_disk_space
    check_system_resources

    echo ""
    echo "========================================="
    echo " Recommended actions"
    echo "========================================="

    # Suggest follow-up actions based on the results
    if docker-compose ps | grep -q "Exit"; then
        echo "🔧 A service has exited abnormally; run: docker-compose logs [service]"
    fi

    # Match 90-100% (a plain 9[0-9]% pattern would miss a full disk)
    if df -hP "${ELK_DATA_PATH:-./data}" 2>/dev/null | awk 'NR > 1 {print $5}' | grep -qE '^(9[0-9]|100)%'; then
        echo "💾 Disk space is almost exhausted; delete old indices or add capacity"
    fi

    echo ""
    echo "📋 Common commands:"
    echo "  docker-compose logs -f [service]    # view logs"
    echo "  docker-compose restart [service]    # restart a service"
    echo "  docker-compose ps                   # show status"
    echo "  ./scripts/backup.sh                 # run a backup"
}

main() {
    # Make sure the script is run from the project directory
    if [ ! -f "docker-compose.yml" ]; then
        echo "Run this script from the ELK project directory"
        exit 1
    fi

    generate_report
}

main "$@"

🐳 5. Docker Swarm/Kubernetes Deployment

5.1 Docker Stack deployment file (docker-stack.yml)

version: '3.8'

networks:
  elk-overlay:
    driver: overlay
    attachable: true

configs:
  elasticsearch-config:
    file: ./configs/elasticsearch/elasticsearch.yml
  kibana-config:
    file: ./configs/kibana/kibana.yml
  logstash-config:
    file: ./configs/logstash/logstash.yml
  redis-config:
    file: ./configs/redis/redis.conf

secrets:
  elastic-password:
    external: true
  kibana-password:
    external: true
  redis-password:
    external: true

services:
  redis-master:
    image: redis:7.2-alpine
    deploy:
      mode: replicated
      replicas: 1
      placement:
        constraints:
          - node.role == manager
      resources:
        limits:
          cpus: '1'
          memory: 2G
        reservations:
          cpus: '0.5'
          memory: 1G
    configs:
      - source: redis-config
        target: /usr/local/etc/redis/redis.conf
    # Redis does not read *_FILE variables, so the password is loaded from the
    # mounted secret at startup ($$ escapes $ from stack-file interpolation).
    command:
      - sh
      - -c
      - exec redis-server /usr/local/etc/redis/redis.conf --requirepass "$$(cat /run/secrets/redis-password)"
    secrets:
      - redis-password
    volumes:
      - redis-data:/data
    networks:
      - elk-overlay
    ports:
      - target: 6379
        published: 6379
        mode: host

  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.11.0
    deploy:
      mode: global
      placement:
        constraints:
          - node.labels.elk.role == elasticsearch
      resources:
        limits:
          cpus: '4'
          memory: 8G
        reservations:
          cpus: '2'
          memory: 4G
    configs:
      - source: elasticsearch-config
        target: /usr/share/elasticsearch/config/elasticsearch.yml
    secrets:
      - elastic-password
    environment:
      - node.name={{.Node.Hostname}}
      - cluster.name=elk-cluster
      # tasks.<service> resolves to every task IP; the bare service name
      # resolves only to the service's virtual IP
      - discovery.seed_hosts=tasks.elasticsearch
      # Placeholder: must list the node.name values (the Swarm node hostnames)
      # of the master-eligible nodes
      - cluster.initial_master_nodes=elasticsearch
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms4g -Xmx4g"
      - ELASTIC_PASSWORD_FILE=/run/secrets/elastic-password
    volumes:
      - elasticsearch-data:/usr/share/elasticsearch/data
    networks:
      - elk-overlay
    ports:
      - target: 9200
        published: 9200
        mode: host
      - target: 9300
        published: 9300
        mode: host

  logstash:
    image: docker.elastic.co/logstash/logstash:8.11.0
    deploy:
      mode: replicated
      replicas: 2
      resources:
        limits:
          cpus: '2'
          memory: 4G
        reservations:
          cpus: '1'
          memory: 2G
    configs:
      - source: logstash-config
        target: /usr/share/logstash/config/logstash.yml
    # Bind-mounted paths must exist on every Swarm node that runs Logstash.
    # conf.d matches the directory layout from section 1.2.
    volumes:
      - ./configs/logstash/conf.d:/usr/share/logstash/pipeline
      - ./configs/logstash/patterns:/usr/share/logstash/patterns
    networks:
      - elk-overlay
    ports:
      - target: 5044
        published: 5044
        mode: host
      - target: 5000
        published: 5000
        mode: host

  kibana:
    image: docker.elastic.co/kibana/kibana:8.11.0
    deploy:
      mode: replicated
      replicas: 2
      placement:
        constraints:
          - node.labels.elk.role == kibana
      resources:
        limits:
          cpus: '1'
          memory: 2G
        reservations:
          cpus: '0.5'
          memory: 1G
    configs:
      - source: kibana-config
        target: /usr/share/kibana/config/kibana.yml
    secrets:
      - kibana-password
    environment:
      - ELASTICSEARCH_HOSTS=http://elasticsearch:9200
      - ELASTICSEARCH_PASSWORD_FILE=/run/secrets/kibana-password
    networks:
      - elk-overlay
    ports:
      - target: 5601
        published: 5601
        mode: host

volumes:
  elasticsearch-data:
    driver: local
  redis-data:
    driver: local

5.2 Kubernetes deployment example (k8s/elk-deployment.yaml)

# Note: the StatefulSets below reference headless Services (serviceName: redis,
# serviceName: elasticsearch) and the Secrets redis-secret and
# elasticsearch-secret. None of these are defined in this file; create them
# in the elk namespace first.
apiVersion: v1
kind: Namespace
metadata:
  name: elk
---
# Redis StatefulSet
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis
  namespace: elk
spec:
  serviceName: redis
  # No replication is configured, so these are three independent Redis instances
  replicas: 3
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
        role: master
    spec:
      containers:
        - name: redis
          image: redis:7.2-alpine
          ports:
            - containerPort: 6379
          env:
            - name: REDIS_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: redis-secret
                  key: password
          command: ["redis-server"]
          args: ["--requirepass", "$(REDIS_PASSWORD)"]
          volumeMounts:
            - name: redis-data
              mountPath: /data
          resources:
            requests:
              memory: "1Gi"
              cpu: "500m"
            limits:
              memory: "2Gi"
              cpu: "1"
  volumeClaimTemplates:
    - metadata:
        name: redis-data
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 10Gi
---
# Elasticsearch StatefulSet
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: elasticsearch
  namespace: elk
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      initContainers:
        - name: increase-vm-max-map
          image: busybox
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        # The original "ulimit -n 65536" init container is omitted: ulimit only
        # affects the shell that runs it, not the Elasticsearch container.
        # Set file-descriptor limits in the container runtime instead.
      containers:
        - name: elasticsearch
          image: docker.elastic.co/elasticsearch/elasticsearch:8.11.0
          env:
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: cluster.name
              value: "elk-cluster"
            - name: discovery.seed_hosts
              value: "elasticsearch-0.elasticsearch,elasticsearch-1.elasticsearch,elasticsearch-2.elasticsearch"
            - name: cluster.initial_master_nodes
              value: "elasticsearch-0,elasticsearch-1,elasticsearch-2"
            - name: ES_JAVA_OPTS
              value: "-Xms4g -Xmx4g"
            - name: ELASTIC_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elasticsearch-secret
                  key: password
          ports:
            - containerPort: 9200
              name: http
            - containerPort: 9300
              name: transport
          volumeMounts:
            - name: elasticsearch-data
              mountPath: /usr/share/elasticsearch/data
          resources:
            requests:
              memory: "4Gi"
              cpu: "2"
            limits:
              memory: "8Gi"
              cpu: "4"
  volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 50Gi

📊 6. Monitoring and Alerting

6.1 Prometheus configuration (configs/prometheus/prometheus.yml)

global:
  scrape_interval: 15s
  evaluation_interval: 15s

alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9090']

  # Elasticsearch, Logstash and Redis do not serve Prometheus metrics on their
  # own ports (Redis does not speak HTTP, and Logstash /_node/stats returns
  # JSON). The three jobs below therefore assume separate exporter containers.
  # The service names and ports are assumptions; match them to the exporters
  # you deploy. Credentials belong in the exporter's configuration, because
  # Prometheus does not expand ${VAR} placeholders in this file.
  - job_name: 'elasticsearch'
    static_configs:
      - targets: ['elasticsearch-exporter:9114']

  - job_name: 'logstash'
    static_configs:
      - targets: ['logstash-exporter:9198']

  - job_name: 'redis'
    static_configs:
      - targets: ['redis-exporter:9121']

  - job_name: 'node-exporter'
    static_configs:
      - targets: ['node-exporter:9100']

  - job_name: 'cadvisor'
    static_configs:
      - targets: ['cadvisor:8080']

6.2 Importing Grafana dashboards

Create grafana/provisioning/dashboards/dashboard.yml:

apiVersion: 1

providers:
  - name: 'default'
    orgId: 1
    folder: ''
    type: file
    disableDeletion: false
    editable: true
    options:
      path: /var/lib/grafana/dashboards

6.3 ELK alerting rules

# configs/prometheus/rules/elk-rules.yml
groups:
  - name: elk_alerts
    rules:
      - alert: ElasticsearchClusterRed
        expr: elasticsearch_cluster_health_status{color="red"} == 1
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: "Elasticsearch cluster is red"
          description: "Cluster {{ $labels.cluster }} has been in red state for more than 2 minutes."

      - alert: ElasticsearchClusterYellow
        expr: elasticsearch_cluster_health_status{color="yellow"} == 1
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Elasticsearch cluster is yellow"
          description: "Cluster {{ $labels.cluster }} has been in yellow state for more than 5 minutes."

      # Restrict to the heap area; the non-heap maximum can be reported as 0
      - alert: ElasticsearchHighMemoryUsage
        expr: elasticsearch_jvm_memory_used_bytes{area="heap"} / elasticsearch_jvm_memory_max_bytes{area="heap"} > 0.8
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Elasticsearch high memory usage on {{ $labels.instance }}"
          description: "JVM memory usage is {{ $value | humanizePercentage }}."

      # The max_bytes guard avoids a division by zero when maxmemory is unset
      - alert: RedisMemoryHighUsage
        expr: redis_memory_used_bytes / redis_memory_max_bytes > 0.85 and redis_memory_max_bytes > 0
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Redis high memory usage on {{ $labels.instance }}"
          description: "Redis memory usage is {{ $value | humanizePercentage }}."

      # increase() over 5m is 0 when the output counter has not moved
      - alert: LogstashPipelineDown
        expr: increase(logstash_node_pipeline_events_out_total[5m]) == 0
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: "Logstash pipeline is down on {{ $labels.instance }}"
          description: "No events processed in the last 5 minutes."

🔧 7. Backup and Restore Strategy

7.1 Backup script (scripts/backup.sh)

#!/bin/bash
# ELK data backup script

set -e

echo "========================================="
echo " ELK data backup"
echo "========================================="

# Load environment variables (set -a exports everything the file defines,
# and unlike the xargs approach it tolerates values that contain spaces)
if [ -f .env ]; then
    set -a
    . ./.env
    set +a
fi

BACKUP_DIR="/backup/elk"
TIMESTAMP=$(date +"%Y%m%d_%H%M%S")
BACKUP_PATH="${BACKUP_DIR}/${TIMESTAMP}"

create_backup_dir() {
    echo "Creating backup directory: $BACKUP_PATH"
    mkdir -p "$BACKUP_PATH"/{elasticsearch,redis,configs,certificates}
}

backup_elasticsearch() {
    echo "Backing up Elasticsearch data..."

    # Register the snapshot repository. The location must be listed under
    # path.repo in elasticsearch.yml and, in a multi-node cluster, must be a
    # filesystem shared by all nodes.
    curl -s -X PUT "http://localhost:9200/_snapshot/backup" \
        -u "elastic:${ELASTIC_PASSWORD}" \
        -H 'Content-Type: application/json' \
        -d '{
            "type": "fs",
            "settings": {
                "location": "/usr/share/elasticsearch/backup"
            }
        }' 2>/dev/null || true

    # Create the snapshot; -f makes curl fail on HTTP errors so set -e aborts
    SNAPSHOT_NAME="snapshot_${TIMESTAMP}"
    curl -sS -f -X PUT "http://localhost:9200/_snapshot/backup/${SNAPSHOT_NAME}?wait_for_completion=true" \
        -u "elastic:${ELASTIC_PASSWORD}" \
        -H 'Content-Type: application/json' \
        -d '{
            "indices": "*,-.monitoring*,-.watcher-history*",
            "ignore_unavailable": true,
            "include_global_state": false
        }'

    # Copy the snapshot repository into the backup directory
    docker cp elasticsearch-node1:/usr/share/elasticsearch/backup "$BACKUP_PATH/elasticsearch/"

    echo "✅ Elasticsearch backup complete"
}

backup_redis() {
    echo "Backing up Redis data..."

    # Write the RDB file (SAVE blocks until the dump is complete)
    docker exec redis-master redis-cli -a "${REDIS_PASSWORD}" save

    # Copy the RDB file
    docker cp redis-master:/data/dump.rdb "$BACKUP_PATH/redis/"

    echo "✅ Redis backup complete"
}

backup_configs() {
    echo "Backing up configuration files..."

    # Back up all configuration files.
    # .env contains passwords, so protect or encrypt the resulting archive.
    cp -r configs "$BACKUP_PATH/"
    cp docker-compose.yml "$BACKUP_PATH/"
    cp .env "$BACKUP_PATH/"

    # Back up certificates
    if [ -d "certificates" ]; then
        cp -r certificates "$BACKUP_PATH/"
    fi

    echo "✅ Configuration backup complete"
}

create_archive() {
    echo "Creating backup archive..."

    BACKUP_FILE="elk_backup_${TIMESTAMP}.tar.gz"

    cd "$BACKUP_DIR"
    tar -czf "$BACKUP_FILE" "${TIMESTAMP}"

    # Remove the temporary working directory
    rm -rf "${TIMESTAMP}"

    BACKUP_SIZE=$(du -h "$BACKUP_FILE" | cut -f1)
    echo "✅ Backup archive created: $BACKUP_FILE ($BACKUP_SIZE)"

    # Keep only the last 7 days of backups
    echo "Removing old backups..."
    find "$BACKUP_DIR" -name "elk_backup_*.tar.gz" -mtime +7 -delete
}

main() {
    echo "Starting ELK data backup..."

    create_backup_dir
    backup_elasticsearch
    backup_redis
    backup_configs
    create_archive

    echo ""
    echo "========================================="
    echo " Backup complete!"
    echo " Backup file: $BACKUP_DIR/$BACKUP_FILE"
    echo "========================================="
}

main "$@"

🎯 8. Enterprise Best Practices

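8.1 Security hardening recommendations

# 1. Use TLS encryption
# Generate a self-signed certificate (modern clients require a subjectAltName)
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
    -keyout certificates/elk.key -out certificates/elk.crt \
    -subj "/C=CN/ST=Beijing/L=Beijing/O=Company/CN=elk.company.com" \
    -addext "subjectAltName=DNS:elk.company.com"

# 2. Configure the firewall
# Allow only the required ports. Ports published by Docker bypass ufw rules
# by default, so also restrict the port bindings in docker-compose.yml.
sudo ufw allow 22/tcp
sudo ufw allow 5601/tcp    # Kibana
# Elasticsearch API, internal only (10.0.0.0/8 is an example range; use your own)
sudo ufw allow from 10.0.0.0/8 to any port 9200 proto tcp
sudo ufw enable

# 3. Rotate passwords regularly
# (rotate-passwords.sh is not among the scripts listed in section 1.2; you need to provide it)
./scripts/rotate-passwords.sh

# 4. Enable audit logging
# Add to the Elasticsearch configuration:
xpack.security.audit.enabled: true
xpack.security.audit.logfile.events.include: authentication_failed, access_denied

8.2 Performance tuning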

# Docker Compose resource limits
services:
  elasticsearch:
    deploy:
      resources:
        limits:
          cpus: '4'
          memory: 8G
        reservations:
          cpus: '2'
          memory: 4G
    ulimits:
      memlock:
        soft: -1
        hard: -1
      nofile:
        soft: 65536
        hard: 65536

# Elasticsearch JVM tuning
# configs/elasticsearch/jvm.options
# Heap is set to half of the 8G container limit; keep -Xms and -Xmx equal
-Xms4g
-Xmx4g
-XX:+UseG1GC
-XX:MaxGCPauseMillis=200
-XX:InitiatingHeapOccupancyPercent=35
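
The heap values above are half of the 8G container limit. As a rough sketch of that sizing rule, the snippet below derives the two jvm.options lines from a memory limit. It assumes the common rule of thumb of half the available memory, capped at 31 GB; `recommend_heap_gb` is a hypothetical helper, not part of the deployment scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: suggest an Elasticsearch heap size in GB for a
# container memory limit given in whole GB.
# Assumption: heap = half of the memory, at least 1 GB, at most 31 GB.
recommend_heap_gb() {
    mem_gb=$1
    heap=$(( mem_gb / 2 ))
    if [ "$heap" -gt 31 ]; then
        heap=31
    fi
    if [ "$heap" -lt 1 ]; then
        heap=1
    fi
    echo "$heap"
}

# Print the matching jvm.options lines for the 8 GB limit used above.
heap_gb=$(recommend_heap_gb 8)
printf -- '-Xms%sg\n-Xmx%sg\n' "$heap_gb" "$heap_gb"
```

For the 8 GB limit this prints `-Xms4g` and `-Xmx4g`, matching the values in the file; treat the result as a starting point and confirm it against observed heap usage.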

# Logstash pipeline tuning (logstash.yml)
pipeline.workers: 4
pipeline.batch.size: 125
pipeline.batch.delay: 50
queue.type: persisted
queue.max_bytes: 1gb

8.3 High-availability configuration

# Docker Compose high-availability variant
version: '3.8'

x-logstash: &logstash-config
  image: docker.elastic.co/logstash/logstash:${ELK_VERSION}
  restart: unless-stopped
  networks:
    - elk-network
  depends_on:
    - elasticsearch-node1
    - redis-master

services:
  logstash-node1:
    <<: *logstash-config
    container_name: logstash-node1
    hostname: logstash-node1

  logstash-node2:
    <<: *logstash-config
    container_name: logstash-node2
    hostname: logstash-node2

  logstash-node3:
    <<: *logstash-config
    container_name: logstash-node3
    hostname: logstash-node3

  # Load balancer in front of the Kibana nodes. It publishes 5601 itself,
  # so the Kibana services must not publish the same host port.
  nginx:
    image: nginx:alpine
    ports:
      - "5601:5601"
    volumes:
      - ./configs/nginx/nginx.conf:/etc/nginx/nginx.conf
    networks:
      - elk-network
    depends_on:
      - kibana-node1
      - kibana-node2

8.4 Disaster recovery plan

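# Disaster recovery checklist
1. ✅ Verify backups regularly
2. ✅ Deploy across multiple regions (where possible)
3. ✅ Document the recovery procedure
4. ✅ Run recovery drills regularly
5. ✅ Configure monitoring and alerting
6. ✅ Define the failover procedure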
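
The first checklist item, verifying backups, can be partly automated. The following is a minimal sketch, assuming the archive layout produced by scripts/backup.sh (a timestamp directory containing elasticsearch, redis and configs). `verify_backup_archive` is a hypothetical helper; it checks that the archive is readable and structurally complete, and it does not replace an actual restore drill.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a backup archive can be read and contains
# the top-level directories that scripts/backup.sh creates.
verify_backup_archive() {
    archive=$1

    # tar -tzf fails when the archive is missing, truncated, or corrupt
    listing=$(tar -tzf "$archive" 2>/dev/null) || {
        echo "unreadable: $archive"
        return 1
    }

    for dir in elasticsearch redis configs; do
        # Entries look like <timestamp>/<dir>/...
        if ! printf '%s\n' "$listing" | grep -Eq "^[^/]+/${dir}(/|\$)"; then
            echo "missing directory: $dir"
            return 1
        fi
    done

    echo "ok: $archive"
}

# Verify the archive passed on the command line, if any.
if [ "$#" -gt 0 ]; then
    verify_backup_archive "$1"
fi
```

Saved as a script, it could be run against an archive from the backup directory, for example one under /backup/elk, before that archive is relied on for recovery.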

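# Quick recovery commands

# 1. Stop the services
docker-compose down

# 2. Restore the data (backup.sh writes its archives to /backup/elk)
./scripts/restore.sh /backup/elk/elk_backup_20240101_120000.tar.gz

# 3. Start the services
docker-compose up -d

# 4. Verify the recovery
./scripts/health-check.sh

🚀 9. Quick Deployment Commands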

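9.1 One-step deployment script

#!/bin/bash
# elk-quick-deploy.sh - quick deployment script

set -e

# Clone the project
git clone https://github.com/your-org/elk-docker-enterprise.git
cd elk-docker-enterprise

# Run the initialization
chmod +x scripts/*.sh
./scripts/init.sh

# Start the services (section 1.2 lists no start.sh, so Compose is called directly)
docker-compose up -d

# Health check
./scripts/health-check.sh

# Open Kibana
echo "Open in a browser: http://$(hostname -I | awk '{print $1}'):5601"

9.2 Common operational commands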

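# Check service status
docker-compose ps
docker-compose logs -f elasticsearch-node1
docker-compose logs -f --tail=100 logstash-node1

# Add Logstash capacity
# Each Logstash node is a separately named service with a fixed container_name,
# so --scale cannot be used. Define another service (for example logstash-node3,
# as in section 8.3) and start it:
docker-compose up -d logstash-node3

# Restart a single service
docker-compose restart kibana-node1

# Show resource usage
docker stats

# Open a shell or client inside a container for debugging
docker exec -it elasticsearch-node1 bash
docker exec -it redis-master redis-cli -a ${REDIS_PASSWORD}

# Back up data
./scripts/backup.sh

# Apply configuration changes
docker-compose down
# Edit the configuration files
docker-compose up -d

📈 10. Monitoring Dashboards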

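10.1 Kibana monitoring dashboards

Create the following Kibana dashboards:

- System overview dashboard
  - Cluster health status
  - Node resource usage
  - Index statistics
  - Search/indexing performance
- Log pipeline dashboard
  - Filebeat → Redis → Logstash → ES data flow
  - Processing latency at each stage
  - Queue length monitoring
  - Error rate statistics
- Business log analysis dashboard
  - Application error statistics
  - User behavior analysis
  - Performance trends
  - Security event monitoring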
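
For the queue length item, the raw value is the length of the Redis list that the health-check script already reads (`llen filebeat-logs`). The sketch below shows one way to turn that number into a status for a panel or alert. `queue_status` is a hypothetical helper, and the default thresholds of 10000 and 100000 entries are illustrative assumptions that should be tuned to your log volume.

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify a queue length as ok, warning, or critical.
# Arguments: length, warning threshold (default 10000), critical threshold
# (default 100000). The defaults are illustrative assumptions.
queue_status() {
    len=$1
    warn=${2:-10000}
    crit=${3:-100000}

    if [ "$len" -ge "$crit" ]; then
        echo critical
    elif [ "$len" -ge "$warn" ]; then
        echo warning
    else
        echo ok
    fi
}

# Example with a fixed value; in practice the length would come from
#   redis-cli -a "$REDIS_PASSWORD" llen filebeat-logs
queue_status 250
```

A steadily growing queue usually means Logstash is consuming more slowly than Filebeat is producing, so the trend matters as much as any single reading.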

10.2 Grafana dashboard example

{
  "dashboard": {
    "title": "ELK Cluster Monitoring",
    "panels": [
      {
        "title": "Elasticsearch Cluster Health",
        "type": "stat",
        "targets": [
          {
            "expr": "elasticsearch_cluster_health_status",
            "legendFormat": "{{cluster}}"
          }
        ]
      },
      {
        "title": "Node CPU Usage",
        "type": "timeseries",
        "targets": [
          {
            "expr": "rate(container_cpu_usage_seconds_total{container_label_com_docker_compose_service=~\"elasticsearch.*\"}[5m]) * 100",
            "legendFormat": "{{instance}}"
          }
        ]
      }
    ]
  }
}

🎉 Deployment Completion Checklist

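- Docker and Docker Compose installed
- System parameters tuned (vm.max_map_count, etc.)
- Directory structure and permissions set up
- Configuration files generated
- Environment file (.env) configured
- Docker images pulled
- Services started successfully
- Elasticsearch cluster status is Green/Yellow
- Kibana is reachable
- Redis connection works
- Logstash pipelines are running
- Filebeat is configured and shipping logs
- Monitoring tools (Cerebro/Prometheus/Grafana) are running
- Backup strategy configured
- Alerting rules configured
- Documentation archived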
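
Some checklist items can be verified by a script. As an example for the system parameter item, here is a minimal sketch that checks vm.max_map_count against the 262144 value used in this guide. `map_count_ok` is a hypothetical helper, and reading /proc assumes a Linux host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when a vm.max_map_count value is at least
# the 262144 that this guide configures for Elasticsearch.
map_count_ok() {
    [ "$1" -ge 262144 ]
}

# Read the live value on Linux; fall back to 0 where /proc is unavailable.
current=$(cat /proc/sys/vm/max_map_count 2>/dev/null || echo 0)

if map_count_ok "$current"; then
    echo "vm.max_map_count=$current: OK"
else
    echo "vm.max_map_count=$current: too low, run: sudo sysctl -w vm.max_map_count=262144"
fi
```

A value set with `sysctl -w` does not survive a reboot, so it also needs to be persisted in the host's sysctl configuration.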

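This enterprise Docker deployment plan provides:

- Production-ready configuration: security, performance, and high availability
- A complete monitoring stack: Prometheus + Grafana + built-in monitoring
- Automated operations: scripted management, backup, and restore
- Flexible scaling: supports Docker Compose, Docker Swarm, and Kubernetes
- End-to-end documentation: deployment, configuration, and operations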