Linux C++ ONNX Runtime YOLOv8 Video Detection Demo


Project directory

Results

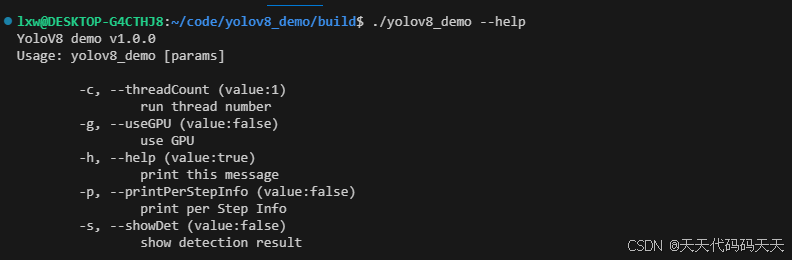

./yolov8_demo --help

./yolov8_demo -c=2 -p=true

./yolov8_demo -c=1 -s=true

CMakeLists.txt

# cmake needs this line
cmake_minimum_required(VERSION 3.0)

# Define project name
project(yolov8_demo)

# Compile flags for Release mode
# SET(CMAKE_BUILD_TYPE "Release")
# set(CMAKE_C_FLAGS_RELEASE "${CMAKE_C_FLAGS_RELEASE} -s")
# set(CMAKE_CXX_FLAGS_RELEASE "${CMAKE_CXX_FLAGS_RELEASE} -std=c++17 -pthread -Wall -Wl")

# Compile flags for Debug mode
SET(CMAKE_BUILD_TYPE "Debug")
set(CMAKE_C_FLAGS_DEBUG "${CMAKE_C_FLAGS_DEBUG}")
set(CMAKE_CXX_FLAGS_DEBUG "${CMAKE_CXX_FLAGS_DEBUG} -std=c++17 -pthread")

set(OpenCV_LIBS opencv_videoio opencv_imgcodecs opencv_imgproc opencv_core opencv_dnn opencv_highgui)

include_directories(
    /usr/local/include/opencv4
    ${PROJECT_SOURCE_DIR}/include
    ${PROJECT_SOURCE_DIR}/include/onnxruntime
)

link_directories(
    ${PROJECT_SOURCE_DIR}/lib/onnxruntime # third-party shared libraries
    /usr/local/lib/
)

# Recursively collect the source files
file(GLOB_RECURSE SRCS ${PROJECT_SOURCE_DIR}/src/*.cpp)

# Declare the executable target built from your sources
add_executable(yolov8_demo ${SRCS})

# Link your application with ONNX Runtime and the OpenCV libraries
target_link_libraries(yolov8_demo
    -lonnxruntime
    ${OpenCV_LIBS}
)

Code

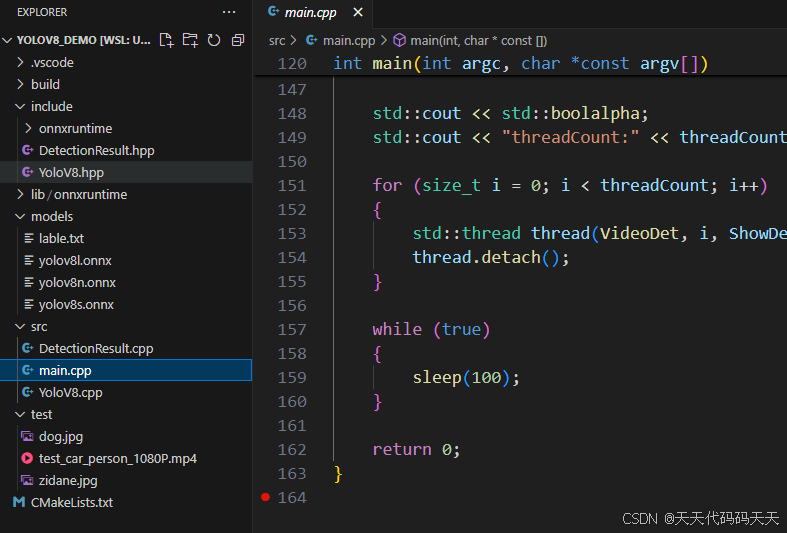

main.cpp

#include <opencv2/core.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/videoio.hpp>
#include <iostream>
#include <string>
#include <thread>
#include <vector>
#include <YoloV8.hpp>
#include <unistd.h>
#include <sys/syscall.h>

// Runs detection on the test video inside one worker thread; index identifies the worker.
int VideoDet(int index, bool showDet, bool useGPU, bool printPerStepInfo)
{
    size_t threadId = static_cast<size_t>(syscall(SYS_gettid));
    // std::cout << "index:" << index << " thread id: " << threadId << std::endl;

    cv::VideoCapture capture("./test/test_car_person_1080P.mp4");
    // Check that the video was opened successfully
    if (!capture.isOpened())
    {
        std::cout << "Failed to open the video file" << std::endl;
        return -1;
    }

    int frameCount = static_cast<int>(capture.get(cv::CAP_PROP_FRAME_COUNT)); // total number of frames
    double fps = capture.get(cv::CAP_PROP_FPS);                               // frame rate
    // Interval between frames in milliseconds; fall back to 1 ms if the frame rate is unknown
    const int frameIntervalMs = fps > 0 ? static_cast<int>(1000 / fps) : 1;

    std::string model_path = "./models/yolov8n.onnx";
    std::string lable_path = "./models/lable.txt";

    // Spread the workers across the GPUs in round-robin order (GPU count is hard-coded)
    int GPUCount = 2;
    int device_id = index % GPUCount;
    // device_id=0;

    YoloV8 yoloV8(model_path, lable_path, useGPU, device_id);
    yoloV8.index = index;
    yoloV8.threadId = threadId;
    yoloV8.videoFps = fps;
    yoloV8.frameCount = frameCount;
    // std::cout << "device_id:" << yoloV8.device_id << std::endl;

    // vector<DetectionResult> detectionResult;
    // Mat frame=cv::imread("../test/dog.jpg");
    // yoloV8.Detect(frame, detectionResult);
    // std::cout << "detectionResult size:" << detectionResult.size() << std::endl;

    std::string winname = "detectionResult-" + std::to_string(index);

    while (true)
    {
        double start = (double)cv::getTickCount();

        cv::Mat frame;
        bool success = capture.read(frame); // read one frame
        // Stop when no more frames can be read
        if (!success)
        {
            std::cout << "index:" << index << ", finished reading" << std::endl;
            yoloV8.PrintAvgCostTime();
            break;
        }

        std::vector<DetectionResult> detectionResult;
        yoloV8.Detect(frame, detectionResult);
        // std::cout <<"index:"<<index<< " thread id: " << threadId << " detectionResult size: " << detectionResult.size() << std::endl;
        yoloV8.detectionResultSize = detectionResult.size();

        if (printPerStepInfo)
        {
            yoloV8.PrintCostTime();
            yoloV8.PrintAvgCostTime();
        }

        if (showDet)
        {
            yoloV8.Draw(frame, detectionResult);
            cv::imshow(winname, frame);

            // Time spent on this frame, converted from seconds to milliseconds
            double costTimeMs = ((double)cv::getTickCount() - start) / cv::getTickFrequency() * 1000.0;
            int delay = frameIntervalMs - static_cast<int>(costTimeMs);
            if (delay <= 0)
            {
                delay = 1; // waitKey(0) would block until a key is pressed
            }
            if (cv::waitKey(delay) == 27) // press ESC to leave the loop
            {
                break;
            }
        }
    }

    capture.release(); // release the video capture
    if (showDet)
    {
        cv::destroyWindow(winname);
    }
    return 0;
}

int main(int argc, char *const argv[])
{
    const char *keys =
        "{h help || print this message}"
        "{c threadCount | 1 | run thread number}"
        "{s showDet | false | show detection result}"
        "{g useGPU | false | use GPU}"
        "{p printPerStepInfo | false | print per Step Info}";

    cv::CommandLineParser parser(argc, argv, keys);
    if (parser.has("help"))
    {
        parser.about("YoloV8 demo v1.0.0");
        parser.printMessage();
        return 0;
    }

    int threadCount = parser.get<int>("threadCount");
    bool showDet = parser.get<bool>("showDet");
    bool useGPU = parser.get<bool>("useGPU");
    bool printPerStepInfo = parser.get<bool>("printPerStepInfo");
    // Report malformed arguments instead of silently using defaults
    if (!parser.check())
    {
        parser.printErrors();
        return 1;
    }

    std::cout << std::boolalpha;
    std::cout << "threadCount:" << threadCount << ",showDet:" << showDet << ",useGPU:" << useGPU << ",printPerStepInfo:" << printPerStepInfo << std::endl;

    // Start one detection worker per requested thread
    std::vector<std::thread> workers;
    for (int i = 0; i < threadCount; i++)
    {
        workers.emplace_back(VideoDet, i, showDet, useGPU, printPerStepInfo);
    }

    // Wait for all workers to finish before exiting
    for (auto &worker : workers)
    {
        worker.join();
    }
    return 0;
}
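
When the detection window is shown, `VideoDet` waits for whatever remains of the frame interval after detection and drawing. `cv::getTickCount()` divided by `cv::getTickFrequency()` yields seconds, while `cv::waitKey` expects milliseconds, so the elapsed time has to be scaled by 1000 before it is subtracted. A minimal sketch of that arithmetic in isolation follows; the helper name `remainingDelayMs` is hypothetical and not part of the original code.

```cpp
#include <algorithm>

// Hypothetical helper (not in the original source): milliseconds left to wait
// after a frame took `elapsedSeconds` to process, for a video running at `fps`.
// The result is clamped to at least 1, because a delay of 0 passed to
// cv::waitKey would block until a key is pressed.
int remainingDelayMs(double fps, double elapsedSeconds)
{
    if (fps <= 0.0)
    {
        return 1; // frame rate unknown: do not pace, just poll the keyboard
    }
    const double frameIntervalMs = 1000.0 / fps;      // e.g. 40 ms at 25 fps
    const double elapsedMs = elapsedSeconds * 1000.0; // seconds -> milliseconds
    const int remaining = static_cast<int>(frameIntervalMs - elapsedMs);
    return std::max(remaining, 1);
}
```

In the display branch this would be used roughly as `cv::waitKey(remainingDelayMs(fps, costTime))`, with `costTime` still expressed in seconds.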

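
`std::thread` discards the return value of the function it runs, so the `-1` that `VideoDet` returns when the video cannot be opened never reaches `main`. One possible way to collect those codes, sketched here with the standard library only, is to launch the workers through `std::async` and read each `std::future`. The names `fakeWorker` and `runWorkers` are hypothetical stand-ins, not part of the original code.

```cpp
#include <future>
#include <vector>

// Hypothetical stand-in for VideoDet: returns 0 on success and -1 on failure.
// Here every odd-numbered worker pretends that its video failed to open.
int fakeWorker(int index)
{
    return (index % 2 == 0) ? 0 : -1;
}

// Launches `threadCount` workers, each on its own thread, waits for all of
// them, and returns how many reported a non-zero result.
template <typename Worker>
int runWorkers(int threadCount, Worker worker)
{
    std::vector<std::future<int>> results;
    for (int i = 0; i < threadCount; ++i)
    {
        // std::launch::async forces a new thread instead of deferred execution
        results.push_back(std::async(std::launch::async, worker, i));
    }

    int failures = 0;
    for (auto &result : results)
    {
        if (result.get() != 0) // get() blocks until that worker has finished
        {
            ++failures;
        }
    }
    return failures;
}
```

With the real worker, a lambda such as `[=](int i) { return VideoDet(i, showDet, useGPU, printPerStepInfo); }` could be passed in place of `fakeWorker`, and `main` could return a non-zero exit code when `runWorkers` reports failures.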